This article is a valued contribution from our community and has been edited for clarity and accuracy by DataCamp.

What is Data Integration?

Data drives most business decisions today, so understanding and using data from diverse sources is essential. Data integration is the process of combining data from multiple sources and making it available in a unified, cohesive form. Its primary aim is to offer a holistic view, allowing businesses to derive valuable insights, streamline operations, and make decisions based on data rather than assumptions.

ETL and ELT: Two Different Data Integration Processes

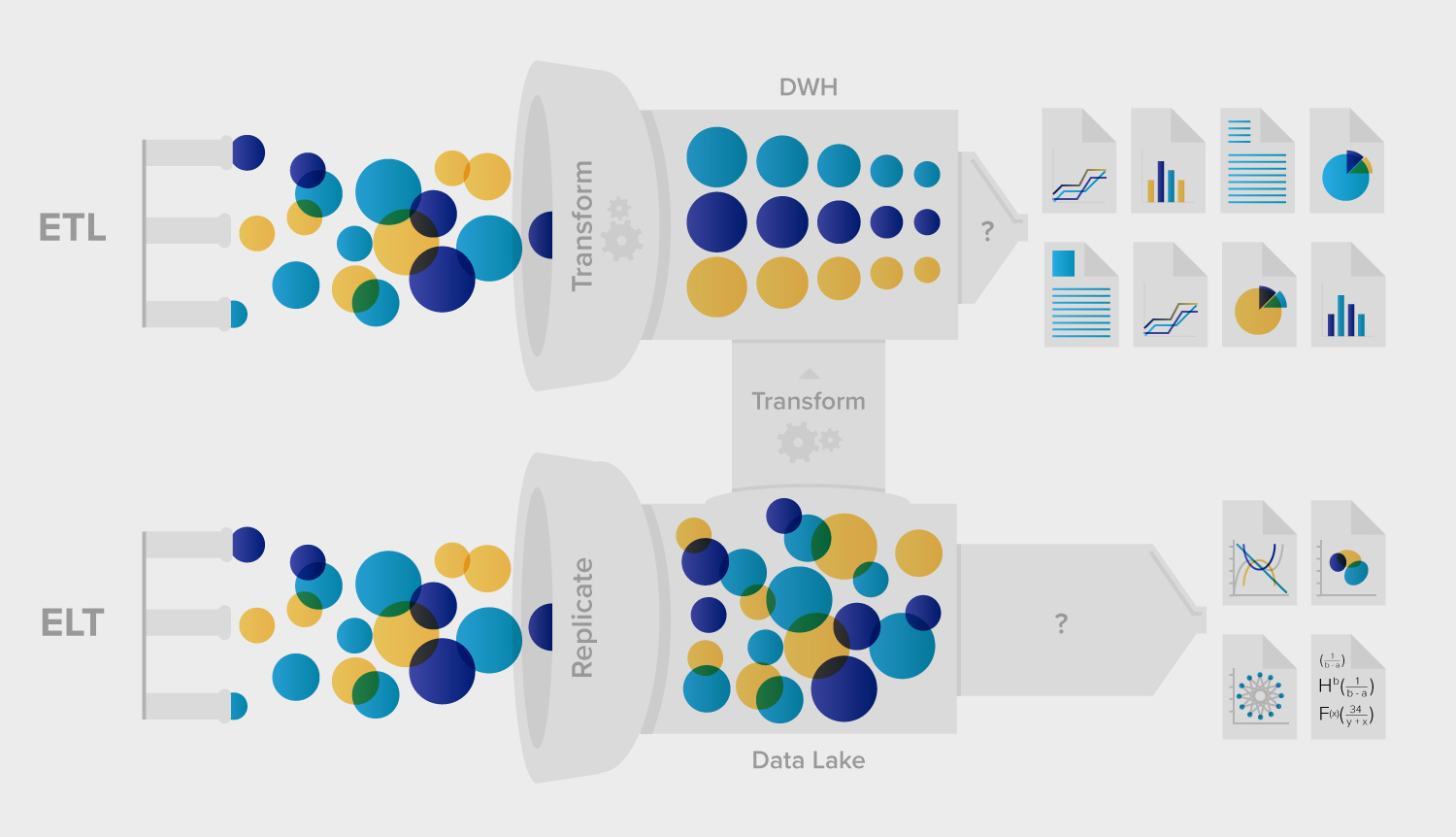

Among the many data integration strategies and tools available, ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are the two predominant methodologies. They represent distinct approaches to data integration, each with its own advantages and applications.

What is ETL (Extract, Transform, Load)?

ETL, as the acronym suggests, consists of three primary steps:

- Extract: Data is gathered from different source systems.

- Transform: Data is then transformed into a standardized format. The transformation can include cleansing, aggregation, enrichment, and other processes to make the data fit for its purpose.

- Load: The transformed data is loaded into a target data warehouse or another repository.
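The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: it uses only the standard library, an in-memory SQLite database stands in for the target warehouse, and the sample data, the `orders` table, and the function names are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,20.00
3,alice,
"""


def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: standardize names, convert types, drop incomplete rows."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # cleansing: skip records with a missing amount
        cleaned.append(
            (
                int(row["order_id"]),
                row["customer"].strip().lower(),
                float(row["amount"]),
            )
        )
    return cleaned


def load(rows, conn):
    """Load: write the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


# SQLite in memory stands in for the warehouse (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Note that the incomplete third record never reaches the target table: in ETL, only data that has passed the transformation step is loaded.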

Use Cases and Strengths of ETL

ETL is especially well-suited for scenarios where:

- Data volumes are relatively small, and transformations are complex.

- There's a need to offload the transformation processing from the target system.

- Data security is a priority, requiring transformations to mask or encrypt sensitive data before it lands in a warehouse.

ETL is an excellent choice when you need to ensure data consistency, quality, and security. It processes data before it reaches the warehouse, reducing the risk of sensitive data exposure and ensuring that the data conforms to business rules and standards.
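As a rough illustration of masking sensitive data before it lands in a warehouse, the sketch below pseudonymizes an identifier with a keyed hash and partially masks an email address during the transform step. The field names, the sample record, and the key are hypothetical, and the masking rules are only one possible choice; real compliance requirements will dictate the actual approach.

```python
import hashlib
import hmac

# Hypothetical secret; a real pipeline would fetch this from a secrets manager.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymize(value):
    """Replace a sensitive value with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


# Hypothetical extracted records containing sensitive fields.
records = [
    {"patient_id": "P-1001", "email": "jane.doe@example.com", "visits": 3},
    {"patient_id": "P-1002", "email": "sam@example.org", "visits": 1},
]

# Transform step: only masked values are passed on to the load step.
masked = [
    {
        "patient_key": pseudonymize(r["patient_id"]),
        "email": mask_email(r["email"]),
        "visits": r["visits"],
    }
    for r in records
]

for row in masked:
    print(row)
```

Because the digest is keyed and deterministic, the same patient always maps to the same `patient_key`, so records can still be joined downstream without storing the original identifier.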

ETL Data Integration with Python

Python, a versatile and widely used programming language, has become a popular choice for ETL data integration. Its rich ecosystem of libraries and frameworks supports every step of the ETL process, which is why many data engineers rely on it.

Key Python libraries for ETL

- pandas: A powerful library for data manipulation and analysis, pandas simplifies the extraction and transformation of data with its DataFrame structure.

- SQLAlchemy: This library provides a consistent way to interact with databases, aiding in both the extraction and loading phases. Check out DataCamp’s SQLAlchemy tutorial for more info.

- PySpark: For big data processing, PySpark offers distributed data processing capabilities, making it suitable for large-scale ETL tasks.

- Luigi and Apache Airflow: These are workflow management tools that help in orchestrating and scheduling ETL pipelines.
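To give a feel for what workflow orchestration means, the sketch below runs three placeholder tasks in dependency order using the standard library's `graphlib` module (Python 3.9+). It does not use the Luigi or Airflow APIs, which add scheduling, retries, and monitoring on top of this basic idea; the task names and the `run_log` list are hypothetical.

```python
from graphlib import TopologicalSorter

run_log = []  # records the order in which tasks actually ran


def extract():
    run_log.append("extract")


def transform():
    run_log.append("transform")


def load():
    run_log.append("load")


tasks = {"extract": extract, "transform": transform, "load": load}

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {
    "load": {"transform"},
    "transform": {"extract"},
    "extract": set(),
}

# Resolve a valid execution order from the dependency graph, then run it.
for name in TopologicalSorter(dependencies).static_order():
    tasks[name]()

print(run_log)
```

Declaring dependencies instead of hard-coding the call order is the core design idea that orchestration tools build on: the pipeline definition stays the same as tasks are added or rearranged.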

Advantages of using Python for ETL

- Flexibility: Python's extensive libraries allow for custom ETL processes tailored to specific needs.

- Scalability: With tools like PySpark, Python can handle both small and large datasets efficiently.

- Community support: A vast community of data professionals means abundant resources, tutorials, and solutions are available for common ETL challenges.

Incorporating Python into ETL processes can streamline data integration and combine efficiency with flexibility. Whether you are working with traditional databases or big data platforms, Python's ecosystem covers most ETL needs.

What is ELT (Extract, Load, Transform)?

ELT takes a slightly different approach:

- Extract: Just as with ETL, data is collected from different sources.

- Load: Instead of transforming it immediately, raw data is directly loaded into the target system.

- Transform: Transformations take place within the data warehouse.
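The same flow can be sketched in Python, with the order of the last two steps swapped. In this minimal example, an in-memory SQLite database stands in for a cloud data warehouse, and the table names and sample rows are hypothetical. The raw data is loaded untouched, and the transformation is expressed as SQL that runs inside the target system.

```python
import sqlite3

# SQLite in memory stands in for the warehouse (an assumption of this sketch).
warehouse = sqlite3.connect(":memory:")

# Extract: hypothetical raw records, exactly as a source system might emit them.
raw_rows = [
    ("1", " alice ", "10.50"),
    ("2", "BOB", "20.00"),
    ("3", "alice", ""),
]

# Load: store the data as-is, as text, in a raw table.
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: SQL executed inside the warehouse builds the cleaned table.
warehouse.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           LOWER(TRIM(customer))     AS customer,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE TRIM(amount) <> ''
    """
)
warehouse.commit()

clean = warehouse.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(clean, raw_count)
```

Unlike in ETL, the incomplete third record is still present in `raw_orders` after the transformation; only the derived `orders` table excludes it.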

The Rise of ELT with Cloud Computing

ELT's increasing popularity is closely tied to the advent of cloud-based data warehouses like Snowflake, BigQuery, and Redshift. These platforms possess immense processing power, enabling them to handle large-scale transformations within the warehouse efficiently.

Advantages of ELT

- Flexibility: As raw data is loaded first, businesses can decide on the transformation logic later, offering the ability to adapt as requirements change.

- Efficiency: Transformations run on the compute power of modern cloud warehouses, which often makes them faster and more scalable.

- Suitability for large datasets: ELT is generally more efficient for large datasets because it leverages the massively parallel processing (MPP) capabilities of cloud data warehouses.
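The flexibility point can be made concrete with a small sketch. Because the raw data already sits in the target system, a new transformation can be defined later without extracting anything again. Here SQLite stands in for the warehouse, and the `raw_events` table, the views, and the sample rows are hypothetical.

```python
import sqlite3

# SQLite in memory stands in for the warehouse (an assumption of this sketch).
warehouse = sqlite3.connect(":memory:")

# Raw data is loaded once, before any transformation logic is decided.
warehouse.execute(
    "CREATE TABLE raw_events (user_name TEXT, event TEXT, amount REAL)"
)
warehouse.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("alice", "purchase", 30.0),
        ("alice", "refund", -10.0),
        ("bob", "purchase", 15.0),
    ],
)

# Initial requirement: net revenue per user.
warehouse.execute(
    """
    CREATE VIEW revenue_per_user AS
    SELECT user_name, SUM(amount) AS net_revenue
    FROM raw_events
    GROUP BY user_name
    """
)

# A later requirement: refund counts. It reuses the same raw table,
# so nothing has to be extracted or loaded again.
warehouse.execute(
    """
    CREATE VIEW refunds_per_user AS
    SELECT user_name, COUNT(*) AS refunds
    FROM raw_events
    WHERE event = 'refund'
    GROUP BY user_name
    """
)

revenue = warehouse.execute(
    "SELECT user_name, net_revenue FROM revenue_per_user ORDER BY user_name"
).fetchall()
refunds = warehouse.execute(
    "SELECT user_name, refunds FROM refunds_per_user ORDER BY user_name"
).fetchall()
print(revenue, refunds)
```

Views are used here only to keep the sketch short; a real warehouse might use materialized tables or a transformation framework instead.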

ETL vs ELT: A Comparative Analysis

Key similarities and differences

While both ETL and ELT involve extracting data and loading it into a warehouse, their key distinction lies in the location and timing of the transformation process. ETL transforms data before it reaches the warehouse, while ELT does so afterward.

Speed and efficiency

Generally, ELT ingests data faster than ETL because raw data is loaded directly into the target system, without first passing through a separate transformation step. However, overall speed also depends on factors like the complexity of the transformations and the capabilities of the data warehouse.

Data transformation and compliance

In ETL, transformations occur in an intermediary system, which can offer more granular control over the process. This is vital for businesses with stringent compliance and data handling requirements. In contrast, ELT relies on the target system's capabilities, which might expose raw, unmasked data until transformations are complete.

Making the Right Choice: ELT vs ETL

Factors to consider

When deciding between ETL and ELT, consider:

- Business Type: A startup might prioritize flexibility (ELT), while a healthcare provider might prioritize data security (ETL).

- Data Needs: Are real-time insights essential, or is daily batch processing sufficient?

- Infrastructure: The choice of data warehouse, existing tools, and IT capabilities can influence the decision.

The role of data integration platforms

Modern data integration platforms can blur the lines between ETL and ELT, offering tools that combine the strengths of both approaches. These platforms can guide businesses in choosing and executing the right strategy based on their unique requirements.

Conclusion

The decision between ETL and ELT isn't black and white. Both methodologies have their merits, and the optimal choice often depends on a company's specific needs and circumstances. By understanding the trade-offs of each approach and leveraging modern data integration platforms, data leaders can choose the strategy that best fits their business.

To get started with ETL, DataCamp’s ETL with Python course is the ideal resource, covering various tools and how to create efficient pipelines. If you're looking to begin a career in data engineering, check out our Data Engineer Certification to prove your credentials to employers.

Strategic, results-oriented marketing leader with over 15 years of experience enabling growth initiatives across diverse verticals and industries.