
Hadoop experts write applications and analyze constantly changing data to obtain insights and maintain data security. That’s why hiring managers apply strict criteria to find the best fit for the position and can ask you anything from basic to advanced questions.

In this article, we’ve collected the 24 most commonly asked Hadoop interview questions and answers.

This article is designed to help you prepare thoroughly for your next big data job interview. It covers fundamental concepts and advanced scenarios. Whether you are a beginner or an experienced professional, you'll find valuable insights and practical information to boost your confidence and improve your chances of success.

Basic Hadoop Interview Questions

Interviewers typically start an interview by asking basic questions to assess your understanding of Hadoop and its relevance in managing big data.

Even if you’re an experienced engineer, make sure you have these questions covered.

1. What is big data?

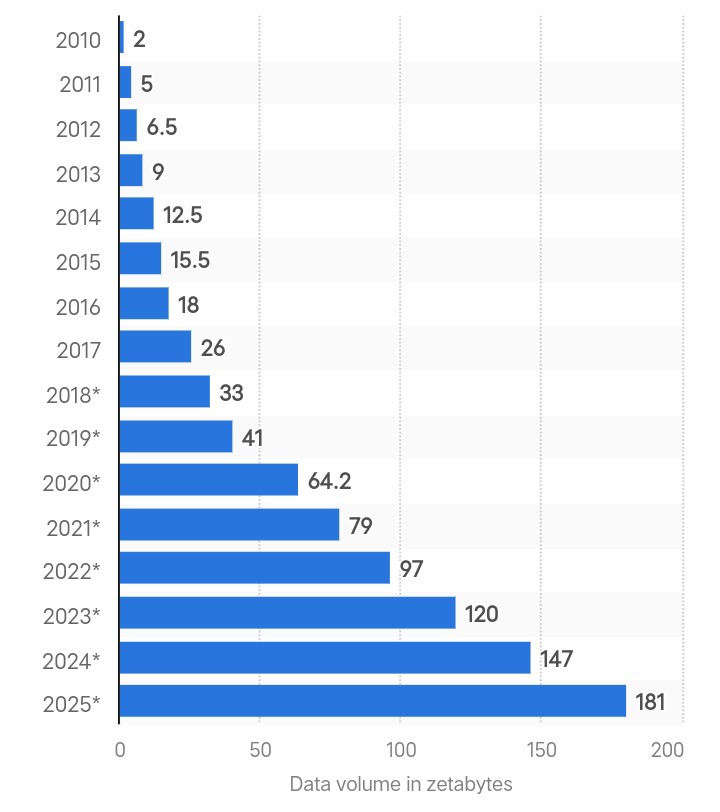

Global data creation in Zettabytes. Source: Statista.

Big data refers to massive amounts of complex data generated at high speed from multiple sources. The total amount of data created globally was 180 zettabytes in 2025 and is projected to triple by 2029.

As data generation accelerates, traditional analysis methods will fail to provide real-time processing and data security. That’s why companies use advanced frameworks like Hadoop to process and manage growing volumes of data.

If you want to start your career in big data, check out our guide on big data training.

2. What is Hadoop, and how does it solve the problem of big data?

Hadoop is an open-source framework for handling large datasets distributed across multiple computers. It stores data across multiple machines as small blocks using the Hadoop Distributed File System (HDFS).

With Hadoop, you can add more nodes to a cluster and handle extensive data without expensive hardware upgrades.

Large companies such as Facebook and Yahoo have relied on Hadoop to manage and analyze terabytes to petabytes of data every day.

3. What are the two main components of Hadoop?

The following are the two main components of Hadoop:

- Hadoop Distributed File System (HDFS): It manages data storage by breaking large files into block-sized chunks, each 128 MB by default, and distributes them across multiple nodes in a cluster.

- MapReduce: It is the programming model that Hadoop relies on for data processing. It's a two-phase process consisting of map and reduce jobs. The map job takes the input data and converts it into another set of data, in which individual elements are broken down into key-value pairs. The reduce job takes the key-value pairs output by the map job as input and combines them into a smaller set of pairs by applying a user-defined reduce function.
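To make the two phases concrete, here is a minimal single-process sketch of a word count in plain Python. It only imitates the model: the function names (`map_phase`, `shuffle`, `reduce_phase`) are illustrative and not part of any Hadoop API, and a real job would run the map and reduce steps in parallel across the cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map: turn one input line into (word, 1) key-value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single pair."""
    return key, sum(values)


def word_count(lines):
    # Run every line through the mapper, group by key, then reduce each group.
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data big cluster", "data node"])
print(counts)
```

The reducer here is the "user-defined reduce function": swapping `sum` for `max` or another aggregation changes what the job computes without touching the map or shuffle steps.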

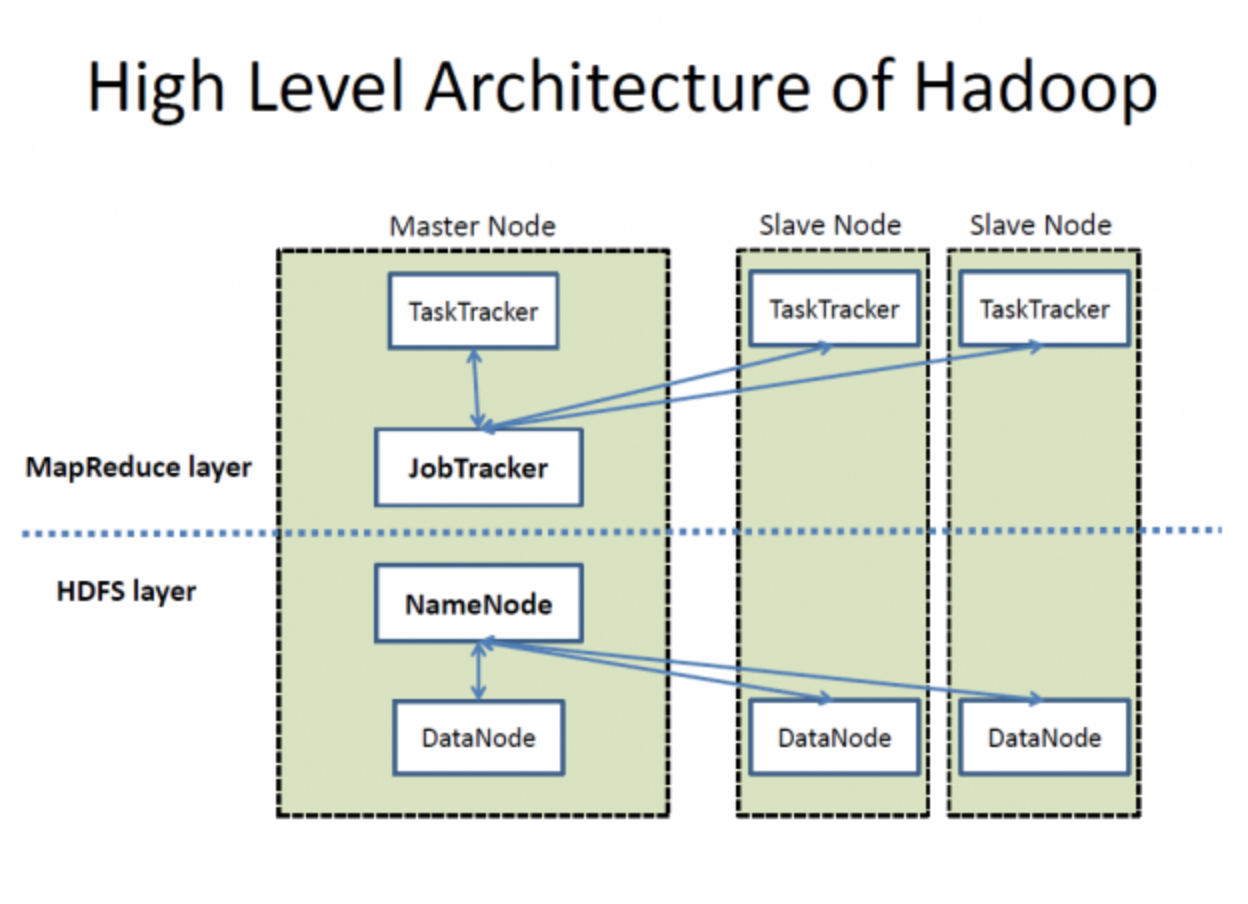

High-level architecture of Hadoop. Source: Wikimedia Commons

4. Define the role of NameNode and DataNode in Hadoop.

HDFS consists of a NameNode and multiple DataNodes for data management, as seen in the image above. Here's how they work:

- As a master server in HDFS, NameNode handles operations like opening, closing, and renaming files. It maintains metadata of permissions and the location of the blocks.

- DataNodes, on the other hand, act as worker nodes that store the actual data blocks of a file. When a client needs to read or write data, it gets the location of the data blocks from the NameNode. After that, the client communicates directly with the relevant DataNodes to perform the required operations.
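The division of labor described above can be sketched with two toy classes. This is an in-memory illustration only: the class and method names are hypothetical, not the HDFS client API, and real DataNodes also replicate blocks and send heartbeats.

```python
class NameNode:
    """Holds metadata only: which blocks make up a file and where they live."""

    def __init__(self):
        self.block_map = {}  # path -> list of (block_id, datanode_name)

    def add_file(self, path, placements):
        self.block_map[path] = list(placements)

    def get_block_locations(self, path):
        return self.block_map[path]


class DataNode:
    """Stores the actual block contents."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]


def read_file(path, namenode, datanodes):
    """Client read: ask the NameNode where blocks are, then read from DataNodes."""
    parts = []
    for block_id, node_name in namenode.get_block_locations(path):
        parts.append(datanodes[node_name].read_block(block_id))
    return b"".join(parts)


datanodes = {name: DataNode(name) for name in ("dn1", "dn2")}
datanodes["dn1"].write_block("blk_1", b"hello ")
datanodes["dn2"].write_block("blk_2", b"hadoop")

namenode = NameNode()
namenode.add_file("/demo.txt", [("blk_1", "dn1"), ("blk_2", "dn2")])

content = read_file("/demo.txt", namenode, datanodes)
print(content)
```

Note that the file bytes never pass through the `NameNode` object, which mirrors why the NameNode can serve many clients while holding only metadata.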

5. Explain the role of Hadoop YARN.

In Hadoop 1.x, MapReduce handles both resource management and job scheduling through a single JobTracker. This makes the JobTracker a bottleneck that limits scalability and slows processing on large clusters.

Hadoop 2.0 introduced YARN (Yet Another Resource Negotiator) to address these issues. YARN decouples resource management and job scheduling into separate components, which improves scalability and resource utilization. The primary components of YARN are:

- ResourceManager (RM): Manages resources across the cluster.

- NodeManager (NM): Manages resources and monitoring of individual nodes.

- ApplicationMaster (AM): Manages the lifecycle of applications, including their resource needs and execution.

How YARN works:

- Job submission: The client submits an application to the YARN cluster.

- Resource allocation: The ResourceManager allocates a first container on a node and launches the application's ApplicationMaster in it.

- ApplicationMaster registration: The ApplicationMaster registers itself with the ResourceManager.

- Container negotiation: The ApplicationMaster negotiates resources (containers) from the ResourceManager.

- Container launch: Once resources are allocated, the ApplicationMaster instructs NodeManagers to launch containers.

- Application execution: The application code is executed within the allocated containers.

Intermediate Hadoop Interview Questions

The intermediate questions are more focused on assessing your knowledge of the technicalities of the Hadoop framework. The interviewer can ask you about the Hadoop cluster, its challenges, and the comparison of different versions.

6. What is a Hadoop cluster, and is there a specific cluster size companies prefer?

A Hadoop cluster is a collection of interconnected master and slave nodes designed to store and process large datasets in a distributed manner. The cluster architecture ensures high availability, scalability, and fault tolerance.

Components of a Hadoop Cluster:

- Master nodes: These nodes manage the cluster's resources and the distributed file system. Key components include:

  - NameNode: Manages the Hadoop Distributed File System (HDFS) namespace and regulates access to files by clients.

  - ResourceManager: Manages the allocation of resources for running applications.

- Slave nodes: These nodes are responsible for storing data and performing computations. Key components include:

  - DataNode: Stores actual data blocks in HDFS.

  - NodeManager: Manages the execution of containers on the node.

There are two primary ways to set up a Hadoop cluster:

- On-premises cluster: Use commodity hardware components to build your cluster. This approach can be cost-effective and customizable based on specific requirements.

- Cloud-based cluster: Opt for cloud-based services such as Amazon EMR, Google Cloud Dataproc, or Azure HDInsight. This method offers flexibility, scalability, and reduced management overhead.

Comparison of On-Premises and Cloud-Based Clusters:

| Feature | On-premises cluster | Cloud-based cluster |
| --- | --- | --- |
| Setup cost | Higher initial investment | Pay-as-you-go model |
| Scalability | Limited by physical hardware | Virtually unlimited |
| Maintenance | Requires in-house management | Managed by the cloud provider |
| Flexibility | Customizable hardware and software | Pre-configured options |
| Deployment time | Longer setup time | Quick and easy deployment |

There's no specific preference for cluster size. Clusters scale easily, and their size depends entirely on storage and processing requirements. Small companies may rely on clusters of around 20 nodes, while businesses like Yahoo operate (or used to operate) Hadoop on as many as 40,000 nodes.

7. What are some Hadoop ecosystem projects?

Different industries have specific data analysis and processing needs, so the Apache community has built many projects around Hadoop to cater to them. The ecosystem is broad, but here are the most essential projects:

- MapReduce: A programming model for processing large datasets with a parallel, distributed algorithm.

- Apache Hive: A data warehouse infrastructure for data summarization, query, and analysis using HiveQL.

- Apache HBase: A scalable, big data store providing real-time read/write access to large datasets.

- Apache Pig: A high-level platform for creating MapReduce programs with Pig Latin.

- Apache Sqoop: A tool for transferring bulk data between Hadoop and structured datastores like relational databases.

- Apache Flume: A service for efficiently collecting, aggregating, and moving large amounts of log data.

- Apache Oozie: A workflow scheduler system to manage Hadoop jobs and their dependencies.

- Apache Zookeeper: A centralized service for maintaining configuration information and providing distributed synchronization.

- Apache Spark: A fast, general-purpose cluster-computing system with in-memory processing.

- Apache Storm: A real-time computation system for processing large streams of data.

- Apache Kafka: A distributed streaming platform for handling real-time data feeds.

8. What are some common challenges with Hadoop?

Although the Hadoop framework is excellent at managing and processing enormous amounts of valuable data, it poses some critical challenges.

Let’s understand them:

- The biggest issue with Hadoop 1.x is that its NameNode is a single point of failure. If the NameNode goes down, the whole file system becomes unavailable until it is restored.

- Hadoop is vulnerable to security threats. Security features such as Kerberos authentication are not enabled by default, so without extra configuration it is hard to control who accesses your data or to know whether it is being misused.

- Hadoop's MapReduce framework processes data in batches and doesn't support real-time data processing.

- This framework works well with large files but handles large numbers of small files inefficiently. Every file, however small, takes up an entry in the NameNode's memory, so many files smaller than the default HDFS block size overload the NameNode and cause latency issues.
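The small-files problem in the last point can be shown with a rough back-of-the-envelope calculation. The sketch below assumes about 150 bytes of NameNode heap per file or block object; that figure is a commonly quoted rule of thumb, not a number from this article, so treat the absolute results as illustrative and focus on the ratio.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # default HDFS block size (128 MB)
BYTES_PER_OBJECT = 150          # assumed rule-of-thumb heap cost per file or block


def namenode_objects(file_size, file_count):
    """Each file costs one file object plus one object per block it occupies."""
    blocks_per_file = max(1, math.ceil(file_size / BLOCK_SIZE))
    return file_count * (1 + blocks_per_file)


def namenode_heap_bytes(file_size, file_count):
    return namenode_objects(file_size, file_count) * BYTES_PER_OBJECT


total = 10 * 1024**3  # the same 10 GiB of data, stored two different ways

# 80 files of 128 MiB each
few_large = namenode_heap_bytes(BLOCK_SIZE, total // BLOCK_SIZE)
# 10,240 files of 1 MiB each
many_small = namenode_heap_bytes(1024 * 1024, total // (1024 * 1024))

print(few_large, many_small, many_small // few_large)
```

Under these assumptions the same volume of data costs the NameNode 128 times more metadata when stored as 1 MiB files, which is why small files are usually merged or packed into container formats before loading.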

9. What is HBase, and what is the role of its components?

HBase is a column-oriented NoSQL database that runs on top of HDFS and is designed for quick random access to large datasets. It allows you to read and write big datasets in real time by storing data in column families and indexing rows by unique row keys.

This setup enables quick data retrieval and efficient scans, which is suitable for large and sparsely populated tables because we can add as many nodes as needed.

HBase has three components:

- HMaster: It manages region servers and is responsible for creating and removing tables.

- Region server: It handles read and write requests and manages region splits.

- ZooKeeper: It maintains cluster state and manages server assignments.

10. What makes Hadoop 2.0 different from Hadoop 1.x?

This table compares both Hadoop versions side-by-side:

| Criteria | Hadoop 1.x | Hadoop 2.0 |
| --- | --- | --- |
| NameNode management | A single NameNode manages the namespace | Multiple NameNodes handle namespaces through HDFS federation |
| Operating system support | There's no support for Microsoft Windows | Added support for Microsoft Windows |
| Job and resource management | Uses JobTracker and TaskTracker for job and resource management | Replaced them with YARN to separate both tasks |
| Scalability | Can scale up to 4,000 nodes per cluster | Can scale up to 10,000 nodes per cluster |
| Default block size | Has a default HDFS block size of 64 MB | Has doubled the default to 128 MB |
| Task execution | Uses slots that can run either Map or Reduce tasks | Uses containers that can run any task |

If you’re applying for a data engineering job, check out our comprehensive article on data engineering interview questions.

Advanced Hadoop Interview Questions

Now, this is where things get interesting. These questions test your knowledge at an advanced level and are particularly important for senior engineers.

11. What is the concept of active NameNode and standby NameNode in Hadoop 2.0?

In Hadoop 2.0, the Active NameNode manages the file system namespace and controls clients' access to files. In contrast, the Standby NameNode acts as a backup and maintains enough up-to-date state to take over if the Active NameNode fails.

This addresses the single point of failure (SPOF) issue common in Hadoop 1.x.

12. What is the purpose of the distributed cache in Hadoop? Why can't HDFS read small files?

HDFS is inefficient at handling thousands of small files, and repeatedly fetching the same file from HDFS increases latency. The distributed cache addresses this by letting you ship read-only files, archives, and JAR files to the worker nodes and make them available locally to MapReduce tasks.

Suppose you need to run 40 jobs in MapReduce, and each job needs to access the same file from HDFS. In practice, this number can grow to hundreds or thousands of reads. The application would repeatedly locate and fetch the file from HDFS, which overloads HDFS and affects its performance.

With the distributed cache, each file is copied to a worker node once per job, so tasks read it from local disk without compromising access time or processing speed.

13. Explain the role of checksum in detecting corrupted data. Also, define the standard error-detecting code.

Checksums identify corrupted data in HDFS. When data is written to the system, HDFS computes a small value known as a checksum and stores it alongside the data. When a client later reads the data, Hadoop recalculates the checksum. If the new checksum matches the original, the data is intact; if not, it is corrupt.

The standard error-detecting code in Hadoop is CRC-32, a 32-bit cyclic redundancy check.
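The write-then-verify cycle can be sketched with Python's standard `zlib.crc32`. This is an illustration of the idea rather than HDFS code: the 512-byte chunk size and the helper names are assumptions made for the example, and HDFS's own checksum implementation may use a different CRC variant.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # assumed chunk size for this sketch


def compute_checksums(data, chunk_size=BYTES_PER_CHECKSUM):
    """Return one CRC-32 value per fixed-size chunk of data."""
    return [
        zlib.crc32(data[i:i + chunk_size])
        for i in range(0, len(data), chunk_size)
    ]


def find_corrupt_chunks(data, stored_checksums, chunk_size=BYTES_PER_CHECKSUM):
    """Recompute checksums on read; return indexes of chunks that do not match."""
    current = compute_checksums(data, chunk_size)
    return [
        i for i, (old, new) in enumerate(zip(stored_checksums, current))
        if old != new
    ]


original = bytes(range(256)) * 8       # 2048 bytes, so 4 chunks
stored = compute_checksums(original)   # computed once, at write time

corrupted = bytearray(original)
corrupted[600] ^= 0xFF                 # flip the bits of one byte in the second chunk
bad_chunks = find_corrupt_chunks(bytes(corrupted), stored)
print(bad_chunks)
```

Checksumming per chunk rather than per file means a reader can tell which part of a block is damaged and fetch only that block from another replica.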

14. How does HDFS achieve fault tolerance?

HDFS achieves fault tolerance through a replication process that ensures reliability and availability. Here's how the replication process works:

- Initial block distribution: When a file is saved, HDFS divides it into blocks and assigns them to different DataNodes. For example, a file divided into blocks A, B, and C might be stored on DataNodes A1, B2, and C3.

- Replication: Each block is replicated to other DataNodes in the cluster, giving three copies in total by default. For example, block A, first stored on DataNode A1, is also copied to other DataNodes such as D1 and D2. This means that if A1 crashes, copies of block A remain available on D1 and D2.

- Handling failures: If a DataNode such as D1 fails, clients read each block from a surviving replica: block A from A1 or D2, and blocks B and C from DataNodes such as B2 and C3. The NameNode then schedules new copies so that every block returns to its target replica count.
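The effect of replication on availability can be checked with a small simulation. The sketch assumes a replication factor of 3 and places replicas round-robin purely for simplicity; that placement rule and the function names are illustrative and are not how HDFS actually chooses targets.

```python
REPLICATION_FACTOR = 3  # HDFS default, assumed for this sketch


def place_replicas(blocks, datanodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct DataNodes, round-robin."""
    placement = {}
    for i, block in enumerate(blocks):
        placement[block] = {
            datanodes[(i + r) % len(datanodes)] for r in range(replication)
        }
    return placement


def readable_blocks(placement, failed_nodes):
    """A block stays readable while at least one replica is on a live node."""
    failed = set(failed_nodes)
    return {block for block, nodes in placement.items() if nodes - failed}


nodes = ["A1", "B2", "C3", "D1", "D2"]
placement = place_replicas(["A", "B", "C"], nodes)

after_one_failure = readable_blocks(placement, ["A1"])
after_three_failures = readable_blocks(placement, ["A1", "B2", "C3"])
print(sorted(after_one_failure), sorted(after_three_failures))
```

A single DataNode failure leaves every block readable. A block is lost only when all three of its replicas fail before re-replication has had a chance to restore them.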

15. Can you process a compressed file with MapReduce? If yes, what formats does it support, and are they splittable?

Yes, it's possible to process compressed files with MapReduce. Hadoop supports multiple compression formats, but not all of them are splittable.

The following are the formats that MapReduce supports:

- DEFLATE

- gzip

- bzip2

- LZO

- LZ4

- Snappy

Out of all these, bzip2 is the only format that is splittable out of the box. LZO files become splittable only after they have been indexed.

HDFS divides very large files into blocks of 128 MB each by default. For example, HDFS will break down a 1.28 GB file into ten blocks. Each block is then processed by a separate mapper in a MapReduce job. But if a file is unsplittable, a single mapper handles the entire file, so the job loses its parallelism.
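The arithmetic behind that example is simple enough to sketch. The helper names below are hypothetical, and the model is deliberately simplified to one mapper per block for splittable input.

```python
import math

BLOCK_SIZE_MB = 128  # default block size used in the example above


def count_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Number of HDFS blocks needed to hold a file of the given size."""
    return math.ceil(file_size_mb / block_size_mb)


def count_mappers(file_size_mb, splittable, block_size_mb=BLOCK_SIZE_MB):
    """One mapper per block if the format is splittable, otherwise one in total."""
    return count_blocks(file_size_mb, block_size_mb) if splittable else 1


blocks = count_blocks(1280)                           # the 1.28 GB file
bzip2_mappers = count_mappers(1280, splittable=True)
gzip_mappers = count_mappers(1280, splittable=False)
print(blocks, bzip2_mappers, gzip_mappers)
```

The file still occupies ten blocks either way. What changes with an unsplittable format is that one mapper has to read all ten, most of them over the network.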

Hive Interview Questions

Some jobs require expertise in Hive integration with Hadoop. In such a specific role, the hiring manager will focus on these questions:

16. Define Hive.

Hive is a data warehouse system that performs batch jobs and data analyses. It was developed by Facebook to run SQL-like queries on massive datasets stored in HDFS without relying on Java.

With Hive, we can organize data into tables and use a metastore to store metadata like schemas. It supports a spectrum of storage systems, such as S3, Azure Data Lake Storage (ADLS), and Google Cloud Storage.

17. What is a Hive Metastore (HMS)? How would you differentiate a Managed Metastore from an External Metastore?

The Hive Metastore (HMS) is a centralized repository for metadata, typically backed by a relational database. It contains information about tables, views, and access permissions for data stored in HDFS or object storage.

Hive tracks two types of tables in the metastore:

- Managed tables: Hive stores and manages both the data and the metadata of these tables. By default, their data is located under `/user/hive/warehouse`.

- External tables: The data of these tables lives outside the Hive warehouse directory, and Hive manages only the metadata. So, a change in Hive's metadata, such as dropping the table, does not affect the data stored in these tables.

18. What programming languages does Hive support?

Hive provides robust support for integrating with multiple programming languages, enhancing its versatility and usability in different applications:

- Python: Data analysis and machine learning.

- Java: Enterprise applications and custom data processing.

- C++: Performance-critical applications and custom SerDes.

- PHP: Web-based applications accessing Hive data.

19. What is the maximum data size Hive can handle?

Due to its integration with HDFS and scalable architecture, Hive can handle petabytes of data. There is no fixed upper limit on the data size Hive can manage, making it a powerful tool for big data processing and analytics.

20. How many data types are in Hive?

Hive offers a rich set of data types to handle different data requirements:

- Built-in data types: Numeric, String, Date/Time, and miscellaneous for basic data storage.

- Complex data types: ARRAY, MAP, STRUCT, and UNIONTYPE for more advanced and structured data representations.

Built-in data types:

| Category | Data type | Description | Example |
| --- | --- | --- | --- |
| Numeric types | TINYINT | 1-byte signed integer | 127 |
| | SMALLINT | 2-byte signed integer | 32767 |
| | INT | 4-byte signed integer | 2147483647 |
| | BIGINT | 8-byte signed integer | 9223372036854775807 |
| | FLOAT | Single-precision floating-point | 3.14 |
| | DOUBLE | Double-precision floating-point | 3.141592653589793 |
| | DECIMAL | Arbitrary-precision signed decimal number | 1234567890.1234567890 |
| String types | STRING | Variable-length string | 'Hello, World!' |
| | VARCHAR | Variable-length string with specified max length | 'Example' |
| | CHAR | Fixed-length string | 'A' |
| Date/Time types | TIMESTAMP | Date and time without a time zone | '2023-01-01 12:34:56' |
| | DATE | Date without time | '2023-01-01' |
| | INTERVAL | Time interval | INTERVAL '1' DAY |
| Misc. types | BOOLEAN | Represents true or false | TRUE |
| | BINARY | Sequence of bytes | 0x1A2B3C |

Complex Data Types:

| Data Type | Description | Example |
| --- | --- | --- |
| ARRAY | Ordered collection of elements | ARRAY<STRING> ('apple', 'banana', 'cherry') |
| MAP | Collection of key-value pairs | MAP<STRING, INT> ('key1' -> 1, 'key2' -> 2) |
| STRUCT | Collection of fields of different data types | STRUCT<name: STRING, age: INT> ('Alice', 30) |
| UNIONTYPE | Can hold any one of several specified types | UNIONTYPE<INT, DOUBLE, STRING> (1, 2.0, 'three') |

Scenario-Based Hadoop Interview Questions for Big Data Engineers

These questions test your ability to use Hadoop to handle real-life problems. They are particularly relevant if you’re applying for a data architect role but can be asked in any interview process that requires Hadoop knowledge.

21. You're setting up an HDFS cluster with Hadoop's replication system. The cluster has three racks: A, B, and C. Explain how Hadoop will replicate a file called datafile.text.

Here's how Hadoop will replicate the data:

- First replica on rack A: Assuming the client writes from rack A, Hadoop places the first replica on the client's own node if it is a DataNode, or otherwise on a randomly chosen node in rack A. This decision minimizes write latency by selecting a local or nearby node.

- Second replica on rack B: The second replica is placed on a node in a different rack, such as rack B. This ensures that the data is not lost if rack A fails, improving fault tolerance.

- Third replica on rack B: The third replica is placed on another node within rack B. Placing it on the same rack as the second helps balance the network load between racks and reduces inter-rack traffic.

Hadoop's default replication strategy is designed to optimize load distribution, enhance fault tolerance, and improve network efficiency. With the default replication factor of three, rack C holds no copy of this particular block, although other blocks of datafile.text may be placed there. This way, datafile.text is replicated in a way that balances performance and reliability.
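The placement rule described above (one replica on the writer's rack, two on a single other rack) can be sketched as follows. The topology, node names, and function are hypothetical, and the real HDFS placement policy also weighs factors such as free space and node load that this sketch ignores.

```python
import random


def place_three_replicas(topology, writer_rack, rng=random):
    """Pick (rack, node) targets: one on the writer's rack, two on one other rack."""
    first = (writer_rack, rng.choice(topology[writer_rack]))
    # Choose a single remote rack, then two different nodes inside it.
    remote_rack = rng.choice([rack for rack in topology if rack != writer_rack])
    second_node, third_node = rng.sample(topology[remote_rack], 2)
    return [first, (remote_rack, second_node), (remote_rack, third_node)]


# Hypothetical three-rack cluster with three DataNodes per rack.
topology = {
    "A": ["a1", "a2", "a3"],
    "B": ["b1", "b2", "b3"],
    "C": ["c1", "c2", "c3"],
}

replicas = place_three_replicas(topology, writer_rack="A", rng=random.Random(0))
print(replicas)
```

Whichever remote rack is picked, the block ends up on exactly two racks, so it survives the loss of either rack while only one copy has to cross the inter-rack link during the write.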

22. You are working on a Hadoop-based application that interacts with files using LocalFileSystem. How will you handle data integrity with a checksum?

Using checksums in Hadoop's LocalFileSystem ensures data integrity by:

- Generating checksums: Automatically creating checksums for data chunks during file writes.

- Storing checksums: Saving checksums in a hidden `.filename.crc` file in the same directory.

- Verifying checksums: Comparing stored checksums with actual data during reads.

- Detecting mismatches: Raising `ChecksumException` in case of discrepancies.

By following these steps, we can ensure that the application maintains data integrity and promptly detects any potential data corruption.

23. Suppose you're debugging a large-scale Hadoop job spread across multiple nodes, and there are unusual cases affecting the output. How would you deal with that?

Here's what we can do:

- Log potential issues: Use debug statements to log the potential problems to `stderr`.

- Update task status: Include error log locations in task status messages.

- Custom counters: Implement counters to track and analyze unusual conditions.

- Map output debugging: Write debug information to the map’s output.

- Log analysis program: Create a MapReduce program for detailed log analysis.

Following these steps, we can systematically debug large-scale Hadoop jobs and effectively track and resolve unusual cases affecting the output.
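As an illustration of the logging, status, and counter steps, here is a minimal sketch of a Hadoop Streaming style mapper in Python. Hadoop Streaming treats stderr lines of the form `reporter:counter:<group>,<counter>,<amount>` and `reporter:status:<message>` as counter and status updates. The record format (`name,number`), the `DataQuality` group, and the function name are assumptions made for this example.

```python
import io


def run_mapper(lines, out, err):
    """Emit key/value pairs; report malformed records via counters and status."""
    bad = 0
    for line in lines:
        fields = line.rstrip("\n").split(",")
        if len(fields) != 2 or not fields[1].strip().isdigit():
            bad += 1
            # Hadoop Streaming parses these specially formatted stderr lines.
            err.write("reporter:counter:DataQuality,MalformedRecords,1\n")
            err.write(f"reporter:status:skipped malformed record: {line.strip()!r}\n")
            continue
        # Normal output: tab-separated key and value.
        out.write(f"{fields[0].strip()}\t{fields[1].strip()}\n")
    return bad


# Local dry run with in-memory streams instead of a cluster.
out, err = io.StringIO(), io.StringIO()
bad_records = run_mapper(["alice,3\n", "broken line\n", "bob,7\n"], out, err)
print(out.getvalue(), err.getvalue(), bad_records)
```

In an actual streaming job the same function would be called with `sys.stdin`, `sys.stdout`, and `sys.stderr`. The counter totals then show how often the unusual case occurs across all nodes without anyone having to read every task log.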

24. You're computing memory settings for a Hadoop cluster using YARN. Explain how you would set the memory for the NodeManager and MapReduce jobs.

When configuring memory settings for a Hadoop cluster using YARN, it’s important to balance the memory allocation between Hadoop daemons, system processes, and the NodeManager to optimize performance and resource utilization.

Setting memory for the NodeManager:

- Calculate the node's total physical memory: Determine the total physical memory available on each node in the cluster. For example, if a node has 64 GB of RAM, its total physical memory is 64,000 MB.

- Deduct memory for Hadoop daemons: Allocate memory for the Hadoop daemons running on the node, such as the DataNode and NodeManager. Typically, 1000 to 2000 MB per daemon is sufficient. For instance, if you allocate 1500 MB each for the DataNode and NodeManager, you need to reserve 3000 MB.

- Reserve memory for system processes: Set aside memory for other system processes and background services to ensure the operating system and essential services run smoothly. A typical reservation might be 2 GB (2000 MB).

- Allocate remaining memory for NodeManager: Subtract the reserved memory for Hadoop daemons and system processes from the total physical memory to determine the memory available for the NodeManager to allocate to containers.

Setting memory for MapReduce jobs:

- Adjust `mapreduce.map.memory.mb`: This parameter defines the amount of memory allocated for each map task. Adjust this setting based on the memory requirements of your map tasks.

  - For example, setting `mapreduce.map.memory.mb` to 2048 MB means each map task will be allocated 2 GB of memory.

- Adjust `mapreduce.reduce.memory.mb`: This parameter defines the amount of memory allocated for each reduce task. Adjust this setting based on the memory requirements of your reduce tasks.

  - For example, setting `mapreduce.reduce.memory.mb` to 4096 MB means each reduce task will be allocated 4 GB of memory.

- Configure container size: Ensure the container size is set appropriately in `yarn-site.xml` with parameters like `yarn.nodemanager.resource.memory-mb`, which should align with the memory allocated to the NodeManager.

  - For instance, if the NodeManager has 59,000 MB available, you might set `yarn.nodemanager.resource.memory-mb` to 59,000 MB.
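The arithmetic in the steps above can be checked with a short calculation. The function names are illustrative, and the container counts are an upper bound that ignores other YARN limits such as vcores and scheduler minimum or maximum allocation settings.

```python
def nodemanager_memory_mb(total_mb, daemon_mb_each, daemon_count, system_mb):
    """Memory left for YARN containers after daemons and the OS are reserved."""
    return total_mb - daemon_mb_each * daemon_count - system_mb


def max_containers(available_mb, container_mb):
    """How many containers of a given size fit on one node by memory alone."""
    return available_mb // container_mb


# Example values from the steps above: 64,000 MB node, two daemons at 1,500 MB
# each (DataNode and NodeManager), and 2,000 MB for system processes.
available = nodemanager_memory_mb(
    64_000, daemon_mb_each=1_500, daemon_count=2, system_mb=2_000
)
map_containers = max_containers(available, 2_048)     # mapreduce.map.memory.mb
reduce_containers = max_containers(available, 4_096)  # mapreduce.reduce.memory.mb
print(available, map_containers, reduce_containers)
```

With these example values, a node offers 59,000 MB to YARN, which is enough for at most 28 map containers or 14 reduce containers at a time.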

Summary of memory configuration:

| Component | Configuration | Example Values |
| --- | --- | --- |
| NodeManager | Total physical memory | 64,000 MB |
| | Memory for Hadoop daemons | 3000 MB |
| | Memory for system processes | 2000 MB |
| | Available memory for NodeManager | 59,000 MB |
| MapReduce map task | mapreduce.map.memory.mb | 2048 MB |
| MapReduce reduce task | mapreduce.reduce.memory.mb | 4096 MB |
| Container size | yarn.nodemanager.resource.memory-mb | 59,000 MB |

Final Thoughts

After understanding these questions, you should know basic terms and have a strong command of the complexities of real-life Hadoop applications. In addition to this, you must adapt to new projects built on Hadoop to stay relevant and ace your interviews.

You can also expand your knowledge of other big data frameworks, such as Spark and Flink, as they are generally faster and have lower latency than Hadoop MapReduce.

But if you’re looking for a structured learning path to master big data, you can check out the following resources:

- Big Data Fundamentals with PySpark: This course is an excellent starting point for learning the basics of big data processing with PySpark.

- Big Data with PySpark: This track provides a comprehensive introduction to big data concepts using PySpark, covering aspects like data manipulation, machine learning, and more.


FAQs

What additional skills should I learn to complement my Hadoop knowledge?

To enhance your career prospects, learn additional big data frameworks like Apache Spark and Apache Kafka. Understanding SQL and NoSQL databases such as MySQL and MongoDB is beneficial. Proficiency in Python, Java, and Scala, along with data warehousing tools like Apache Hive, is essential. Machine learning frameworks like TensorFlow and PyTorch can also be valuable.

How can I gain practical experience in Hadoop?

Gain practical experience by enrolling in online courses and certifications on platforms like DataCamp. Participate in real-world projects on GitHub or Kaggle. Look for internships at companies working with big data. Engage in hackathons and coding competitions. Contributing to open-source Hadoop projects is another effective way to gain experience and showcase your skills.

What are the career paths available for a Hadoop professional?

Career paths for Hadoop professionals include roles such as big data engineer, data scientist, data analyst, Hadoop administrator, ETL developer, and solutions architect. Each role involves different aspects of designing, managing, and analyzing large-scale data processing systems using Hadoop and related technologies.

Is it easy to get a Hadoop job as a fresher, or do I need to be experienced?

There's no doubt Hadoop interviews are hard to crack. However, it doesn't matter whether you're a fresher or an experienced professional. All you need is a strong understanding of theory and practical knowledge.

What are the common challenges faced by Hadoop professionals in their careers?

Common challenges include ensuring data security, optimizing Hadoop cluster performance, integrating with various data sources, keeping up with rapid technology advancements, and managing and scaling clusters. Efficient resource management to avoid bottlenecks is also critical. Addressing these challenges helps advance your career as a Hadoop professional.

I'm a content strategist who loves simplifying complex topics. I’ve helped companies like Splunk, Hackernoon, and Tiiny Host create engaging and informative content for their audiences.