
Data engineering has become one of the most important roles in the tech industry, driving the backbone of data infrastructure for companies worldwide. As companies increasingly rely on data-driven decision-making, the demand for skilled data engineers continues to rise.

If you are preparing for a data engineering interview, you should be well-versed in general and technical questions that evaluate your experience, problem-solving abilities, and technical skills.

In this blog post, we provide a comprehensive guide to help you prepare for different stages of a data engineering interview, from the initial HR screening to in-depth technical evaluations.


Initial HR Data Engineer Interview Questions

In the initial HR interview round, the HR manager will question you about your work experience and the value you bring to the company. This stage is designed to assess your background, interpersonal skills, and overall fit for the company culture.

1. What makes you the best candidate for this position?

If the hiring manager selects you for a phone interview, they must have seen something they liked in your profile. Approach this question with confidence and talk about your experience and career growth.

It is important to review the company’s profile and job description before the interview. Doing so will help you understand what the hiring manager is looking for and tailor your response accordingly.

Focus on specific skills and experiences aligning with the job requirements, such as designing and managing data pipelines, modeling, and ETL processes. Highlight how your unique combination of skills, experience, and knowledge makes you stand out.

2. What are the daily responsibilities of a data engineer?

While there is no absolute answer, sharing your experiences from previous jobs and referring to the job description can provide a comprehensive response. Generally, the daily responsibilities of data engineers include:

- Developing, testing, and maintaining databases.

- Creating data solutions based on business requirements.

- Data acquisition and integration.

- Developing, validating, and maintaining data pipelines for ETL processes, modeling, transformation, and serving.

- Deploying and managing machine learning models in some cases.

- Maintaining data quality by cleaning, validating, and monitoring data streams.

- Improving system reliability, performance, and quality.

- Following data governance and security guidelines to ensure compliance and data integrity.

3. What is the toughest thing you find about being a data engineer?

This question will vary based on individual experiences, but common challenges include:

- Keeping up with the rapid pace of technological advancements and integrating new tools to enhance the performance, security, reliability, and ROI of data systems.

- Understanding and implementing complex data governance and security protocols.

- Managing disaster recovery plans and ensuring data availability and integrity during unforeseen events.

- Balancing business requirements with technical constraints and predicting future data demands.

- Handling large volumes of data efficiently and ensuring data quality and consistency.

4. What data tools or frameworks do you have experience with? Are there any you prefer over others?

Your answer will be based on your experiences. Being familiar with modern tools and third-party integrations will help you confidently respond to this question. Discuss tools related to:

- Database management (e.g., MySQL, PostgreSQL, MongoDB)

- Data warehousing (e.g., Amazon Redshift, Google BigQuery, Snowflake)

- Data orchestration (e.g., Apache Airflow, Prefect)

- Data pipelines (e.g., Apache Kafka, Apache NiFi)

- Cloud management (e.g., AWS, Google Cloud Platform, Microsoft Azure)

- Data cleaning, modeling, and transformation (e.g., pandas, dbt, Spark)

- Batch and real-time processing (e.g., Apache Spark, Apache Flink)

Remember, there is no wrong answer to this question. The interviewer is assessing your skills and experience.

5. How do you stay updated with the latest trends and advancements in data engineering?

This question evaluates your commitment to continuous learning and staying current in your field.

You can mention subscribing to industry newsletters, following influential blogs, participating in online forums and communities, attending webinars and conferences, and taking online courses. Highlight specific sources or platforms you use to stay informed.

6. Can you describe a time when you had to collaborate with a cross-functional team to complete a project?

Data engineering often involves working with various teams, including data scientists, analysts, and IT staff.

Share a specific example where you successfully collaborated with others, emphasizing your communication skills, ability to understand different perspectives, and how you contributed to the project's success. Explain the challenges you faced and how you overcame them to achieve the desired outcome.


Technical Data Engineer Interview Questions

Data engineering is highly technical, so it’s no surprise that most of your interview process will involve technical questions and exercises. In this section, we will cover different types of technical questions and answers, focusing on beginner, Python, SQL, project-based, and management questions.

Junior data engineer interview questions

The junior engineering interviews focus on tools, Python, and SQL queries. They may also involve questions about database management and ETL processes, including coding challenges and take-home tests.

When companies hire fresh graduates, they want to ensure you can handle their data and systems effectively.

7. Can you explain the design schemas relevant to data modeling?

There are three primary data modeling design schemas: star, snowflake, and galaxy.

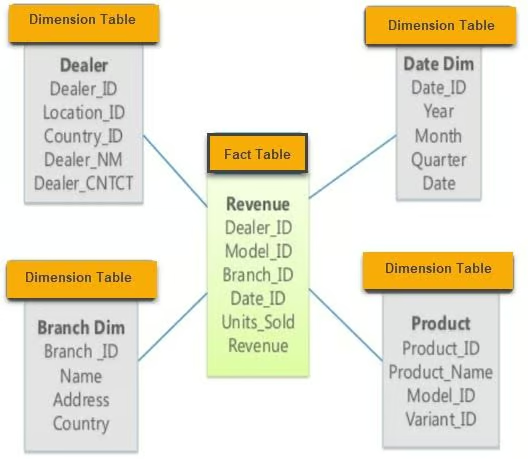

- Star schema: This schema contains various dimension tables connected to a central fact table. It is simple and easy to understand, making it suitable for straightforward queries.

Star schema example. Image from guru99

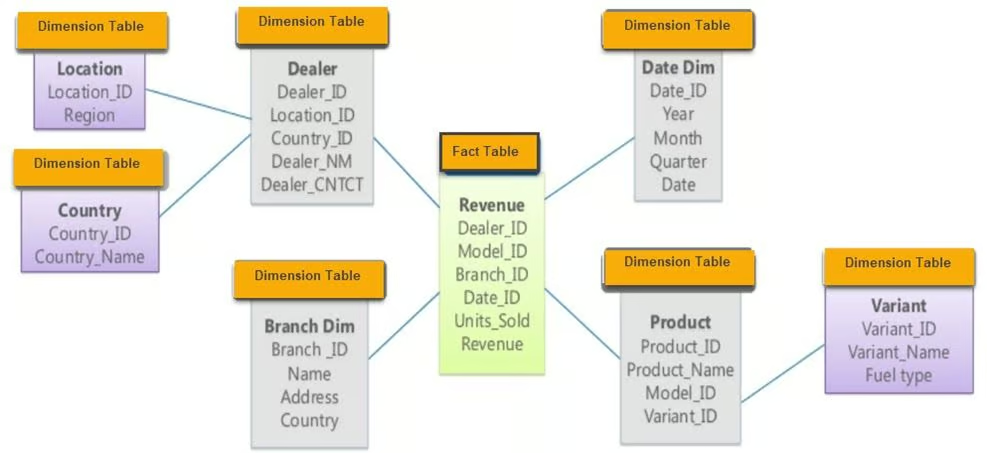

- Snowflake schema: An extension of the star schema, the snowflake schema consists of a fact table and multiple dimension tables with additional layers of normalization, forming a snowflake-like structure. It reduces redundancy and improves data integrity.

Snowflake schema example. Image from guru99

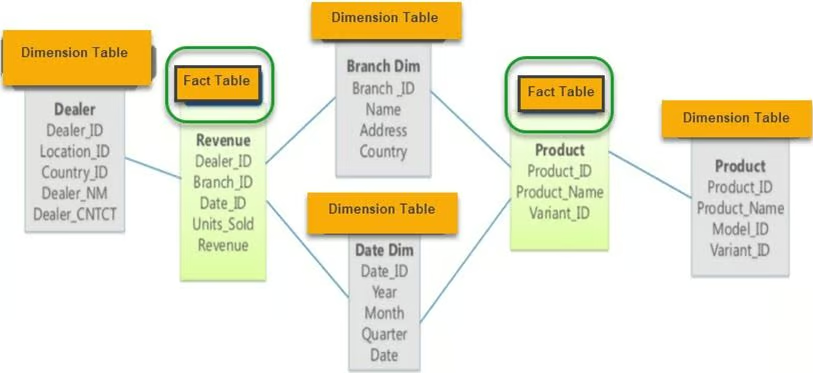

- Galaxy schema: Also known as a fact constellation schema, it contains two or more fact tables that share dimension tables. This schema is suitable for complex database systems that require multiple fact tables.

Galaxy schema example. Image from guru99
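To make the star schema concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (fact_sales, dim_product, dim_store) and the sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_store   (store_id   INTEGER PRIMARY KEY, city TEXT);
    -- The central fact table holds measures plus one foreign key per dimension.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        store_id   INTEGER REFERENCES dim_store(store_id),
        amount     REAL
    );
    INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
    INSERT INTO dim_store   VALUES (10, 'Lisbon'), (20, 'Oslo');
    INSERT INTO fact_sales  VALUES (1, 10, 12.5), (2, 10, 40.0), (1, 20, 7.5);
""")

# A typical star-schema query: join the fact table to a dimension and aggregate.
sales_by_category = conn.execute("""
    SELECT p.category, SUM(f.amount) AS total
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(sales_by_category)
```

A snowflake schema would further split a dimension such as dim_product into normalized sub-tables, and a galaxy schema would add a second fact table that reuses the same dimensions.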

8. Which ETL tools have you worked with? What is your favorite, and why?

When answering this question, mention the ETL tools you have mastered and explain why you chose specific tools for certain projects. Discuss the pros and cons of each tool and how they fit into your workflow. Popular open-source tools include:

- dbt (data build tool): Great for transforming data in your warehouse using SQL.

- Apache Spark: Excellent for large-scale data processing and batch processing.

- Apache Kafka: Used for real-time data pipelines and streaming.

- Airbyte: An open-source data integration tool that helps in data extraction and loading.

If you need to refresh your ETL knowledge, consider taking the Introduction to Data Engineering course.

9. What is data orchestration, and what tools can you use to perform it?

Data orchestration is an automated process for accessing raw data from multiple sources, performing data cleaning, transformation, and modeling techniques, and serving it for analytical tasks. It ensures that data flows smoothly between different systems and stages of processing.

Popular tools for data orchestration include:

- Apache Airflow: Widely used for scheduling and monitoring workflows.

- Prefect: A modern orchestration tool with a focus on data flow.

- Dagster: An orchestration tool designed for data-intensive workloads.

- AWS Glue: A managed ETL service that simplifies data preparation for analytics.
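The core idea behind these tools, running tasks in dependency order, can be sketched with Python's standard library alone. This is a simplified illustration and not how any of the tools above is implemented; the task names and the run_pipeline helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can run.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "transform": {"clean"},
    "load": {"transform"},
    "report": {"load"},
}

def run_pipeline(tasks, deps):
    """Run every task once, in an order that respects the dependencies."""
    executed = []
    for name in TopologicalSorter(deps).static_order():
        tasks[name]()
        executed.append(name)
    return executed

# Placeholder task bodies; real tasks would read, transform, and write data.
log = []
tasks = {name: (lambda n=name: log.append(n)) for name in dependencies}

order = run_pipeline(tasks, dependencies)
print(order)
```

Production orchestrators add scheduling, retries, logging, and monitoring on top of this dependency graph.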

10. What tools do you use for analytics engineering?

Analytics engineering involves transforming processed data, applying statistical models, and visualizing it through reports and dashboards.

Popular tools for analytics engineering include:

- dbt (data build tool): This is used to transform data in your warehouse using SQL.

- BigQuery: A fully managed, serverless data warehouse for large-scale data analytics.

- Postgres: A powerful, open-source relational database system.

- Metabase: An open-source tool that lets you ask questions about your data and display the answers in understandable formats.

- Looker Studio (formerly Google Data Studio): This is used to create dashboards and visual reports.

- Tableau: A leading platform for data visualization.

These tools help access, transform, and visualize data to derive meaningful insights and support decision-making processes.

11. What is the difference between OLAP and OLTP systems?

OLAP (Online Analytical Processing) analyzes historical data and supports complex queries. It’s optimized for read-heavy workloads and is often used in data warehouses for business intelligence tasks. OLTP (Online Transaction Processing) is designed for managing real-time transactional data. It’s optimized for write-heavy workloads and is used in operational databases for day-to-day business operations.

The main difference lies in their purpose: OLAP supports decision-making, while OLTP supports daily operations.
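The two access patterns can be contrasted in a small sketch with Python's built-in sqlite3 module. A single SQLite database is neither a real OLTP nor a real OLAP system; the orders table and its rows are hypothetical and only illustrate the shape of each workload.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

# OLTP-style work: many small writes, each wrapped in a short transaction.
for customer, amount in [("ana", 20.0), ("ben", 35.0), ("ana", 15.0)]:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO orders (customer, amount) VALUES (?, ?)",
            (customer, amount),
        )

# OLAP-style work: one read-only query that scans and aggregates many rows.
totals = conn.execute(
    "SELECT customer, COUNT(*), SUM(amount) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
print(totals)
```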

If you still have doubts, I recommend reading the OLTP vs OLAP blog post.

Python data engineer interview questions

Python is the most popular language in data engineering due to its versatility and the rich ecosystem of libraries available for data processing, analysis, and automation. Here are some questions related to Python that you might encounter in a data engineering interview.

12. Which Python libraries are most efficient for data processing?

The most popular data processing libraries in Python include:

- pandas: Ideal for data manipulation and analysis, providing data structures like DataFrames.

- NumPy: Essential for numerical computations, supporting large multi-dimensional arrays and matrices.

- Dask: Facilitates parallel computing and can handle larger-than-memory computations using a familiar pandas-like syntax.

- PySpark: A Python API for Apache Spark, useful for large-scale data processing and real-time analytics.

Each of these libraries has pros and cons, and the choice depends on the specific data requirements and the scale of the data processing tasks.

13. How do you perform web scraping in Python?

Web scraping in Python typically involves the following steps:

1. Access the webpage using the requests library:

```python
import requests
from bs4 import BeautifulSoup

url = 'http://example.com'
response = requests.get(url)
soup = BeautifulSoup(response.text, 'html.parser')
```

2. Extract tables and information using BeautifulSoup:

```python
tables = soup.find_all('table')
```

3. Convert it into a structured format using pandas:

```python
import pandas as pd

data = []
for table in tables:
    rows = table.find_all('tr')
    for row in rows:
        cols = row.find_all('td')
        cols = [ele.text.strip() for ele in cols]
        data.append(cols)

df = pd.DataFrame(data)
```

4. Clean the data using pandas and NumPy:

```python
df.dropna(inplace=True)  # Drop missing values
```

5. Save the data in the form of a CSV file:

```python
df.to_csv('scraped_data.csv', index=False)
```

In some cases, pandas.read_html can simplify the process:

```python
df_list = pd.read_html('http://example.com')
df = df_list[0]  # Assuming the table of interest is the first one
```

14. How do you handle large datasets in Python that do not fit into memory?

Handling large datasets that do not fit into memory requires using tools and techniques designed for out-of-core computation:

- Dask: Allows for parallel computing and works with larger-than-memory datasets using a pandas-like syntax.

```python
import dask.dataframe as dd

df = dd.read_csv('large_dataset.csv')
```

- PySpark: Enables distributed data processing, which is useful for handling large-scale data.

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('data_processing').getOrCreate()
df = spark.read.csv('large_dataset.csv', header=True, inferSchema=True)
```

- Chunking with pandas: Read large datasets in chunks.

```python
import pandas as pd

chunk_size = 10000
for chunk in pd.read_csv('large_dataset.csv', chunksize=chunk_size):
    process(chunk)  # Replace with your processing function
```

15. How do you ensure your Python code is efficient and optimized for performance?

To ensure Python code is efficient and optimized for performance, consider the following practices:

- Profiling: Use profiling tools like `cProfile`, `line_profiler`, or `memory_profiler` to identify bottlenecks in your code.

```python
import cProfile

cProfile.run('your_function()')
```

- Vectorization: Use `numpy` or `pandas` for vectorized operations instead of loops.

```python
import numpy as np

data = np.array([1, 2, 3, 4, 5])
result = data * 2  # Vectorized operation
```

- Efficient data structures: Choose appropriate data structures (e.g., lists, sets, dictionaries) based on your use case.

```python
data_dict = {'key1': 'value1', 'key2': 'value2'}  # Faster lookups compared to lists
```

- Parallel processing: Utilize multi-threading or multi-processing for tasks that can be parallelized.

```python
from multiprocessing import Pool

def process_data(data_chunk):
    # Your processing logic here; doubling each value is only a placeholder
    return [value * 2 for value in data_chunk]

if __name__ == '__main__':  # Needed on platforms that spawn worker processes
    data_chunks = [[1, 2], [3, 4], [5, 6]]  # Placeholder input
    with Pool(processes=4) as pool:
        results = pool.map(process_data, data_chunks)
```

- Avoiding redundant computations: Cache results of expensive operations if they need to be reused.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def expensive_computation(x):
    # Perform expensive computation; squaring is only a placeholder
    return x ** 2
```

16. How do you ensure data integrity and quality in your data pipelines?

Data integrity and quality are important for reliable data engineering. Best practices include:

- Data validation: Implement checks at various stages of the data pipeline to validate data formats, ranges, and consistency.

```python
def validate_data(df):
    assert df['age'].min() >= 0, "Age cannot be negative"
    assert df['salary'].dtype == 'float64', "Salary should be a float"
    # Additional checks...
```

- Data cleaning: Use libraries like `pandas` to clean and preprocess data by handling missing values, removing duplicates, and correcting errors.

```python
df.dropna(inplace=True)  # Drop missing values
df.drop_duplicates(inplace=True)  # Remove duplicates
```

- Automated testing: Develop unit tests for data processing functions using frameworks like `pytest`.

```python
import pandas as pd
import pytest

def test_clean_data():
    raw_data = pd.DataFrame({'age': [25, -3], 'salary': ['50k', '60k']})
    clean_data = clean_data_function(raw_data)  # Your own cleaning function
    assert clean_data['age'].min() >= 0
    assert clean_data['salary'].dtype == 'float64'
```

- Monitoring and alerts: Set up monitoring for your data pipelines to detect anomalies and send alerts when data quality issues arise.

```python
# Airflow 2.x import paths; older releases used
# airflow.operators.dummy_operator and airflow.operators.email_operator
from airflow import DAG
from airflow.operators.empty import EmptyOperator
from airflow.operators.email import EmailOperator

# Define your DAG and tasks...
```

17. How do you handle missing data in your datasets?

Handling missing data is a common task in data engineering. Approaches include:

- Removal: Simply remove rows or columns with missing data if they are not significant.

```python
df.dropna(inplace=True)
```

- Imputation: Fill missing values with statistical measures (mean, median) or use more sophisticated methods like KNN imputation.

```python
# Assign the result back; calling fillna(inplace=True) on a selected column is unreliable in recent pandas
df['column'] = df['column'].fillna(df['column'].mean())
```

- Indicator variable: Add an indicator variable to specify which values were missing.

```python
df['column_missing'] = df['column'].isnull().astype(int)
```

- Model-based imputation: Use predictive modeling to estimate missing values.

```python
import pandas as pd
from sklearn.impute import KNNImputer

imputer = KNNImputer(n_neighbors=5)
df = pd.DataFrame(imputer.fit_transform(df), columns=df.columns)  # Numeric columns only
```

18. How do you handle API rate limits when fetching data in Python?

To handle API rate limits, there are strategies such as:

- Backoff and retry: Implementing exponential backoff when rate limits are reached.

- Pagination: Fetching data in smaller chunks using the API’s pagination options.

- Caching: Storing responses to avoid redundant API calls.

Example using Python's time library and the requests module:

```python
import time
import requests

def fetch_data_with_rate_limit(url):
    for attempt in range(5):  # Retry up to 5 times
        response = requests.get(url)
        if response.status_code == 429:  # Too many requests
            time.sleep(2 ** attempt)  # Exponential backoff
        else:
            return response.json()
    raise Exception("Rate limit exceeded")
```

Python is undoubtedly one of the most important languages in data engineering. You can hone your skills by taking our Data Engineer in Python track, which covers a comprehensive curriculum to equip you with modern data engineering concepts, programming languages, tools, and frameworks.

SQL data engineer interview questions

The SQL coding stage is a significant part of the data engineering hiring process. Practicing various simple and complex scripts can help you prepare. Interviewers may ask you to write queries for data analytics, common table expressions, ranking, adding subtotals, and temporary functions.

19. What are Common Table Expressions (CTEs) in SQL?

CTEs are named, temporary result sets defined with a WITH clause that the main query can reference. They simplify complex joins and subqueries and make SQL queries more readable and maintainable. Here’s a query, written with a subquery, that displays all students with Science majors and grade A:

```sql
SELECT *
FROM class
WHERE id IN (
    SELECT DISTINCT id
    FROM students
    WHERE grade = 'A'
      AND major = 'Science'
);
```

Using a CTE, the query becomes:

```sql
WITH temp AS (
    SELECT id
    FROM students
    WHERE grade = 'A'
      AND major = 'Science'
)
SELECT *
FROM class
WHERE id IN (SELECT id FROM temp);
```

CTEs can be used for more complex problems, and multiple CTEs can be chained together.
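As a hedged illustration of chaining, the sketch below runs two CTEs in sequence against an in-memory SQLite database from Python. The column layout of students and class and the sample rows are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE students (id INTEGER, grade TEXT, major TEXT);
    CREATE TABLE class (id INTEGER, name TEXT);
    INSERT INTO students VALUES
        (1, 'A', 'Science'), (2, 'B', 'Science'), (3, 'A', 'Arts');
    INSERT INTO class VALUES (1, 'Ada'), (2, 'Bo'), (3, 'Cy');
""")

rows = conn.execute("""
    WITH science AS (        -- first CTE: filter by major
        SELECT id, grade FROM students WHERE major = 'Science'
    ),
    top_science AS (         -- second CTE builds on the first
        SELECT id FROM science WHERE grade = 'A'
    )
    SELECT c.id, c.name
    FROM class AS c
    JOIN top_science AS t ON t.id = c.id
""").fetchall()
print(rows)
```

Each CTE reads like a named step, which is what makes long queries easier to review than deeply nested subqueries.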

20. How do you rank the data in SQL?

Data engineers commonly rank values based on parameters such as sales and profit. The RANK() function is used to rank data based on a specific column:

```sql
SELECT
    id,
    sales,
    RANK() OVER (ORDER BY sales DESC) AS sales_rank
FROM bill;
```

RANK() leaves gaps in the ranking after tied values. Alternatively, you can use DENSE_RANK(), which does not skip subsequent ranks when values are tied.
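The difference between the two functions is easiest to see on tied values. The sketch below assumes a hypothetical bill table with two equal sales figures and uses Python's sqlite3 module, which supports window functions when the bundled SQLite is version 3.25 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE bill (id INTEGER, sales INTEGER);
    INSERT INTO bill VALUES (1, 500), (2, 500), (3, 300), (4, 100);
""")

rows = conn.execute("""
    SELECT id,
           sales,
           RANK()       OVER (ORDER BY sales DESC) AS sales_rank,
           DENSE_RANK() OVER (ORDER BY sales DESC) AS sales_dense_rank
    FROM bill
    ORDER BY sales DESC, id
""").fetchall()

for row in rows:
    print(row)
```

After the two tied rows, RANK() jumps to 3 while DENSE_RANK() continues with 2.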

21. Can you create a simple temporary function and use it in an SQL query?

Like in Python, you can create functions in SQL to make your queries more elegant and avoid repetitive CASE statements. The syntax varies by dialect; the example below uses BigQuery-style syntax to define a temporary function get_gender:

```sql
CREATE TEMPORARY FUNCTION get_gender(type STRING) RETURNS STRING AS (
    CASE
        WHEN type = 'M' THEN 'male'
        WHEN type = 'F' THEN 'female'
        ELSE 'n/a'
    END
);

SELECT
    name,
    get_gender(type) AS gender
FROM class;
```

This approach makes your SQL code cleaner and more maintainable.

22. How do you add subtotals in SQL?

Adding subtotals can be achieved by combining GROUP BY with ROLLUP. Here’s an example:

```sql
SELECT
    department,
    product,
    SUM(sales) AS total_sales
FROM sales_data
GROUP BY ROLLUP(department, product);
```

This query returns one row per department and product combination, a subtotal for each department, and a grand total at the end.
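To see which rows ROLLUP produces, the sketch below builds the same result by hand with UNION ALL. It uses SQLite through Python, and SQLite does not support ROLLUP, so this is an emulation rather than the query above; the sample rows and the level column are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales_data (department TEXT, product TEXT, sales INTEGER);
    INSERT INTO sales_data VALUES
        ('Toys', 'Kite', 10), ('Toys', 'Ball', 5), ('Books', 'Atlas', 20);
""")

raw = conn.execute("""
    SELECT department, product, SUM(sales) AS total_sales, 0 AS level
    FROM sales_data GROUP BY department, product   -- detail rows
    UNION ALL
    SELECT department, NULL, SUM(sales), 1
    FROM sales_data GROUP BY department            -- subtotal per department
    UNION ALL
    SELECT NULL, NULL, SUM(sales), 2
    FROM sales_data                                -- grand total
""").fetchall()

# Sort in Python: details, then each department's subtotal, grand total last.
raw.sort(key=lambda r: (r[3] == 2, r[0] or "", r[3], r[1] or ""))
result = [(dept, product, total) for dept, product, total, _ in raw]

for row in result:
    print(row)
```

Rows where product is NULL are department subtotals, and the row where both columns are NULL is the grand total, which mirrors how ROLLUP marks its summary rows.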

23. How do you handle missing data in SQL?

Handling missing data is essential for maintaining data integrity. Common approaches include:

- Using `COALESCE()`: This function returns the first non-null value in the list.

```sql
SELECT id, COALESCE(salary, 0) AS salary FROM employees;
```

- Using `CASE` statements: To handle missing values conditionally.

```sql
SELECT id,
    CASE
        WHEN salary IS NULL THEN 0
        ELSE salary
    END AS salary
FROM employees;
```

24. How do you perform data aggregation in SQL?

Data aggregation involves using aggregate functions like SUM(), AVG(), COUNT(), MIN(), and MAX(). Here’s an example:

```sql
SELECT department,
    SUM(salary) AS total_salary,
    AVG(salary) AS average_salary,
    COUNT(*) AS employee_count
FROM employees
GROUP BY department;
```

25. How do you optimize SQL queries for better performance?

To optimize SQL queries, you can:

- Use indexes on frequently queried columns to speed up lookups.

- Avoid `SELECT *` by specifying only the required columns.

- Use joins wisely and avoid unnecessary ones.

- Simplify subqueries by replacing them with CTEs when appropriate.

- Analyze query execution plans to identify bottlenecks. Example:

```sql
EXPLAIN ANALYZE
SELECT customer_id, COUNT(order_id)
FROM orders
GROUP BY customer_id;
```

Solving SQL coding exercises is the best way to practice and revise forgotten concepts. You can assess your SQL skills by taking DataCamp’s Data Analysis in SQL test (you will need an account to access this assessment).
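For a quick local experiment with the indexing and execution-plan advice from question 25, the sketch below compares SQLite's query plan before and after adding an index. It uses SQLite's EXPLAIN QUERY PLAN rather than EXPLAIN ANALYZE, and the orders table, the index name, and the plan helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER)"
)
conn.executemany(
    "INSERT INTO orders (customer_id) VALUES (?)",
    [(i % 100,) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

query = "SELECT order_id FROM orders WHERE customer_id = 42"

before = plan(query)  # Without an index, SQLite scans the whole table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # With the index, SQLite searches it instead

print(before)
print(after)
```

The exact plan text differs between SQLite versions, but the first plan should report a scan and the second should name the index.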

Project-related data engineer interview questions

After general rounds of interviews, you will usually progress into a technical stage which consists of coding challenges, problem-solving, database system design on a whiteboard, a take-home exam, and analytical questions.

This stage can be quite intense, so knowing some of the usual data engineering interview questions and answers can help you ace the interview.

26. Walk me through a project you worked on from start to finish.

This answer should come naturally if you have previously worked on a data engineering project as a student or a professional. That being said, preparing ahead of time is always helpful. Here's how to structure your response:

- Introduction and business problem:

- Start by explaining the context of the project. Describe the business problem you were solving and the project's goals.

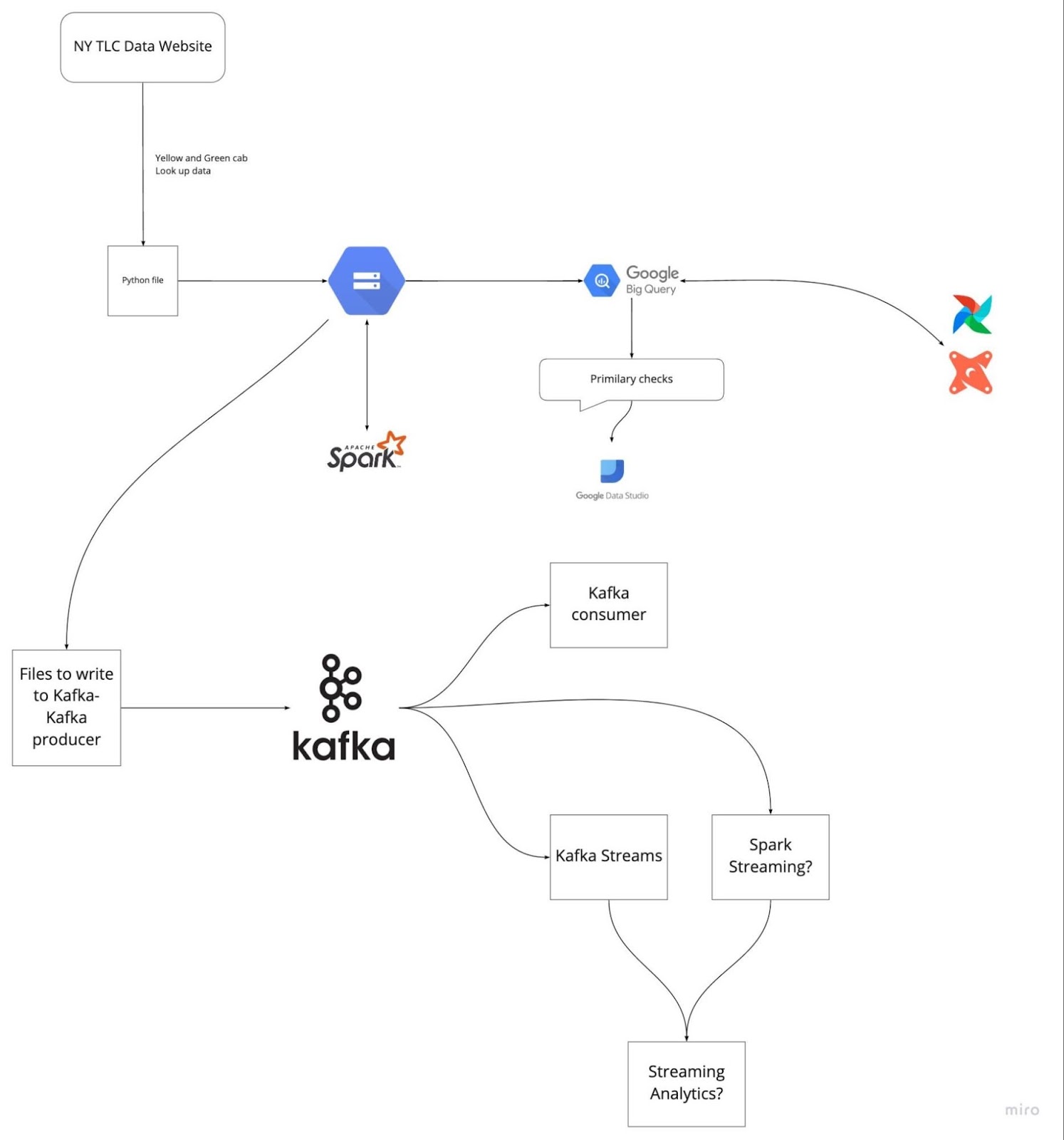

- Example: "In this project, we aimed to optimize the data pipeline for processing TLC Trip Record data to improve query performance and data accuracy for the analytics team."

- Data ingestion:

- Describe how you accessed and ingested the raw data.

- Example: "We ingested the raw TLC Trip Record data using GCP, Airflow, and PostgreSQL to ensure reliable data intake from multiple sources."

- Data processing and transformation:

- Explain the steps taken to clean, transform, and structure the data.

- Example: "We used Apache Spark for batch processing and Apache Kafka for real-time streaming to handle the data transformation. The data was cleaned, validated, and converted into a structured format suitable for analysis."

- Data storage and warehousing:

- Discuss the data storage solutions used and why they were chosen.

- Example: "The processed data was stored in Google BigQuery, which provided a scalable and efficient data warehousing solution. Airflow was used to manage the data workflows."

- Analytical engineering:

- Highlight the tools and methods used for analytical purposes.

- Example: "We used dbt (data build tool), BigQuery, PostgreSQL, Google Data Studio, and Metabase for analytical engineering. These tools helped in creating robust data models and generating insightful reports and dashboards."

- Deployment and cloud environment:

- Mention the deployment strategies and cloud infrastructure used.

- Example: "The entire project was deployed using GCP, Terraform, and Docker, ensuring a scalable and reliable cloud environment."

- Challenges and solutions:

- Discuss any challenges you faced and how you overcame them.

- Example: "One of the main challenges was handling the high volume of data in real-time. We addressed this by optimizing our Kafka streaming jobs and implementing efficient Spark transformations."

- Results and Impact:

- Conclude by describing the results and impact of the project.

- Example: "The project significantly improved the query performance and data accuracy for the analytics team, leading to faster decision-making and better insights."

Image from DataTalksClub/data-engineering-zoomcamp

Preparing ahead by reviewing the last five projects you have worked on can help you avoid freezing during the interview. Understand the problem statement and the solutions you implemented. Practice explaining each step clearly and concisely.

Data engineer manager interview questions

For engineering manager positions, the questions normally relate to decision-making, business understanding, curating and maintaining datasets, compliance, and security policies.

27. What is the difference between a data warehouse and an operational database?

A data warehouse serves historical data for data analytics tasks and decision-making. It supports high-volume analytical processing, such as Online Analytical Processing (OLAP). Data warehouses are designed to handle complex queries that access many rows and are optimized for read-heavy operations. They typically serve fewer concurrent users than operational systems and are designed to retrieve large volumes of data quickly.

Operational databases, which run Online Transaction Processing (OLTP) workloads, manage dynamic datasets in real time. They support high-volume transaction processing for thousands of concurrent clients, making them suitable for day-to-day operations. The data usually consists of current, up-to-date information about business transactions and operations. OLTP systems are optimized for write-heavy operations and fast query processing.

28. Why do you think every firm using data systems requires a disaster recovery plan?

Disaster management is the responsibility of a data engineering manager. A disaster recovery plan ensures that data systems can be restored and continue to operate in the event of a cyber-attack, hardware failure, natural disaster, or other catastrophic events. Relevant aspects include:

- Real-time backup: Regularly backing up files and databases to secure, offsite storage locations.

- Data redundancy: Implementing data replication across different geographical locations to ensure availability.

- Security protocols: Establishing protocols to monitor, trace, and restrict both incoming and outgoing traffic to prevent data breaches.

- Recovery procedures: Detailed procedures for restoring data and systems quickly and efficiently to minimize downtime.

- Testing and drills: Regularly testing the disaster recovery plan through simulations and drills to ensure its effectiveness and make necessary adjustments.

29. How do you approach decision-making when leading a data engineering team?

As a data engineering manager, decision-making involves balancing technical considerations with business objectives. Some approaches include:

- Data-driven decisions: Using data analytics to inform decisions, ensuring they are based on objective insights rather than intuition.

- Stakeholder collaboration: Working closely with stakeholders to understand business requirements and align data engineering efforts with company goals.

- Risk assessment: Evaluating potential risks and their impact on projects and developing mitigation strategies.

- Agile methodologies: Implementing agile practices to adapt to changing requirements and deliver value incrementally.

- Mentorship and development: Supporting team members' growth by providing mentorship and training opportunities and fostering a collaborative environment.

30. How do you handle compliance with data protection regulations in your data engineering projects?

Compliance with data protection regulations involves several practices, for example:

- Understanding regulations: Staying updated on data protection regulations such as GDPR, CCPA, and HIPAA.

- Data governance framework: Implementing a robust data governance framework that includes policies for data privacy, security, and access control.

- Data encryption: Encrypting sensitive data both at rest and in transit to prevent unauthorized access.

- Access controls: Implementing strict access controls so that only authorized personnel can access sensitive data.

- Audits and monitoring: Regularly conducting audits and monitoring data access and usage to detect and address any compliance issues promptly.

31. Can you describe a challenging data engineering project you managed?

When discussing a challenging project, you can focus on the following aspects:

- Project scope and objectives: Clearly define the project's goals and the business problem it aimed to solve.

- Challenges encountered: Describe specific challenges such as technical limitations, resource constraints, or stakeholder alignment issues.

- Strategies and solutions: Explain your methods to overcome these challenges, including technical solutions, team management practices, and stakeholder engagement.

- Outcomes and impact: Highlight the successful outcomes and the impact on the business, such as improved data quality, enhanced system performance, or increased operational efficiency.

32. How do you evaluate and implement new data technologies?

Evaluating and implementing new data technologies involves:

- Market research: Keeping abreast of the latest advancements and trends in data engineering technologies.

- Proof of concept (PoC): Conducting PoC projects to test the feasibility and benefits of new technologies within your specific context.

- Cost-benefit analysis: Assessing the costs, benefits, and potential ROI of adopting new technologies.

- Stakeholder buy-in: Presenting findings and recommendations to stakeholders to secure buy-in and support.

- Implementation plan: Developing a detailed implementation plan that includes timelines, resource allocation, and risk management strategies.

- Training and support: Providing training and support to the team to ensure a smooth transition to new technologies.

33. How do you prioritize tasks and projects in a fast-paced environment?

An effective way to prioritize tasks is based on their impact on business objectives and urgency. You can use frameworks like the Eisenhower Matrix to categorize tasks into four quadrants: urgent and important, important but not urgent, urgent but not important, and neither. Additionally, communicate with stakeholders to align priorities and ensure the team focuses on high-value activities.
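The four quadrants can be expressed as a small helper for sorting a backlog. This is only a sketch: the action labels ("do first", "schedule", "delegate", "drop") are commonly used shorthand for the quadrants rather than something defined above, and the backlog items are hypothetical.

```python
def eisenhower_quadrant(urgent: bool, important: bool) -> str:
    """Map a task's urgency and importance to one of the four quadrants."""
    if urgent and important:
        return "do first"   # urgent and important
    if important:
        return "schedule"   # important but not urgent
    if urgent:
        return "delegate"   # urgent but not important
    return "drop"           # neither

# Hypothetical backlog entries: (task, urgent, important)
backlog = [
    ("Rename old dashboard tabs", False, False),
    ("Design new warehouse schema", False, True),
    ("Fix failing nightly load", True, True),
    ("Answer ad hoc export request", True, False),
]

QUADRANT_ORDER = ["do first", "schedule", "delegate", "drop"]
prioritized = sorted(
    backlog,
    key=lambda task: QUADRANT_ORDER.index(eisenhower_quadrant(task[1], task[2])),
)

for name, urgent, important in prioritized:
    print(eisenhower_quadrant(urgent, important), "-", name)
```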

FAANG Data Engineer Interview Questions

In this section, we explore the most frequently asked data engineering interview questions by Facebook, Amazon, and Google managers for data engineering positions.

Facebook Data Engineer Interview Questions

34. Why do we use clusters in Kafka, and what are their benefits?

A Kafka cluster consists of multiple brokers, and the partitions of each topic are distributed and replicated across them. This architecture provides scalability and fault tolerance without downtime. If a broker goes down, the other brokers that hold replicas of its partitions can take over and deliver the same services, ensuring high availability.

The Kafka cluster architecture comprises Topics, Brokers, ZooKeeper (replaced by KRaft in newer Kafka versions), Producers, and Consumers. It efficiently handles data streams for big data applications, enabling the creation of robust data-driven applications.
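The fault-tolerance idea can be illustrated with a toy model of partition replicas spread across brokers. This is not Kafka's API or its actual placement and leader-election logic; the round-robin placement, the "first surviving replica becomes leader" rule, and all names below are simplifying assumptions.

```python
def assign_replicas(num_partitions, brokers, replication_factor):
    """Place each partition's replicas on consecutive brokers (round-robin)."""
    return {
        p: [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
        for p in range(num_partitions)
    }

def leaders(assignment, alive):
    """Pick the first live replica of each partition as its leader."""
    return {
        p: next((b for b in replicas if b in alive), None)
        for p, replicas in assignment.items()
    }

brokers = ["broker-0", "broker-1", "broker-2"]
assignment = assign_replicas(num_partitions=3, brokers=brokers, replication_factor=2)

healthy = leaders(assignment, alive=set(brokers))
degraded = leaders(assignment, alive={"broker-1", "broker-2"})  # broker-0 is down

print(healthy)
print(degraded)
```

With a replication factor of 2, every partition still has a leader after one broker fails, which is the property that lets a cluster keep serving traffic.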

35. What issues does Apache Airflow resolve?

Apache Airflow allows you to manage and schedule pipelines for analytical workflows, data warehouse management, and data transformation and modeling. It provides:

- Pipeline management: A platform to define, schedule, and monitor workflows.

- Centralized logging: Monitor execution logs in one place.

- Error handling: Callbacks to send failure alerts to communication platforms like Slack and Discord.

- User interface: A user-friendly UI for managing and visualizing workflows.

- Integration: Robust integrations with various tools and systems.

- Open source: It is free to use and widely supported by the community.

Amazon Data Engineer Interview Questions

36. You’re given an IP address as input as a string. How would you find out if it is a valid IP address or not?

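To determine the validity of an IPv4 address, you can split the string on “.” and validate each segment: there must be exactly four segments, and each must be a decimal number from 0 to 255 with no leading zeros. Here is a Python function to accomplish this:

```python
def is_valid(ip):
    parts = ip.split(".")
    # An IPv4 address has exactly four segments
    if len(parts) != 4:
        return False
    for part in parts:
        # Each segment must contain only ASCII digits (this also rejects empty segments)
        if not (part.isascii() and part.isdigit()):
            return False
        # Reject leading zeros such as "050" or "00"
        if len(part) > 1 and part[0] == "0":
            return False
        # Each segment must be in the range 0 to 255
        if int(part) > 255:
            return False
    return True

A = "255.255.11.135"
B = "255.050.11.5345"

print(is_valid(A))  # True
print(is_valid(B))  # False
```

37. What are the various modes in Hadoop?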

Hadoop mainly works in three modes:

- Standalone mode: This mode is used for debugging purposes. It does not use HDFS and relies on the local file system for input and output.

- Pseudo-distributed mode: This is a single-node cluster in which the NameNode and DataNode reside on the same machine. It is primarily used for testing and development.

- Fully distributed mode: This is a production-ready mode in which the data is distributed across multiple nodes, with separate nodes for the master (NameNode) and slave (DataNode) daemons.

Google Data Engineer Interview Questions

38. How would you handle duplicate data points in an SQL query?

To handle duplicates in SQL, you can use the DISTINCT keyword to return only unique rows, or delete duplicate rows using ROWID with the MAX or MIN function in databases that expose a row identifier (for example, Oracle). Here are examples:

Using DISTINCT:

SELECT DISTINCT Name, ADDRESS
FROM CUSTOMERS
ORDER BY Name;

Deleting duplicates using ROWID:

DELETE FROM Employee
WHERE ROWID NOT IN (
    SELECT MAX(ROWID)
    FROM Employee
    GROUP BY Name, ADDRESS
);

39. Given a list of n-1 integers, these integers are in the range of 1 to n. There are no duplicates in the list. One of the integers is missing in the list. Can you write efficient code to find the missing integer?

This common coding challenge can be solved using a mathematical approach:

def search_missing_number(list_num):
    # The list holds every integer from 1 to len(list_num) + 1 except one
    n = len(list_num)
    # Calculate the sum of the first n + 1 natural numbers
    total = (n + 1) * (n + 2) // 2
    # Calculate the sum of all elements in the list
    sum_of_L = sum(list_num)
    # Return the difference, which is the missing number (works for unsorted input too)
    return total - sum_of_L

# Validation
num_list = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13]
print("The missing number is", search_missing_number(num_list))  # The missing number is 12

How to Prepare for the Data Engineer Interview

Before appearing for an interview, you should review all the concepts and standard terms used in data engineering. Here are some of the most important areas to focus on:

- Master SQL: You should practice creating, modifying, and managing databases. Moreover, you should master data analytics, modeling, and transformation.

- Solve coding challenges: Solve Python, Scala, or C++ coding challenges. Most companies assess programming skills by giving take-home exams and live coding challenges.

- Design an ETL pipeline: Practice creating data, ETL, or delivery pipelines. You must understand how to test, validate, scale, and maintain data pipelines.

- Analytics engineering: Practice loading, transforming, and analyzing data. Learn to create a dashboard for data quality and system performance.

- Review potential questions: Prepare for the interview by reviewing sample mock questions. A simple Google search will give you access to hundreds of questions.

- Learn about modern data engineering tools: Even if you don’t have experience with modern data engineering tools, you should know how they work and how they integrate with other tools. Companies are always looking for better tools to improve performance at a lower cost.

- Learn batch processing and streaming: Apache Spark is widely used for batch processing, and Apache Kafka is widely used for data streaming. These tools are in high demand, and knowing them will help you land a job at top companies.

- Environment: Sometimes, the interviewer will ask about cloud computing (GCP, AWS, Azure), Docker, scripting, Terraform, and Kubernetes. You can use these tools to set up cloud or on-premises compute and storage resources. Understanding these technologies and integrating them into portfolio projects is a good practice.

Preparing for a data engineering interview can be challenging, but with the right resources and practice, you can excel and land your dream job. To further enhance your skills and knowledge, check out these valuable resources:

- Understanding Data Engineering: Gain a comprehensive understanding of data engineering concepts and principles.

- Data Engineer in Python: Master data engineering with Python through this career track, covering essential tools and frameworks.

- Database Design: Learn the fundamentals of database design to create efficient and scalable databases.

Take advantage of these courses to solidify your foundation and stay ahead in your data engineering career. Good luck with your interviews!

FAQs

What can I expect from a data engineer interview?

You can expect an HR phone screen, technical phone screen, take-home exam, coding challenge, on-site interview, whiteboard database and system designs, SQL interview, and finally, the “executive” interview to check cultural fit.

Some companies have three stages of interviews, while others have as many as nine. Organizations often set a high barrier to entry and test candidates at every level.

Are data engineers in demand?

Yes. Every company that generates data needs data engineers to build pipelines and to manage and deliver data to various departments. According to weforum.org, we will be producing 463 exabytes of data per day by 2025, and more and more engineers will be needed to extract, transform, and manage that data and the pipelines and systems behind it.

Do data engineers write code?

Yes. Everyone working in the IT field should learn to write code, even at the managerial level. For data engineers, Python, SQL, and Bash are essential languages, along with tools and formats such as Docker and YAML. They are used in infrastructure as code, pipelines, database management, streaming, web scraping, data processing, modeling, and analytics.

What is the difference between a data analyst and data engineer?

A data engineer collects, transforms, and prepares data so that data analysts can extract valuable business insights. Data engineers manage entire database systems and make sure they provide high-quality data for analysis tasks such as analytical reports, dashboards, customer research, and forecasting.

What does a data engineer actually do?

Data engineers acquire data from multiple sources; create, validate, and maintain data pipelines; transform data using algorithms; perform analytics engineering; ensure compliance with data governance and security; and maintain entire database systems. They are responsible for providing high-quality data streams to various departments in a company. You can learn more about data engineering by reading the What is Data Engineering? blog post.

What skills do you need to be a data engineer?

You need coding, data warehousing, ETL (Extract, Transform, Load), SQL, data analytics and modeling, critical thinking, and communication skills. Data engineering is learned through experience and overcoming complex challenges in the field. Our Data Engineering Certification is the perfect undertaking to build your skills and land a data engineer role.
