Kubernetes has become the default technology for managing containerized applications in modern cloud environments. As organizations shift towards microservices and cloud-native architectures, Kubernetes provides the building blocks they need to automate deployment, scale applications, and keep them highly available.

Kubernetes is an open-source container orchestrator that simplifies the complex process of managing containers at scale. It abstracts infrastructure concerns, enabling developers to focus on building applications rather than worrying about the underlying servers.

Understanding Kubernetes architecture is essential for anyone looking to deploy and manage scalable, resilient, and production-grade applications.

Today, I want to take a deeper look at Kubernetes architecture, aimed at intermediate-level learners who are already familiar with basic Kubernetes concepts.

What is Kubernetes?

Kubernetes is a powerful distributed system designed to orchestrate and manage containerized applications at scale. It enables organizations to deploy workloads across multiple physical or virtual machines, called Nodes, ensuring that applications remain available, scalable, and resilient to failures.

One of Kubernetes’ core strengths is its ability to handle dynamic workloads efficiently. By distributing Pods across nodes according to the resources each node has available, Kubernetes lets applications scale horizontally. If a node fails, Kubernetes replaces the Pods it was running with new ones on healthy nodes, minimizing downtime and maintaining performance.

If you are still a beginner with this technology, check out this Introduction to Kubernetes course to get started.

A brief history of Kubernetes

Google originally developed Kubernetes as an open-source successor to Borg, its internal cluster management system. Released in 2014, Kubernetes quickly became the industry standard for container orchestration, supported by a vast ecosystem of tools and cloud providers. Today, it is hosted by the Cloud Native Computing Foundation (CNCF), maintained by a large open-source community, and widely adopted across industries for managing cloud-native applications.

What is Kubernetes Architecture?

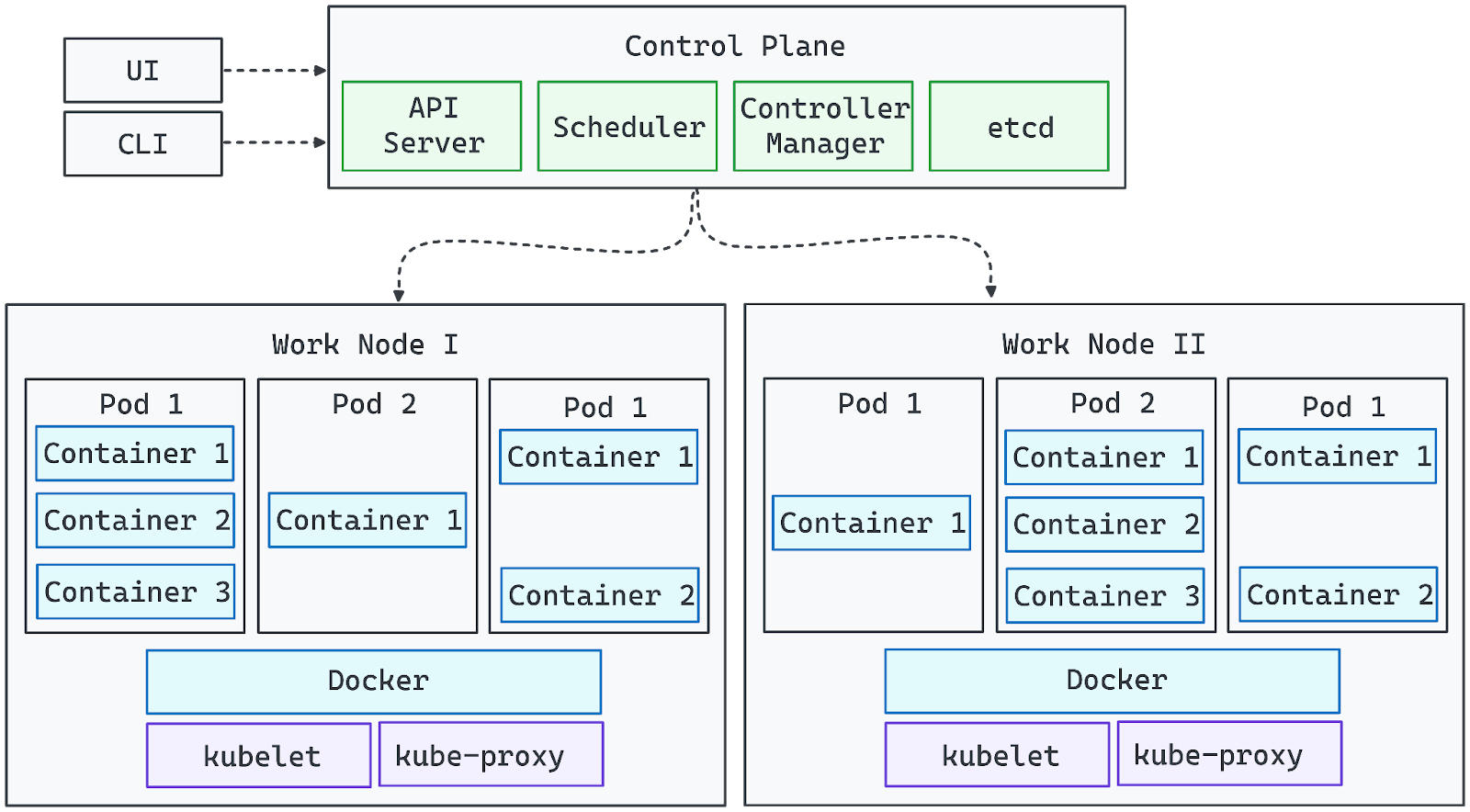

The following diagram visually represents Kubernetes' architecture:

Image by Author. The Kubernetes Architecture.

- The Control Plane components manage the cluster and ensure the desired state is maintained.

- The Worker Nodes execute workloads, running containers inside Pods.

- The API Server acts as the bridge between user interactions (via UI or CLI) and the cluster.

- Networking and storage add-ons extend Kubernetes capabilities for production environments.

With this foundation in place, we can now explore the detailed roles of each component in Kubernetes’ cluster architecture.

Core Components of Kubernetes Architecture

Kubernetes consists of two main layers: the Control Plane and Node Components. Let’s explore each in turn:

Control Plane

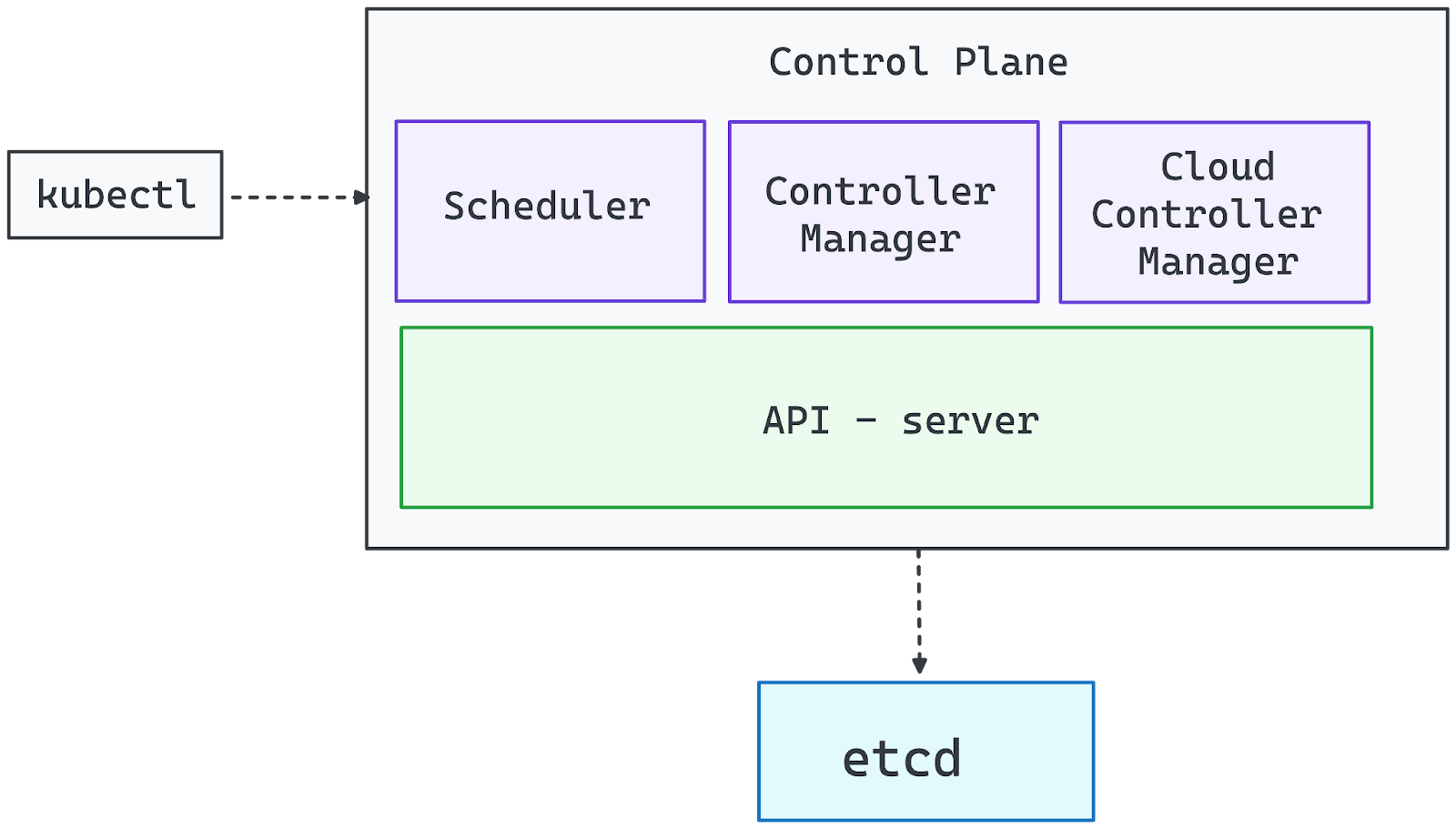

The Kubernetes Control Plane is the central management layer responsible for maintaining the cluster’s desired state, scheduling workloads, and handling automation. It ensures that applications run as expected by continuously monitoring cluster conditions and making necessary adjustments.

Image by Author. Control Plane components.

API Server (kube-apiserver)

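The API Server is the gateway to the Kubernetes cluster. It processes all management requests, whether from the CLI (kubectl), UI dashboards, or automation tools, and it is the only component that talks to etcd directly: the Scheduler, controllers, and kubelets all read and write cluster state through it. If the API Server goes down, workloads that are already running keep running, but administrators lose control over deployments and configurations, and the control plane can no longer react to changes such as a failed node.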

Controller Manager (kube-controller-manager)

Kubernetes operates on the controller pattern, where controllers monitor the system’s state and take corrective actions. The Controller Manager runs the built-in controllers in a single process, covering essential functions such as:

- Scaling workloads based on demand.

- Detecting failed nodes and evicting their Pods so that replacements can be scheduled elsewhere.

- Enforcing desired states (e.g., ensuring a Deployment always runs the specified number of replicas).
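
To make the controller pattern concrete, here is a minimal Python sketch of one reconciliation pass for a ReplicaSet-style controller. It is a simplification under stated assumptions: `ReplicaSetState`, `reconcile`, and the Pod naming scheme are hypothetical, and a real controller reacts to watch events from the API Server and creates Pods through it instead of editing a local list.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ReplicaSetState:
    """Hypothetical stand-in for what a controller reads from the API Server."""
    name: str
    desired_replicas: int
    pods: list = field(default_factory=list)


# Hypothetical naming scheme; real Pods get random name suffixes.
_pod_ids = itertools.count(1)


def reconcile(rs: ReplicaSetState) -> list:
    """Run one reconciliation pass and return the actions taken.

    The controller only compares desired and observed state and closes the
    gap; it does not need to know why the gap appeared.
    """
    actions = []
    gap = rs.desired_replicas - len(rs.pods)
    if gap > 0:
        # Too few Pods (e.g. some were lost with a failed node): create replacements.
        for _ in range(gap):
            pod = f"{rs.name}-{next(_pod_ids)}"
            rs.pods.append(pod)
            actions.append(f"create {pod}")
    elif gap < 0:
        # Too many Pods (e.g. after scaling down): delete the surplus.
        surplus = rs.pods[gap:]
        del rs.pods[gap:]
        actions.extend(f"delete {pod}" for pod in surplus)
    return actions


rs = ReplicaSetState("web", desired_replicas=3)
print(reconcile(rs))      # ['create web-1', 'create web-2', 'create web-3']
rs.pods.remove("web-2")   # simulate a Pod lost together with its node
print(reconcile(rs))      # ['create web-4']
print(reconcile(rs))      # [] because the state has already converged
```

Because the function only looks at the gap between desired and observed state, it handles a lost node, a manual deletion, and a scale-down the same way, which is what makes the pattern robust.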

Scheduler (kube-scheduler)

The Scheduler assigns Pods to worker nodes, considering factors like:

- Resource availability (CPU, memory).

- Node affinity and anti-affinity rules.

- Pod distribution to prevent overload.

It follows a filtering and scoring process to select the optimal node for each new Pod, ensuring balanced resource utilization.
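
The filtering and scoring steps can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not kube-scheduler's code: the `Node` and `PodSpec` classes are hypothetical, a plain label match stands in for node affinity, and the single score below only approximates a least-allocated strategy, whereas the real scheduler combines many weighted filter and score plugins.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    alloc_cpu_m: int    # allocatable CPU, millicores
    used_cpu_m: int     # CPU already requested by Pods on this node
    alloc_mem_mi: int   # allocatable memory, MiB
    used_mem_mi: int
    labels: dict = field(default_factory=dict)


@dataclass
class PodSpec:
    cpu_m: int
    mem_mi: int
    node_selector: dict = field(default_factory=dict)  # simplified affinity


def passes_filters(node: Node, pod: PodSpec) -> bool:
    """Filtering: drop nodes that cannot run the Pod at all."""
    fits = (node.alloc_cpu_m - node.used_cpu_m >= pod.cpu_m
            and node.alloc_mem_mi - node.used_mem_mi >= pod.mem_mi)
    labels_match = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and labels_match


def score(node: Node, pod: PodSpec) -> float:
    """Scoring: prefer the node with the largest share of resources left
    after placing the Pod (0-100), in the spirit of a least-allocated policy."""
    cpu_left = (node.alloc_cpu_m - node.used_cpu_m - pod.cpu_m) / node.alloc_cpu_m
    mem_left = (node.alloc_mem_mi - node.used_mem_mi - pod.mem_mi) / node.alloc_mem_mi
    return 100 * (cpu_left + mem_left) / 2


def schedule(pod: PodSpec, nodes: list) -> Optional[str]:
    feasible = [n for n in nodes if passes_filters(n, pod)]
    if not feasible:
        return None  # the real scheduler would leave the Pod Pending
    return max(feasible, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 2048, {"zone": "a"}),  # not enough free CPU
    Node("node-b", 4000, 1000, 8192, 6144, {"zone": "a"}),  # fits, little memory left
    Node("node-c", 4000, 1000, 8192, 2048, {"zone": "b"}),  # wrong zone
    Node("node-d", 4000, 2000, 8192, 1024, {"zone": "a"}),  # fits, most room left
]
pod = PodSpec(cpu_m=1000, mem_mi=1024, node_selector={"zone": "a"})
print(schedule(pod, nodes))  # node-d
```

Splitting the decision into a hard filter and a soft score is what lets the scheduler combine strict constraints (the Pod must fit) with preferences (spread the load).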

etcd (Distributed Key-Value Store)

etcd serves as Kubernetes’ single source of truth, storing all cluster data, including:

- Configuration settings.

- Secrets and credentials.

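- The desired and current state of every API object, such as Pods, Deployments, and Services.

Since anyone who can read or write etcd effectively controls the cluster, access to it must be tightly restricted, and Secrets should be encrypted at rest, because by default they are stored in etcd unencrypted. etcd also needs adequate hardware resources, especially low-latency disks, to maintain performance and reliability.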
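
Conceptually, the API Server stores each object in etcd under a key such as `/registry/pods/<namespace>/<name>`, and every write increases a cluster-wide revision that surfaces as the object's `resourceVersion`. The hypothetical `RevisionedStore` below is a toy in-memory model, not etcd's API, that shows why this matters: an update that carries the revision it read is rejected if someone else wrote first, so concurrent writers cannot silently overwrite each other.

```python
class RevisionedStore:
    """Hypothetical in-memory model of etcd's key space: every write bumps a
    global revision, and each key remembers the revision it was last modified at."""

    def __init__(self):
        self.revision = 0
        self._data = {}  # key -> (value, mod_revision)

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value, expected_mod_revision=None):
        """Write `value` under `key`. With `expected_mod_revision`, the write only
        succeeds if nobody has modified the key since it was read (compare-and-swap)."""
        if expected_mod_revision is not None:
            current = self._data.get(key)
            current_rev = current[1] if current else 0
            if current_rev != expected_mod_revision:
                return False  # conflict: the caller must re-read and retry
        self.revision += 1
        self._data[key] = (value, self.revision)
        return True

    def list_prefix(self, prefix):
        return sorted(k for k in self._data if k.startswith(prefix))


store = RevisionedStore()
store.put("/registry/pods/default/web-1", {"phase": "Pending"})
store.put("/registry/pods/default/web-2", {"phase": "Pending"})
store.put("/registry/deployments/default/web", {"replicas": 2})

_, rev = store.get("/registry/pods/default/web-1")
# Two writers read the same revision; only the first update is accepted.
first = store.put("/registry/pods/default/web-1", {"phase": "Running"}, expected_mod_revision=rev)
second = store.put("/registry/pods/default/web-1", {"phase": "Failed"}, expected_mod_revision=rev)
print(first, second)  # True False
print(store.list_prefix("/registry/pods/default/"))
```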

Cloud Controller Manager

For cloud-based Kubernetes deployments, the Cloud Controller Manager integrates the cluster with cloud provider services, handling:

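- Provisioning cloud load balancers for Services of type LoadBalancer.

- Initializing Node objects with cloud metadata (such as region, zone, and instance type) and removing them when the underlying virtual machines are deleted.

- Configuring routes in the cloud network so that Pods on different nodes can reach each other, where the network setup requires it.

Persistent volumes are provisioned by storage drivers (CSI plugins), and adding or removing nodes on demand is handled by the Cluster Autoscaler, not by the Cloud Controller Manager.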

Node components

Worker Nodes are the computational backbone of a Kubernetes cluster, responsible for running application workloads. Each node operates independently while maintaining constant communication with the control plane to ensure smooth orchestration.

Kubernetes dynamically schedules Pods onto worker nodes based on resource availability and workload demands. Nodes can be physical or virtual machines, and a production-ready cluster typically consists of multiple nodes to enable horizontal scaling and high availability. Each worker node includes the following essential components:

Kubelet: The node agent

Kubelet is the primary node-level agent that manages the execution of containers. It:

- Continuously communicates with the API Server to receive instructions.

- Ensures Pods are running as defined in their specifications.

- Pulls container images and starts the required containers.

- Monitors container health and restarts them if necessary.

Without a running Kubelet, the node stops reporting its status, the control plane eventually marks it as unhealthy, new Pods scheduled to it are not started, and existing ones are no longer monitored.
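
The "restarts them if necessary" behavior follows a documented back-off: after a container exits, the kubelet waits 10 seconds, then 20, 40, and so on, capped at five minutes (the familiar `CrashLoopBackOff` status), and resets the timer once a container has run for 10 minutes without problems. The sketch below models only that timing; the function names are hypothetical, and these defaults may differ in newer Kubernetes versions.

```python
def restart_delay(consecutive_crashes: int, initial: float = 10.0, cap: float = 300.0) -> float:
    """Seconds the kubelet waits before restarting a container that has
    crashed `consecutive_crashes` times in a row (1 = first crash)."""
    if consecutive_crashes < 1:
        raise ValueError("consecutive_crashes must be >= 1")
    # Double the delay after every crash, but never exceed the cap.
    return min(initial * 2 ** (consecutive_crashes - 1), cap)


def crashes_after_exit(previous_crashes: int, ran_for: float, reset_after: float = 600.0) -> int:
    """Update the crash counter when a container exits. A container that ran
    for at least `reset_after` seconds (10 minutes) starts a fresh back-off."""
    return 1 if ran_for >= reset_after else previous_crashes + 1


print([restart_delay(n) for n in range(1, 8)])
# [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```

Exponential back-off keeps a crashing container from hammering the node while still retrying it indefinitely.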

Kube-Proxy: Networking and load balancing

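Kube-Proxy implements Kubernetes Services on each node, so that traffic sent to a Service reaches one of the Pods behind it. It:

- Watches Services and their endpoints (the IP addresses of the matching, ready Pods) through the API Server.

- Programs network rules on the node (using iptables, IPVS, or nftables, depending on the mode) that translate a Service's virtual IP into a Pod IP.

- Load-balances connections across the Pods behind each Service, both for traffic from inside the cluster and for external clients reaching NodePort or LoadBalancer Services.

Direct Pod-to-Pod connectivity is provided by the network plugin (see the CNI add-ons below), not by Kube-Proxy. If Kube-Proxy stops running, the rules it has already programmed stay in place but are no longer updated, so Services on that node can send traffic to Pods that no longer exist, and newly created Services are unreachable from it.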
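
The sketch below models, in plain Python, what the rules Kube-Proxy programs achieve: a Service's virtual IP and port map to the addresses of ready Pods matching its selector, and each new connection picks one backend at random, as the iptables mode does. The data structures are hypothetical; in a real cluster the EndpointSlice controller computes the endpoints and Kube-Proxy only turns them into kernel rules.

```python
import random

# Hypothetical cluster state, as Kube-Proxy would learn it by watching the API Server.
pods = [
    {"ip": "10.244.1.5", "labels": {"app": "web"}, "ready": True},
    {"ip": "10.244.2.7", "labels": {"app": "web"}, "ready": True},
    {"ip": "10.244.2.9", "labels": {"app": "web"}, "ready": False},  # failing readiness probe
    {"ip": "10.244.1.8", "labels": {"app": "db"}, "ready": True},
]
services = [
    {"cluster_ip": "10.96.0.10", "port": 80, "target_port": 8080, "selector": {"app": "web"}},
]


def endpoints(selector, pods):
    """Ready Pods whose labels match every key/value pair of the selector."""
    return [p["ip"] for p in pods
            if p["ready"] and all(p["labels"].get(k) == v for k, v in selector.items())]


def build_rules(services, pods):
    """Map each Service's virtual IP and port to its backend Pod addresses."""
    return {
        (svc["cluster_ip"], svc["port"]): [f"{ip}:{svc['target_port']}"
                                           for ip in endpoints(svc["selector"], pods)]
        for svc in services
    }


def route(rules, dest_ip, dest_port, rng=random):
    """Pick a backend at random for a new connection to a Service."""
    backends = rules.get((dest_ip, dest_port))
    if not backends:
        # Unknown Service or no ready endpoints: the connection is rejected.
        raise ConnectionRefusedError(f"no ready endpoints for {dest_ip}:{dest_port}")
    return rng.choice(backends)


rules = build_rules(services, pods)
print(rules[("10.96.0.10", 80)])  # ['10.244.1.5:8080', '10.244.2.7:8080']
print(route(rules, "10.96.0.10", 80, random.Random(42)))
```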

Container Runtime: Running containers

The container runtime is the software that executes containerized applications within Pods. The kubelet talks to it through the Container Runtime Interface (CRI), so any CRI-compatible runtime can be used, including:

- containerd (most commonly used)

- CRI-O (lightweight and Kubernetes-native)

- Docker Engine (through the cri-dockerd adapter, since the built-in dockershim was removed in Kubernetes 1.24)

The runtime relies on Linux kernel features to isolate workloads: namespaces give each container its own view of processes, networking, and the filesystem, while cgroups enforce the CPU and memory limits set for it.
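
As an illustration of how resource settings reach cgroups, the kubelet converts a container's CPU request and limit into values it passes to the runtime through the CRI. The Python sketch below mirrors the cgroup v1 arithmetic (1024 `cpu.shares` per CPU for the request, a CFS quota per 100 ms period for the limit); the helper names are hypothetical, the quantity parser is simplified, and on cgroup v2 hosts these numbers are further translated into `cpu.weight` and `cpu.max`.

```python
SHARES_PER_CPU = 1024
MIN_SHARES, MAX_SHARES = 2, 262_144
CFS_PERIOD_US = 100_000   # 100 ms scheduling period
MIN_QUOTA_US = 1_000


def parse_cpu(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '1.5' to millicores (simplified parser)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def cpu_shares(request_millicores: int) -> int:
    """cgroup v1 cpu.shares from the CPU request: a relative weight used under contention."""
    if request_millicores == 0:
        return MIN_SHARES
    shares = request_millicores * SHARES_PER_CPU // 1000
    return max(MIN_SHARES, min(shares, MAX_SHARES))


def cfs_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """cgroup v1 CFS quota from the CPU limit: CPU time allowed per period (-1 = no limit)."""
    if limit_millicores == 0:
        return -1
    return max(limit_millicores * period_us // 1000, MIN_QUOTA_US)


print(cpu_shares(parse_cpu("250m")))   # 256
print(cfs_quota_us(parse_cpu("1.5")))  # 150000: 1.5 CPUs' worth of time every 100 ms
```

The split explains a common surprise: the request only sets a relative weight, while the limit is a hard cap that can throttle a container even when the node has idle CPU.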

How Worker Nodes Interact with the Control Plane

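- Nodes join the cluster by authenticating to the API Server (with kubeadm, typically using a bootstrap token issued by the control plane) and registering themselves as Node objects.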

- Once a node is registered, the scheduler assigns workloads based on its available resources.

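- The control plane continuously monitors node heartbeats. If a node stops reporting, its Pods are eventually evicted, and their controllers create replacements that the scheduler places on healthy nodes. If a node runs low on memory or disk, the kubelet itself can also evict Pods to protect it.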
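
Heartbeats and eviction can be sketched as a simple timeline. When a node stops reporting, the node controller marks its Ready condition as Unknown after a grace period and adds the `node.kubernetes.io/unreachable` taint; Pods tolerate that taint for 300 seconds by default and are evicted afterwards. The function below is a hypothetical approximation of that sequence, and the 40-second grace period is an assumption, since that default is a kube-controller-manager setting that has changed between versions.

```python
from enum import Enum


class NodePhase(Enum):
    READY = "Ready"
    UNREACHABLE = "Unknown, tainted node.kubernetes.io/unreachable"
    EVICTING = "Pods evicted, replacements scheduled elsewhere"


def node_phase(seconds_since_heartbeat: float,
               grace_period: float = 40.0,        # assumed node monitor grace period
               toleration_seconds: float = 300.0  # default Pod toleration for unreachable nodes
               ) -> NodePhase:
    """Approximate what the control plane does as a node's heartbeats go missing."""
    if seconds_since_heartbeat <= grace_period:
        return NodePhase.READY
    if seconds_since_heartbeat <= grace_period + toleration_seconds:
        # Pods stay bound to the node for now: it may be a short network partition.
        return NodePhase.UNREACHABLE
    return NodePhase.EVICTING


for t in (10, 120, 400):
    print(t, node_phase(t).value)
```

The delay is deliberate: evicting too quickly would reschedule every Pod on a node that was only briefly unreachable.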

By combining Kubelet, Kube-Proxy, and a container runtime, worker nodes form a scalable and resilient execution layer that powers Kubernetes applications.

Extending Kubernetes with add-ons

Kubernetes is designed to be highly extensible, allowing you to customize and enhance its functionality through add-ons. While the control plane and worker nodes form the core infrastructure, add-ons provide networking, storage, monitoring, and automation capabilities that make Kubernetes more powerful and production-ready.

Networking solutions

Kubernetes networking follows a plugin-based approach, requiring a Container Network Interface (CNI)-compatible plugin to enable seamless communication between Pods. Some widely used networking solutions include:

- Calico: Secure network policies and high-performance routing.

- Cilium: eBPF-based networking and security.

- Flannel: Lightweight and easy-to-configure overlay networking.

Most managed Kubernetes distributions come preconfigured with a networking solution, but self-managed clusters require manual installation of a CNI plugin.
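
Many CNI setups give every node its own slice of a cluster-wide Pod range, so that any Pod IP identifies the node hosting it. The sketch below uses Python's `ipaddress` module to carve per-node `/24` ranges out of `10.244.0.0/16` (a range commonly used with Flannel); the function names are hypothetical, and real plugins differ in how they allocate ranges and move packets between nodes.

```python
import ipaddress


def allocate_pod_cidrs(cluster_cidr: str, nodes: list, mask: int = 24) -> dict:
    """Give each node its own slice of the cluster-wide Pod range."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=mask)
    allocation = {}
    for node in nodes:
        subnet = next(subnets, None)
        if subnet is None:
            raise RuntimeError(f"{cluster_cidr} has no /{mask} ranges left for {node}")
        allocation[node] = str(subnet)
    return allocation


def node_for_pod_ip(allocation: dict, pod_ip: str):
    """Find the node that owns a Pod IP, i.e. where traffic for it must be sent."""
    ip = ipaddress.ip_address(pod_ip)
    for node, cidr in allocation.items():
        if ip in ipaddress.ip_network(cidr):
            return node
    return None


alloc = allocate_pod_cidrs("10.244.0.0/16", ["node-a", "node-b", "node-c"])
print(alloc)  # {'node-a': '10.244.0.0/24', 'node-b': '10.244.1.0/24', 'node-c': '10.244.2.0/24'}
print(node_for_pod_ip(alloc, "10.244.1.17"))  # node-b
```

With a `/16` cluster range and `/24` per node, the cluster tops out at 256 nodes of about 254 Pod IPs each, which is why these sizes are worth planning before the cluster is created.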

Storage solutions

Kubernetes provides dynamic storage provisioning through Storage Classes, allowing workloads to interact with various storage backends:

- Local storage: Uses a node’s filesystem.

- Cloud block storage: Integrates with cloud providers (e.g., AWS EBS, Azure Disk, Google Cloud Persistent Disk).

- Distributed storage: Solutions like Ceph (often deployed on Kubernetes with Rook) and Longhorn provide persistent, replicated storage across nodes.

Kubernetes also does not include a built-in container registry, so you need a separate registry (e.g., Docker Hub, Harbor, or Amazon ECR) to store and distribute container images.

Monitoring and observability

Effective monitoring and logging are essential for managing Kubernetes workloads. Popular solutions include:

- Prometheus: Metrics collection and alerting.

- Grafana: Visualization dashboards.

- ELK Stack (Elasticsearch, Logstash, Kibana): Log aggregation and analysis.

These tools help track cluster health, diagnose issues, and optimize performance.

Ingress controllers

Ingress controllers manage external access to applications inside the cluster, handling HTTP/HTTPS traffic and load balancing. Popular options include:

- NGINX Ingress Controller: Widely used for managing web traffic.

- Traefik: Dynamic routing with built-in Let’s Encrypt support.

- HAProxy Ingress: High-performance load balancing.
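
These controllers implement the same path-matching rules from the Ingress specification: a `Prefix` path matches whole path segments (so `/api` matches `/api/v2` but not `/apis`), the longest matching path wins, and `Exact` beats `Prefix` on a tie. The sketch below models only those rules; `Rule`, `pick_backend`, and the Service names are hypothetical, and host matching is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    path: str
    path_type: str  # "Exact" or "Prefix"
    backend: str    # hypothetical Service name


def _segments(path: str) -> list:
    return [s for s in path.split("/") if s]


def matches(rule: Rule, request_path: str) -> bool:
    if rule.path_type == "Exact":
        return request_path == rule.path
    # Prefix: compare whole segments, so /api matches /api/v2 but not /apis.
    prefix = _segments(rule.path)
    return _segments(request_path)[:len(prefix)] == prefix


def pick_backend(rules: list, request_path: str) -> Optional[str]:
    candidates = [r for r in rules if matches(r, request_path)]
    if not candidates:
        return None  # a real controller would fall back to its default backend
    # Longest path wins; on a tie, Exact is preferred over Prefix.
    best = max(candidates, key=lambda r: (len(r.path), r.path_type == "Exact"))
    return best.backend


rules = [
    Rule("/", "Prefix", "frontend"),
    Rule("/api", "Prefix", "api-v1"),
    Rule("/api/v2", "Prefix", "api-v2"),
    Rule("/api/v2/health", "Exact", "health-check"),
]
print(pick_backend(rules, "/api/v2/users"))   # api-v2
print(pick_backend(rules, "/apis"))           # frontend
print(pick_backend(rules, "/api/v2/health"))  # health-check
```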

Custom functionality with CRDs

Kubernetes allows users to extend its API through Custom Resource Definitions (CRDs), enabling the creation of custom objects and automation workflows.

Operators, which are custom controllers that watch these resources, can automate complex tasks, such as:

- Provisioning databases when a custom PostgresDatabaseConnection object is added.

- Managing security policies dynamically.

- Scaling applications based on custom metrics.

By leveraging CRDs, Kubernetes can be transformed into a fully customizable platform, adapting to specific business needs.
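
An operator is essentially a controller for a custom resource. The sketch below reconciles hypothetical `PostgresDatabaseConnection` objects (the example from the list above) against a fake database server: databases that are requested but missing get created, and databases with no matching object get dropped. `FakePostgres` and `reconcile_databases` are stand-ins, not a real operator framework or database client.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PostgresDatabaseConnection:
    """Hypothetical custom resource: a request for a database by name."""
    name: str
    database: str


class FakePostgres:
    """Stand-in for a real PostgreSQL server (hypothetical)."""
    def __init__(self):
        self.databases = set()


def reconcile_databases(custom_objects: list, server: FakePostgres) -> list:
    """Make the server's databases match the custom objects that exist."""
    desired = {obj.database for obj in custom_objects}
    actions = []
    for db in sorted(desired - server.databases):
        server.databases.add(db)
        actions.append(f"CREATE DATABASE {db}")
    for db in sorted(server.databases - desired):
        server.databases.discard(db)
        actions.append(f"DROP DATABASE {db}")
    return actions


server = FakePostgres()
objects = [
    PostgresDatabaseConnection("orders-conn", "orders"),
    PostgresDatabaseConnection("billing-conn", "billing"),
]
print(reconcile_databases(objects, server))  # ['CREATE DATABASE billing', 'CREATE DATABASE orders']
objects.pop()                                 # the billing object is deleted
print(reconcile_databases(objects, server))  # ['DROP DATABASE billing']
```

A production operator would not drop data this eagerly; it would typically rely on finalizers and an explicit retention policy before deleting anything.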

Kubernetes Cluster Architecture

Kubernetes introduces several abstraction layers to help define and manage applications efficiently. These abstractions simplify deployment, scaling, and management across distributed environments.

Master and worker nodes

A Kubernetes cluster consists of two main types of nodes:

- Control plane nodes (historically called master nodes): Responsible for orchestrating and managing the cluster. These nodes run the API Server, Scheduler, Controller Manager, and etcd to ensure workloads are deployed and maintained as expected.

- Worker nodes (Data Plane): Execute application workloads by running Pods, which contain containers. Each worker node runs the Kubelet, which manages its Pods, Kube-Proxy for Service networking, and a container runtime such as containerd or CRI-O.

Together, the control plane and worker nodes form a self-healing, scalable infrastructure where applications can run seamlessly. If you want to further understand containerization and virtualization, follow this expert-led Containerization and Virtualization track.

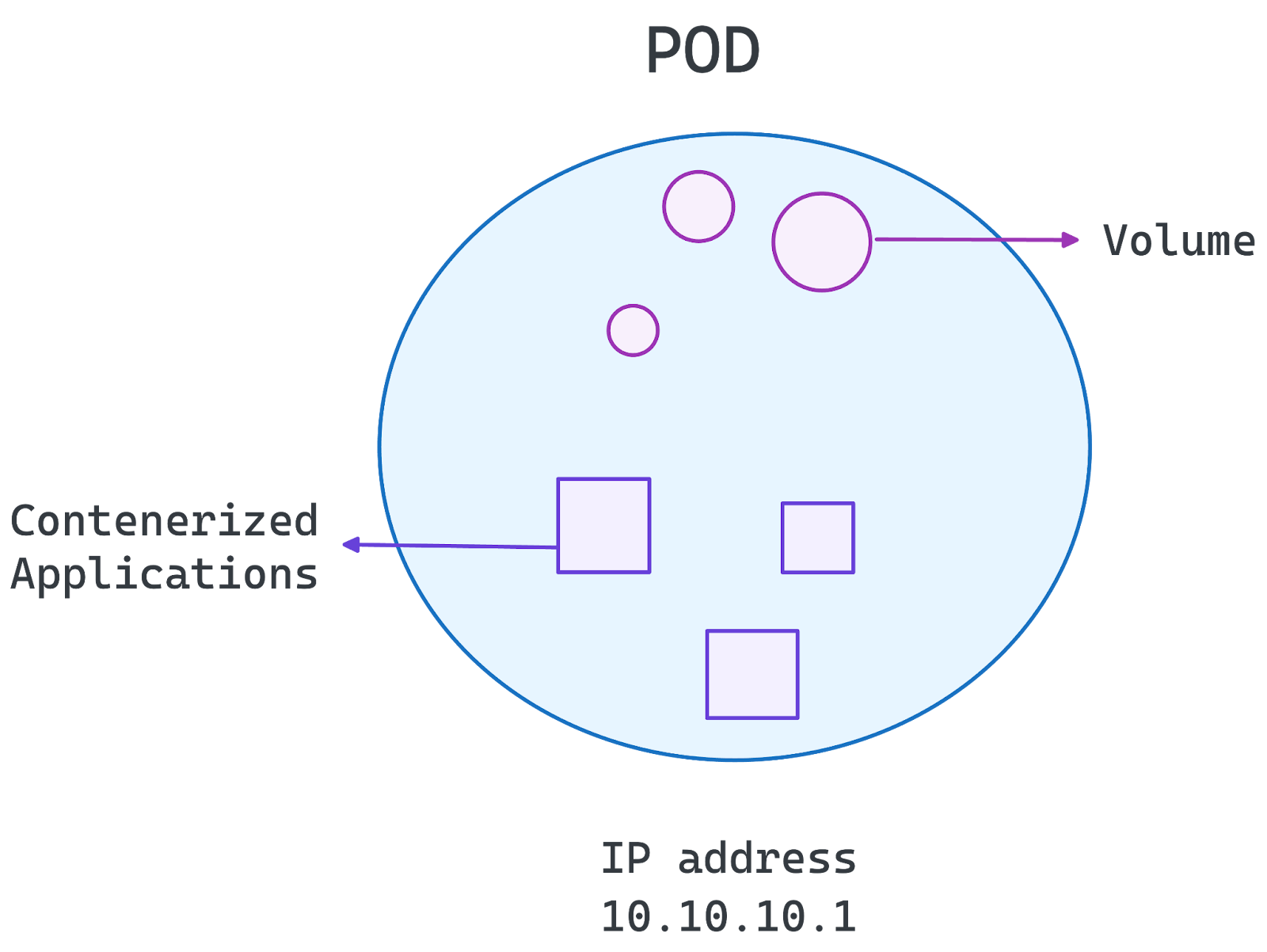

Pod architecture

At the core of Kubernetes is the Pod, which is the smallest deployable unit in the system. A Pod encapsulates one or more containers that share storage, networking, and configuration settings.

- Single-container Pods: The most common setup, where each Pod contains one container running a microservice.

- Multi-container Pods: Used when tightly coupled containers need to share resources and communicate efficiently.

Pods are ephemeral, meaning they can be rescheduled or restarted as needed. Kubernetes manages Pods through Deployments, StatefulSets, and DaemonSets, ensuring scalability and availability.

Image by Author. Pod’s Architecture.

Namespace and segmentation

Namespaces allow for logical segmentation within a Kubernetes cluster. They help:

- Isolate workloads for different teams, projects, or environments (e.g., dev, staging, production).

- Apply role-based access control (RBAC) to restrict permissions.

- Manage resource quotas to prevent excessive resource consumption.

By organizing workloads into namespaces, Kubernetes enables better security, resource management, and scalability within a single cluster.
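
The resource-quota point can be illustrated with a toy admission check. In Kubernetes, a ResourceQuota object caps a namespace's aggregate usage, and Pods that would push usage over the limit are rejected when they are created. The `NamespaceQuota` class below is a hypothetical simplification that tracks Pod count, CPU in millicores, and memory in MiB per namespace.

```python
class NamespaceQuota:
    """Hypothetical per-namespace quota, loosely modeled on a ResourceQuota."""

    def __init__(self, hard: dict):
        self.hard = hard                      # e.g. {"pods": 10, "requests.cpu": 4000}
        self.used = {name: 0 for name in hard}

    def admit(self, pod_requests: dict):
        """Admit a Pod only if every tracked resource stays within its hard limit."""
        delta = dict(pod_requests, pods=1)    # each Pod also counts against "pods"
        for name, amount in delta.items():
            if name in self.hard and self.used[name] + amount > self.hard[name]:
                return False, f"exceeded quota: {name}"
        # Only charge the quota once we know the whole Pod fits.
        for name, amount in delta.items():
            if name in self.hard:
                self.used[name] += amount
        return True, "admitted"


quotas = {
    "dev": NamespaceQuota({"pods": 5, "requests.cpu": 1000, "requests.memory": 2048}),
    "prod": NamespaceQuota({"pods": 50, "requests.cpu": 16000, "requests.memory": 65536}),
}
print(quotas["dev"].admit({"requests.cpu": 600, "requests.memory": 512}))   # (True, 'admitted')
print(quotas["dev"].admit({"requests.cpu": 600, "requests.memory": 512}))   # (False, 'exceeded quota: requests.cpu')
print(quotas["prod"].admit({"requests.cpu": 600, "requests.memory": 512}))  # (True, 'admitted')
```

Because each namespace has its own counters, a runaway deployment in `dev` cannot consume the capacity reserved for `prod`.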

Next, let's explore how Kubernetes architecture supports microservices deployments.

Kubernetes Microservices Architecture

Kubernetes is ideal for microservices, offering scalability, resilience, and automation for distributed applications. It enables:

- Automated scaling: Adjusts resources based on demand.

- Self-healing: Detects and replaces failed containers.

- Service discovery and load balancing: Efficient communication between microservices.

- Portability: Runs across cloud and on-premises environments.

- Declarative configuration: Uses YAML for predictable deployments.

A common question among professionals is whether to use Kubernetes or Docker Compose for microservices. If you are facing that choice, I recommend the guide Docker Compose vs Kubernetes to understand the trade-offs.

Best practices

Below, we’ve highlighted some of the best practices to adhere to:

- Namespaces for workload isolation: Organize and isolate workloads for better security and resource management.

- Kubernetes Services & Ingress for traffic management: Enable efficient communication between microservices and external clients.

- Autoscaling & Rolling Updates for seamless performance: Use Horizontal Pod Autoscaler and rolling deployments to maintain availability.

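- ConfigMaps & Secrets for configuration management: Keep non-sensitive settings in ConfigMaps and credentials in Secrets, and protect Secrets with RBAC and encryption at rest, since by default they are only base64-encoded.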

- Monitoring & Logging with Prometheus and Grafana: Gain insights and troubleshoot issues effectively with robust observability tools.
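
For the rolling-update practice, it helps to know how a Deployment's `maxSurge` and `maxUnavailable` settings (both 25% by default) bound a rollout: percentages are converted to Pod counts, rounding up for `maxSurge` and down for `maxUnavailable`. The Python sketch below reproduces that arithmetic, including the fallback to one unavailable Pod when both values round to zero; `rolling_update_bounds` is a hypothetical helper, not a Kubernetes API.

```python
def _to_count(value, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string such as '25%' into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer arithmetic avoids floating-point surprises when rounding.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return (max Pods during the rollout, min available Pods)."""
    surge = _to_count(max_surge, replicas, round_up=True)
    unavailable = _to_count(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Rounding can make both zero; allow one unavailable Pod so the rollout can progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(10))               # (13, 8)
print(rolling_update_bounds(4, max_surge=0))   # (4, 3)
print(rolling_update_bounds(3, "0%", "25%"))   # (3, 2)
```

A larger `maxSurge` speeds up rollouts at the cost of temporary extra capacity, while `maxUnavailable` of 0 keeps full serving capacity throughout.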

Following these practices helps you run microservices on Kubernetes in a way that stays flexible and highly available.

Use Cases of Kubernetes Architecture

Kubernetes is widely adopted across industries for its ability to scale applications, ensure high availability, and support multi-cloud environments. Its architecture enables seamless workload management, making it a preferred choice for modern cloud-native applications.

Application scalability

Kubernetes enables horizontal scaling, allowing applications to handle varying workloads efficiently:

- Horizontal Pod Autoscaler (HPA) adjusts the number of Pods based on CPU, memory, or custom metrics.

- Cluster Autoscaler dynamically adds or removes worker nodes to match demand.

- Load balancing ensures traffic is distributed evenly across replicas.
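
The Horizontal Pod Autoscaler's core rule is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that formula and clamps the result to the min/max replica bounds; the function is hypothetical and ignores stabilization windows, missing metrics, and Pods that are not ready yet.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling rule to one metric (e.g. average CPU utilization in %)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(desired, max_replicas))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6: scale out
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2: scale in
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4: within tolerance
```

The tolerance band prevents the autoscaler from flapping between replica counts when the metric hovers around its target.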

This makes Kubernetes ideal for applications with fluctuating traffic, such as e-commerce platforms, streaming services, and SaaS applications.

High availability and fault tolerance

Kubernetes ensures continuous operation by:

- Replicating workloads across multiple nodes to prevent service disruptions.

- Self-healing mechanisms that restart failed containers automatically.

- Leader election among replicated control plane components, such as the Scheduler and Controller Manager, so that a standby instance takes over if the active one fails.

These features make Kubernetes suitable for mission-critical applications that require near-zero downtime.
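
Leader election in the control plane is lease-based: replicas of components such as kube-scheduler compete for a Lease object, the holder keeps renewing it, and a standby takes over only after the lease expires (15 seconds by default). The sketch below models that logic in memory; the `Lease` class and `try_acquire_or_renew` are hypothetical, and real clients perform the update as a compare-and-swap through the API Server.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Hypothetical in-memory stand-in for a coordination.k8s.io Lease."""
    holder: Optional[str] = None
    renew_time: float = 0.0
    duration: float = 15.0  # seconds a holder may go without renewing


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` is the leader after this attempt."""
    expired = lease.holder is None or now - lease.renew_time > lease.duration
    if lease.holder == candidate or expired:
        lease.holder = candidate
        lease.renew_time = now
        return True
    return False  # someone else holds a valid lease; stay on standby


lease = Lease()
print(try_acquire_or_renew(lease, "scheduler-a", now=0))    # True: lease was free
print(try_acquire_or_renew(lease, "scheduler-b", now=5))    # False: a holds it
print(try_acquire_or_renew(lease, "scheduler-a", now=10))   # True: a renews
# scheduler-a crashes and stops renewing...
print(try_acquire_or_renew(lease, "scheduler-b", now=20))   # False: lease still valid
print(try_acquire_or_renew(lease, "scheduler-b", now=26))   # True: lease expired, b takes over
```

Only one replica acts at a time, so two schedulers never make conflicting placement decisions, while failover still happens within seconds.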

Hybrid and multi-cloud deployments

Kubernetes abstracts infrastructure complexity, making it easier to deploy workloads across on-premises, hybrid, and multi-cloud environments:

- Consistent application management across AWS, Google Cloud, Azure, and on-premises clusters.

- Traffic routing between clusters in different clouds to optimize latency and cost, usually with additional tooling such as global load balancers or a multi-cluster service mesh.

- Disaster recovery strategies with cross-cloud failover setups.

Conclusion

Kubernetes has revolutionized container orchestration, becoming the industry standard for deploying, managing, and scaling applications in modern cloud environments. Its architecture enables automated scaling, high availability, and cross-cloud compatibility, making it a powerful tool for organizations adopting microservices and cloud-native strategies.

By understanding Kubernetes architecture, developers and IT teams can:

- Optimize application performance through efficient scheduling and scaling.

- Enhance system resilience with self-healing and high availability.

- Run workloads consistently across multi-cloud and hybrid environments, reducing vendor lock-in.

From control plane management to worker node execution and customizable add-ons, Kubernetes provides the flexibility needed to run mission-critical applications at scale. As the cloud ecosystem evolves, Kubernetes remains at the forefront, empowering businesses to innovate with confidence.

Kubernetes is a powerful tool for managing modern applications, but mastering it requires hands-on experience. Continue your learning with:

- Introduction to Kubernetes course to learn the fundamentals of Kubernetes and deploy and orchestrate containers using manifests and kubectl commands.

- Docker Compose vs Kubernetes to choose the right tool for your needs.

- Containerization for Machine Learning to apply Kubernetes to AI/ML workflows.

Josep is a freelance Data Scientist specializing in European projects, with expertise in data storage, processing, advanced analytics, and impactful data storytelling.

As an educator, he teaches Big Data in the Master’s program at the University of Navarra and shares insights through articles on platforms like Medium, KDNuggets, and DataCamp. Josep also writes about Data and Tech in his newsletter Databites (databites.tech).

He holds a BS in Engineering Physics from the Polytechnic University of Catalonia and an MS in Intelligent Interactive Systems from Pompeu Fabra University.