Track

Containerization has transformed how engineering teams manage and scale applications, particularly in data management, analytics, and machine learning. By packaging applications into isolated and lightweight environments, containers ensure consistent performance from development to production.

Docker stands out as the most popular solution among the different platforms available. Its flexibility and simplicity enable data professionals to build reproducible, scalable, and efficient pipelines while fostering collaboration.

In this article, we’ll outline a practical learning plan for Docker, including steps to deploy your first simple application. Let’s dive in!

What is Docker and Why Learning It is Useful?

Docker is an open-source platform that simplifies the deployment, scaling, and management of applications using containerization.

Containers are lightweight, portable environments that include everything needed to run an application—code, runtime, libraries, and settings—for consistent performance across different systems. In data projects, Docker is used to build and manage these containers, allowing applications to run reliably across any infrastructure.

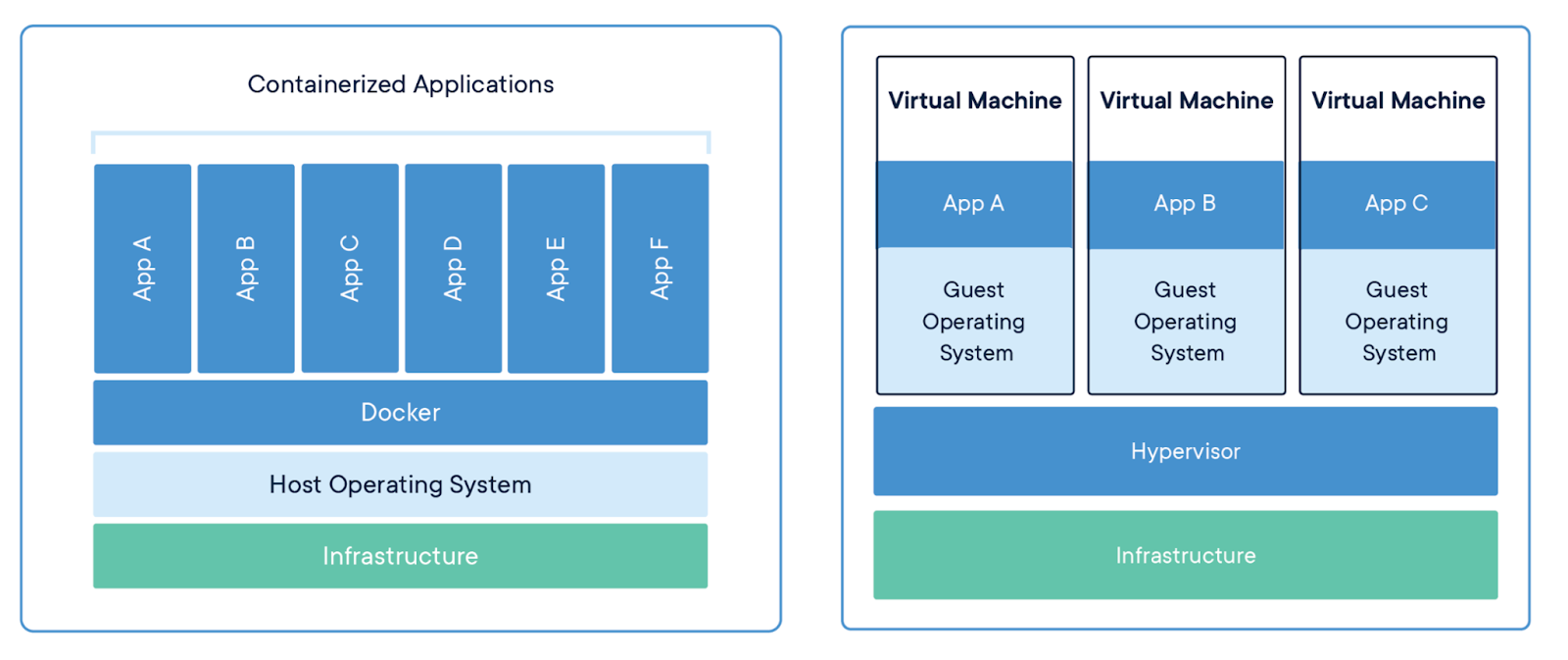

Unlike virtual machines (VMs), which require their own operating system and a hypervisor to manage them, Docker virtualizes only the application layer. This results in containers that are faster to start, less resource-intensive, and easier to configure.

Containerized applications versus virtual machines. Image source: Docker

For data professionals, Docker helps create reproducible environments, enabling data pipelines to run consistently from development to production. It minimizes dependency issues, streamlines workflows, and fosters team collaboration by providing standardized, shareable environments.

Additionally, Docker integrates with popular data tools like Jupyter, TensorFlow, and Apache Hadoop.

Mastering Docker can boost productivity, optimize workflows, and make your projects scalable and easily deployable!

Learn Docker from Scratch: Your First Deployment

The best way to learn Docker is by getting hands-on. So, let me guide you through your first simple deployment. After this, we will explore learning plans to deepen your knowledge.

Step 1: Understand core concepts

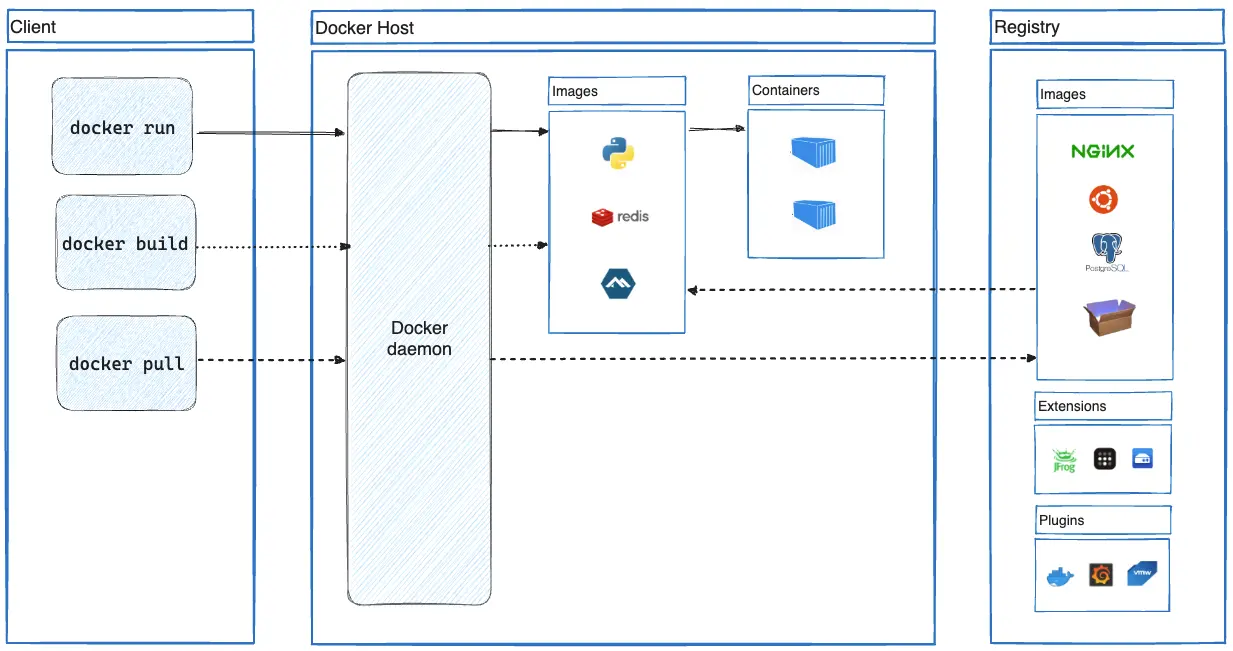

Before jumping into Docker hands-on, grasping some fundamental concepts is important. Here’s a breakdown of the main Docker concepts:

- Containers: Containers are lightweight, isolated units that package an application along with all its dependencies, ensuring it runs consistently across different environments.

- Images: A Docker image is a read-only template used to create containers. It includes everything required to run an application, such as the code, libraries, and system tools. Images are typically built from Dockerfiles.

- Dockerfile: A Dockerfile is a text file containing instructions on how to build a Docker image. It outlines steps like installing software, copying files, and configuring the environment needed to run the application.

- Docker Hub: Docker Hub is a public registry where you can store, share, and download Docker images. It serves as a central repository for Docker images, enabling easy distribution and reuse of pre-configured environments.

- Volumes: Volumes are a way to persist data generated and used in Docker containers. They allow you to manage and store data outside the container's lifecycle, ensuring that important data isn't lost when containers are stopped or removed.

- Networks: Docker networks facilitate communication between containers. Each container can be connected to one or more networks, enabling them to interact and share data securely.

Docker architecture overview. Image source: Docker

Understanding these core concepts is essential before you begin deploying applications with Docker. Mastering these basics will provide a solid foundation, making the hands-on practice more effective.

The Introduction to Docker course can significantly help solidify your current knowledge.

Step 2: Install Docker

To start using Docker, you'll need to install it on your system. Below are the instructions for different platforms. For more detailed guidance, follow the links to the official Docker documentation.

1. Installing Docker on Windows

Requirements:

- Windows 10 64-bit: Pro, Enterprise, or Education (Build 19041 or higher)

- Windows 11 64-bit: Home, Pro, Enterprise, or Education

- WSL 2 backend

Steps:

1. Enable WSL 2 (Windows Subsystem for Linux):

- Open PowerShell as Administrator.

- Run the following commands:

dism.exe /online /enable-feature /featurename:Microsoft-Windows-Subsystem-Linux /all /norestart

dism.exe /online /enable-feature /featurename:VirtualMachinePlatform /all /norestart wsl --set-default-version 22. Install Docker Desktop for Windows:

- Download Docker Desktop from Docker's official website.

- Run the installer and follow the prompts.

- Select the option to use WSL 2 as the default backend during installation.

3. Start Docker Desktop:

- Launch Docker Desktop from the Start menu.

- Docker should start automatically; if not, start it manually.

4. Verify installation:

- Open PowerShell or Command Prompt.

- Run the following command to check if Docker is installed correctly:

sudo docker --versionOfficial documentation: Docker Desktop for Windows

2. Installing Docker on macOS

Requirements:

- macOS 10.15 or newer

Steps:

1. Download Docker Desktop for macOS:

- Go to Docker’s official website and download the Docker Desktop installer.

2. Install Docker Desktop:

- Open the

.dmgfile you downloaded. - Drag the Docker icon to the Applications folder.

3. Start Docker Desktop:

- Open Docker from the Applications folder.

- Follow the installation wizard to complete the setup.

4. Verify installation:

- Open a terminal window.

- Run the following command to verify Docker installation:

docker --versionOfficial documentation: Docker Desktop for Mac

3. Installing Docker on Linux

Supported Distributions:

- Ubuntu

- Debian

- Fedora

- CentOS

- RHEL

Steps for Ubuntu/Debian:

1. Uninstall old versions:

- Before installing Docker Engine, remove conflicting packages using this command:

for pkg in docker.io docker-doc docker-compose podman-docker containerd runc; do sudo apt-get remove $pkg; done2. Set up Docker’s apt repository:

- Run the following commands:

sudo apt-get update

sudo apt-get install ca-certificates curl

sudo install -m 0755 -d /etc/apt/keyrings

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

sudo chmod a+r /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update3. Install Docker packages:

- Install Docker Engine and other necessary components:

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin4. Verify installation:

- Run the following command to check if Docker is installed:

sudo docker --versionOfficial documentation: Docker Engine on Debian

Become a Data Engineer

Step 3: Run your first container

Now that Docker is installed, it's time to run your first container. We'll start with the simple hello-world image, perfect for beginners.

1. Open your terminal (or Command Prompt on Windows) and run the following command:

sudo docker run hello-worldThis command tells Docker to look for the hello-world image locally. If it doesn’t find it, Docker will pull the image from Docker Hub and then run it.

If Docker is installed correctly, you’ll see a message that says, “Hello from Docker!” along with details about how the Docker process works. This output confirms that Docker successfully pulled the image, created a new container, and executed the code inside it.

Step 4: Build your first Docker image

In this step, you’ll create a custom Docker image for a simple data science project. This image will include Python and common data science libraries like pandas, NumPy, and scikit-learn.

1. Create a new directory:

-

- Start by creating a new directory for your project and navigating into it:

mkdir my-data-science-app

cd my-data-science-app2. Create a Dockerfile:

Inside this directory, create a file named Dockerfile (without any file extension):

# Use an official Python runtime as a parent image

FROM python:3.8-slim

# Set the working directory in the container

WORKDIR /app

# Copy the current directory contents into the container at /app

COPY . /app

# Install any needed packages specified in requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

# Make port 8888 available to the world outside this container

EXPOSE 8888

# Run a Jupyter notebook server when the container launches

CMD ["jupyter", "notebook", "--ip=0.0.0.0", "--port=8888", "--no-browser", "--allow-root"]The Dockerfile does the following:

FROM python:3.8-slim: This sets the base image to Python 3.8 in its slim variant, a minimal version of Python, ideal for a lightweight data science environment.WORKDIR /app: This sets the working directory inside the container to /app. All subsequent commands will be executed within this directory.COPY . /app: This copies all files from your current directory on the host machine and puts them into the/appdirectory in the container. This typically includes your data science scripts and configuration files.RUN pip install --no-cache-dir -r requirements.txt: This installs the Python libraries listed inrequirements.txt. Common libraries for data science include pandas, NumPy, and scikit-learn.EXPOSE 8888: This makes port 8888 available to the host machine, the default port for Jupyter notebooks.CMD ["jupyter", "notebook", "--ip=0.0.0.0", "--port=8888", "--no-browser", "--allow-root"]: This command launches a Jupyter notebook server when the container starts, making it accessible from your web browser.

3. Create a requirements.txt File:

In the same directory, create a requirements.txt file to list the Python packages your application needs:

pandas

numpy

scikit-learn

matplotlibThis file lists the Python libraries that will be installed inside your Docker container.

4. Build the Docker image:

- With the Dockerfile and

requirements.txtready, build your Docker image using the following command:

sudo docker build -t my-data-science-app .The command above does the following:

docker build: Initiates the process of building a Docker image.-t my-data-science-app: The -t option tags the image as “my-data-science-app.”.: The dot at the end specifies the build context, which is the current directory. Docker will use the files in this directory to build the image.

5. Run the Docker image:

Once the build process is complete, you can run your new Docker image as a container:

sudo docker run -p 8888:8888 my-data-science-appLet’s break down the command:

docker run: Runs a container based on the specified image.-p 8888:8888: The -p option maps port 8888 on your local machine to port 8888 inside the container, allowing you to access the Jupyter Notebook server by navigating tolocalhost:8888in your web browser.my-data-science-app: This specifies the image to run.

Step 5: Use Docker Compose

Docker Compose is an essential tool for managing multi-container applications. In a data science project, you might need separate containers for different components, such as a Jupyter notebook, a database for storing data, and a data visualization tool.

Docker Compose allows you to define these services in a single YAML file, making it easy to spin up your entire data science environment with one command.

1. Create a docker-compose.yml file:

- In your project directory, create a file named

docker-compose.ymland write the following lines in the file:

version: '3.8'

services:

jupyter:

image: jupyter/scipy-notebook:latest

volumes:

- ./notebooks:/home/joelwembo/work

ports:

- "8888:8888"

environment:

- JUPYTER_ENABLE_LAB=yes

postgres:

image: postgres:13-alpine

environment:

POSTGRES_USER: myuser

POSTGRES_PASSWORD: mypassword

POSTGRES_DB: mydatabase

volumes:

- postgres_data:/var/lib/postgresql/data

ports:

- "5432:5432"

redis:

image: redis:alpine

ports:

- "6379:6379"

volumes:

postgres_data:

Let’s break down what the Docker Compose file does:

version: '3.8': This specifies the version of the Docker Compose file format. Version 3.8 is a commonly used version that supports many features.services:: The services section defines the different containers (services) that make up your application.jupyter:: This service represents a Jupyter notebook environment essential for interactive data analysis.image: jupyter/scipy-notebook:latest: This specifies the Docker image to use for the Jupyter notebook, which includes popular data science libraries like NumPy, pandas, and matplotlib.volumes: - ./notebooks:/home/joelwembo/work: This mounts the notebooks directory on your host machine to/home/joelwembo/workinside the container, allowing you to persist and share your notebooks. Note: This is my personal path and yours should look different.ports: - "8888:8888": This maps port 8888 on your host machine to port 8888 in the container, which is the default port for Jupyter notebooks.environment: - JUPYTER_ENABLE_LAB=yes: This enables JupyterLab, a more powerful interface for working with Jupyter notebooks.postgres:: This service runs a PostgreSQL database, which is often used to store and manage large datasets in data science projects.image: postgres:13-alpine: This specifies the Docker image for PostgreSQL version 13, based on the lightweight Alpine Linux distribution.environment:: These environment variables configure the database with a user, password, and database name.volumes: - postgres_data:/var/lib/postgresql/data: This mounts a Docker volume to persist the database data outside the container, so your data isn’t lost when the container is stopped.ports: - "5432:5432": This maps port 5432 on your host machine to port 5432 in the container, the default port for PostgreSQL.redis:: This service runs a Redis server, which can be used as a fast in-memory data store or cache.image: redis:alpine: This specifies the Redis image using the lightweight Alpine variant.ports: - "6379:6379": This maps port 6379 on your host machine to port 6379 in the container, the default port for Redis.volumes:: This section defines named volumes that can be shared between services or persist data across container restarts.postgres_data:: This creates a named volume for persisting PostgreSQL data.

To start your multi-container application, simply run the following:

sudo docker compose upThis command builds the images (if necessary) and starts the containers defined in your docker-compose.yml file. It brings up both the web application and the Redis service, allowing them to communicate with each other as defined.

You have now deployed your first Docker application! Isn’t that great? Now, let’s elaborate a plan for your continuous learning.

An Example Learning Plan for Docker

Here’s a week-by-week plan that you can follow. Feel free to tailor it to your specific needs.

If you need a more defined path, the Containerization and Virtualization with Docker and Kubernetes skill track is perfect for you. It contains four essential courses.

Week 1: Get familiar with Docker basics

- Objective: Understand Docker's core concepts and basic commands.

- Tasks:

- Install Docker and set up Docker Desktop.

- Learn Docker commands:

docker run,docker ps,docker stop,docker rm, etc. - Run simple containers for data exploration tools like Ubuntu or Alpine and try basic commands.

- Explore official Docker images and run containers for basic visualization tools (e.g., a simple matplotlib container).

- Resources: Containerization and Virtualization Concepts course, Introduction to Docker course.

Week 2: Build and run Docker images for data tools

- Objective: Learn to create and manage custom Docker images.

- Tasks:

- Write a Dockerfile for a basic data analysis environment (e.g., Python with Pandas and NumPy).

- Build the Docker image using docker build.

- Run containers from your custom image and test data tools like Jupyter Notebook.

- Create Dockerfiles for TensorFlow and PostgreSQL, ensuring proper configuration and linking.

- Resources: Introduction to Docker course.

Week 3: Explore Docker Compose

- Objective: Understand and utilize Docker Compose for multi-container setups.

- Tasks:

- Install Docker Compose and learn its basics.

- Write

docker-compose.ymlfiles to define multi-container applications. - Practice with examples like setting up a web application with a backend database (e.g., a Flask app with PostgreSQL).

- Experiment with different configurations and service dependencies.

- Resources: Intermediate Docker course.

Week 4: Dive deeper into Docker networking and volumes

- Objective: Master Docker’s networking and storage capabilities.

- Tasks:

- Learn about Docker networks and how to create them (bridge, host, overlay).

- Practice creating custom networks and connecting containers to them.

- Explore Docker volumes for persistent storage and practice creating and managing them.

- Implement a setup where containers communicate over a custom network and use volumes for data persistence.

- Resources: Intermediate Docker course.

Week 5: Deploy and manage Docker containers in production

- Objective: Understand container deployment and management in production.

- Tasks:

- Learn best practices for deploying Docker containers (e.g., using Docker Hub or a private registry).

- Explore container orchestration with Docker Swarm: creating and managing services.

- Understand scaling containers and managing logs.

- Experiment with deploying a containerized application to a staging or production environment.

- Resources: Intermediate Docker course.

Week 6: Introduction to Kubernetes for container orchestration

- Objective: Get acquainted with Kubernetes for scaling and managing containerized applications.

- Tasks:

- Install and set up a local Kubernetes environment (e.g., Minikube or Kind).

- Learn Kubernetes basics: pods, services, deployments, and namespaces.

- Deploy a simple containerized application on Kubernetes.

- Explore scaling and managing applications with Kubernetes, focusing on handling a containerized data tool like Jupyter.

- Resources: Introduction to Kubernetes course.

Tips and Ways to Learn Docker

Lastly, here are some tips and best practices to help you fast-track your Docker learning path.

Practice regularly

The key to mastering Docker is consistent, hands-on practice. Start by working on personal projects, like containerizing a simple website or setting up a local development environment.

As you become more comfortable, challenge yourself with more complex tasks, such as building a multi-container application or deploying a web server.

Regular experimentation with different use cases—like creating custom Docker images or configuring a CI/CD pipeline—will deepen your understanding and help solidify key concepts. Additionally, participating in online Docker challenges or exercises is a great way to test and enhance your skills in a structured, goal-oriented manner.

Use online resources

Online resources are invaluable for learning Docker. The official Docker documentation is an excellent place to begin, offering authoritative and up-to-date information.

Beyond that, there are plenty of comprehensive courses available on platforms like DataCamp, which can help guide you through the learning process.

For those who prefer visual learning, YouTube tutorials from Docker’s official channel and other educators provide useful, accessible content to reinforce your understanding.

Join the Docker community

Engaging with the Docker community is another powerful way to advance your learning. Participate in forums like Docker Community Forums and Reddit to exchange ideas, ask questions, and share experiences with other learners and professionals.

Attending Docker meetups, webinars, or conferences is also a great way to stay current with industry trends and broaden your network.

Work on open-source projects

Contributing to open-source projects that utilize Docker can be a fantastic way to gain practical, real-world experience.

Websites like GitHub offer opportunities to collaborate on Docker-related projects, allowing you to learn from others and apply your skills in diverse contexts. The collaboration inherent in open-source work helps expose you to different approaches and solutions, fostering a deeper understanding of Docker’s capabilities.

Stay updated

Finally, staying updated with Docker’s latest developments is essential for maintaining proficiency. Keep an eye on Docker’s official blog and release notes for updates on new features or changes. Joining community discussions on forums and social media will also inform you about emerging trends and best practices, helping you continuously refine your Docker skills.

With regular practice, active community engagement, and continuous learning, you’ll build a strong foundation in Docker and remain equipped to handle the latest developments in the field.

Conclusion

Docker has revolutionized application deployment and data management by packaging applications and their dependencies into isolated containers. This solves common challenges like environment inconsistencies and ensures workflows remain consistent, scalable, and efficient for data professionals.

This guide has provided the foundational steps to mastering Docker, from core concepts to hands-on deployments. To further deepen your knowledge, consider exploring additional resources like the Introduction to Docker and Intermediate Docker courses on DataCamp. As you advance, expanding your skills with orchestration tools like Kubernetes is equally important—check out the Introduction to Kubernetes course to get started.

Practicing regularly, using available resources, and staying engaged with the community will unlock Docker’s full potential for managing complex data environments.

Get certified in your dream Data Engineer role

Our certification programs help you stand out and prove your skills are job-ready to potential employers.

FAQs

How long does it take to learn Docker?

The time it takes to learn Docker depends on your experience level. For beginners, it can take a few days to get familiar with the basics, such as containers, images, and Dockerfiles. To achieve a deeper understanding, including concepts like Docker Compose and Kubernetes, it may take a few weeks of regular practice.

Do I need to learn Kubernetes after Docker?

While Docker is essential for containerization, Kubernetes is valuable for managing and orchestrating large-scale deployments across multiple containers. After mastering Docker, learning Kubernetes is highly recommended for working on complex applications or when you need to automate scaling and container management.

What projects can I build to practice Docker?

To gain hands-on experience with Docker, you can start with small projects such as containerizing a personal website or setting up a local development environment for a web app. As you advance, try building a multi-container application with Docker Compose or deploying a simple CI/CD pipeline. You can also practice by contributing to open-source projects that use Docker.

Is Docker necessary for data professionals?

Yes, Docker is incredibly useful for data professionals. It allows you to create reproducible environments that ensure your data pipelines work consistently from development to production. Docker also simplifies dependency management and enhances collaboration across teams, making it an essential tool for modern data workflows.

What are the best resources to learn Docker?

Some of the best resources to start learning Docker include:

- Docker's official documentation

- DataCamp's Introduction to Docker and Intermediate Docker courses

- YouTube tutorials from Docker’s official channel

- Docker community forums and GitHub repositories for hands-on learning and collaboration

AWS Certified Cloud Solutions Architect, DevOps, Cloud Engineer with extensive understanding of high availability architecture and concepts. I possess knowledge of cloud engineering and DevOps and am adept at utilizing open-source resources to execute enterprise applications. I build cloud-based applications using AWS, AWS CDK, AWS SAM, CloudFormation, Serverless Framework, Terraform, and Django.