GLM-5 is Z.ai’s newest open-reasoning model, and it has quickly gained attention for its strong performance in coding, agent workflows, and long-context chat.

Many developers are already using it to one-shot websites, build small apps, and experiment with local AI agents.

The challenge is that GLM-5 is a very large model, and running it locally is not realistic on consumer hardware. Even quantized versions require hundreds of gigabytes of memory and a capable GPU setup.

In this tutorial, we walk through a practical way to run GLM-5 locally using a 2-bit GGUF quant on an NVIDIA H200 pod, serve it through llama.cpp, and connect it to Aider so you can use GLM-5 as a real coding agent inside your own projects.

I also recommend checking out our guide on running GLM 4.7 Flash Locally.

Prerequisites: What You Need to Run GLM-5

Before running GLM-5 locally, you’ll need the right model variant, enough memory to load it, and a working GPU software stack.

Hardware requirements depend on the quant size:

- 2-bit (281GB): fits on a ~300GB unified memory system, or works well with a 1×24GB GPU + ~300GB RAM using MoE offloading

- 1-bit: fits on ~180GB RAM

- 8-bit: requires ~805GB RAM

For best performance, your VRAM + system RAM combined should be close to the quant size. If not, llama.cpp can offload to SSD, but inference will be slower. Use --fit in llama.cpp to maximize GPU usage.
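As a rough illustration of that rule of thumb, the sketch below checks which of the quants listed above fit a given VRAM + system RAM budget. It uses only the approximate sizes from the list. The helper name quants_that_fit is hypothetical. It also ignores KV-cache, context length, and OS overhead, so treat a borderline result as "probably slow" rather than "fits".

```python
# Approximate memory each GLM-5 quant needs, taken from the list above (GB).
QUANT_MEMORY_GB = {
    "1-bit": 180,
    "2-bit (UD-Q2_K_XL)": 281,
    "8-bit": 805,
}


def quants_that_fit(vram_gb: float, ram_gb: float) -> list[str]:
    """Return the quants whose size is covered by VRAM + system RAM combined.

    Rule of thumb only: KV-cache, context length, and OS overhead are ignored.
    A quant that does not fit can still run, more slowly, by reading weights
    from disk.
    """
    budget = vram_gb + ram_gb
    return [name for name, size in QUANT_MEMORY_GB.items() if size <= budget]


if __name__ == "__main__":
    # A 24 GB GPU plus ~300 GB of RAM (the MoE-offloading case above).
    print(quants_that_fit(24, 300))
    # An H200 (141 GB VRAM) plus an assumed 300 GB of system RAM.
    print(quants_that_fit(141, 300))
```

For the 1×24GB GPU + ~300GB RAM case, this reports the 1-bit and 2-bit quants as fitting, which matches the guidance above. The 8-bit quant needs a combined budget of roughly 805GB.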

In our setup, we run GLM-5-UD-Q2_K_XL on an NVIDIA H200, with enough VRAM and system RAM to fit the model efficiently.

Software prerequisites:

- Installed GPU drivers

- CUDA Toolkit

- A working Python environment

How to Run GLM-5 Locally

Below, you can find the step-by-step instructions for running GLM-5 locally:

1. Set up your local environment

Even the 1-bit version of GLM-5 is too large to run on most consumer laptops, so for this tutorial, I will use Runpod with an NVIDIA H200 GPU.

Start by creating a new pod and selecting the latest PyTorch template.

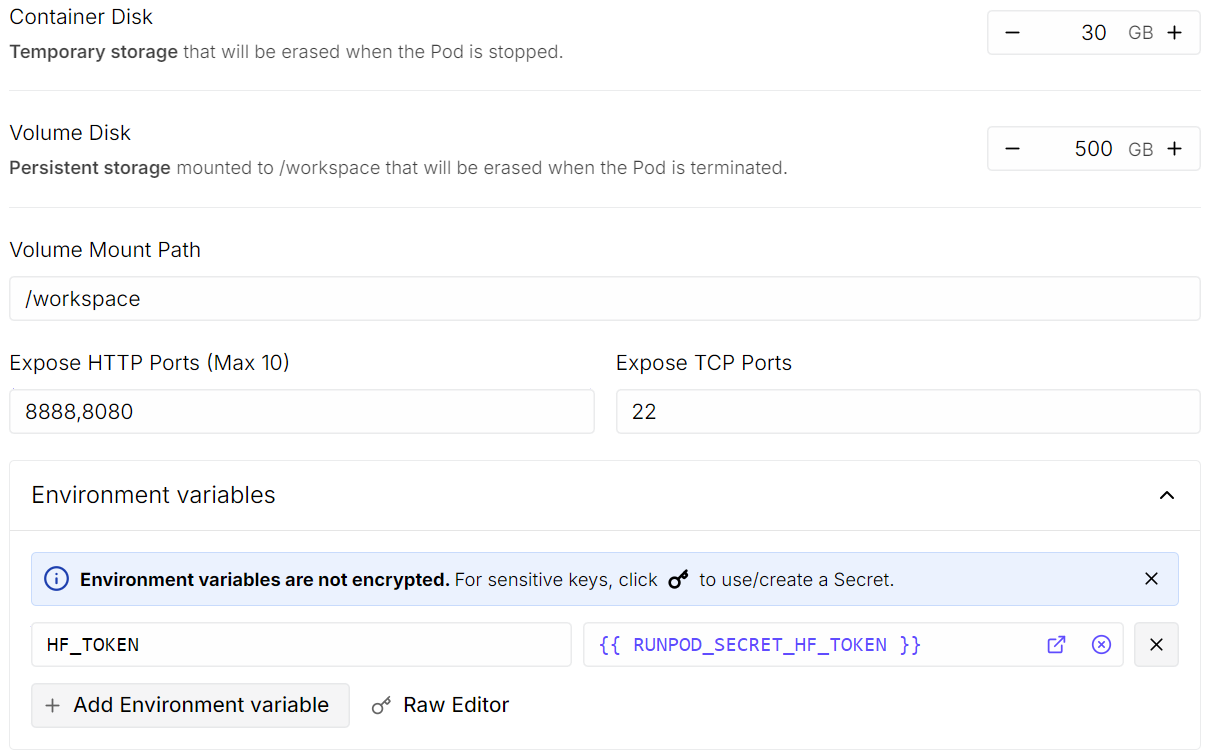

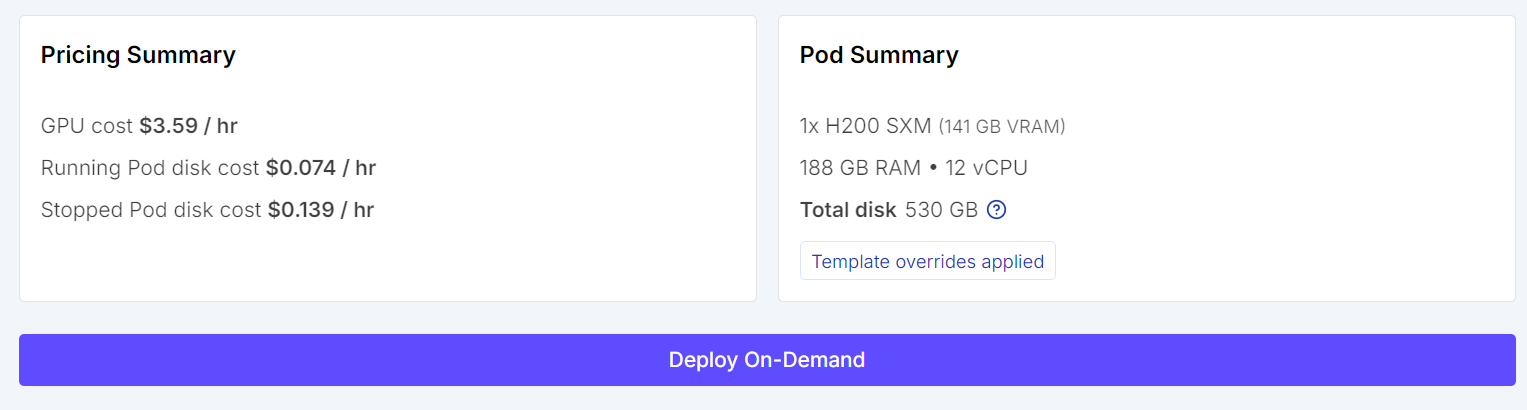

Then click Edit to adjust the pod settings:

- Increase the volume disk size to 500GB since our 2-bit model is ~280GB, and we need extra space for builds and experimentation.

- Open port 8080 so you can access the llama.cpp Chat UI directly in your browser.

- Add your Hugging Face token as an environment variable named HF_TOKEN to speed up model downloads (you can generate a token from your Hugging Face account settings).

Once everything looks correct, review the pod summary and click Deploy On-Demand.

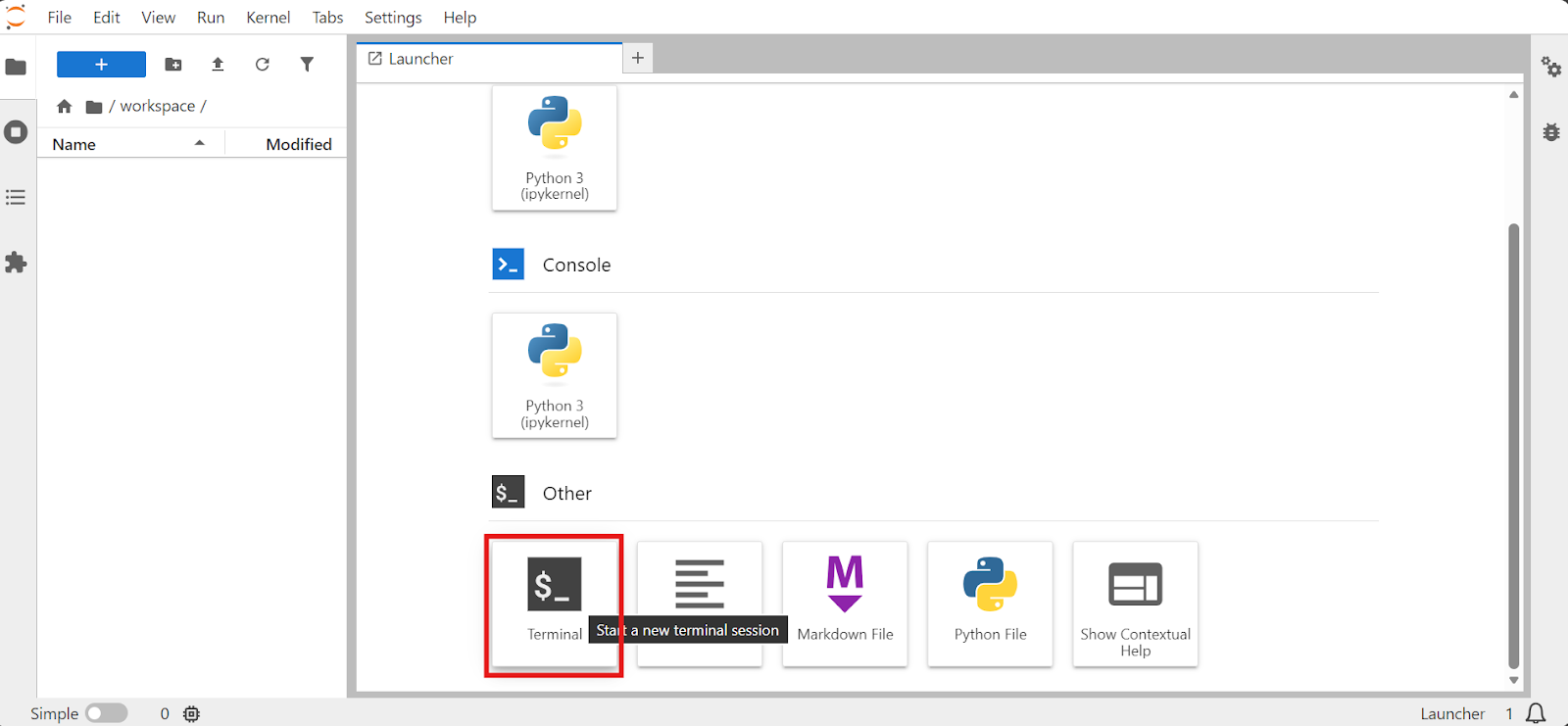

When the pod is ready, open JupyterLab, launch a Terminal, and work from there. Using the Jupyter terminal is convenient because you can run multiple sessions smoothly without relying on SSH.

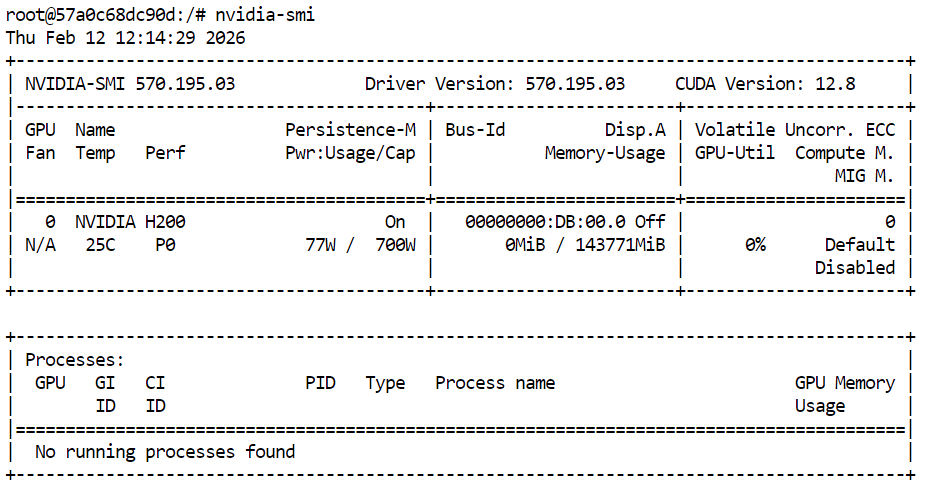

First, confirm the GPU is available:

nvidia-smi

You should see the H200 listed in the output.

Next, install the Linux packages needed to clone and build llama.cpp:

sudo apt update
sudo apt install -y git cmake build-essential curl jq

2. Compile llama.cpp with CUDA support

Now that your Runpod environment is ready and the GPU is working, the next step is to install and compile llama.cpp with CUDA acceleration so GLM-5 can run efficiently on the H200.

First, move into the workspace directory and clone the official llama.cpp repository:

cd /workspace
git clone https://github.com/ggml-org/llama.cpp
cd llama.cpp

At this point, note that the latest stable llama.cpp release does not yet fully support GLM-5 out of the box. You need to pull a specific upstream pull request that contains the recent changes required for proper compatibility.

Fetch and checkout the updated branch:

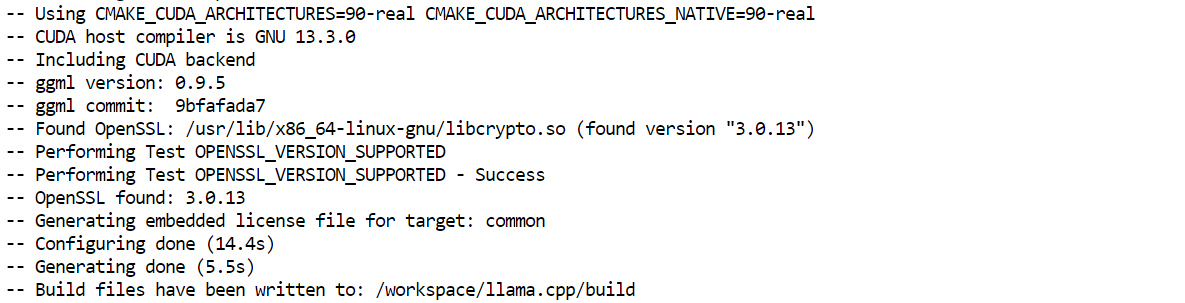

git fetch origin pull/19460/head:MASTER && git checkout MASTER && cd ..Next, we configure the build system so llama.cpp is compiled with CUDA enabled, allowing the model to use GPU acceleration instead of running entirely on CPU.

Run CMake with the CUDA flag turned on:

cmake llama.cpp -B llama.cpp/build \
    -DBUILD_SHARED_LIBS=OFF -DGGML_CUDA=ON

This creates a dedicated build/ directory and ensures the llama.cpp server binaries will support NVIDIA GPU execution.

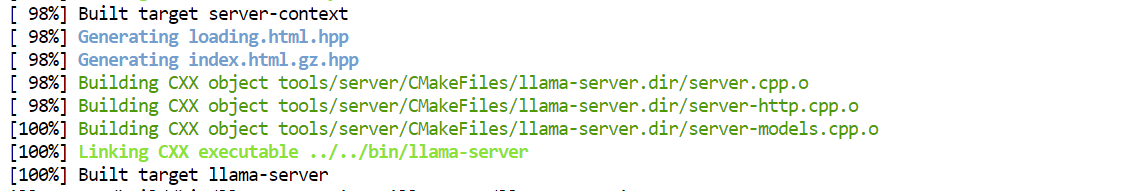

Once configuration is complete, build the llama-server target:

cmake --build llama.cpp/build --config Release -j --clean-first --target llama-server

This step may take a few minutes, depending on the pod. Once it finishes, you will have a CUDA-enabled server binary ready for running GLM-5.

Finally, copy the compiled executables into the top-level llama.cpp folder for easier access:

cp llama.cpp/build/bin/llama-* llama.cpp

3. Download the GLM-5 model from Hugging Face

With llama.cpp compiled and ready, the next step is downloading the GLM-5 GGUF model files from Hugging Face.

Because these model checkpoints are extremely large, it is important to enable the fastest available download methods.

Hugging Face provides optional tools like hf_xet and hf_transfer, which significantly improve download speed, especially on cloud machines like Runpod.

Start by installing the required Hugging Face download utilities:

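pip -q install -U "huggingface_hub[hf_xet]" hf-xet
pip -q install -U hf_transfer

These packages enable faster parallel downloads and better throughput when pulling hundreds of gigabytes of model shards. Note that hf_transfer is opt-in: huggingface_hub only uses it when the environment variable HF_HUB_ENABLE_HF_TRANSFER=1 is set (for example, export HF_HUB_ENABLE_HF_TRANSFER=1).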

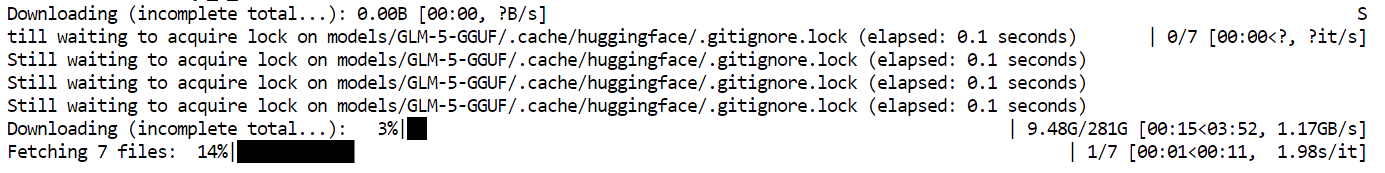

Now download the specific quantized model variant used in this tutorial. We only want the UD-Q2_K_XL files, not the full set of uploads:

hf download unsloth/GLM-5-GGUF \
    --local-dir models/GLM-5-GGUF \
    --include "*UD-Q2_K_XL*"

This will save the model directly into the models/GLM-5-GGUF directory.

In our setup, downloads reach speeds of around 1.2 GB/s, because we enabled hf_xet and provided a Hugging Face token earlier. Anonymous downloads are usually much slower, so setting up authentication and transfer acceleration makes a big difference when working with models at this scale.
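If you prefer to script the download, you can make roughly the same selection from Python with huggingface_hub's snapshot_download. Its allow_patterns argument plays the role of --include. This is a sketch under a few assumptions:

- your token is exported as HF_TOKEN, which huggingface_hub reads automatically;
- hf_transfer is installed as above;
- HF_HUB_ENABLE_HF_TRANSFER is set before huggingface_hub is imported, because the library reads that flag at import time.

The helper name download_glm5 is ours.

```python
import os

# hf_transfer is opt-in via this flag, and it must be set before import.
os.environ.setdefault("HF_HUB_ENABLE_HF_TRANSFER", "1")

REPO_ID = "unsloth/GLM-5-GGUF"
LOCAL_DIR = "models/GLM-5-GGUF"
# Same filter as --include "*UD-Q2_K_XL*" in the CLI command above.
ALLOW_PATTERNS = ["*UD-Q2_K_XL*"]


def download_glm5() -> str:
    """Download only the UD-Q2_K_XL shards and return the local folder path."""
    if not os.environ.get("HF_TOKEN"):
        print("Warning: HF_TOKEN is not set; anonymous downloads are usually slower.")
    # Imported here so the environment flag above is already in place.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_ID,
        local_dir=LOCAL_DIR,
        allow_patterns=ALLOW_PATTERNS,
    )


if __name__ == "__main__":
    print(download_glm5())
```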

4. Start the GLM-5 model on a single GPU

Now that the model is downloaded and llama.cpp is compiled with CUDA support, we can start GLM-5 using the built-in llama-server.

Run the following command to launch the server:

./llama.cpp/llama-server \
    --model models/GLM-5-GGUF/UD-Q2_K_XL/GLM-5-UD-Q2_K_XL-00001-of-00007.gguf \
    --alias "GLM-5" \
    --host 0.0.0.0 \
    --port 8080 \
    --jinja \
    --fit on \
    --threads 32 \
    --ctx-size 16384 \
    --batch-size 512 \
    --ubatch-size 128 \
    --flash-attn auto \
    --temp 0.7 \
    --top-p 0.95

A few important arguments to understand here:

- --model points to the first of the seven GGUF shards; llama.cpp loads the remaining shards automatically

- --alias "GLM-5" sets the model name the API reports, which we use later when connecting Aider

- --host 0.0.0.0 exposes the server so it can be accessed from your browser

- --port 8080 matches the port we opened earlier in Runpod

- --fit on ensures maximum GPU utilization before spilling into RAM

- --ctx-size 16384 sets the context window for inference

- --flash-attn auto enables faster attention kernels when supported

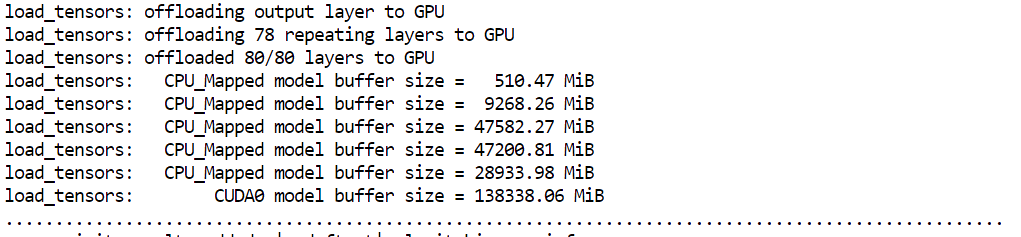

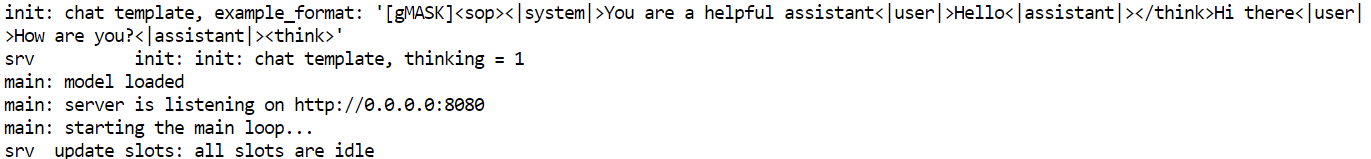

When you start the server, you will notice that llama.cpp uses almost all available GPU memory, with the remaining model layers offloaded into system RAM. This is expected and works well on H200 setups.

The model should load and start serving in under a minute. If your pod takes significantly longer, there may be an issue with the instance. In that case, it is usually faster to terminate the pod and launch a fresh one.
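Rather than watching the logs, you can poll the same OpenAI-compatible /v1/models endpoint used below until the GLM-5 alias appears. Here is a minimal, stdlib-only sketch. It assumes the server is reachable at http://127.0.0.1:8080, so run it inside the pod. The function names and the 10-minute default timeout are our own choices.

```python
import http.client
import json
import time
import urllib.request


def model_ids(payload: dict) -> list[str]:
    """Extract model ids from an OpenAI-style /v1/models response."""
    return [entry.get("id", "") for entry in payload.get("data", [])]


def wait_for_model(base_url: str = "http://127.0.0.1:8080",
                   alias: str = "GLM-5",
                   timeout_s: float = 600,
                   interval_s: float = 5) -> bool:
    """Poll /v1/models until `alias` is listed; return False on timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/v1/models", timeout=10) as resp:
                if alias in model_ids(json.load(resp)):
                    return True
        except (OSError, ValueError, http.client.HTTPException):
            # Connection refused, HTTP error while loading, timeout,
            # or a non-JSON body: the server is not ready yet.
            pass
        time.sleep(interval_s)
    return False


if __name__ == "__main__":
    print("ready" if wait_for_model() else "timed out")
```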

Once the server is running, verify that GLM-5 is available by querying the OpenAI-compatible endpoint:

curl -s http://127.0.0.1:8080/v1/models | jq

You should see "GLM-5" listed in the response, confirming the model is loaded and ready to use.

{
  "models": [
    {
      "name": "GLM-5",
      "model": "GLM-5",
      "modified_at": "",
      "size": "",
      "digest": "",
      "type": "model",
      "description": "",
      "tags": [""],
      "capabilities": ["completion"],
      "parameters": "",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "",
        "families": [""],
        "parameter_size": "",
        "quantization_level": ""
      }
    }
  ],
  "object": "list",
  "data": [
    {
      "id": "GLM-5",
      "object": "model",
      "created": 1770900487,
      "owned_by": "llamacpp",
      "meta": {
        "vocab_type": 2,
        "n_vocab": 154880,
        "n_ctx_train": 202752,
        "n_embd": 6144,
        "n_params": 753864139008,
        "size": 281373251584
      }
    }
  ]
}

5. Test the GLM-5 model using the chat UI

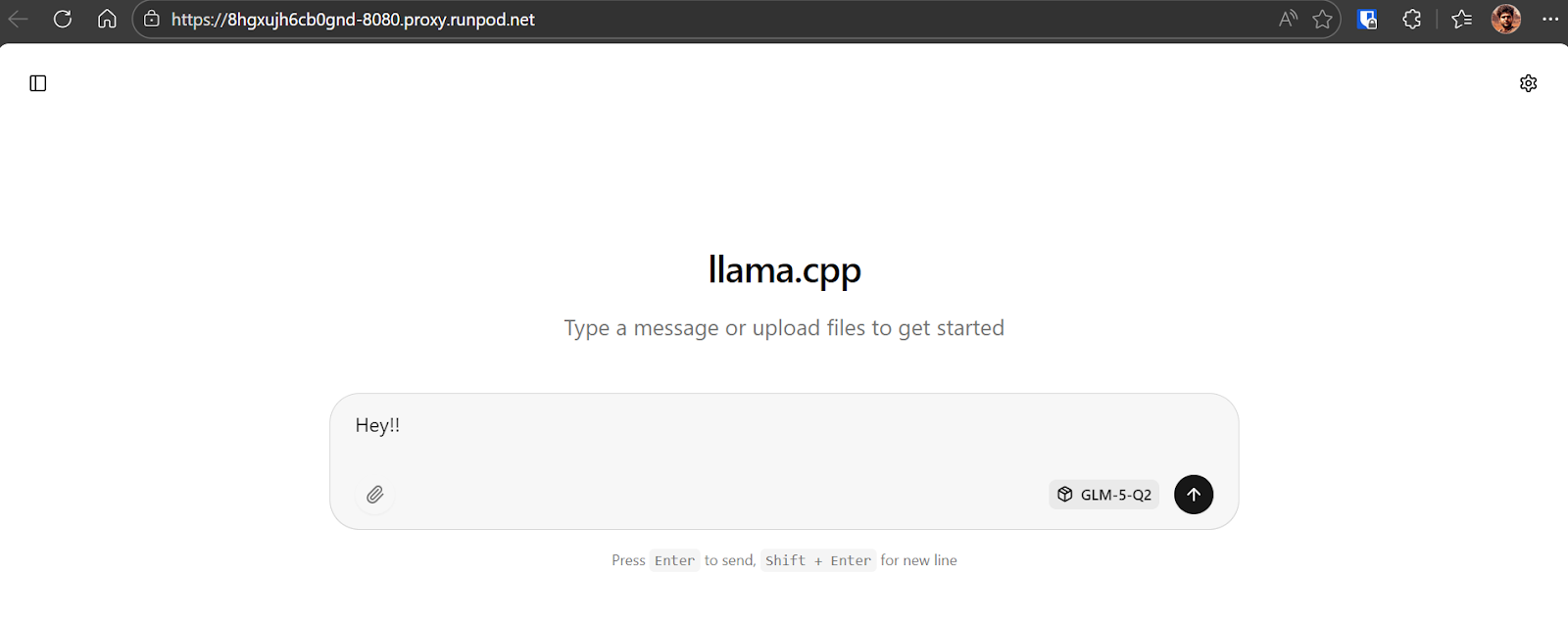

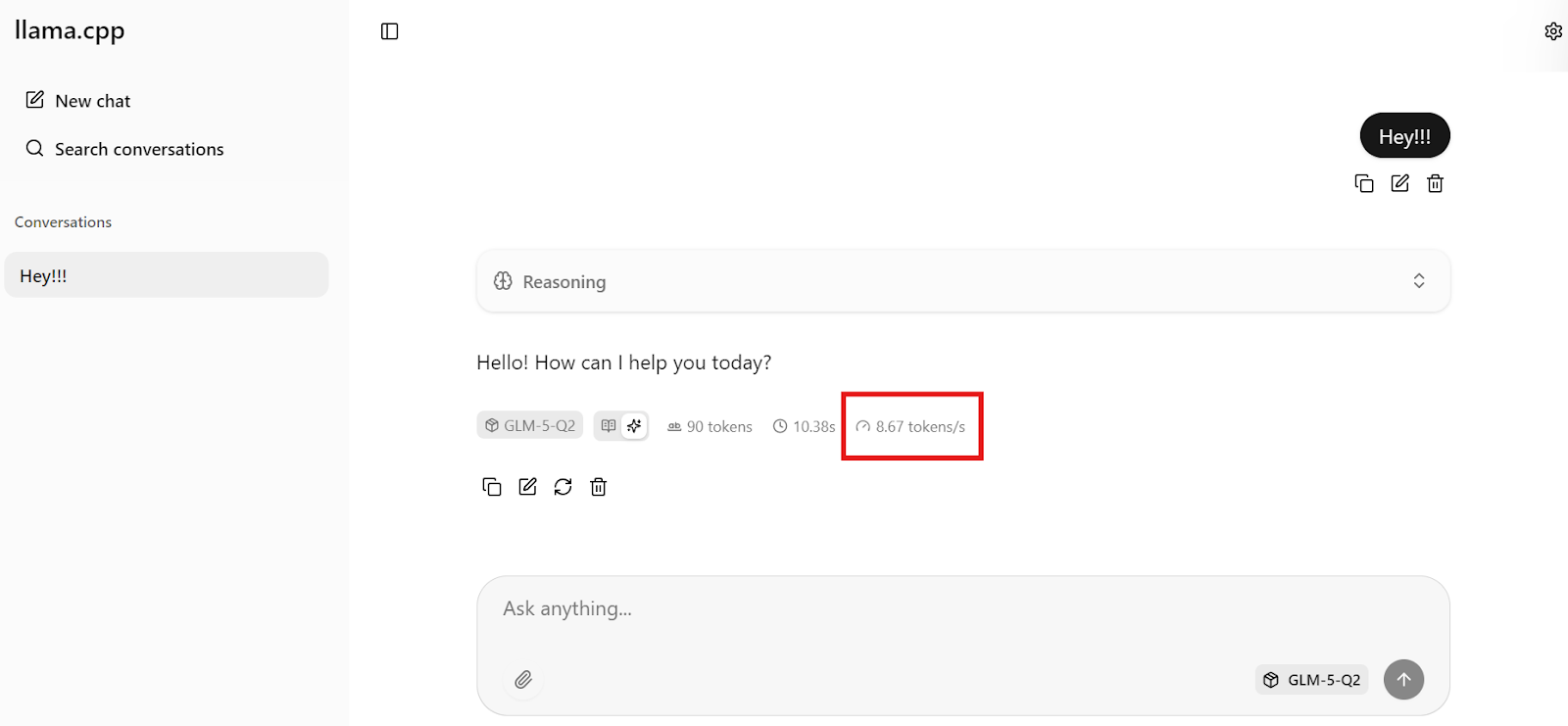

Once the server is running, you can test GLM-5 directly through the built-in llama.cpp Chat UI.

Normally, the WebUI is available locally at: http://127.0.0.1:8080

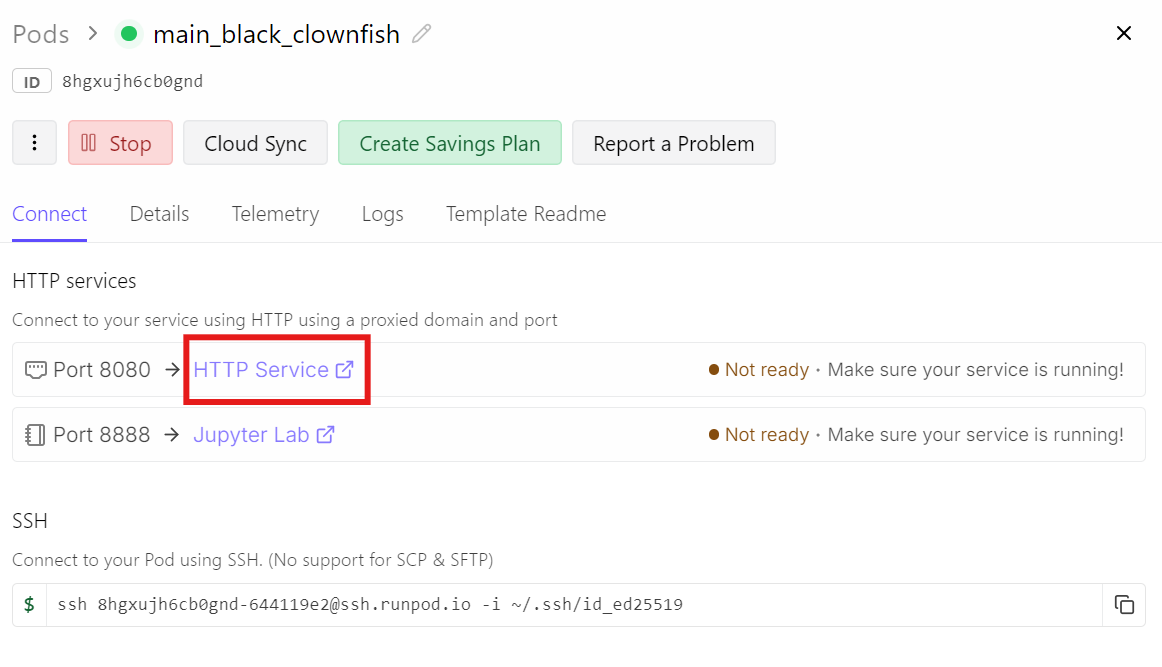

However, since we are running on Runpod in the cloud, this localhost link will not work from your machine.

Instead, go to your Runpod dashboard and click the HTTP Service link for port 8080. This is the public URL that forwards traffic to your running llama-server.

Opening that link will bring you to the Chat UI, with the GLM-5 model already loaded and ready.


To confirm everything is working, send a simple message like “Hey!!”. You should see the model respond immediately.

In our case, inference runs at around 8.7 tokens per second. That is very usable for interactive chat, considering the size of GLM-5 and its 281GB quantized checkpoint.

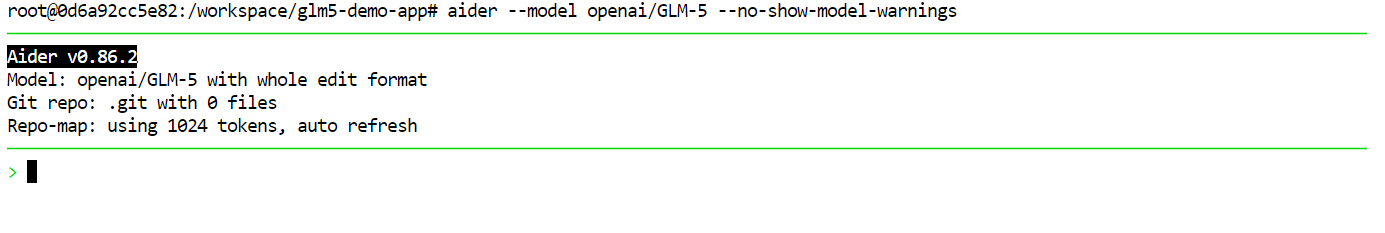
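The Chat UI talks to the server over its HTTP API, so you can run the same "Hey!!" check from a script. The sketch below sends one message to the OpenAI-compatible /v1/chat/completions endpoint. It then derives a rough tokens-per-second figure from the usage.completion_tokens field and wall-clock time. Because that timing includes prompt processing and HTTP overhead, expect it to read somewhat lower than the generation speed shown in the UI. The base URL, the max_tokens value, and the helper names are assumptions. If the reply comes back empty, the token budget may have been spent on the model's reasoning, so try raising max_tokens.

```python
import json
import time
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumed: run this inside the pod


def build_chat_payload(prompt: str, model: str = "GLM-5", max_tokens: int = 512) -> dict:
    """OpenAI-style chat request body; `model` matches the --alias set earlier."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def tokens_per_second(completion_tokens: int, elapsed_s: float) -> float:
    """Rough end-to-end throughput (includes prompt processing and HTTP overhead)."""
    return completion_tokens / elapsed_s if elapsed_s > 0 else 0.0


def chat(prompt: str) -> tuple[str, float]:
    """Send one message and return (reply text, rough tokens per second)."""
    body = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    start = time.monotonic()
    with urllib.request.urlopen(req, timeout=600) as resp:
        data = json.load(resp)
    elapsed = time.monotonic() - start
    reply = data["choices"][0]["message"].get("content") or ""
    return reply, tokens_per_second(data["usage"]["completion_tokens"], elapsed)


if __name__ == "__main__":
    text, tps = chat("Hey!!")
    print(text)
    print(f"~{tps:.1f} tokens/s")
```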

6. Install and connect to Aider

Aider is a terminal-based AI pair programming tool that works directly inside your project folder.

You chat with it like a coding partner, and it can create, edit, and refactor files across your repo while keeping everything grounded in your actual codebase and git workflow.

It also supports connecting to any OpenAI-compatible API endpoint, which makes it a great fit for running against our local llama.cpp server.

First, install Aider:

pip install -U aider-chat

Next, point Aider to your local llama.cpp OpenAI-compatible server. We set a dummy key because llama.cpp does not require a real OpenAI key:

export OPENAI_API_BASE=http://127.0.0.1:8080/v1
export OPENAI_API_KEY=local
export OPENAI_BASE_URL=$OPENAI_API_BASE

Now create a fresh demo project folder (so Aider has a clean repo to work in):

mkdir -p glm5-demo-app
cd glm5-demo-app

Finally, start Aider and connect it to GLM-5 using the model alias we exposed earlier:

aider --model openai/GLM-5 --no-show-model-warnings

At this point, anything you ask inside Aider will be routed through your local GLM-5 server, and Aider will apply changes directly to files in glm5-demo-app.

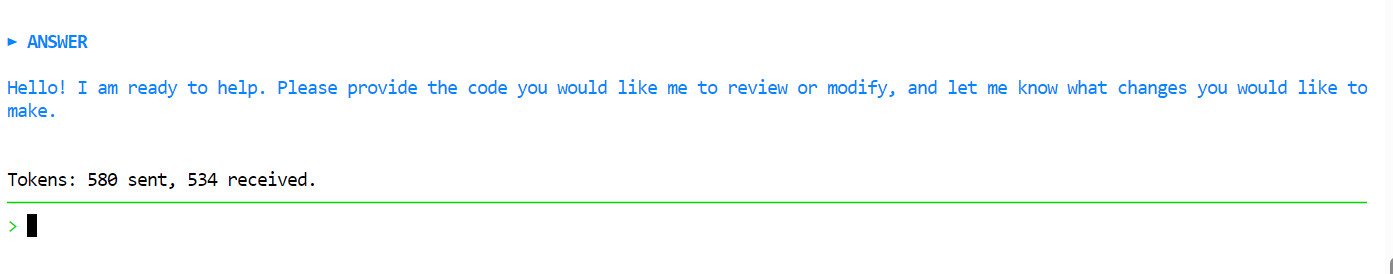

Use GLM-5 As Your Coding Agent


Once Aider is connected to GLM-5, you can use it like a coding agent inside your repo. Start with a simple greeting to confirm it responds quickly.

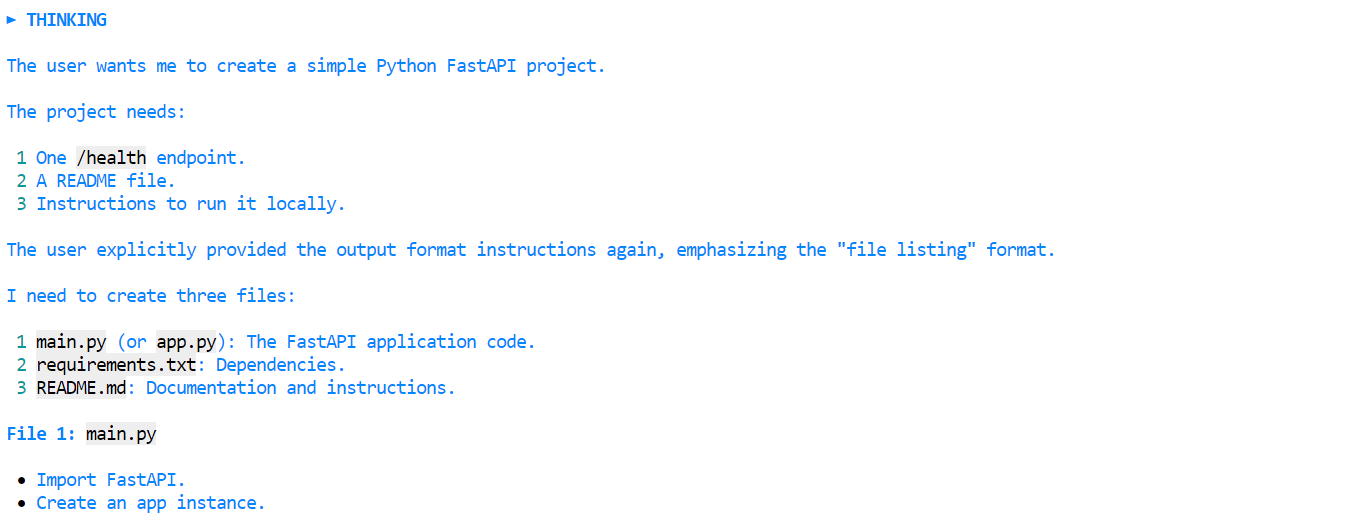

Then, give it a clear task prompt like this:

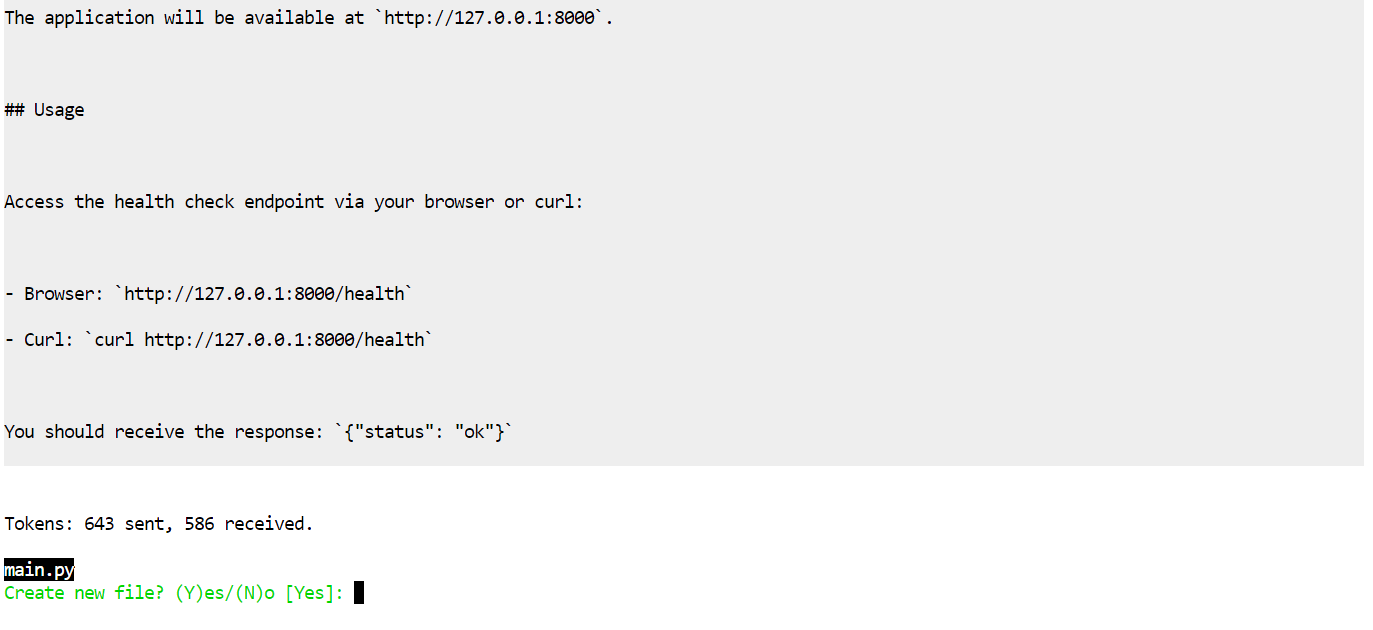

Create a simple Python FastAPI project with one /health endpoint, a README, and instructions to run it locally.

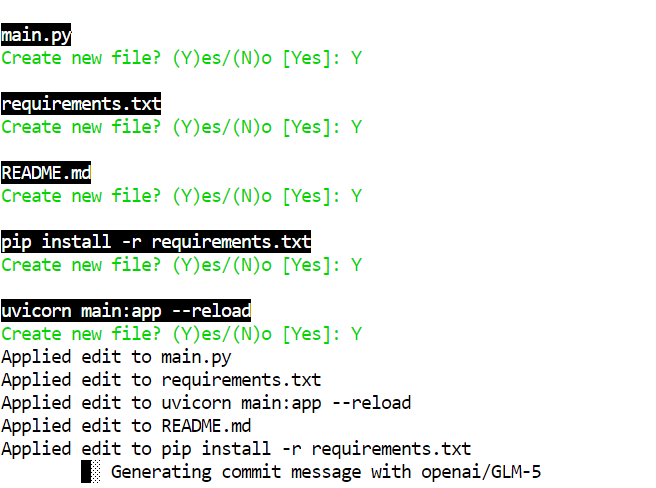

Aider will first propose a plan, then ask for permission to apply edits.

Accept the edits, and it will generate the files automatically.

On a 2-bit quant like GLM-5-UD-Q2_K_XL, you may see small mistakes. For example, the model may create a file named "pip install -r requirements.txt" instead of treating that text as a command. The full-precision model is less likely to make these errors, but the 2-bit model is still very usable with quick human review.

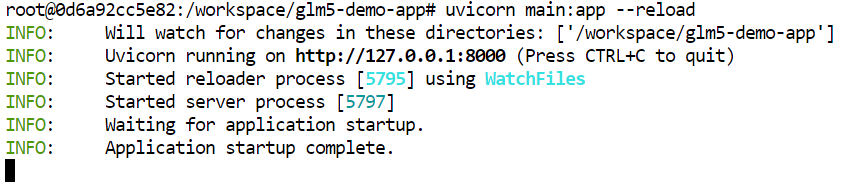

After Aider finishes writing the project, open a new terminal (or exit Aider). Move into the glm5-demo-app folder if you are not already there, then install the dependencies:

cd glm5-demo-app
pip install -r requirements.txt

Start the FastAPI app with Uvicorn:

uvicorn main:app --reload

The server will run on port 8000.

From another terminal, test the health endpoint:

curl -s http://127.0.0.1:8000/health

You should get:

{"status":"ok"}

Final Thoughts

GLM-5 is quickly becoming one of the most talked-about open-weight models in the AI community, especially because it pushes open-source performance closer to that of proprietary models while being designed for deep reasoning, agentic workflows, and coding tasks.

Despite all the hype, running full-scale models locally is still a challenge for regular users.

Even with quantization, models like GLM-5 require hundreds of gigabytes of memory and fast GPUs, something many individuals don’t have on home machines.

This means most people either rely on cloud GPU pods (like the H200 setup in this tutorial) or use hosted API services.

The open-weight nature of GLM-5 is powerful because it lets you host and control your own instance without depending on proprietary API providers, but it also highlights why open source in AI doesn’t magically mean “runs on a laptop” for everyone.

In this tutorial, we saw how to overcome those hardware barriers using a 2-bit quantized version of GLM-5 on a Runpod H200 GPU. We walked through setting up the environment, compiling llama.cpp with CUDA support, downloading the model efficiently, launching the inference server, testing it via a browser UI, and finally connecting a coding tool like Aider to use GLM-5 as an agent for real development tasks.

GLM-5 FAQs

What is GLM-5 and why is it important?

GLM-5 is Z.ai’s newest open-reasoning model, designed specifically for complex tasks like coding, agentic workflows, and long-context chat. With over 750 billion parameters, it is a massive Mixture-of-Experts (MoE) model that rivals top proprietary models in logic and problem-solving. It is particularly notable for its ability to handle "one-shot" website generation and deep reasoning tasks that smaller open-source models often struggle with.

Is a 2-bit quantized model actually smart enough for coding?

Largely, yes. 2-bit quantization usually degrades quality, but very large models like GLM-5 tend to tolerate aggressive compression better than small ones. Even at 2-bit precision, the model retains most of its reasoning ability and can compete with many smaller FP16 models (like Llama-3-70B) on complex coding tasks. It is strong at logic and architecture. Still, expect occasional small mistakes like the stray file shown earlier, and work through a tool like Aider, where you review each edit before accepting it.
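One way to see what "2-bit" means in practice is to divide the checkpoint size by the parameter count. Both values appear in the /v1/models response shown earlier: size = 281,373,251,584 bytes and n_params = 753,864,139,008. The function name below is ours.

```python
def bits_per_weight(size_bytes: int, n_params: int) -> float:
    """Average storage bits per parameter for a quantized checkpoint."""
    return size_bytes * 8 / n_params


# Values from the "meta" block of the llama-server /v1/models response.
SIZE_BYTES = 281_373_251_584
N_PARAMS = 753_864_139_008

if __name__ == "__main__":
    print(f"{bits_per_weight(SIZE_BYTES, N_PARAMS):.2f} bits/weight")  # → 2.99 bits/weight
    print(f"{SIZE_BYTES / 1e9:.0f} GB = {SIZE_BYTES / 2**30:.0f} GiB")
```

The result is close to 3 bits per weight, which likely reflects two things. First, K-quant formats like Q2_K store per-block scales on top of the 2-bit weights. Second, Unsloth's dynamic (UD) quants keep some tensors at higher precision. So the "2-bit" label describes the dominant format, not the average. The second line also shows that the 281GB figure is in decimal units, which is about 262 GiB as reported by tools that use binary units.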

How does GLM-5 differ from previous versions like GLM-4?

The biggest difference is scale and focus. GLM-5 is a generational leap in deep reasoning and agentic capabilities. While GLM-4 was a strong generalist, GLM-5 is a massive MoE model designed to "think" through multi-step engineering problems. It also features a significantly larger context window: about 200K tokens in training, and the server reports n_ctx_train = 202752. That makes it far better suited to analyzing large codebases or long documents.

Why do I need to compile llama.cpp from source to run this?

Standard releases of llama.cpp often lag behind the very latest model architectures. GLM-5 uses a specific variation of the MoE architecture and new tensor operations. It therefore requires upstream changes that, at the time of writing, had not been merged into the main branch, which is why we check out pull request 19460. Without those changes, the model may fail to load. Compiling from source with the CUDA flag (-DGGML_CUDA=ON) also gives you the kernels needed to offload model layers to GPUs like the NVIDIA H200.

Does GLM-5 support "Thinking" or "Reasoning" process tokens?

Yes, GLM-5 is marketed as an open-reasoning model. This means that for complex queries, it can generate internal "thought" chains to break down problems before outputting a final answer. When used with coding agents like Aider, this allows the model to plan a refactor or debug a cryptic error step-by-step, resulting in higher-quality code generation than standard "predict-the-next-token" models.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.