
Organizations struggle to secure, govern, and move large data sets. AWS Lake Formation and AWS Glue are two services that address these challenges: AWS Glue handles data cleaning, restructuring, and data type conversion, while AWS Lake Formation builds data lakes and manages their security and governance.

In this blog, we will demonstrate how to develop a secured, automated data pipeline using AWS Lake Formation and AWS Glue. We will build an ETL job in AWS Glue to move and process sample data stored in an S3 bucket, and we will enforce fine-grained access control through AWS Lake Formation.

What is AWS Lake Formation?

AWS Lake Formation provides the necessary tools to create, manage, and secure data lakes on AWS. With Lake Formation, creating data lakes on Amazon S3 and managing security and permissions is easier.

Using AWS Lake Formation, you can:

- Quickly build a secure data lake.

- Centralize data storage in S3.

- Define granular access control policies.

- Apply data encryption and audit logs for compliance.

What is AWS Glue?

AWS Glue is a serverless data integration service that provides Extract, Transform, and Load (ETL) functionality for data preparation. It automates much of the work of cataloging, transforming, and loading data, which is useful when consolidating data from various sources, such as S3, into data warehouses or databases.

With AWS Glue, you get:

- Glue Data Catalog: A centralized repository for managing metadata.

- ETL jobs: Serverless jobs to transform and move data.

- Glue Studio: A visual interface for designing ETL workflows.

If you’re new to Glue, check out our Getting Started with AWS Glue tutorial.

Why Integrate AWS Lake Formation and AWS Glue?

Integrating AWS Lake Formation and AWS Glue brings the best of both worlds: secure data lakes and automated ETL pipelines. Some benefits of this integration include:

- Automated data pipelines: Easily automate data transformation and movement while maintaining security.

- Centralized data management: Combine Lake Formation’s access control with Glue’s data transformation and cataloging capabilities.

- Improved security: Leverage Lake Formation's fine-grained permissions for controlling access to sensitive data.


Prerequisites for Integration

Before integrating AWS Lake Formation and AWS Glue, ensure you have the following:

- AWS account: Access to AWS Lake Formation and AWS Glue.

- Essential knowledge: Familiarity with data lakes, ETL, and AWS services.

- S3 bucket: A pre-configured S3 bucket to store your data.

- IAM roles: Necessary IAM roles and permissions are required to access both services.

- CloudFormation: A template is provided to deploy the base setup. You can find the template here.

Step-by-Step Guide to Integration

Once you have the prerequisites, just follow these steps:

Step 1: Setting up AWS Lake Formation

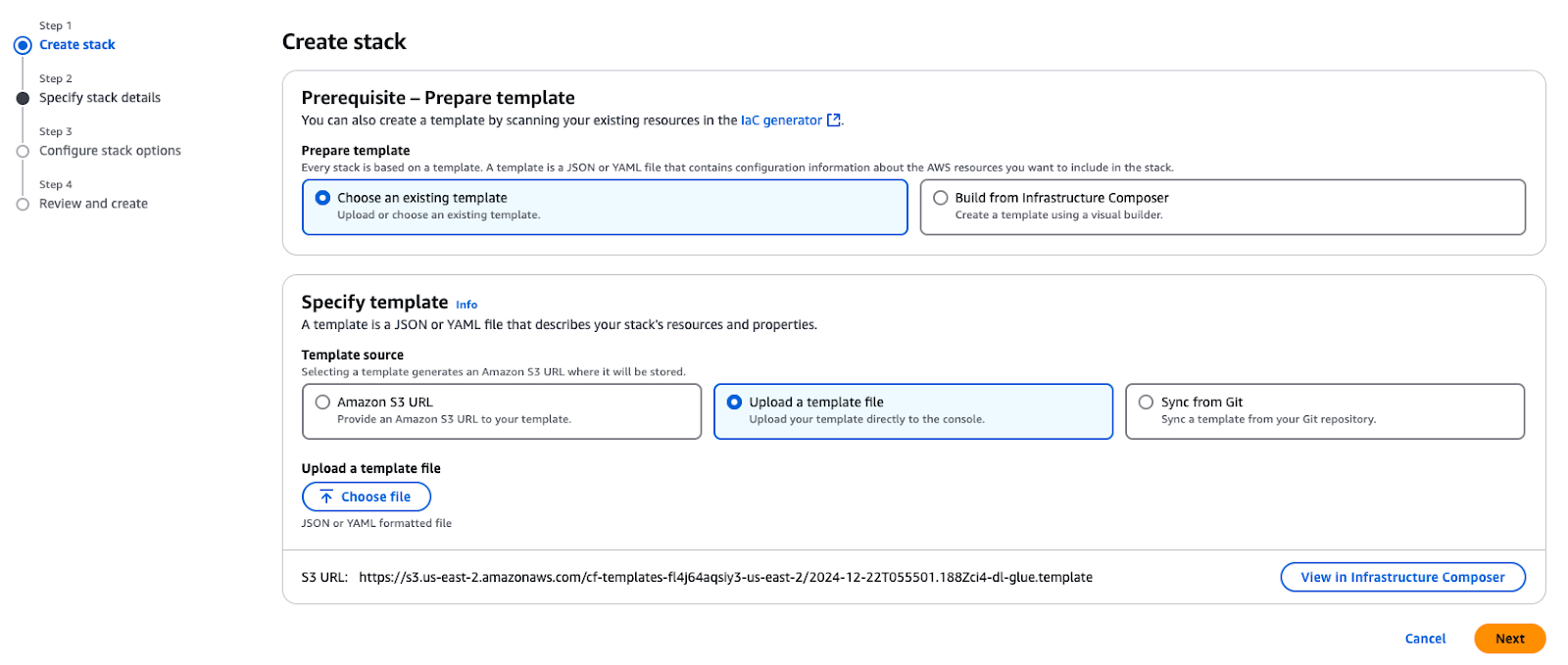

The first step is deploying the core AWS resources needed to build a secured data lake before starting the integration process. The AWS CloudFormation template helps organize and deploy all the required resources.

Use the provided CloudFormation template to create a stack in your AWS account. This stack provisions essential resources required for the use cases described in this tutorial.

Creating a stack in AWS CloudFormation

Upon deploying the stack, the following key resources will be created in your AWS account:

- Amazon VPC and subnet: A VPC and a public subnet for hosting resources with appropriate networking configurations.

- Amazon S3 buckets:

- Stores blog-related data, scripts, and results.

- Hosts the TPC data used for building the data lake.

- IAM roles and policies:

- GlueServiceRole: Grants AWS Glue access to S3 and Lake Formation services.

- DataEngineerGlueServiceRole: Provides engineers with permissions for data access and processing.

- DataAdminUser and DataEngineerUser: Pre-configured IAM users for exploring and managing Lake Formation security.

- AWS Glue Crawler: A TPC Crawler to scan and catalog the Parquet data stored in the S3 bucket.

- Amazon EC2 instance: A helper instance (EC2-DB-Loader) for pre-loading and transferring sample data into S3.

- AWS Secrets Manager: A secret (lf-users-credentials) to securely store user credentials for the pre-created IAM users.

After the stack is created, you will have the following:

- A sample TPC database stored in Amazon S3.

- Pre-created users and roles to explore security patterns and access controls.

- Glue configurations and crawlers to catalog and process your data.

- All the foundational components needed to follow the steps in this blog.
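If you prefer to script the deployment instead of using the console, the stack creation can be sketched with boto3 roughly as follows. The stack name and template URL are placeholders (the template link is not reproduced here), and the `CAPABILITY_NAMED_IAM` acknowledgement is an assumption based on the stack creating IAM users and roles.

```python
def build_stack_request(stack_name, template_url):
    """Return keyword arguments for CloudFormation's create_stack call."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        # Assumption: the template creates named IAM users and roles, so
        # CloudFormation needs this capability acknowledged explicitly.
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }


def deploy_stack(cfn_client, stack_name, template_url):
    """Create the stack and block until CloudFormation reports completion."""
    response = cfn_client.create_stack(**build_stack_request(stack_name, template_url))
    cfn_client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]


# Usage (requires boto3 and AWS credentials; both values are placeholders):
#   import boto3
#   deploy_stack(boto3.client("cloudformation"), "lf-glue-demo",
#                "https://example.com/path/to/template.yaml")
```

Passing the client in as an argument keeps the functions easy to try against a stub before pointing them at a real account.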

Step 2: Configuring data permissions

In this section, the Data Lake Administrator will configure AWS Lake Formation to make it available for data consumer personas, including the data engineers. The administrator will:

- Create a new database in AWS Lake Formation to store TPC data.

- Register metadata tables for the TPC dataset in the AWS Glue Data Catalog.

- Configure Lake Formation tags and policies to establish access permissions for different users.

These configurations provide secure, fine-grained access control and smooth coordination with other AWS services.

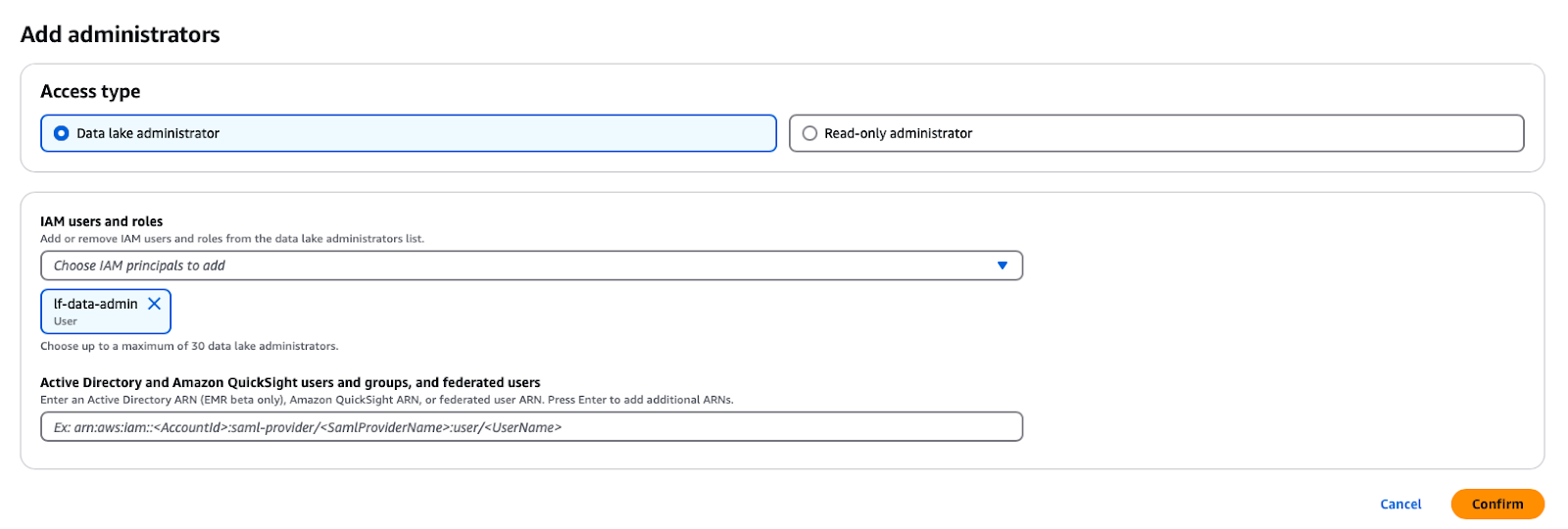

Setting up Data Lake Administrator

A Data Lake Administrator is an Identity and Access Management (IAM) user or role that can grant any principal (including itself) permission on any Data Catalog entity. The Data Lake Administrator is usually the first user created to manage the Data Catalog and is commonly the user who is granted administrative privileges for the Data Catalog.

In the AWS Lake Formation console, choose Administrative roles and tasks in the navigation pane, click Add administrators, and then select the lf-data-admin IAM user from the dropdown list.

Adding a Data Lake Administrator in AWS Lake Formation

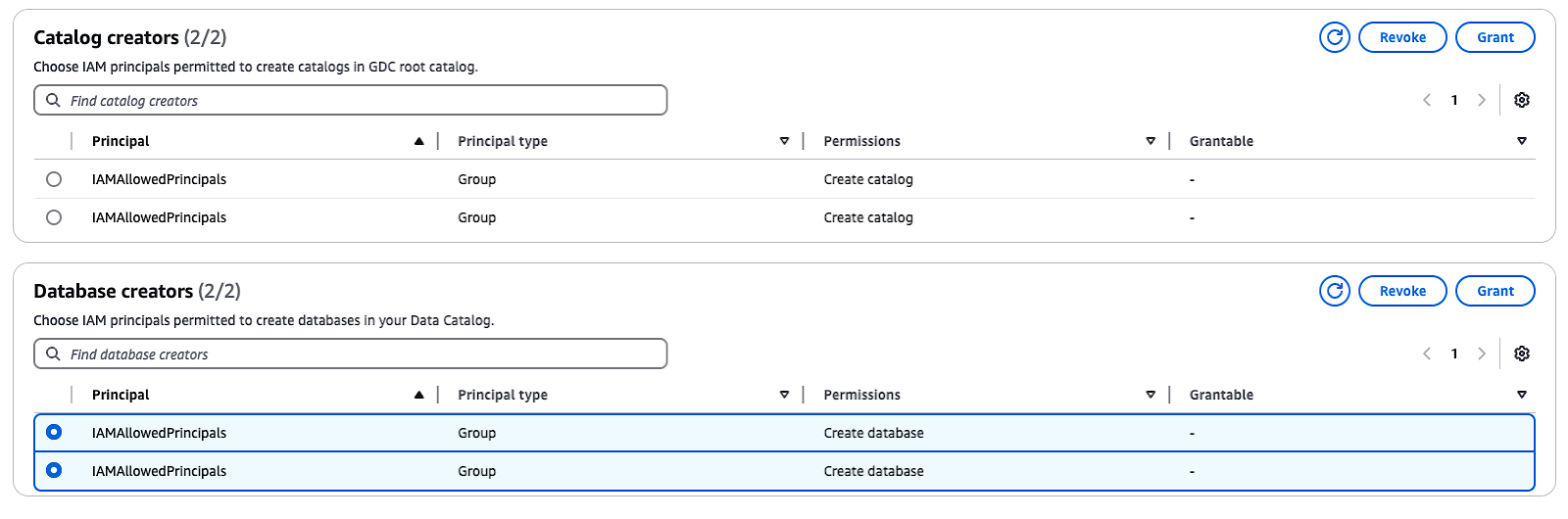
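The same change can be made programmatically. The sketch below assumes a boto3 Lake Formation client and a full IAM ARN for the lf-data-admin user (the ARN in the usage comment is a placeholder). Because `put_data_lake_settings` replaces the whole settings object, the function reads the current settings first and only touches the administrator list.

```python
def add_data_lake_admin(lf_client, principal_arn):
    """Add one principal to the Data Lake Administrators list."""
    # Read the existing settings so that unrelated fields are preserved
    # when the settings object is written back.
    settings = lf_client.get_data_lake_settings()["DataLakeSettings"]
    admins = list(settings.get("DataLakeAdmins", []))
    already_admin = any(
        admin["DataLakePrincipalIdentifier"] == principal_arn for admin in admins
    )
    if not already_admin:
        admins.append({"DataLakePrincipalIdentifier": principal_arn})
    settings["DataLakeAdmins"] = admins
    lf_client.put_data_lake_settings(DataLakeSettings=settings)
    return admins


# Usage (requires boto3 and AWS credentials; the account ID is a placeholder):
#   import boto3
#   add_data_lake_admin(boto3.client("lakeformation"),
#                       "arn:aws:iam::111122223333:user/lf-data-admin")
```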

Changing catalog settings

By default, Lake Formation selects the “Use only IAM access control” option for compatibility with the AWS Glue Data Catalog. To enable fine-grained access control with Lake Formation permissions, you need to adjust these settings:

- In the navigation pane, under Administration, choose Administrative roles and tasks.

- If the IAMAllowedPrincipals group appears under Database creators, select the group and choose Revoke.

- In the Revoke permissions dialog box, confirm that the group has the Create database permission and then click Revoke.

These steps will disable default IAM access control and allow you to implement Lake Formation permissions for enhanced security.

Managing catalog and database creators in AWS Lake Formation
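For reference, here is a hedged boto3 sketch of the same idea. The first function mirrors the console revoke described above; the second clears the catalog-wide defaults that correspond to the “Use only IAM access control” checkboxes, which is an additional step we assume you may want but which the console walkthrough does not perform.

```python
def revoke_default_database_creator(lf_client):
    """Revoke Create database from the IAMAllowedPrincipals group."""
    lf_client.revoke_permissions(
        Principal={"DataLakePrincipalIdentifier": "IAM_ALLOWED_PRINCIPALS"},
        Resource={"Catalog": {}},
        Permissions=["CREATE_DATABASE"],
    )


def clear_iam_only_defaults(lf_client):
    """Stop new databases and tables from defaulting to IAM-only access."""
    # put_data_lake_settings overwrites everything, so start from the
    # current settings and change only the two default-permission lists.
    settings = lf_client.get_data_lake_settings()["DataLakeSettings"]
    settings["CreateDatabaseDefaultPermissions"] = []
    settings["CreateTableDefaultPermissions"] = []
    lf_client.put_data_lake_settings(DataLakeSettings=settings)
    return settings


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   lf = boto3.client("lakeformation")
#   revoke_default_database_creator(lf)
#   clear_iam_only_defaults(lf)
```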

Step 3: Using the AWS Glue Data Catalog

Now, it is time to put the Glue Data Catalog to use.

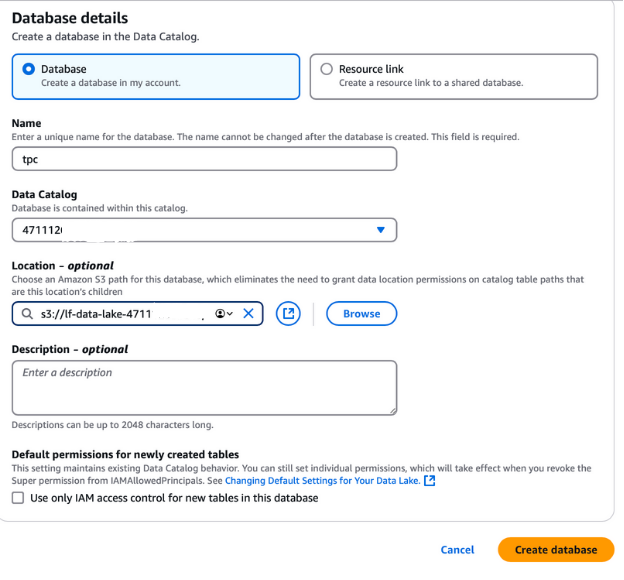

Creating a database

To create a database for the TPC data, log out from your current AWS session and log back in as the lf-data-admin user. Use the sign-in link provided in the CloudFormation output and the password retrieved from AWS Secrets Manager.

- Navigate to the AWS Lake Formation console and select Databases from the left navigation pane.

- Click on the Create database button.

- Name the database “tpc”, as it will be used for the TPC data lake.

- In the Location field, select the S3 data lake path created by the CloudFormation stack. This path can be found in the CloudFormation output tab and will be named lf-data-lake-account-ID, where account-ID is your 12-digit AWS account number.

- Uncheck the option Use only IAM access control for new tables in this database to enable fine-grained access control.

- Leave all other options at their default values and click Create database.

This database will be the foundation for storing and managing metadata for the TPC data in the Lake Formation setup.

Creating a database in AWS Lake Formation
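A minimal boto3 sketch of the same database creation is shown below. It assumes the bucket follows the lf-data-lake-account-ID naming described above and that the database points at the bucket root; an empty `CreateTableDefaultPermissions` list is the API counterpart of unchecking the IAM-only option.

```python
def build_database_input(name, bucket_name):
    """Return the DatabaseInput structure for glue.create_database."""
    return {
        "Name": name,
        "LocationUri": f"s3://{bucket_name}/",
        # Empty list: new tables do not inherit IAM-only access control.
        "CreateTableDefaultPermissions": [],
    }


def create_tpc_database(glue_client, account_id):
    """Create the tpc database on top of the data lake bucket."""
    database_input = build_database_input("tpc", f"lf-data-lake-{account_id}")
    glue_client.create_database(DatabaseInput=database_input)
    return database_input


# Usage (requires boto3 and AWS credentials; the account ID is a placeholder):
#   import boto3
#   create_tpc_database(boto3.client("glue"), "111122223333")
```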

Hydrating the Data Lake

We use an AWS Glue Crawler to create tables in the AWS Glue Data Catalog. Crawlers are the most common way of creating tables in AWS Glue, as they can scan through multiple data stores at once and generate or update the details of the tables in the Data Catalog after the crawl is done.

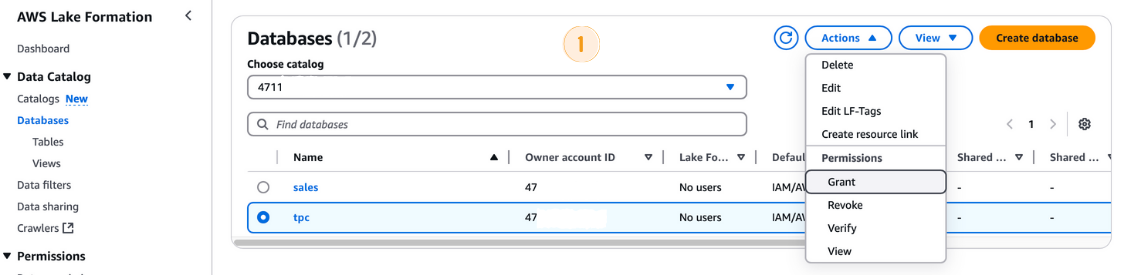

Before running the AWS Glue Crawler, you need to grant the required permissions to its IAM role:

- In the AWS Lake Formation console, navigate to Databases and select the tpc database.

- From the Actions dropdown menu, choose Grant.

- In the Grant permissions dialog box:

- Under Principals, select the IAM role LF-GlueServiceRole.

- In the LF-Tags or catalog resources section, ensure Named data catalog resources is selected.

- Select tpc as the database.

- In the Database permissions section, check the Create table option.

- Click Grant.

Granting permissions in AWS Lake Formation

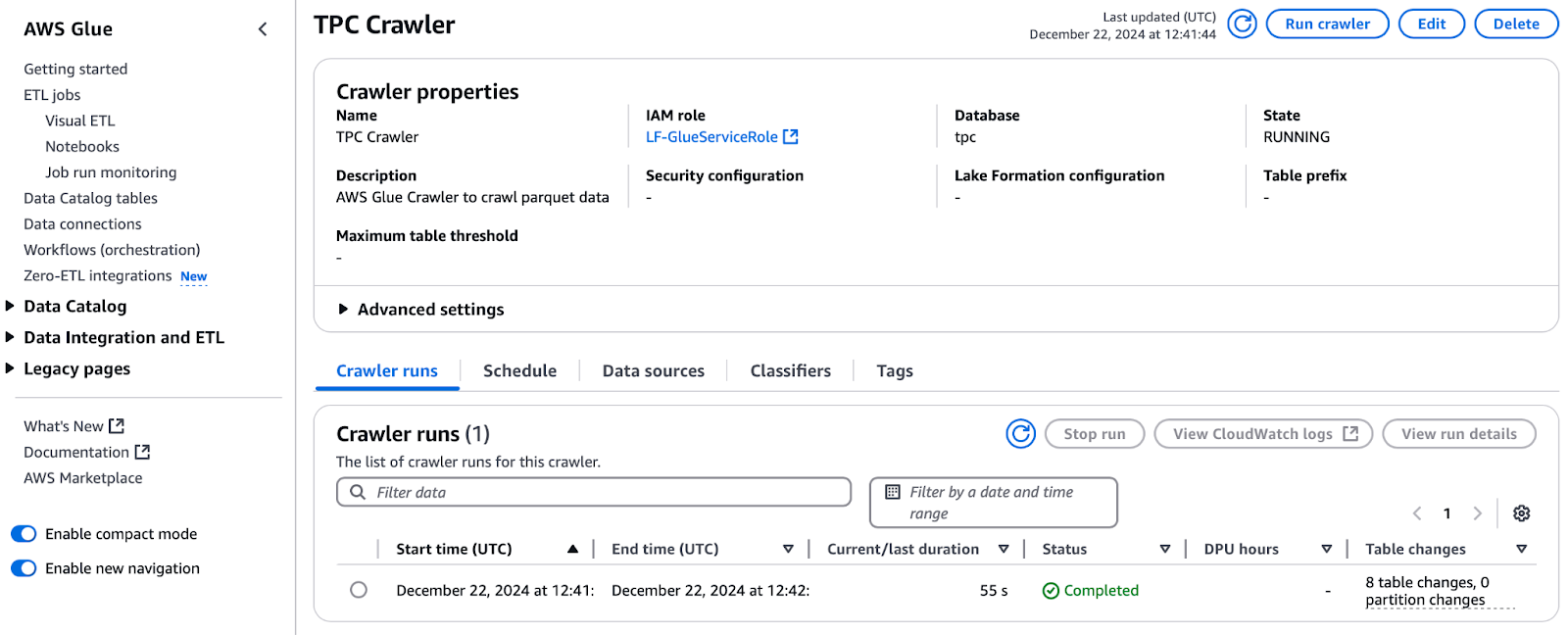
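The grant above can also be expressed through the Lake Formation API. This is a sketch under the assumption that you know the full ARN of LF-GlueServiceRole (the one in the usage comment is a placeholder, including the role path).

```python
def build_database_grant(role_arn, database, permissions):
    """Return keyword arguments for lakeformation.grant_permissions."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {"Database": {"Name": database}},
        "Permissions": list(permissions),
    }


def grant_crawler_create_table(lf_client, role_arn, database="tpc"):
    """Let the crawler's role create tables in the given database."""
    request = build_database_grant(role_arn, database, ["CREATE_TABLE"])
    lf_client.grant_permissions(**request)
    return request


# Usage (requires boto3 and AWS credentials; the ARN is a placeholder):
#   import boto3
#   grant_crawler_create_table(
#       boto3.client("lakeformation"),
#       "arn:aws:iam::111122223333:role/LF-GlueServiceRole")
```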

Running the AWS Glue Crawler

With permissions granted, proceed to run the crawler:

- In the AWS Lake Formation console, navigate to Crawlers and click to open the AWS Glue console.

- Select the crawler named TPC Crawler and click Run crawler.

- Monitor the progress by clicking the Refresh button.

- Once completed, the tpc database will be populated with tables. Check the Table changes from the last run column to see the number of tables added (e.g., 8 tables).

Running the TPC Crawler in AWS Glue

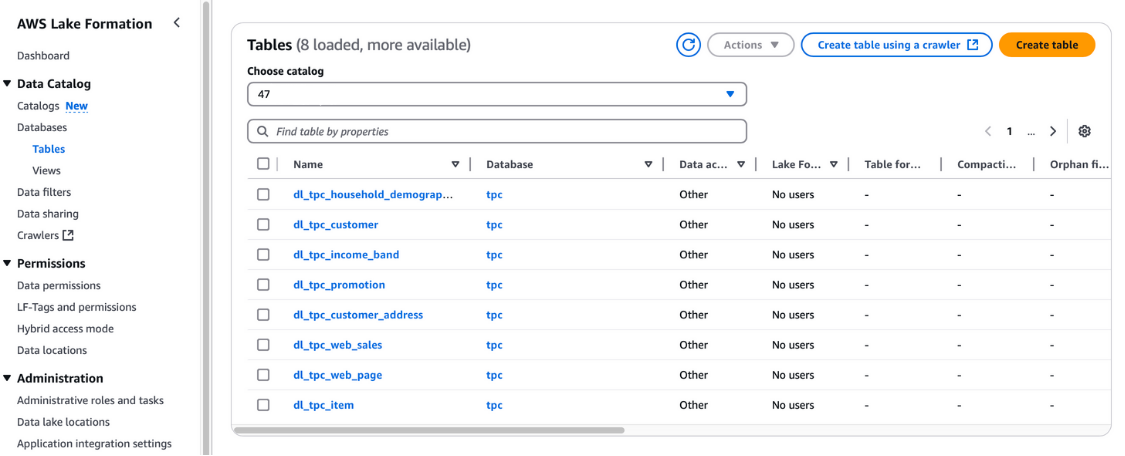

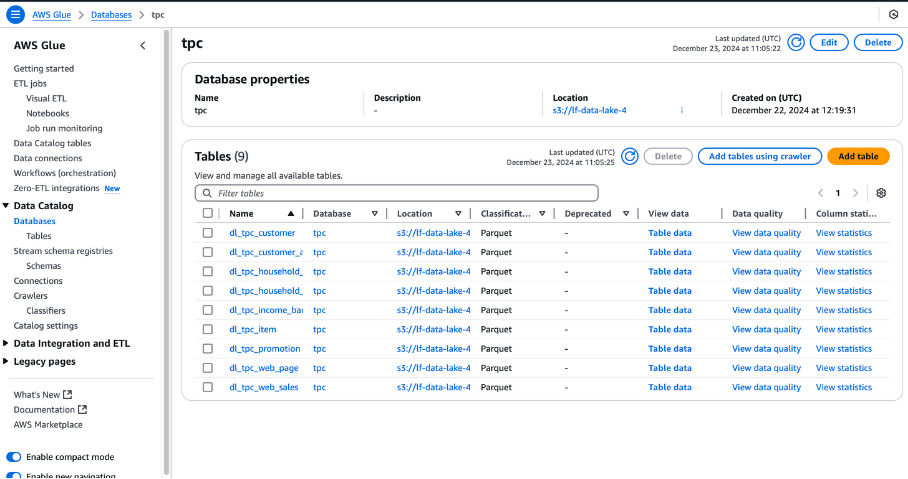
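If you would rather start the crawler from a script, a simple start-and-poll loop is one way to do it. The sketch below assumes a boto3 Glue client; the polling interval and timeout are arbitrary illustrative values.

```python
import time


def run_crawler_and_wait(glue_client, crawler_name, poll_seconds=15, timeout_seconds=1800):
    """Start a crawler, wait until it is idle again, and return the last crawl status."""
    glue_client.start_crawler(Name=crawler_name)
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        crawler = glue_client.get_crawler(Name=crawler_name)["Crawler"]
        # A crawler returns to the READY state once the run has finished.
        if crawler["State"] == "READY":
            return crawler.get("LastCrawl", {}).get("Status")
        time.sleep(poll_seconds)
    raise TimeoutError(f"Crawler {crawler_name!r} did not finish in time")


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   status = run_crawler_and_wait(boto3.client("glue"), "TPC Crawler")
```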

You can also verify these tables in the AWS Lake Formation console under Data catalog and Tables.

Viewing tables in AWS Lake Formation

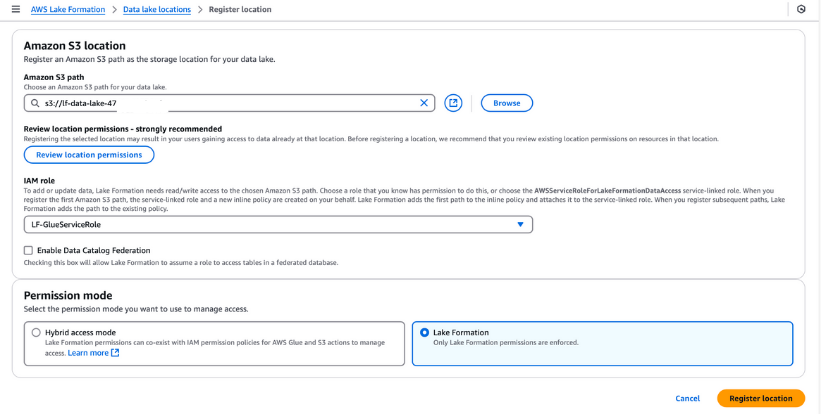

Registering Data Lake locations

You must register the S3 bucket in AWS Lake Formation for your data lake storage. This registration allows you to enforce fine-grained access control on AWS Glue Data Catalog objects and the underlying data stored in the bucket.

Steps to register a Data Lake location:

- Navigate to the Data Lake Locations section in the AWS Lake Formation console and click on Register location.

- Enter the path to the S3 bucket created through the CloudFormation template. This bucket will store the data ingested during the pipeline process.

- For the IAM Role, select the LF-GlueServiceRole created by the CloudFormation template.

- Under Permission mode, choose Lake Formation to enable advanced access control features.

- Click Register location to save your configuration.


Once registered, verify your data lake location to ensure it is correctly configured. This step is essential for seamless data management and security in your Lake Formation setup.

Registering an Amazon S3 location in AWS Lake Formation
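The registration step maps to a single Lake Formation API call. The following sketch assumes the whole bucket is registered (rather than a prefix) and that the role ARN for LF-GlueServiceRole is known; both values in the usage comment are placeholders.

```python
def build_register_request(bucket_name, role_arn):
    """Return keyword arguments for lakeformation.register_resource."""
    return {
        "ResourceArn": f"arn:aws:s3:::{bucket_name}",
        "RoleArn": role_arn,
        # Use the role from the stack instead of the service-linked role.
        "UseServiceLinkedRole": False,
    }


def register_data_lake_location(lf_client, bucket_name, role_arn):
    """Register an S3 bucket as a Lake Formation data lake location."""
    request = build_register_request(bucket_name, role_arn)
    lf_client.register_resource(**request)
    return request


# Usage (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   register_data_lake_location(
#       boto3.client("lakeformation"),
#       "lf-data-lake-111122223333",
#       "arn:aws:iam::111122223333:role/LF-GlueServiceRole")
```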

Lake Formation tags (LF-Tags)

LF-Tags let you organize resources and set permissions on many resources at once. You classify data using taxonomies, which breaks complex policies into manageable pieces. Because LF-Tags separate policy from resource, you can define access policies even before the resources are created.

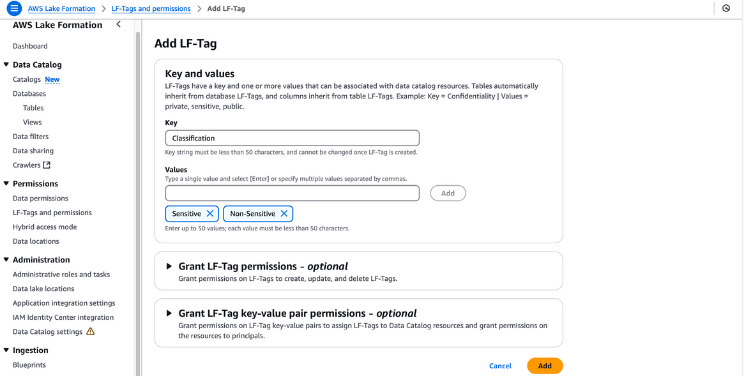

Steps to create and assign LF-Tags

- Create LF-Tags:

- Navigate to the AWS Lake Formation console.

- In the navigation pane, choose LF-Tags and permissions, then click Add LF-Tag.

- Provide “Classification” as the key and add “Sensitive” and “Non-Sensitive” as the values. Click Add LF-Tag.

Creating LF-Tags in AWS Lake Formation

- Grant LF-tag permissions:

- Ensure the lf-data-admin user has LF-Tag permissions. Navigate to Tables in the Lake Formation console.

- Select the table dl_tpc_customer, click the actions menu, and choose Grant.

- Under IAM users and roles, select lf-data-admin.

- For LF-Tags or catalog resources, select Named data catalog resources.

- Choose the tpc database and the table dl_tpc_customer, granting Alter table permissions. Click Grant.

Granting permissions in AWS Lake Formation

- Assign LF-tags to Data Catalog objects:

- Navigate to Tables and select the dl_tpc_customer table. Open the table details and choose Edit LF-Tags from the actions menu.

- Click Assign new LF-Tag, provide “Classification” as the key, and set “Non-Sensitive” as the value. Click Save.

- From the table's actions menu, choose Edit schema. Select columns such as c_first_name, c_last_name, and c_email_address, and click Edit LF-Tags.

- For the inherited key Classification, change the value to “Sensitive” and click Save. Save this configuration as a new version.

- Apply LF-Tags to additional tables:

- For the dl_tpc_household_demographics table, go to Actions and choose Edit LF-Tags.

- In the dialog box, click Assign new LF-Tag. Set Group as the key and Analyst as the value. Click Save to finalize.

By following these steps, you can efficiently assign LF-Tags to your Data Catalog objects, ensuring secure, fine-grained access control across your data lake.
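The tagging steps can be sketched with the Lake Formation API as well. The snippet assumes a boto3 Lake Formation client and reuses the tag key, values, table, and column names from the walkthrough; the helper function names themselves are ours.

```python
def create_classification_tag(lf_client):
    """Create the Classification LF-Tag with its two allowed values."""
    lf_client.create_lf_tag(
        TagKey="Classification", TagValues=["Sensitive", "Non-Sensitive"]
    )


def tag_table(lf_client, database, table, key, value):
    """Assign one LF-Tag value to a whole table."""
    return lf_client.add_lf_tags_to_resource(
        Resource={"Table": {"DatabaseName": database, "Name": table}},
        LFTags=[{"TagKey": key, "TagValues": [value]}],
    )


def tag_columns(lf_client, database, table, columns, key, value):
    """Override the table-level tag value for specific columns."""
    return lf_client.add_lf_tags_to_resource(
        Resource={
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        LFTags=[{"TagKey": key, "TagValues": [value]}],
    )


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   lf = boto3.client("lakeformation")
#   create_classification_tag(lf)
#   tag_table(lf, "tpc", "dl_tpc_customer", "Classification", "Non-Sensitive")
#   tag_columns(lf, "tpc", "dl_tpc_customer",
#               ["c_first_name", "c_last_name", "c_email_address"],
#               "Classification", "Sensitive")
```

Tagging the table first and then overriding individual columns mirrors the console flow, where columns inherit the table's value until you change it.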

Step 4: Creating an ETL job in AWS Glue

AWS Glue Studio is a drag-and-drop user interface that helps users develop, debug, and monitor ETL jobs for AWS Glue. Data engineers leverage Glue ETL, based on Apache Spark, to transform data sets stored in Amazon S3 and load data in data lakes and warehouses for analysis. To effectively administer access when several teams collaborate on the same data sets, it is important to provide access and restrict it according to roles.

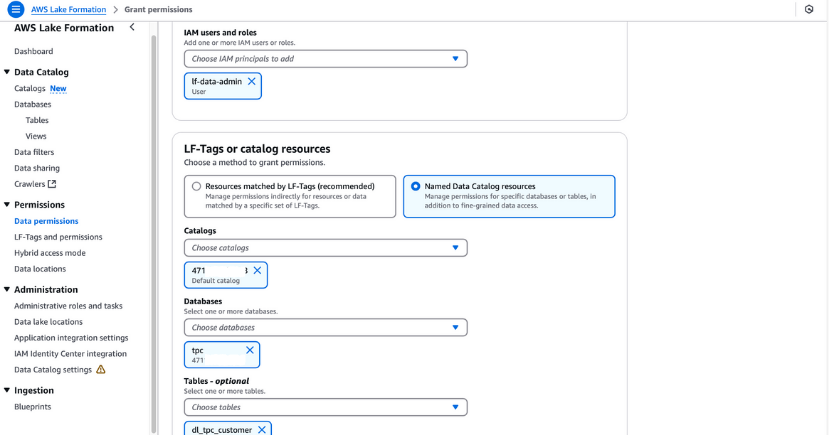

Granting permissions with AWS Lake Formation

Follow these steps to grant the required permissions to the lf-data-engineer user and the corresponding DE-GlueServiceRole role. This role is used by the data engineer to access Glue Data Catalog tables in Glue Studio jobs.

- Log in as the Data Lake Administrator:

- Use the lf-data-admin user to log into the AWS Management Console. Retrieve the password from AWS Secrets Manager and the login URL from the CloudFormation output.

- Grant select permissions for a table:

- Navigate to Data lake permissions in the Lake Formation console.

- Click Grant, and in the dialog box, provide the following information:

- IAM users and roles: Enter lf-data-engineer and DE-GlueServiceRole.

- LF-Tags or catalog resources: Select Named data catalog resources.

- Databases: Choose tpc.

- Tables: Choose dl_tpc_household_demographics.

- Table permissions: Select Select and Describe.

- Click Grant to save the permissions.

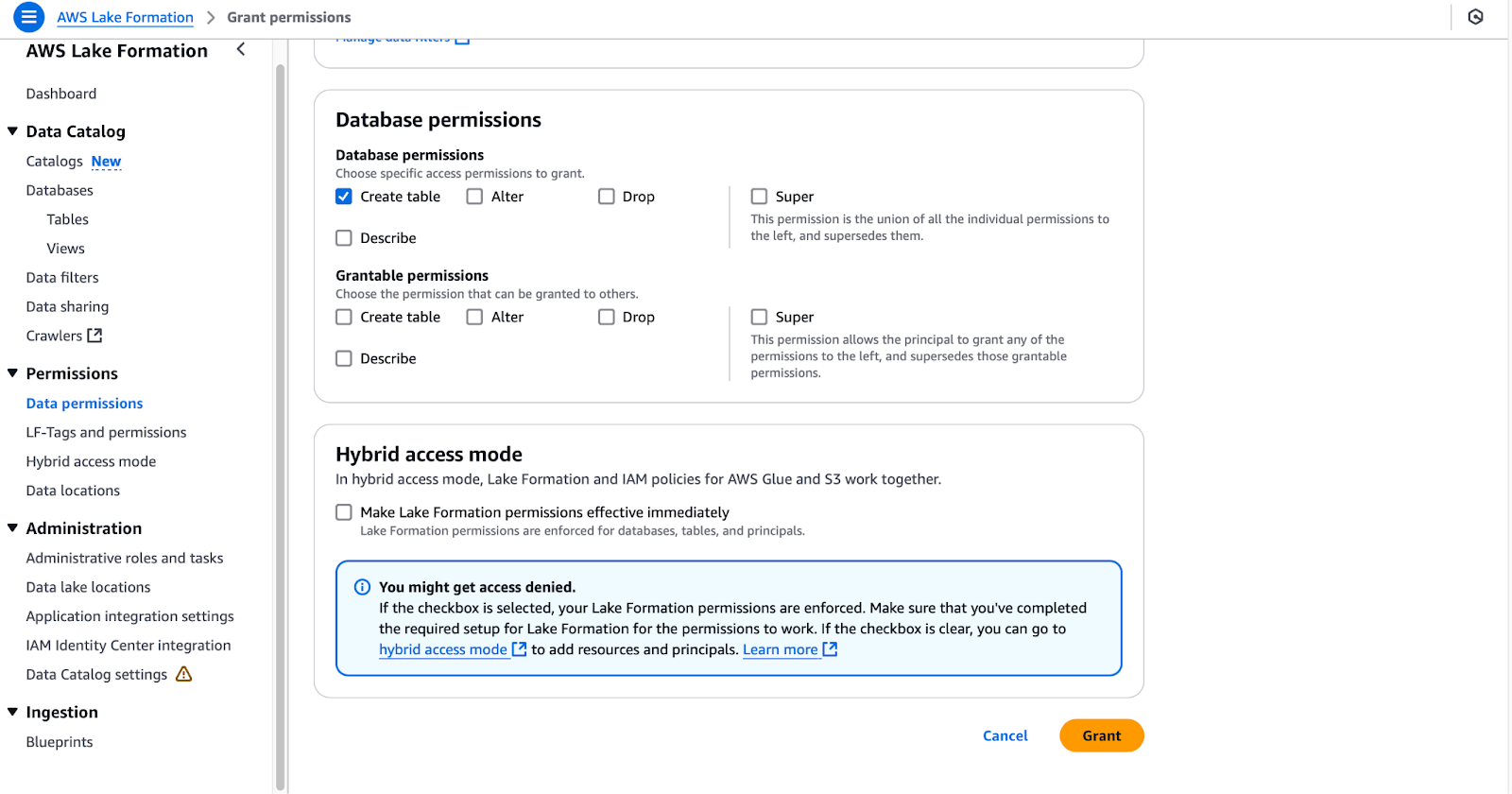

- Grant create table permissions for a database:

- Navigate to Data Lake Permissions again.

- Click Grant, and in the dialog box, provide the following information:

- IAM users and roles: Enter DE-GlueServiceRole.

- LF-Tags or catalog resources: Select Named data catalog resources.

- Databases: Choose tpc (leave tables unselected).

- Database permissions: Select Create table.

- Click Grant to finalize the permissions.

Configuring database permissions in AWS Lake Formation

Completing these steps will give the lf-data-engineer user and DE-GlueServiceRole role the necessary permissions to work with Glue Data Catalog tables and manage resources in Glue Studio jobs. This setup ensures secure, role-based access to your data lake.
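Both grants can be submitted together with the batch API. This is a sketch, assuming a boto3 Lake Formation client and known ARNs for the lf-data-engineer user and DE-GlueServiceRole; the entry IDs are arbitrary labels chosen for this example.

```python
def build_engineer_grants(user_arn, role_arn, database, table):
    """Build batch entries for the table-level and database-level grants."""
    table_resource = {"Table": {"DatabaseName": database, "Name": table}}
    entries = []
    # Select and Describe on the source table for the user and the job role.
    for entry_id, arn in (("user-select", user_arn), ("role-select", role_arn)):
        entries.append({
            "Id": entry_id,
            "Principal": {"DataLakePrincipalIdentifier": arn},
            "Resource": table_resource,
            "Permissions": ["SELECT", "DESCRIBE"],
        })
    # Create table on the database, for the job role only.
    entries.append({
        "Id": "role-create-table",
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {"Database": {"Name": database}},
        "Permissions": ["CREATE_TABLE"],
    })
    return entries


def grant_engineer_access(lf_client, entries):
    """Submit the grants and return any entries that failed."""
    response = lf_client.batch_grant_permissions(Entries=entries)
    return response.get("Failures", [])


# Usage (requires boto3 and AWS credentials; ARNs are placeholders):
#   import boto3
#   entries = build_engineer_grants(
#       "arn:aws:iam::111122223333:user/lf-data-engineer",
#       "arn:aws:iam::111122223333:role/DE-GlueServiceRole",
#       "tpc", "dl_tpc_household_demographics")
#   failures = grant_engineer_access(boto3.client("lakeformation"), entries)
```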

Creating Glue Studio ETL jobs

In this section, we will create an ETL job in AWS Glue Studio using SQL Transform.

- Log in to AWS as lf-data-engineer:

- Use the lf-data-engineer user to log into the AWS Management Console. Retrieve the password from AWS Secrets Manager and the login URL provided by the CloudFormation output.

- Open Glue Studio and start a new job:

- Navigate to the Glue Studio console and click on Visual ETL.

- Rename the job to LF_GlueStudio so it can be identified easily.

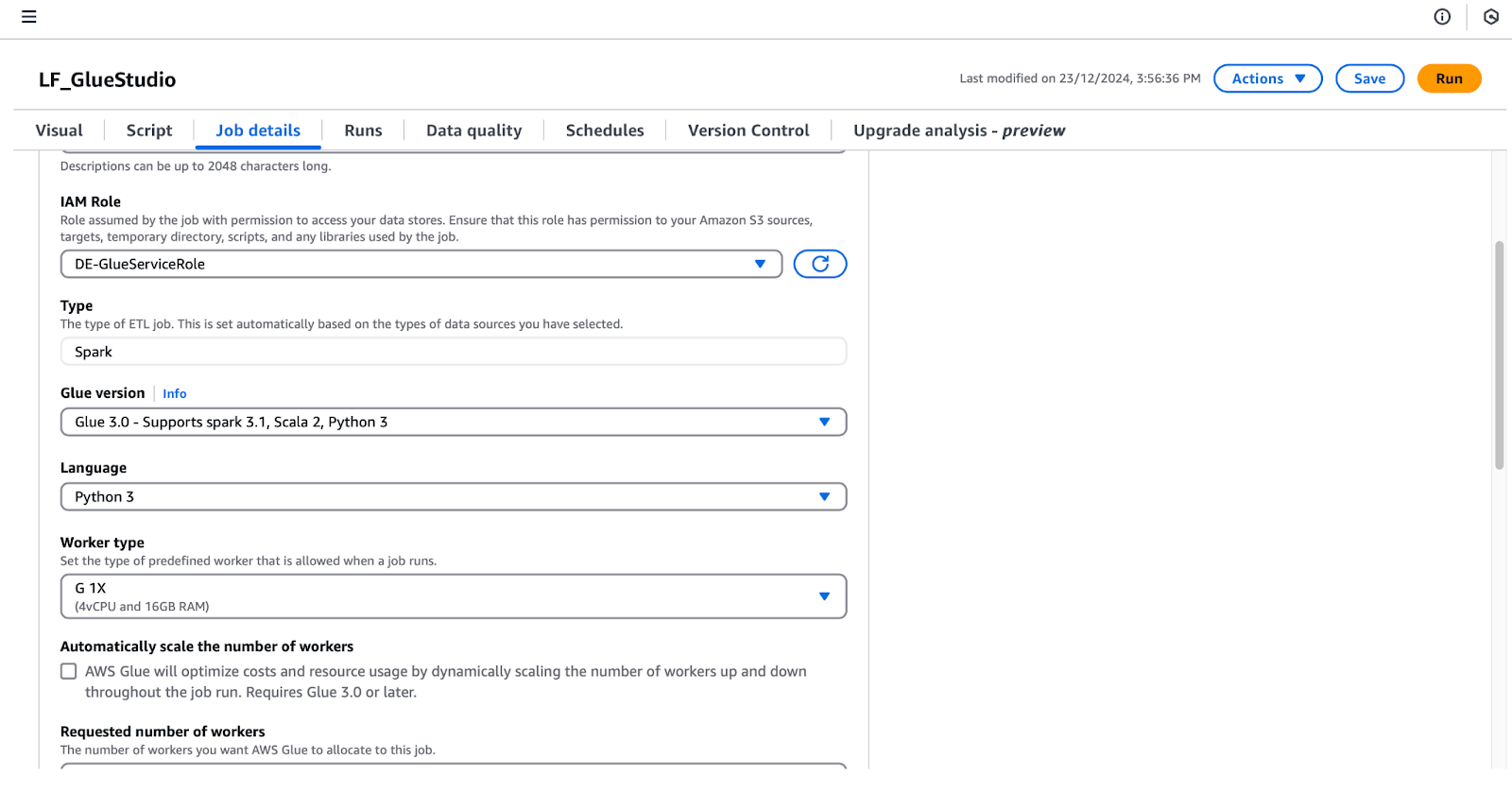

- Configure job details:

- Click the Job Details tab to set up the following configurations:

- IAM role: Select DE-GlueServiceRole.

- Glue version: Select Glue 5.0 - Supports Spark 3.5, Scala 2, Python 3.

- Requested number of workers: Set to 5.

- Job bookmark: Select Disable.

- Number of retries: Set to 0.

Configuring job details in AWS Glue Studio

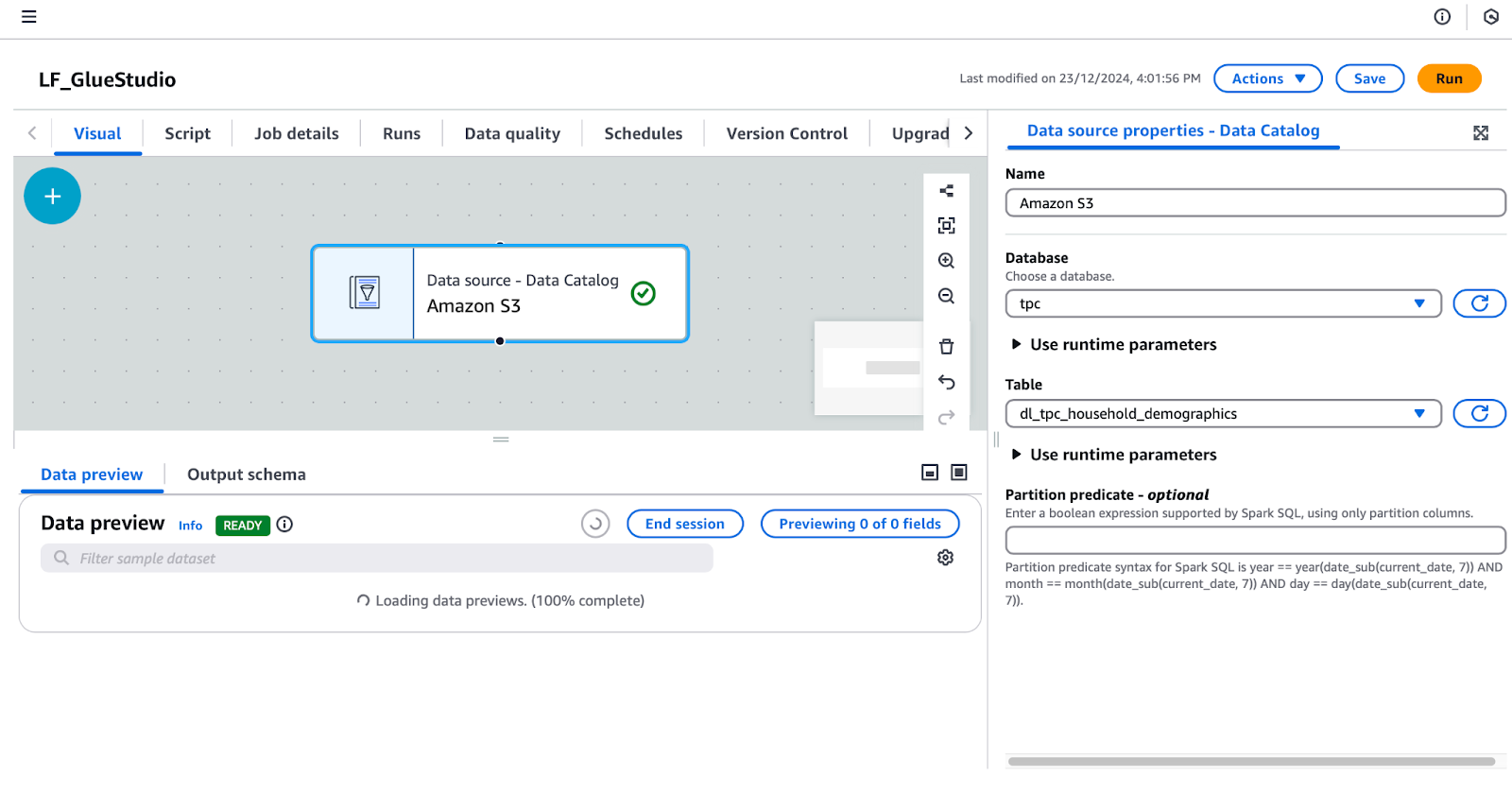

- Add a data source node:

- Under the Visual tab, click the + button and add an AWS Glue Data Catalog node to configure the data source. Rename this node to “Amazon S3”.

- Select the Amazon S3 node on the visual editor and configure its properties in the right panel:

- Under Data Source Properties - Data Catalog, set:

- Database: tpc.

- Table: dl_tpc_household_demographics. (Note: The lf-data-admin user has only granted lf-data-engineer Select permission on this table. Other tables will not appear in the dropdown.)

Configuring a data source in AWS Glue Studio

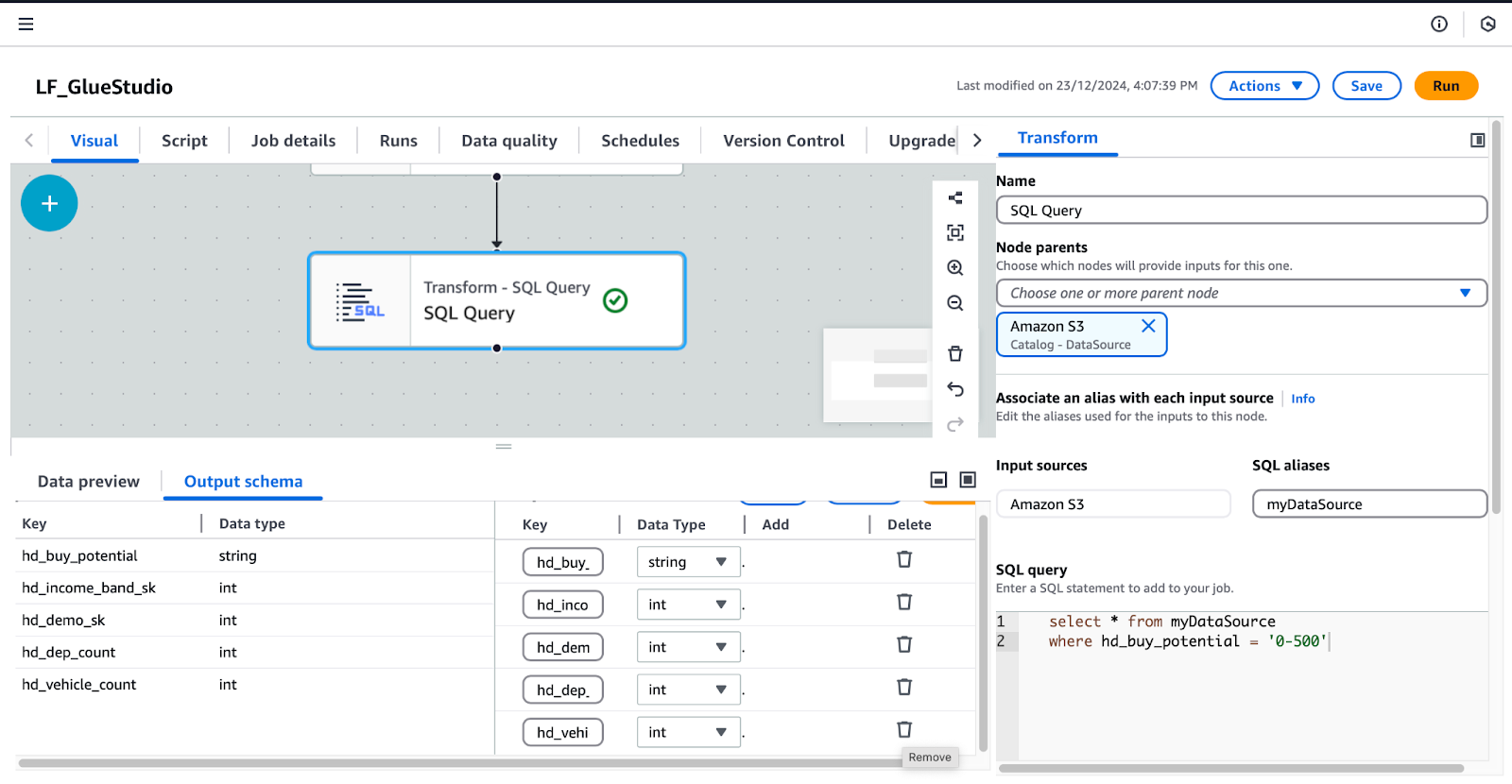

- Add and configure SQL Query node:

- With the Amazon S3 node selected, click the + button and add an SQL Query node as the transform.

- Select the SQL Query node and configure it:

- Under Transform, input your SQL query in the SQL Query field.

- Add this query:

/* Select all records where buy potential is between 0 and 500 */

SELECT *

FROM myDataSource

WHERE hd_buy_potential = '0-500';

- Under Output Schema, click Edit and delete the keys hd_dep_count and hd_vehicle_count. Click Apply.

Configuring SQL Query Transform in AWS Glue Studio

- Add a data target node:

- With the SQL Query node selected, click the + button and add a Data Target node. Select Amazon S3 Target from the search results.

- Configure the Data Target-S3 node in the right panel:

- Format: Select Parquet.

- Compression type: Select Snappy.

- S3 target location: Enter s3://<your_LFDataLakeBucketName>/gluestudio/curated/. (Replace <your_LFDataLakeBucketName> with the actual bucket name from the CloudFormation Outputs tab.)

- Data Catalog update options: Select Create a table in the Data Catalog and on subsequent runs, update the schema and add new partitions.

- Database: Select tpc.

- Table name: Provide a name for the output table. For example, dl_tpc_household_demographics_below500.

- Save and review the job:

- Click Save in the top-right corner to save the job.

- Optionally, switch to the Script tab to view the auto-generated script. You can download or edit this script if needed.

By following these steps, you will create a fully functional Glue Studio ETL job that transforms data and writes the results to a curated S3 folder while updating the Glue Data Catalog.
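To see what the SQL Query transform and the schema edit do to the data, you can try the same logic locally. The sketch below uses Python's built-in sqlite3 instead of Spark, and the sample rows are made up for illustration; only the column names and the query come from the steps above. Note that '0-500' is a string label stored in hd_buy_potential, so the filter is an equality test rather than a numeric range check.

```python
import sqlite3

# Made-up rows: (hd_demo_sk, hd_income_band_sk, hd_buy_potential,
#                hd_dep_count, hd_vehicle_count)
SAMPLE_ROWS = [
    (1, 2, "0-500", 0, 1),
    (2, 3, "501-1000", 1, 2),
    (3, 4, "0-500", 2, 0),
    (4, 5, "Unknown", 3, 1),
]

# Columns removed in the Output Schema step.
DROPPED_COLUMNS = {"hd_dep_count", "hd_vehicle_count"}


def run_transform(rows):
    """Apply the tutorial's filter, then drop the two unwanted columns."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    conn.execute(
        "CREATE TABLE myDataSource ("
        "hd_demo_sk INTEGER, hd_income_band_sk INTEGER, "
        "hd_buy_potential TEXT, hd_dep_count INTEGER, hd_vehicle_count INTEGER)"
    )
    conn.executemany("INSERT INTO myDataSource VALUES (?, ?, ?, ?, ?)", rows)
    cursor = conn.execute(
        "SELECT * FROM myDataSource WHERE hd_buy_potential = '0-500'"
    )
    result = [
        {key: row[key] for key in row.keys() if key not in DROPPED_COLUMNS}
        for row in cursor.fetchall()
    ]
    conn.close()
    return result


# Usage:
#   for record in run_transform(SAMPLE_ROWS):
#       print(record)
```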

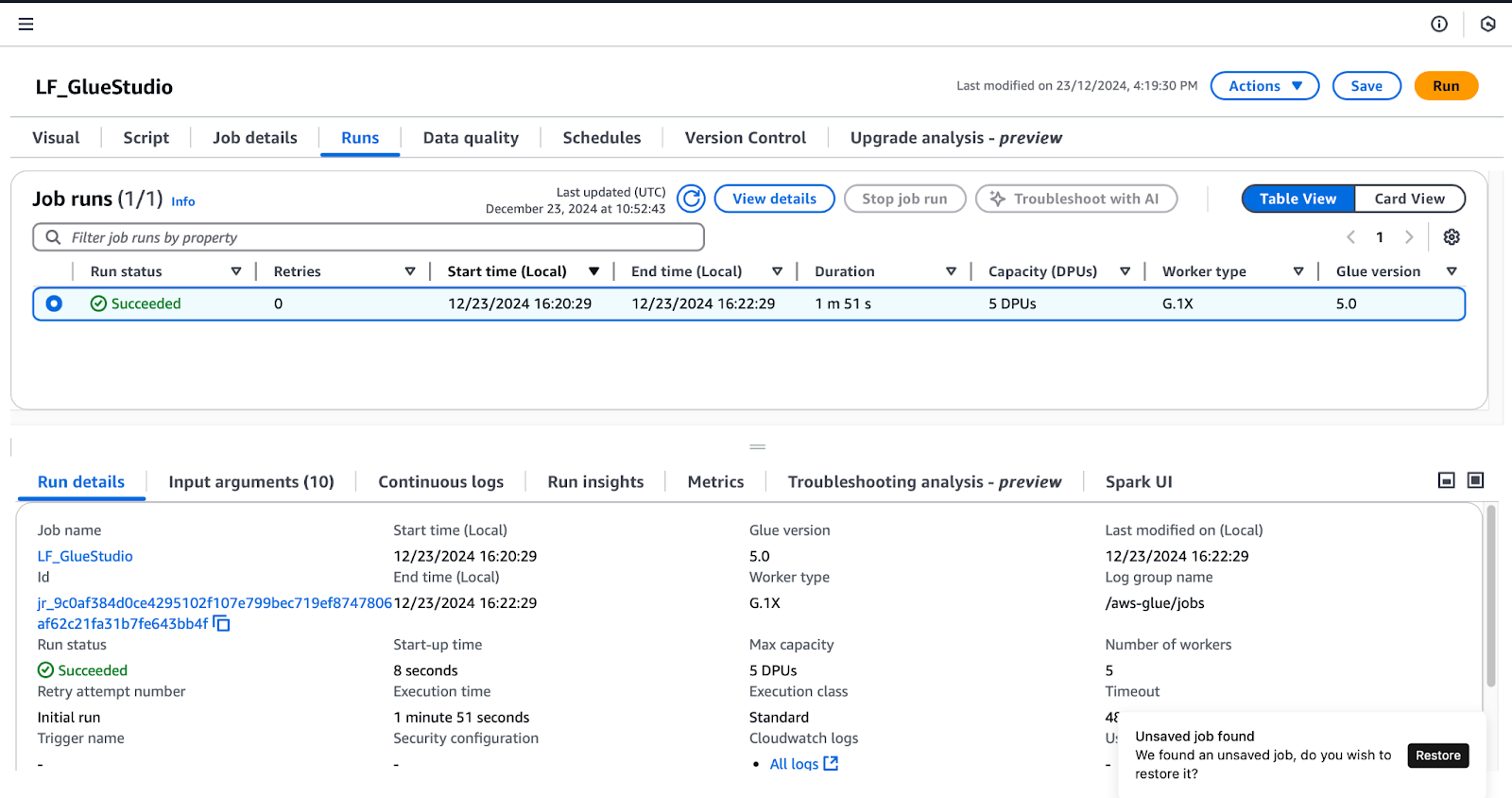
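For comparison, the job details chosen above correspond roughly to the following job definition in the Glue API. This is a sketch of a script-based job, not what Glue Studio generates for a visual job; the script location and the G.1X worker type are assumptions, since the walkthrough does not specify them.

```python
def build_job_definition(script_location):
    """Return keyword arguments for glue.create_job matching the job details."""
    return {
        "Name": "LF_GlueStudio",
        "Role": "DE-GlueServiceRole",
        "Command": {
            "Name": "glueetl",  # Spark ETL job
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        "GlueVersion": "5.0",
        "WorkerType": "G.1X",  # assumption: not stated in the walkthrough
        "NumberOfWorkers": 5,
        "MaxRetries": 0,
        # Job bookmark: Disable
        "DefaultArguments": {"--job-bookmark-option": "job-bookmark-disable"},
    }


def create_job(glue_client, script_location):
    """Create the job and return the name reported by Glue."""
    response = glue_client.create_job(**build_job_definition(script_location))
    return response["Name"]


# Usage (requires boto3 and AWS credentials; the script path is a placeholder):
#   import boto3
#   create_job(boto3.client("glue"),
#              "s3://<your_LFDataLakeBucketName>/scripts/LF_GlueStudio.py")
```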

Step 5: Testing and validating the integration

To confirm the permissions granted in Lake Formation, follow these steps while logged in as the lf-data-engineer user (as detailed in the Creating Glue Studio ETL jobs section):

- Run the LF_GlueStudio job:

- Navigate to the LF_GlueStudio job and click the Run button in the top-right corner to execute the job.

- Monitor the job execution by selecting the Runs tab within the job interface.

Monitoring job runs in AWS Glue Studio

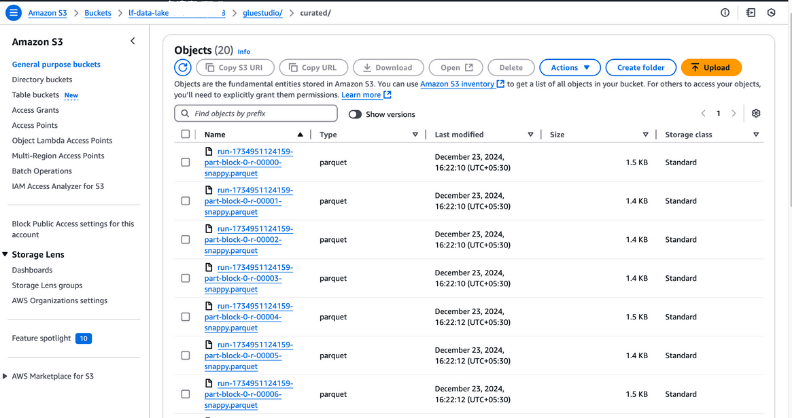

- Verify job output:

- Once the job run status shows Succeeded, go to the curated folder in your S3 bucket to check for the output files. These files should be in Parquet format, as configured during the job creation.

Viewing output files in Amazon S3

- Verify the newly created table:

- Open the Glue Data Catalog and navigate to the database you specified during the job setup.

- Check that the new table is listed under the database, confirming that the output data has been cataloged successfully.

Viewing database tables in AWS Glue
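The three checks above can also be scripted. The sketch below assumes boto3 Glue and S3 clients; the polling values are illustrative, and the prefix default matches the target location used earlier.

```python
import time

# Job run states after which the run will not change any further.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def run_job_and_wait(glue_client, job_name, poll_seconds=30, max_polls=120):
    """Start a job run and poll until it reaches a terminal state."""
    run_id = glue_client.start_job_run(JobName=job_name)["JobRunId"]
    for _ in range(max_polls):
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        if run["JobRunState"] in TERMINAL_STATES:
            return run["JobRunState"]
        time.sleep(poll_seconds)
    raise TimeoutError(f"Job {job_name!r} run {run_id} is still in progress")


def list_output_keys(s3_client, bucket, prefix="gluestudio/curated/"):
    """List object keys written under the curated prefix (first page only)."""
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    return [obj["Key"] for obj in response.get("Contents", [])]


def table_exists(glue_client, database, table):
    """Return True if the table is present in the Data Catalog."""
    try:
        glue_client.get_table(DatabaseName=database, Name=table)
        return True
    except glue_client.exceptions.EntityNotFoundException:
        return False


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   glue = boto3.client("glue")
#   state = run_job_and_wait(glue, "LF_GlueStudio")
#   keys = list_output_keys(boto3.client("s3"), "<your_LFDataLakeBucketName>")
#   found = table_exists(glue, "tpc", "dl_tpc_household_demographics_below500")
```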

Best Practices for Integrating AWS Lake Formation and AWS Glue

When integrating AWS Lake Formation and AWS Glue, following best practices can enhance data security, streamline operations, and optimize performance. Here's how you can make the most of these services:

Optimize data permissions

- Adopt the principle of least privilege: Grant users and roles access to only the resources they need. Avoid assigning broad permissions that could expose sensitive data or critical resources unnecessarily.

- Regularly audit access policies: Periodically review and update policies to comply with changing security and compliance requirements. Leverage tools like AWS IAM Access Analyzer to detect overly permissive policies.

- Leverage LF-Tags for policy management: Use Lake Formation Tags (LF-Tags) to group related resources and simplify permissions management. By applying tags like Sensitive or Non-Sensitive, you can create scalable, reusable policies that automatically apply to tagged resources.

- Decouple policies from resources: Define permissions using LF-Tags or templates before resources are created. This ensures a seamless integration process as new data sources or tables are added.
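As an illustration of tag-based policies, a grant can target an LF-Tag expression instead of a named table. The sketch below assumes a boto3 Lake Formation client and an analyst role ARN that is purely hypothetical; any table tagged Classification = Non-Sensitive, including tables created later, would be covered by the grant.

```python
def build_tag_policy_grant(principal_arn, tag_key, tag_values, permissions):
    """Return grant_permissions arguments scoped by an LF-Tag expression."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "LFTagPolicy": {
                "ResourceType": "TABLE",
                "Expression": [
                    {"TagKey": tag_key, "TagValues": list(tag_values)}
                ],
            }
        },
        "Permissions": list(permissions),
    }


def grant_by_tag(lf_client, principal_arn):
    """Grant read access to every table tagged Classification=Non-Sensitive."""
    request = build_tag_policy_grant(
        principal_arn, "Classification", ["Non-Sensitive"], ["SELECT", "DESCRIBE"]
    )
    lf_client.grant_permissions(**request)
    return request


# Usage (requires boto3 and AWS credentials; the role is hypothetical):
#   import boto3
#   grant_by_tag(boto3.client("lakeformation"),
#                "arn:aws:iam::111122223333:role/ExampleAnalystRole")
```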

Monitor and debug jobs

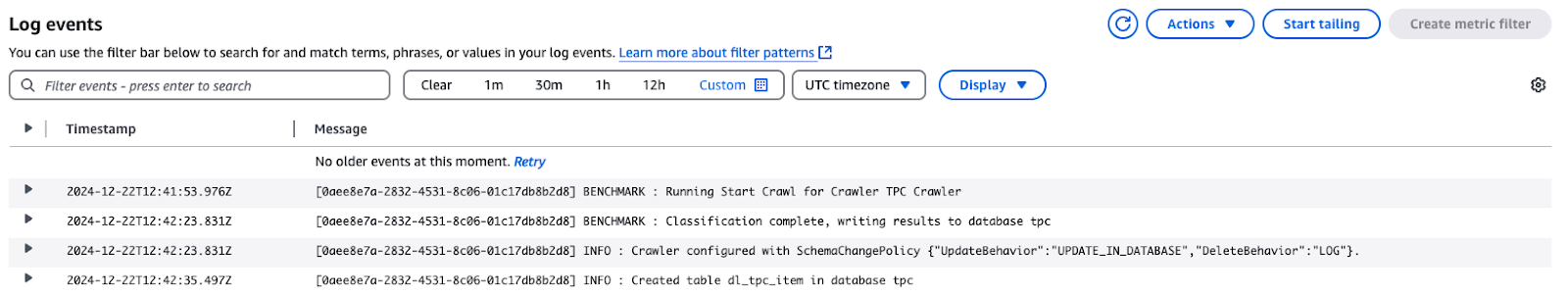

- Enable CloudWatch logs for Glue jobs: CloudWatch Logs can capture detailed logs of AWS Glue job executions, including errors and execution details. Use these logs to troubleshoot issues quickly.

Log events for TPC Crawler in AWS CloudWatch

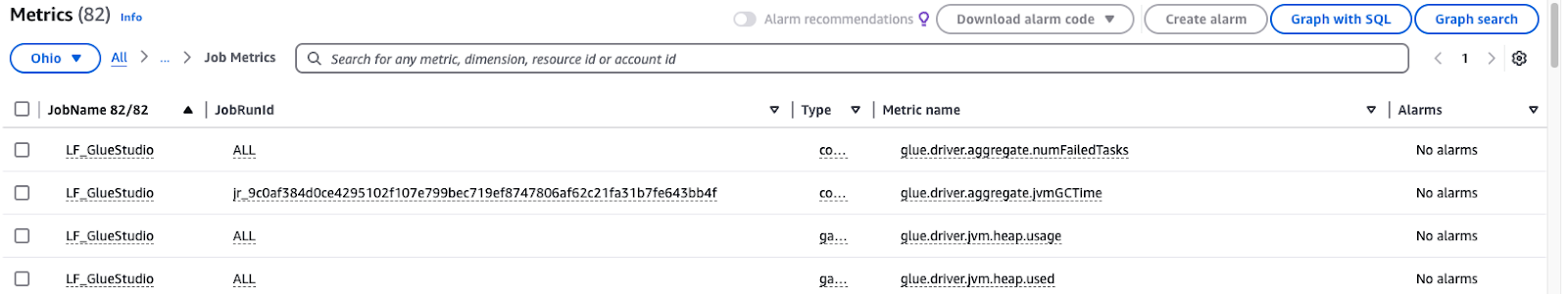

- Monitor Glue metrics: AWS Glue provides metrics to help identify inefficiencies or issues in your ETL workflows. Set up CloudWatch alarms to notify you about unusual patterns.

Monitoring AWS Glue Studio job metrics
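One way to act on these metrics is a CloudWatch alarm on failed Spark tasks. The sketch below assumes job metrics are enabled for the job and that an SNS topic already exists for notifications (the topic ARN is a placeholder); the threshold and period are illustrative choices rather than recommendations.

```python
def build_failed_task_alarm(job_name, sns_topic_arn):
    """Return put_metric_alarm arguments for a Glue failed-task alarm."""
    return {
        "AlarmName": f"{job_name}-failed-tasks",
        "Namespace": "Glue",
        "MetricName": "glue.driver.aggregate.numFailedTasks",
        "Dimensions": [
            {"Name": "JobName", "Value": job_name},
            {"Name": "JobRunId", "Value": "ALL"},
            {"Name": "Type", "Value": "count"},
        ],
        "Statistic": "Sum",
        "Period": 300,  # seconds
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Periods with no job runs should not trigger the alarm.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_failed_task_alarm(cloudwatch_client, job_name, sns_topic_arn):
    """Create or update the alarm and return its definition."""
    alarm = build_failed_task_alarm(job_name, sns_topic_arn)
    cloudwatch_client.put_metric_alarm(**alarm)
    return alarm


# Usage (requires boto3 and AWS credentials; the topic ARN is a placeholder):
#   import boto3
#   create_failed_task_alarm(
#       boto3.client("cloudwatch"), "LF_GlueStudio",
#       "arn:aws:sns:us-east-1:111122223333:example-alerts")
```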

Use partitioning and compression

- Optimize data partitioning: Partition datasets by attributes that queries filter on frequently, such as department, date, or region. In Athena or Redshift, for instance, partitioning sales data by year and month can significantly speed up queries and lower scan costs.

- Choose the right compression format: Use columnar storage formats made for analytics workloads, such as ORC or Parquet. Combine these with effective compression algorithms like Snappy to save storage and speed up queries.

- Automate partitioning in Glue jobs: AWS Glue jobs can dynamically partition data during the ETL process. Specify partition keys in your job configuration to guarantee that incoming data is stored in a structured format.

- Monitor and adjust strategies regularly: Assess how well your compression and partitioning work as your data volume increases. Examine query trends, then modify the partitioning schema to accommodate changing workloads.
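To make the partitioning idea concrete, the sketch below shows how partition keys translate into Hive-style S3 prefixes, which is the layout a Glue S3 sink produces when you pass a `partitionKeys` list in its connection options. The bucket name, records, and helper functions are made up for illustration, and the code only computes paths locally.

```python
def partition_prefix(base_path, record, partition_keys):
    """Return the Hive-style prefix (key=value/...) for one record."""
    parts = [f"{key}={record[key]}" for key in partition_keys]
    return base_path.rstrip("/") + "/" + "/".join(parts) + "/"


def group_by_partition(base_path, records, partition_keys):
    """Group records by the partition prefix they would be written under."""
    grouped = {}
    for record in records:
        prefix = partition_prefix(base_path, record, partition_keys)
        grouped.setdefault(prefix, []).append(record)
    return grouped


# Usage (illustrative records only):
#   sales = [
#       {"year": 2024, "month": 1, "amount": 10},
#       {"year": 2024, "month": 2, "amount": 5},
#   ]
#   layout = group_by_partition("s3://example-bucket/sales", sales, ["year", "month"])
#   for prefix, rows in layout.items():
#       print(prefix, len(rows))
```

A query that filters on year and month then only needs to read the matching prefixes, which is where the scan-cost savings come from.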

Conclusion

Implementing AWS Lake Formation and AWS Glue helps organizations organize, safeguard, and process their data. By combining Lake Formation's fine-grained security controls with Glue's automation, you can streamline your ETL processes, satisfy data governance policies, and give users such as data engineers and data scientists easy access to the data they need.

Benefits include:

- Enhanced security: Fine-grained access control with LF-Tags ensures sensitive data is accessible only to authorized users.

- Automation: AWS Glue simplifies data preparation and transformation, reducing manual effort and errors.

- Scalability: Dynamic permissions and policy management enable seamless scaling as data grows.

- Efficiency: Optimized storage and query performance through partitioning and compression save time and costs.

As you deepen your understanding of data integration, it’s essential to complement your learning with practical, hands-on knowledge. For example, mastering data pipelines with ETL and ELT in Python can elevate your ability to process and transform data efficiently. Similarly, a solid grasp of Data Management Concepts will enhance how you structure and handle datasets. And to ensure your results are reliable, understanding the principles taught in the Introduction to Data Quality course is a must.


FAQs

What is the purpose of integrating AWS Lake Formation and AWS Glue?

Integrating AWS Lake Formation and AWS Glue lets you build secure, automated, and scalable data pipelines. The integration combines fine-grained access control with streamlined ETL processes in a single platform for data management, analytics, and compliance.

What is the significance of LF-Tags in AWS Lake Formation?

LF-Tags let you manage permissions at scale by organizing data into taxonomies, for instance, “Sensitive” or “Non-Sensitive.” They reduce the complexity of access policies because you set permissions on tags rather than on individual resources, and you can do so before the resources are created, which adds both flexibility and security.

What role does AWS Glue Studio play in this integration?

AWS Glue Studio is a visual, drag-and-drop interface for AWS Glue. Users can develop, run, and monitor ETL jobs through it, which makes it faster to build the transformations that prepare data for analysis.

Can multiple teams access the same dataset securely?

Yes. AWS Lake Formation offers fine-grained access control, so you can grant specific users and roles access to named resources or to resources carrying particular LF-Tags. This way, each team sees only the data that concerns it, and the rest of the data stays protected.

What is the TPC dataset mentioned in the article?

The TPC dataset is a synthetic dataset widely used for training, benchmarking, and educational purposes. It contains product, customer, demographic, web sales, and other transaction-related data, which makes it resemble a realistic retail workload.

Rahul Sharma is an AWS Ambassador, DevOps Architect, and technical blogger specializing in cloud computing, DevOps practices, and open-source technologies. With expertise in AWS, Kubernetes, and Terraform, he simplifies complex concepts for learners and professionals through engaging articles and tutorials. Rahul is passionate about solving DevOps challenges and sharing insights to empower the tech community.