
It feels like new AI models are arriving at a rapid pace, and Mistral AI has added to the excitement with the launch of Devstral, a groundbreaking open-source coding model. Devstral is an agentic coding large language model (LLM) that can run locally on an RTX 4090 GPU or a Mac with 32GB RAM, making it accessible for local deployment and on-device use. It is fast, accurate, and open to use.

In this tutorial, we will cover what you need to know about Devstral, including its key features and what makes it unique. We will also run Devstral locally using the mistral-chat CLI and integrate the Mistral AI API with OpenHands to test Devstral's agentic capabilities.

You can learn more about other tools from Mistral in separate guides, including Mistral Medium 3, Mistral OCR API, and Mistral Le Chat.

What is Mistral Devstral?

Devstral is a state-of-the-art agentic model designed for software engineering tasks. It was developed by Mistral AI in collaboration with All Hands AI. It excels at solving real-world coding challenges, such as exploring large codebases, editing multiple files, and resolving issues in GitHub repositories.

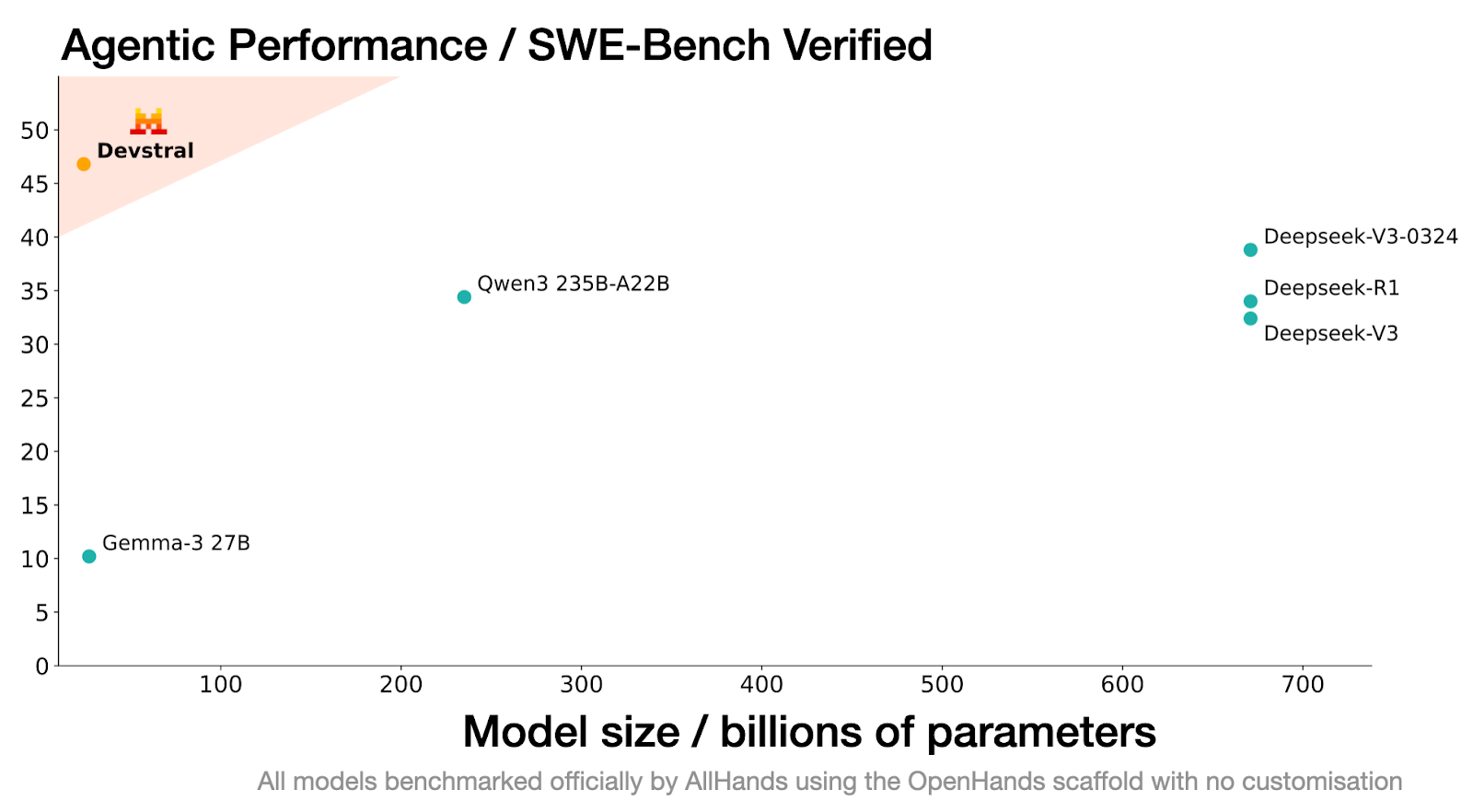

Devstral achieves a score of 46.8% on the SWE-Bench Verified benchmark, which positions it as the #1 open-source model on this benchmark at the time of its release, outperforming prior open-source state-of-the-art models by more than 6 percentage points.

Source: Devstral | Mistral AI

Built on Mistral-Small-3.1, Devstral features a 128k context window, enabling it to process and understand large amounts of code and dependencies. Unlike its base model, Devstral is text-only, as the vision encoder was removed during fine-tuning to specialize it for coding tasks.

When evaluated with the OpenHands scaffold, it outperforms even much larger models, such as DeepSeek-V3-0324 (671B) and Qwen3 235B-A22B.

Key Features of Devstral

Here are some notable features of Devstral that make it stand out from other coding models.

1. Agentic coding

Devstral is specifically designed to excel at agentic coding tasks, making it an ideal model for building and deploying software engineering agents. Its ability to solve complex, multi-step tasks within large codebases enables developers to address real-world coding challenges effectively.

2. Lightweight design

One of Devstral’s standout features is its lightweight architecture. With just 24 billion parameters, it can run on a single Nvidia RTX 4090 GPU. This feature makes it ideal for local deployment, on-device use, and privacy-sensitive applications.
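To make the single-GPU claim concrete, here is a rough back-of-the-envelope sketch. It assumes that only the weights are counted (the KV cache and activations need additional memory) and that an RTX 4090 has 24 GB of VRAM. The precisions listed are illustrative, not a statement about which build Mistral ships.

```python
# Rough weight-memory estimate for a 24B-parameter model at several precisions.
# Assumption: only the weights are counted; KV cache and activations add more.

PARAMS = 24e9            # parameter count quoted above
RTX_4090_VRAM_GB = 24    # VRAM of a single RTX 4090

# Bytes needed to store one parameter at each (illustrative) precision.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(PARAMS, size)
    verdict = "fits" if gb < RTX_4090_VRAM_GB else "does not fit"
    print(f"{name}: ~{gb:.0f} GB of weights, {verdict} in {RTX_4090_VRAM_GB} GB")
```

At 16-bit precision the weights alone come to roughly 48 GB, while a 4-bit build needs about 12 GB, which suggests that running on a single RTX 4090 in practice means using quantized weights.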

3. Open-source

Released under the Apache 2.0 license, Devstral is freely available for commercial use. Developers and organizations can deploy, modify, and even integrate it into proprietary products, subject only to the license's attribution and notice requirements.

4. Extended context window

Devstral features an impressive 128k context window, allowing it to process and understand vast amounts of code and instructions in a single interaction. This makes it particularly effective for working with large codebases and solving complex programming problems.
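As a rough illustration of what a 128k window means for a real project, the sketch below estimates whether a set of source files would fit. The 4-characters-per-token ratio is a crude assumption, not a measurement from Devstral's tokenizer, and the `reserve` budget for instructions and the reply is a hypothetical value.

```python
from pathlib import Path

CONTEXT_WINDOW = 128_000   # tokens, as quoted above (the exact limit may differ)
CHARS_PER_TOKEN = 4        # assumption: crude average, not a tokenizer measurement


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count."""
    return len(text) // CHARS_PER_TOKEN


def fits_in_context(root: Path, pattern: str = "*.py", reserve: int = 8_000) -> bool:
    """Check whether all files matching `pattern` under `root` roughly fit,
    leaving `reserve` tokens for instructions and the model's reply."""
    total = sum(
        estimate_tokens(p.read_text(encoding="utf-8", errors="ignore"))
        for p in root.rglob(pattern)
        if p.is_file()
    )
    return total <= CONTEXT_WINDOW - reserve
```

For example, `fits_in_context(Path("my_project"))` gives a quick yes/no answer for a hypothetical `my_project` directory. For an accurate count, you would tokenize the files with the model's own tokenizer instead.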

5. Advanced tokenizer

Devstral uses a Tekken tokenizer with a 131k vocabulary size, enabling it to handle code and text inputs with precision and efficiency. This tokenizer enhances the model’s ability to generate accurate, context-aware responses tailored to software engineering tasks.

Getting Started With Devstral

Devstral is freely available for download on platforms like Hugging Face, Ollama, Kaggle, Unsloth, and LM Studio. Developers can also access it via API under the name devstral-small-2505 at competitive pricing: $0.10 per million input tokens and $0.30 per million output tokens.
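To get a feel for these prices, here is a small cost estimator. The token counts in the example are hypothetical and only illustrate the arithmetic.

```python
INPUT_PRICE_PER_M = 0.10    # USD per million input tokens (quoted above)
OUTPUT_PRICE_PER_M = 0.30   # USD per million output tokens (quoted above)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in USD for one workload."""
    return (
        input_tokens / 1_000_000 * INPUT_PRICE_PER_M
        + output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    )


# Hypothetical agent session: 2M tokens read, 200k tokens written.
print(f"${estimate_cost(2_000_000, 200_000):.2f}")  # prints $0.26
```

Agentic workflows tend to read far more tokens than they write, so the input price usually dominates the bill.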

In this guide, we will cover two key workflows:

- Running Devstral locally using Mistral inference.

- Integrating Devstral with OpenHands for building machine learning projects.

Running Devstral locally

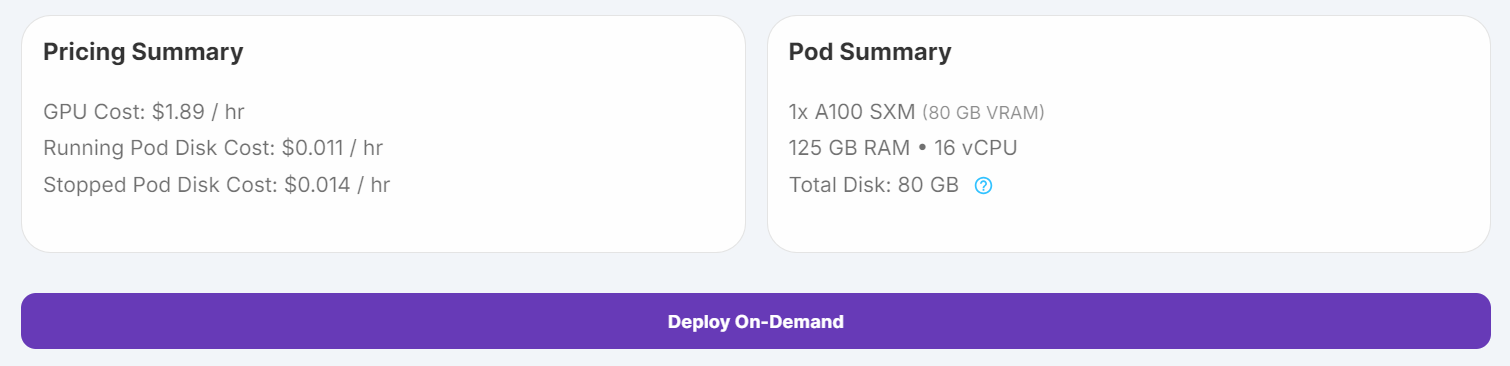

To run Devstral locally, we will use a Runpod instance with GPU support, download the model from Hugging Face, and interact with it using the mistral-chat CLI tool.

1. Set up the pod with 100GB of storage and an A100 SXM GPU, and launch the JupyterLab instance.

2. Run the following commands in a Jupyter Notebook to install the necessary dependencies:

%%capture

%pip install mistral_inference --upgrade

%pip install huggingface_hub

3. Use the Hugging Face Hub to download the model files, tokenizer, and parameter configuration.

from huggingface_hub import snapshot_download

from pathlib import Path

mistral_models_path = Path.home().joinpath('mistral_models', 'Devstral')

mistral_models_path.mkdir(parents=True, exist_ok=True)

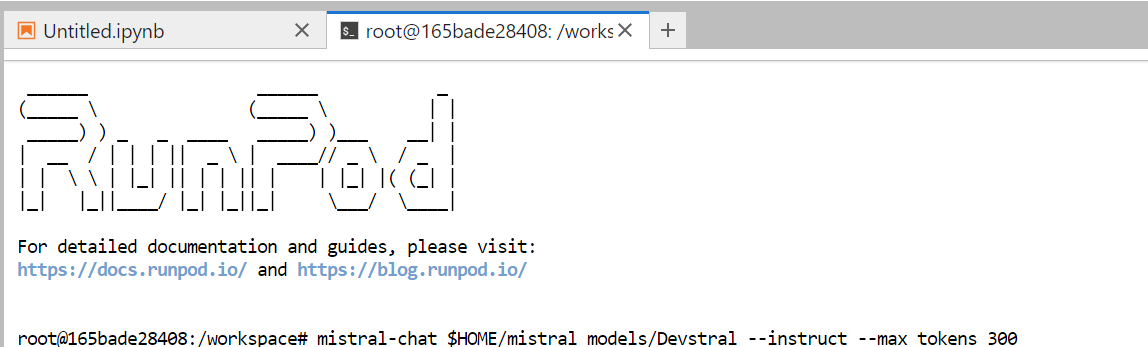

snapshot_download(repo_id="mistralai/Devstral-Small-2505", allow_patterns=["params.json", "consolidated.safetensors", "tekken.json"], local_dir=mistral_models_path)

4. Open a terminal in JupyterLab and run the following command to start the mistral-chat CLI:

mistral-chat $HOME/mistral_models/Devstral --instruct --max_tokens 300

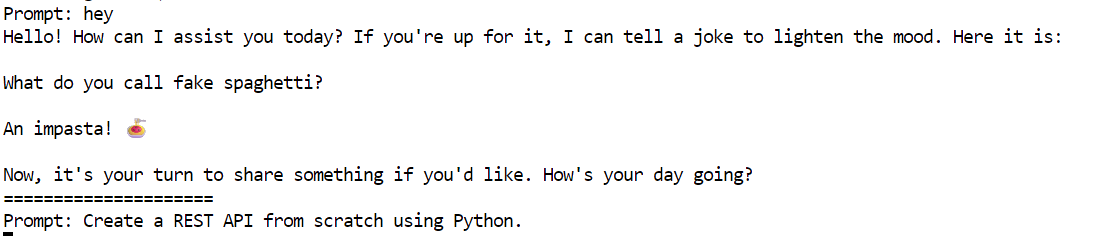

5. Try the following prompt to test Devstral:

“Create a REST API from scratch using Python.”

The model generated a detailed step-by-step guide to building a REST API with Flask. The response stops mid-function because we capped generation at 300 tokens with --max_tokens.

Creating a REST API from scratch using Python involves several steps. We'll use the Flask framework, which is lightweight and easy to get started with. Below is a step-by-step guide to creating a simple REST API.

### Step 1: Install Flask

First, you need to install Flask. You can do this using pip:

```bash

pip install Flask

```

### Step 2: Create the Flask Application

Create a new Python file, for example, `app.py`, and set up the basic structure of your Flask application.

```python
from flask import Flask, request, jsonify

app = Flask(__name__)

# Sample data
items = [
    {"id": 1, "name": "Item 1", "description": "This is item 1"},
    {"id": 2, "name": "Item 2", "description": "This is item 2"}
]

@app.route('/items', methods=['GET'])
def get_items():
    return jsonify(items)

@app.route('/items/<int:item_id>', methods=['GET'])
def get_item(item_id):
    item = next((item for item in items if item["id"] == item_id), None)
    if item:
        return jsonify(item)
    return jsonify({"error": "Item not found"}), 404

@app.route('/items', methods=['POST'])
def create_item():
    new_item = request.get_json()
    new_item["id"] = len(items
```

=====================
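If mistral-chat fails to start, one thing worth ruling out is an incomplete download. The following is a minimal sketch, assuming the same directory and the same three files requested in the download step. The helper name `missing_model_files` is hypothetical.

```python
from pathlib import Path

# Files requested in the snapshot_download call from the download step.
REQUIRED_FILES = ["params.json", "consolidated.safetensors", "tekken.json"]


def missing_model_files(model_dir: Path) -> list[str]:
    """Return the required files that are absent from `model_dir`."""
    return [name for name in REQUIRED_FILES if not (model_dir / name).is_file()]


# Same location used when downloading the model.
model_dir = Path.home() / "mistral_models" / "Devstral"
missing = missing_model_files(model_dir)
print("All files present" if not missing else f"Missing: {missing}")
```

This only checks that the files exist. It does not verify that they were downloaded completely.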

Running Devstral with OpenHands

You can easily access the Devstral model through the Mistral AI API. In this project, we will use the Devstral API along with OpenHands to build our machine learning project from the ground up. OpenHands is an open-source platform that serves as your AI software engineer for building and completing your coding project.
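For reference, this is roughly what a direct call to the API looks like without OpenHands. It is a minimal sketch using only the standard library. It assumes an API key is already stored in a `MISTRAL_API_KEY` environment variable and that the response follows the usual chat completions shape. The `build_request` helper and the prompt are illustrative.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "devstral-small-2505"


def build_request(prompt: str, api_key: str, max_tokens: int = 300) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


api_key = os.environ.get("MISTRAL_API_KEY")
if api_key:  # only call the API when a key is configured
    request = build_request("Write a Python function that reverses a string.", api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumption: the reply text sits in the first choice's message.
    print(body["choices"][0]["message"]["content"])
```

Reading the key from the environment keeps it out of the script, which matters if the file ends up in version control.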

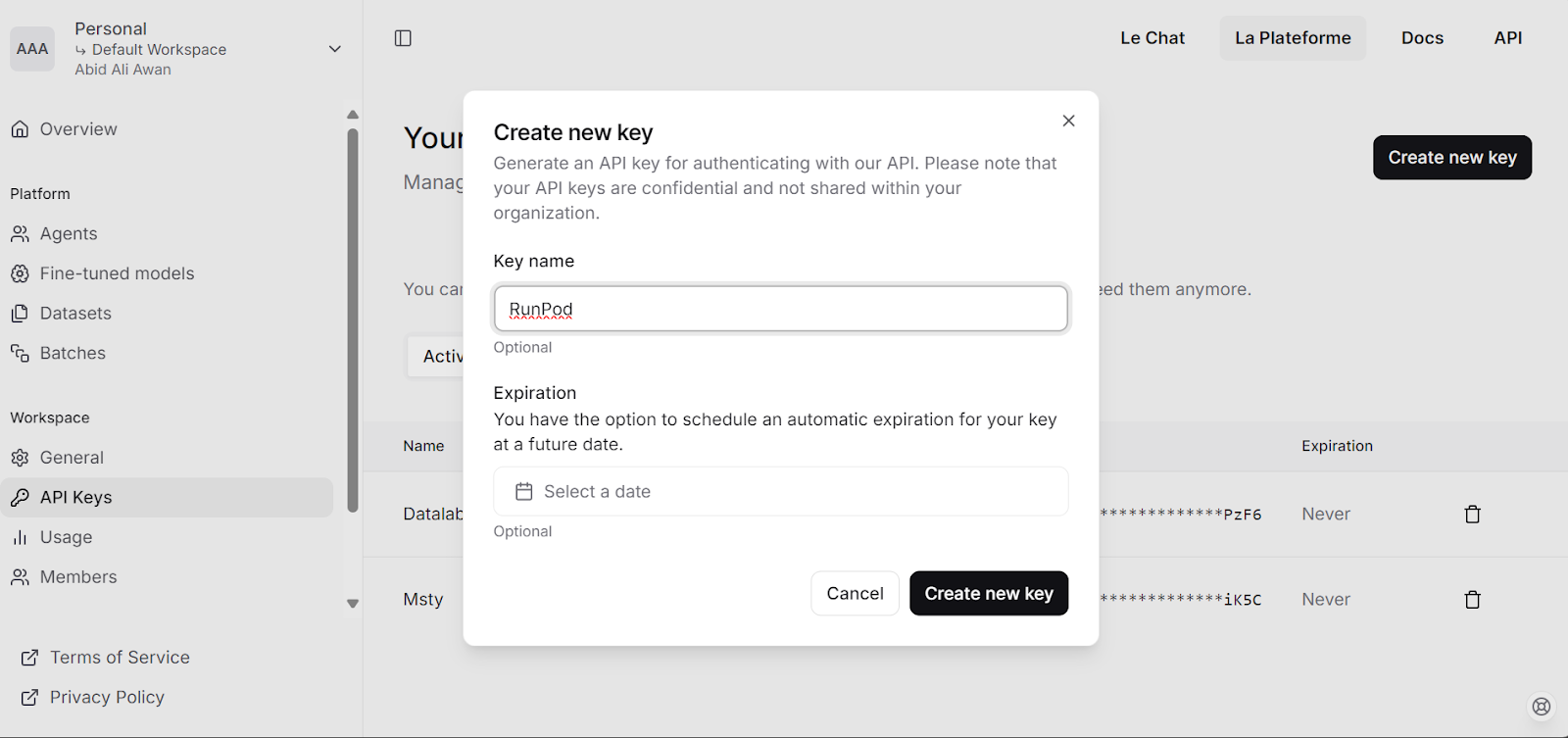

1. Visit the Mistral AI platform and generate your API key.

2. Add $5 of credit to your account using a debit or credit card.

3. Run the following script to:

- Set the API Key as an environment variable.

- Pull the OpenHands Docker image.

- Create a configuration file.

- Start the OpenHands application in a Docker container.

# Store the API key securely as an environment variable (avoid hardcoding in scripts)

export MISTRAL_API_KEY="<MY_KEY>"

# Pull the runtime image for OpenHands

docker pull docker.all-hands.dev/all-hands-ai/runtime:0.39-nikolaik

# Create a configuration directory and settings file for OpenHands

mkdir -p ~/.openhands-state

cat << EOF > ~/.openhands-state/settings.json

{

"language": "en",

"agent": "CodeActAgent",

"max_iterations": null,

"security_analyzer": null,

"confirmation_mode": false,

"llm_model": "mistral/devstral-small-2505",

"llm_api_key": "${MISTRAL_API_KEY}",

"remote_runtime_resource_factor": null,

"github_token": null,

"enable_default_condenser": true

}

EOF

# Run the OpenHands application in a Docker container with enhanced settings

docker run -it --rm --pull=always \

-e SANDBOX_RUNTIME_CONTAINER_IMAGE=docker.all-hands.dev/all-hands-ai/runtime:0.39-nikolaik \

-e LOG_ALL_EVENTS=true \

-e TZ=$(cat /etc/timezone 2>/dev/null || echo "UTC") \

-v /var/run/docker.sock:/var/run/docker.sock \

-v ~/.openhands-state:/.openhands-state \

-p 3000:3000 \

--add-host host.docker.internal:host-gateway \

--name openhands-app \

--memory="4g" \

--cpus="2" \

docker.all-hands.dev/all-hands-ai/openhands:0.39

The web application will be available at http://0.0.0.0:3000 (on most machines, you can open it at http://localhost:3000).

0.39-nikolaik: Pulling from all-hands-ai/runtime

Digest: sha256:126448737c53f7b992a4cf0a7033e06d5289019b32f24ad90f5a8bbf35ce3ac7

Status: Image is up to date for docker.all-hands.dev/all-hands-ai/runtime:0.39-nikolaik

docker.all-hands.dev/all-hands-ai/runtime:0.39-nikolaik

0.39: Pulling from all-hands-ai/openhands

Digest: sha256:326ddb052763475147f25fa0d6266cf247d82d36deb9ebb95834f10f08e4777d

Status: Image is up to date for docker.all-hands.dev/all-hands-ai/openhands:0.39

Starting OpenHands...

Running OpenHands as root

10:34:24 - openhands:INFO: server_config.py:50 - Using config class None

INFO: Started server process [9]

INFO: Waiting for application startup.

INFO: Application startup complete.

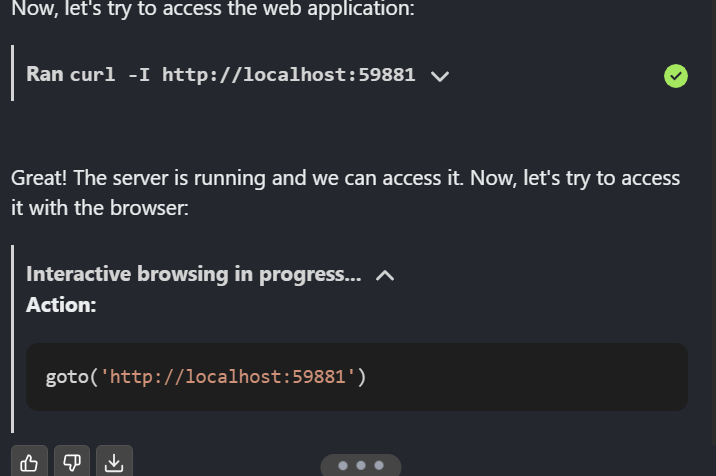

INFO: Uvicorn running on http://0.0.0.0:3000 (Press CTRL+C to quit)

Testing Devstral in OpenHands
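Before opening the UI, it can be handy to confirm that the server is actually accepting connections, especially when the container is started from a script. The sketch below polls a URL until it answers. The function name and the timeout values are assumptions chosen for illustration.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll `url` until it answers an HTTP request or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True
        except urllib.error.HTTPError:
            return True   # the server answered, even if with an error status
        except (urllib.error.URLError, OSError):
            time.sleep(interval_s)   # not reachable yet, try again shortly
    return False
```

Calling `wait_for_server("http://localhost:3000")` returns `True` as soon as the application responds, and `False` if nothing answers within the timeout.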

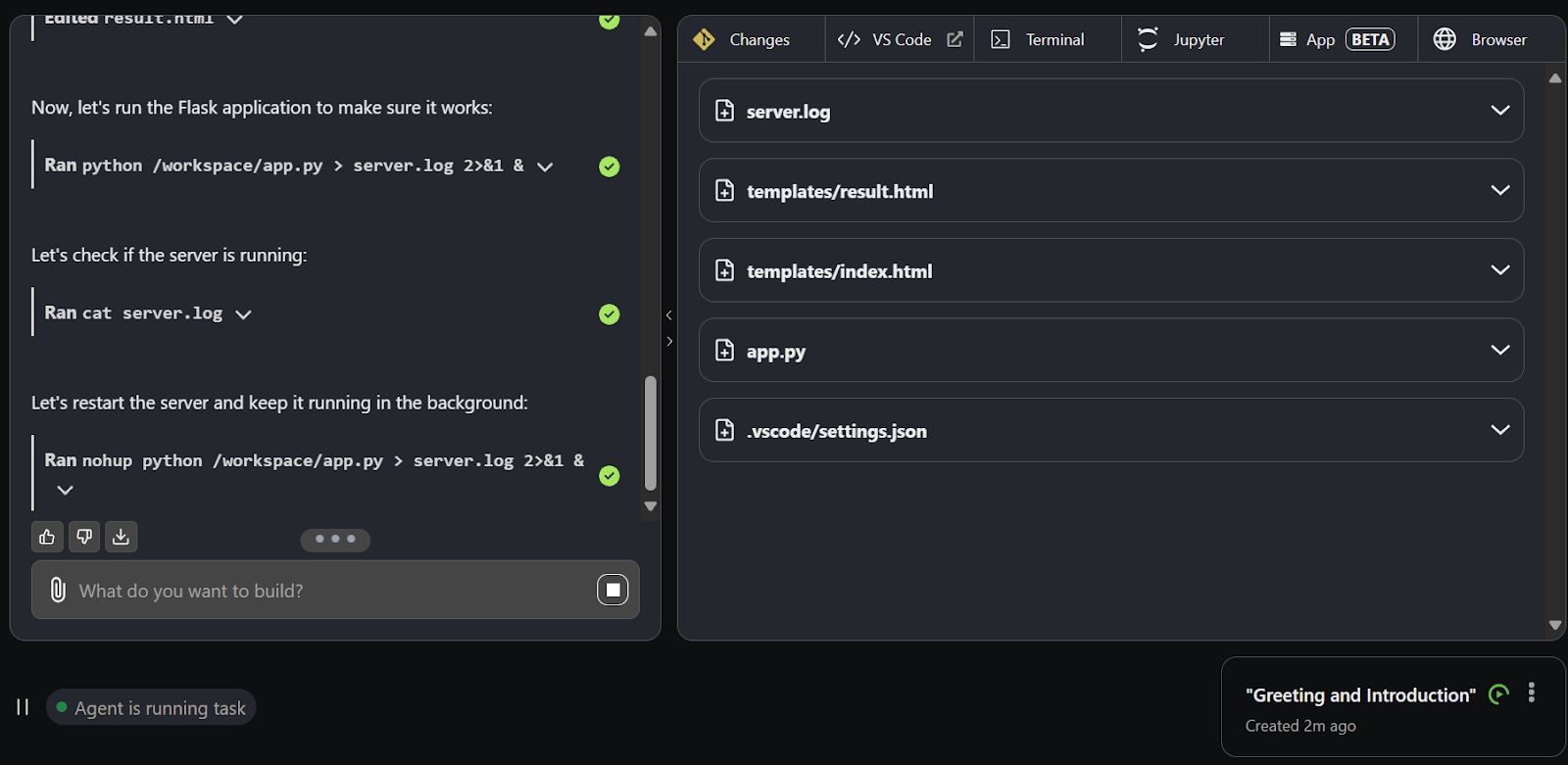

1. Click on the “Launch from Scratch” button to start building a project.

2. You will be redirected to a new UI with a chat interface and additional tabs for development.

3. Use the chat interface to provide prompts. Within seconds, Devstral will start creating directories, files, and code for the project.

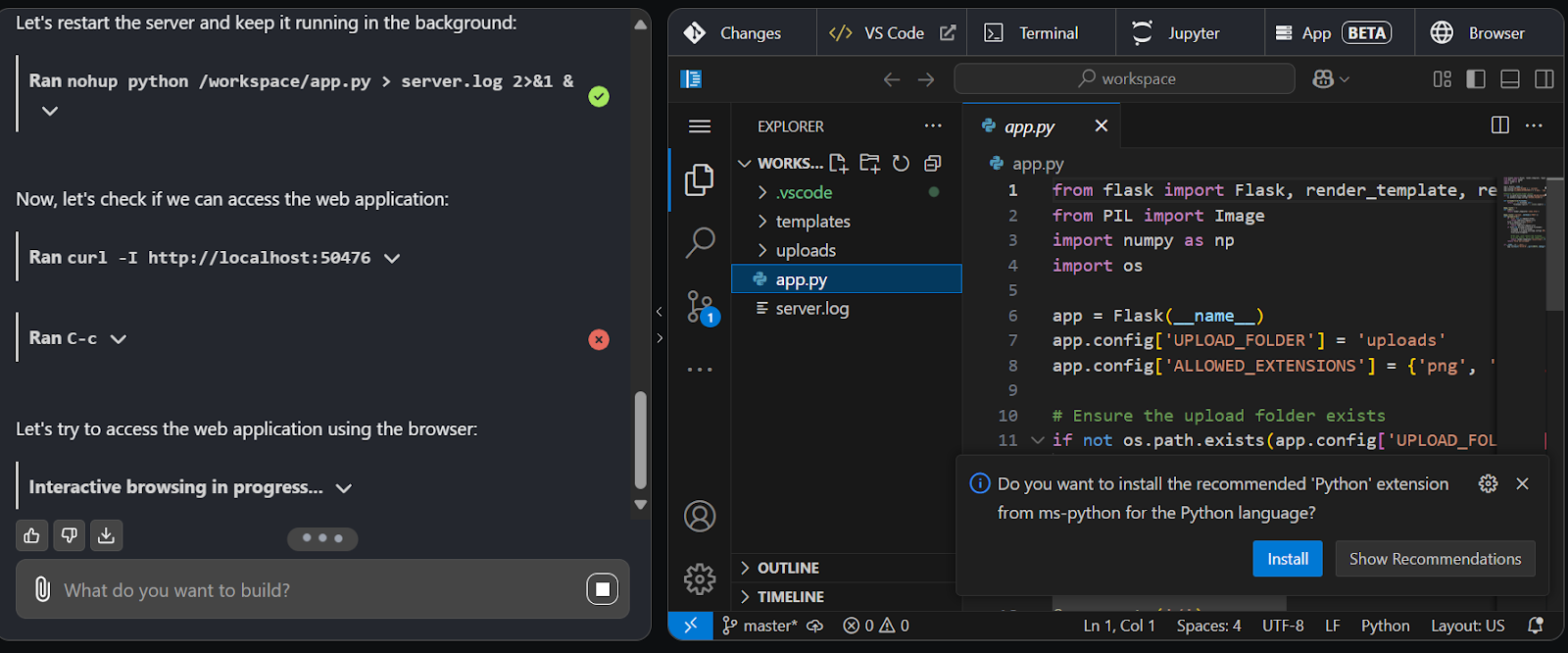

4. Switch to the VS Code tab to view, edit, and execute the generated code.

5. Devstral goes further than writing code: it starts the application, tests it in the web application tab, and uses the web agent to browse the resulting page.

Once the project is complete, push it to GitHub to share with your team.

Conclusion

Devstral is great news for the open-source community. You can use OpenHands with Ollama or vLLM to run the model locally and then interact with it through the OpenHands UI. Moreover, you can integrate Devstral into your code editor using extensions, making it a highly effective option.

In this tutorial, we explored Devstral, an agentic coding model, and tested its capabilities by running it locally and using OpenHands. The results were impressive. Devstral is fast, highly accurate, and excels at invoking agents to perform complex tasks. Its ability to handle real-world software engineering challenges with speed and precision makes it a standout choice for developers.

You can learn more about agentic AI in our blog article, and get hands-on with our course, Designing Agentic Systems with LangChain.

