
Qwen3 is one of the most complete open-weight model suites released so far.

It comes from Alibaba’s Qwen team and includes models that scale up to research-grade performance as well as smaller versions that can be run locally on more modest hardware.

In this blog, I’ll give you a quick overview of the full Qwen3 suite, explain how the models were developed, walk through benchmark results, and show you how you can access and start using them.

We’ve also published tutorials on running Qwen3 locally with Ollama and on fine-tuning Qwen3.


What Is Qwen 3?

Qwen3 is the latest family of large language models from Alibaba’s Qwen team. All models in the lineup are open-weight and released under the Apache 2.0 license.

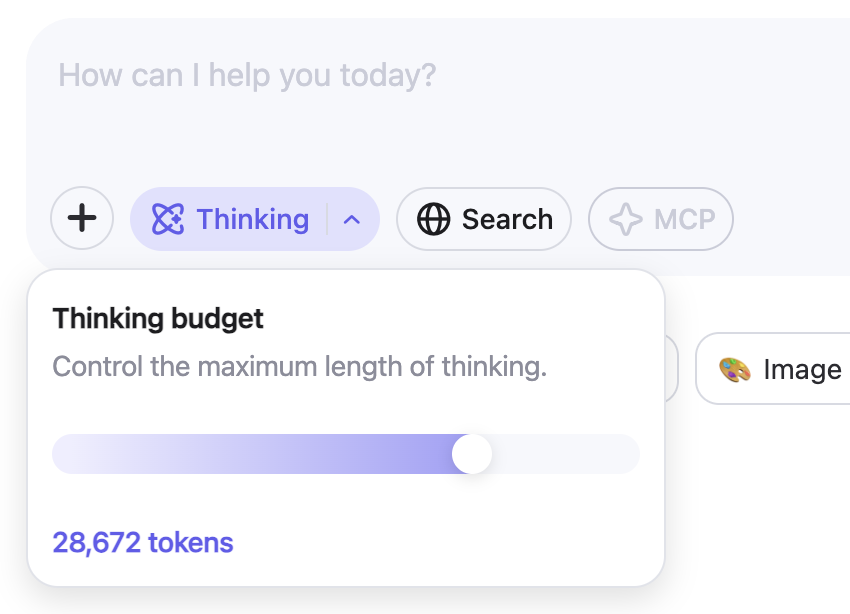

What caught my eye immediately was the introduction of a thinking budget that users can control directly inside the Qwen app. This gives regular users granular control over the reasoning process, something that previously could only be done programmatically.

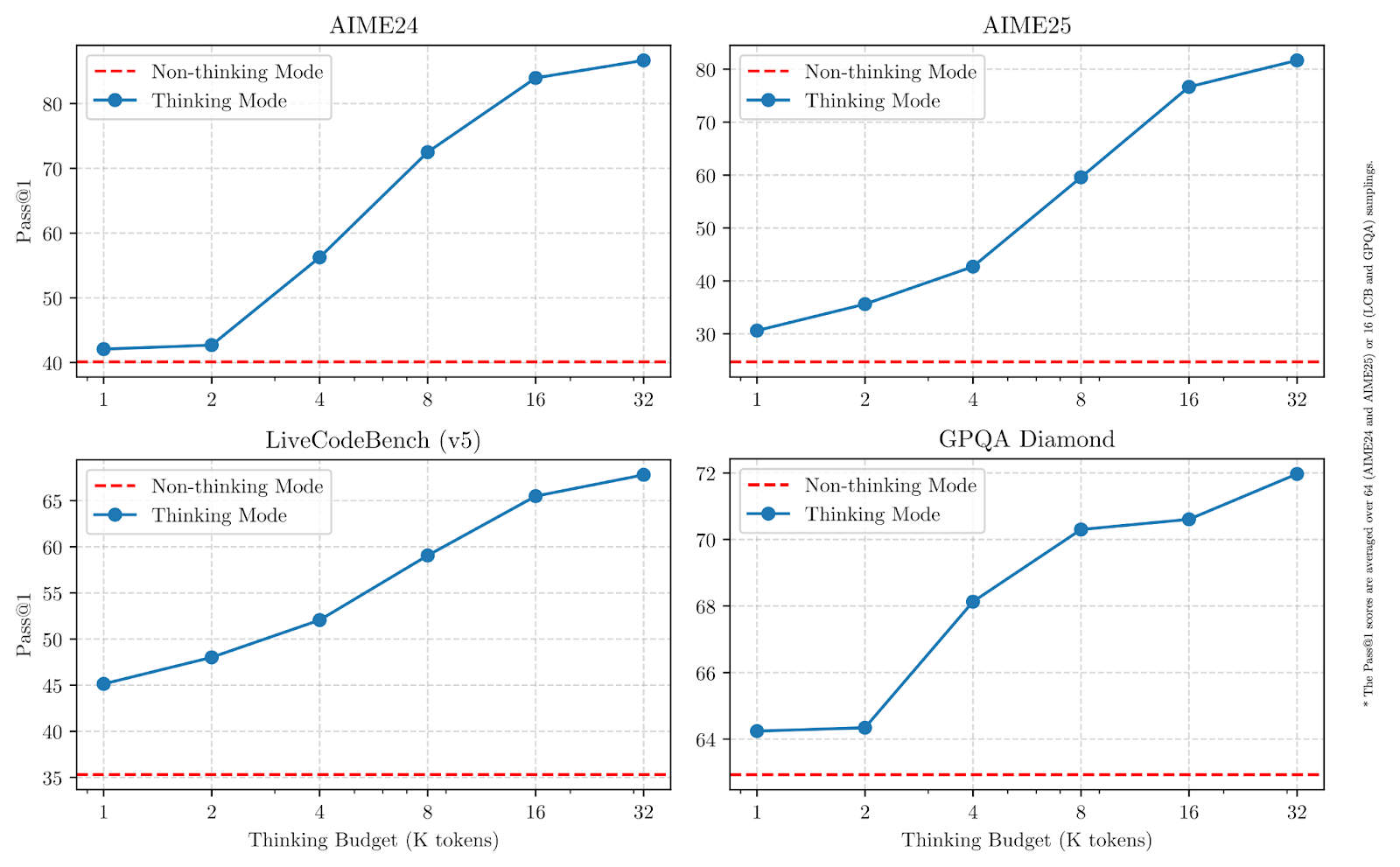

As the graphs below show, increasing the thinking budget significantly improves performance, especially on math, coding, and science tasks.

Source: Qwen
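In the app, the budget is just a setting, but the mechanism is easy to picture: the model may reason for at most N tokens, after which the reasoning block is closed and it has to answer. The sketch below is a hypothetical, minimal illustration of that cutoff on an already-tokenized reasoning trace. `apply_thinking_budget` is an illustrative name, and although Qwen3 does mark its reasoning with `<think>...</think>` tags, this is not Qwen’s implementation.

```python
def apply_thinking_budget(thinking_tokens, budget, close_marker="</think>"):
    """Hypothetical helper: keep at most `budget` reasoning tokens, then close the block.

    A real serving stack would enforce the budget during generation and then let
    the model continue with its final answer; this only shows the cutoff itself.
    """
    if budget < 0:
        raise ValueError("budget must be non-negative")
    kept = thinking_tokens[:budget]
    # Whether or not the trace was cut short, the reasoning block ends with the marker.
    return kept + [close_marker]


trace = ["Let", "x", "=", "3", ".", "Then", "2x", "=", "6", "."]
print(apply_thinking_budget(trace, 4))  # ['Let', 'x', '=', '3', '</think>']
```

A larger budget simply lets more of the trace through before the block is closed, which is the knob the graphs above vary.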

In benchmark tests, the flagship Qwen3-235B-A22B performs competitively against other top-tier models and shows stronger results than DeepSeek-R1 across coding, math, and general reasoning. Let’s quickly explore each model and understand what it’s designed for.

Qwen3-235B-A22B

This is the largest model in the Qwen3 lineup. It uses a mixture-of-experts (MoE) architecture with 235 billion total parameters and 22 billion active per generation step.

In an MoE model, only a small subset of parameters (a few “experts”) is activated for each token, which makes it faster and cheaper to run than a dense model of the same total size, where every parameter is used at every step.
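To make the routing idea concrete, here is a toy, pure-Python sketch of top-k expert routing. It illustrates how MoE layers in general pick experts; it is not Qwen3’s code, and the four scaling “experts”, the dot-product router, and `k=2` are assumptions chosen for readability.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, router_weights, k=2):
    """Toy top-k mixture-of-experts layer.

    x: input vector (list of floats).
    experts: functions mapping a vector to a vector of the same size.
    router_weights: one weight vector per expert; its score is the dot product with x.
    Returns the mixed output and the indices of the experts that actually ran.
    """
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])  # mixing weights over the chosen experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top


# Four toy "experts" that scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
out, used = moe_forward([1.0, 2.0], experts, router_weights, k=2)
print(used)  # [2, 1]: only two of the four experts did any work
```

Note that every expert still has to be loaded into memory; routing only reduces the compute spent per token.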

The model performs well across math, reasoning, and coding tasks, and in benchmark comparisons it outpaces models like DeepSeek-R1.

Qwen3-30B-A3B

Qwen3-30B-A3B is a smaller MoE model with 30 billion total parameters and just 3 billion active at each step. Despite the low active count, it performs comparably to much larger dense models like QwQ-32B. It’s a practical choice for users who want a mix of reasoning capability and lower inference costs. Like the 235B model, it supports a 128K context window and is available under Apache 2.0.
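The “A3B” and “A22B” suffixes make the cost difference easy to estimate. A common rule of thumb (an approximation, not a Qwen figure) puts a transformer’s forward pass at roughly 2 FLOPs per active parameter per token. The sketch below applies it using nominal parameter counts taken from the model names; 32B for QwQ-32B is likewise a rounded figure.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rule-of-thumb forward-pass cost: about 2 FLOPs (a multiply and an add) per
    active parameter per token. Ignores attention over the context window."""
    return 2 * active_params


# (total parameters, active parameters per token); QwQ-32B is dense, so both match.
models = {
    "Qwen3-235B-A22B": (235e9, 22e9),
    "Qwen3-30B-A3B": (30e9, 3e9),
    "QwQ-32B": (32e9, 32e9),
}
for name, (total, active) in models.items():
    gflops = forward_flops_per_token(active) / 1e9
    print(f"{name}: {active / total:.1%} of weights active, ~{gflops:.0f} GFLOPs per token")
```

By this rough measure, Qwen3-30B-A3B needs about a tenth of the per-token compute of a 32B dense model, although it still has to keep all 30 billion parameters in memory.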

Dense models: 32B, 14B, 8B, 4B, 1.7B, 0.6B

The six dense models in the Qwen3 release follow a more traditional architecture where all parameters are active at every step. They cover a wide range of use cases.

Qwen3-32B, 14B, and 8B support 128K context windows, while Qwen3-4B, 1.7B, and 0.6B support 32K. All are open-weight and licensed under Apache 2.0. The smaller models in this group are well suited to lightweight deployments, while the larger ones are closer to general-purpose LLMs.

Which model should you choose?

Qwen3 offers different models depending on how much reasoning depth, speed, and computational cost you need. Here’s a quick overview:

| Model | Type | Context Length | Best For |
| --- | --- | --- | --- |
| Qwen3-235B-A22B | MoE | 128K | Research tasks, agent workflows, long reasoning chains |
| Qwen3-30B-A3B | MoE | 128K | Balanced reasoning at lower inference cost |
| Qwen3-32B | Dense | 128K | High-end general-purpose deployments |
| Qwen3-14B | Dense | 128K | Mid-range apps needing strong reasoning |
| Qwen3-8B | Dense | 128K | Lightweight reasoning tasks |
| Qwen3-4B | Dense | 32K | Smaller applications, faster inference |
| Qwen3-1.7B | Dense | 32K | Mobile and embedded use cases |
| Qwen3-0.6B | Dense | 32K | Very lightweight or constrained settings |

If you’re working on tasks that need deeper reasoning, agent tool use, or long context handling, Qwen3-235B-A22B will give you the most flexibility.

For cases where you want to keep inference faster and cheaper while still handling moderately complex tasks, Qwen3-30B-A3B is a strong option.

The dense models offer simpler deployments and predictable latency, making them a better fit for smaller-scale applications.
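If you want to make this choice in a deployment script, one option is to encode the table as data and filter it. The sketch below does that; `pick_model` and its “largest model that fits” rule are hypothetical, parameter counts come from the model names, and treating 128K as 131,072 tokens and 32K as 32,768 is an assumption.

```python
from typing import Optional

# Rows from the model table: (name, architecture, total params in billions, context in tokens).
QWEN3_MODELS = [
    ("Qwen3-235B-A22B", "MoE", 235, 131_072),
    ("Qwen3-30B-A3B", "MoE", 30, 131_072),
    ("Qwen3-32B", "Dense", 32, 131_072),
    ("Qwen3-14B", "Dense", 14, 131_072),
    ("Qwen3-8B", "Dense", 8, 131_072),
    ("Qwen3-4B", "Dense", 4, 32_768),
    ("Qwen3-1.7B", "Dense", 1.7, 32_768),
    ("Qwen3-0.6B", "Dense", 0.6, 32_768),
]


def pick_model(min_context: int, max_params_b: float) -> Optional[str]:
    """Hypothetical helper: return the largest Qwen3 model that meets both limits.

    Total parameter count is a crude proxy for capability; swap in your own rule
    (latency, MoE vs. dense, benchmark scores) as needed.
    """
    fitting = [m for m in QWEN3_MODELS if m[3] >= min_context and m[2] <= max_params_b]
    if not fitting:
        return None
    return max(fitting, key=lambda m: m[2])[0]


print(pick_model(min_context=100_000, max_params_b=20))  # Qwen3-14B
print(pick_model(min_context=8_000, max_params_b=5))     # Qwen3-4B
```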

How Qwen3 Was Developed

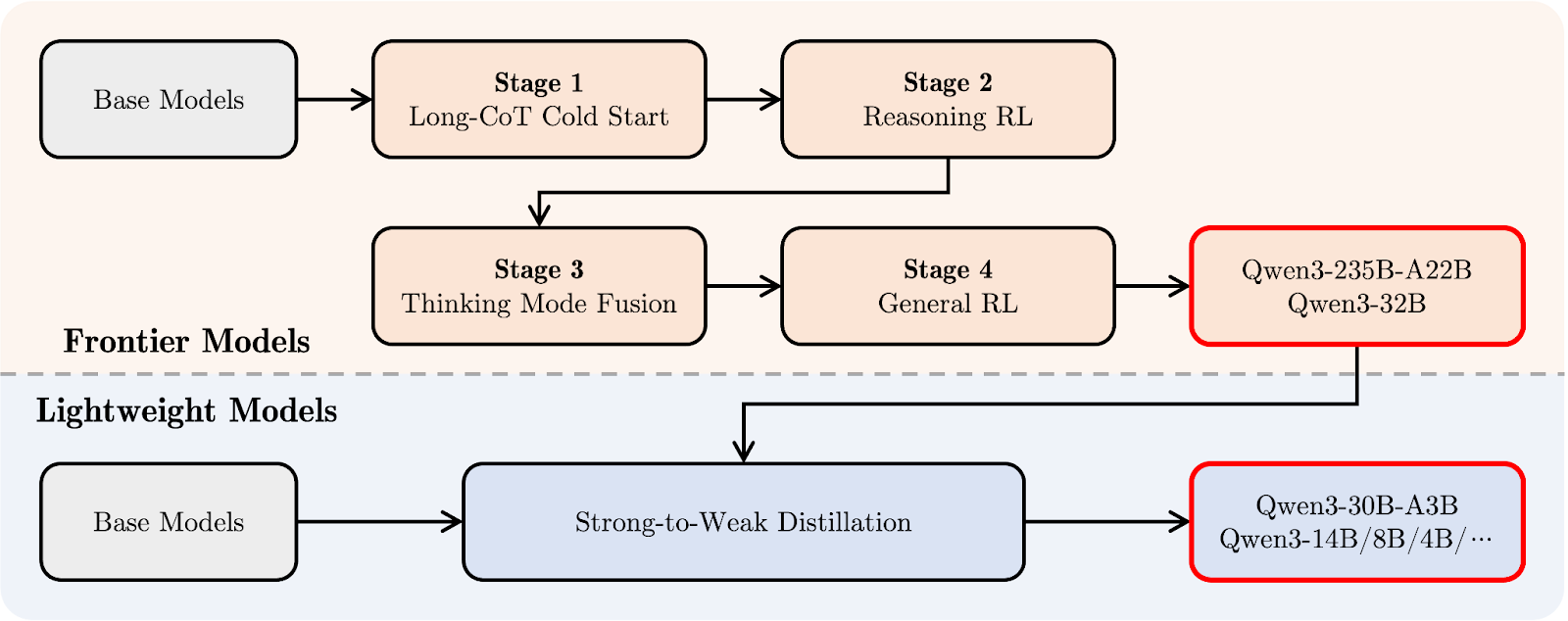

Qwen3 models were built through a three-stage pretraining phase followed by a four-stage post-training pipeline.

Pretraining is where the model learns general patterns from massive amounts of data (language, logic, math, code) without being told exactly what to do. Post-training is where the model is fine-tuned to behave in specific ways, like reasoning carefully or following instructions.

I’ll walk through both parts in simple terms, without getting too deep into technical details.

Pretraining

Compared to Qwen2.5, the pretraining dataset for Qwen3 was significantly expanded: around 36 trillion tokens were used, roughly double the amount used for the previous generation. The data included web content, text extracted from documents, and synthetic math and code examples generated by Qwen2.5 models.

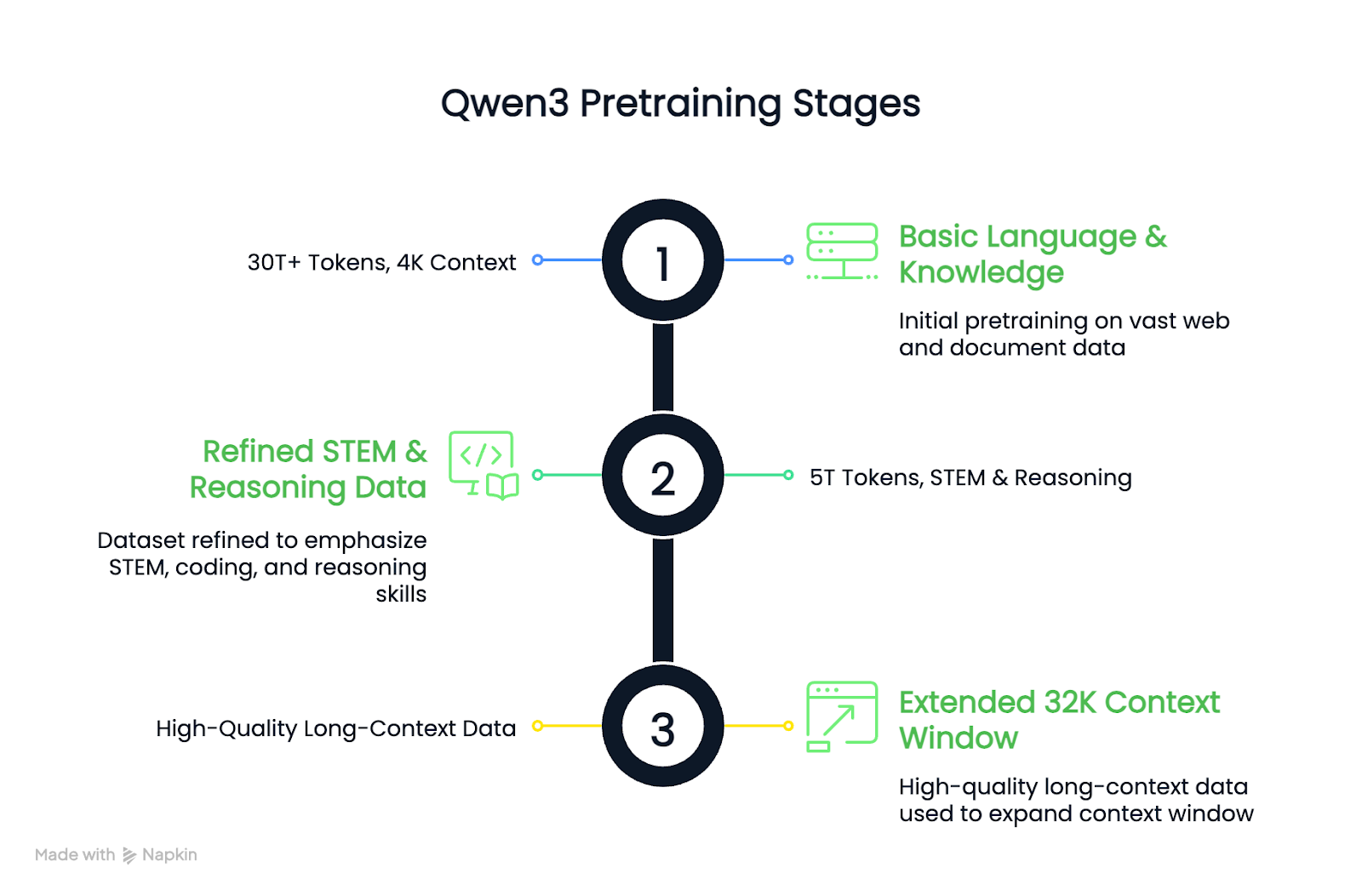

The pretraining process followed three stages:

- Stage 1: Basic language and knowledge skills were learned using over 30 trillion tokens, with a 4K context length.

- Stage 2: The dataset was refined to increase the share of STEM, coding, and reasoning data, and the model was trained on an additional 5 trillion tokens.

- Stage 3: High-quality long-context data was used to extend the models to 32K context windows.

The result is that dense Qwen3 base models match or outperform larger Qwen2.5 base models while using fewer parameters, especially in STEM and reasoning tasks.

Post-training

Qwen3’s post-training pipeline focused on integrating deep reasoning and quick-response capabilities into a single model. Let’s first take a look at the diagram below, and then I’ll explain it step-by-step:

Qwen 3 post-training pipeline. Source: Qwen

At the top (in orange), you can see the development path for the larger “Frontier Models,” like Qwen3-235B-A22B and Qwen3-32B. It starts with a Long Chain-of-Thought Cold Start (stage 1), where the model learns to reason step-by-step on harder tasks.

That’s followed by Reasoning Reinforcement Learning (RL) (stage 2) to encourage better problem-solving strategies. In stage 3, called Thinking Mode Fusion, Qwen3 learns to balance slow, careful reasoning with faster responses. Finally, a General RL stage improves its behavior across a wide range of tasks, like instruction following and agentic use cases.
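Because one model handles both modes, client code usually has to separate the reasoning from the final reply. Qwen3 wraps its reasoning in `<think>...</think>` tags, and in non-thinking mode that block is typically empty or absent. The helper below is a minimal sketch under that assumption (`split_thinking` is a hypothetical name); some serving stacks already separate the reasoning for you, so check yours first.

```python
from typing import Tuple


def split_thinking(output: str, open_tag: str = "<think>",
                   close_tag: str = "</think>") -> Tuple[str, str]:
    """Split a raw completion into (reasoning, answer).

    If there is no closing tag, the whole text is treated as the answer. If the
    opening tag is missing (e.g. it was part of the prompt), everything before
    the closing tag counts as reasoning.
    """
    end = output.find(close_tag)
    if end == -1:
        return "", output.strip()
    reasoning = output[:end]
    start = reasoning.find(open_tag)
    if start != -1:
        reasoning = reasoning[start + len(open_tag):]
    answer = output[end + len(close_tag):]
    return reasoning.strip(), answer.strip()


raw = "<think>\n2 + 2 = 4, so the answer is 4.\n</think>\n\nThe answer is 4."
reasoning, answer = split_thinking(raw)
print(answer)  # The answer is 4.
```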

Below that (in light blue), you’ll see the path for the “Lightweight Models,” like Qwen3-30B-A3B and the smaller dense models. These models are trained using strong-to-weak distillation, a process where knowledge from the larger models is compressed into smaller, faster models without losing too much reasoning ability.

In simple terms: the big models were trained first, and then the lightweight ones were distilled from them. This way, the full Qwen3 family shares a similar style of thinking, even across very different model sizes.
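The description above doesn’t spell out the exact objective, but a common form of logit distillation trains the student to match the teacher’s next-token probability distribution. The sketch below shows that generic idea for a single token position, using KL divergence over temperature-softened distributions. It illustrates the technique, not Qwen’s training code, and the temperature of 2.0 is an arbitrary choice.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between the two softened next-token distributions.

    Zero when the student matches the teacher exactly; larger as they diverge.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0)


teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, teacher))  # 0.0
# A student whose logits are close to the teacher's gets a much smaller loss.
print(distillation_loss(teacher, [3.8, 1.1, 0.4]) < distillation_loss(teacher, [0.5, 1.0, 4.0]))  # True
```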

Qwen 3 Benchmarks

Qwen3 models were evaluated across a range of reasoning, coding, and general knowledge benchmarks. The results show that Qwen3-235B-A22B leads the lineup on most tasks, but the smaller Qwen3-30B-A3B and Qwen3-4B models also deliver good performance.

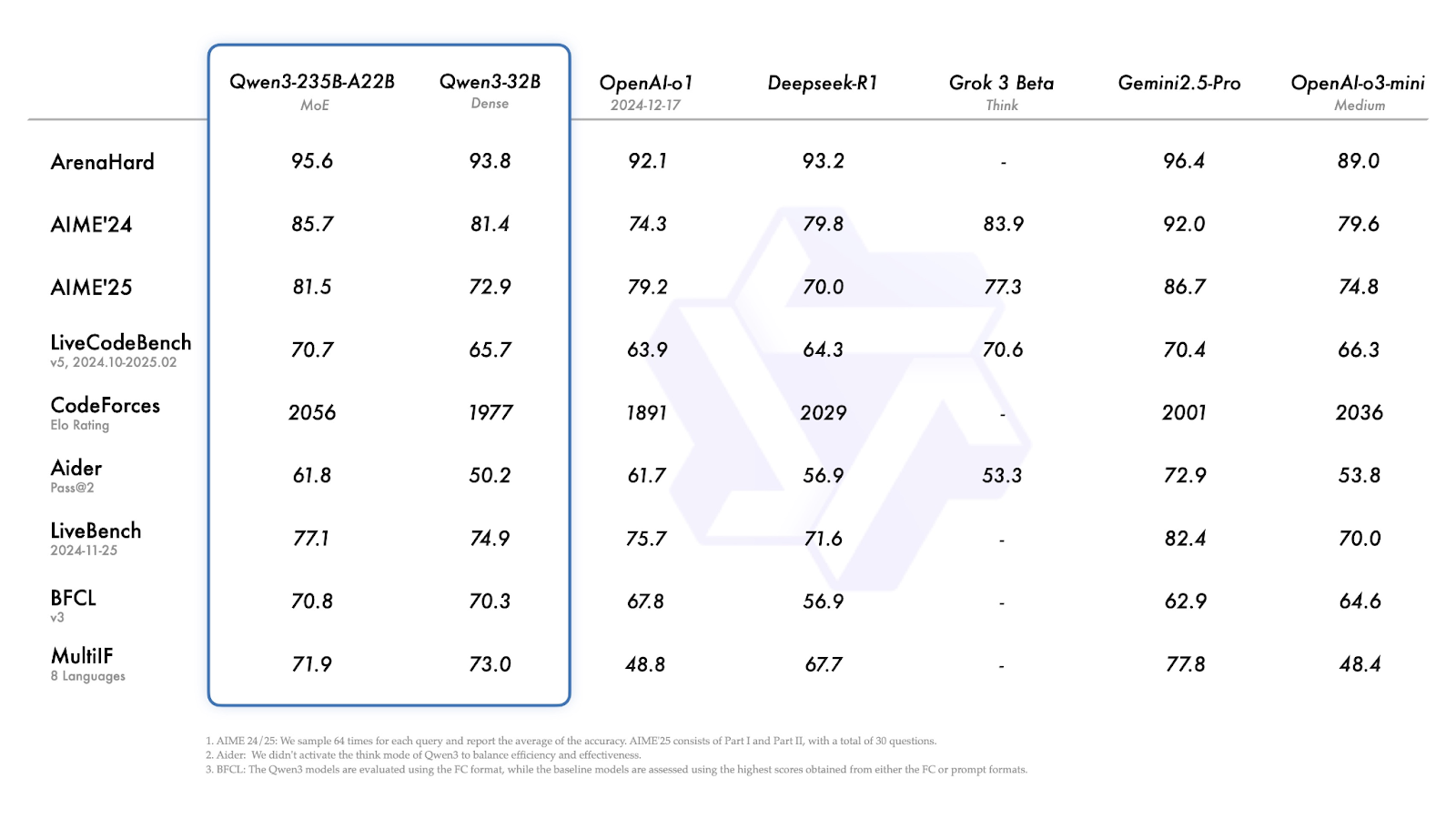

Qwen3-235B-A22B and Qwen3-32B

On most benchmarks, Qwen3-235B-A22B is among the top-performing models, though not always the leader.

Source: Qwen

Let’s quickly explore the results above:

- ArenaHard (overall reasoning): Gemini 2.5 Pro leads with 96.4. Qwen3-235B is just behind at 95.6, ahead of o1 and DeepSeek-R1. (Note that Gemini 3 has since been released.)

- AIME’24 / AIME’25 (math): Qwen3-235B scores 85.7 and 81.4. Gemini 2.5 Pro again ranks higher, but Qwen3-235B still outperforms DeepSeek-R1, Grok 3, and o3-mini.

- LiveCodeBench (code generation): 70.7 for the 235B model—better than most models except Gemini.

- CodeForces Elo (competitive programming): 2056, higher than all other listed models including DeepSeek-R1 and Gemini 2.5 Pro.

- LiveBench (real-world general tasks): 77.1, again second only to Gemini 2.5 Pro.

- MultiIF (multilingual instruction following): The smaller Qwen3-32B scores better here (73.0), but it’s still behind Gemini (77.8).

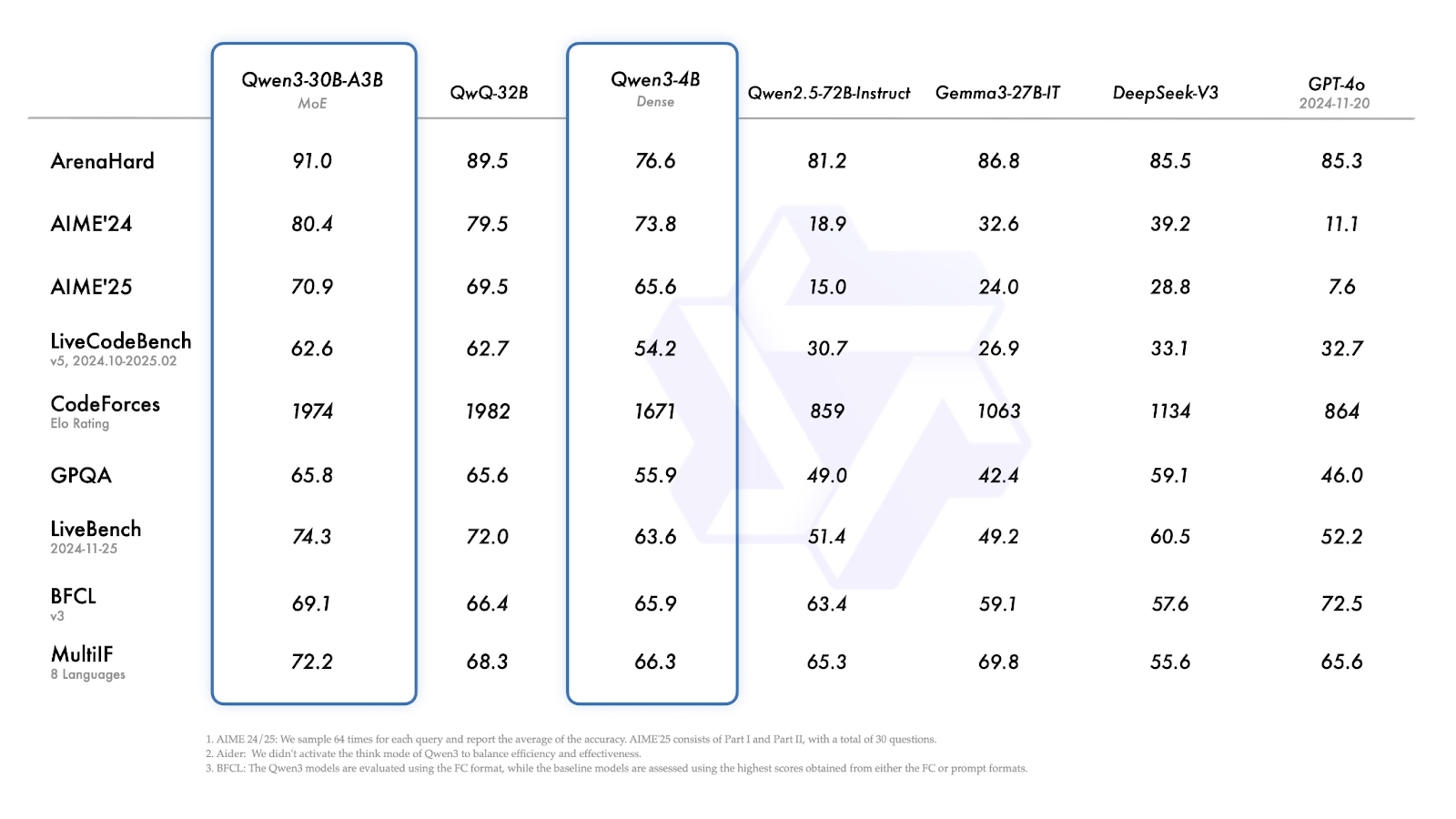

Qwen3-30B-A3B and Qwen3-4B

Qwen3-30B-A3B (the smaller MoE model) performs well across nearly all benchmarks, consistently matching or beating similar-sized dense models.

- ArenaHard: 91.0—above QwQ-32B (89.5), DeepSeek-V3 (85.5), and GPT-4o (85.3).

- AIME’24 / AIME’25: 80.4—slightly ahead of QwQ-32B, but miles ahead of the other models.

- CodeForces Elo: 1974—just under QwQ-32B (1982).

- GPQA (graduate-level QA): 65.8—roughly tied with QwQ-32B.

- MultiIF: 72.2—higher than QwQ-32B (68.3).

Source: Qwen

Qwen3-4B shows solid performance for its size:

- ArenaHard: 76.6

- AIME’24 / AIME’25: 73.8 and 65.6—clearly stronger than earlier and much larger Qwen2.5 models and models like Gemma-27B-IT.

- CodeForces Elo: 1671—not competitive with the larger models but on par with its weight class.

- MultiIF: 66.3—respectable for a 4B dense model, and notably ahead of many similarly sized baselines.
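Elo gaps are easier to read as head-to-head odds. Under the standard Elo expected-score formula (an assumption here, since Codeforces computes its ratings with its own Elo-style method), a player rated D points lower has an expected score of 1 / (1 + 10^(D/400)). The sketch below applies it to ratings quoted in this article.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo formula (0 to 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings quoted above.
print(round(elo_expected_score(1671, 1974), 2))  # Qwen3-4B vs. Qwen3-30B-A3B -> 0.15
print(round(elo_expected_score(1974, 1982), 2))  # Qwen3-30B-A3B vs. QwQ-32B -> 0.49
```

Read this way, the 8-point gap between Qwen3-30B-A3B and QwQ-32B is close to a coin flip, while the 4B model’s roughly 300-point deficit is substantial.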

How to Access Qwen3

Qwen3 models are publicly available and can be used in the chat app, accessed via API, downloaded for local deployment, or integrated into custom setups.

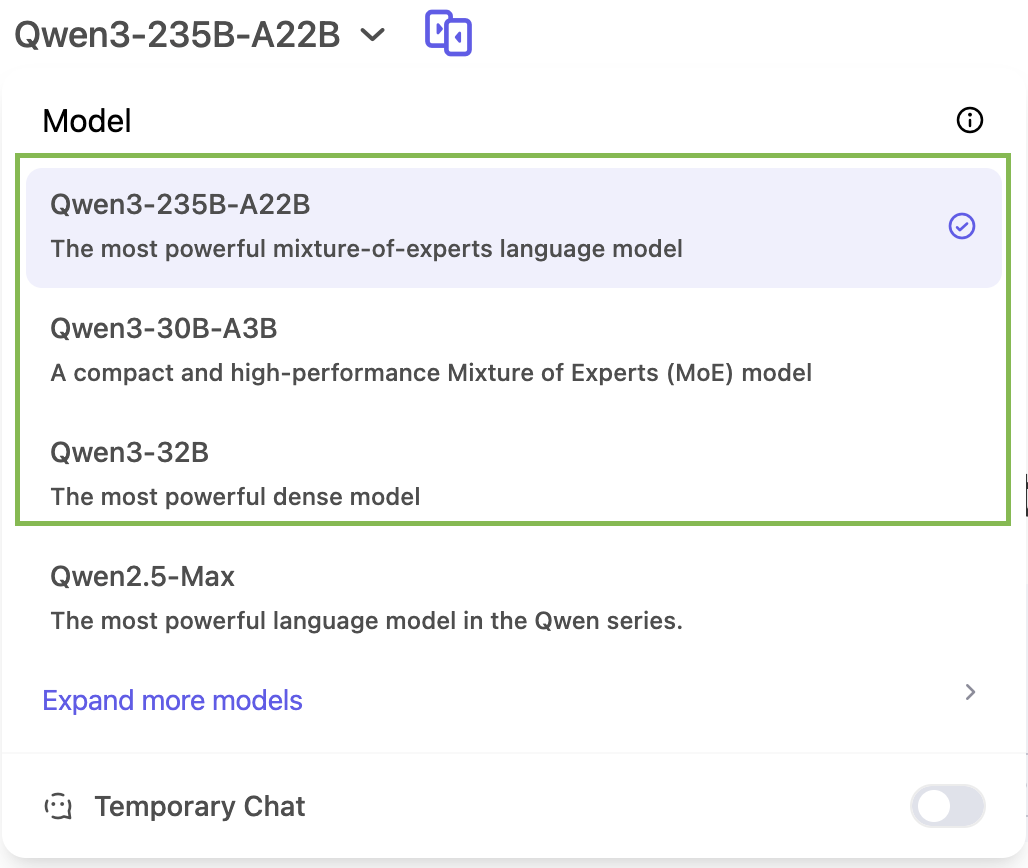

Chat interface

You can try Qwen3 directly at chat.qwen.ai.

Only three models from the Qwen3 family are available in the chat app: Qwen3-235B, Qwen3-30B, and Qwen3-32B.

Qwen 3 API access

Qwen3 works with OpenAI-compatible API formats through providers like ModelScope or DashScope. Tools like vLLM and SGLang offer efficient serving for local or self-hosted deployment. The official Qwen3 blog has more details about this.
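Because the interface is OpenAI-compatible, a request is just a JSON body posted to a `/chat/completions` endpoint. The standard-library sketch below assumes a local server (for example, one started with vLLM) at `http://localhost:8000/v1` and the Hugging Face model name `Qwen/Qwen3-30B-A3B`. The function names are hypothetical, the temperature is illustrative (check the model card for recommended sampling settings), and hosted providers such as DashScope need their own base URL and API key. The `/no_think` suffix is the soft switch documented for the original hybrid-thinking Qwen3 checkpoints.

```python
import json
import urllib.request


def build_chat_request(model: str, user_message: str, thinking: bool = True,
                       temperature: float = 0.6) -> dict:
    """Build the JSON body for an OpenAI-compatible chat completions request."""
    # Appending "/no_think" asks hybrid-thinking Qwen3 models to skip reasoning.
    content = user_message if thinking else f"{user_message} /no_think"
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "temperature": temperature,
    }


def send_chat_request(base_url: str, body: dict, api_key: str = "EMPTY") -> dict:
    """POST the body to {base_url}/chat/completions and return the parsed JSON reply."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Example (needs a running server, so it is left commented out):
# body = build_chat_request("Qwen/Qwen3-30B-A3B", "Name one prime number.", thinking=False)
# reply = send_chat_request("http://localhost:8000/v1", body)
# print(reply["choices"][0]["message"]["content"])
```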

Open weights

All Qwen3 models, both MoE and dense, are released under the Apache 2.0 license and can be downloaded from model hubs such as Hugging Face and ModelScope.

Local deployment

You can also run Qwen3 locally using:

- Ollama

- LM Studio

- llama.cpp

- KTransformers
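Which of these tools and models is practical on your machine mostly comes down to memory. A back-of-the-envelope estimate is parameters × bits per parameter. The sketch below assumes 16-bit weights for an unquantized model and 4 bits for a quantized build (real quantization formats usually need slightly more than 4 bits per weight), and it ignores the KV cache and runtime overhead.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes).

    For MoE models, pass the *total* parameter count: every expert must be
    loaded, even though only a few run for each token.
    """
    return total_params * bits_per_param / 8 / 1e9


for name, params in [("Qwen3-235B-A22B", 235e9), ("Qwen3-30B-A3B", 30e9),
                     ("Qwen3-8B", 8e9), ("Qwen3-0.6B", 0.6e9)]:
    print(f"{name}: ~{weight_memory_gb(params, 16):.1f} GB at 16-bit, "
          f"~{weight_memory_gb(params, 4):.1f} GB at 4-bit")
```

The MoE row is the one that tends to surprise people: Qwen3-30B-A3B computes like a 3B model per token but needs memory like a 30B model.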

Conclusion

Qwen3 is one of the most complete open-weight model suites released so far.

The flagship 235B MoE model performs well across reasoning, math, and coding tasks, while the 30B and 4B versions offer practical alternatives for smaller-scale or budget-conscious deployments. The ability to adjust the model’s thinking budget adds an extra layer of flexibility for regular users.

As it stands, Qwen3 is a well-rounded release that covers a wide range of use cases and is ready to use in both research and production settings.

FAQs

Can I use Qwen3 in commercial products?

Yes. The Apache 2.0 license allows for commercial use, modification, and distribution with attribution.

Can I fine-tune Qwen3 models?

Yes, the Qwen3 models are open-weight, and you can fine-tune them.

Does Qwen3 support function calling or tool use?

Yes. Qwen3 can perform function calling when integrated into an agent framework like Qwen-Agent. It supports custom tool parsers, tool use configuration via MCP, and OpenAI-compatible interfaces.
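With an OpenAI-compatible server, you pass tool definitions in the request’s `tools` field, and the model replies with tool calls whose `arguments` arrive as a JSON-encoded string. The sketch below shows the client side of that exchange under those assumptions. `get_weather` is a hypothetical stub, and whether your server returns parsed tool calls depends on how it is configured.

```python
import json

# One tool definition in the OpenAI-style "tools" format (made-up example tool).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def get_weather(city: str) -> str:
    # Stub standing in for a real weather lookup.
    return f"It is 21 degrees Celsius in {city}."


REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call: dict) -> str:
    """Execute one tool call taken from a response message's `tool_calls` list."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])  # arguments are a JSON string
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return REGISTRY[name](**args)


# Shape of a tool call as it would appear in choices[0].message.tool_calls.
example_call = {"id": "call_0", "type": "function",
                "function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'}}
print(run_tool_call(example_call))  # It is 21 degrees Celsius in Hangzhou.
```

In a full loop, the tool’s result would be sent back to the model as a `tool` message so it can write the final answer.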

Does Qwen3 offer multilingual support out of the box?

Yes. Qwen3 was trained on data from 119 languages and dialects, making it suitable for tasks like translation, multilingual QA, and global LLM deployments.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.