
Are you struggling with inconsistent Docker builds across your development team? Build arguments might be what you're looking for. They're a go-to solution for creating flexible and standardized Docker images. They allow you to inject values into your build process without hardcoding them, which makes your Dockerfiles more portable and maintainable.

Docker build args solve common problems, such as handling different base images, package versions, or configuration settings across environments.

Docker's documentation presents build args as the way to parameterize a build. Environment variables are different: they persist into the running container. Build args are especially valuable in CI/CD pipelines where you need to create images with different configurations from the same Dockerfile.

> New to Docker and containers? Follow our guide to get up to speed quickly.

In this article, I'll show you how to use Docker build arguments to create more efficient, secure, and flexible containerization workflows.

Introduction to Docker Build Args

Build arguments (or build args) are variables that you pass to Docker at build time, and they're only available during the image construction process.

Unlike environment variables, which persist in the container at runtime, build args exist only within the context of the docker build command and aren't set in containers started from the image. (As the security section shows, their values can still be recorded in the image's history.) They work similarly to function parameters, allowing you to inject different values each time you build an image.

The primary use cases for build args revolve around creating flexible, reusable Dockerfiles.

You can use build args to specify different base images (like switching between Alpine and Debian), set application versions without modifying your Dockerfile, or configure build-time settings like proxy servers or repository URLs. This flexibility comes in handy in CI/CD pipelines where you might build the same Dockerfile with different parameters based on the deployment target.

Build args shine in situations where you need to customize your builds without maintaining multiple Dockerfile variants. They help you follow the DRY (Don't Repeat Yourself) principle by centralizing your container configuration logic while allowing customization.

They're also perfect for handling differences between development, testing, and production environments that should only affect how the image is built, not how the container runs.

Now that you know what build args are, let me show you how to use them.

Defining and Utilizing Build Arguments

Now let's get our hands dirty with the practical aspects of implementing build arguments. It's assumed you have Docker installed and configured.

Basic syntax and scope

You can declare build arguments in your Dockerfile using the ARG instruction, which can appear before or after the FROM instruction, depending on when you need to use them. Here's the basic syntax:

# Setting a build arg with a default value

ARG VERSION=latest

# Using the build arg

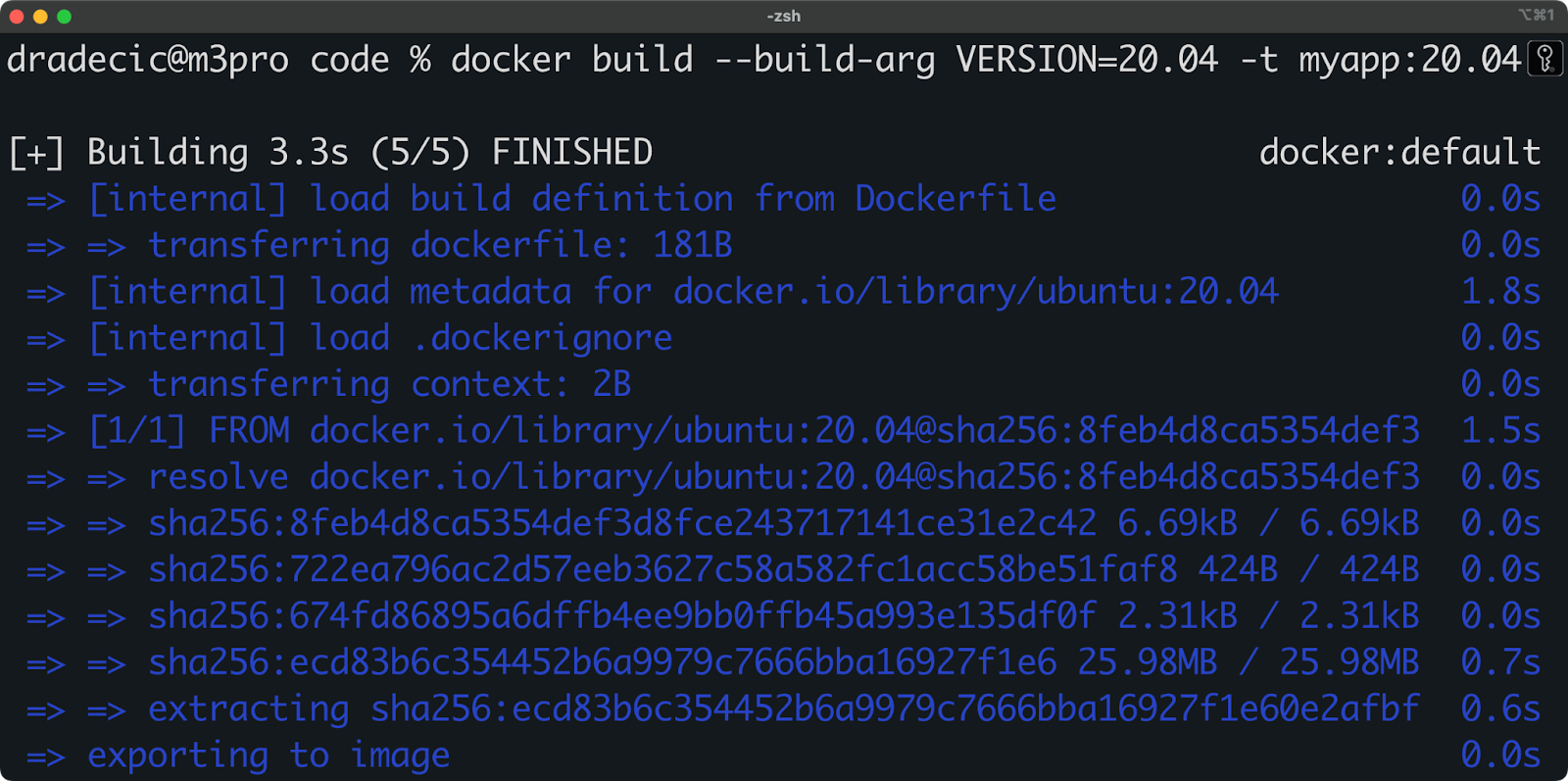

FROM ubuntu:${VERSION}You can provide values when building the image using the --build-arg flag:

docker build --build-arg VERSION=20.04 -t myapp:20.04 .

Image 1 - Basic Docker build args
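If you script your builds, for example in a CI job, it can help to keep all build args in one mapping and turn them into --build-arg flags programmatically. Here's a minimal Python sketch of that approach. The helper names docker_build_command and run_build are hypothetical and are not part of Docker or any SDK. Building an argument list instead of a shell string avoids quoting problems when values contain spaces.

```python
import subprocess
from typing import Mapping


def docker_build_command(tag: str, build_args: Mapping[str, str], context: str = ".") -> list[str]:
    """Assemble a docker build argv list with one --build-arg flag per entry."""
    command = ["docker", "build"]
    for name, value in build_args.items():
        # NAME=VALUE stays a single list element, so no shell quoting is needed
        command += ["--build-arg", f"{name}={value}"]
    command += ["-t", tag, context]
    return command


def run_build(command: list[str]) -> None:
    """Run the build; raises CalledProcessError if docker build fails."""
    subprocess.run(command, check=True)


if __name__ == "__main__":
    cmd = docker_build_command("myapp:20.04", {"VERSION": "20.04"})
    print(" ".join(cmd))
    # run_build(cmd)  # uncomment on a machine with Docker installed
```

Printing the list before running it is also a cheap way to review exactly which values a build will receive.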

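The scope of build args follows specific rules that are essential to understand. An ARG declared before the first FROM instruction lives outside any build stage. You can use it in FROM lines, but instructions inside a stage can't see it until you redeclare it there. A bare ARG VERSION, without a value, inherits the default.

This happens because each FROM instruction starts a new build stage with its own scope, and an ARG declared inside a stage goes out of scope when that stage ends. Keep this in mind, especially in multi-stage builds, where every stage must declare the args it uses.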
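To make the scoping rules concrete, here's a simplified Python model of how ARG values resolve per stage. It's an illustration under stated assumptions, not a Dockerfile parser: it only looks at FROM and ARG lines with a single NAME or NAME=value, and stage_args is a hypothetical name. Resolution works in this order:

1. A --build-arg value wins.
2. Otherwise an in-stage default applies.
3. For a bare ARG NAME, the default declared before the first FROM applies.

```python
from typing import Optional

SAMPLE_DOCKERFILE = """
ARG PYTHON_VERSION=3.13
FROM python:${PYTHON_VERSION}-slim AS builder
ARG ENVIRONMENT=production
FROM python:${PYTHON_VERSION}-slim
ARG ENVIRONMENT
ARG PYTHON_VERSION
"""


def stage_args(dockerfile: str, cli_args: dict[str, str]) -> list[dict[str, Optional[str]]]:
    """Return the ARG values visible in each build stage (simplified model)."""
    global_args: dict[str, Optional[str]] = {}
    stages: list[dict[str, Optional[str]]] = []
    for raw_line in dockerfile.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, rest = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            # Every FROM opens a new stage that starts with no visible args
            stages.append({})
        elif instruction == "ARG":
            name, sep, default = rest.strip().partition("=")
            if not stages:
                # Before the first FROM: global scope, usable in FROM lines
                global_args[name] = cli_args.get(name, default if sep else None)
            elif name in cli_args:
                # A --build-arg value wins over any default
                stages[-1][name] = cli_args[name]
            elif sep:
                stages[-1][name] = default
            else:
                # A bare redeclaration inherits the global default, or stays unset
                stages[-1][name] = global_args.get(name)
    return stages


if __name__ == "__main__":
    for number, args in enumerate(stage_args(SAMPLE_DOCKERFILE, {}), start=1):
        print(f"stage {number}: {args}")
```

In the sample, ENVIRONMENT is declared with a default only in the first stage, so it comes back unset (None) in the second. A bare redeclaration only inherits a default from the global scope, never from an earlier stage.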

Advanced declaration techniques

Let's create a complete Flask "Hello World" application that uses build args to support different configurations:

> Learn how to containerize an application using Docker.

First, create a simple Flask app (app.py):

```python
from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello_world():
    return "Hello, World!"


if __name__ == "__main__":
    app.run(host="0.0.0.0", debug=True)
```

While here, also create a requirements.txt file:

```text
flask==3.1.0
```

Now let's create a Dockerfile that uses build args for flexible configuration:

```dockerfile
# Define build arguments with default values
ARG PYTHON_VERSION=3.13
ARG ENVIRONMENT=production

# Use the PYTHON_VERSION arg in the FROM instruction
FROM python:${PYTHON_VERSION}-slim

# Redeclare args needed after FROM (inherits the default declared above)
ARG ENVIRONMENT
# Flask 2.3+ no longer reads FLASK_ENV; here it only records the build target
ENV FLASK_ENV=${ENVIRONMENT}

# Set working directory
WORKDIR /app

# Copy requirements file
COPY requirements.txt .

# Install dependencies based on environment
RUN if [ "$ENVIRONMENT" = "development" ]; then \
        pip install -r requirements.txt && \
        pip install pytest black; \
    else \
        pip install --no-cache-dir -r requirements.txt; \
    fi

# Copy application code
COPY . .

# Expose port
EXPOSE 5000

# Set default command
CMD ["python", "app.py"]
```

> Does the above Dockerfile look confusing? Learn Docker from scratch with our guide.

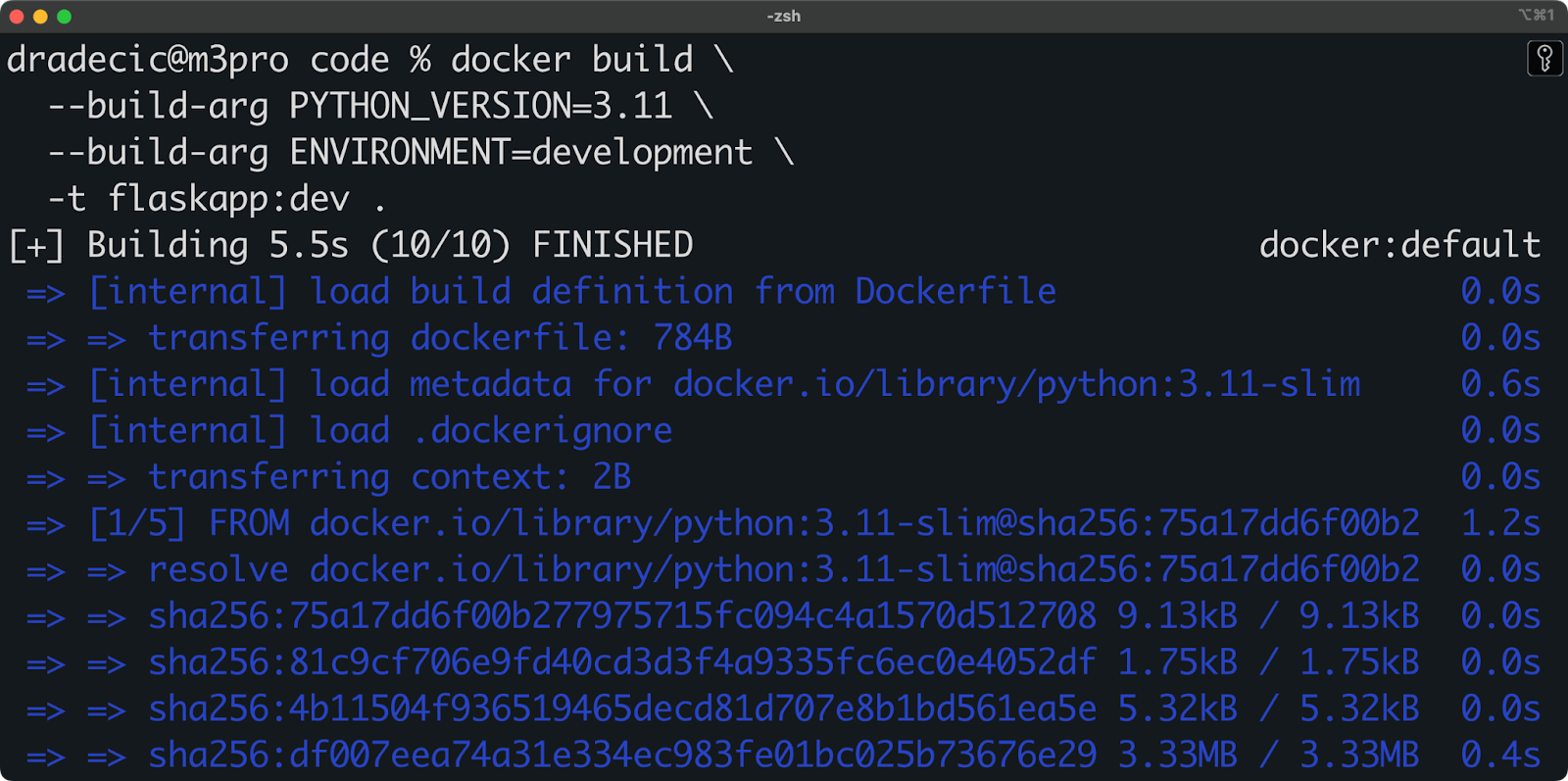

To build this image for development with a specific Python version, you can run the following from your Terminal:

# Building for development with Python 3.11

docker build \

--build-arg PYTHON_VERSION=3.11 \

--build-arg ENVIRONMENT=development \

-t flaskapp:dev .

Image 2 - Build args in a basic Python application

In multi-stage builds, build args require special handling. Here's a full example showing how to pass arguments through multiple stages with our Flask app:

# Dockerfile for a multi-stage build

ARG PYTHON_VERSION=3.13

# Build stage

FROM python:${PYTHON_VERSION}-slim AS builder

ARG ENVIRONMENT=production

ENV FLASK_ENV=${ENVIRONMENT}

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

# In a real app, you might run tests or build assets here

RUN echo "Building Flask app for ${ENVIRONMENT}"

# Production stage

FROM python:${PYTHON_VERSION}-slim

ARG ENVIRONMENT

ENV FLASK_ENV=${ENVIRONMENT}

WORKDIR /app

COPY --from=builder /usr/local/lib/python*/site-packages /usr/local/lib/python*/site-packages

COPY --from=builder /app .

# Add metadata based on build args

LABEL environment="${ENVIRONMENT}" \

python_version="${PYTHON_VERSION}"

EXPOSE 5000

CMD ["python", "app.py"]You can now build this with the specific Python version and environment:

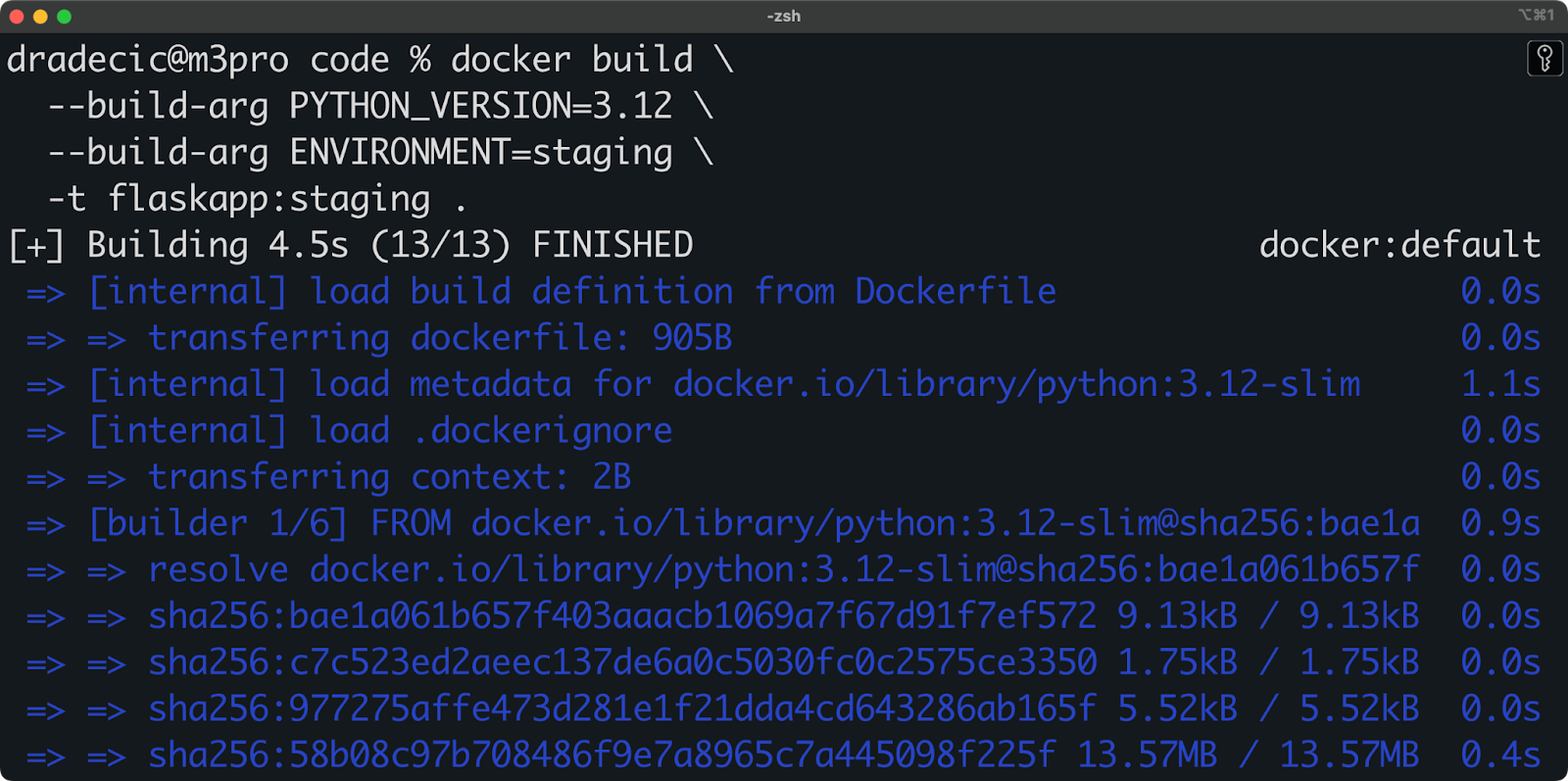

docker build \

--build-arg PYTHON_VERSION=3.12 \

--build-arg ENVIRONMENT=staging \

-t flaskapp:staging .

Image 3 - Build args in multi-stage builds

In short, this approach allows you to maintain a consistent configuration across all stages of your build.

Predefined build arguments

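Docker provides several predefined build args that you can use without declaring them in your Dockerfile. These are:

- HTTP_PROXY and HTTPS_PROXY: Useful for builds in corporate environments with proxy servers.
- FTP_PROXY: Similar to HTTP proxies but for FTP connections.
- NO_PROXY: Specifies hosts that should bypass the proxy.
- ALL_PROXY: A proxy used for any protocol that doesn't have a more specific setting.

Each of these also exists in lowercase (http_proxy, https_proxy, and so on). By default, Docker excludes them from docker history output.

BUILD_DATE and VCS_REF, by contrast, are not predefined. They're a common naming convention for image metadata and versioning, so you have to declare them with ARG yourself.

BuildKit also sets platform args such as TARGETPLATFORM, TARGETOS, and TARGETARCH automatically. To use them inside a stage, redeclare them with ARG.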

These predefined args are handy when you're building images in environments with specific network configurations. For example, in a corporate network with strict proxy requirements, you can use:

```bash
docker build --build-arg HTTP_PROXY=http://proxy.example.com:8080 .
```

This approach keeps your Dockerfile portable while still functioning in restricted environments.
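In CI, the proxy settings usually already live in the runner's environment. The following hedged Python sketch forwards whichever predefined proxy variables are set into --build-arg flags, so the Dockerfile itself never needs to mention them. The helper name proxy_build_flags is hypothetical, and the sketch assumes the proxy values are present in the environment of the process that launches docker build.

```python
import os
from typing import Mapping

# Docker's predefined proxy build args, in upper- and lowercase form
PROXY_ARGS = (
    "HTTP_PROXY", "http_proxy",
    "HTTPS_PROXY", "https_proxy",
    "FTP_PROXY", "ftp_proxy",
    "NO_PROXY", "no_proxy",
    "ALL_PROXY", "all_proxy",
)


def proxy_build_flags(environ: Mapping[str, str]) -> list[str]:
    """Turn any non-empty proxy variables in `environ` into --build-arg flags."""
    flags: list[str] = []
    for name in PROXY_ARGS:
        value = environ.get(name)
        if value:
            flags += ["--build-arg", f"{name}={value}"]
    return flags


if __name__ == "__main__":
    # Append these to your docker build command
    print(proxy_build_flags(os.environ))
```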


Security Implications and Risk Mitigation

While build arguments offer flexibility, they also introduce potential security risks that you need to understand to keep your Docker images secure.

Argument leakage vectors

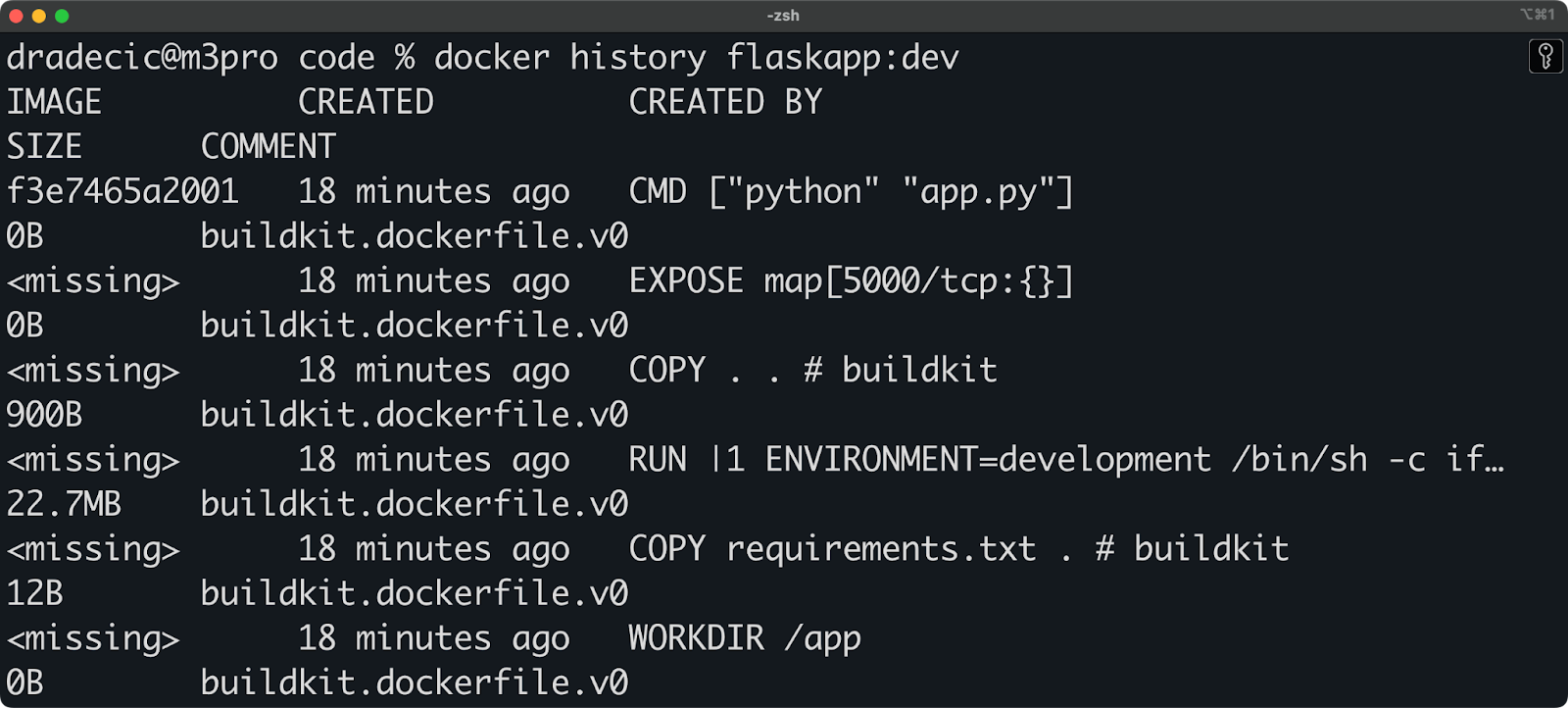

Build arguments aren't as private as you might think. The values of build args can leak through several vectors that many developers overlook. First, the Docker image history exposes all your build args, meaning anyone with access to your image can run docker history to see the values you used during build time, including potentially sensitive information like API keys or tokens.

docker history flaskapp:dev

Image 4 - Docker history
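To check an existing image for leaked values, you can scan its history for NAME=VALUE pairs whose names look sensitive. This is a rough Python sketch, and several parts of it are assumptions:

- The helper names and the list of suspicious name fragments are hypothetical.
- The sample history line is made up; the exact CreatedBy text differs between the classic builder and BuildKit.
- A keyword heuristic will miss some secrets and flag some harmless args.

```python
import subprocess

# Heuristic name fragments that suggest a secret (adjust for your project)
SUSPICIOUS = ("KEY", "TOKEN", "SECRET", "PASSWORD")


def image_history(image: str) -> list[str]:
    """Return the full CreatedBy column of docker history (requires Docker)."""
    result = subprocess.run(
        ["docker", "history", "--no-trunc", "--format", "{{.CreatedBy}}", image],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


def leaked_args(history_lines: list[str], suspicious: tuple[str, ...] = SUSPICIOUS) -> list[str]:
    """Find NAME=VALUE tokens whose NAME contains a suspicious fragment."""
    hits = []
    for line in history_lines:
        for token in line.split():
            name, sep, _ = token.partition("=")
            if sep and any(fragment in name.upper() for fragment in suspicious):
                hits.append(token)
    return hits


if __name__ == "__main__":
    sample = ["RUN |1 API_KEY=secret123 /bin/sh -c echo building"]  # hypothetical line
    print(leaked_args(sample))  # on a real image: leaked_args(image_history("flaskapp:dev"))
```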

Intermediate layers can also leak build arguments. Even if you use a multi-stage build and don't copy the layer containing sensitive information, the build arg values remain in the layer cache on the build machine. This means anyone with access to the Docker daemon on your build server could potentially extract this information.

CI/CD logs pose another significant risk. Most CI/CD systems log the entire build command, including any build args passed on the command line.

For example, this entire command, including the API_KEY value, will be visible in CI/CD logs:

```bash
docker build --build-arg API_KEY=secret123 -t myapp:latest .
```

These logs are often accessible to a broader group of developers or even stored in third-party systems, which increases the exposure surface of sensitive values.
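If a value must be passed on the command line anyway, you can at least mask it before your CI script echoes the command. The Python sketch below uses a hypothetical helper, redact_build_args, and a hypothetical SENSITIVE set, and it only handles the separate --build-arg NAME=VALUE form. It protects the log only: the value still reaches the build and can still end up in the image history.

```python
# Hypothetical names whose values must never reach CI logs
SENSITIVE = frozenset({"API_KEY", "DB_PASSWORD"})


def redact_build_args(argv: list[str], sensitive: frozenset = SENSITIVE) -> str:
    """Return a printable command with sensitive --build-arg values masked."""
    redacted = []
    for index, part in enumerate(argv):
        # Only the element right after a --build-arg flag holds NAME=VALUE
        if index > 0 and argv[index - 1] == "--build-arg":
            name, sep, _ = part.partition("=")
            if sep and name in sensitive:
                part = f"{name}=***"
        redacted.append(part)
    return " ".join(redacted)


if __name__ == "__main__":
    command = ["docker", "build", "--build-arg", "API_KEY=secret123", "-t", "myapp:latest", "."]
    print(redact_build_args(command))
```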

Secure alternatives

For sensitive information, you should use Docker BuildKit's secrets feature instead of build args. BuildKit lets you mount secrets during the build process without persisting them in the final image or the history.

Here's an example Dockerfile that uses Docker BuildKit secrets:

FROM python:3.13-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . .

# Use secret mount instead of build arg

RUN --mount=type=secret,id=api_key \

cat /run/secrets/api_key > api_key.txt

EXPOSE 5000

CMD ["python", "app.py"]To build this image with a secret, first create the api_key.txt file:

echo "my-secret-api-key" > api_key.txtAnd then build the image with the secret:

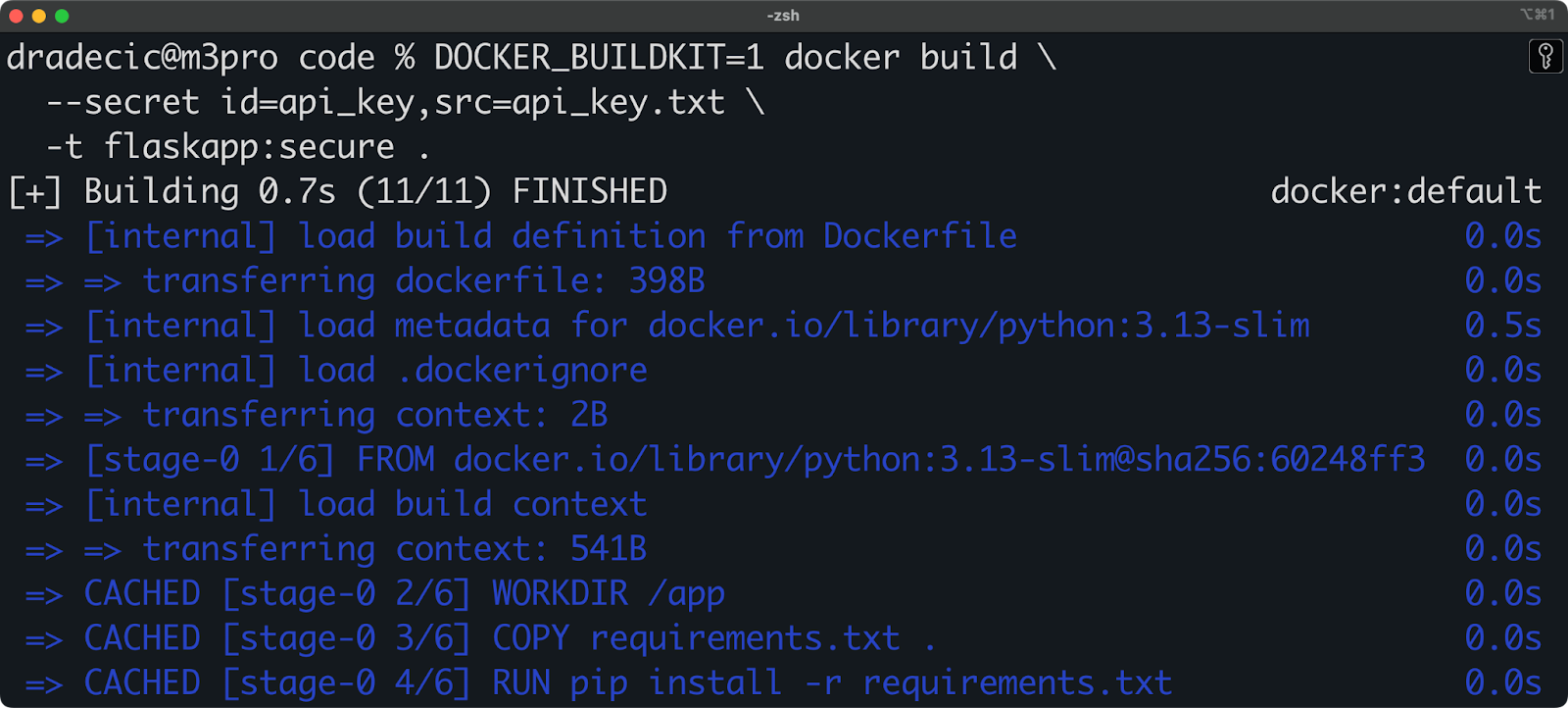

DOCKER_BUILDKIT=1 docker build \

--secret id=api_key,src=api_key.txt \

-t flaskapp:secure .

Image 5 - Building images with BuildKit secrets

For configuration that needs to be available at runtime, environment variables remain the preferred approach. They can be set when starting a container without being baked into the image:

```bash
# Set environment variables at runtime
docker run -e API_KEY=secret123 -p 5000:5000 flaskapp:latest
```

To safely handle sensitive configuration in production, consider using container orchestration platforms like Kubernetes, which provide secure methods like Secrets for managing sensitive information. Docker Swarm also offers similar capabilities with its secrets management.
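On the application side, reading secrets when the container starts keeps them out of the image. Here's a hedged Python sketch for the Flask app. get_secret is a hypothetical helper that looks in two places, in this order:

1. A mounted secret file. Docker Swarm mounts secrets under /run/secrets/<name> by default; in Kubernetes you choose the mount path yourself. The lowercase file name convention is an assumption.
2. An environment variable, such as the API_KEY passed with docker run -e.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def get_secret(name: str, environ: Mapping[str, str] = os.environ,
               secrets_dir: str = "/run/secrets") -> Optional[str]:
    """Return a secret from a mounted file if present, else from an env var.

    Assumed convention: file <secrets_dir>/<name in lowercase>, env var <name>.
    """
    secret_file = Path(secrets_dir) / name.lower()
    if secret_file.is_file():
        # Strip the trailing newline that `echo` adds when the file is created
        return secret_file.read_text().strip()
    return environ.get(name)


if __name__ == "__main__":
    api_key = get_secret("API_KEY")
    print("API_KEY is set" if api_key else "API_KEY is missing")
```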

> Docker isn't the only containerization platform. Read about Podman to find out which tool is better-suited for you.

Remember, the rule of thumb is: use build args for build-time configuration that isn't sensitive, use BuildKit secrets for sensitive build-time needs, and use environment variables or orchestration platform secrets for runtime configuration.

Integration with Container Ecosystems

Now with the basics out of the way, let's explore how to leverage build arguments within broader Docker ecosystems and modern build tools.

Docker Compose

Docker Compose makes it easy to define and share build arguments across your multi-container applications.

If you want to follow along, here's a complete project structure for two simple Flask services:

```text
flask-project/
├── docker-compose.yml   # Main compose configuration
├── .env                 # Environment variables for compose
├── web/
│   ├── Dockerfile       # Web service Dockerfile
│   ├── app.py           # Flask application
│   └── requirements.txt # Python dependencies
└── api/
    ├── Dockerfile       # API service Dockerfile
    ├── app.py           # API Flask application
    └── requirements.txt # API dependencies
```

I'll quickly go over the file contents one by one:

web/app.py

```python
from flask import Flask, render_template

app = Flask(__name__)


@app.route("/")
def index():
    return render_template("index.html", title="Flask Web App")


@app.route("/about")
def about():
    return "About page - Web Service"


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000, debug=True)
```

web/requirements.txt

```text
flask==3.1.0
```

web/Dockerfile

```dockerfile
# Web service Dockerfile
ARG PYTHON_VERSION=3.13
FROM python:${PYTHON_VERSION}-slim

# Redeclare the build arg to use it after FROM
ARG PYTHON_VERSION
ARG ENVIRONMENT=production

# Set environment variables
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1
ENV FLASK_ENV=${ENVIRONMENT}

WORKDIR /app

# Copy and install requirements
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy application code
COPY . .

# Create the template folder and generate index.html (overwrites any existing copy)
RUN mkdir -p templates && \
    echo "<html><body><h1>Hello from Web Service</h1><p>Python version: ${PYTHON_VERSION}</p><p>Environment: ${ENVIRONMENT}</p></body></html>" > templates/index.html

EXPOSE 5000
CMD ["python", "app.py"]
```

api/app.py

```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route("/api/health")
def health():
    return jsonify({"status": "healthy"})


@app.route("/api/data")
def get_data():
    return jsonify(
        {"message": "Hello from the API service", "items": ["item1", "item2", "item3"]}
    )


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8000, debug=True)
```

api/requirements.txt

```text
flask==3.1.0
```

api/Dockerfile

```dockerfile
ARG PYTHON_VERSION=3.13
FROM python:${PYTHON_VERSION}-slim

# Redeclare the build arg to use it after FROM
ARG PYTHON_VERSION
ARG ENVIRONMENT=production

# Set environment variables
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1
ENV FLASK_ENV=${ENVIRONMENT}

WORKDIR /app

# Copy and install requirements
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy application code
COPY . .

# Add metadata label based on build args
LABEL python.version="${PYTHON_VERSION}" \
      app.environment="${ENVIRONMENT}"

EXPOSE 8000
CMD ["python", "app.py"]
```

.env

```text
PYTHON_VERSION=3.10
ENVIRONMENT=development
```

docker-compose.yml

```yaml
services:
  web:
    build:
      context: ./web
      dockerfile: Dockerfile
      args:
        PYTHON_VERSION: ${PYTHON_VERSION:-3.13}
        ENVIRONMENT: ${ENVIRONMENT:-development}
    ports:
      - "5000:5000"
    depends_on:
      - api

  api:
    build:
      context: ./api
      dockerfile: Dockerfile
      args:
        PYTHON_VERSION: ${PYTHON_VERSION:-3.13}
        ENVIRONMENT: ${ENVIRONMENT:-production}
    ports:
      - "8000:8000"
```

In a nutshell, this is a simple way to start two applications in isolated environments at the same time, each built with its own set of build args. Because the .env file sets PYTHON_VERSION and ENVIRONMENT, both services are built with Python 3.10 for development here. The :- defaults only apply when a variable is unset or empty.

You can use this command to run the multi-container setup:

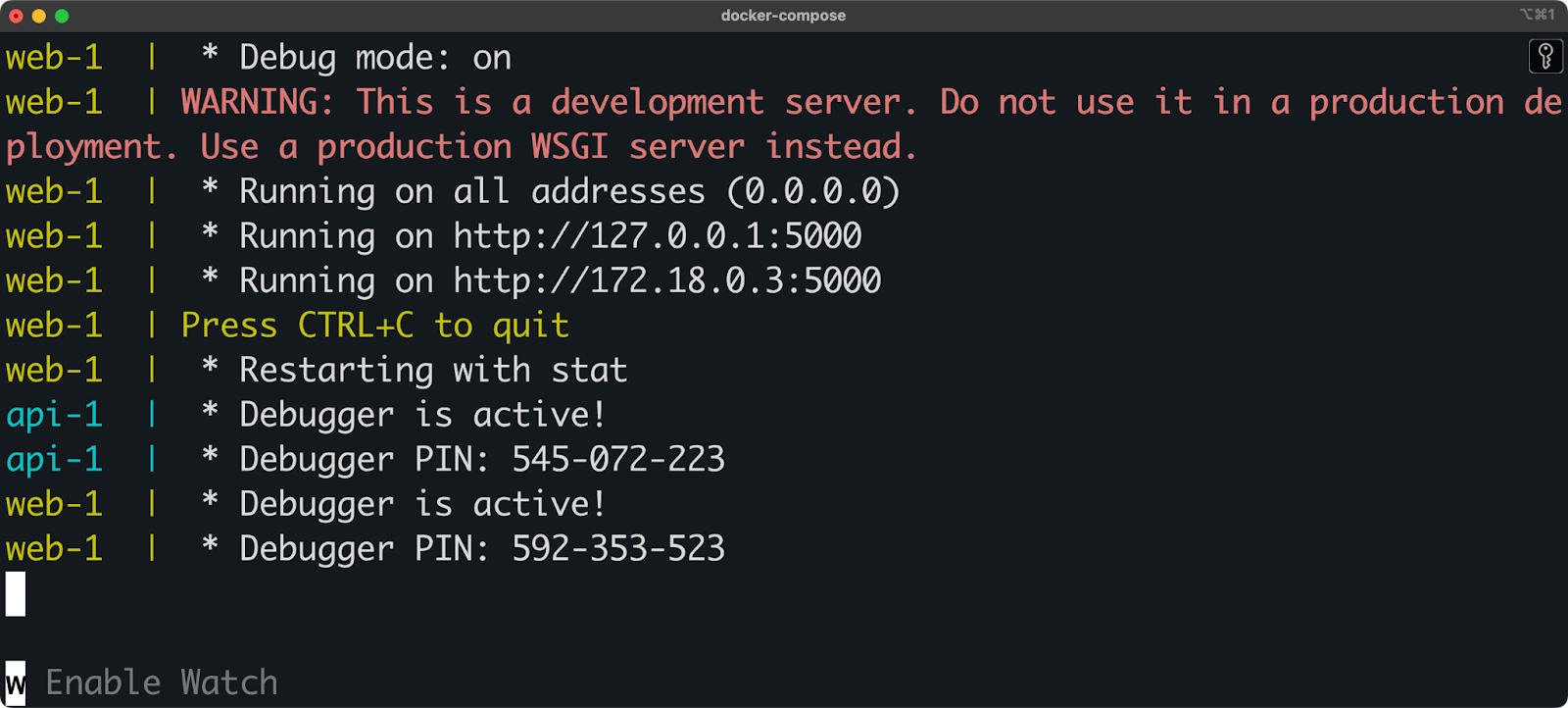

docker compose up --build

Image 6 - Build args with Docker Compose

After a couple of seconds, you'll see both services running:

Image 7 - Build args with Docker Compose (2)

It's quite a verbose setup for applications as simple as these two, but you get the big picture—build arguments are set in the compose file and referenced in individual Dockerfiles.

On a side note, you can also pass variable values from your environment to build args, which is handy for CI/CD pipelines:

```yaml
services:
  web:
    build:
      context: .
      args:
        - PYTHON_VERSION=${PYTHON_VERSION:-3.10}
```

To override these values when running Docker Compose, set the variables in your shell. Shell values take precedence over the .env file:

```bash
PYTHON_VERSION=3.12 docker compose up --build
```

This approach allows you to maintain consistent builds across your team while still offering flexibility when needed.
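Compose resolves a reference like ${VAR:-default} first and passes the result to the build as a build arg. The following small Python sketch illustrates that interpolation rule. interpolate is a hypothetical name, and it covers only ${VAR}, ${VAR:-default}, and ${VAR-default}, not Compose's full syntax. With :- the default applies when the variable is unset or empty. With a plain - it applies only when the variable is unset.

```python
import os
import re
from typing import Mapping

# ${VAR}, ${VAR:-default} and ${VAR-default}; a subset of Compose's syntax
_REFERENCE = re.compile(
    r"\$\{(?P<name>[A-Za-z_][A-Za-z0-9_]*)(?:(?P<op>:?-)(?P<default>[^}]*))?\}"
)


def interpolate(value: str, environ: Mapping[str, str]) -> str:
    """Resolve variable references in a Compose value against `environ`."""

    def replace(match: re.Match) -> str:
        name, op, default = match.group("name"), match.group("op"), match.group("default")
        current = environ.get(name)
        if op == ":-" and not current:
            return default  # unset or empty: use the default
        if op == "-" and current is None:
            return default  # only unset: use the default
        return current or ""  # otherwise use the value (unset becomes empty)

    return _REFERENCE.sub(replace, value)


if __name__ == "__main__":
    print(interpolate("${PYTHON_VERSION:-3.13}", os.environ))
```

To mimic Compose's precedence, you'd pass a mapping that merges the .env entries with os.environ and lets os.environ win.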

BuildKit features

BuildKit, Docker's modern build engine, supercharges your build process with features that make build args even more powerful. BuildKit is the default builder since Docker Engine 23.0. On older versions, you enable it by setting DOCKER_BUILDKIT=1, as the commands below do.

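Inline cache improves build performance by reusing the build cache of a remote image. For that to work, the cache metadata has to be embedded when the source image is built, which you do with another build arg that BuildKit understands, BUILDKIT_INLINE_CACHE=1. Later builds can then point at that image with the --cache-from flag, and BuildKit will reuse its matching layers:

```bash
# Embed cache metadata in the image (push it to a registry to share it)
DOCKER_BUILDKIT=1 docker build \
  --build-arg PYTHON_VERSION=3.10 \
  --build-arg BUILDKIT_INLINE_CACHE=1 \
  -t flaskapp:dev .

# Reuse that image's layers as cache for a new build
DOCKER_BUILDKIT=1 docker build \
  --build-arg PYTHON_VERSION=3.10 \
  --cache-from flaskapp:dev \
  -t flaskapp:latest .
```

BuildKit can also help with reproducibility. Recent versions (BuildKit 0.11 and later) honor the SOURCE_DATE_EPOCH build arg, which pins the timestamps BuildKit records in the image metadata to a fixed value, such as the time of the last commit. That matters when you want the same image regardless of when it's built. Full reproducibility also depends on pinning your base image and dependency versions:

```bash
# Build with timestamps pinned to the last commit
DOCKER_BUILDKIT=1 docker build \
  --build-arg PYTHON_VERSION=3.10 \
  --build-arg SOURCE_DATE_EPOCH=$(git log -1 --pretty=%ct) \
  --output type=docker,name=flaskapp:stable \
  .
```

BuildKit secrets also protect sensitive information during builds. Regular build args appear in build logs and image history. Secret values, by contrast, are mounted as files, so they never appear in the build command and aren't stored in the image: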

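```bash
# Create a file with the API key (add it to .dockerignore to keep it out of the build context)
echo "my-api-key-value" > ./api-key.txt

# Build with secret (value won't appear in logs)
DOCKER_BUILDKIT=1 docker build \
  --secret id=api_key,src=./api-key.txt \
  -t flaskapp:secure .
```

In your Dockerfile, you'd access this secret with a secret mount. Use it within the same RUN step rather than copying it into the image:

```dockerfile
# The secret is only mounted for this RUN step; writing it to a file
# in the image would persist it in the layer
RUN --mount=type=secret,id=api_key \
    API_KEY="$(cat /run/secrets/api_key)" && \
    echo "API key loaded for this step (${#API_KEY} characters)"
```

These BuildKit features work together with build args to create a more secure, efficient, and reproducible build system that can adapt to virtually any containerization workflow.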

Best Practices for Enterprise Adoption

Implementing Docker build arguments across large teams requires standardized approaches, at least if you want to minimize confusion and maximize security and performance benefits.

When adopting build args in enterprise environments, start with proper argument validation.

Always provide sensible default values for every build argument to ensure builds don't fail unexpectedly when arguments aren't passed. You should also add validation checks within your Dockerfile to verify that critical build args meet your requirements.

Here's an example that validates Python version:

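```dockerfile
ARG PYTHON_VERSION=3.9
FROM python:${PYTHON_VERSION}-slim

# Redeclare so the value is visible inside this stage
ARG PYTHON_VERSION

# Validate Python version (POSIX sh: the default RUN shell has no [[ ]] or =~)
RUN case "$PYTHON_VERSION" in \
        3.[0-9]|3.[0-9][0-9]) ;; \
        *) echo "Invalid Python version: ${PYTHON_VERSION}. Must be 3.x"; exit 1 ;; \
    esac
```

Layer caching optimization is crucial for reducing build times in CI/CD pipelines.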

Order your Dockerfile instructions to maximize cache hits by placing rarely changing content (like dependency installation) before frequently changing content (like application code).

Every RUN instruction after an ARG declaration implicitly sees that arg as an environment variable. A changed value therefore invalidates the cache from its first use onward. When using build args that might change often, place them after stable operations to avoid invalidating the entire cache:

```dockerfile
FROM python:3.13-slim
WORKDIR /app

# Dependencies change less frequently, so this layer is usually cached
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Build args that might change often come AFTER stable operations
ARG BUILD_NUMBER
ARG COMMIT_SHA

# Now these args won't invalidate the dependency cache
```

Metadata management through build args improves traceability and compliance. Use build args to inject build-time metadata as labels:

```dockerfile
ARG BUILD_DATE
ARG VERSION
ARG COMMIT_SHA
ARG BUILD_URL

LABEL org.opencontainers.image.created="${BUILD_DATE}" \
      org.opencontainers.image.version="${VERSION}" \
      org.opencontainers.image.revision="${COMMIT_SHA}" \
      org.opencontainers.image.url="${BUILD_URL}"
```

These label keys are predefined annotations in the Open Container Initiative (OCI) image specification, so any tool that understands OCI metadata can read them without custom configuration.
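A CI script can compute these values right before the build. The Python sketch below uses a hypothetical helper, oci_label_build_args. It formats BUILD_DATE as an RFC 3339 UTC timestamp, which is the format the OCI spec expects for org.opencontainers.image.created. It assumes the version, commit SHA, and build URL come from your CI system.

```python
from datetime import datetime, timezone
from typing import Optional


def oci_label_build_args(version: str, commit_sha: str, build_url: str,
                         now: Optional[datetime] = None) -> list[str]:
    """Build --build-arg flags for the OCI label example above."""
    # Normalize to UTC so the timestamp ends in "Z"
    created = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    values = {
        "BUILD_DATE": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "VERSION": version,
        "COMMIT_SHA": commit_sha,
        "BUILD_URL": build_url,
    }
    flags: list[str] = []
    for name, value in values.items():
        flags += ["--build-arg", f"{name}={value}"]
    return flags


if __name__ == "__main__":
    # Hypothetical values; in CI, COMMIT_SHA often comes from `git rev-parse HEAD`
    print(oci_label_build_args("1.2.0", "abc1234", "https://ci.example.com/builds/42"))
```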

Documentation is often overlooked, but it is critical for enterprise adoption.

Create a clear README section that documents all available build args, their purpose, default values, and usage examples. Include which build args are required versus optional, and document any interactions between different arguments. Consider adding this information to your CI/CD pipeline configuration files as comments as well, to help developers understand what values they need to provide:

```yaml
# Example documented CI configuration
build_job:
  script:
    # BUILD_NUMBER: Unique identifier for this build (required)
    # ENVIRONMENT: Target deployment environment [dev|staging|prod] (required)
    # PYTHON_VERSION: Python version to use (optional, default: 3.9)
    - docker build
      --build-arg BUILD_NUMBER=${CI_PIPELINE_ID}
      --build-arg ENVIRONMENT=${DEPLOY_ENV}
      --build-arg PYTHON_VERSION=${PYTHON_VERSION:-3.9}
      -t myapp:${CI_PIPELINE_ID} .
```

For truly enterprise-grade adoption, consider implementing a build argument schema that can be versioned and validated. This approach lets you evolve your build configuration over time while maintaining backward compatibility.
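One way to do that is to keep the schema as data in your repository, next to the Dockerfile, so changes to it are versioned and reviewed like code. Here's a minimal Python sketch, assuming a hypothetical ArgSpec structure and the argument names from the CI example above. Before generating --build-arg flags, it:

- rejects unknown and missing args,
- checks allowed values and patterns (such as a 3.x rule for PYTHON_VERSION),
- fills in defaults.

```python
import re
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ArgSpec:
    """One entry in a hypothetical build-arg schema."""
    required: bool = False
    default: Optional[str] = None
    allowed: Optional[frozenset] = None  # exact values permitted, if restricted
    pattern: Optional[str] = None        # regex the whole value must match, if any


# Example schema for the CI configuration (rules are illustrative)
SCHEMA = {
    "BUILD_NUMBER": ArgSpec(required=True, pattern=r"[0-9]+"),
    "ENVIRONMENT": ArgSpec(required=True, allowed=frozenset({"dev", "staging", "prod"})),
    "PYTHON_VERSION": ArgSpec(default="3.9", pattern=r"3\.[0-9]+"),
}


def resolve_build_args(schema: Mapping[str, ArgSpec], given: Mapping[str, str]) -> dict[str, str]:
    """Validate supplied build args against the schema and fill in defaults."""
    unknown = set(given) - set(schema)
    if unknown:
        raise ValueError(f"Unknown build args: {sorted(unknown)}")
    resolved: dict[str, str] = {}
    for name, spec in schema.items():
        value = given.get(name, spec.default)
        if value is None:
            if spec.required:
                raise ValueError(f"Missing required build arg: {name}")
            continue
        if spec.allowed is not None and value not in spec.allowed:
            raise ValueError(f"{name}={value!r} is not one of {sorted(spec.allowed)}")
        if spec.pattern is not None and not re.fullmatch(spec.pattern, value):
            raise ValueError(f"{name}={value!r} does not match {spec.pattern!r}")
        resolved[name] = value
    return resolved


def to_flags(resolved: Mapping[str, str]) -> list[str]:
    """Turn validated values into docker build --build-arg flags."""
    flags: list[str] = []
    for name, value in resolved.items():
        flags += ["--build-arg", f"{name}={value}"]
    return flags


if __name__ == "__main__":
    print(to_flags(resolve_build_args(SCHEMA, {"BUILD_NUMBER": "42", "ENVIRONMENT": "staging"})))
```

Failing here, before docker build starts, gives faster feedback than a validation step inside the Dockerfile.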

Comparative Analysis: Build Args vs. Environment Variables

If you want to create secure and flexible Docker images that follow best practices, you must understand when to use build arguments and when to use environment variables.

Here's a table you can reference at any time:

| Feature | Build arguments | Environment variables |
|---|---|---|
| Availability | Only available during image build | Available during both build and container runtime |
| Persistence | Not persisted as variables in the final image (unless explicitly converted to ENV), though values used by RUN can appear in the image history | Persisted in the image and available to running containers |
| Security | Values used during the build are visible in image history with docker history | Stored in the image configuration (visible with docker inspect) and accessible to processes inside the container |
| Usage | Ideal for build-time configuration like versions, build paths, compiler flags | Ideal for runtime configuration like connection strings, feature flags, log levels |
| Declaration | Defined with the ARG instruction | Defined with the ENV instruction |
| Default values | Can provide default values: ARG NAME=default | Can provide default values: ENV NAME=value |
| Scope | Scoped to each build stage, must be redeclared after each FROM | Available to later instructions in the same stage, to stages built FROM that stage, and inherited by child images |
| Override method | --build-arg flag at build time (docker build --build-arg NAME=value) | -e flag at runtime (docker run -e NAME=value) |

The key difference lies in their timing and purpose. Build args configure the build process itself, while environment variables configure the running application. For example, you'd use a build arg to select which base image to use (ARG PYTHON_VERSION=3.10), but you'd use an environment variable to tell your application which port to listen on (ENV PORT=8080).

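From a security perspective, neither mechanism is safe for secrets baked in at build time. Build arg values used during the build can remain in the image history, and ENV values are stored in the image configuration for anyone with access to the image.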

Consider this workflow for handling different types of configuration:

```dockerfile
# Build-time configuration (non-sensitive)
ARG PYTHON_VERSION=3.13
FROM python:${PYTHON_VERSION}-slim

# Build-time secrets (use BuildKit secrets)
RUN --mount=type=secret,id=build_key \
    cat /run/secrets/build_key > /tmp/key && \
    # Use the secret for build operations
    rm /tmp/key

# Runtime configuration (non-sensitive)
ENV LOG_LEVEL=info

# Runtime secrets (should be injected at runtime, not here)
# This would be provided at runtime with:
# docker run -e API_KEY=secret123 myimage
```

In practice, a common pattern is to convert selected build args to environment variables when they need to be available at runtime:

```dockerfile
ARG APP_VERSION=1.0.0
# Make the version available at runtime
ENV APP_VERSION=${APP_VERSION}
```

For enterprise applications, the separation allows for different teams to manage different aspects of the application lifecycle. DevOps teams can modify build args for image construction, while operators can adjust environment variables during deployment without rebuilding images.
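In the application, both kinds of values are read the same way: from the environment when the container starts. That covers values converted from build args (APP_VERSION) and pure runtime settings (LOG_LEVEL, plus a hypothetical PORT). Here's a minimal Python sketch, where Settings, load_settings, and the default values are assumptions:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    app_version: str
    log_level: str
    port: int


def load_settings(environ: Mapping[str, str] = os.environ) -> Settings:
    """Read runtime configuration from environment variables."""
    return Settings(
        # Baked in at build time via ARG APP_VERSION -> ENV APP_VERSION
        app_version=environ.get("APP_VERSION", "unknown"),
        # Set in the image with ENV LOG_LEVEL=info, overridable with docker run -e
        log_level=environ.get("LOG_LEVEL", "info"),
        # Hypothetical: lets operators change the port without a rebuild
        port=int(environ.get("PORT", "5000")),
    )


if __name__ == "__main__":
    print(load_settings({"APP_VERSION": "1.0.0"}))
```

Operators can then change LOG_LEVEL or PORT with docker run -e, while APP_VERSION only changes when the image is rebuilt with a different build arg.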

Summing up Docker Build Args

Docker build args aren't just another technical feature; they're your go-to tool for creating images that work consistently across your team and environments.

I've walked through how to use them in simple Python projects, all while keeping your sensitive information safe. You've also seen how to integrate build args with tools like Docker Compose.

Think of build args as the foundation of a flexible containerization strategy. Start small by parameterizing your Python version in your next project. Once that feels comfortable, try adding conditional logic for different environments. Before you know it, you'll create sophisticated multi-stage builds that your team can customize without modifying the Dockerfile.

Ready to dive deeper? These courses from DataCamp are your best next stop:

Master Docker and Kubernetes

FAQs

What are Docker build arguments?

Docker build arguments (build args) are variables you pass to the Docker build process that are only available during image construction. They let you customize your Dockerfile without modifying it, making your container builds more flexible and reusable. Think of them as parameters you can adjust each time you build an image, perfect for handling different environments or configurations.

How do build args differ from environment variables in Docker?

Build args exist only during image construction, while environment variables persist in the running container. Build args are defined with the ARG instruction and passed via the --build-arg flag when building, making them ideal for things like selecting base images or setting build-time configurations. Environment variables, defined with ENV, are designed for runtime configuration that your application needs while running.

What security concerns should I be aware of when using Docker build args?

Build args aren't as private as many developers assume - their values are visible in image history and CI/CD logs. Never use build args for sensitive information like API keys or passwords since anyone with access to your image can see these values. For sensitive data, use Docker BuildKit secrets instead, which don't persist in the final image or history.

How can I pass build args through CI/CD pipelines like GitHub Actions?

Most CI/CD platforms support Docker build args through their configuration files. In GitHub Actions, you can pass build args in your workflow YAML using the build-args parameter under the docker/build-push-action. You can reference environment variables, repository secrets, or hardcoded values depending on your security needs. This lets you automate builds with different configurations based on branch, environment, or other CI variables.

Is there a limit to how many build args I can use in a Dockerfile?

There's no hard technical limit on the number of build args, but too many can make your Dockerfile difficult to understand and maintain. For data science projects, focus on parameterizing critical variables like Python version, framework choices, or dataset configurations. If you find yourself with more than 8-10 build args, consider breaking your Dockerfile into smaller, more focused files or using Docker Compose to manage complexity.