Course

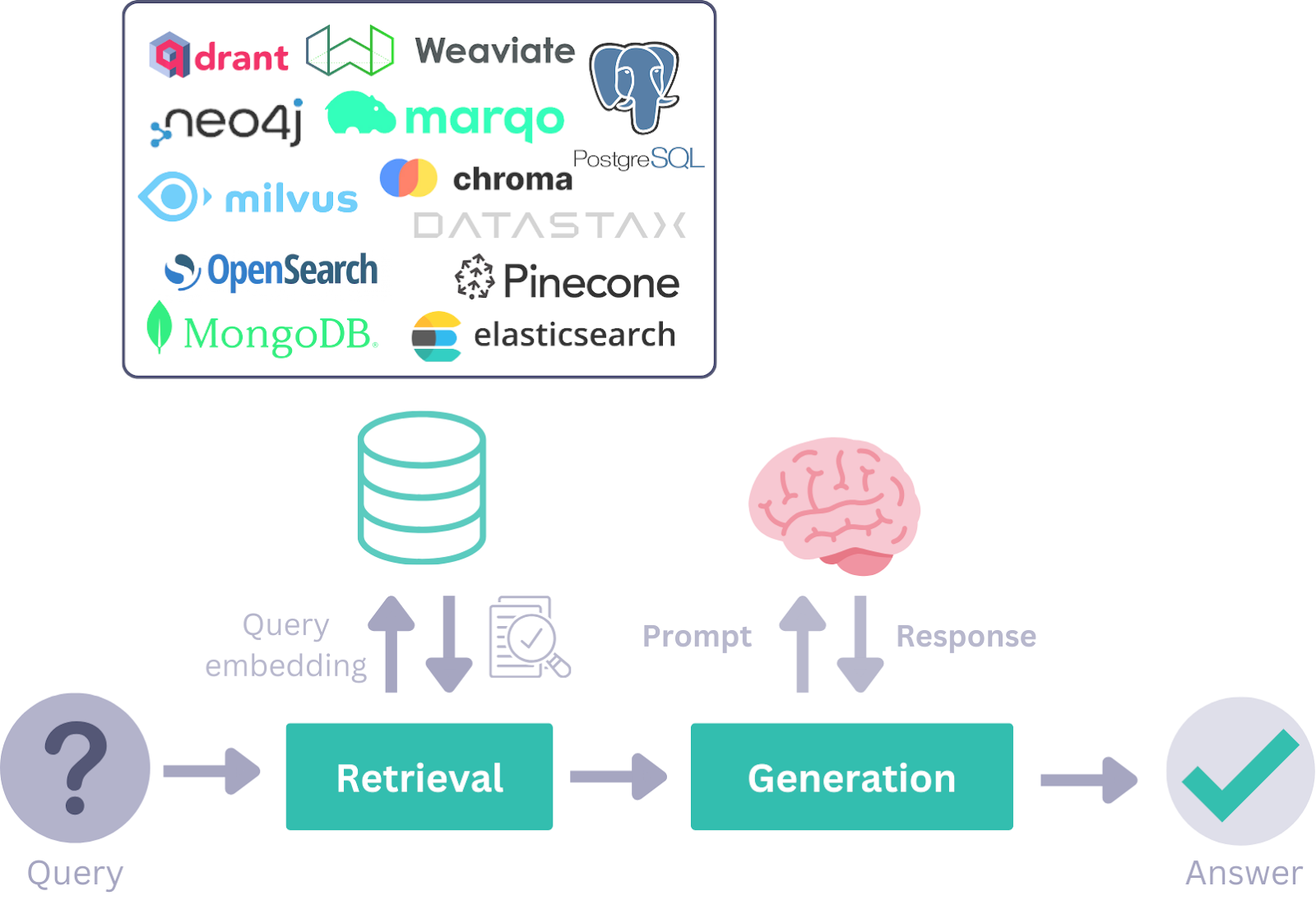

During the Retrieval-Augmented Generation (RAG) era, frameworks such as LangChain became popular for developing AI applications. However, as the world shifts towards Agentic AI workflows, frameworks like Haystack AI are becoming prominent due to their flexibility, modularity, and effectiveness in handling a wide range of use cases.

In this tutorial, we will learn about Haystack AI, explore its key components and use cases, and learn how to build an AI agentic workflow that integrates multiple tools. An agentic workflow refers to systems where language models autonomously invoke tools and components based on user queries to achieve a goal.

If you’re new to the topic, be sure to check our tutorials on Agentic AI and Agentic RAG.

We keep our readers updated on the latest in AI by sending out The Median, our free Friday newsletter that breaks down the week’s key stories. Subscribe and stay sharp in just a few minutes a week:

What is Haystack AI?

Haystack is an open-source framework designed for building highly customizable, production-ready applications powered by Large Language Models (LLMs). It enables developers to create a wide range of AI-driven systems, including RAG pipelines, agent-based workflows, state-of-the-art search systems, and even fully autonomous AI applications.

Haystack’s modular architecture allows developers to integrate leading AI technologies and tools, including OpenAI, Hugging Face Transformers, Chroma, MCP tools, Elasticsearch, and more.

It is simpler than Langchain and offers a wide array of tools for creating your components and agents with just a few lines of code.

At its core, Haystack is structured around components and pipelines, which work together with LLM providers, document stores, tools, agents, and a rich ecosystem of integrations. These building blocks provide developers with the ability to design, customize, and deploy end-to-end AI systems.

Source: Haystack Concepts Overview

Key Components of Haystack

Using the following Haystack components, you can build robust RAG workflows, agentic pipelines, or even combine both for advanced AI applications.

1. Components

Haystack offers various components for specific tasks like retrieval, generation, or document storage. These components are Python classes with callable methods that are initialized with parameters and executed using the run() method.

The Component API streamlines the process of creating custom components, including those for third-party APIs and databases.

2. Generators

Generators are responsible for producing text responses based on the prompts they receive. In the backend, these generators leverage APIs provided by LLM providers and are tailored to meet specific requirements.

There are two types of generators:

1. Chat generators: These are designed for conversational contexts and enable chat completions by interacting with a series of messages.

2. Non-chat generators: These are used for simpler text generation tasks, such as translation or summarization.

3. Retrievers

Retrieve relevant documents from a document store based on user queries. This system is customized for specific document stores, allowing them to handle unique database requirements with custom parameters. For example, the Elasticsearch document store has both retriever and document store packages available.

4. Document stores

The document storage interface in Haystack effectively manages documents. It includes functions like write_documents () and delete_documents () to handle data management. Components can easily interact with the Document Store to read or write documents. A DocumentWriter component can be used to write data into Document Stores for more complex workflows.

5. Data classes

Data classes simplify communication between components in a straightforward and modular way. Information is exchanged within the system as inputs or outputs in pipelines.

There are two types of data classes:

- Document class: This class includes text, metadata, tables, or binary data. Documents can be stored in Document Stores or transferred between components.

- Answer class: This class contains the generated answer, the original query, and related metadata.

6. Pipelines

Pipelines combine components, document stores, and integrations into customizable workflows. They support features such as simultaneous flows, standalone components, loops, as well as preprocessing, indexing, and querying steps. Pipelines can be saved in formats like YAML or TOML for reuse or sharing.

7. Agents

AI agents are autonomous systems that use large language models to make decisions and tackle complex tasks. You can build tools to connect with an API, give them to the agent, and then ask a question. The agent will analyze the query and use the appropriate tools based on your question.

Get Started with Haystack AI

In this section, we will apply Haystack's key components to build an AI Agentic workflow that will be used with the RAG and web access tool, depending on the user query.

1. Setting up the environment

For this guide, we will be using DataLab as our coding environment. To get started, we will install the following Python packages: Haystack, OpenAI, Tavil, and itertools. Here is what each package is used for:

- Haystack: For creating pipelines and agents to build LLM-based applications.

- Tavil: For accessing web search functionality via APIs.

- OpenAI: For using LLMs and embedding models.

- itertools: For advanced iteration tools and efficient looping in Python.

!pip install -qU \

"haystack-ai[agentst]" \

tavily-python \

openai \

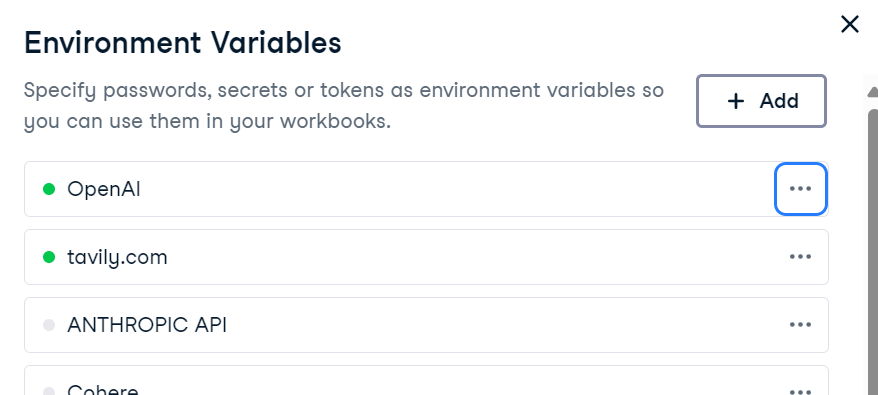

more_itertoolsTo use OpenAI and Tavily, set their API keys as environment variables. If you're using DataLab, you can add environment variables by navigating to the Environment tab and selecting the Environment Variables option.

Alternatively, you can set them programmatically in Python:

import os

os.environ["OPENAI_API_KEY"] = "sk-..." # ← paste your OpenAI key

os.environ["TAVILY_API_KEY"] = "tvly-..." # ← paste your Tavily key2. Preparing the knowledge base

We will create a knowledge base using Haystack's Document data class. This knowledge base will contain information about Islamabad city.

from haystack.dataclasses import Document

from typing import List, Any, Dict

docs: List[Document] = [

Document(content="Islamabad experiences a humid subtropical climate with hot summers and mild winters."),

Document(content="Peak tourist season in Islamabad is during spring (March to May) and autumn (September to November) due to pleasant weather."),

Document(content="Faisal Mosque, one of the largest mosques in the world, is an iconic landmark in Islamabad designed by Turkish architect Vedat Dalokay."),

Document(content="Islamabad was purpose-built as the capital of Pakistan in the 1960s, designed by Greek architect Constantinos Apostolos Doxiadis."),

Document(content="The city is known for its well-planned infrastructure, wide roads, and green spaces, making it one of the most organized cities in Pakistan."),

]3. Building the document store pipeline

The document store acts as a vector store, storing text embeddings and enabling efficient retrieval based on user queries.

Here, we will:

- Create an in-memory document store.

- Build a Haystack pipeline with components like an OpenAI embedder and a document writer.

- Convert the documents into embeddings and store them into a document store.

from haystack.document_stores.in_memory import InMemoryDocumentStore

from haystack.components.embedders import OpenAIDocumentEmbedder

from haystack.components.writers import DocumentWriter

from haystack import Pipeline, component

document_store = InMemoryDocumentStore(embedding_similarity_function="cosine")

indexing_pipeline = Pipeline()

indexing_pipeline.add_component("embedder", OpenAIDocumentEmbedder(model="text-embedding-3-small"))

indexing_pipeline.add_component("writer", DocumentWriter(document_store=document_store))

indexing_pipeline.connect("embedder", "writer")

indexing_pipeline.run({"embedder": {"documents": docs}})After running the pipeline, the documents are successfully stored as embeddings:

Calculating embeddings: 1it [00:00, 1.34it/s]

{'embedder': {'meta': {'model': 'text-embedding-3-small',

'usage': {'prompt_tokens': 128, 'total_tokens': 128}}},

'writer': {'documents_written': 5}}4. Creating the RAG Tool

Once the document store is populated, we will create a custom RAG search tool.

This tool will:

- Convert user queries into embeddings.

- Perform similarity searches in the document store.

- Retrieve relevant documents.

from haystack.tools import ComponentTool

from haystack.components.embedders import OpenAIDocumentEmbedder, OpenAITextEmbedder

from haystack.components.retrievers.in_memory import InMemoryEmbeddingRetriever

@component()

class RagSearcher:

"""Query ‑> top‑k docs from the private store"""

def __init__(self, document_store, top_k: int = 3):

self.text_embedder = OpenAITextEmbedder(model="text-embedding-3-small")

self.retriever = InMemoryEmbeddingRetriever(document_store=document_store, top_k=top_k)

@component.output_types(documents=List[Document])

def run(self, text: str) -> Dict[str, Any]:

emb_out = self.text_embedder.run(text=text)

docs_out = self.retriever.run(query_embedding=emb_out["embedding"])

return {"documents": docs_out["documents"]}

rag_tool = ComponentTool(

component=RagSearcher(document_store),

name="rag_search",

description="Semantic search over the Islamabad knowledge base."

)5. Creating a web search tool

Since Tavily does not have native Haystack components, we will create a custom web search tool using the Tavily API. This tool will fetch live web search results and return them as Document objects.

import os

import requests

from haystack import component

from haystack.dataclasses import Document

from typing import List

@component

class TavilyWebSearch:

def __init__(self, api_key: str, top_k: int = 3):

self.api_key = api_key

self.top_k = top_k

def run(self, query: str):

resp = requests.post(

"https://api.tavily.com/search",

json={

"api_key": self.api_key,

"query": query,

"max_results": self.top_k,

"include_answer": True,

},

timeout=15,

)

resp.raise_for_status()

data = resp.json()

docs: List[Document] = []

if answer := data.get("answer"):

docs.append(Document(content=answer, meta={"source": "tavily:direct_answer"}))

for hit in data.get("results", []):

docs.append(

Document(

content=hit["content"],

meta={

"title": hit["title"],

"url": hit["url"],

},

)

)

return {"documents": docs}

web_tool = ComponentTool(

component=TavilyWebSearch(api_key=os.environ["TAVILY_API_KEY"], top_k=3),

name="web_search",

description="Live web search via Tavily ."

)6. Building an Agent

We will create a generator using the OpenAI functions and the latest gpt-4.1-mini model. First, we will develop the system prompt that will help the Agent determine which tools to use for specific user requests. Finally, we will create the Agent with the generator, system prompt, and a list of tools.

Based on the system prompt, the Agent will first check the information using the RAG search. If the user’s question pertains to the latest news, weather, or breaking news, the Agent will directly use the web search tool.

from haystack.components.generators.chat import OpenAIChatGenerator

from haystack.components.agents import Agent

generator = OpenAIChatGenerator(model="gpt-4.1-mini")

system_prompt = """

You are a helpful assistant.

- Use rag_search first to retrieve information from the knowledge base.

- Use web_search only when the query requires fresh, real-time, or external information (e.g., weather, breaking news).

"""

agent = Agent(

chat_generator=generator,

system_prompt=system_prompt,

tools=[rag_tool, web_tool],

)7. Testing the RAG tool

We will ask a question about Islamabad to invoke the RAG tool to answer.

from haystack.dataclasses import ChatMessage

msg = ChatMessage.from_user("What is the peak tourist season in Islamabad?")

resp = agent.run(messages=[msg])

print(resp["messages"][-1].text)We have highly contextual awareness answers, but how do we know it has used the RAG tools to answer?

The peak tourist season in Islamabad is during spring (March to May) and autumn (September to November).8. Analyzing tools used

To check which tools are invoked, we will create a function that will extract information about the tools.

def tools_used(run_output: dict) -> list[str]:

seen, ordered = set(), []

for msg in run_output["messages"]:

for call in msg.tool_calls:

if call.tool_name not in seen:

ordered.append(call.tool_name)

seen.add(call.tool_name)

return ordered

print("Tools invoked →", tools_used(resp))For the first test, the agent only used therag_search tool to answer the question.

Tools invoked → ['rag_search']9. Testing the web tool

We will now ask about Islamabad's weather. Instead of invoking the RAG tool, it will use the web search tool to answer the question.

msg = ChatMessage.from_user("What is the temperature in Islamabad now?")

resp = agent.run(messages=[msg])

print(resp["messages"][-1].text)

print("Tools invoked →", tools_used(resp))The answer is very accurate, and it has used the web search tool to answer the question.

The current temperature in Islamabad is 31°C with a real-feel temperature of 32°C.

Tools invoked → ['web_search']If you are having issues running the code above, please refer to the Datalab Notebook: Haystack AI Tutorial — DataLab.

Haystack Use Cases

We have covered the basics of the Haystack AI framework. The next step in your learning journey is to create a fully functional AI application. Here are some of the use cases developers are exploring:

- Multimodal AI: Develop systems that process and integrate various input types, including text and images, for applications such as image captioning, audio transcription, and image generation.

- Conversational AI: Provide chat interfaces for multi-turn, dynamic chatbots that can engage in meaningful conversations.

- Agentic pipelines: Use functional calling to enable language models to interact with tools and access external capabilities.

- Advanced RAG: Combine document retrieval with LLM-based generation to implement a variety of retrieval and generation strategies.

- MCP tools integration: Seamlessly connect LLMs with external tools, APIs, and data sources using the Model Context Protocol.

- Model evaluation: Evaluate pipelines for accuracy, relevance, and reliability.

- Multi-agent applications: Create systems where multiple agents collaborate effectively to solve complex, multi-step tasks.

Final Thoughts

Having worked with many LLM frameworks, I find Haystack to be one of the most intuitive and flexible options available. It feels more natural to use, less complex, and offers greater control over your workflows.

One of its standout features is the ease with which you can create custom components for your pipelines, making it highly adaptable to a wide range of use cases.

I particularly enjoyed experimenting with agents and tools and understanding how to invoke each one effectively. Working with the system prompt helped me understand how to use the tools individually and in sequence.

Furthermore, the system prompt can be easily customized to include additional details, such as displaying sources and document IDs, which can be important for your application.

If you’re new to the world of AI agents, check out the resources below to learn more:

Multi-Agent Systems with LangGraph

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.