The SOLAR-10.7B project represents a significant leap forward in the development of large language models, introducing a new approach to scaling these models in an effective and efficient manner.

This article explains what the SOLAR-10.7B model is, compares its performance with other large language models, and walks through the process of using its specialized fine-tuned version. It closes with the potential applications of the fine-tuned SOLAR-10.7B-Instruct model and its limitations.

What is SOLAR-10.7B?

SOLAR-10.7B is a 10.7-billion-parameter model developed by a team at Upstage AI in South Korea.

Based on the Llama-2 architecture, this model is reported to surpass other large language models with up to 30 billion parameters, as well as the Mixtral 8X7B model.

To learn more about Llama-2, our article Fine-Tuning LLaMA 2: A Step-by-Step Guide to Customizing the Large Language Model provides a step-by-step guide to fine-tune Llama-2, using new approaches to overcome memory and computing limitations for better access to open-source large language models.

Building upon the foundation of SOLAR-10.7B, the SOLAR-10.7B-Instruct model is fine-tuned with an emphasis on following complex instructions. This variant demonstrates enhanced performance, showcasing the model's adaptability and the effectiveness of fine-tuning in achieving specialized objectives.

Finally, SOLAR-10.7B introduces a scaling method called Depth Up-Scaling, which the following section explores.

The Depth Up-Scaling method

Depth Up-Scaling increases the depth of the model's neural network by reusing the layers and weights of an existing pre-trained model rather than training a larger model from scratch. This strategy improves both the efficiency and the overall performance of the model.

Essential elements of Depth Up-Scaling

Depth Up-Scaling is based on three main components: (1) Mistral 7B weights, (2) the Llama 2 framework, and (3) continued pre-training.

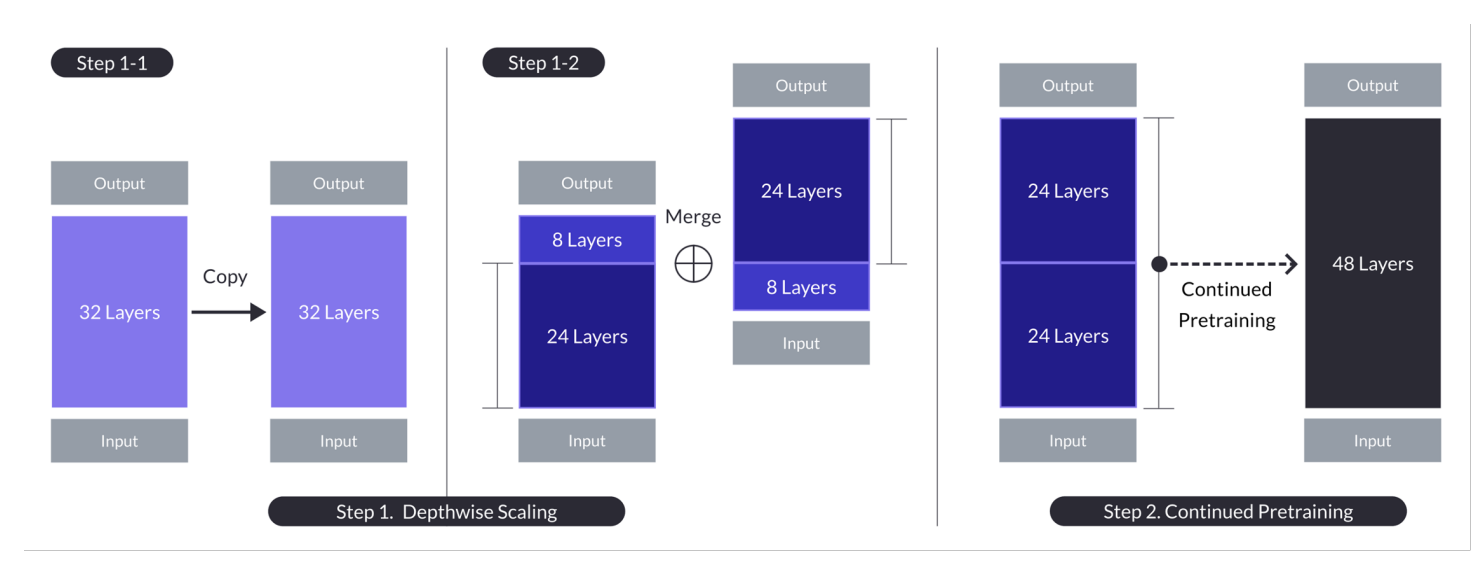

Depth up-scaling for the case with n = 32, s = 48, and m = 8. Depth up-scaling is achieved through a dual-stage process of depthwise scaling followed by continued pretraining. (Source)

Base Model:

- Utilizes a 32-layer transformer architecture, specifically the Llama 2 model, initialized with pre-trained weights from Mistral 7B.

- Chosen for its compatibility and performance, aiming to leverage community resources and introduce novel modifications for enhanced capabilities.

- Serves as the foundation for depthwise scaling and further pretraining to scale up efficiently.

Depthwise Scaling:

- Scales the base model by setting a target layer count for the scaled model, considering hardware capabilities.

- Involves duplicating the base model, removing the final m layers from the original and the initial m layers from the duplicate, then concatenating them to form a model with s layers.

- This process produces a scaled model sized to fit between 7 and 13 billion parameters. Specifically, with a base of n = 32 layers and m = 8 layers removed from each copy, the result has s = 2 × (32 - 8) = 48 layers.
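The depthwise scaling step described above can be sketched on a plain list of layer indices. This is a minimal illustration, not the authors' implementation: the function name `depthwise_scale` is hypothetical, and a real model would copy the layer modules and their weights rather than integers.

```python
def depthwise_scale(layers, m):
    """Return the layer sequence produced by depthwise scaling.

    layers: the n layers of the base model (here, just a list of indices).
    m: number of layers removed from each copy at the seam.
    """
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < n")
    original_part = layers[: n - m]  # original copy without its final m layers
    duplicate_part = layers[m:]      # duplicate copy without its initial m layers
    return original_part + duplicate_part


base = list(range(32))               # n = 32 layers, indexed 0..31
scaled = depthwise_scale(base, m=8)

print(len(scaled))                   # 48, i.e. s = 2 * (n - m)
print(scaled[22:26])                 # [22, 23, 8, 9], the seam between the copies
```

The seam shows why performance initially drops: layer 23 of the original is followed directly by layer 8 of the duplicate, a discontinuity that continued pretraining is meant to smooth out.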

Continued Pretraining:

- Addresses the initial performance drop after depthwise scaling by continuing to pre-train the scaled model.

- Rapid performance recovery was observed during continued pre-training, which was attributed to reducing heterogeneity and discrepancies in the model.

- Continued pre-training is crucial for regaining and potentially surpassing the base model's performance, leveraging the depthwise scaled model's architecture for effective learning.

These summaries highlight the key strategies and outcomes of the Depth Up-Scaling approach, focusing on leveraging existing models, scaling through depth adjustment, and enhancing performance through continued pretraining.

With this multifaceted approach, SOLAR-10.7B matches and, in many cases, exceeds the capabilities of much larger models. This efficiency makes it a strong choice for a range of specific applications, showcasing its strength and flexibility.

How Does the SOLAR-10.7B-Instruct Model Work?

SOLAR-10.7B-Instruct excels at interpreting and executing complex instructions, making it valuable in scenarios where precise understanding and responsiveness to human commands are crucial. This capability is essential for developing more intuitive and interactive AI systems.

- SOLAR-10.7B-Instruct results from fine-tuning the original SOLAR-10.7B model to follow instructions in QA format.

- The fine-tuning mostly uses open-source datasets along with synthesized math QA datasets to enhance the model’s mathematical skills.

- The first version of SOLAR-10.7B-Instruct is created by integrating the Mistral 7B weights to strengthen its learning capabilities for effective and efficient information processing.

- The backbone of SOLAR-10.7B is the Llama 2 architecture, which provides a blend of speed and accuracy.

Overall, the fine-tuned SOLAR-10.7B model's importance lies in its enhanced performance, adaptability, and potential for widespread application, driving forward the fields of natural language processing and artificial intelligence.

Potential Applications of the Fine-Tuned SOLAR-10.7B Model

Before diving into the technical implementation, let’s explore some of the potential applications of a fine-tuned SOLAR-10.7B model.

The examples below cover personalized education and tutoring, enhanced customer support, and automated content creation.

- Personalized education and tutoring: SOLAR-10.7B-Instruct can revolutionize the educational sector by providing personalized learning experiences. It can understand complex student queries, offering tailored explanations, resources, and exercises. This capability makes it an ideal tool for developing intelligent tutoring systems that adapt to individual learning styles and needs, enhancing student engagement and outcomes.

- Better customer support: SOLAR-10.7B-Instruct can power advanced chatbots and virtual assistants capable of understanding and resolving complex customer inquiries with high accuracy. This application not only improves customer experience by providing timely and relevant support but also reduces the workload on human customer service representatives by automating routine queries.

- Automated content creation and summarization: For media and content creators, SOLAR-10.7B-Instruct offers the ability to automate the generation of written content, such as news articles, reports, and creative writing. Additionally, it can summarize extensive documents into concise, easy-to-understand formats, making it invaluable for journalists, researchers, and professionals who need to quickly assimilate and report on large volumes of information.

These examples underscore the versatility and potential of SOLAR-10.7B-Instruct to impact and improve efficiency, accessibility, and user experience across a broad spectrum of industries.

A Step-By-Step Guide to Using SOLAR-10.7B-Instruct

We have enough background about the SOLAR-10.7B model, and it is time to get our hands dirty.

This section provides the instructions needed to run the SOLAR-10.7B-Instruct-v1.0 model from Upstage.

The code is adapted from the official documentation on Hugging Face. The main steps are defined below:

- Install and import the necessary libraries

- Define the SOLAR-10.7B model to use from Hugging Face

- Run the model inference

- Generate the result from the user’s request

Libraries Installation

The main libraries used are transformers and accelerate.

- The transformers library provides access to pre-trained models, and the version specified here is 4.35.2.

- The accelerate library is designed to simplify running machine learning models on different hardware (CPUs, GPUs) without having to deeply understand the hardware specifics.

%%bash

pip -q install transformers==4.35.2

pip -q install accelerate

Import Libraries

Now that the installation is completed, we proceed by importing the following necessary libraries:

- torch is the PyTorch library, a popular open-source machine learning library used for applications like computer vision and NLP.

- AutoModelForCausalLM is used to load a pre-trained model for causal language modeling, and AutoTokenizer is responsible for converting text into a format that the model can understand (tokenization).

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

GPU Configuration

The model used here is version 1.0 of the SOLAR-10.7B-Instruct model from Hugging Face.

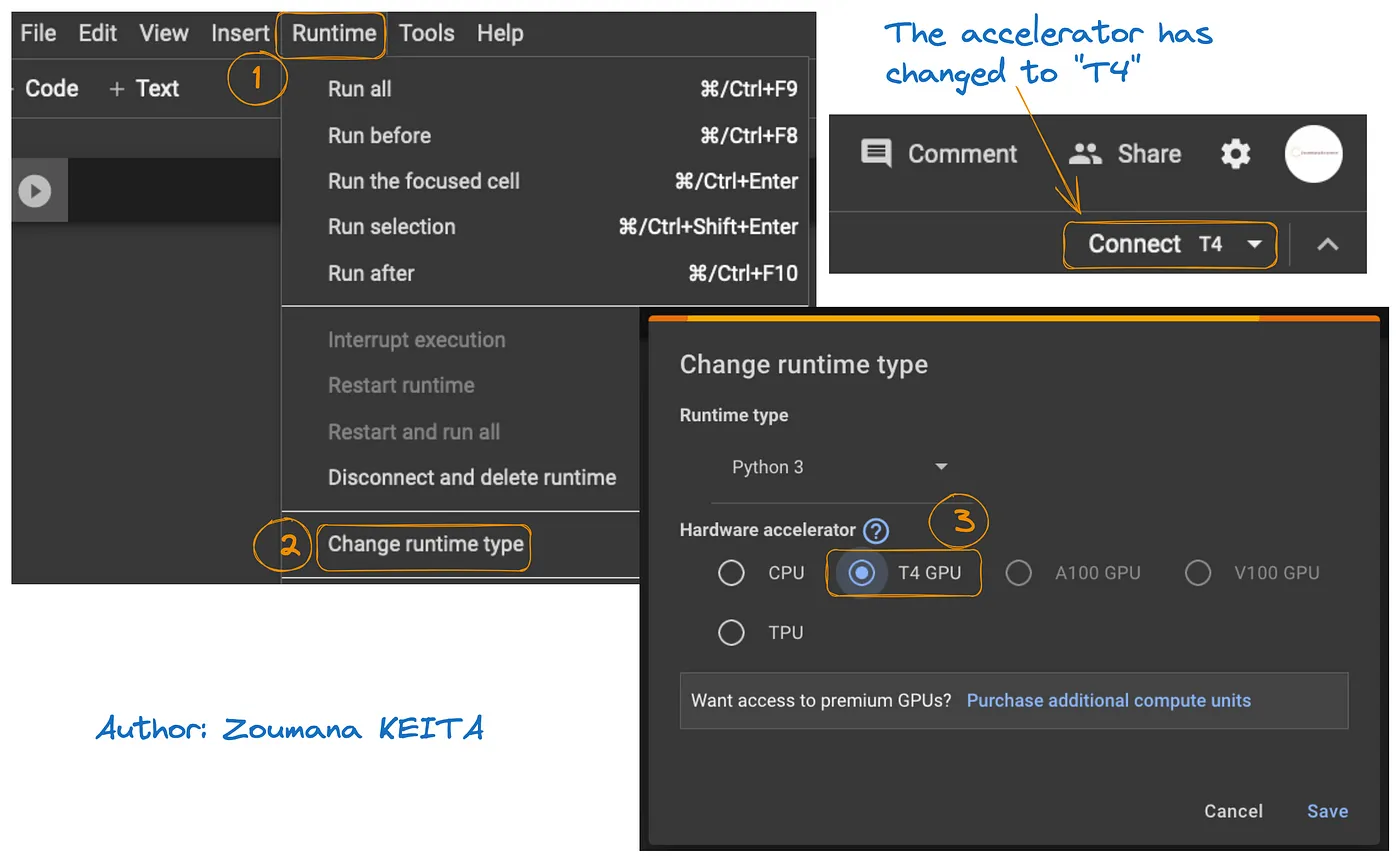

A GPU resource is necessary for speeding up the model loading and inference process.

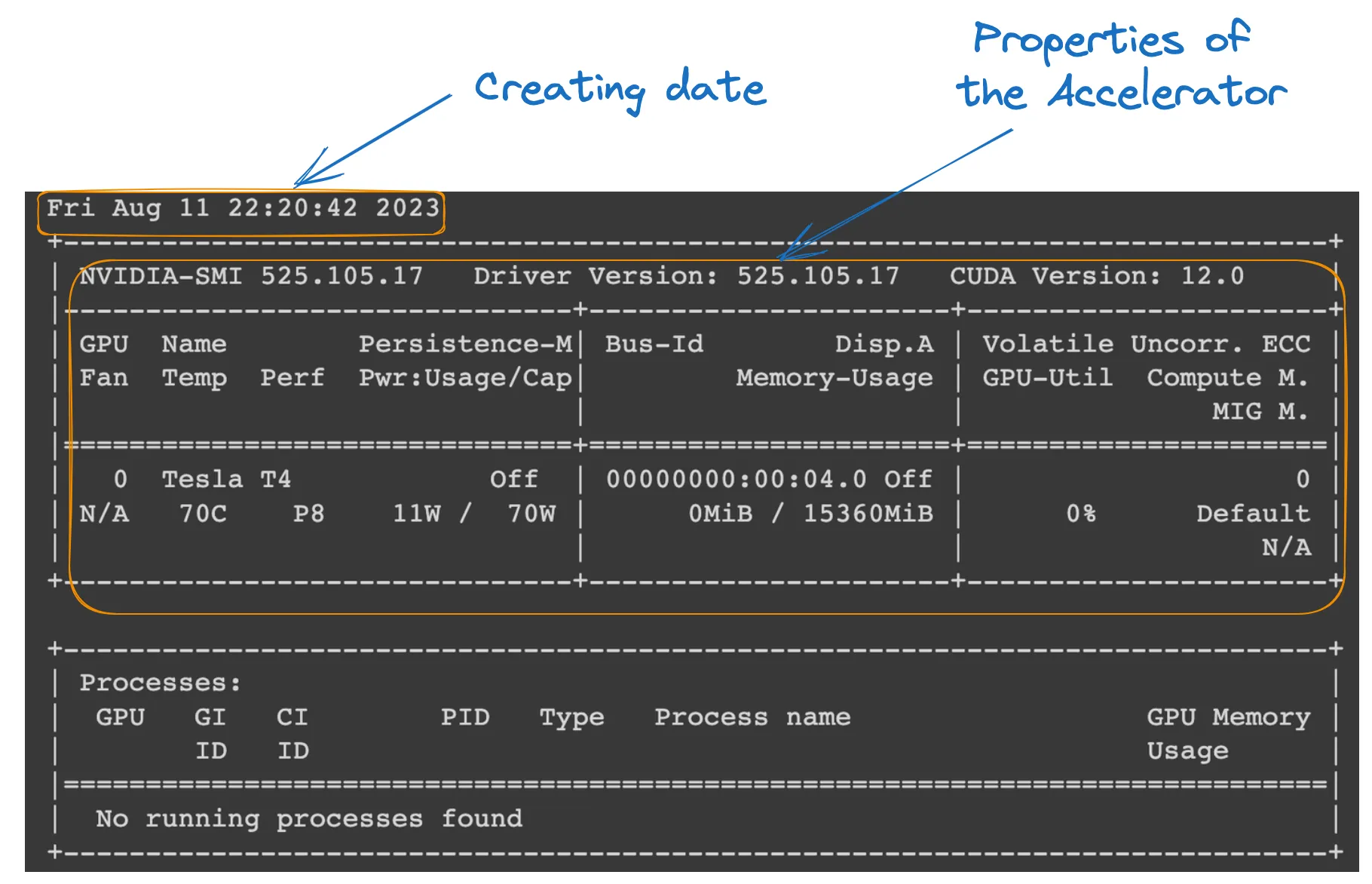

To enable a GPU on Google Colab:

- From the Runtime tab, select Change runtime type

- Then, choose T4 GPU from the Hardware accelerator section and Save the changes

This switches the default runtime to T4.

We can check the properties of the runtime by running the following command from the Colab notebook.

!nvidia-smi

GPU properties
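The same check can also be made from Python. The sketch below is one possible way to do it, assuming PyTorch is installed. The helper name `describe_device` is hypothetical, and the import is guarded so the snippet also runs where PyTorch is missing.

```python
def describe_device():
    """Return a short description of the accelerator visible to PyTorch."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "No CUDA GPU detected; running on CPU"

    # Properties of the first visible GPU (index 0)
    props = torch.cuda.get_device_properties(0)
    total_gib = props.total_memory / 1024**3
    return f"{props.name} with {total_gib:.1f} GiB of memory"


print(describe_device())
```

On a Colab runtime switched to T4, the printed line should name the T4 GPU. On a CPU-only runtime, it reports that no CUDA GPU was detected.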

Model definition

Everything is set up; we can proceed with loading the model as follows:

model_ID = "Upstage/SOLAR-10.7B-Instruct-v1.0"

tokenizer = AutoTokenizer.from_pretrained(model_ID)

model = AutoModelForCausalLM.from_pretrained(
    model_ID,
    device_map="auto",
    torch_dtype=torch.float16,
)

- model_ID is a string that uniquely identifies the pre-trained model we want to use. In this case, "Upstage/SOLAR-10.7B-Instruct-v1.0" is specified.

- AutoTokenizer.from_pretrained(model_ID) loads the tokenizer associated with the specified model_ID, preparing it for processing text input.

- AutoModelForCausalLM.from_pretrained() loads the causal language model itself, with device_map="auto" to automatically use the best available hardware (the GPU we have set up), and torch_dtype=torch.float16 for using 16-bit floating-point numbers to save memory and potentially speed up computations.
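To see why 16-bit weights matter here, the memory needed for the weights alone can be estimated as the number of parameters times the bytes per parameter. The sketch below is back-of-the-envelope arithmetic under that assumption. It ignores activations, the generation cache, and framework overhead, and the helper name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Approximate memory needed to hold the weights, in GiB."""
    return num_params * bytes_per_param / 1024**3


params = 10.7e9                        # 10.7 billion parameters
fp16 = weight_memory_gib(params, 2)    # float16 uses 2 bytes per parameter
fp32 = weight_memory_gib(params, 4)    # float32 uses 4 bytes per parameter

print(f"float16: {fp16:.1f} GiB")      # float16: 19.9 GiB
print(f"float32: {fp32:.1f} GiB")      # float32: 39.9 GiB
```

Even in float16, the weights are larger than the 16 GB of memory on a T4. With device_map="auto", layers that do not fit on the GPU are typically placed on the CPU or disk, so loading can still succeed but inference may be noticeably slower.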

Model inference

Before generating a response, the input text (user's request) is formatted and tokenized.

- user_request contains the question or input for the model.

- conversation formats the input as part of a conversation, tagging it with a role (e.g., 'user').

- apply_chat_template applies a conversational template to the input, preparing it for the model in a format it understands.

- tokenizer(prompt, return_tensors="pt") converts the prompt into tokens and specifies the tensor type ("pt" for PyTorch tensors), and .to(model.device) ensures the input is on the same device (CPU or GPU) as the model.

user_request = "What is the square root of 24?"

conversation = [{'role': 'user', 'content': user_request}]

prompt = tokenizer.apply_chat_template(conversation, tokenize=False, add_generation_prompt=True)

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

Result generation

The final section uses the model to generate a response to the input question and then decodes and prints the generated text.

- model.generate() generates text based on the provided inputs, with use_cache=True to speed up generation by reusing previously computed attention keys and values. max_length=4096 caps the total sequence length, counting both the prompt tokens and the generated tokens.

- tokenizer.decode(outputs[0]) converts the generated tokens back into human-readable text.

- print statement displays the generated answer to the user's question.

outputs = model.generate(**inputs, use_cache=True, max_length=4096)

output_text = tokenizer.decode(outputs[0])

print(output_text)

The successful execution of the code above generates the following result:

To try another prompt, replace the user request with the following text and rerun the inference and generation steps:

user_request = "Tell me a story about the universe"
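Note that for a causal language model, the sequence returned by model.generate() starts with the prompt tokens, so tokenizer.decode(outputs[0]) prints the prompt and special tokens along with the answer. One possible way to print only the answer is sketched below. The helper name `decode_new_tokens` is hypothetical, and the commented usage lines assume the tokenizer, inputs, and outputs objects defined earlier in this guide.

```python
def decode_new_tokens(tokenizer, output_ids, prompt_length):
    """Decode only the tokens that were generated after the prompt."""
    new_ids = output_ids[prompt_length:]  # drop the echoed prompt tokens
    return tokenizer.decode(new_ids, skip_special_tokens=True)


# Usage with the objects from this guide (not executed here):
# prompt_length = inputs["input_ids"].shape[1]
# answer = decode_new_tokens(tokenizer, outputs[0], prompt_length)
# print(answer)
#
# To bound only the answer length, max_new_tokens can replace max_length.
# The value 256 is an arbitrary illustrative choice:
# outputs = model.generate(**inputs, use_cache=True, max_new_tokens=256)
```

Slicing by the prompt length avoids string matching on the decoded text, which could break if the chat template adds or strips whitespace.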

Limitations of the SOLAR-10.7B model

Despite its benefits, SOLAR-10.7B has limitations like any other large language model. The main ones are highlighted below:

- Limited hyperparameter exploration: the hyperparameters used during Depth Up-Scaling (DUS) were not explored thoroughly. Because of hardware limitations, 8 layers were removed from the base model without a wider search over alternative values.

- Computationally demanding: the model requires significant computational resources, which limits its use by individuals and organizations with lower computational capabilities.

- Vulnerability to bias: potential bias in the training data could impact the performance of the model for some use cases.

- Environmental concern: training the model and running inference require significant energy consumption, which can raise environmental concerns.

Conclusion

This article has explored the SOLAR-10.7B model, highlighting its contribution to artificial intelligence through the depth up-scaling approach. It has outlined the model’s operation and potential applications, and provided a practical guide for its use, from installation to generating results.

Despite its capabilities, the article also addressed the SOLAR-10.7B model's limitations, ensuring a well-rounded perspective for users. As AI continues to evolve, SOLAR-10.7B exemplifies the strides made toward more accessible and versatile AI tools.

For those looking to delve deeper into AI's potential, our tutorial, FLAN-T5 Tutorial: Guide and Fine-Tuning, offers a complete guide to fine-tuning a FLAN-T5 model for a question-answering task using the transformers library and running optimized inference on a real-world scenario. You can also find our Fine-Tuning GPT-3.5 tutorial and our code-along on fine-tuning your own Llama 2 model.

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master’s degrees: the first in computer science with a focus on Machine Learning from Paris, France, and the second in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana then joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. As of now, he is working as a Senior Data Scientist at IFC, the World Bank Group.