
Every time we click, post, or stream something, we create data that can be processed into meaningful insights and predictions with the right tools. By 2028, it’s estimated that we’ll generate around 394 zettabytes of data annually (source: Statista).

Being able to process that data in real time allows us to take instant action, such as detecting fraud or making personalized recommendations. That’s where Apache Kafka comes into play. It empowers businesses to update their data strategies with event streaming architecture.

This guide will explore how to learn Apache Kafka from scratch. I’ll help you craft a learning plan, share my best tips for learning it effectively, and provide useful resources to help you find roles that require Apache Kafka.

Introduction to Apache Kafka

What Is Apache Kafka?

Processing data in real time gives us great opportunities. If a bank detects a weird purchase with our credit card, they can stop the transaction immediately. Or, if we are searching for a product, product suggestions can pop up as we browse the website. This speed means better decisions, happier customers, and a more efficient business.

Apache Kafka is a high-throughput, distributed data store optimized for collecting, processing, storing, and analyzing streaming data in real time. It allows different applications to publish and subscribe to streams of data, enabling them to communicate and react to events as they happen.

Because it efficiently manages data streams, Kafka has become very popular. It is used in different systems, from online shopping and social media to banking and healthcare.

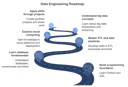

What makes Apache Kafka popular?

Apache Kafka has become popular among those data practitioners who face the challenges of handling real-time data streams. We can explain its popularity by several key factors:

- High throughput: Kafka can handle huge amounts of data with great speed and efficiency, making it ideal for processing data streams in real time.

- Fault tolerance: Kafka's distributed architecture replicates our data across multiple brokers, which makes our applications resilient to failures and keeps the data durable.

- Scalability: Kafka can easily scale to handle growing data volumes and user demands by adding more brokers to the cluster.

- Real-time processing: Kafka's ability to handle real-time data streams enables our applications to respond to events as they occur, giving us real-time decision-making opportunities.

- Persistence: Kafka stores data durably, allowing our applications to access historical data for analysis and auditing purposes.

Main features of Apache Kafka

Apache Kafka is widely used in applications that need to process and analyze data in real time. But what features make it a powerful tool for handling streaming data? Let’s have a look at them:

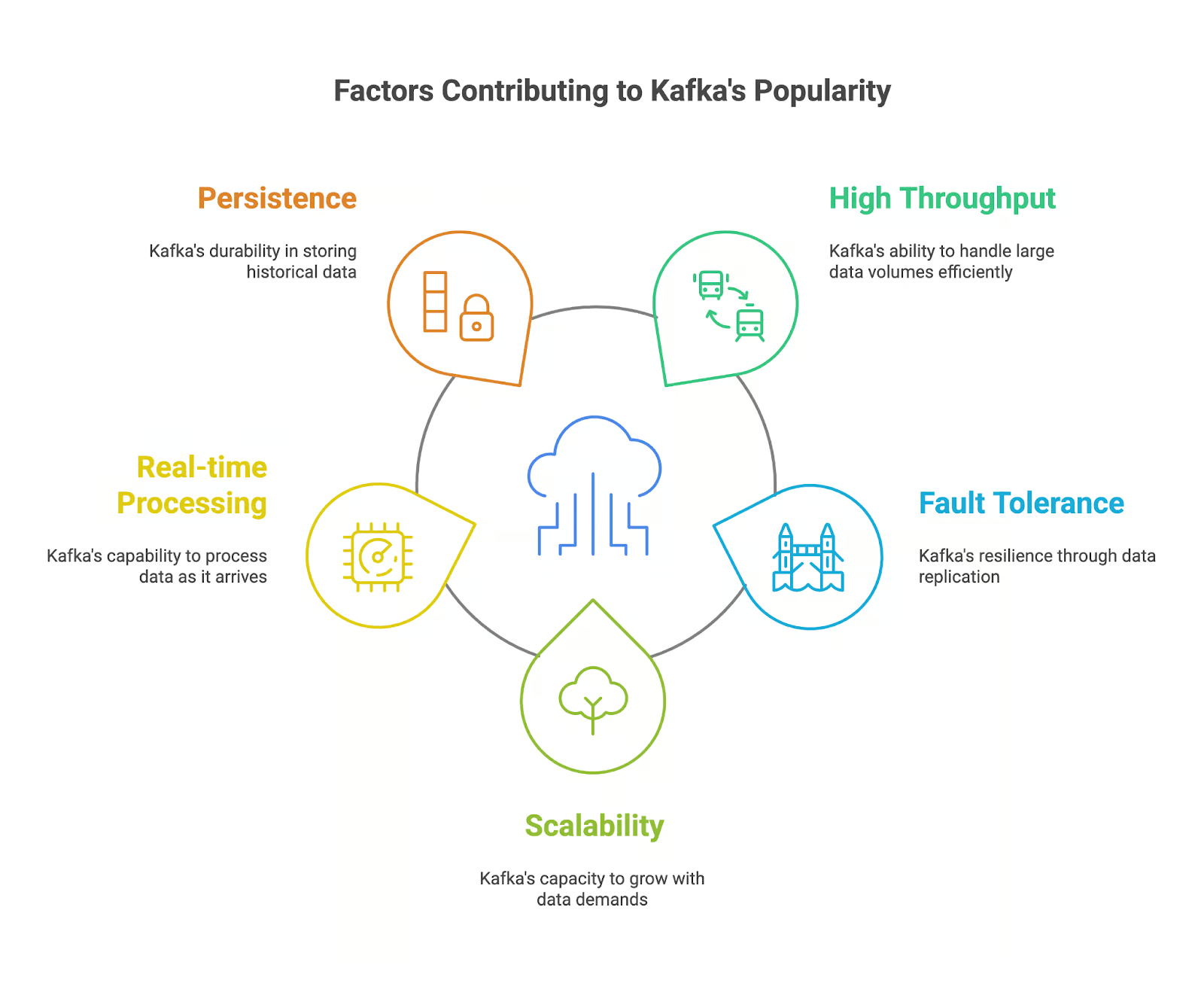

- Publisher-subscriber model: Kafka follows a publisher-subscriber model, where producers publish data on topics, and consumers subscribe to these topics to receive data.

- Topics and partitions: Data in Kafka is organized into topics, further divided into partitions for scalability and parallelism.

- Brokers and clusters: Kafka brokers are responsible for storing and serving data. A Kafka cluster consists of multiple brokers working together to provide fault tolerance and high availability.

- Producers and consumers: Producers publish data to Kafka topics, while consumers subscribe to topics and process the data.

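- Cluster coordination (ZooKeeper or KRaft): Older Kafka versions rely on ZooKeeper to manage cluster metadata and coordinate brokers. Newer versions replace it with KRaft, Kafka's built-in consensus protocol, and Kafka 4.0 removed ZooKeeper support entirely.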

The publisher-subscriber model that Kafka relies on. Source: Amazon

Why Is Learning Apache Kafka so Useful?

Learning Apache Kafka makes you a valuable candidate for employers and companies that generate and process data at high rates. Apache Kafka, with its powerful features and widespread use, has become a key tool for building modern data architectures and applications.

There is a demand for skills in Apache Kafka

I’ve mentioned that Kafka has become very popular in recent years. It’s estimated that Apache Kafka is currently used by over 80% of the Fortune 100 companies.

The increasing usage of Kafka across various industries, along with its role in enabling real-time data solutions, makes Kafka skills highly valuable in the job market. According to PayScale and ZipRecruiter, as of November 2024, the average salary of an engineer with Kafka skills in the United States is $100,000 per year.

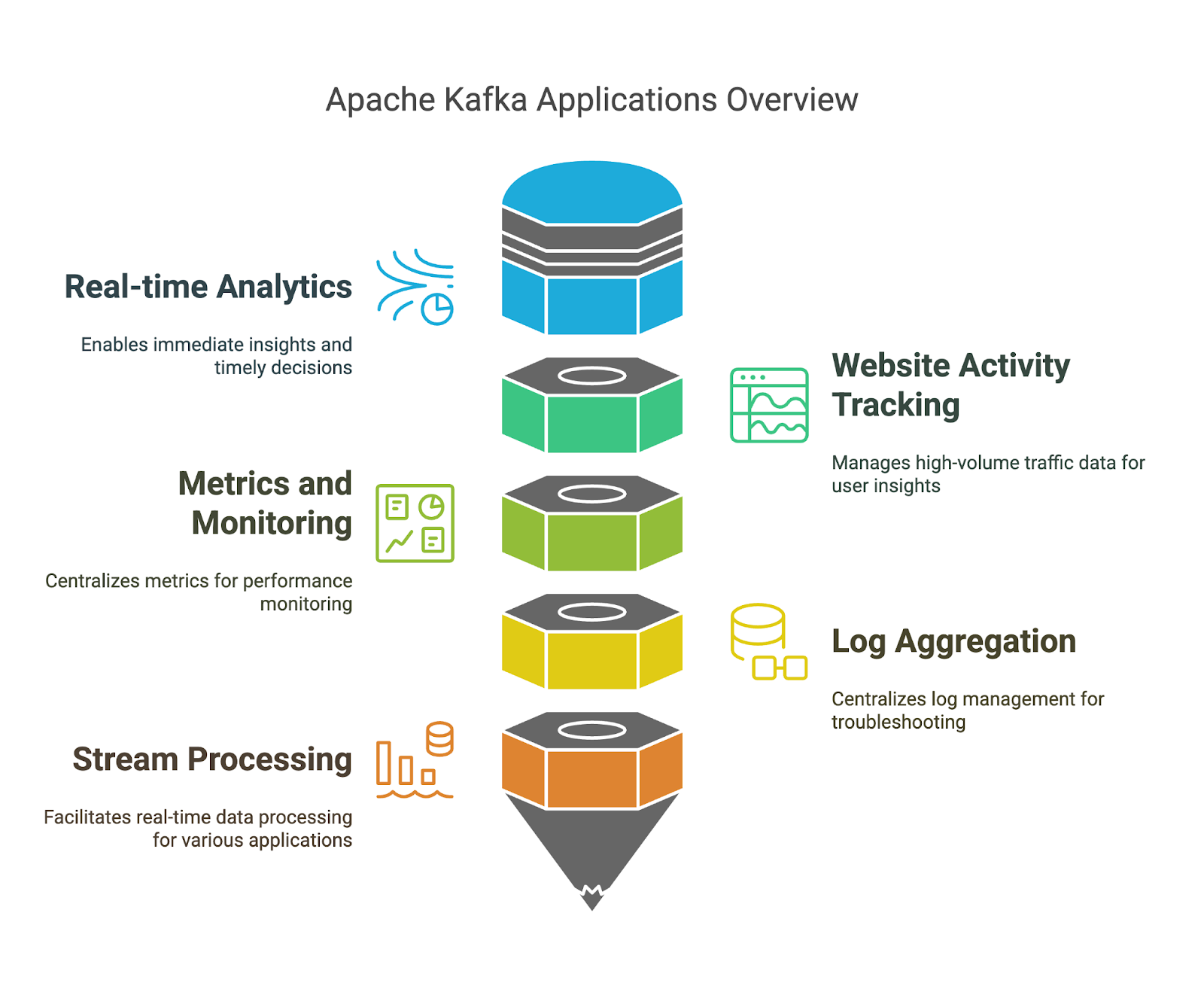

Apache Kafka has a variety of applications

We’ve already mentioned the strengths of Apache Kafka, but let’s look at a few specific examples of where you can use it:

- Real-time analytics: Using Kafka, we can perform real-time data ingestion and processing which helps us gain immediate insights from streaming data and make timely decisions.

- Website activity tracking: Because Kafka can handle high-volume website traffic data, we can understand user behavior, website performance, and popular content.

- Metrics and monitoring: Kafka can collect and process metrics from various systems and applications. We can take advantage of its centralized platform for monitoring performance and identifying potential issues.

- Log aggregation: Kafka can aggregate logs from multiple sources. We can leverage its centralized log management and analysis for troubleshooting and security monitoring.

- Stream processing: Kafka Streams allows us to build applications that process data in real time. This allows us to develop efficient models for fraud detection, anomaly detection, and real-time recommendations.

How to Learn Apache Kafka from Scratch in 2026

If you learn Apache Kafka methodically, you are more likely to succeed. Let’s focus on a few principles you can use in your learning journey.

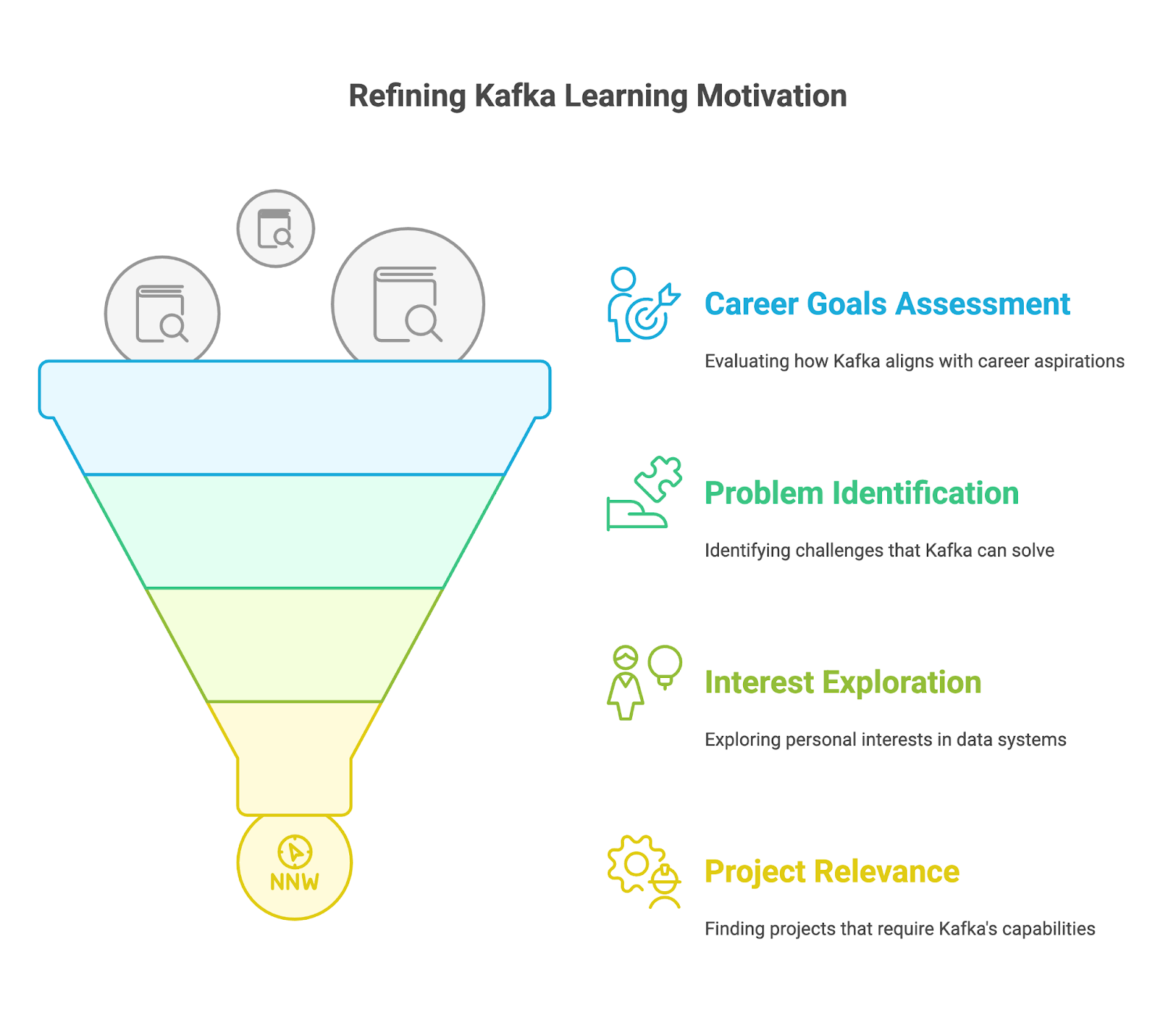

1. Understand why you’re learning Apache Kafka

Before you learn technical details, define your motivation for learning Apache Kafka. Ask yourself:

- What are my career goals?

  - Is Kafka a skill you need to advance in your current role or land a dream job?

  - Are you aiming for a career transition into big data engineering, real-time application development, or data streaming?

  - Which opportunities do you see opening up by mastering Kafka? Think about specific roles like Kafka Engineer, Data Architect, or Software Engineer with a focus on real-time systems.

- What problems am I trying to solve?

  - Are you facing challenges with existing messaging systems that Kafka could potentially address?

  - Do you need to build applications that can handle the complexities of real-time data streams?

  - Are you processing high volumes of events from IoT devices, social media feeds, or financial transactions?

  - Are you struggling to integrate data from different sources and systems reliably and efficiently?

- What interests me?

  - Does the idea of building highly scalable and fault-tolerant distributed systems excite you?

  - Are you interested in processing data in real-time and the potential it unlocks for businesses and applications?

  - Are you interested in exploring the broader ecosystem of big data technologies and how Kafka fits into the picture?

- Do I have a specific project in mind that requires Kafka's capabilities?

  - Are you working on a personal project that involves real-time data streaming, such as building a chat application?

  - Does your company have an upcoming project that requires Kafka expertise, such as migrating to a new messaging system, implementing real-time analytics, or building a microservices architecture?

2. Start with the basics of Apache Kafka

After you identify your goals, master the basics of Kafka and understand how its core pieces work.

Installing Apache Kafka

To learn Apache Kafka, we first need to get it on our local machine. Because Kafka is developed using Java, we should ensure we have Java installed. Then, we need to download the Kafka binaries from the official website and extract them.

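Next, we start the Kafka server using the provided scripts. Older releases require starting ZooKeeper first, while Kafka 4.0 and later run in KRaft mode and need no ZooKeeper at all, so follow the quickstart for the version you downloaded. Finally, we can use the command-line tools to create topics and try producing and consuming some messages to grasp the basics. DataCamp's Apache Kafka for Beginners article can help you get up to speed.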
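Once the server is running, a quick sanity check is to confirm that something is listening on the broker port. The sketch below uses only Python's standard library and assumes the broker listens on `localhost:9092`, the port used in Kafka's sample configuration; adjust the host and port if your setup differs. It only tests TCP reachability and says nothing about whether the process behind the port is a healthy Kafka broker.

```python
import socket


def broker_reachable(host: str = "localhost", port: int = 9092, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


status = "reachable" if broker_reachable() else "not reachable"
print(f"Broker on localhost:9092 is {status}")
```

If the check reports "not reachable", the server logs printed by the start script are usually the first place to look.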

Understanding the publisher-subscriber model

The first concept we should learn is the publisher-subscriber model in Kafka. This is one of the key reasons why Apache Kafka can handle streaming data efficiently. Understand how producers publish messages and consumers subscribe to these topics, receiving only messages relevant to their interests.

DataCamp's Introduction to Apache Kafka course provides an introduction, offering interactive exercises and practical examples.
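To make the model concrete, here is a toy in-memory version of publish and subscribe, written in plain Python. It is not the Kafka client API: `ToyBroker`, `publish`, and `poll` are hypothetical names for illustration, and there is no networking, partitioning, or persistence. What it does show is the core idea that a topic is an append-only log and that each subscriber keeps its own read position (offset).

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a broker: one append-only list per topic."""

    def __init__(self):
        self._logs = defaultdict(list)      # topic -> list of messages
        self._offsets = defaultdict(int)    # (consumer, topic) -> next offset to read

    def publish(self, topic, message):
        """Append a message to the topic log and return its offset."""
        self._logs[topic].append(message)
        return len(self._logs[topic]) - 1

    def poll(self, consumer, topic):
        """Return messages the consumer has not seen yet and advance its offset."""
        log = self._logs[topic]
        start = self._offsets[(consumer, topic)]
        self._offsets[(consumer, topic)] = len(log)
        return log[start:]


broker = ToyBroker()
broker.publish("orders", "order-1")
broker.publish("orders", "order-2")
broker.publish("payments", "payment-1")

billing_first = broker.poll("billing", "orders")    # ['order-1', 'order-2']
broker.publish("orders", "order-3")
billing_second = broker.poll("billing", "orders")   # ['order-3']
shipping_all = broker.poll("shipping", "orders")    # ['order-1', 'order-2', 'order-3']
print(billing_first, billing_second, shipping_all)
```

Because reading does not remove messages from the log, the "shipping" subscriber still sees every order even though "billing" already consumed them. Neither subscriber receives anything from the "payments" topic unless it asks for it.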

Exploring Kafka components

When describing the publisher-subscriber model, we have mentioned some key Kafka components: Producer and Consumer. However, we should explore all core components. We should learn how Kafka organizes data into topics and partitions, and understand how partitions contribute to scalability and fault tolerance.

We should also understand how brokers form a cluster to provide high availability and fault tolerance.

Check DataCamp's Introduction to Apache Kafka course as well as the Apache Kafka for Beginners article for a clear picture of these topics.
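One way to build intuition for partitions is to see how a message key decides where a record lands. The sketch below is a simplified model, not Kafka's implementation: Kafka's default partitioner hashes keys with murmur2, while this example assumes CRC32 from the standard library as a stand-in. The function name `choose_partition` and the sample events are hypothetical.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a message key to a partition with a stable hash (CRC32 here, an assumption)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 3
partitions = {p: [] for p in range(NUM_PARTITIONS)}

# (key, value) pairs; hypothetical sample data
events = [("user-1", "login"), ("user-2", "login"), ("user-1", "add_to_cart"),
          ("user-3", "login"), ("user-1", "checkout")]

for key, value in events:
    partitions[choose_partition(key, NUM_PARTITIONS)].append((key, value))

for partition, records in partitions.items():
    print(partition, records)
```

All records with the same key end up in the same partition, so their relative order is preserved there. Records with different keys can be spread over partitions and processed in parallel, which is where the scalability comes from.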

3. Master intermediate Apache Kafka skills

Once you're comfortable with the basics, it's time to explore intermediate Apache Kafka skills.

Kafka Connect for Data Integration

One of the key features of Apache Kafka is its ability to handle large volumes of streaming data. Kafka Connect is an open-source, free component of Apache Kafka that simplifies data integration between file systems, databases, search indexes, and key-value stores.

Learning how to use Connect can help us unlock efficient data flow for analytics, application integration, and building a robust, scalable data architecture. Check our Apache Kafka for Beginners Article for more details.
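Connectors are configured declaratively rather than coded. As a rough sketch, the snippet below builds the JSON body that a Connect worker running in distributed mode accepts on its REST endpoint `POST /connectors` (port 8083 by default). It uses the `FileStreamSourceConnector` example connector that ships with Apache Kafka; depending on your Kafka version, you may need to add it to the worker's plugin path first. The connector name, file path, and topic are hypothetical placeholders.

```python
import json

# Connector name, file path, and topic are hypothetical placeholders.
connector = {
    "name": "demo-file-source",
    "config": {
        # Example connector bundled with Apache Kafka: reads lines from a file.
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/demo-input.txt",
        "topic": "demo-lines",
    },
}

payload = json.dumps(connector, indent=2)
print(payload)
```

Sending this payload to a running worker would start a source connector that publishes each new line of the file to the `demo-lines` topic. A sink connector follows the same pattern with a different class and settings.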

Kafka Streams for stream processing

Another advanced feature of Kafka is Kafka Streams. We should learn how to build stream processing applications directly on top of Kafka with it. Kafka Streams offers APIs and a high-level Domain-Specific Language (DSL) for handling, transforming, and evaluating continuous streams of data. You can learn more in the Apache Kafka for Beginners article.
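Kafka Streams itself is a Java library, so the following is only a conceptual sketch in plain Python of one operation it supports: counting events per key in tumbling (fixed, non-overlapping) time windows. The function name, the sample transactions, and the threshold of three are all assumptions made for illustration.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) for fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp, key in events:
        # Align each timestamp to the start of the window that contains it.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# (timestamp in seconds, card id); hypothetical sample data
transactions = [(0, "card-A"), (12, "card-A"), (45, "card-B"),
                (61, "card-A"), (65, "card-A"), (119, "card-A"), (130, "card-B")]

counts = tumbling_window_counts(transactions, window_seconds=60)
# Flag any card with three or more transactions inside one window.
suspicious = sorted(key for (window, key), n in counts.items() if n >= 3)
print(counts)
print(suspicious)   # ['card-A']
```

A real stream processor computes the same kind of aggregate continuously as records arrive and keeps the window state fault tolerant, which is the part a library like Kafka Streams handles for us.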

Monitoring and managing Kafka

As with any other technology, it is always good to keep track of its performance. To do that, we should learn how to monitor the health and performance of our Kafka cluster. We can explore tools and techniques for monitoring key metrics, managing topics and partitions, and ensuring optimal performance.
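One metric worth understanding early is consumer lag: how far a consumer group is behind the newest message in each partition. The arithmetic is simple, as the sketch below shows with hypothetical offset numbers. On a real cluster, the `kafka-consumer-groups.sh --describe` tool reports the same figure in its LAG column.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Return per-partition lag: log end offset minus the group's committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # A partition with no committed offset is treated as unread from offset 0 (an assumption).
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag


# Hypothetical snapshot for a topic with three partitions.
end_offsets = {0: 1500, 1: 1420, 2: 980}
committed_offsets = {0: 1500, 1: 1100}

lag = consumer_lag(end_offsets, committed_offsets)
print(lag)                 # {0: 0, 1: 320, 2: 980}
print(sum(lag.values()))   # 1300
```

Lag that keeps growing over time usually means consumers cannot keep up with producers, which is a signal to add consumers, add partitions, or speed up processing.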

4. Learn Apache Kafka by doing

Taking courses about Apache Kafka is an excellent way to get familiar with the technology. However, to get proficient in Kafka, you need to solve challenging and skill-building problems, such as those you’ll face on real-world projects.

You can start by experimenting with simple data streaming tasks, like producing and consuming messages, then gradually increase complexity by exploring topics like partitioning, fault tolerance, and integration with external systems using Kafka Connect.

Here are some ways to practice your skills:

- Set up a local Kafka cluster: Install Kafka on your local machine and experiment with producing and consuming messages. You can also use a managed service such as Confluent Cloud, Amazon MSK, or Kafka on Azure HDInsight to experience a managed Kafka environment, which is great for learning about scaling and operational aspects.

- Participate in webinars and code-alongs. Check for upcoming DataCamp webinars or Confluent Webinars where you can follow along with Apache Kafka tutorials and code examples. This will help you reinforce your understanding of concepts and gain familiarity with coding patterns.

- Contribute to open-source projects. Contribute to Apache Kafka projects on platforms like GitHub to gain experience collaborating with others and working on real-world projects. Apache Kafka itself offers such opportunities.

5. Build a portfolio of projects

You will complete different projects as you keep moving in your Apache Kafka learning journey. To showcase your Apache Kafka skills and experience to potential employers, you should compile them into a portfolio. This portfolio should reflect your skills and interests and be tailored to the career or industry you're interested in.

Try to make your projects original and showcase your problem-solving skills. Include projects that demonstrate your proficiency in different aspects of Kafka, such as building data pipelines with Kafka Connect, developing streaming applications with Kafka Streams, or working with Kafka clusters. Document your projects thoroughly, providing context, methodology, code, and results. You can use platforms like GitHub to host your code and Confluent Cloud for a managed Kafka environment.

6. Keep challenging yourself

Learning Kafka is a continuous journey. Technology constantly evolves, and new features and applications are being developed regularly. Kafka is no exception.

Once you’ve mastered the fundamentals, you can look for more challenging tasks and projects, such as performance tuning for high-throughput systems and implementing advanced stream processing with Kafka Streams. Specialize in the areas that are most relevant to your career goals and interests.

Keep up to date with new developments, like new features or Kafka Improvement Proposals (KIPs), and learn how to apply them to your projects. Keep practicing, seek out new challenges and opportunities, and embrace the idea of making mistakes as a way to learn.

An Example Apache Kafka Learning Plan

Even though each person has their way of learning, it’s always a good idea to have a plan or guide to follow for learning a new tool. I’ve created a potential learning plan outlining where to focus your time and efforts if you’re just starting with Apache Kafka.

Month 1-3 learning plan

- Core concepts. Install Apache Kafka and start the Kafka server (plus ZooKeeper on older releases that still require it). Understand how to use the command-line tools to create topics.

- Java and Scala. Because Kafka is developed in these programming languages, you can also learn the basics of Java and Scala.

- Publisher-subscriber model. Understand the core system behind Kafka, the publisher-subscriber model. Explore how messages are produced and consumed asynchronously. Complete the Introduction to Apache Kafka course.

- Kafka components: Master the key concepts and elements of Kafka, including the topics and partitions, brokers and clusters, producers, and consumers. Understand their roles and how they interact to ensure fault tolerance and scalability. Explore tools like Kafka Manager to visualize and monitor your Kafka cluster.

Month 4-6 learning plan

- Kafka Connect. Learn to connect Kafka with various systems like databases (MySQL, PostgreSQL), cloud services (AWS S3, Azure Blob Storage), and other messaging systems (JMS, RabbitMQ) using Kafka Connect. Explore different connector types (source and sink) and configuration options.

- Kafka Streams. Explore concepts like stateful operations, windowing, and stream-table duality. Learn to write, test, and deploy stream processing applications within your Kafka infrastructure.

- Monitoring and managing Kafka. Learn to monitor key Kafka metrics like throughput, latency, and consumer lag using tools like Burrow and Kafka Manager. Understand how to identify and troubleshoot performance bottlenecks and ensure the health of your Kafka cluster.

Month 7 onwards

- Develop a project. Dive into advanced Kafka topics like security, performance tuning, and advanced stream processing techniques. Contribute to the Kafka community and build real-world projects.

- Get Kafka certification. Prepare for and obtain a Kafka certification, such as the Confluent Certified Developer for Apache Kafka. Check this Kafka certification guide.

- Data Engineer with Python Track. Complete the Data Engineer track on DataCamp, which covers essential data engineering skills like data manipulation, SQL, cloud computing, and data pipelines.

Six Tips for Learning Apache Kafka

I imagine that by now, you are ready to jump into learning Kafka and get your hands on a large dataset to practice your new skill. But before you do, let me highlight these tips that will help you navigate the path to Apache Kafka proficiency.

1. Narrow your scope

Kafka is a versatile tool that can be used in many ways. You should identify your specific goals and interests within the Kafka ecosystem. What aspect of Kafka are you most drawn to? Are you interested in data engineering, stream processing, or Kafka administration? A focused approach helps you concentrate on the parts of Apache Kafka that matter most for those interests.

2. Practice frequently and constantly

Consistency is key to mastering any new skill. You should set aside dedicated time to practice Kafka. Just a short amount of time every day will do. You don’t need to tackle complex concepts every day. You can do hands-on exercises, work through tutorials, and experiment with different Kafka features. The more you practice, the more comfortable you'll become with the platform.

3. Work on real projects

This is one of the key tips, and you will read it several times in this guide. Practicing exercises is great for gaining confidence. However, applying your Kafka skills to real-world projects is what will make you excel at it.

Start with simple projects and questions and gradually take on more complex ones. This could involve setting up a simple producer-consumer application, then advancing to building a real-time data pipeline with Kafka Connect, or even designing a fault-tolerant streaming application with Kafka Streams. The key is to continuously challenge yourself and expand your practical Kafka skills.

4. Engage in a community

Learning is often more effective when done collaboratively. Sharing your experiences and learning from others can accelerate your progress and provide valuable insights.

To exchange knowledge, ideas, and questions, you can join groups related to Apache Kafka and attend meet-ups and conferences. Online communities like the Confluent Slack channel or the Confluent Forum let you interact with other Kafka enthusiasts. You can also join virtual or in-person meet-ups featuring talks by Kafka experts, or attend conferences such as ApacheCon.

5. Make mistakes

As with any other technology, learning Apache Kafka is an iterative process. Learning from your mistakes is an essential part of this process. Don't be afraid to experiment, try different approaches, and learn from your errors.

Try different configurations for your Kafka producers and consumers, explore various data serialization methods (JSON, Avro, Protobuf), and experiment with different partitioning strategies. Push your Kafka cluster to its limits with high message volumes, and observe how it handles the load. Analyze consumer lag, fine-tune configurations, and understand how your optimizations impact performance.
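Serialization is a good first experiment because Kafka itself only moves bytes; turning a record into bytes and back is the application's job. Below is a minimal sketch of a JSON round trip using only the standard library. The `serialize` and `deserialize` helpers and the sample record are hypothetical. Avro and Protobuf follow the same idea but add a schema and need extra libraries.

```python
import json


def serialize(record: dict) -> bytes:
    """Encode a record as compact UTF-8 JSON bytes."""
    return json.dumps(record, separators=(",", ":"), sort_keys=True).encode("utf-8")


def deserialize(payload: bytes) -> dict:
    """Decode UTF-8 JSON bytes back into a dict."""
    return json.loads(payload.decode("utf-8"))


record = {"user_id": 42, "action": "checkout", "amount": 19.99}   # hypothetical event
payload = serialize(record)

print(payload)
print(len(payload), "bytes")
assert deserialize(payload) == record
```

Comparing the byte size of the same record across formats is an easy way to see why compact binary formats are popular for high-volume topics.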

6. Don’t rush

Take your time to truly grasp core concepts like topics, partitions, consumer groups, and how the cluster coordinates itself (through ZooKeeper in older versions or KRaft in newer ones). If you build a solid foundation now, it will be easier for you to understand more advanced topics and troubleshoot issues effectively. Break down the learning process into smaller steps, and allow yourself time to understand the information. A slow and steady approach often leads to deeper understanding and mastery.
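Consumer groups are a good example of a concept that rewards slow study. Within one group, each partition is read by exactly one consumer, so the partitions have to be divided among the group members. The sketch below is a simplified round-robin model of that division, not one of Kafka's actual assignor implementations, and the function name and consumer ids are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over the consumers of one group, round-robin style."""
    assignment = {consumer: [] for consumer in consumers}
    ordered = sorted(consumers)
    for index, partition in enumerate(sorted(partitions)):
        assignment[ordered[index % len(ordered)]].append(partition)
    return assignment


partitions = [0, 1, 2, 3, 4, 5]

two = assign_partitions(partitions, ["c1", "c2"])
# {'c1': [0, 2, 4], 'c2': [1, 3, 5]}
three = assign_partitions(partitions, ["c1", "c2", "c3"])
# {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
eight = assign_partitions(partitions, [f"c{i}" for i in range(1, 9)])
idle = [c for c, owned in eight.items() if not owned]
# ['c7', 'c8']: with more consumers than partitions, some consumers stay idle
print(two, three, idle)
```

The last case is worth remembering: adding consumers beyond the number of partitions does not increase parallelism, which is why the partition count is an important design decision.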

Best Ways to Learn Apache Kafka

Let’s cover a few efficient methods of learning Apache Kafka.

Take online courses

Online courses offer an excellent way to learn Apache Kafka at your own pace. DataCamp offers a Kafka intermediate course. This course covers core concepts of Kafka, including the publisher-subscriber model, topics and partitions, brokers and clusters, producers, and consumers.

Follow online tutorials

Tutorials are another great way to learn Apache Kafka, especially if you are new to the technology. They contain step-by-step instructions on how to perform specific tasks or understand certain concepts. For a start, consider these tutorials:

Read blogs

To gain a deeper understanding of the advantages of Apache Kafka, you should also understand how it is similar to and different from other technologies. You can read articles on how Kafka compares with other tools such as the following:

Discover Apache Kafka through books

Books are an excellent resource for learning Apache Kafka. They offer in-depth knowledge and insights from experts alongside code snippets and explanations. Here are some of the most popular books on Kafka:

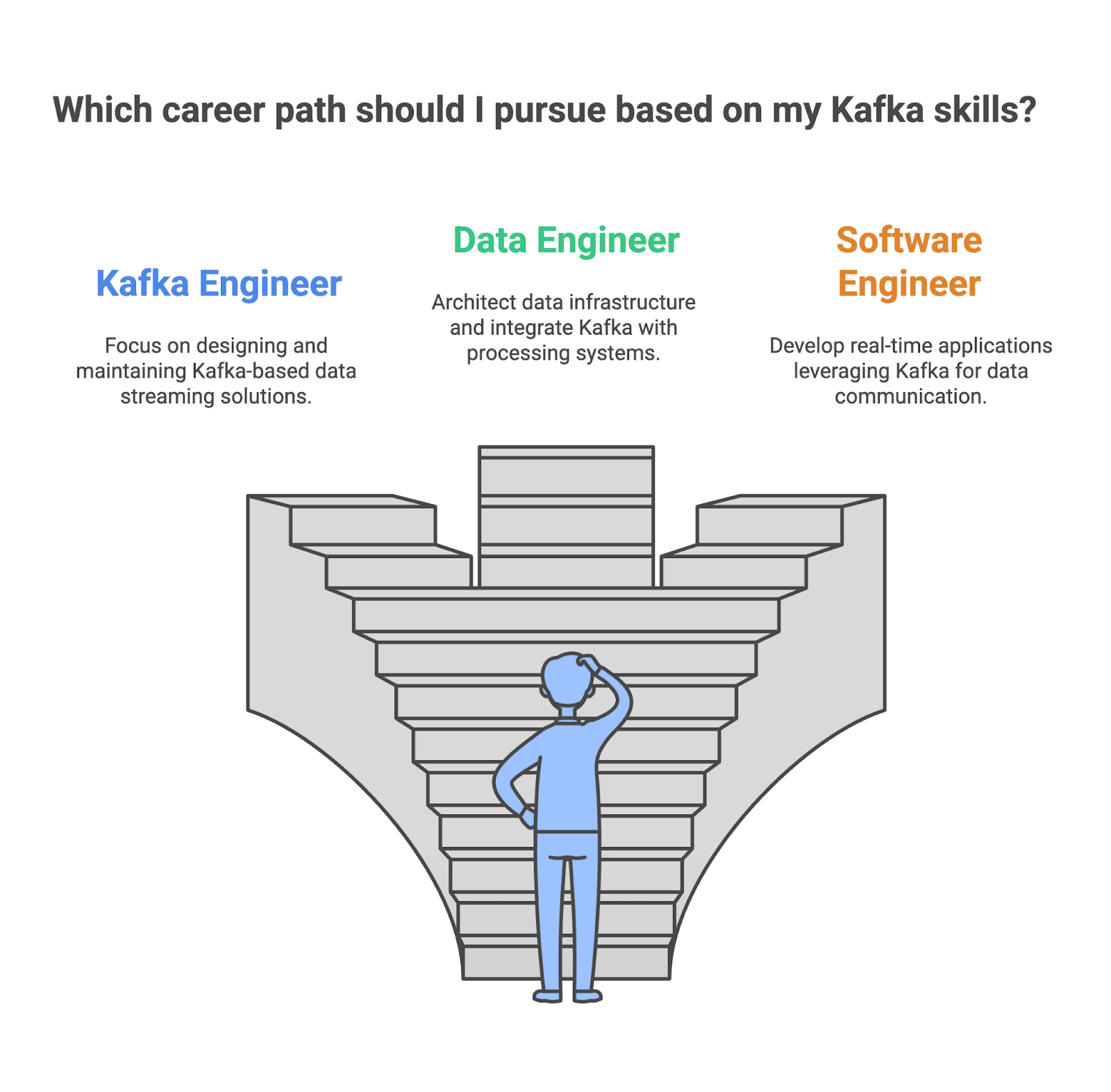

The Different Careers in Apache Kafka Today

As the adoption of Apache Kafka continues to grow, so do the career opportunities for professionals with Kafka skills, from dedicated Kafka engineers to software engineers. If you are evaluating where your Kafka skills fit, consider these roles:

Kafka engineer

As a Kafka engineer, you are responsible for designing, building, and maintaining Kafka-based data streaming solutions with high availability and scalability. You will need to ensure the reliable and efficient flow of data within the company, as well as monitor and tune Kafka to achieve the best possible throughput and latency.

- Key skills:

  - Strong understanding of Kafka architecture and components.

  - Proficiency in Kafka administration and configuration.

  - Experience with data pipelines and ETL processes.

  - Knowledge of stream processing concepts and Kafka Streams.

- Key tools used:

  - Apache Kafka

  - Kafka Connect

  - Kafka Streams

  - ZooKeeper

  - Monitoring tools

Data engineer

As a data engineer, you are the architect of data infrastructure, responsible for designing and building the systems that handle a company’s data. Kafka often plays an important role in these data pipelines, enabling efficient and scalable real-time data streaming between different systems. You will need to ensure that Kafka integrates seamlessly with other components.

- Key skills:

  - Strong understanding of data warehousing and data modeling concepts.

  - Proficiency in data processing tools like Spark and Hadoop.

  - Experience with cloud platforms like AWS, Azure, or GCP.

  - Knowledge of Kafka for real-time data streaming.

- Key tools used:

  - Apache Kafka

  - Apache Spark

  - Hadoop

  - Hive

  - Cloud platforms (AWS, Azure, GCP)

Software engineer

As a software engineer, you will use Kafka to build real-time applications like chat platforms and online games. You will need to integrate it into the application code to ensure smooth data flow by connecting with various messaging systems and APIs. You will also optimize these applications for scalability and performance, allowing them to handle large amounts of real-time data.

- Key skills:

  - Strong programming skills in languages like Java, Python, or Scala.

  - Understanding of Kafka's publisher-subscriber model and API.

  - Experience with building distributed systems and applications.

  - Knowledge of software engineering best practices.

- Key tools used:

  - Apache Kafka

  - Programming languages (Java, Python, Scala)

  - Messaging systems (e.g., RabbitMQ, ActiveMQ)

  - Development tools and frameworks

| Role | What you do | Your key skills | Tools you use |
| --- | --- | --- | --- |
| Kafka Engineer | Design and implement Kafka clusters for high availability and scalability. | Kafka architecture, components, administration and configuration, stream processing concepts. | Apache Kafka, Kafka Connect, Kafka Streams, ZooKeeper |
| Data Engineer | Design and implement data warehouses and data lakes, integrate Kafka with other data processing and storage systems. | Proficiency in data processing tools, experience with cloud platforms, and knowledge of Kafka. | Apache Kafka, Apache Spark, Hadoop, Hive, Cloud platforms |
| Software Engineer | Design and develop applications that leverage Kafka for real-time data communication. | Programming skills, distributed systems and applications, Kafka publisher-subscriber knowledge. | Apache Kafka, Java, Python, Scala, RabbitMQ, ActiveMQ, Development tools and frameworks |

How to Find a Job That Uses Apache Kafka

While having a degree can be very valuable when pursuing a career in a data-related role that uses Apache Kafka, it's not the only path to success. More and more people from diverse backgrounds and with different experiences are starting to work in data-related roles. With dedication, consistent learning, and a proactive approach, you can land your dream job that uses Apache Kafka.

Keep learning about Kafka

Stay updated with the latest developments in Kafka. Follow influential professionals who are involved with Apache Kafka on social media, read Apache Kafka-related blogs, and listen to Kafka-related podcasts.

Engage with influential figures, such as Neha Narkhede, who co-created Kafka and co-founded Confluent. You'll gain insights into trending topics, emerging technologies, and the future direction of Apache Kafka. You can also read the Confluent blog, which offers in-depth articles and tutorials on a wide range of Kafka topics, from architecture and administration to use cases and best practices.

You should also check out industry events, such as webinars at Confluent or the annual Kafka Summit.

Develop a portfolio

You need to stand out from other candidates. One good way to do it is to build a strong portfolio that showcases your skills and completed projects.

Your portfolio should contain projects that demonstrate your proficiency in building data pipelines, implementing stream processing applications, and integrating Kafka with other systems.

Develop an effective resume

Hiring managers have to review hundreds of resumes and distinguish great candidates. Also, many times, your resume is passed through Applicant Tracking Systems (ATS), automated software systems used by many companies to review resumes and discard those that don't meet specific criteria. So, you should build a great resume and craft an impressive cover letter to impress both ATS and your recruiters.

Get noticed by a hiring manager

Once your resume makes it through the selection process and a hiring manager notices you, the next step is to prepare for a technical interview. To be ready, you can check this article on 20 Kafka Interview Questions for Data Engineers.

Conclusion

Learning Apache Kafka can open doors for better opportunities and career outcomes. The path to learning Kafka is rewarding but requires consistency and hands-on practice. Experimenting and solving challenges using this tool can accelerate your learning process and provide you with real-world examples to showcase your practical skills when you are looking for jobs.

FAQs

Why is the demand for Apache Kafka skills growing?

Businesses need to handle real-time data streams, build scalable applications, and integrate diverse systems, all of which Kafka excels at.

What are the main features of Apache Kafka?

Apache Kafka's main features are high throughput, fault tolerance, scalability, and real-time data streaming capabilities, making it ideal for building robust data pipelines and distributed applications.

What are some of the roles that use Apache Kafka?

Data Engineer, Kafka Engineer, and Software Engineer.