Data contracts are the backbone of data quality and scalability for distributed data solutions. They specify the format, schema, and protocols governing the exchange between database entities. These formal agreements eliminate ambiguities and undocumented assumptions about the data.

In this article, I will clarify the concept of data contracts by offering both foundational and advanced techniques to facilitate their effective implementation.

Understanding Data Contracts

A single data contract delineates the precise parameters for data exchange between two models. These formal agreements ensure that there are no ambiguities regarding the data format and schemas.

Data contract definitions and validation are crucial for effective cross-team collaboration.

In a nutshell, a data contract is a formal agreement between the processes that produce or change data (producers) and the destinations that use it (consumers). It’s very similar to how business contracts work: they represent obligations between suppliers and consumers of a business product. Data contracts do the same for data products, i.e., tables, views, data models, etc.

The aim is to mitigate data pipeline downstream disruptions and make data transformations stable and reliable.

The main components of a data contract are the schema (columns and formats), the semantic layer (measures, calculations, and restrictions), service level agreements (SLAs), and data governance.

The benefits of data contracts include:

- Data quality automation and checks when new data outputs are created or updated.

- Enabling efficient scaling, especially for distributed data architecture, e.g., data mesh.

- Improving the data development lifecycle with a focus on building tools for contract validation.

- Fostering collaboration through feedback between data producers and consumers.

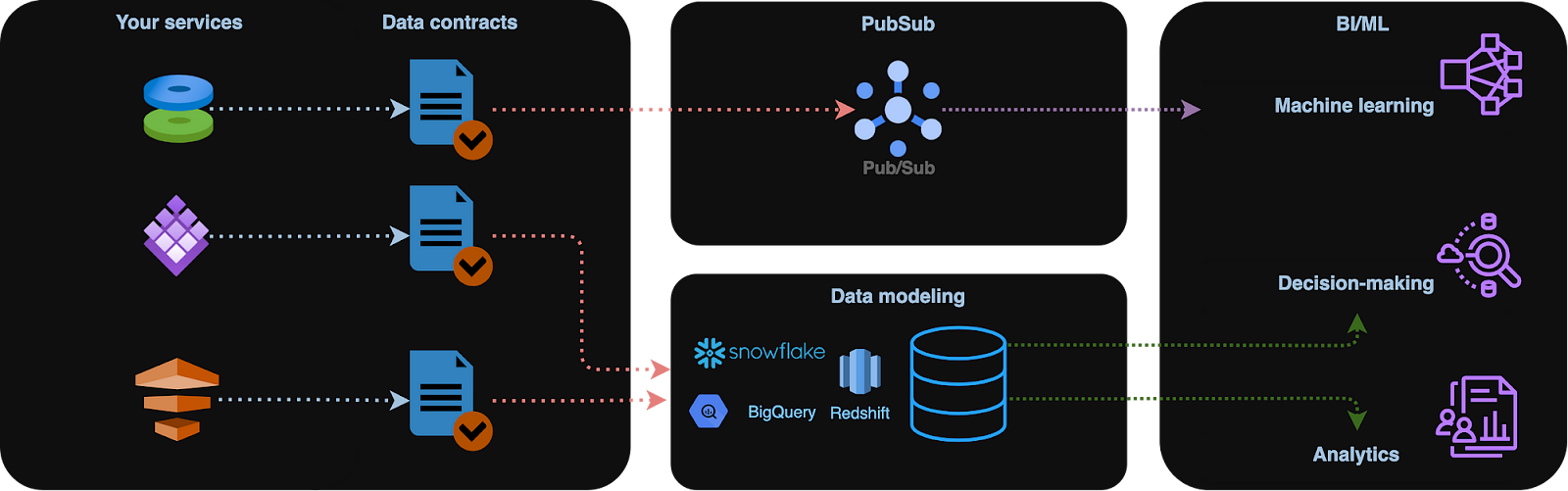

Data contracts. Image by author.

Data contract example with dbt

In a data contract, schemas define attribute names, data types, and whether attributes are mandatory. They may also specify the format, length, and acceptable value ranges for columns.

Let’s consider a dbt model schema defined as follows in a YAML file. Our table schema is defined under columns:

```yaml
models:
  - name: dim_orders
    config:
      materialized: table
      contract:
        enforced: true
    columns:
      - name: order_id
        data_type: int
        constraints:
          - type: not_null
      - name: order_type
        data_type: string
```

Now let’s imagine we define our dim_orders model like this:

```sql
select
  'abc123' as order_id,
  'Some order type' as order_type
```

Because the contract is set to enforced: true and order_id is returned as a string rather than an int, dbt raises the following error when we try to materialize dim_orders as a table in our data platform:

```text
20:53:45  Compilation Error in model dim_orders (models/dim_orders.sql)
20:53:45    This model has an enforced contract that failed.
20:53:45    Please ensure the name, data_type, and number of columns in your contract match the columns in your model's definition.
20:53:45
20:53:45    | column_name | definition_type | contract_type | mismatch_reason    |
20:53:45    | ----------- | --------------- | ------------- | ------------------ |
20:53:45    | order_id    | TEXT            | INT           | data type mismatch |
20:53:45
20:53:45
20:53:45    > in macro assert_columns_equivalent (macros/materializations/models/table/columns_spec_ddl.sql)
```

The same would have happened with extra or missing columns. Other contract terms, such as SLA checks and mandatory metadata, are not covered by enforced: true and need separate tests, which are discussed later in this article.
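To make the comparison behind that error more concrete, here is a minimal Python sketch of a column-level contract check. It is not dbt's implementation: the function name find_contract_mismatches and the dict-based schema representation are assumptions made purely for illustration.

```python
from typing import Dict, List, Tuple


def find_contract_mismatches(
    contract: Dict[str, str], actual: Dict[str, str]
) -> List[Tuple[str, str, str, str]]:
    """Compare contracted columns with the columns a model actually returns.

    Each row is (column_name, definition_type, contract_type, mismatch_reason).
    """
    mismatches = []
    for name in sorted(set(contract) | set(actual)):
        contract_type = contract.get(name)
        actual_type = actual.get(name)
        if contract_type is None:
            mismatches.append((name, actual_type, "", "missing in contract"))
        elif actual_type is None:
            mismatches.append((name, "", contract_type, "missing in definition"))
        elif actual_type.upper() != contract_type.upper():
            mismatches.append((name, actual_type, contract_type, "data type mismatch"))
    return mismatches


# Hypothetical schemas mirroring the dim_orders example.
contract = {"order_id": "INT", "order_type": "TEXT"}
actual = {"order_id": "TEXT", "order_type": "TEXT"}

mismatches = find_contract_mismatches(contract, actual)
for row in mismatches:
    print(" | ".join(row))
```

The sketch reports one row per offending column, which is roughly the shape of the table in the error message.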

A more advanced dbt example would contain enforced model constraints:

```yaml
# models/schema.yaml
models:
  - name: orders

    # required
    config:
      contract:
        enforced: true

    # model-level constraints
    constraints:
      - type: primary_key
        columns: [id]
      - type: foreign_key # multi_column
        columns: [order_type, SECOND_COLUMN, ...]
        expression: "OTHER_MODEL_SCHEMA.OTHER_MODEL_NAME (OTHER_MODEL_FIRST_COLUMN, OTHER_MODEL_SECOND_COLUMN, ...)"
      - type: check
        columns: [FIRST_COLUMN, SECOND_COLUMN, ...]
        expression: "FIRST_COLUMN != SECOND_COLUMN"
        name: HUMAN_FRIENDLY_NAME
      - type: ...

    columns:
      - name: FIRST_COLUMN
        data_type: DATA_TYPE

        # column-level constraints
        constraints:
          - type: not_null
          - type: unique
          - type: foreign_key
            expression: OTHER_MODEL_SCHEMA.OTHER_MODEL_NAME (OTHER_MODEL_COLUMN)
          - type: …
```

Providing a schema definition as a set of rules and constraints applied to the columns of a dataset offers very important information for processing and analyzing data.

Schemas tend to change over time.

This is a common scenario. Let’s imagine our source table added an extra column that is not yet part of the contract:

```sql
select
  'abc123' as order_id,
  'Some order type' as order_type,
  'USD' as currency
```

It’s essential to consider schema changes while performing incremental updates because otherwise the output of the downstream incremental model would violate the contract.

This can be solved by setting on_schema_change: append_new_columns in the dbt incremental model configuration.
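As a rough illustration of what such a policy decides, the Python sketch below plans column changes for an existing target table. The policy names mirror dbt's documented on_schema_change values (ignore, fail, append_new_columns, sync_all_columns), but the function plan_schema_change and its return shape are hypothetical and do not reflect dbt's internals.

```python
from typing import Dict, List


def plan_schema_change(
    target_columns: List[str], incoming_columns: List[str], policy: str
) -> Dict[str, List[str]]:
    """Decide which columns to add to or drop from the existing target table."""
    new = [c for c in incoming_columns if c not in target_columns]
    gone = [c for c in target_columns if c not in incoming_columns]

    if policy == "ignore":
        # Keep the target as is; new columns are silently left out.
        return {"add": [], "drop": []}
    if policy == "fail":
        if new or gone:
            raise ValueError(f"schema changed: added={new}, removed={gone}")
        return {"add": [], "drop": []}
    if policy == "append_new_columns":
        # Add new columns but never remove existing ones.
        return {"add": new, "drop": []}
    if policy == "sync_all_columns":
        return {"add": new, "drop": gone}
    raise ValueError(f"unknown policy: {policy}")


plan = plan_schema_change(
    ["order_id", "order_type"],
    ["order_id", "order_type", "currency"],
    policy="append_new_columns",
)
print(plan)
```

With the append-style policy, the new currency column is added while existing columns stay untouched, so downstream consumers of the original columns are not disrupted.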

Schema validations can be explicit or implicit.

Some big data file formats, like Avro and Parquet, embed the schema in the file itself, so basic schema checks happen implicitly and need no extra external validation.

In contrast, schema-less data file formats like JSON require external schema validation. Python libraries such as pydantic, or a simple @dataclass with a few explicit checks, can perform this:

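```python
from pydantic import BaseModel


class ConnectionDataRecord(BaseModel):
    user: str
    ts: int


record = ConnectionDataRecord(user="user1", ts=123456789)
```

If we break the rules and assign values that don’t match the declared types, pydantic raises a ValidationError. For example, ConnectionDataRecord(user="user1", ts="not a timestamp") fails because ts cannot be parsed as an integer. Note that pydantic models accept keyword arguments only, so a positional call such as ConnectionDataRecord('', 1) fails with a TypeError before any validation runs.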
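For the @dataclass route, keep in mind that dataclasses do not enforce type annotations on their own, so the checks have to be written explicitly. The sketch below uses only the standard library; the class name ConnectionEvent, the helper parse_event, and the specific rules (non-empty user, non-negative integer ts) are assumptions for illustration.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionEvent:
    user: str
    ts: int

    def __post_init__(self) -> None:
        # Dataclasses do not validate annotations, so check types explicitly.
        if not isinstance(self.user, str) or not self.user:
            raise ValueError("user must be a non-empty string")
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(self.ts, bool) or not isinstance(self.ts, int) or self.ts < 0:
            raise ValueError("ts must be a non-negative integer")


def parse_event(raw: str) -> ConnectionEvent:
    """Parse one JSON object and validate it against the dataclass."""
    payload = json.loads(raw)
    return ConnectionEvent(user=payload.get("user"), ts=payload.get("ts"))


valid = parse_event('{"user": "user1", "ts": 123456789}')

try:
    parse_event('{"user": "", "ts": 1}')
    rejected = False
except ValueError:
    rejected = True

print(valid, rejected)
```

This keeps the contract next to the data structure, at the cost of writing each rule by hand.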

Semantic Data Contracts

Semantic data validations ensure that data is logically consistent and aligns with the business logic.

Semantic validations have to be explicitly enforced.

Indeed, unlike schema checks, semantic data contracts rely on business logic and must be implemented externally.

Semantics in many use case scenarios looks like an extension of schema-validated contracts. Often, it depends on business rules and represents a set of row conditions that our data model must comply with.

Examples of semantic contracts include:

- Metric deviation: deviation from the moving average or any other threshold. For instance, we might only warn when yesterday’s number of active users falls below 75% of the 7-day moving average, but we won’t tolerate 0%.

- Business logic: transaction monitoring alert flags and fraud prevention scores. For example, for a transaction flagged as fraudulent, the payout must be 0.

- Data lineage: This describes how data entities evolve. For instance, transaction_completed_at can’t happen before created_at.

- Referential integrity: Entity relationships are important and can be described using semantic contracts, too. Consider the dbt code below. It states that every refund’s refund_id maps back to a valid transaction.id.

```yaml
- name: refunds
  enabled: true
  description: An incremental table
  columns:
    - name: refund_id
      tests:
        - relationships:
            tags: ['relationship']
            to: ref('transactions')
            field: id
```

It is up to the end user to set the alert level on semantic contracts, and this is often implemented with custom data quality tests.
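The metric deviation and lineage rules from the list above could be sketched as custom checks in Python. The function names, the 0.75 default, and the three severity labels are assumptions chosen to mirror the examples in the text, not part of any specific tool.

```python
from datetime import datetime
from statistics import mean
from typing import Sequence


def deviation_severity(
    history: Sequence[float], latest: float, warn_ratio: float = 0.75
) -> str:
    """Classify the latest metric value against the moving average of history."""
    if not history:
        raise ValueError("history must not be empty")
    if latest <= 0:
        return "error"  # a metric dropping to zero is not tolerated
    if latest < warn_ratio * mean(history):
        return "warn"  # below the threshold, but we can live with it
    return "ok"


def violates_lineage(created_at: datetime, completed_at: datetime) -> bool:
    """True if a transaction appears to complete before it was created."""
    return completed_at < created_at


# Hypothetical daily active users for the previous 7 days (average is 1000).
last_7_days = [1000, 1040, 980, 1010, 990, 1020, 960]

print(deviation_severity(last_7_days, 700))
print(deviation_severity(last_7_days, 0))
print(violates_lineage(datetime(2024, 1, 2), datetime(2024, 1, 1)))
```

Separating "warn" from "error" is one way to let the end user decide which deviations should only raise an alert and which should stop the pipeline.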

Various business logic rules often refer to data integrity. Illogical data scenarios may arise from errors in database and server configurations or the unintentional injection of test data into the production environment. Data integrity checks are designed to validate data against those business rules, helping to identify values that don’t look right or are illogical.

Database entities typically relate to each other. This is called an entity relationship and is often presented as an entity relationship diagram (ERD). Inadequate referential integrity can lead to issues such as missing or incomplete data. Data contracts must address these potential pitfalls to ensure data integrity and accuracy.

For instance, a common relationship is a one-to-many association between customers and orders where a single customer may have multiple orders. In such a scenario, an order is considered valid only if it contains a valid customer_id within the customers dataset.

This type of constraint, a referential integrity constraint, ensures that the relationship between entities is accurately maintained.
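A referential integrity check for the customers and orders example can be sketched in a few lines of Python. The helper name find_orphaned_orders and the sample records are hypothetical.

```python
from typing import Dict, Iterable, List


def find_orphaned_orders(
    orders: Iterable[Dict[str, object]], customer_ids: Iterable[object]
) -> List[Dict[str, object]]:
    """Return orders whose customer_id is missing from the customers dataset."""
    known = set(customer_ids)
    return [order for order in orders if order.get("customer_id") not in known]


customers = [{"customer_id": 1}, {"customer_id": 2}]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 1},     # one customer, many orders
    {"order_id": 12, "customer_id": 3},     # unknown customer
    {"order_id": 13, "customer_id": None},  # missing customer
]

orphans = find_orphaned_orders(orders, (c["customer_id"] for c in customers))
print([order["order_id"] for order in orphans])
```

Orders 12 and 13 are reported because neither points to a customer that exists in the customers dataset.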

Service Level Agreements (SLAs) in Data Contracts

SLAs are an extra level of data quality checks that can be implemented using data contracts.

SLAs refer to data freshness.

Since data models are regularly updated with new data, SLAs may include checks for the latest time by which new data is expected to be available or the maximum allowable delay. For instance, in dbt, it can be achieved using freshness tests:

```yaml
- name: orders
  enabled: true
  description: A source table declaration
  tests:
    - dbt_utils.recency: # https://github.com/dbt-labs/dbt-utils#recency-source
        tags: ['freshness']
        datepart: day
        field: timestamp
        interval: 1
```

Suppose we want to generate a report on yesterday's facts; to do that, we want to ensure the data exists. Indeed, it would be odd not to see the new orders for a few days or more.
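The logic of a freshness check is simple enough to sketch in Python: compare the newest record timestamp with the current time and fail if the gap exceeds the agreed delay. The function name is_fresh and the sample timestamps are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(
    latest_record_at: datetime,
    max_delay: timedelta,
    now: Optional[datetime] = None,
) -> bool:
    """True if the newest record is no older than max_delay."""
    now = now or datetime.now(timezone.utc)
    return now - latest_record_at <= max_delay


# Fixed "now" so the example is reproducible.
now = datetime(2024, 5, 2, 9, 0, tzinfo=timezone.utc)

fresh = is_fresh(datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc), timedelta(days=1), now)
stale = is_fresh(datetime(2024, 4, 29, 12, 0, tzinfo=timezone.utc), timedelta(days=1), now)
print(fresh, stale)
```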

In the example below, we test today’s orders for unexpected status values using a named test with a where config in dbt. The custom name allows you to select and reference the test using that specific name.

```yaml
version: 2

models:
  - name: orders
    columns:
      - name: status
        tests:
          - accepted_values:
              name: unexpected_order_status_today
              values: ['placed', 'shipped', 'completed', 'returned']
              config:
                where: "order_date = current_date"
```

By defining a custom name, you gain complete control over how the test is displayed in log messages and metadata artifacts.

Similarly, in real-time data pipelines, data is typically expected to be no more than a few hours old. SLAs are crucial for stream-processing applications where data is being processed in real time with minutes or even seconds of latency.

In streaming apps, we want to check for the maximum allowable delay for late-arriving events and metrics like Mean Time Between Failures (MTBF) and Mean Time to Recovery (MTTR). Implementing this involves meticulously tracking incidents and extracting relevant data from application monitoring and incident management tools such as PagerDuty, Datadog, and Grafana.
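The sketch below shows one way these metrics could be computed from a list of incidents exported from a monitoring tool. Definitions of MTBF vary between teams; here it is taken as the average gap between the resolution of one incident and the start of the next, which is an assumption. All function names and sample data are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Incident = Tuple[datetime, datetime]  # (started_at, resolved_at)


def mean_time_to_recovery(incidents: List[Incident]) -> timedelta:
    """Average duration from incident start to resolution."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def mean_time_between_failures(incidents: List[Incident]) -> timedelta:
    """Average healthy time between one resolution and the next failure."""
    if len(incidents) < 2:
        raise ValueError("at least two incidents are required")
    ordered = sorted(incidents)
    gaps = [nxt[0] - cur[1] for cur, nxt in zip(ordered, ordered[1:])]
    return sum(gaps, timedelta()) / len(gaps)


def is_late(event_time: datetime, processed_time: datetime, max_delay: timedelta) -> bool:
    """True if an event arrived later than the allowed delay."""
    return processed_time - event_time > max_delay


incidents = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 1, 0)),
    (datetime(2024, 1, 2, 1, 0), datetime(2024, 1, 2, 1, 30)),
    (datetime(2024, 1, 4, 1, 30), datetime(2024, 1, 4, 2, 0)),
]

mttr = mean_time_to_recovery(incidents)
mtbf = mean_time_between_failures(incidents)
print(mttr, mtbf)
```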

Data Governance Contracts

Correctly handling Personally Identifiable Information (PII) is integral to the data transformation process. For many companies, it is crucial that these datasets are at least GDPR-compliant and adhere to data privacy regulations such as HIPAA or PCI DSS.

Data governance contracts ensure adequate pseudonymization or data masking policies are in place.
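As a rough illustration of pseudonymization, the Python sketch below hashes an email address with SHA-256 and checks whether a stored value looks like such a hash. The function names and the optional salt parameter are assumptions; an unsalted hash of an email is easy to reverse by brute force, so a real policy would need a secret salt or a dedicated tokenization service.

```python
import hashlib
import re

# A SHA-256 digest rendered as hex is exactly 64 lowercase hex characters.
SHA256_HEX = re.compile(r"[0-9a-f]{64}")


def pseudonymize_email(email: str, salt: str = "") -> str:
    """Replace an email address with a SHA-256 hex digest."""
    normalized = email.strip().lower()
    return hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()


def is_masked(value: str) -> bool:
    """True if the value looks like a SHA-256 hex digest rather than raw PII."""
    return SHA256_HEX.fullmatch(value) is not None


masked = pseudonymize_email("Jane.Doe@example.com")
print(masked, is_masked(masked), is_masked("jane.doe@example.com"))
```

Normalizing the address before hashing means the same person maps to the same pseudonym regardless of casing or stray whitespace.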

Consider the dbt code below. The contract, enforced as a test, requires that user_email contains no raw email addresses, i.e., that it is masked (for example, as a SHA256 hash):

```yaml
models:
  - name: customer_data
    columns:
      - name: user_email
        tests:
          - dbt_expectations.expect_column_values_to_not_match_regex:
              regex: "[^@]+@[^@]+" # Fail on anything that looks like a raw email address
              flags: i # Case-insensitive matching
```

On the other hand, we might want to enforce pattern matching for other tables. For instance, in the example below, the transaction_reference field must match at least one of the LIKE patterns “TRX-%” or “%-2023”:

```yaml
models:
  - name: transaction_data
    columns:
      - name: transaction_reference
        tests:
          - dbt_expectations.expect_column_values_to_match_like_pattern_list:
              like_pattern_list: ["TRX-%", "%-2023"]
              match_on: any
```

Data governance contracts can also be beneficial when they include columns with sensitive data, metadata (data owners, etc.), and user roles authorized to access a data product.

For instance, we can indicate our data model owner and model development lifecycle stage using the meta field in dbt:

```yaml
# models/schema.yaml
version: 2

models:
  - name: users
    meta:
      owner: "@data_mike"
      model_maturity: in dev
      contains_pii: true
    columns:
      - name: email
        meta:
          contains_pii: true
```

The meta field allows you to set metadata for a resource, which is then compiled into the manifest.json file generated by dbt and can be viewed in the automatically generated documentation.

We can use packages like dbt-checkpoint to scan it during the pull request to ensure that our mandatory meta fields are present. The hook fails if any model (from a manifest or YAML files) does not have specified meta keys.

The model’s meta keys must be in the YAML file or the manifest.
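The idea behind such a hook can be sketched in Python. This is not dbt-checkpoint's code: the function find_models_missing_meta is hypothetical, and the manifest below is a heavily simplified stand-in that assumes each node exposes resource_type and meta keys. The project name shop is also made up.

```python
from typing import Dict, Iterable, List


def find_models_missing_meta(
    manifest: Dict, required_keys: Iterable[str]
) -> Dict[str, List[str]]:
    """Map each model node id to the mandatory meta keys it lacks."""
    required = list(required_keys)
    problems: Dict[str, List[str]] = {}
    for node_id, node in manifest.get("nodes", {}).items():
        if node.get("resource_type") != "model":
            continue  # only models are subject to this rule
        meta = node.get("meta") or {}
        missing = [key for key in required if key not in meta]
        if missing:
            problems[node_id] = missing
    return problems


# Simplified, hypothetical manifest content.
manifest = {
    "nodes": {
        "model.shop.users": {
            "resource_type": "model",
            "meta": {"owner": "@data_mike", "model_maturity": "in dev"},
        },
        "model.shop.orders": {
            "resource_type": "model",
            "meta": {"owner": "@data_mike"},
        },
        "test.shop.not_null_users_id": {"resource_type": "test", "meta": {}},
    }
}

missing = find_models_missing_meta(manifest, ["owner", "model_maturity"])
print(missing)
```

A CI job could fail the pull request whenever the returned mapping is not empty.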

Data Contract Implementation Patterns

Contract validation can be performed per row in streaming data pipelines before data is ingested, or after ingestion at the source (source data model layer).

When we ingest data “as is,” the validation process acts like a transformation step, enforcing contract rules and routing invalid data elsewhere, e.g., into a dedicated database view or a table where it can be investigated further.

Validation checks can be applied post-facto when data is ingested directly into a raw data lake.

The primary advantage of real-time data contract validation is its ability to filter out invalid records before they reach their final destination — a data lake or a data warehouse. This approach is prevalent in event-driven or real-time data processing, such as Change Data Capture (CDC) events. In this type of validation, certain aspects of the contract are verified as data flows through the pipeline.
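A per-row validation step of this kind might look like the Python sketch below, which splits incoming records into valid ones and rejected ones that keep a note of the rules they failed. The function split_by_contract, the rule names, and the _failed_rules field are assumptions for illustration; a real pipeline would write rejected records to a quarantine table or dead-letter queue.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]
Rule = Callable[[Record], bool]


def split_by_contract(
    records: Iterable[Record], rules: Dict[str, Rule]
) -> Tuple[List[Record], List[Record]]:
    """Route each record to the valid or rejected list based on contract rules."""
    valid: List[Record] = []
    rejected: List[Record] = []
    for record in records:
        failed = [name for name, rule in rules.items() if not rule(record)]
        if failed:
            # Keep the original payload and record why it was rejected.
            rejected.append({**record, "_failed_rules": failed})
        else:
            valid.append(record)
    return valid, rejected


rules: Dict[str, Rule] = {
    "user_id_present": lambda r: r.get("user_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}

events = [
    {"user_id": 1, "amount": 10.0},
    {"user_id": None, "amount": 5.0},
    {"user_id": 2, "amount": -3},
]

valid, rejected = split_by_contract(events, rules)
print(len(valid), [r["_failed_rules"] for r in rejected])
```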

Data Contracts Tools

The data community is gradually recognizing the potential benefits of data contracts, a constantly evolving data engineering area. Many tools exist in this space, some still in their early stages. dbt can be considered a universal framework for data contracts.

Similar contracts can be implemented using Google’s Dataform at source (source data model layer), i.e., when the data has been successfully ingested into our data warehouse. We would want to use simple row conditions for this.

Consider this example below. It applies certain row conditions for our table:

```sql
-- my_table.sqlx
config {
  type: "table",
  assertions: {
    nonNull: ["user_id", "customer_id", "email"]
  }
}

SELECT …
```

Below is another example of data contract implementation, which can be achieved using Soda.io, a niche data quality framework:

```yaml
# Checks for basic validations
checks for dim_customer:
  - row_count between 10 and 1000
  - missing_count(birth_date) = 0
  - invalid_percent(phone) < 1 %:
      valid format: phone number
  - invalid_count(number_cars_owned) = 0:
      valid min: 1
      valid max: 6
  - duplicate_count(phone) = 0
```

Adjusting the alert settings allows you to configure a check to issue a warning instead of failing the validation. A Soda scan runs the checks defined in an agreement, in a checks YAML file, or inline within a programmatic invocation, and returns a result for each check: pass, fail, or error.
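To show what a few of these checks compute, here is a small Python sketch that evaluates row count, missing values, and duplicates over in-memory rows. It does not use Soda's API: the helper names are hypothetical, the row count bounds are lowered to fit the tiny sample, and duplicate_count is assumed here to mean the number of distinct values that occur more than once.

```python
from collections import Counter
from typing import Dict, List, Optional

Row = Dict[str, Optional[object]]


def missing_count(rows: List[Row], column: str) -> int:
    """Number of rows where the column is absent or None."""
    return sum(1 for row in rows if row.get(column) is None)


def duplicate_count(rows: List[Row], column: str) -> int:
    """Number of distinct non-null values that appear more than once."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return sum(1 for n in counts.values() if n > 1)


def run_checks(rows: List[Row]) -> Dict[str, str]:
    """Evaluate each check and report pass or fail."""
    outcomes = {
        "row_count between 1 and 1000": 1 <= len(rows) <= 1000,
        "missing_count(birth_date) = 0": missing_count(rows, "birth_date") == 0,
        "duplicate_count(phone) = 0": duplicate_count(rows, "phone") == 0,
    }
    return {name: "pass" if ok else "fail" for name, ok in outcomes.items()}


rows = [
    {"phone": "111", "birth_date": "1990-01-01"},
    {"phone": "222", "birth_date": None},
    {"phone": "111", "birth_date": "1985-06-30"},
]

results = run_checks(rows)
for name, outcome in results.items():
    print(f"{outcome}: {name}")
```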

From my experience, the introduction of data contracts in many companies remains fragmented, depending on factors such as data pipeline design patterns (batch or real-time), data serialization choices, and the systems used for storage and processing.

Data contract implementation depends on business requirements and logic.

Great Expectations, a Python library, can be used to implement semantic-level data contracts. It can be installed using pip: pip install great_expectations.

After running great_expectations init, we can proceed with data validation:

```text
Using v3 (Batch Request) API

~ Always know what to expect from your data ~

Let's create a new Data Context to hold your project configuration.

Great Expectations will create a new directory with the following structure:

    great_expectations
    |-- great_expectations.yml
    |-- expectations
    |-- checkpoints
    |-- plugins
    |-- .gitignore
    |-- uncommitted
        |-- config_variables.yml
        |-- data_docs
        |-- validations

OK to proceed? [Y/n]:
```

Consider the code snippet below. It explains how to create a data check definition for the price column:

```json
{
  "expectation_type": "expect_column_values_to_match_regex",
  "kwargs": {
    "column": "price",
    "mostly": 1.0,
    "regex": "^\\$([0-9],)*[0-9]+\\.[0-9]{2}$"
  }
}
```

Data Contract Best Practices

There is no right or wrong answer here as, from my experience, a successful data contract implementation relies heavily on business requirements. To make data contracts work effectively, follow these key best practices:

- Scalability: I recommend adding mechanisms for extensibility (e.g., on_schema_change: append_new_columns) and versioning, which allow modifying data contract terms without disrupting existing pipeline integrations. Design data contracts with an eye toward future changes and scalability. This approach ensures that the contract can adapt to evolving needs and growth.

- Clear rules: I recommend using straightforward and concise language in the data contract to prevent misunderstandings and misinterpretations. The contract should be written and named in a way that is accessible and comprehensible to all involved parties, regardless of their technical expertise.

- Collaboration: The collaborative effort ensures a comprehensive and well-rounded understanding of requirements, addressing various perspectives and needs. When creating data contracts, engage diverse stakeholders, including data producers, data engineers, data scientists, and representatives from business, IT, legal, and compliance departments.

- Metadata: I recommend providing data contracts with thorough documentation and metadata. Comprehensive documentation aids in the smooth application and maintenance of the contract. This should include detailed descriptions, field definitions, validation rules, and other relevant information supporting a clear understanding and effective contract implementation.

- Regular reviews: Ongoing review helps maintain the contract’s relevance and effectiveness over time. Implement a structured approach for monitoring and data contract updates. Regular reviews ensure the contract remains current, aligns with evolving business requirements, and complies with new or changing regulations.

Conclusion

There has recently been a shift toward distributed data ownership, where domain teams are responsible for their data products. This change forced organizations to redefine data quality expectations, formalized through data contracts.

Semantic data validations ensure that data is logically consistent and aligns with the business logic. They help to check data pipelines for any outliers in data, value, lineage, and referential integrity. Inadequate referential integrity can lead to missing or incomplete data, which is why it is also important.

Data governance contracts can also be implemented in CI/CD pipelines and become very useful when they indicate columns with sensitive data, metadata (data owners, etc.), and user roles authorized to access a data product.

A model’s metadata, along with any other mandatory metafields defined by the developer, aids in monitoring the model’s resource usage and performance.

Service Level Agreements (SLAs) within data contracts outline specific commitments related to data freshness, completeness, and failure recovery.

Data contracts are essential to modern data modeling techniques and help ensure the data platform is less prone to errors and scales well. Resolving data quality issues after the fact can be expensive. For enterprise-level companies, maximizing return on investment (ROI) from data becomes increasingly important, and leveraging existing data quality tools that support data contract validation is one way to achieve it.

FAQs

How do data contracts differ from data validation and data testing?

Data contracts are formal agreements specifying the format, schema, and exchange protocols agreed between data producers and consumers. They establish clear expectations and responsibilities for data quality. Data validation and testing, on the other hand, are processes that check whether the data meets these expectations. Validation ensures the data conforms to the defined contract, while testing evaluates the data's accuracy and reliability.

Can data contracts be used in real-time streaming data pipelines?

Yes, data contracts can be implemented in real-time streaming data pipelines. They help filter out invalid data before it reaches its destination, ensuring that only data adhering to predefined rules and formats is processed. This approach is beneficial in event-driven or real-time processing, where data integrity and timeliness are critical.

How do you handle versioning in data contracts when schema changes are frequent?

Handling versioning in data contracts involves maintaining backward compatibility and tracking schema evolution. One approach is to use versioned schemas, where each contract version is associated with a specific schema version. Settings like on_schema_change: append_new_columns in dbt can help manage schema changes without disrupting existing integrations, allowing for gradual transitions and updates.

What role do data contracts play in data mesh architecture?

In a data mesh architecture, data ownership is distributed across domain teams; data contracts ensure consistent data quality and interoperability between domains. They formalize the expectations between data producers and consumers, helping to align teams on data standards and reducing the risk of data quality issues as data flows across different domains.

How can data contracts help comply with privacy regulations like GDPR or HIPAA?

Data contracts can enforce data governance policies, including privacy regulations like GDPR or HIPAA. They can define rules for data masking, pseudonymization, and access controls, ensuring that sensitive data is handled appropriately. By embedding these rules into the contract, organizations can automate compliance checks and reduce the risk of privacy breaches.

Passionate and digitally focused, I thrive on the challenges of digital marketing.

Before moving to the UK, I gained over ten years of experience in sales, corporate banking risk, and digital marketing, developing expertise in risk management, mathematical modeling, statistical analysis, business administration, and marketing.

After completing my MBA at Newcastle, I'm now eager to pursue a career in data-driven marketing, computer science, or AI, with the potential to progress to a PhD. These fields offer the practical application of science, ongoing professional development, innovation, and the opportunity to contribute to a dynamic industry.