
The current generative AI revolution wouldn’t be possible without so-called large language models (LLMs). Based on transformers, a powerful neural architecture, LLMs are AI systems used to model and process human language. They are called “large” because they have billions of parameters (in some cases, more than a trillion), which are pre-trained on a massive corpus of text data.

Start our Large Language Models (LLMs) Concepts Course today to learn more about how LLMs work.

LLMs are the foundation models behind popular and widely used chatbots, such as ChatGPT and Google Gemini. In particular, ChatGPT is powered by GPT-5, an LLM developed and owned by OpenAI.

ChatGPT, Gemini, and many other popular chatbots have one thing in common: their underlying LLMs are proprietary. That means they are owned by a company and can only be used by customers after buying a license. That license comes with rights, but also with possible restrictions on how to use the LLM, and with limited information on the mechanisms behind the technology.

Yet, a parallel movement in the LLM space is rapidly gaining pace: open-source LLMs. Following rising concerns over the lack of transparency and limited accessibility of proprietary LLMs, mainly controlled by Big Tech, such as Microsoft, Google, and Meta, open-source LLMs promise to make the rapidly growing field of LLMs and generative AI more accessible, transparent, and innovative.

This article aims to explore the top open-source LLMs available in 2026. Although it’s been only a few years since the launch of ChatGPT and the popularization of (proprietary) LLMs, the open-source community has already achieved important milestones, with a good number of open-source LLMs available for different purposes. Keep reading to check the most popular ones!

In Summary: The Top Open-Source LLMs in 2026

- GLM 4.6: 200K token context, stronger agentic reasoning and coding, outperforming GLM-4.5 and DeepSeek-V3.1.

- gpt-oss-120B: OpenAI’s 117B-parameter open-weight model with chain-of-thought access, reasoning tiers, and single-GPU deployment.

- Qwen3-235B-Instruct-2507: 1M+ token context, 22B active parameters, state-of-the-art multilingual reasoning and instruction following.

- DeepSeek-V3.2-Exp: Experimental sparse-attention model matching V3.1 performance at far lower compute cost.

- DeepSeek-R1-0528: Reasoning-enhanced upgrade with major gains in math, logic, and coding (AIME 2025: 87.5%).

- Apriel-1.5-15B-Thinker: Multimodal (text+image) reasoning model from ServiceNow delivering frontier-level results on a single GPU.

- Kimi-K2-Instruct-0905: 1T-parameter MoE with 256K context; excels in long-term agentic and coding workflows.

- Llama-3.3-Nemotron-Super-49B-v1.5: NVIDIA’s optimized 49B reasoning model for RAG and tool-augmented chat.

- Mistral-Small-3.2-24B-Instruct-2506: Compact 24B model with upgraded instruction following and reduced repetition errors.


Benefits of Using Open-Source LLMs

There are multiple short-term and long-term benefits to choosing open-source LLMs instead of proprietary LLMs. Below, you can find a list of the most compelling reasons:

Enhanced data security and privacy

One of the biggest concerns of using proprietary LLMs is the risk of data leaks or unauthorized access to sensitive data by the LLM provider. Indeed, there have already been several controversies regarding the alleged use of personal and confidential data for training purposes.

By self-hosting open-source LLMs, companies keep full control of their data and are therefore solely responsible for protecting it.

Cost savings and reduced vendor dependency

Most proprietary LLMs require a license to use them. In the long term, this can be a significant expense that some companies, especially small and medium-sized enterprises (SMEs), may not be able to afford. This is not the case with open-source LLMs, which are normally free to use.

However, it’s important to note that running LLMs requires considerable resources, even for inference alone, which means you will normally have to pay for cloud services or powerful infrastructure.

Code transparency and language model customization

Depending on what their developers release, companies that opt for open-source LLMs can access the inner workings of the models: their weights and architecture and, in some cases, their source code, training data, and training and inference recipes. This transparency is the first step toward scrutiny, but also toward customization.

Because open-source LLMs can be downloaded and modified by anyone, companies using them can customize them, for example through fine-tuning, for their particular use cases.

Active community support and fostering innovation

The open-source movement promises to democratize access to and use of LLMs and generative AI technologies. Allowing developers to inspect the inner workings of LLMs is key to the future development of this technology. By lowering entry barriers for coders around the world, open-source LLMs can foster innovation and help improve the models by reducing biases and increasing accuracy and overall performance.

Addressing the environmental footprint of AI

Following the popularization of LLMs, researchers and environmental watchdogs are raising concerns about the carbon footprint and water consumption required to run these technologies. Providers of proprietary LLMs rarely publish information on the resources required to train and operate their models, or on the associated environmental footprint.

With open-source LLMs, researchers have a better chance of accessing this information, which can open the door to new improvements designed to reduce the environmental footprint of AI.

9 Top Open-Source Large Language Models For 2026

1. GLM 4.6

GLM-4.6 is a next-generation large language model that succeeds GLM-4.5. It is designed to enhance agentic workflows, provide robust coding assistance, facilitate advanced reasoning, and generate high-quality natural language. The model is targeted at both research and production environments, focusing on longer-context comprehension, tool-augmented inference, and writing that aligns more naturally with user preferences.

Source: zai-org/GLM-4.6

Compared to GLM-4.5, GLM-4.6 introduces several key improvements: the context window has been expanded from 128K to 200K tokens, allowing for more complex agentic tasks. The coding performance has also been enhanced, yielding higher benchmark scores and stronger results in real-world applications.

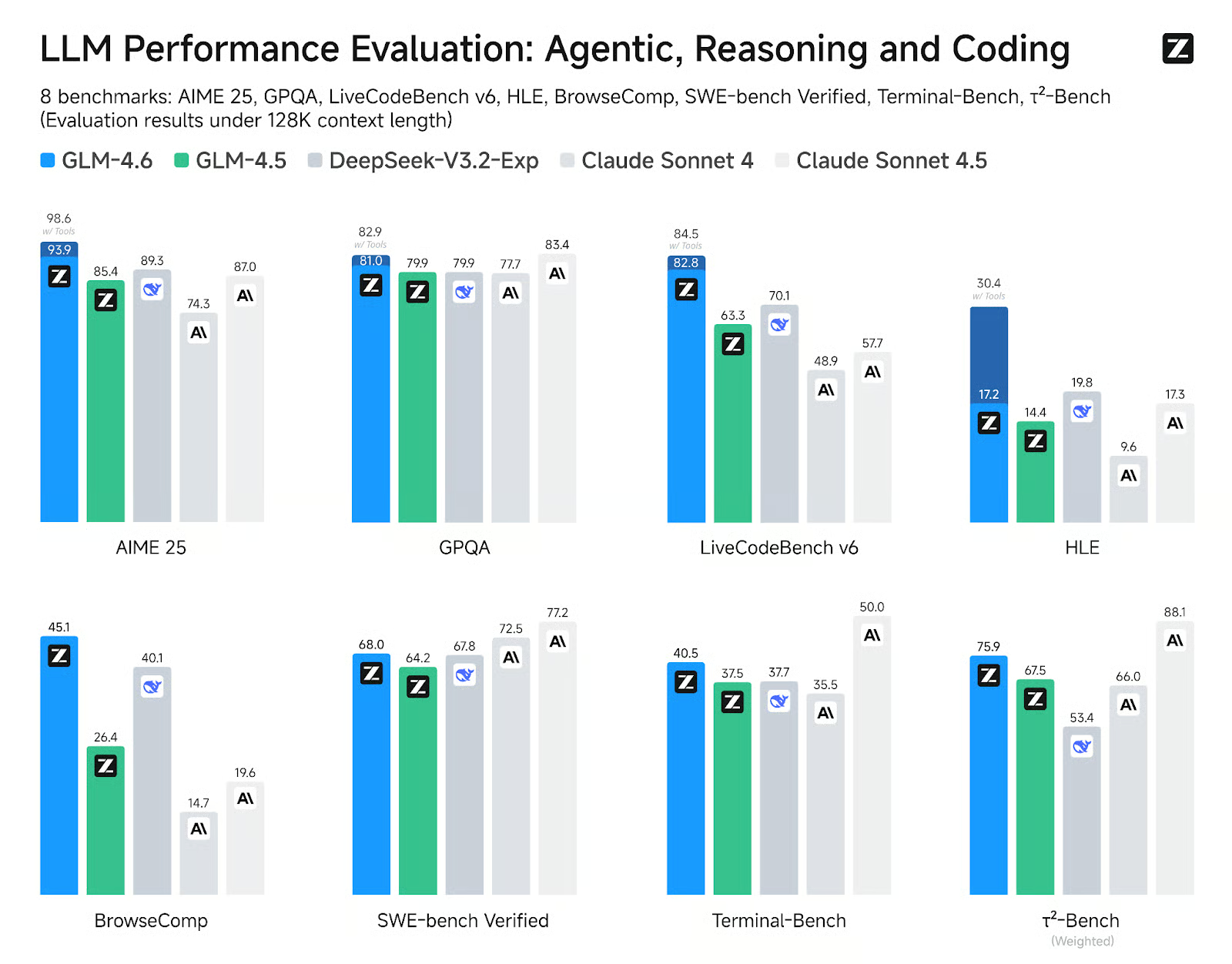

GLM-4.6 shows clear gains across eight public benchmarks related to agents, reasoning, and coding, outperforming GLM-4.5, and demonstrating competitive advantages over leading models such as DeepSeek-V3.1-Terminus and Claude Sonnet 4.

2. gpt-oss-120B

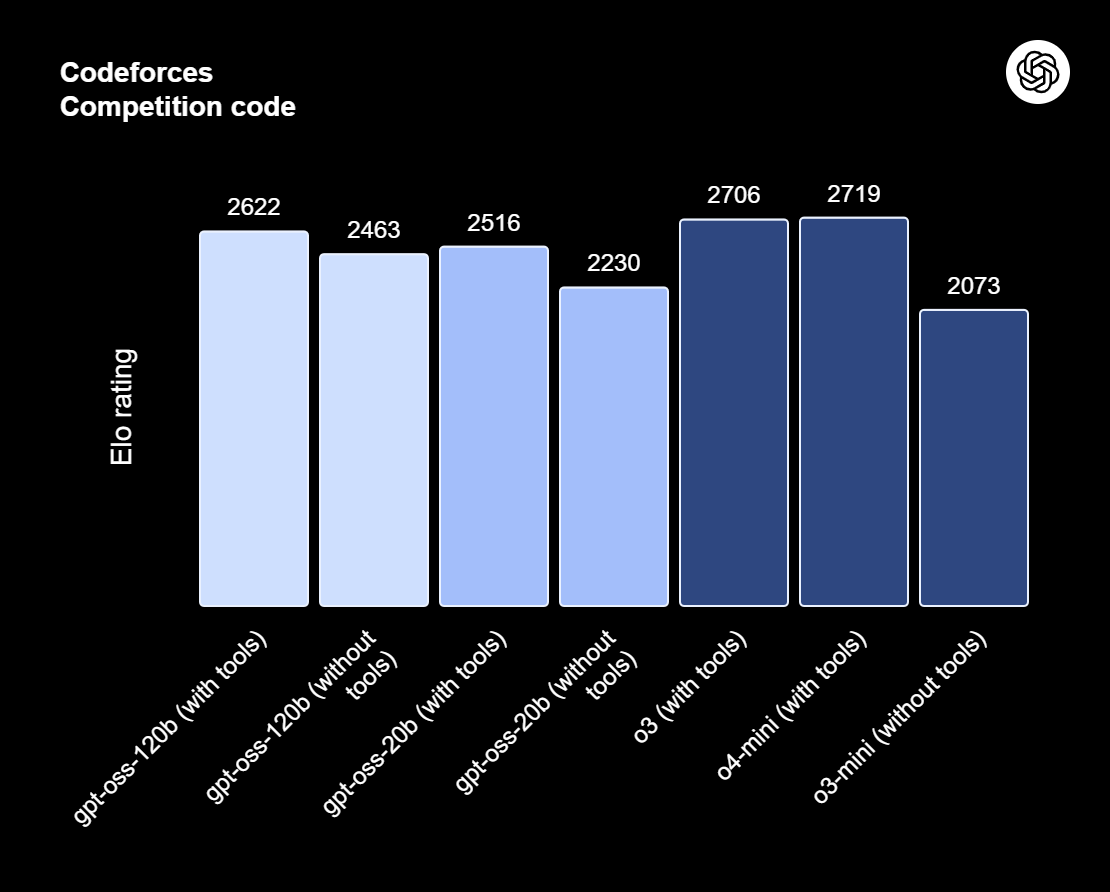

gpt-oss-120b is the flagship of the gpt-oss series, OpenAI’s open-weight models designed for advanced reasoning, agentic tasks, and versatile developer workflows. The series includes two versions: gpt-oss-120b (117 billion parameters, 5.1 billion active), intended for production-grade, general-purpose use cases that require high-level reasoning and able to run on a single 80GB GPU; and gpt-oss-20b (21 billion parameters, 3.6 billion active), optimized for lower latency and local or specialized deployments. Both models were trained on OpenAI’s harmony response format and should be used with it to work correctly.

Source: Introducing gpt-oss | OpenAI

gpt-oss-120b also offers configurable reasoning effort (low, medium, or high) to balance depth and latency. It provides full chain-of-thought access for debugging and auditing. Both models can be fine-tuned and come with built-in agentic capabilities, such as function calling, web browsing, Python code execution, and structured outputs.
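As a minimal sketch of how an application might expose those three levels, the snippet below assumes the convention described in the gpt-oss model card, where the reasoning level is set in the system prompt (for example, `Reasoning: high`), and the role/content message list accepted by Hugging Face `transformers` chat templates. The helper `build_gpt_oss_messages` is hypothetical; verify the exact prompt wording against the current model card before relying on it.

```python
from typing import Literal

# The three reasoning levels described for gpt-oss.
ReasoningEffort = Literal["low", "medium", "high"]
_VALID_EFFORTS = ("low", "medium", "high")


def build_gpt_oss_messages(
    user_prompt: str, effort: ReasoningEffort = "medium"
) -> list[dict[str, str]]:
    """Build a chat message list that requests a given reasoning effort.

    Hypothetical helper: it assumes the effort is signalled with a
    "Reasoning: <level>" line in the system prompt, as the gpt-oss model
    card describes. A chat template is then expected to render these
    messages into the harmony format.
    """
    if effort not in _VALID_EFFORTS:
        raise ValueError(f"effort must be one of {_VALID_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Low effort for a quick lookup, high effort for a harder problem.
    quick = build_gpt_oss_messages("What is the capital of France?", "low")
    deep = build_gpt_oss_messages("Prove that sqrt(2) is irrational.", "high")
    print(quick[0]["content"])
    print(deep[0]["content"])
```

The resulting list could be handed to a chat template or text-generation pipeline. Keeping the effort as a parameter makes it easy to trade reasoning depth for latency on a per-request basis.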

Thanks to MXFP4 quantization of MoE weights, gpt-oss-120b can run on a single 80GB GPU, while gpt-oss-20b can operate within a 16GB environment. Read our article on 10 ways to access GPT-OSS 120B for free.
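A back-of-the-envelope calculation shows why quantization makes the single-GPU claim plausible. The sketch assumes MXFP4 stores about 4.25 bits per weight (4-bit values plus an 8-bit scale shared by each block of 32 values), that all non-MoE parameters stay in 16-bit precision, and an approximate split of about 114.7B MoE versus 2.1B other parameters for gpt-oss-120b, and 19.1B versus 1.8B for gpt-oss-20b. Treat those splits as illustrative figures to check against OpenAI’s model card. The estimate covers weights only; the KV cache and activations need extra headroom.

```python
from dataclasses import dataclass

# MXFP4: 4-bit elements plus one shared 8-bit scale per block of 32 elements.
MXFP4_BITS_PER_WEIGHT = 4 + 8 / 32  # = 4.25
BF16_BITS_PER_WEIGHT = 16
BYTES_PER_GB = 1e9


@dataclass
class ParamSplit:
    """Approximate parameter counts; the split is an illustrative assumption."""
    moe_params: float    # expert (MoE) weights, quantized to MXFP4
    other_params: float  # attention, embeddings, etc., assumed kept in BF16


def weight_memory_gb(split: ParamSplit) -> float:
    """Estimate weight memory in GB (1e9 bytes), ignoring KV cache and activations."""
    moe_bytes = split.moe_params * MXFP4_BITS_PER_WEIGHT / 8
    other_bytes = split.other_params * BF16_BITS_PER_WEIGHT / 8
    return (moe_bytes + other_bytes) / BYTES_PER_GB


def bf16_memory_gb(split: ParamSplit) -> float:
    """The same model stored entirely in BF16, for comparison."""
    total = split.moe_params + split.other_params
    return total * BF16_BITS_PER_WEIGHT / 8 / BYTES_PER_GB


if __name__ == "__main__":
    models = {
        "gpt-oss-120b": (ParamSplit(114.7e9, 2.1e9), 80),  # target: one 80GB GPU
        "gpt-oss-20b": (ParamSplit(19.1e9, 1.8e9), 16),    # target: 16GB of memory
    }
    for name, (split, budget_gb) in models.items():
        quantized = weight_memory_gb(split)
        full = bf16_memory_gb(split)
        print(f"{name}: ~{quantized:.1f} GB with MXFP4 vs ~{full:.1f} GB in BF16 (budget {budget_gb} GB)")
```

Under these assumptions, the weights come to about 65 GB and 14 GB respectively, versus roughly 234 GB and 42 GB in pure BF16, which is consistent with the 80GB and 16GB figures above.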

3. Qwen3 235B 2507

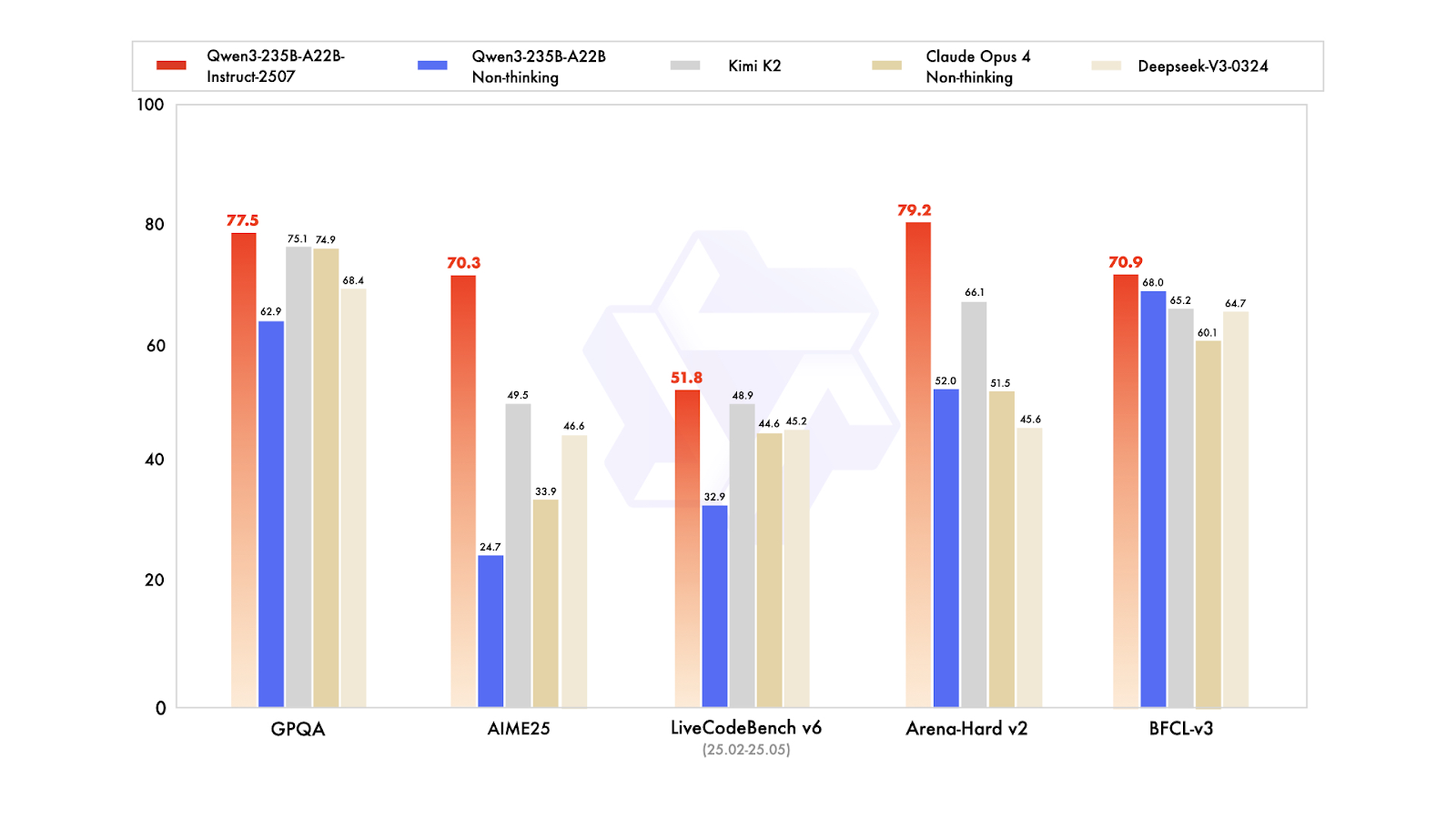

Qwen3-235B-A22B-Instruct-2507 is the flagship non-thinking model in the Qwen3-MoE family, designed for high-precision instruction following, rigorous logical reasoning, multilingual text comprehension, mathematics, science, coding, tool use, and tasks requiring very long contexts. It is a mixture-of-experts (MoE) causal language model with 235 billion total parameters, of which 22 billion are active per token (8 of its 128 experts are used at a time). The model has 94 layers, uses grouped-query attention (GQA) with 64 query heads and 4 key-value heads, and has a native context window of 262,144 tokens, which can be extended to approximately 1.01 million tokens.
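The “128 experts, 8 active” design can be illustrated with a toy top-k router. This is a generic sketch of MoE routing under simplifying assumptions (a softmax router, top-k selection, renormalized weights, and hypothetical experts that just scale their input), not Qwen’s implementation; real models also use load-balancing objectives and run experts as batched feed-forward layers.

```python
import math
import random
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-probability experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return [(i, probs[i] / total) for i in top]


def moe_forward(
    x: Vector, router_logits: Sequence[float], experts: Sequence[Expert], k: int
) -> Vector:
    """Weighted sum of the outputs of the k routed experts; the others are never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


def make_toy_expert(scale: float) -> Expert:
    """Hypothetical stand-in for an expert feed-forward network: it just scales its input."""
    return lambda v: [scale * t for t in v]


if __name__ == "__main__":
    NUM_EXPERTS, TOP_K = 128, 8  # expert counts quoted for Qwen3-235B-A22B
    random.seed(0)
    experts = [make_toy_expert(float(i + 1)) for i in range(NUM_EXPERTS)]
    # In a real model, router logits come from a learned linear layer applied to x.
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    routes = top_k_route(logits, TOP_K)
    print("experts used:", [i for i, _ in routes])
    print("output:", moe_forward([1.0, -2.0, 0.5], logits, experts, TOP_K))
```

Only 8 of the 128 expert functions are called per input, which is the mechanism that lets a 235B-parameter model run with roughly 22B parameters active per token.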

The latest Instruct-2507 update introduces significant improvements in general capabilities and expands long-tail knowledge coverage across multiple languages. It also offers markedly better alignment with user preferences on open-ended tasks, higher-quality writing, and enhanced 256K-token long-context understanding.
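The GQA figures quoted above have a direct effect on memory at long context lengths, because the key-value (KV) cache grows with the number of KV heads rather than query heads. The sketch below estimates the cache for one sequence using the 94 layers, 64 query heads, and 4 KV heads from the text, plus two assumptions: a head dimension of 128 (check the model’s config) and 16-bit cache entries.

```python
BYTES_BF16 = 2


def kv_cache_bytes(
    num_tokens: int,
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    bytes_per_elem: int = BYTES_BF16,
) -> int:
    """KV-cache size for one sequence: one K and one V vector per layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * num_tokens


if __name__ == "__main__":
    LAYERS, Q_HEADS, KV_HEADS = 94, 64, 4  # figures quoted in the text
    HEAD_DIM = 128                          # assumption: check the model config
    NATIVE_CONTEXT = 262_144

    gqa = kv_cache_bytes(NATIVE_CONTEXT, LAYERS, KV_HEADS, HEAD_DIM)
    # Hypothetical comparison: every query head with its own K/V (standard multi-head attention).
    mha = kv_cache_bytes(NATIVE_CONTEXT, LAYERS, Q_HEADS, HEAD_DIM)
    gib = 2**30
    print(f"per token (GQA): {kv_cache_bytes(1, LAYERS, KV_HEADS, HEAD_DIM):,} bytes")
    print(f"full native context, GQA: {gqa / gib:.1f} GiB")
    print(f"full native context, MHA: {mha / gib:.1f} GiB")
```

Under these assumptions, GQA brings a full 262,144-token cache down from about 752 GiB to 47 GiB per sequence, a 16x saving that follows directly from the 64:4 head ratio.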

On public benchmarks, Instruct-2507 delivers strong results that position it as a top-tier non-thinking model, outperforming both the previous Qwen3-235B-A22B non-thinking variant and leading competitors such as DeepSeek-V3, GPT-4o, Claude Opus 4 (non-thinking), and Kimi K2.

You can learn more about Qwen3 in our full article.

4. DeepSeek V3.2 Exp

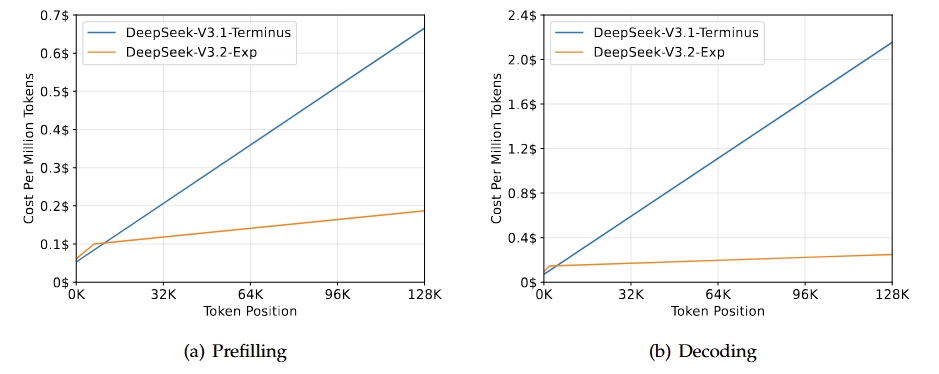

DeepSeek-V3.2-Exp is an experimental, intermediate version leading to the next generation of the DeepSeek architecture. It builds on V3.1-Terminus and introduces DeepSeek Sparse Attention to enhance training and inference efficiency, particularly in long-context scenarios. This model aims to improve transformer efficiency for extended sequences while maintaining the output quality expected from the Terminus lineage.

Source: DeepSeek-V3.2-Exp

The primary outcome of this release is that it matches the overall capabilities of V3.1-Terminus while providing significant efficiency improvements for long-context tasks. Evaluations and third-party analyses show that its performance is comparable to Terminus, with a notable reduction in computational costs. This suggests that sparse attention can improve efficiency without compromising quality.
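To make the idea of sparse attention concrete, here is a simplified top-k variant: a cheap score picks a small subset of past positions, and full softmax attention is computed only over those. DeepSeek’s technical report describes its own mechanism (a “lightning indexer” plus fine-grained token selection); this sketch does not reproduce it and simply takes the selection scores as an input. In the demo, reusing the raw query-key dot product as the selection score is a hypothetical stand-in for a learned indexer.

```python
import math
import random
from typing import Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(
    query: Vector,
    keys: Sequence[Vector],
    values: Sequence[Vector],
    index_scores: Sequence[float],
    k: int,
) -> tuple[list[float], list[int]]:
    """Softmax attention restricted to the k positions with the highest index_scores."""
    n = len(keys)
    if n == 0 or not (len(values) == len(index_scores) == n):
        raise ValueError("keys, values and index_scores must be non-empty and equal length")
    k = max(1, min(k, n))
    # Selection step: only these positions receive full attention.
    selected = sorted(range(n), key=lambda j: index_scores[j], reverse=True)[:k]
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[j]) * scale for j in selected]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - m) for x in logits]
    total = sum(weights)
    out = [0.0] * len(values[0])
    for w, j in zip(weights, selected):
        for d, v in enumerate(values[j]):
            out[d] += (w / total) * v
    return out, selected


def dense_attention(query: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> list[float]:
    """Ordinary attention over every position, for comparison."""
    out, _ = topk_sparse_attention(query, keys, values, [0.0] * len(keys), len(keys))
    return out


if __name__ == "__main__":
    random.seed(0)
    dim, seq_len = 8, 64
    keys = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
    values = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
    query = [random.gauss(0, 1) for _ in range(dim)]
    scores = [dot(query, key) for key in keys]  # hypothetical indexer
    sparse, picked = topk_sparse_attention(query, keys, values, scores, k=8)
    dense = dense_attention(query, keys, values)
    gap = max(abs(s - d) for s, d in zip(sparse, dense))
    print(f"attended to {len(picked)}/{seq_len} positions; max abs difference vs dense: {gap:.3f}")
```

The cost of the attention step now scales with k rather than with the full sequence length, which is where the long-context savings come from; how close the output stays to dense attention depends on how well the selection scores pick out the important positions.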

Read our full guide to DeepSeek-V3.2-Exp to work through a demo project.

5. DeepSeek R1 0528

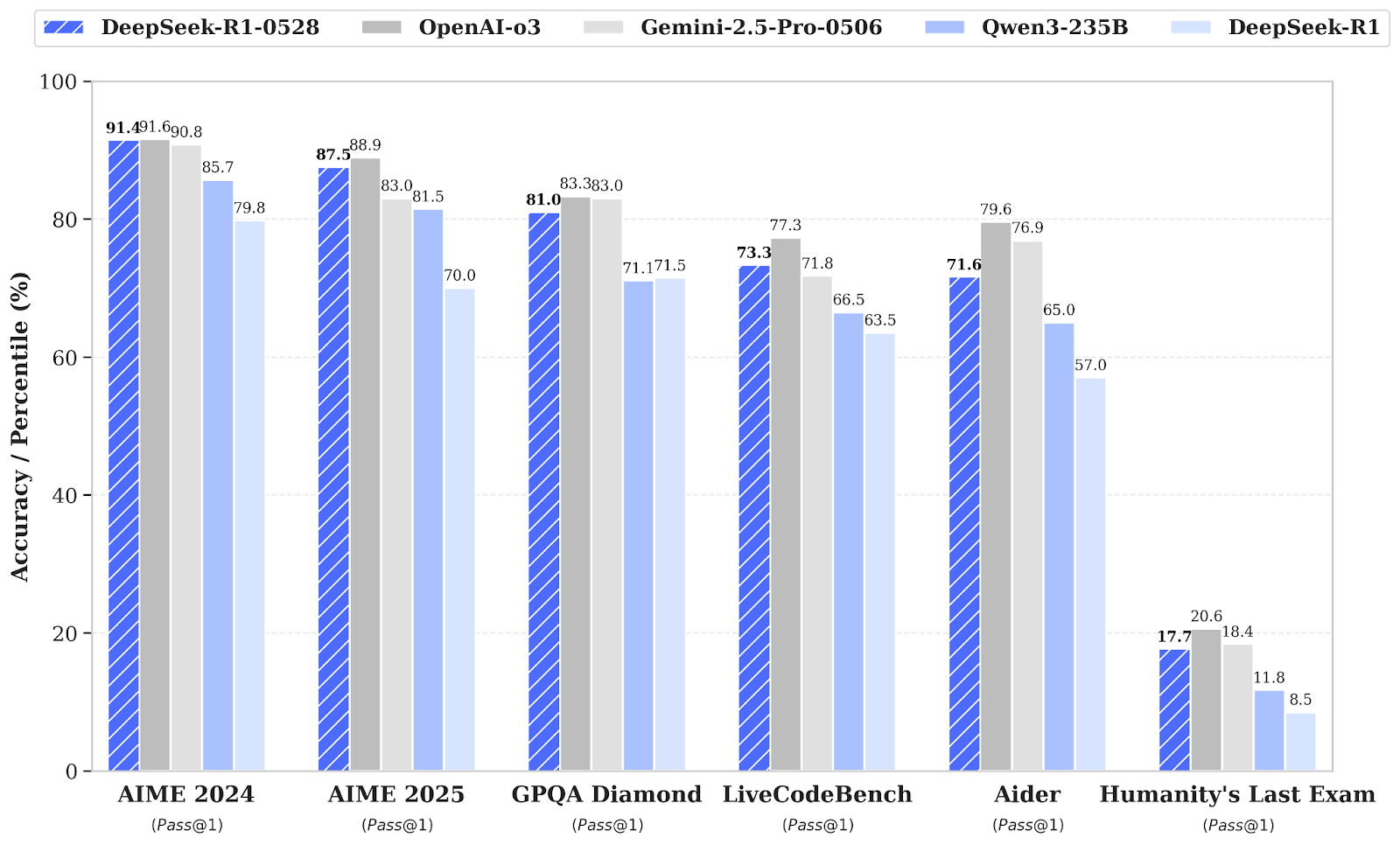

DeepSeek-R1 has received a minor version upgrade to DeepSeek-R1-0528, which enhances its reasoning and inference capabilities through increased computational resources and algorithmic optimizations applied during post-training. As a result, there are significant improvements across areas including mathematics, programming, and general logic, and overall performance now approaches that of leading systems such as o3 and Gemini 2.5 Pro.

In addition to raw capabilities, this update emphasizes practical utility with better function calling and coding workflows, reflecting a focus on producing more reliable and productivity-oriented outputs.

Source: deepseek-ai/DeepSeek-R1-0528

Compared to the previous DeepSeek-R1 version, the upgraded model shows substantial progress in complex reasoning. For instance, on the AIME 2025 exam, accuracy improved from 70% to 87.5%, supported by deeper analytical thinking (the average number of tokens used per question rose from approximately 12,000 to 23,000).

Broader evaluations also show positive trends in areas such as knowledge, reasoning, and coding performance. Examples include improvements on LiveCodeBench, Codeforces ratings, SWE-bench Verified, and Aider-Polyglot, indicating greater problem-solving depth and stronger real-world coding capabilities.

6. Apriel-1.5-15B-Thinker

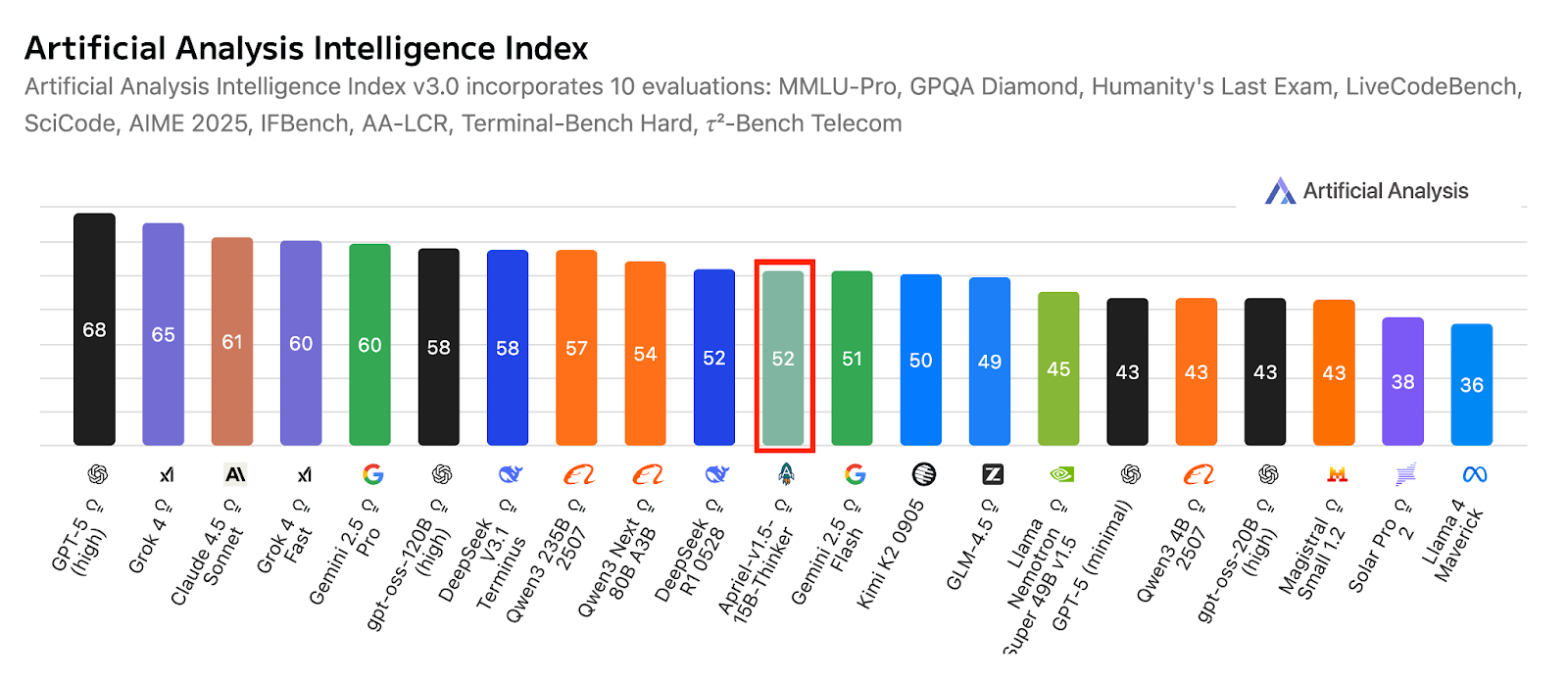

Apriel-1.5-15b-Thinker is a multimodal reasoning model in ServiceNow’s Apriel SLM series. It delivers competitive performance with just 15 billion parameters, aiming for frontier-level results within the constraints of a single-GPU budget. This model not only adds image reasoning capabilities to the previous text-only model but also deepens its textual reasoning abilities.

As the second model in the reasoning series, it has undergone extensive continual pretraining across both text and image domains. The post-training involves text-only supervised fine-tuning (SFT), without any image-specific SFT or reinforcement learning. Despite these limitations, the model targets state-of-the-art quality in text and image reasoning for its size.

Source: ServiceNow-AI/Apriel-1.5-15b-Thinker

Designed to run on a single GPU, it prioritizes practical deployment and efficiency. Evaluation results indicate strong readiness for real-world applications: the model scores 52 on the Artificial Analysis Intelligence Index, which places it competitively against much larger systems while keeping a small-model footprint suited to enterprise use.

7. Kimi K2 0905

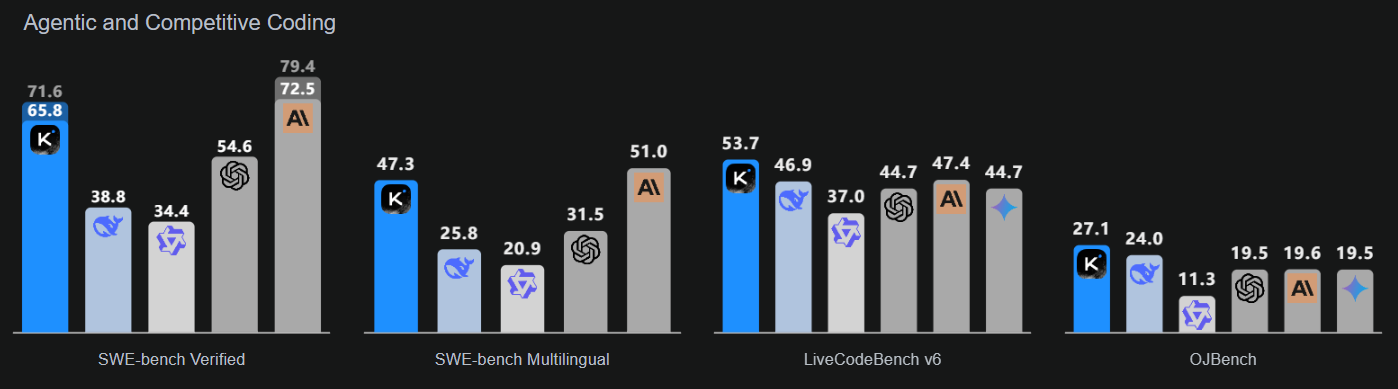

Kimi-K2-Instruct-0905 is the latest and most advanced model in the Kimi K2 lineup. It is a cutting-edge Mixture-of-Experts language model, featuring a total of 1 trillion parameters and 32 billion activated parameters. This model is specifically designed for high-end reasoning and coding workflows.
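Sparse activation is what keeps a 1T-parameter model usable: only a small fraction of its weights take part in each token’s forward pass. The sketch below compares the MoE models in this article using the total and active parameter counts quoted here, plus the common rule of thumb of roughly 2 FLOPs per active parameter per token. It is an order-of-magnitude estimate, not a measured cost, and all weights still have to be stored in memory.

```python
from typing import NamedTuple


class MoEModel(NamedTuple):
    name: str
    total_params: float
    active_params: float


def active_fraction(model: MoEModel) -> float:
    """Share of parameters used for each token."""
    return model.active_params / model.total_params


def approx_forward_flops_per_token(model: MoEModel) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per token (ignores attention over the context)."""
    return 2 * model.active_params


# Parameter counts as quoted in this article.
MODELS = [
    MoEModel("Kimi-K2-Instruct-0905", 1e12, 32e9),
    MoEModel("Qwen3-235B-A22B-Instruct-2507", 235e9, 22e9),
    MoEModel("gpt-oss-120b", 117e9, 5.1e9),
]

if __name__ == "__main__":
    for m in MODELS:
        gflops = approx_forward_flops_per_token(m) / 1e9
        print(f"{m.name}: {active_fraction(m):.1%} of parameters active, ~{gflops:.0f} GFLOPs/token")
```

By this estimate, Kimi K2 uses about 3.2% of its parameters per token, so its per-token compute is closer to that of a dense 32B model than to a dense 1T model.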

K2-Instruct-0905 significantly enhances K2's ability to handle long-horizon tasks with a 256,000-token context window, up from the previous 128,000 tokens. It aims to support robust agent-based use cases, including tool-augmented chat and code assistance. As the flagship release of the K2 Instruct series, it focuses on strong developer ergonomics and reliability for production-quality applications.

Source: Kimi K2: Open Agentic Intelligence

This model emphasizes three key areas: enhanced agentic coding intelligence, with clear improvements on public benchmarks and in real-world coding agent tasks; an improved front-end coding experience, producing interfaces that are better in both aesthetics and practicality; and an extended 256,000-token context length that allows for longer planning and editing loops.

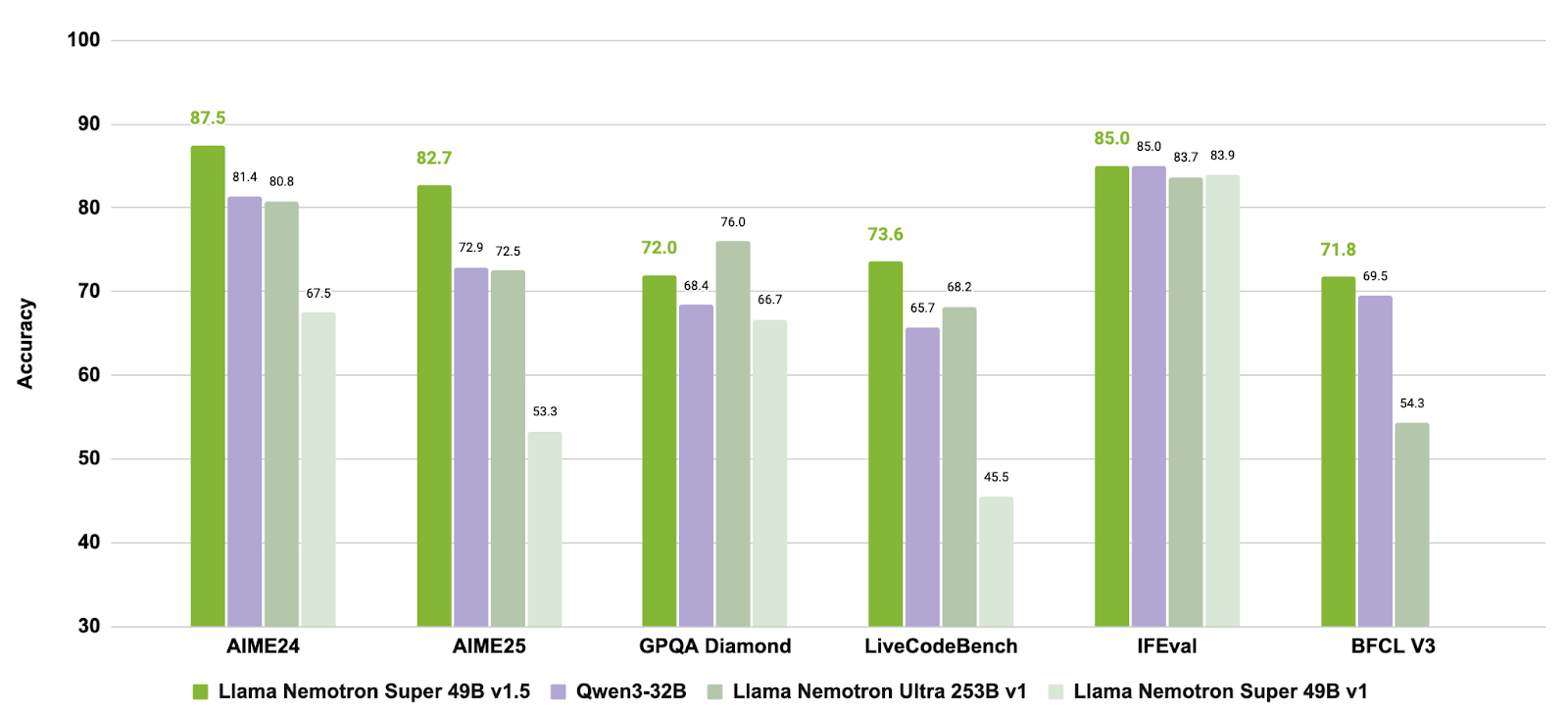

8. Llama Nemotron Super 49B v1.5

Llama-3_3-Nemotron-Super-49B-v1_5 is an upgraded 49 billion parameter model in NVIDIA’s Nemotron line, derived from Meta’s Llama-3.3-70B-Instruct. It is specifically designed as a reasoning model for human-aligned chat and agentic tasks, such as retrieval-augmented generation (RAG) and tool calling.

This model has undergone post-training to enhance its reasoning abilities, preference alignment, and tool usage. It also supports long-context workflows of up to 128,000 tokens, making it suitable for complex, multi-step applications.

Source: nvidia/Llama-3_3-Nemotron-Super-49B-v1_5

By combining targeted post-training for reasoning and agent behaviors with support for long-context tasks, Llama-3.3-Nemotron-Super-49B-v1.5 provides a balanced solution for developers who need advanced reasoning capabilities and robust tool usage without sacrificing runtime efficiency.
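Nemotron Super is aimed at RAG, where retrieved passages are inserted into the prompt ahead of the question, and a 128K-token window leaves room for many passages. The sketch below is a generic, hypothetical illustration of that pattern, not NVIDIA’s pipeline: it ranks passages with a toy word-overlap score (a stand-in for an embedding-based retriever) and packs the best ones into a prompt under a token budget that is approximated by word counts.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, passage: str) -> int:
    """Number of query terms that also appear in the passage (a toy retriever)."""
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[t], p[t]) for t in q)


def build_rag_prompt(query: str, passages: list[str], token_budget: int) -> str:
    """Rank passages, then add them best-first while they fit in the budget.

    Token counts are approximated by word counts; a real system would use
    the model's own tokenizer.
    """
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    header = "Answer using only the context below.\n\nContext:\n"
    footer = f"\nQuestion: {query}\nAnswer:"
    used = len(tokenize(header)) + len(tokenize(footer))
    chosen = []
    for passage in ranked:
        if overlap_score(query, passage) == 0:
            break  # remaining passages share no terms with the query
        cost = len(tokenize(passage))
        if used + cost > token_budget:
            continue  # too long for the remaining budget; try shorter ones
        chosen.append(passage)
        used += cost
    context = "\n".join(f"- {p}" for p in chosen)
    return header + context + footer


if __name__ == "__main__":
    docs = [
        "Llama Nemotron Super 49B supports contexts of up to 128K tokens.",
        "Mistral Small 3.2 is a 24B instruct model.",
        "Grouped-query attention shares key-value heads across query heads.",
    ]
    question = "How many tokens of context does Nemotron Super support?"
    print(build_rag_prompt(question, docs, token_budget=128_000))
```

The same packing loop works with any retriever; a larger context window mainly raises `token_budget`, letting more retrieved evidence reach the model in a single call.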

9. Mistral Small 3.2 2506

Mistral-Small-3.2-24B-Instruct-2506 is a significant upgrade over Mistral-Small-3.1-24B-Instruct-2503: it follows instructions better, makes fewer repetition errors, and provides a more robust function-calling template, while maintaining or slightly improving overall capabilities. As a 24B-parameter instruct model, it is widely available across platforms, including AWS Marketplace.

Source: mistralai/Mistral-Small-3.2-24B-Instruct-2506

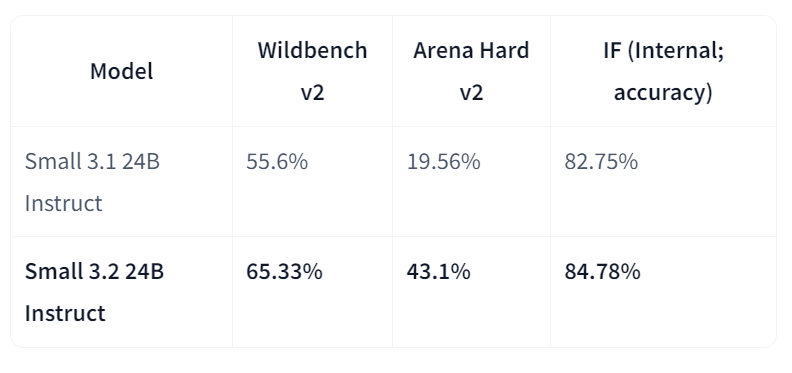

In direct comparisons with version 3.1, Small-3.2 shows clear advances in assistant quality and reliability. It improves instruction-following performance on WildBench v2 (from 55.6% to 65.33%) and Arena Hard v2 (from 19.56% to 43.1%), while internal instruction-following accuracy rises from 82.75% to 84.78%. Repetition failures on challenging prompts drop by nearly 40% (from 2.11% to 1.29%). Meanwhile, STEM performance remains comparable, with MATH at 69.42% and HumanEval+ Pass@5 at 92.90%.
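Because these comparisons mix percentage-point and relative changes, it helps to compute both from the reported figures. The sketch below only recomputes differences from the numbers quoted above and adds no new measurements. For example, going from 2.11% to 1.29% repetition failures is a 0.82-point drop, or about a 39% relative reduction.

```python
from typing import NamedTuple


class Metric(NamedTuple):
    name: str
    before: float  # Mistral-Small-3.1 (2503) score, in %
    after: float   # Mistral-Small-3.2 (2506) score, in %
    higher_is_better: bool = True


# Figures as quoted in the paragraph above.
METRICS = [
    Metric("WildBench v2", 55.6, 65.33),
    Metric("Arena Hard v2", 19.56, 43.1),
    Metric("Internal instruction following", 82.75, 84.78),
    Metric("Repetition failures", 2.11, 1.29, higher_is_better=False),
]


def point_change(m: Metric) -> float:
    """Absolute change in percentage points."""
    return m.after - m.before


def relative_change(m: Metric) -> float:
    """Change relative to the 3.1 score (e.g. -0.39 means 39% lower)."""
    return (m.after - m.before) / m.before


def improved(m: Metric) -> bool:
    """True if the change goes in the desirable direction for this metric."""
    return (point_change(m) > 0) == m.higher_is_better


if __name__ == "__main__":
    for m in METRICS:
        status = "better" if improved(m) else "worse"
        print(f"{m.name}: {point_change(m):+.2f} points, {relative_change(m):+.1%} relative ({status})")
```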

The Top Open-Source LLMs in 2026 Compared

In the table below, you can see a comparison of the top models:

| Model | Key Strengths | Notable Upgrades / Features |
|---|---|---|
| GLM 4.6 | Strong reasoning, agentic workflows, and coding capabilities | Expanded context window from 128K → 200K; improved benchmark performance vs. GLM-4.5 and DeepSeek-V3.1 |
| gpt-oss-120B | Open-weight GPT model for advanced reasoning and agentic tasks | Configurable reasoning depth, chain-of-thought access, function calling, and harmony response format |
| Qwen3-235B-Instruct-2507 | Multilingual, high-precision reasoning and instruction following | 1M+ token context, preference-aligned writing, outperforming GPT-4o and Claude Opus 4 (non-thinking) |
| DeepSeek-V3.2-Exp | Efficient long-context processing via sparse attention | Matches V3.1 performance with reduced compute; optimized transformer efficiency |
| DeepSeek-R1-0528 | Advanced reasoning, math, and programming ability | AIME 2025 accuracy up 17.5 points (70% → 87.5%); enhanced analytical depth and coding reliability |
| Apriel-1.5-15B-Thinker | Multimodal (text + image) reasoning on a single GPU | Continual pretraining across text and image; frontier-level reasoning for compact model size |
| Kimi-K2-Instruct-0905 | High-end reasoning and agent-based coding workflows | 256K-token context, improved developer ergonomics, and tool-augmented task support |
| Llama-3.3-Nemotron-Super-49B-v1.5 | Balanced reasoning and tool-usage model | NVIDIA-tuned for RAG and agentic applications; long-context support up to 128K tokens |
| Mistral-Small-3.2-24B-Instruct-2506 | Compact and reliable instruction following | Repetition failures down from 2.11% to 1.29% (about 39% fewer) and major gains on WildBench v2 and Arena Hard v2 |

Choosing the Right Open-Source LLM for Your Needs

The open-source LLM space is rapidly expanding. Today, there are many more open-source LLMs than proprietary ones, and the performance gap between the two may close soon as developers worldwide collaborate to upgrade current LLMs and design more optimized ones.

In this vibrant and exciting context, it may be difficult to choose the right open-source LLM for your purposes. Here is a list of some of the factors you should think about before opting for one specific open-source LLM:

- What do you want to do? This is the first thing you have to ask yourself. Open-source LLMs are publicly available, but some are released under licenses that restrict them to research or non-commercial use. Hence, if you’re planning to build a commercial product, check each model’s license for possible limitations.

- Why do you need an LLM? This is also extremely important. LLMs are currently in vogue, and everyone is talking about them and their endless opportunities. But if you can build your idea without LLMs, then don’t use them. They’re not mandatory, and avoiding them will probably save you a lot of money and resources.

- How much accuracy do you need? This is an important aspect. In general, larger state-of-the-art LLMs, in terms of parameters and training data, tend to be more accurate, although compact models such as Apriel-1.5-15B-Thinker show that this relationship is not strict. So, if you need the highest accuracy, you may want to opt for bigger LLMs, such as Qwen3-235B-A22B-Instruct-2507 or Kimi-K2-Instruct-0905.

- How much money do you want to invest? This is closely connected with the previous question. The bigger the model, the more resources are required to train and operate it. This translates into additional infrastructure or a higher bill from cloud providers if you want to operate your LLM in the cloud. LLMs are powerful tools, but they require considerable resources, even open-source ones.

- Can you achieve your goals with a pre-trained model? Why invest money and energy in training your own LLM from scratch if you can simply use a pre-trained one? There are many versions of open-source LLMs fine-tuned for specific use cases. If your idea fits one of these use cases, just go for it.

Upskilling Your Team with AI and LLMs

Open-source LLMs aren't just for individual projects or interests. As the generative AI revolution continues to accelerate, businesses are recognizing the critical importance of understanding and implementing these tools. LLMs have already become foundational in powering advanced AI applications, from chatbots to complex data processing tasks. Ensuring that your team is proficient in AI and LLM technologies is no longer just a competitive advantage—it's a necessity for future-proofing your business.

If you’re a team leader or business owner looking to empower your team with AI and LLM expertise, DataCamp for Business offers comprehensive training programs that can help your employees gain the skills needed to leverage these powerful tools. We provide:

- Targeted AI and LLM learning paths: Customizable to align with your team’s current knowledge and the specific needs of your business, covering everything from basic AI concepts to advanced LLM development.

- Hands-on AI practice: Real-world projects that focus on building and deploying AI models, including working with popular LLMs like GPT-4 and open-source alternatives.

- Progress tracking in AI skills: Tools to monitor and assess your team’s progress, ensuring they acquire the skills needed to develop and implement AI solutions effectively.

Investing in AI and LLM upskilling not only enhances your team’s capabilities but also positions your business at the forefront of innovation, enabling you to harness the full potential of these transformative technologies. Get in touch with our team to request a demo and start building your AI-ready workforce today.

Conclusion

Open-source LLMs are an exciting movement. Given their rapid evolution, the generative AI space may not end up monopolized by the big players who can afford to build and use these powerful tools.

We’ve only covered nine open-source LLMs, but the number is much higher and growing rapidly. We at DataCamp will continue to provide information about the latest news in the LLM space, providing courses, articles, and tutorials about LLMs. For now, check out our list of curated materials:

FAQs

What are open-source LLMs?

Open-source large language models (LLMs) are models whose source code and architecture are publicly available for use, modification, and distribution. They are built using machine learning algorithms that process and generate human-like text, and being open-source, they promote transparency, innovation, and community collaboration in their development and application.

Why are open-source LLMs important?

Open-source LLMs democratize access to cutting-edge AI, allowing developers worldwide to contribute to and benefit from AI advancements without the high costs associated with proprietary models. They enhance transparency, fostering trust and enabling customization to meet specific needs.

What are the common challenges with open-source LLMs?

Challenges include high computational demands for running and training, which can be a barrier for individuals or small organizations. Maintaining and updating the models to stay current with the latest research and security standards can also be demanding without structured support.

Are there FREE resources to learn open-source LLMs?

Yes! If you are a university teacher or student, you can use DataCamp Classrooms to get our entire course catalog for FREE, which includes courses in open-source LLMs.


As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.