Artificial Intelligence (AI) is transforming Europe at a staggering pace, with more than a third of European businesses adopting AI in 2023. With this rapid evolution comes the pressing need for robust regulatory frameworks to ensure AI technologies are ethical, safe, and beneficial.

In the US, the Blueprint for an AI Bill of Rights was published, and in China, the Generative AI Measures were released. Now, the EU (European Union) has taken a significant step forward by publishing the EU AI Act.

The EU AI Act is a groundbreaking initiative, proposed by the European Commission and adopted by the European Parliament and the Council of the EU, to regulate the future of AI within Europe.

In this summary guide, we'll cover the EU AI Act, its key provisions, and how it will impact businesses and individuals within the EU and globally. If you’re keen to get hands-on with learning, check out our EU AI Act Fundamentals skill track and sign up for our webinar on Understanding Regulations for AI in the USA, the EU, and Around the World.

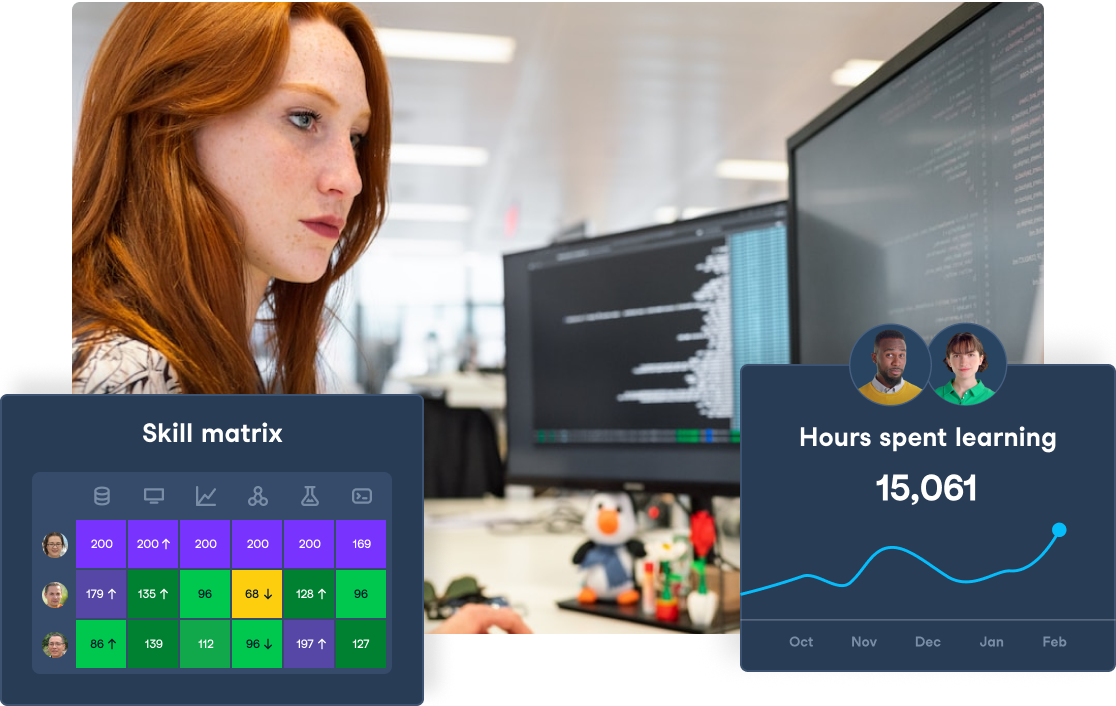

Prepare Your Team for the EU AI Act

Ensure compliance and foster innovation by equipping your team with the AI literacy skills they need. Start building your AI training program with DataCamp for Business today.

What is the EU AI Act?

The EU AI Act is a comprehensive regulatory framework governing the deployment and use of AI systems within the European market. It aims to protect individual rights and ensure the safety and transparency of AI technologies while also fostering innovation across the European Union. By setting stringent standards and compliance requirements, the Act aspires to position the EU as a global leader in AI governance.

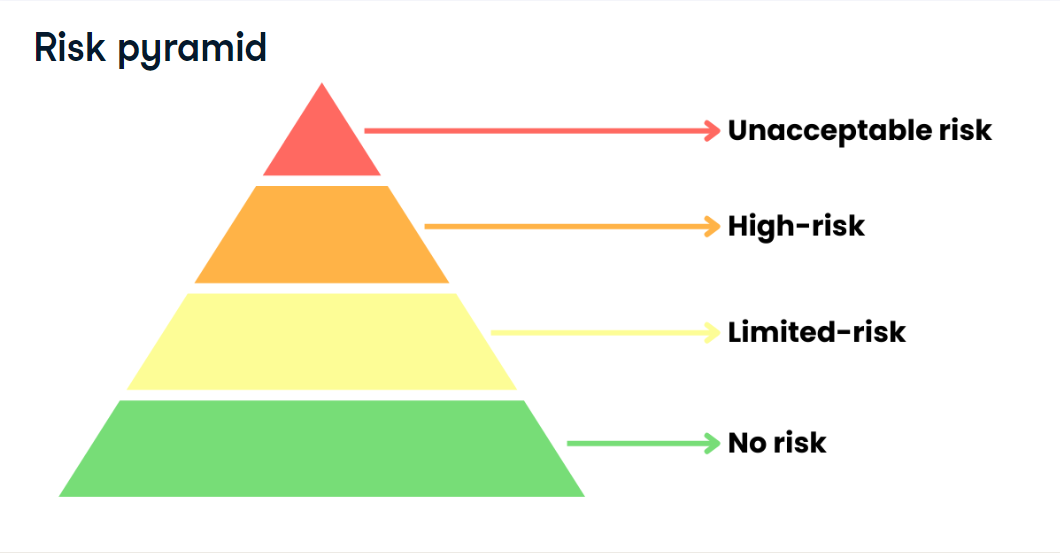

The act classifies AI systems into four risk levels: unacceptable, high, limited, and minimal. It prohibits applications posing unacceptable risks, like government-run social scoring, and imposes strict requirements on high-risk systems. The Act also includes safeguards for general-purpose AI, restrictions on biometric identification, and bans on AI that exploits user vulnerabilities.

The Act entered into force on August 1, 2024, after approval by the European Parliament and EU Council. Its implementation is staggered by type of AI system, with "unacceptable risk" bans applying after six months and general-purpose AI rules after 12 months. Non-compliance can lead to fines of up to €35 million or 7% of global annual turnover, whichever is higher.
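To make the "whichever is higher" rule concrete, here is a minimal sketch of the arithmetic for the top penalty tier. The function name and the use of whole euros are illustrative assumptions, and the result is only the upper bound described above, not a prediction of any actual fine.

```python
# Illustrative only: upper bound of the top penalty tier described above.
# Amounts are whole euros (integers) to avoid floating-point rounding.
FIXED_CAP_EUR = 35_000_000   # EUR 35 million
TURNOVER_PERCENT = 7         # 7% of global annual turnover


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the higher of the fixed cap and 7% of global annual turnover."""
    turnover_based = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover {turnover:>13,} EUR -> ceiling {max_fine_eur(turnover):,} EUR")
```

The two limits meet at a turnover of €500 million: below that, the €35 million figure is the ceiling, and above it, the 7% figure takes over.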

The need for AI regulation

We’ve explored the concept of regulations in AI on the DataFramed podcast on Trust and Regulation in AI with Bruce Schneier. We’ve seen a large shift in the state of AI in recent years, with the pace of change coming at an increasing rate.

As a society, we must keep up with these innovations to ensure that end-users are protected from the risks they pose. We also must consider the ethics of AI and have frameworks in place that ensure its responsible use. The onus should be on the organizations creating and implementing these powerful AI tools to do so ethically and in the public's best interests rather than simply for profit.

As Bruce Schneier, cryptographer, computer security professional, and privacy specialist, explains,

AI pretends to be a person. AI pretends to have a relationship with you. It doesn't. And it is social trust. So, in the same way, you trust your phone, your search engine, your email provider. It is a tool, and, like all of those things, it is a pretty untrustworthy tool. Right? Your phone, your email provider, your social networking platform. They all spy on you, right? They are all operating against your best interest. My worry is that AI is going to be the same, that AI is fundamentally controlled by large for-profit corporations.

Bruce Schneier, Security Technologist

Key Provisions of the EU AI Act

The key components of the EU AI Act are:

1. Risk-based classification

AI systems generally fall into four main risk categories under the EU AI Act:

- Unacceptable risk

- High-risk

- Limited risk

- Minimal/no risk.

According to the European Commission report on the AI Act, each of these is classified according to two main factors:

- Sensitivity of the data involved

- The particular AI use case or application

The requirements vary for each risk category, with most of the text in the act addressing high-risk AI systems.

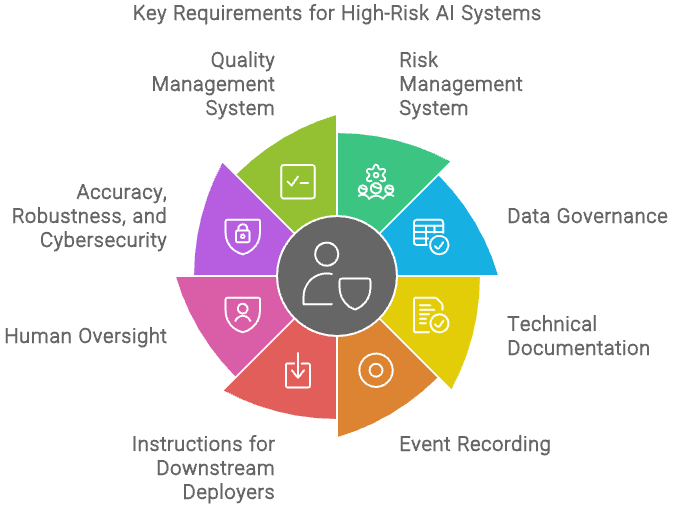

2. Requirements for high-risk AI systems

As for the high-risk category, the EU AI Act imposes requirements that organizations must meet for these systems to be deployed within the EU market.

These include:

- Having a risk management system for the system's lifecycle.

- Building up data governance programs to ensure training, validation, and testing datasets are relevant, sufficiently representative, and, to the best extent possible, free of errors and complete for the intended purpose.

- Drawing up technical documentation that demonstrates compliance and gives authorities the information they need to assess it.

- Designing their system to automatically record events relevant for identifying national-level risks and substantial modifications. This record-keeping must continue throughout the system’s lifecycle.

- Providing downstream deployers instructions for use to enable compliance.

- Designing to allow deployers to implement human oversight.

- Designing to achieve appropriate levels of accuracy, robustness, and cybersecurity.

- Establishing a quality management system for compliance.

These are specified under Compliance with the Requirements (Art. 8–17).
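As a rough illustration of the automatic event recording requirement in the list above, the sketch below writes structured, timestamped records with Python's standard `logging` module. The field names (`event_type`, `model_version`, `details`) and the logger name are hypothetical choices, not terms taken from the Act, and a real system would need to decide which events matter with legal and technical input.

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical logger name; a production system would attach a handler
# that writes to durable, tamper-evident storage.
audit_logger = logging.getLogger("ai_system.audit")


def make_event_record(event_type: str, model_version: str, details: dict) -> str:
    """Build one JSON line describing an event in the system's lifecycle."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": event_type,        # e.g. "prediction", "model_update"
        "model_version": model_version,  # helps trace substantial modifications
        "details": details,
    }
    return json.dumps(record, sort_keys=True)


def log_event(event_type: str, model_version: str, details: dict) -> str:
    """Record the event through the audit logger and return the logged line."""
    line = make_event_record(event_type, model_version, details)
    audit_logger.info(line)
    return line


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    log_event("model_update", "2.1.0", {"reason": "retrained on new data"})
```

Keeping each record as a single JSON line makes the log easy to search later, for example when showing an authority which model version produced a given output.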

Requirements for high-risk AI systems - Created Using Napkin.ai

Additionally, the Act mandates that organizations ensure their staff and others involved in the development or use of AI systems possess sufficient AI literacy. This includes understanding the technology, its applications, and potential risks.

Companies are responsible for providing appropriate training to ensure that AI systems are handled responsibly and ethically, in line with the specific contexts of their use. Later in this article, we’ll explore how DataCamp for Business can help your organization boost its AI literacy levels.

Elevate Your Organization's AI Skills

Transform your business by empowering your teams with advanced AI skills through DataCamp for Business. Achieve better insights and efficiency.

3. Prohibited AI practices

The EU AI Act explicitly prohibits certain practices that are considered unacceptable and pose extreme risks to individuals or society. These include:

- AI systems that exploit vulnerabilities of specific groups (e.g., children, persons with disabilities)

- Social scoring based on AI analysis of behavior

- Use of subliminal techniques to manipulate behaviors

These practices are specified under Prohibited Practices (Art. 5).

Risk Categories Under the EU AI Act

The EU AI Act categorizes AI systems into four risk levels—unacceptable risk, high-risk, limited risk, and minimal or no risk. Each category has distinct requirements and regulatory measures. In general, the higher the risk, the more regulatory obligations.

Source: Introduction to EU AI Act Course, DataCamp

1. Unacceptable risk

AI systems deemed to pose an unacceptable risk to people's safety or rights are prohibited. Examples include AI applications that manipulate human behavior, exploit vulnerabilities, or enable social scoring by governments.

According to Article 5, Prohibited AI Practices, these systems are not allowed to be placed on the market or used in the European Union.

Here's a summary of the prohibited AI practices:

- Deploying AI systems that can manipulate human behavior, decisions, or opinions through subliminal techniques

- Using AI systems to exploit vulnerabilities of particular groups (e.g., children, the elderly, persons with disabilities)

- Deploying social scoring based on AI analysis of behavior

- Using AI models to evaluate or classify natural persons or groups of persons by their social behavior or personal characteristics in ways that lead to detrimental treatment

- Predicting the risk of a natural person committing a criminal offence based solely on profiling or personality traits

- Building or expanding facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage

- Using AI to infer the emotions of a natural person in workplaces and educational institutions

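- Categorizing individual natural persons based on their biometric data to infer sensitive attributes such as race, political opinions, or sexual orientation

- Using 'real-time' remote biometric identification systems in publicly accessible spaces for law enforcement purposes, outside a narrow set of exceptions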

2. High risk

Under the Classification Rules for High-Risk AI Systems (Art. 6), an AI system is high-risk when it is intended to be used as a safety component of a product, or is itself a product, covered by EU product-safety legislation and is required to undergo a third-party conformity assessment.

In addition, any AI system used in one of the following areas listed in Annex III will also be considered a high-risk AI system:

- Biometrics

- Critical infrastructure

- Education

- Employment

- Essential services

- Law enforcement

- Migration

- Justice

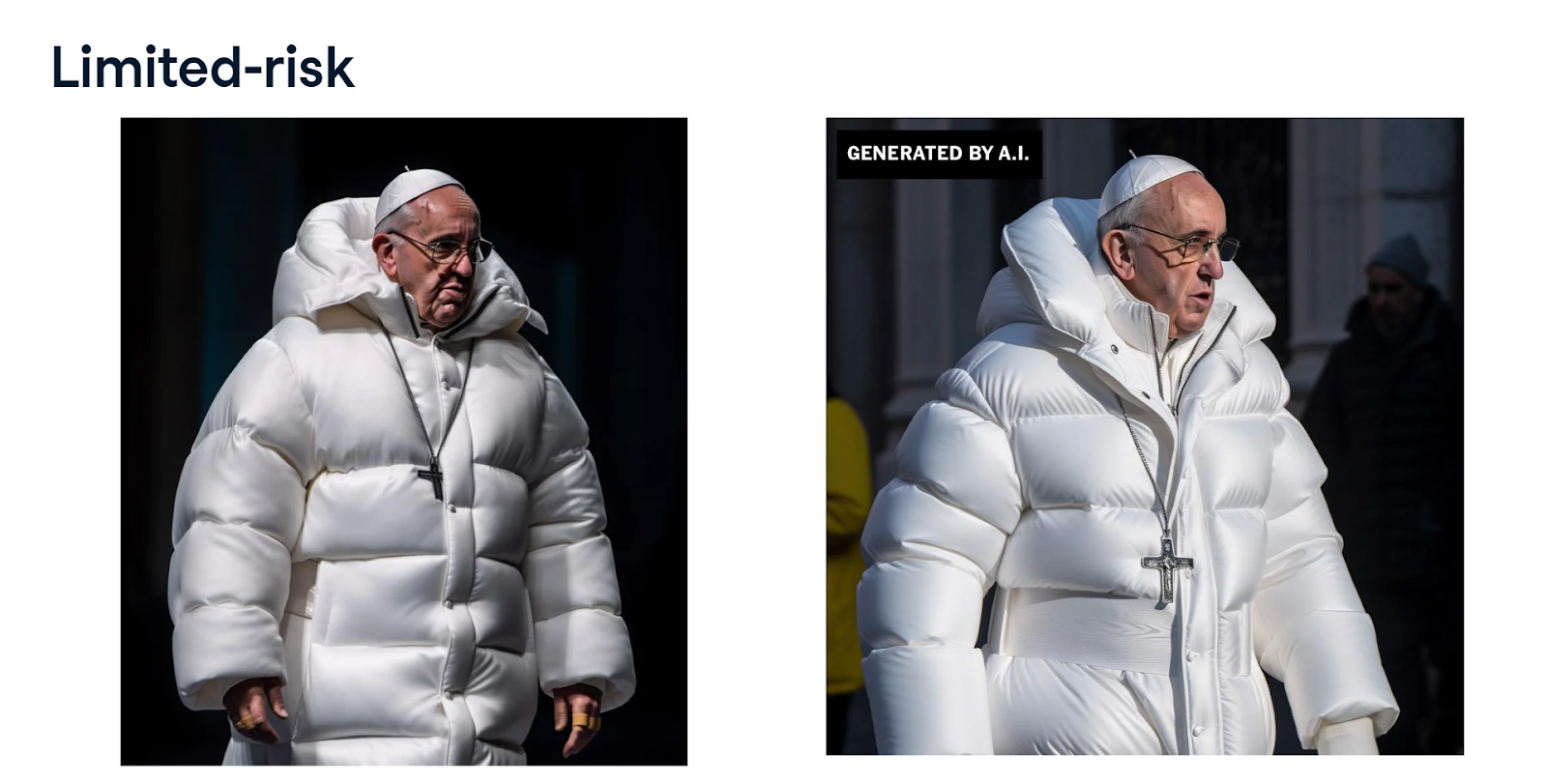
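The tiered logic described so far can be sketched as a first-pass triage function. This is a simplified illustration, not a legal determination: the tag names, the `triage` function, and the idea of reducing a system to three inputs are all assumptions made for the example, and the Act's actual tests contain conditions and exceptions that are not modeled here.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical tags for a few of the prohibited practices (Art. 5).
PROHIBITED_PRACTICES = {
    "social_scoring",
    "subliminal_manipulation",
    "exploiting_vulnerabilities",
}

# Hypothetical tags for the Annex III areas listed above.
ANNEX_III_AREAS = {
    "biometrics", "critical_infrastructure", "education", "employment",
    "essential_services", "law_enforcement", "migration", "justice",
}


def triage(practice: Optional[str], area: Optional[str],
           interacts_with_people: bool) -> RiskTier:
    """First-pass tiering: check the strictest category first."""
    if practice in PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if area in ANNEX_III_AREAS:
        return RiskTier.HIGH
    if interacts_with_people:
        return RiskTier.LIMITED  # transparency obligations apply
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(triage(None, "employment", True))  # RiskTier.HIGH
    print(triage(None, None, True))          # RiskTier.LIMITED
```

Checking the strictest tier first mirrors the principle that higher risk brings more obligations: a system that matches several categories is treated under the most demanding one.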

3. Limited risk

AI systems classified as "limited risk" are those that pose some potential risks to individuals or society but are not considered to be high-risk or unacceptable. These systems do not require the stringent regulatory oversight applied to high-risk AI but must still adhere to specific transparency obligations.

For limited risk AI systems, the Act typically requires providers to ensure that users are aware they are interacting with an AI system. This means, for example, that chatbots or other AI-driven interfaces must clearly disclose that the user is engaging with AI rather than a human. This transparency measure is intended to prevent confusion and ensure users are informed about the nature of the technology they are interacting with.

While limited risk AI systems are subject to these transparency obligations, they are not subjected to the more rigorous compliance requirements that apply to high-risk systems, such as extensive documentation, risk management processes, or mandatory assessments. This category is designed to balance the need for user awareness with the encouragement of innovation by not overburdening developers and providers with heavy regulation.
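As a small illustration of the chatbot disclosure described above, a chat application might prepend a notice to the first reply in a conversation. The wording of the notice and the `with_disclosure` helper are hypothetical; the Act does not prescribe specific text or a specific mechanism.

```python
# Hypothetical disclosure text; the exact wording is a product decision.
AI_DISCLOSURE = "You are chatting with an AI system, not a human."


def with_disclosure(reply: str, is_first_turn: bool) -> str:
    """Prepend the AI disclosure to the first reply of a conversation."""
    if is_first_turn:
        return f"{AI_DISCLOSURE}\n\n{reply}"
    return reply


if __name__ == "__main__":
    print(with_disclosure("Hi! How can I help you today?", is_first_turn=True))
```

Showing the notice once at the start keeps the user informed without repeating it on every message, though some products may choose a persistent label instead.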

AI systems built on general-purpose AI (GPAI) models, such as GPT-4, Midjourney, and Meta’s LLaMA models, are also covered here, although the Act sets out the obligations for GPAI models in their own chapter (Chapter V).

Source: Introduction to EU AI Act Course, DataCamp

A GPAI model is an AI model trained with a large amount of data using self-supervision at scale that displays significant generality and can carry out a wide range of distinct tasks with a variety of integrations with downstream systems or applications.

Regardless of the use cases of these GPAI models, regulations from the EU AI Act apply.

These are the requirements:

- Technical documentation must be recorded.

- Information and documentation are to be supplied to downstream providers.

- Policy to respect the Copyright Directive must be drafted.

- A detailed summary of the content used for training the GPAI model must be written.

More information can be found in Article 53: Obligations for Providers of General-Purpose AI Models.

If the GPAI is found to be a General-Purpose AI Model with Systemic Risk, a different set of obligations is required according to Article 55: Obligations for Providers of General-Purpose AI Models with Systemic Risk.

4. Minimal risk

The minimal risk category is an unregulated category.

Some examples of such AI systems may include:

- AI-enabled video games

- Spam filters

There is no current regulation for any AI systems within this category in the EU AI Act.

Overall Impact on the AI Market

The EU AI Act is expected to have far-reaching implications for individuals and organizations alike. How it applies in practice will also likely be shaped by future EU rulemaking under the Act and by guidance issued by EU regulators.

Here are some possible impacts that may result from the implementation of the EU AI Act:

For ChatGPT/generative AI

For existing large language models with expansive influence over internet content and user behavior, like OpenAI's ChatGPT, Google's Gemini, Meta's Llama 3.1, and Anthropic's Claude, stricter compliance measures might be required to ensure their ethical use. This may lead to more oversight and limitations on how these models can be used, which could affect their popularity and profitability.

Additionally, OpenAI may have to modify ChatGPT if any of its uses fall under the prohibited practices outlined in Article 5 of the EU AI Act.

For businesses

The Act will also bring changes for businesses beyond the providers of such LLMs.

Companies developing or using high-risk AI systems will have to comply with strict requirements and undergo testing, which may lead to increased compliance costs. Businesses must invest more in legal and technical expertise to ensure compliance. Consequently, startups may be hesitant to adopt any AI models involving higher-risk applications due to the compliance costs of AI commercialization.

This high cost of scaling an AI model might concentrate the market among “big tech” companies that can absorb it.

Another impact concerns the protection of trade secrets in companies whose main products are AI models. The Act requires transparency about the data used to train AI models, which is often central to these companies' operations.

Additionally, these strict laws may hinder innovation and slow the entry of new technologies into the European market. For example, Meta withheld its latest multimodal artificial intelligence (AI) model from the European Union, suggesting that regulatory uncertainty can delay product launches in the region.

Despite the challenges, the Act also offers opportunities for innovation. These new and clear standards provide a level playing field for businesses to develop AI technologies that meet ethical and safety benchmarks.

Organizations that prioritize compliance and ethical AI practices can gain a competitive edge, build trust with consumers, and position themselves as leaders in ethical AI. This differentiation can attract socially conscious consumers and investors, enhancing brand reputation and market share.

However, with these changes, the need for AI literacy becomes even greater, as we’ll discuss further down.

For consumers

For consumers, the Act's main benefits are:

- Enhanced transparency and explainability of AI systems

- Greater trust in approved AI products

- Reliable protection from discriminatory AI practices

- Having a channel to address concerns

In the future, consumers may be able to demand compliance with the EU AI Act from companies developing or using high-risk AI systems. This will enable them to safeguard their data privacy and ensure that their personal information is not used in any unethical manner.

For EU member states

EU member states will have to adapt their existing national regulatory frameworks to the new EU AI Act. The EU Commission may also issue more specific guidelines and directives for member states to follow.

As outlined in a recent Reuters report,

"The accelerated uptake of AI last year has helped put Europe on track to meet its Digital Decade goals."

Tanuja Randery, Managing Director at AWS

For global nations

The EU AI Act is likely to shape AI regulation well beyond Europe. As one of the first comprehensive AI regulatory frameworks, it sets a precedent that other regions may follow. Companies operating internationally must stay abreast of these developments to remain competitive.

When is the EU AI Act Effective? Implementation Timeline

Here is the timeline for the implementation of the EU AI Act:

- 1 August 2024: Date of entry into force of the AI Act

- 2 February 2025: Prohibitions on certain AI systems start to apply (Chapter I and Chapter II). This is also when the AI literacy requirements begin to apply.

- 2 August 2025: The following rules start to apply: Notified bodies (Chapter III, Section 4), GPAI models (Chapter V), Governance (Chapter VII), Confidentiality (Article 78), Penalties (Articles 99 and 100)

- 2 August 2026: The remainder of the AI Act starts to apply, except Article 6(1).

- 2 August 2027: Article 6(1) and the corresponding obligations in the Regulation start to apply.

In summary, the act will not be fully implemented until 2027, giving companies and member states time to adapt and comply with the new regulations.

For a complete timeline, visit the EU AI Act Implementation Timeline.
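The staggered dates above lend themselves to a simple lookup. The sketch below, with hypothetical names (`MILESTONES`, `applicable_milestones`) and shortened labels, returns the milestones that have already taken effect on a given date. It only restates the dates listed in this section and is not a substitute for the official timeline.

```python
from datetime import date

# Dates and labels condensed from the timeline in this section.
MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibitions and AI literacy (Chapters I and II)"),
    (date(2025, 8, 2), "Notified bodies, GPAI models, governance, confidentiality, penalties"),
    (date(2026, 8, 2), "Remainder of the Act, except Article 6(1)"),
    (date(2027, 8, 2), "Article 6(1) and corresponding obligations"),
]


def applicable_milestones(on: date) -> list[str]:
    """Return the labels of all milestones in effect on the given date."""
    return [label for start, label in MILESTONES if on >= start]


if __name__ == "__main__":
    for label in applicable_milestones(date(2025, 9, 1)):
        print(label)
```

A compliance team could run a check like this against a project's planned launch date to see which parts of the Act will already apply.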

EU AI Act: AI Literacy Requirements

In addition to regulating how AI models are developed, the EU AI Act also places an emphasis on training staff in AI literacy.

According to Chapter I: General Provisions, Article 4: AI literacy:

"Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education, and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used."

Put simply, this means that companies must ensure that their employees and any other individuals involved in the development or use of AI systems are adequately trained in understanding the technology, its applications, and potential risks.

This sentiment echoes what we’ve seen in the DataCamp State of Data & AI Literacy Report 2024. 62% of leaders we surveyed believe that AI literacy is important for their teams’ day-to-day tasks. Similarly, 70% of leaders identified a basic understanding of AI concepts as most important for their team, emphasizing the necessity for teams to grasp the core principles underlying AI technologies.

Despite this, data and AI training remain nascent in organizations. 26% of leaders report that they do not offer any form of AI training. An additional 26% of leaders point to AI training being reserved for technical roles only, whereas only 18% extend such training to non-technical staff.

Concerningly, only 25% of leaders belong to organizations that have established comprehensive, organization-wide AI literacy programs.

With DataCamp For Business you can start to build an AI literacy program that covers the necessary data and AI skills to make sure you’re compliant with the EU AI Act, whether you’re training a team of 2 or 10,000+. As well as covering how to work with and build AI tools, you can create a comprehensive training program that covers AI ethics, managing risk, and deploying AI models, starting with our EU AI Act Fundamentals skill track.


Conclusion

The EU AI Act represents a significant milestone in the regulation of artificial intelligence. It presents a balance between promoting innovation and ensuring ethical and safe use of AI technology.

While it may pose challenges for businesses, it also offers opportunities for growth and differentiation. For consumers, it provides a framework to trust and hold companies accountable for their use of AI. As for the global community, it sets a precedent for AI regulation that may be adopted by other regions in the future.

Exploring options to bring more exposure to the EU AI Act to your team? Learn more about the new act in our comprehensive Understanding the EU AI Act course and gain a full understanding of how to navigate regulations and foster trust with responsible AI in our EU AI Act Fundamentals skill track.

EU AI Act FAQs

Who governs the EU AI Act?

The European AI Office, established in February 2024 within the Commission, oversees the AI Act's enforcement and implementation with the member states.

When will the EU AI Act enter into force?

The Act entered into force on 1 August 2024. Prohibitions on certain AI systems apply from 2 February 2025; the rules on notified bodies (Chapter III, Section 4), GPAI models (Chapter V), governance (Chapter VII), confidentiality (Article 78), and penalties (Articles 99 and 100) apply from 2 August 2025; the remainder applies from 2 August 2026, except Article 6(1), which applies from 2 August 2027.

What are the potential consequences for non-compliance with the EU AI Act?

Non-compliance with the rules on prohibited AI practices can lead to fines of up to 35 million EUR or 7% of a company's global annual turnover, whichever is higher.

Who will assess the compliance for the EU AI Act?

The EU AI Act states that each member country must have at least one authority responsible for assessing and monitoring AI conformity assessment bodies.

Who will the AI Act apply to?

The EU AI Act will apply to those involved in the operation, distribution or use of AI systems.

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to write for dozens of SaaS companies, inspiring others and sharing my experiences.