
AI is changing our world faster than ever—from breakthroughs in healthcare to self-driving cars, its potential to reshape industries is huge. But with this power come risks like bias, privacy issues, and job displacement. Without the right guardrails, these risks could lead to serious social, economic, and ethical problems.

Countries are tackling AI regulation in very different ways. The EU is taking the lead with detailed laws like the EU AI Act, which classifies AI by risk. The U.S. is more hands-off, letting the industry self-regulate. China, on the other hand, is pursuing rapid AI innovation while keeping strict state control, especially in sensitive areas like surveillance.

These varying approaches show just how hard it is to regulate a technology that crosses borders and industries. In this article, we’ll explore why building effective and balanced AI regulations is essential for ensuring AI benefits society while minimizing harm.

Learn about AI regulations in different markets with our webinar, Understanding Regulations for AI in the USA, the EU, and Around the World.

Prepare Your Team for the EU AI Act

Ensure compliance and foster innovation by equipping your team with the AI literacy skills they need. Start building your AI training program with DataCamp for Business today.

What is AI Regulation?

AI regulation refers to the laws, policies, and guidelines designed to oversee the development, deployment, and use of AI systems. The goal of AI regulation is to ensure that AI technologies are used ethically, safely, and responsibly while minimizing potential risks such as bias, privacy violations, and harm to individuals or society.

AI ethics is the field that studies how to develop and use AI in a way that is fair, accountable, transparent, and respects human values. Read through our AI Ethics: An Introduction blog to understand the short-term and long-term risks of AI.

AI regulation encompasses a broad range of issues, including:

- Data privacy: Ensuring that AI systems handle personal data in compliance with privacy laws.

- Bias and fairness: Preventing AI from perpetuating or amplifying discrimination and biases.

- Transparency: Making AI systems explainable and understandable, so their decisions are clear and accountable.

- Safety: Ensuring AI technologies do not cause harm, particularly in critical sectors like healthcare, finance, and autonomous systems.

- Accountability: Establishing clear responsibility for decisions made by AI systems, including legal frameworks for liability.

- Economic, environmental, and social impacts: Addressing potential job displacement, economic inequalities, and environmental and societal changes resulting from AI.

Follow DataCamp’s AI Fundamentals track to discover the fundamentals of AI, dive into models like ChatGPT, and decode generative AI secrets to navigate the dynamic AI landscape.

Why is AI Regulation Necessary?

AI’s potential comes with significant risks, making regulation a necessity. One of the most pressing concerns is the possibility of harmful outcomes. For example, AI systems used in criminal justice have shown bias, disproportionately affecting marginalized communities. If not properly managed, AI could exacerbate existing social inequalities.

Moreover, the "black box" problem is a challenge unique to AI. Many AI systems operate in ways that even their creators cannot fully explain. Without transparency, it's difficult to understand how these systems make decisions, raising concerns about accountability.

Bias is another critical issue. AI systems are only as good as the data they are trained on, and if that data reflects societal biases, the AI will too. This can lead to unfair outcomes in areas such as hiring, lending, and law enforcement.

In 2023, Forbes reported on a class-action lawsuit against UnitedHealthcare (the largest health insurance provider in the US), alleging the wrongful denial of extended care claims for elderly patients through the use of an AI algorithm.

Make sure to check out our article, AI in Healthcare: Enhancing Diagnostics, Personalizing Treatment, and Streamlining Operations; it explores how AI is influencing the future of healthcare and how businesses can stay afloat with new AI skills and technologies.

Finally, AI could disrupt the job market, displacing workers as automation becomes more prevalent. McKinsey reports that current Generative AI and other AI technologies have the potential to automate work activities that absorb up to 70 percent of employees’ time today.

These shifts will mean that up to 12 million workers in Europe and the United States will need to change jobs. Some workers may need support in reskilling and upskilling to be competitive in the new market. Without a regulatory framework, society may not be prepared for these economic shifts, leading to inequality and instability.

The Current State of AI Regulation

Let’s take a look at what current legislation and regulations are in place to ensure the fair use of AI tools:

The European Union’s AI regulation

The European Union AI Act, proposed by the European Commission in April 2021, is the first comprehensive legal framework aimed at regulating AI within the European Union (EU), with the European AI Office supporting its implementation. Its goal is to mitigate potential risks posed by AI systems while fostering innovation and competitiveness in the European AI market.

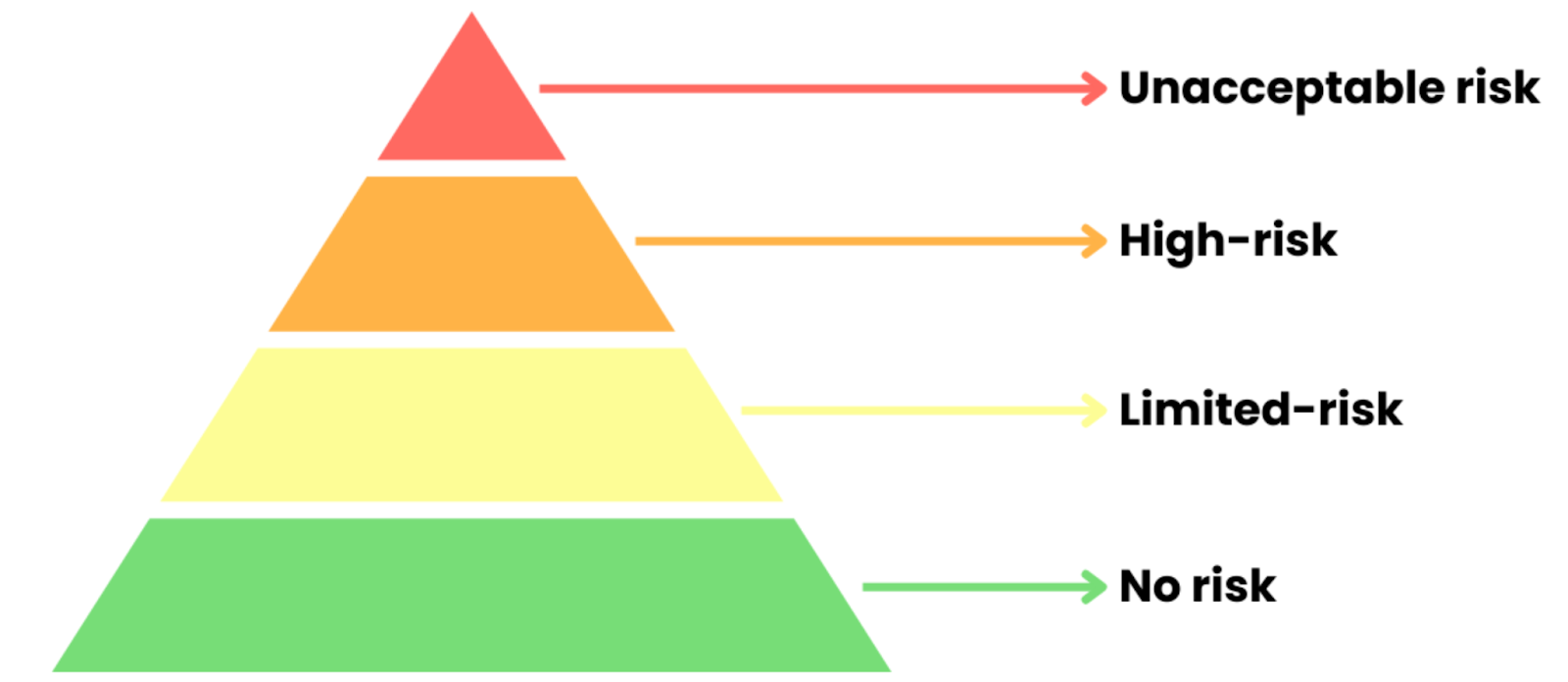

The EU AI Act is structured around a risk-based approach to regulation, which categorizes AI systems based on the level of risk they pose to individuals and society. The Act divides AI systems into four distinct risk categories:

Source: Datacamp Course ‘Understanding the EU AI Act’

- Unacceptable risk: AI systems that threaten safety, rights, or democratic values are banned (e.g., social scoring, harmful manipulation).

- High-risk AI: AI in critical areas like healthcare or law enforcement. Requires strict testing, transparency, and human oversight to meet EU standards before use.

- Limited risk AI: Includes chatbots and virtual assistants. Users must be informed when interacting with AI, but with fewer regulations.

- Minimal risk AI: Low-risk applications like spam filters or gaming AI. Largely exempt from regulation.
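
To make the four tiers concrete, here is a minimal Python sketch that encodes them as a lookup table. The `RiskTier` enum, the `EXAMPLE_TIERS` and `OBLIGATIONS` mappings, and the `obligations_for` helper are hypothetical names introduced for illustration. The use cases echo the examples in the list above; a real classification depends on the Act's legal text and the system's intended purpose, not on a simple lookup.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping based on the examples in the list above (not legal advice).
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "medical diagnosis support": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

# One-line summary of what each tier implies, paraphrasing the list above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "strict testing, transparency, and human oversight before use",
    RiskTier.LIMITED: "users must be informed they are interacting with AI",
    RiskTier.MINIMAL: "largely exempt from regulation",
}


def obligations_for(use_case: str) -> str:
    """Return the summary obligation for a known example use case."""
    tier = EXAMPLE_TIERS.get(use_case.lower())
    if tier is None:
        raise KeyError(f"No example tier recorded for {use_case!r}")
    return f"{tier.value} risk: {OBLIGATIONS[tier]}"


print(obligations_for("Spam filter"))
```

Keeping the tier and its obligations in separate tables means a system can be reclassified without touching the obligation text, which mirrors how the Act attaches duties to tiers rather than to individual products.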

Make sure to follow our EU AI Act Fundamentals to master the EU AI Act and AI fundamentals. In the course you will learn to navigate regulations and foster trust with Responsible AI.

The EU AI Act places a strong focus on transparency, especially for Generative AI systems like ChatGPT that create content and engage directly with users. Depending on how they are used, these systems could be classified as either high-risk or limited-risk under the Act. One key requirement is that users must be clearly informed when they’re interacting with an AI. For generative models, this means:

- AI disclosure: Developers must clearly state when content is generated by AI. This helps users understand they’re communicating with a machine, not with a person.

- Explainability: Although generative AI systems can be complex, the EU AI Act demands a certain level of clarity. Those providing AI models need to provide enough information so users and regulators can grasp how the system works and makes decisions. This includes sharing details on the underlying algorithms and training data when necessary.

To enforce these rules, the EU is setting up supervisory bodies at both the national and EU levels to monitor compliance. These bodies can audit AI systems, investigate violations, and impose penalties. The 2021 proposal set the ceiling at €30 million or 6% of global revenue, whichever is higher; the adopted Act raises it to €35 million or 7% of global annual turnover for the most serious violations.
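
The "whichever is higher" rule is simple arithmetic: the ceiling is the larger of a fixed amount and a share of global revenue. The sketch below takes both as parameters so you can plug in the cap and percentage quoted above. `penalty_ceiling` and `crossover_revenue` are hypothetical helper names, and the cap, percentage, and revenue figures in the example are round placeholders, not the statutory values.

```python
def penalty_ceiling(revenue_eur: int, fixed_cap_eur: int, share_percent: int) -> float:
    """Return the larger of the fixed cap and the given share of revenue."""
    revenue_based = revenue_eur * share_percent / 100
    return max(float(fixed_cap_eur), revenue_based)


def crossover_revenue(fixed_cap_eur: int, share_percent: int) -> float:
    """Revenue above which the percentage-based amount exceeds the fixed cap."""
    return fixed_cap_eur * 100 / share_percent


# Placeholder inputs for illustration only; substitute the cap and percentage that apply.
CAP_EUR = 10_000_000
SHARE_PERCENT = 5

for revenue in (50_000_000, 1_000_000_000):
    ceiling = penalty_ceiling(revenue, CAP_EUR, SHARE_PERCENT)
    print(f"revenue €{revenue:,} -> ceiling €{ceiling:,.0f}")

print(f"crossover at €{crossover_revenue(CAP_EUR, SHARE_PERCENT):,.0f}")
```

The crossover point shows why the rule is written this way: below it, the fixed amount sets the ceiling, while for larger companies the percentage takes over and the ceiling scales with revenue.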

To learn more about the EU AI Act, make sure to follow our Understanding the EU AI Act course, where you will get to understand AI risk categories and compliance, with examples in biometric AI, education, and general-purpose models.

AI regulation in the United States

The United States adopts a more flexible, sectoral approach to AI regulation. While there is no comprehensive federal AI law, several existing laws, proposed bills, and guidelines at both federal and state levels are shaping the governance of AI systems.

Existing federal laws and regulations

Here are the laws and regulations that are currently in place in the US:

Federal Trade Commission (FTC) Act

The FTC has broad authority to protect consumers from unfair or deceptive practices. This includes regulating AI systems that could potentially lead to discriminatory practices or misleading outcomes, particularly in advertising and consumer data usage.

Civil rights laws

Various civil rights laws prohibit discrimination in employment, housing, and lending. These laws are relevant to AI applications that might inadvertently perpetuate bias, such as algorithms used for hiring or credit scoring. Agencies like the Equal Employment Opportunity Commission (EEOC) are increasingly scrutinizing AI systems for compliance with these laws.

Health Insurance Portability and Accountability Act (HIPAA)

In healthcare, AI applications must comply with HIPAA regulations regarding the privacy and security of health information. This includes ensuring that any AI used in patient care respects confidentiality and patient rights.

Proposed federal legislation

There are also bills and policy frameworks that have been proposed but are not yet law:

Algorithmic Accountability Act

Proposed in 2022, this bill seeks to require companies to assess the impact of automated decision-making systems on consumers. It mandates audits for algorithms used in high-stakes areas to identify and mitigate bias.

AI Bill of Rights

In late 2022, the Biden administration released a non-binding blueprint for an ‘AI Bill of Rights’, outlining principles for the responsible use of AI, including fairness, transparency, and the right to appeal automated decisions.

State-level regulations

At the state level, various jurisdictions have taken the initiative to implement their own AI regulations, often focusing on data privacy and bias mitigation:

California Consumer Privacy Act (CCPA)

This landmark legislation gives consumers the right to know how their personal information is collected, used, and shared. It directly impacts AI systems that utilize personal data, requiring transparency and consumer consent.

New York City Automated Employment Decision Tools Law

Effective in 2023, this law mandates that companies using automated tools for hiring must conduct annual bias audits to assess the impact of their AI systems on different demographic groups.
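
A bias audit of this kind typically compares selection rates across demographic groups. Below is a minimal sketch, assuming the common "impact ratio" metric (each group's selection rate divided by the highest group's rate). The group labels, applicant counts, and the `selection_rates` and `impact_ratios` helpers are hypothetical, and the 0.8 threshold is the EEOC's four-fifths rule of thumb, used here only as an illustrative flag rather than a requirement of the law described above.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to (candidates selected) / (candidates assessed)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("No group has any selected candidates")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical audit data: group -> (candidates selected, candidates assessed)
audit_data = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (45, 100),
}

for group, ratio in impact_ratios(audit_data).items():
    flag = "review" if ratio < 0.8 else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

A ratio well below 1.0 does not prove discrimination on its own, but it tells the auditor which groups deserve a closer look.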

Sector-specific regulations

As well as federal and state-level regulations, there are also some industry-specific AI regulations in place:

Autonomous vehicles

The U.S. Department of Transportation has issued guidelines for the testing and deployment of autonomous vehicles, emphasizing safety and accountability. These guidelines require companies to demonstrate that their self-driving technologies meet safety standards before they can be deployed on public roads.

Healthcare

AI applications in healthcare, such as diagnostic tools and treatment recommendations, are subject to scrutiny from the Food and Drug Administration (FDA), which is working to establish regulatory pathways for AI technologies to ensure they are safe and effective.

China’s approach to AI regulation

China’s approach to AI governance is state-led, balancing rapid innovation with tight regulation. It’s all about maintaining strong government control while ensuring security and promoting ethical use.

The framework includes a range of policies and guidelines that shape how AI is developed and used. Key features include:

Centralized governance

The Chinese government, through various ministries such as the Ministry of Science and Technology, plays a crucial role in regulating AI. This centralized control enables consistent enforcement of regulations across different sectors and regions.

Legislation and guidelines

Significant laws such as the Data Security Law and the Personal Information Protection Law set clear standards for data handling in AI applications. Additionally, the AI Ethical Guidelines outline principles that AI systems must adhere to, emphasizing human rights, privacy, and security.

Focus on national security

The regulatory landscape strongly emphasizes national security, with AI applications subjected to rigorous assessments to ensure they do not threaten public order or state security. This oversight is particularly relevant for technologies that could impact societal stability or governance.

The centralized governance model raises concerns about transparency and accountability, with limited public scrutiny potentially allowing unchecked government power in the AI space.

In 2023, China introduced the Interim Measures for the Administration of Generative Artificial Intelligence Services, which set forth a framework for the development, deployment, and use of generative AI technologies. Some of its standout features are:

- Mandatory security checks: Companies need government approval before launching content-generating AI, ensuring systems don’t pose risks to national interests or stability.

- Strict content monitoring: Generative AI outputs are closely regulated to prevent misinformation or content that disrupts social harmony, using censorship where necessary.

- High compliance standards: Companies must be transparent about their algorithms, data sources, and processes to meet strict regulations, enhancing accountability and preventing misuse.

As AI transforms industries, understanding its ethical and business risks is critical. Companies must not only leverage AI’s potential but also ensure it’s used responsibly and transparently.

In our webinar, “Understanding Regulations for AI in the USA, the EU, and Around the World,” Shalini Kurapati (CEO, Clearbox AI) and Nick Reiners (Senior Analyst, Eurasia Group) cover key AI policies, regulatory trends, and compliance strategies. You’ll learn how new regulations impact businesses and get practical tips for navigating AI governance, with a focus on the USA, EU, and global frameworks.

The Future of AI Regulation

Now that we understand the current landscape of regulations surrounding AI, it’s time to look at what’s next. As these tools continue to develop, our laws and regulations must evolve alongside them.

The need for international cooperation and a global regulatory framework

AI is a global technology, and effective regulation requires international cooperation. AI systems frequently cross borders, making it difficult for any single country to regulate them comprehensively.

AI applications in areas such as autonomous vehicles, healthcare, finance, and security often involve cross-border data flows, raising concerns about privacy, data protection, and ethical standards. This global nature necessitates a coordinated approach to AI governance. The goals of international cooperation include:

- Standard alignment: Establish shared principles like transparency, accountability, and fairness to create consistent global guidelines for AI use.

- Data privacy and protection: Set common rules for cross-border data sharing to protect privacy and ensure ethical AI applications while respecting national laws.

- Tackling AI bias: Collaborate globally to share best practices, detect bias, and develop strategies for fairer AI systems and equitable outcomes.

Check out our course, Responsible AI Data Management, where you can learn the fundamentals of responsible data practices, including data acquisition, key regulations, and data validation and bias mitigation strategies. You can apply these skills to use critical thinking on any data project, ensuring you have a successful, responsible, and compliant project from start to finish.

Challenges to a global AI regulatory framework

Despite the need for international cooperation, creating a unified global framework for AI regulation faces several challenges:

- Diverging national priorities: Different countries prioritize different aspects of AI regulation—e.g., the US focuses on innovation, the EU on privacy, and China on state control—making a unified global approach difficult.

- Geopolitical tensions: Rivalries between major AI players like the US and China lead to competing frameworks and concerns about technology transfer and national security, complicating cooperation.

- Ethical and cultural differences: Varied views on surveillance, data privacy, and human rights make it challenging to create universal ethical standards for AI regulation.

Efforts toward a global AI framework

Despite challenges, there are ongoing efforts to promote international cooperation in AI governance:

United Nations and AI for Good

The United Nations has initiated discussions on global AI regulation through forums like AI for Good, which brings together experts, policymakers, and industry leaders to explore AI’s potential benefits and risks. These discussions aim to shape international norms for AI development, particularly in areas like sustainability and human rights.

Global Partnership on AI (GPAI)

Launched in 2020, GPAI is an international initiative that promotes the responsible development of AI through cross-border cooperation. It includes leading AI countries such as Canada, France, Japan, and India, among others, aiming to foster best practices, share data, and encourage innovation while upholding ethical standards.

AI Safety Summit

The UK hosted the first global AI Safety Summit in November 2023. It brought together global leaders, tech experts, and policymakers to address the critical challenges of ensuring the safe and ethical development of AI. The event underscored the importance of creating frameworks for AI safety, with a particular focus on aligning the development of AI with global security and ethical standards, while still fostering innovation.

Without public pressure for stronger ethical standards and transparency, companies may put profits over safety, and governments may avoid regulation until harm is evident. But when people demand accountability, it pushes lawmakers to act and create policies that ensure AI benefits society rather than undermining privacy or fairness.

As Bruce Schneier says on our DataFramed episode on Trust and Regulation in AI:

Nothing will change unless government forces it, and government will not force it unless we, the people, demand it.

Bruce Schneier, Security Technologist

The need for upskilling and reskilling

It’s not just legislation that needs updating in the wake of AI; the workforce must also adapt. As automation and intelligent systems take over routine tasks, new skill sets are becoming essential.

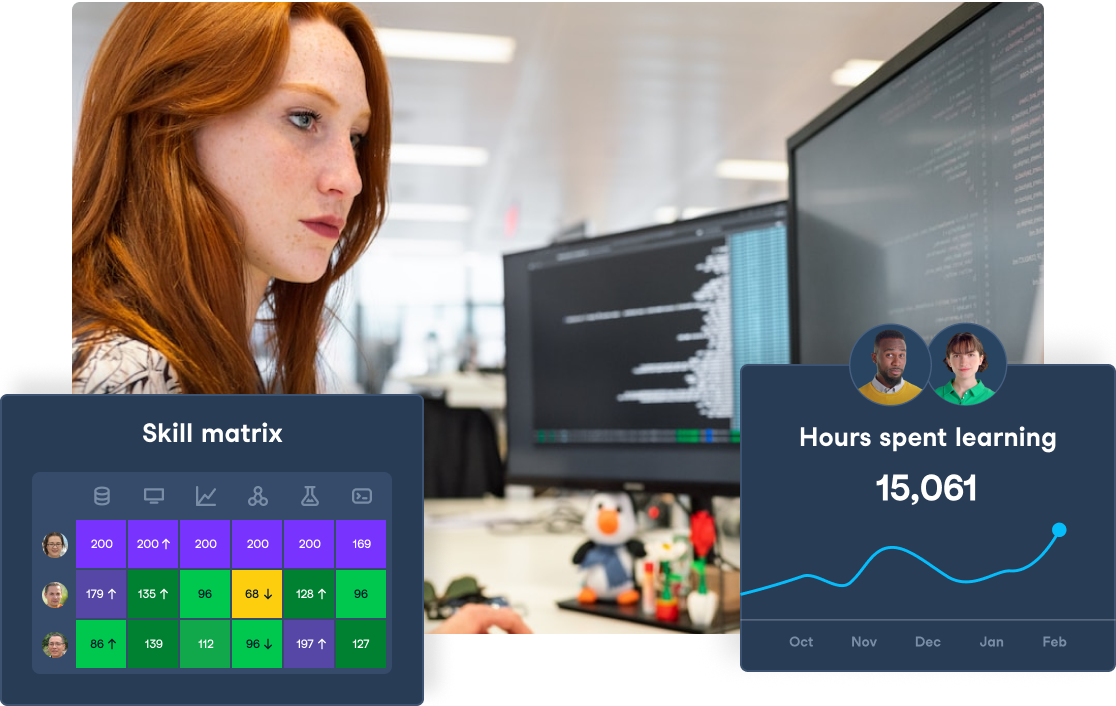

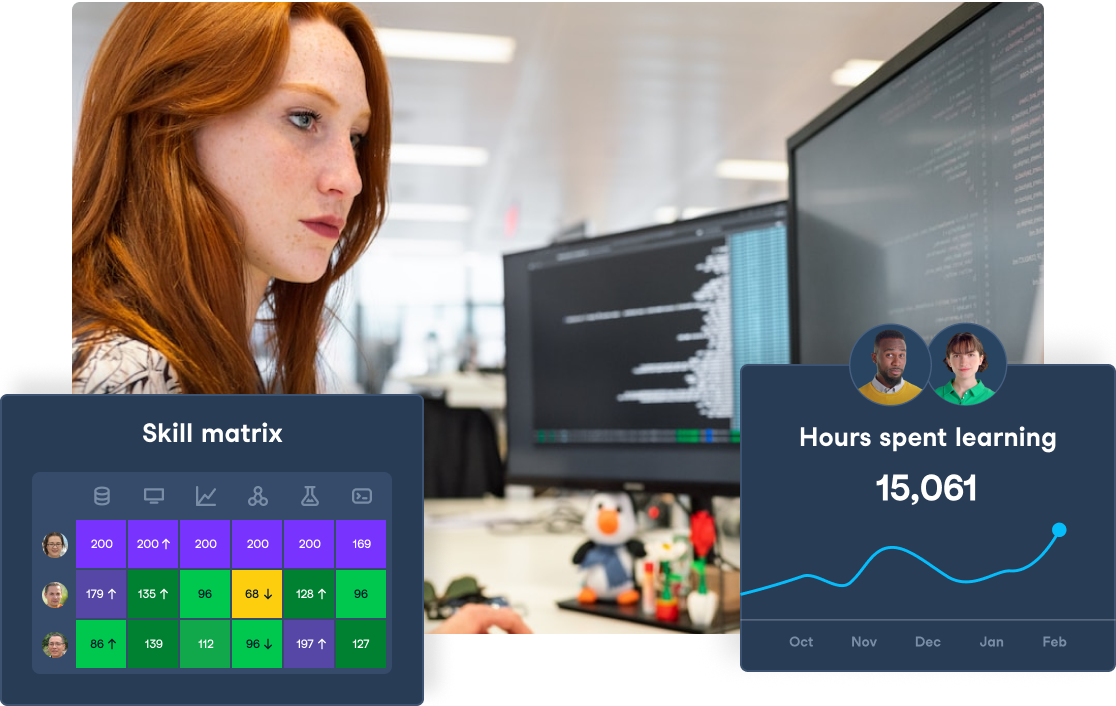

As we highlight in the State of Data & AI Literacy Report, this transition places a growing emphasis on equipping the workforce with AI literacy, technical proficiency, and data fluency to thrive in the evolving job landscape.

62% of leaders believe that AI literacy is now important for their teams’ day-to-day tasks. For organizations, the challenge is not only keeping up with technological change but also ensuring their teams are equipped to leverage these tools responsibly and strategically.

This requires a structured approach to learning—one that spans basic AI concepts, ethical considerations, and advanced technical training.

Organizations that invest in upskilling and reskilling are better positioned to mitigate job displacement, foster innovation, and remain competitive. Comprehensive learning programs that address these needs can bridge the gap, preparing both technical and non-technical teams to understand, deploy, and govern AI systems effectively.

DataCamp for Business can help your teams upskill in AI, no matter the scale of your organization. With custom learning paths, detailed reporting, and a dedicated success team, you can reach your AI goals in no time.

Elevate Your Organization's AI Skills

Transform your business by empowering your teams with advanced AI skills through DataCamp for Business. Achieve better insights and efficiency.

Conclusion

Regulating AI is key to ensuring it benefits society while managing its risks. With AI evolving so quickly across sectors, existing regulations often can’t keep up.

As AI advances into areas like generative models, autonomous decision-making, and deep learning, old rules may no longer be enough. The real challenge is creating adaptable regulations that can evolve with the technology—balancing innovation with responsible oversight.

If you’re looking to get a deeper understanding of AI and its impact, check out our AI Fundamentals skill track to build your knowledge and stay ahead of the curve.

I have worked in various industries and have worn multiple hats: software developer, machine learning researcher, data scientist, product manager. But at the core of it all, I am a programmer who loves to learn and share knowledge!