Amazon has released Nova, a new suite of state-of-the-art foundational models designed for affordable, large-scale use. Nova joins Amazon's LLM ecosystem through the Amazon Bedrock service and supports multiple modalities, including text, image, and video generation.

In this blog post, I’ll provide an overview of the new Amazon Nova models, explain how to access them via the Bedrock service, highlight the capabilities and benefits of each model, and demonstrate their use in action, including integration into a multi-agent application.

What Are Amazon Nova Models?

Amazon’s Nova models are highly anticipated foundational models accessible through the Amazon Bedrock service. They are designed for a variety of applications, including rapid inference at low cost, multimedia understanding, and creative content generation. Let’s explore each model.

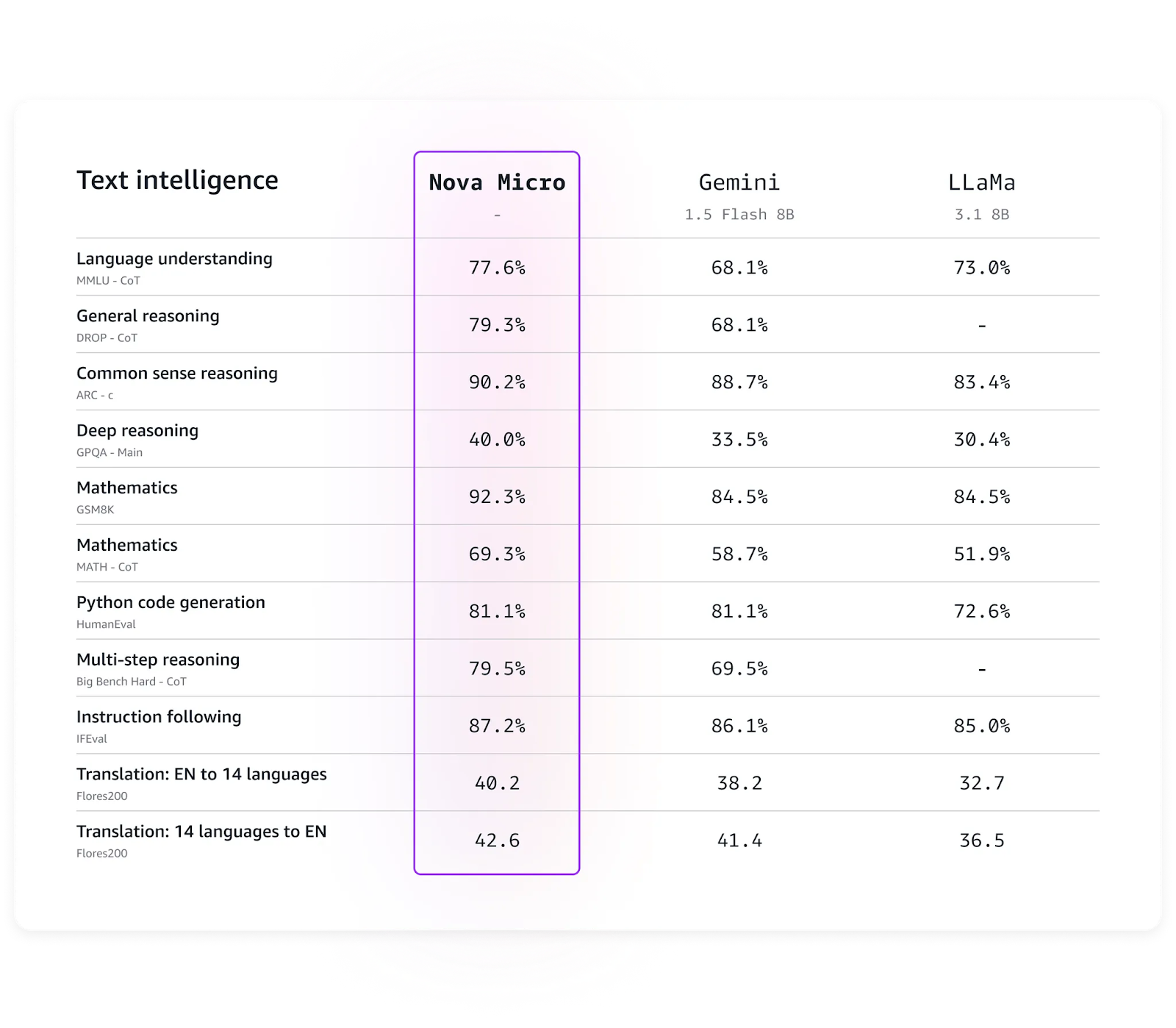

Amazon Nova Micro

Micro is the fastest model in the family and has a low computation cost. It is best suited to applications that need fast, text-only generation, with an inference speed of about 200 tokens per second.

Some of Micro's best applications are real-time analysis, interactive chatbots, and high-traffic text generation services.
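To put the 200 tokens per second figure in perspective, here is a back-of-the-envelope sketch. It assumes a steady decoding rate and ignores network latency and time to first token, so treat the numbers as rough lower bounds; the helper name is made up for illustration.

```python
def estimated_generation_seconds(output_tokens: int, tokens_per_second: float = 200.0) -> float:
    """Rough time needed to generate `output_tokens` at a steady decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# A short chat reply, a long answer, and a multi-page document
for tokens in (100, 500, 2000):
    print(f"{tokens} tokens -> {estimated_generation_seconds(tokens):.1f} s")
# 100 tokens -> 0.5 s
# 500 tokens -> 2.5 s
# 2000 tokens -> 10.0 s
```

At this rate, a typical chatbot reply of a few hundred tokens completes in a couple of seconds, which is why Micro fits interactive, high-traffic use cases.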

Nova Micro benchmarks. (Source: Amazon)

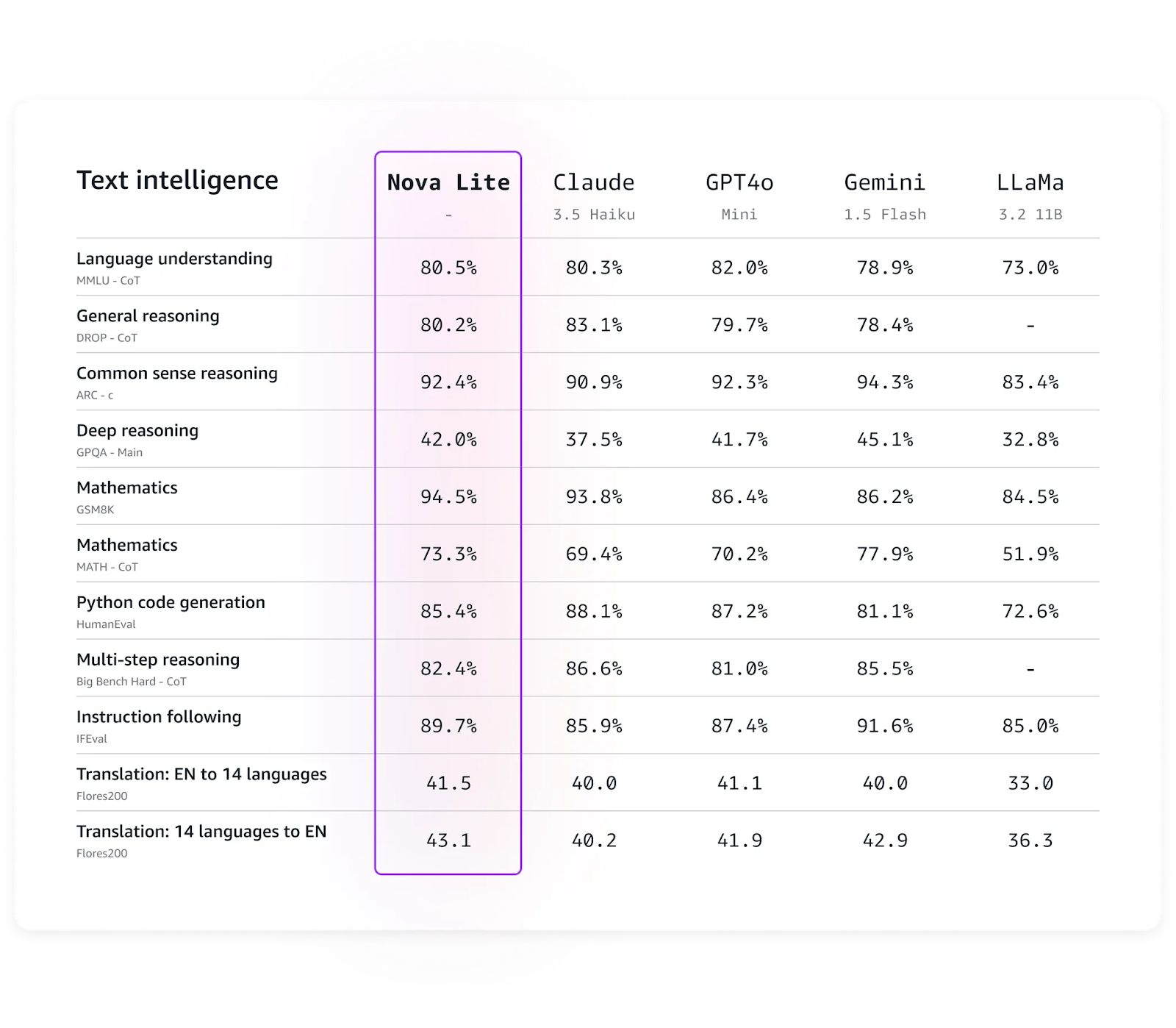

Amazon Nova Lite

Lite is the cost-efficient multimodal member of the Nova family. It strikes a good balance between speed and accuracy across multiple tasks, particularly reasoning and translation, when compared to counterparts such as GPT-4o or Llama.

It handles large volumes of requests efficiently while maintaining strong accuracy. Lite is a good choice when speed matters and the application needs a model that can handle multiple modalities.
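As a rough illustration of what multimodal input looks like in practice, the sketch below builds a message that pairs an image with a question, using the content-block layout of the Bedrock Converse API (covered later in this post). The helper names are hypothetical, and the default PNG format is an assumption you would adjust to match your file.

```python
from typing import Any


def build_image_question(image_bytes: bytes, question: str,
                         image_format: str = "png") -> list[dict[str, Any]]:
    """Build a one-turn message list: an image block followed by a text block."""
    return [
        {
            "role": "user",
            "content": [
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": question},
            ],
        }
    ]


def ask_about_image(path: str, question: str,
                    model_id: str = "us.amazon.nova-lite-v1:0") -> str:
    """Send the image and question to the model (requires AWS credentials and model access)."""
    import boto3  # imported lazily so the builder above works without AWS access

    with open(path, "rb") as f:
        messages = build_image_question(f.read(), question)
    client = boto3.client("bedrock-runtime")
    response = client.converse(modelId=model_id, messages=messages)
    return response["output"]["message"]["content"][0]["text"]
```

Calling ask_about_image("chart.png", "What does this chart show?") would return the model's text answer, assuming a file with that name exists locally.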

Nova Lite benchmarks. (Source: Amazon)

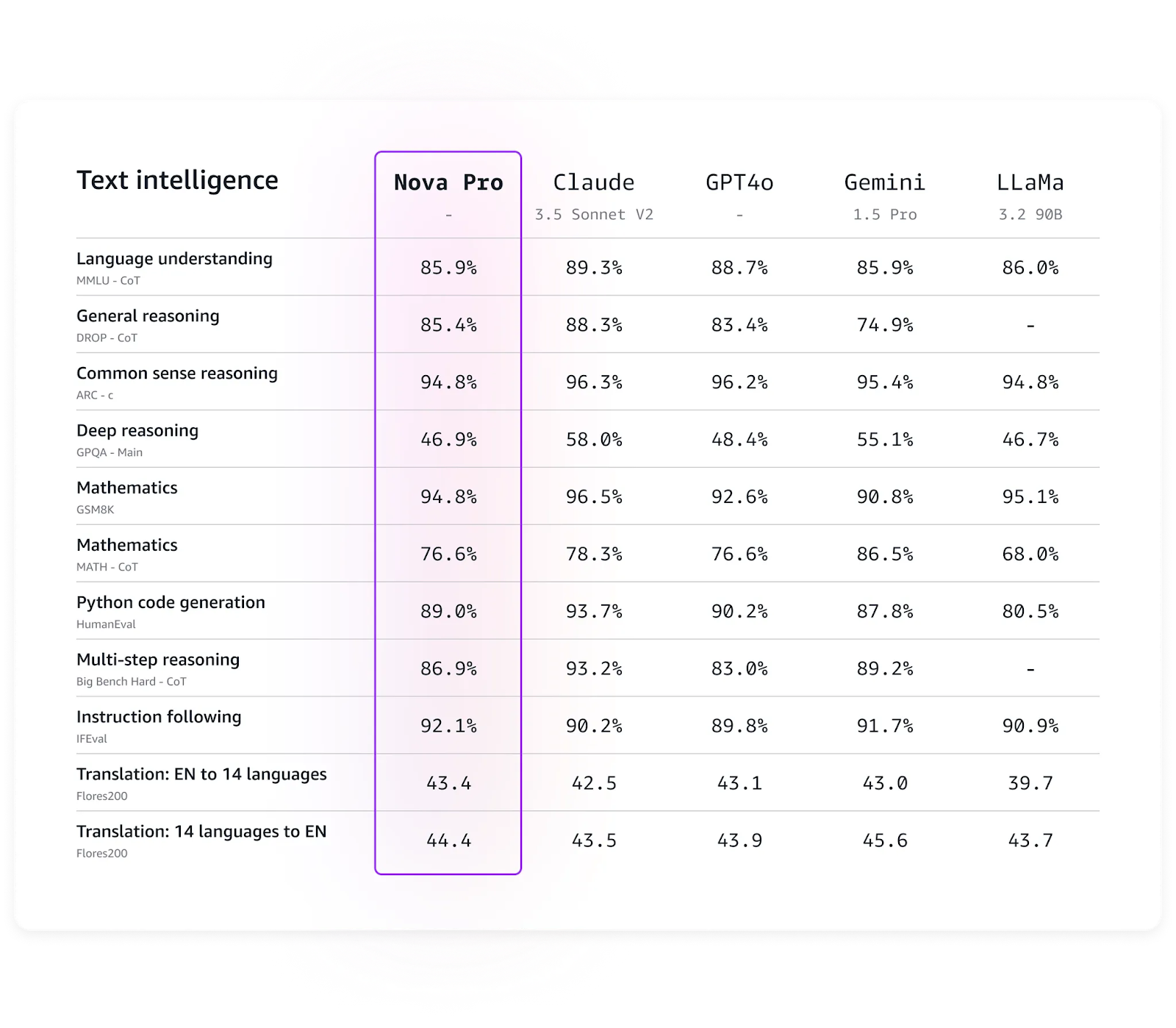

Amazon Nova Pro

Nova Pro, the most advanced model in the Nova family for text processing, offers impressive accuracy while keeping computational costs relatively low compared to models with similar capabilities.

According to Amazon, Nova Pro is well-suited for applications such as video summarization, question answering, mathematical reasoning, software development, and AI agents capable of executing multi-step workflows. Like the Micro and Lite models, Nova Pro currently supports fine-tuning.

Nova Pro benchmarks. (Source: Amazon)

Amazon Nova Premier

Premier, the most capable multimodal model of the family, has not been released yet. It is expected in early 2025 and should be a step up from the Pro model.

Amazon Nova Canvas

Canvas is Nova’s solution for image generation. It can generate high-quality images, gives you control over color scheme and style, and provides features such as inpainting, outpainting (extending images), style transfer, and background removal. The model seems well suited to creating marketing images, product mockups, and similar assets.

Amazon Nova Reel

Nova Reel is a video generation model designed for high-quality and easily customizable video outputs. Nova Reel enables users to create and control visual style, pacing, and camera motion in videos. Reel, just like the other Nova models, comes with built-in safety controls that enable aligned content generation.

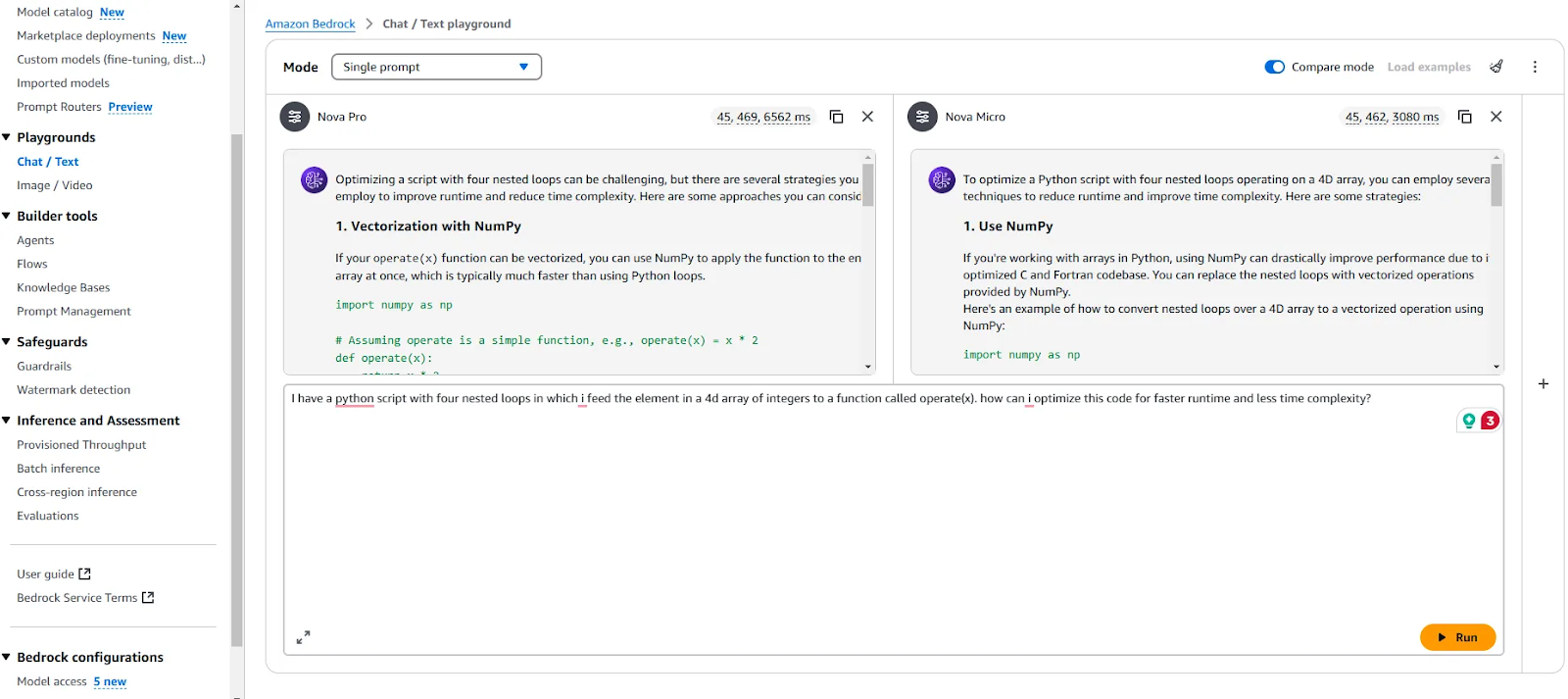

How to Access the Amazon Nova Models via the Amazon Bedrock Playground

You can use the Amazon Bedrock Playground to test and compare multiple models through a ready-to-use user interface.

I’ll assume you have the AWS CLI and Bedrock configured and ready to use. If not, you can refer to my tutorial on AWS Multi-Agent Orchestrator, where I detail the steps to set up an environment for using the models provided by Bedrock services. Additionally, Nils Durner’s blog post offers step-by-step screenshots to guide you through setting up Bedrock services.

Amazon Bedrock Playground

Comparing Nova Micro and Pro in the playground, I found no noticeable accuracy gap between the two models. Micro is more than twice as fast as Pro in text generation and still provides adequate answers for most regular use cases. Pro, on the other hand, tends to produce slightly more detailed and longer responses.
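If you want to put numbers on a speed comparison like this, one option is to read the token usage and latency that the Bedrock Converse API (covered in the next section) returns with each response. The sketch below is a rough approach, not a rigorous benchmark: latency includes more than pure decoding time, a single request is noisy, and the helper names are hypothetical.

```python
def tokens_per_second(response: dict) -> float:
    """Output tokens divided by reported latency, from a Converse-style response."""
    output_tokens = response["usage"]["outputTokens"]
    latency_ms = response["metrics"]["latencyMs"]
    if latency_ms <= 0:
        raise ValueError("latencyMs must be positive")
    return output_tokens / (latency_ms / 1000)


def compare_models(prompt: str, model_ids: list[str]) -> dict[str, float]:
    """Send the same prompt to each model (requires AWS credentials and model access)."""
    import boto3  # imported lazily so tokens_per_second works without AWS access

    client = boto3.client("bedrock-runtime")
    messages = [{"role": "user", "content": [{"text": prompt}]}]
    results: dict[str, float] = {}
    for model_id in model_ids:
        response = client.converse(modelId=model_id, messages=messages)
        results[model_id] = tokens_per_second(response)
    return results


# Made-up response values, only to show the arithmetic
sample = {"usage": {"outputTokens": 300}, "metrics": {"latencyMs": 1500}}
print(tokens_per_second(sample))  # 200.0
```

To compare real models, pass the same prompt and the model IDs you have access to into compare_models, and average over several runs before drawing conclusions.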

How to Access the Amazon Nova Models via the Amazon Bedrock API

To use the Nova models via the API and integrate them into your code, first ensure your AWS account, AWS CLI, and access to the models are properly set up (the documentation provides guidance for this).

Next, install the boto3 library, AWS’s SDK for Python, which enables you to work with their models.

    pip install boto3

You can interact with the models programmatically using a script like the one below:

    import json

    import boto3

    # Bedrock runtime client; region and credentials come from your AWS configuration
    client = boto3.client(service_name="bedrock-runtime")

    messages = [
        {"role": "user", "content": [{"text": "Write a short poem"}]},
    ]

    # Send the conversation to Nova Lite through the Converse API
    model_response = client.converse(
        modelId="us.amazon.nova-lite-v1:0",
        messages=messages
    )

    print("\n[Full Response]")
    print(json.dumps(model_response, indent=2))

    print("\n[Response Content Text]")
    print(model_response["output"]["message"]["content"][0]["text"])

Demo Project With Nova Micro and AWS Multi-Agent Orchestrator

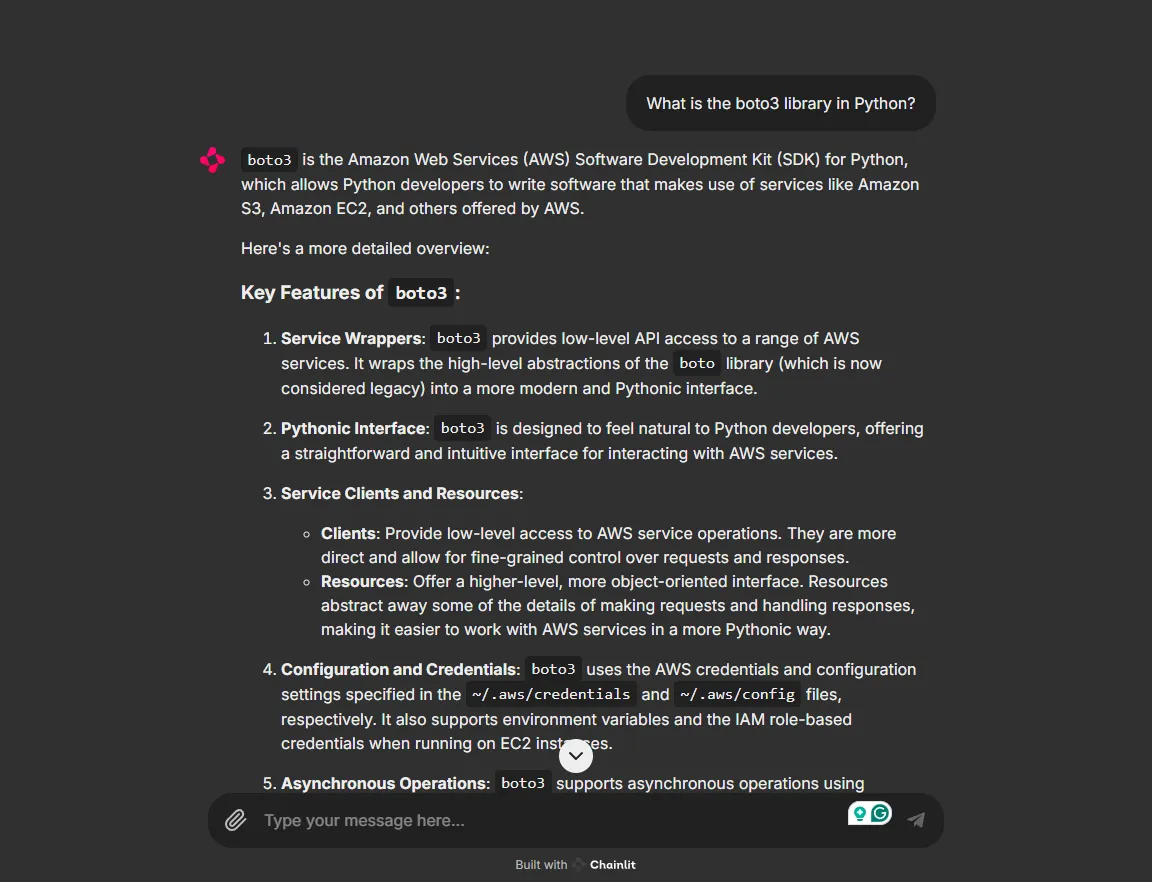

We now implement a demo project to test Nova Micro's agentic capabilities. We’ll use the AWS Multi-Agent Orchestrator framework to set up a simplified Python application consisting of two agents: Python Developer Agent and ML Expert Agent. If you want to set up the orchestrator, you can use this AWS Multi-Agent Orchestrator guide.

We will also use Chainlit, an open-source Python package, to implement a simple UI for the application. To begin with, install the necessary libraries:

    chainlit==1.2.0
    multi_agent_orchestrator==0.0.18

We begin by importing the necessary libraries:

    import asyncio
    import uuid

    import chainlit as cl
    from multi_agent_orchestrator.orchestrator import MultiAgentOrchestrator, OrchestratorConfig
    from multi_agent_orchestrator.classifiers import BedrockClassifier, BedrockClassifierOptions
    from multi_agent_orchestrator.agents import AgentResponse
    from multi_agent_orchestrator.agents import BedrockLLMAgent, BedrockLLMAgentOptions, AgentCallbacks
    from multi_agent_orchestrator.types import ConversationMessage

The framework uses a Classifier to choose the best agent for an incoming user request. We use “anthropic.claude-3-haiku-20240307-v1:0” as the model for our Classifier.

    class ChainlitAgentCallbacks(AgentCallbacks):
        def on_llm_new_token(self, token: str) -> None:
            # Stream each new token into the message currently shown in the UI
            asyncio.run(cl.user_session.get("current_msg").stream_token(token))

    # Initialize the orchestrator
    custom_bedrock_classifier = BedrockClassifier(BedrockClassifierOptions(
        model_id='anthropic.claude-3-haiku-20240307-v1:0',
        inference_config={
            'maxTokens': 500,
            'temperature': 0.7,
            'topP': 0.9
        }
    ))

    orchestrator = MultiAgentOrchestrator(
        options=OrchestratorConfig(
            LOG_AGENT_CHAT=True,
            LOG_CLASSIFIER_CHAT=True,
            LOG_CLASSIFIER_RAW_OUTPUT=True,
            LOG_CLASSIFIER_OUTPUT=True,
            LOG_EXECUTION_TIMES=True,
            MAX_RETRIES=3,
            USE_DEFAULT_AGENT_IF_NONE_IDENTIFIED=False,
            MAX_MESSAGE_PAIRS_PER_AGENT=10,
        ),
        classifier=custom_bedrock_classifier
    )

Next, we define two agents powered by Nova Micro, one acting as a Python developer expert and the other as an expert in machine learning.

    def create_python_dev():
        return BedrockLLMAgent(BedrockLLMAgentOptions(
            name="Python Developer Agent",
            streaming=True,
            description="Experienced Python developer specialized in writing, debugging, and evaluating only Python code.",
            model_id="amazon.nova-micro-v1:0",
            callbacks=ChainlitAgentCallbacks()
        ))

    def create_ml_expert():
        return BedrockLLMAgent(BedrockLLMAgentOptions(
            name="Machine Learning Expert",
            streaming=True,
            description="Expert in areas related to machine learning including deep learning, pytorch, tensorflow, scikit-learn, and large language models.",
            model_id="amazon.nova-micro-v1:0",
            callbacks=ChainlitAgentCallbacks()
        ))

    # Add agents to the orchestrator
    orchestrator.add_agent(create_python_dev())
    orchestrator.add_agent(create_ml_expert())

Finally, we set up the main body of the script for the Chainlit UI to handle the user requests and agent responses.

    @cl.on_chat_start
    async def start():
        # Give each chat session its own user and session identifiers
        cl.user_session.set("user_id", str(uuid.uuid4()))
        cl.user_session.set("session_id", str(uuid.uuid4()))
        cl.user_session.set("chat_history", [])

    @cl.on_message
    async def main(message: cl.Message):
        user_id = cl.user_session.get("user_id")
        session_id = cl.user_session.get("session_id")

        msg = cl.Message(content="")
        await msg.send()  # Send the message immediately to start streaming
        cl.user_session.set("current_msg", msg)

        response: AgentResponse = await orchestrator.route_request(message.content, user_id, session_id, {})

        # Handle non-streaming responses
        if isinstance(response, AgentResponse) and response.streaming is False:
            # Handle regular response
            if isinstance(response.output, str):
                await msg.stream_token(response.output)
            elif isinstance(response.output, ConversationMessage):
                await msg.stream_token(response.output.content[0].get('text'))
        await msg.update()

    if __name__ == "__main__":
        cl.run()

The result is a Chainlit UI that lets you chat with the Nova models interactively. Chainlit apps are started from the command line with chainlit run followed by the script's file name.

Running our app on Chainlit

Image and video generation models are also available via the API. You can refer to the documentation for scripts demonstrating how to use them.
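As a starting point, here is a hedged sketch of a text-to-image request for Nova Canvas through invoke_model. The model ID and the request and response field names (taskType, textToImageParams, imageGenerationConfig, images) reflect my understanding of the documented schema and should be checked against the current documentation before use; the helper names are hypothetical.

```python
import base64
import json


def build_text_to_image_body(prompt: str, width: int = 1024, height: int = 1024,
                             seed: int = 0) -> str:
    """Serialize a text-to-image request body (field names are assumptions, see above)."""
    body = {
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "width": width,
            "height": height,
            "seed": seed,
        },
    }
    return json.dumps(body)


def generate_image(prompt: str, out_path: str,
                   model_id: str = "amazon.nova-canvas-v1:0") -> None:
    """Generate one image and write it to disk (requires AWS credentials and model access)."""
    import boto3  # imported lazily so the builder above works without AWS access

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(modelId=model_id, body=build_text_to_image_body(prompt))
    payload = json.loads(response["body"].read())
    # The response is assumed to carry base64-encoded images in an "images" list
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(payload["images"][0]))
```

For example, generate_image("a red bicycle on a white background", "bicycle.png") would save the first generated image locally. Video generation with Nova Reel runs as an asynchronous job, so it follows a different pattern that is best taken from the documentation.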

Conclusion

Amazon Nova models represent a step up in the foundation model ecosystem, combining state-of-the-art accuracy, speed, cost-effectiveness, and multimodal capabilities. As the Amazon LLM suite grows with new products, it is becoming a powerful choice for building affordable and scalable applications on the back of AWS.

Whether you’re developing agentic AI applications, creating chatbots for customer service, or exploring as a developer, experimenting with the Nova models is a worthwhile experience. It’s also beneficial to deepen your knowledge of AWS, Bedrock, and Amazon’s LLM tools.

In this article, we covered the key aspects of these models, how to experiment with them, and how to build a basic agentic AI application using the Nova models.

Master's student of Artificial Intelligence and AI technical writer. I share insights on the latest AI technology, making ML research accessible, and simplifying complex AI topics necessary to keep you at the forefront.