Meta AI has just introduced Llama 3.3, a 70-billion parameter model that delivers performance comparable to the much larger Llama 3.1 405B, but with far lower computational demands.

We find this especially interesting because it makes high-quality AI more accessible to developers who don’t have access to expensive hardware.

Designed for text-based tasks like multilingual chat, coding assistance, and synthetic data generation, Llama 3.3 focuses solely on text inputs and outputs—it’s not built for handling images or audio.

In this blog, we’ll walk you through what makes this model stand out and help you decide if it’s a good fit for your projects.

What Is Llama 3.3?

Llama 3.3 is Meta AI’s latest large language model, and we see it as a step toward making advanced AI more accessible for a variety of projects. With 70 billion parameters, it offers performance on par with the much larger Llama 3.1 405B, but with significantly reduced hardware requirements. This means we can explore sophisticated AI applications without needing expensive, specialized setups.

The model is designed specifically for text inputs and outputs, so it doesn’t handle images, audio, or other media. We’ve found it to be particularly effective for tasks like multilingual chat, coding assistance, and synthetic data generation. With support for eight languages, including English, Spanish, Hindi, and German, it’s a strong option for projects requiring multilingual capabilities.

What stands out to us is its focus on efficiency. Llama 3.3 is designed to run on far more modest GPU setups than Llama 3.1 405B, which makes it practical for local deployments and easier to experiment with. It also incorporates alignment techniques (supervised fine-tuning and reinforcement learning with human feedback) intended to make its responses helpful and safe, which we know is essential for sensitive applications.

In this section, we’ve outlined the basics of what Llama 3.3 is, but we’ll go deeper in the next sections. We’ll cover how it works, how to start using it, and how it performs in benchmarks, so you can determine if it’s the right fit for your work.

How Does Llama 3.3 Work?

Here’s how Llama 3.3 operates, broken down in a way that we hope makes sense whether you’re familiar with large language models or just starting to explore them.

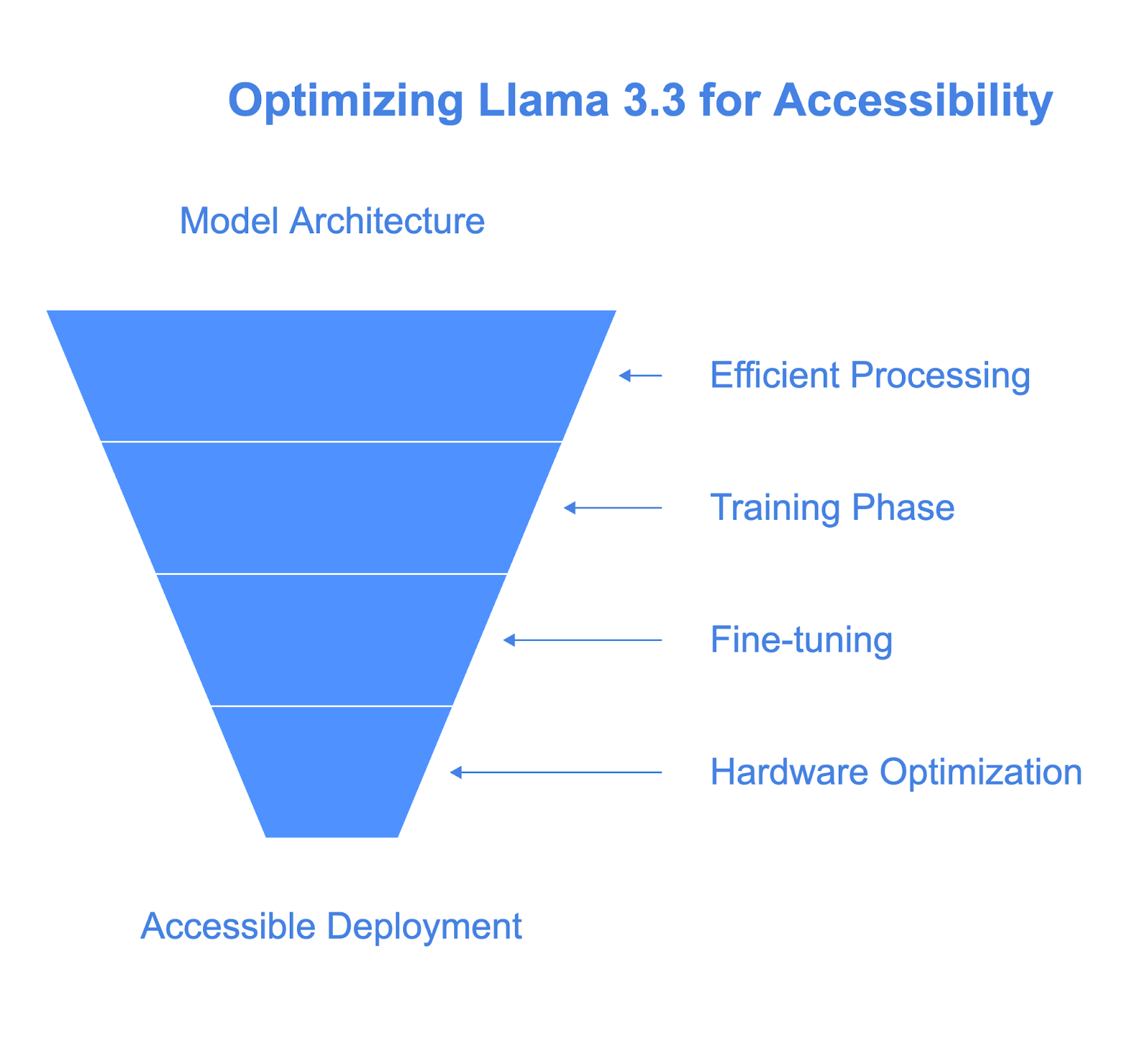

Architecture: Efficient and scalable

At the heart of Llama 3.3 is a transformer-based architecture with 70 billion parameters. If you’re not familiar, parameters are essentially the “knobs” the model adjusts during training to learn patterns and relationships in text. This is what allows Llama 3.3 to generate coherent, contextually relevant responses.

A key efficiency feature of Llama 3.3 is Grouped-Query Attention (GQA), in which groups of query heads share a single set of key and value heads. This shrinks the key-value cache that has to stay in memory during generation, so the model can process text faster and with fewer computational resources. Together with its much smaller parameter count, this is part of why Llama 3.3 is far less demanding on hardware than Llama 3.1 405B while achieving similar performance.
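To make the memory effect of GQA concrete, here is a rough back-of-the-envelope sketch of the key-value cache needed per generated token. The layer count, head counts, and head dimension are our assumptions for a 70B Llama-style model (they are not stated in this article), and `kv_cache_bytes_per_token` is a hypothetical helper, so treat the numbers as illustrative only.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of key/value cache stored for one token across all layers."""
    # Factor of 2: each KV head stores one key vector and one value vector per layer.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Assumed shape values for a 70B Llama-style model (not taken from this article).
NUM_LAYERS = 80
NUM_QUERY_HEADS = 64
NUM_KV_HEADS = 8      # with GQA, 8 query heads share each key/value head
HEAD_DIM = 128

# Without GQA, every query head would need its own key/value head.
mha = kv_cache_bytes_per_token(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM)
gqa = kv_cache_bytes_per_token(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM)

print(f"Full multi-head attention: {mha / 1024:.0f} KiB per token")
print(f"Grouped-query attention:   {gqa / 1024:.0f} KiB per token")
print(f"Reduction factor:          {mha // gqa}x")
```

Under these assumptions, sharing key/value heads cuts the cache by the ratio of query heads to key/value heads, which matters most for long prompts and long generations.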

Training and fine-tuning

Training a model like Llama 3.3 starts with exposing it to a vast dataset—15 trillion tokens of text from publicly available sources. This gives the model its broad understanding of language and knowledge.

But we know raw training isn’t enough to make a model useful in real-world scenarios. That’s where fine-tuning comes in:

- Supervised fine-tuning (SFT): Here, the model learns from carefully selected examples of good responses. Think of this as providing a “gold standard” for how it should behave.

- Reinforcement learning with human feedback (RLHF): This involves collecting feedback from humans on how the model performs and using that feedback to refine its behavior.

This dual approach ensures Llama 3.3 aligns with human expectations, both in terms of usefulness and safety.

Designed for accessible hardware

Llama 3.3 is designed to run locally on common developer workstations, making it accessible for developers without enterprise-level infrastructure. Unlike larger models like Llama 3.1 405B, it requires significantly less computational power while maintaining strong performance.

This efficiency is largely due to Grouped-Query Attention (GQA), which optimizes how the model processes text by reducing memory usage and speeding up inference.

The model also supports quantization techniques, such as 8-bit and 4-bit precision, through tools like bitsandbytes. These techniques lower the memory requirements considerably without sacrificing much performance.
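To see why quantization matters at this scale, a quick estimate of the memory needed just to hold the weights can help. This is a minimal sketch: it assumes a 16-bit baseline, counts only the 70 billion parameters, and ignores activations, the key-value cache, and any quantization overhead. `weight_memory_gb` is a hypothetical helper, not part of any library.

```python
PARAMS = 70_000_000_000  # 70 billion parameters

# Bits used to store each weight at different precisions.
BITS_PER_WEIGHT = {"16-bit": 16, "8-bit": 8, "4-bit": 4}


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

Real memory use will be higher than these figures, but the ratios show how halving the precision roughly halves the weight footprint.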

Additionally, it scales well across hardware setups, from single GPUs to distributed systems, offering flexibility for both local experiments and larger deployments.

In practice, this means we can experiment with or deploy Llama 3.3 on more affordable hardware setups, avoiding the high costs typically associated with advanced AI models. This makes it a practical choice for developers and teams looking to balance performance with accessibility.

Llama 3.3 Benchmarks

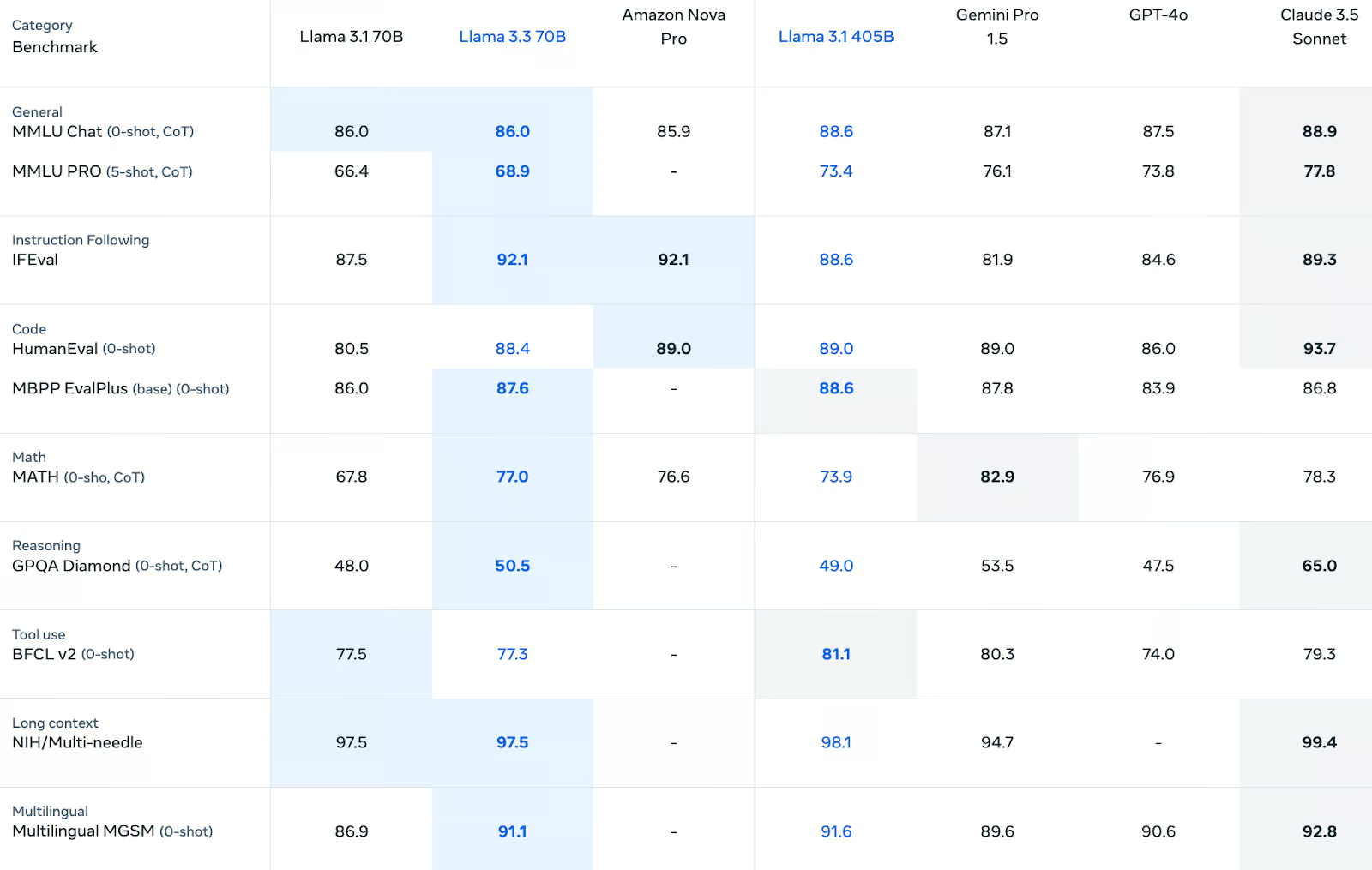

Llama 3.3 performs well across a variety of benchmarks, with standout results in instruction following, coding, and multilingual reasoning. While it doesn’t consistently outperform larger models like Llama 3.1 405B or Claude 3.5 Sonnet, it delivers reliable and competitive results in most categories. For developers looking for a balanced model that handles diverse tasks effectively, Llama 3.3 is a strong option.

General knowledge and reasoning

In general knowledge tasks, Llama 3.3 delivers solid results. It achieves an 86.0 score on MMLU Chat (0-shot, CoT), matching its predecessor Llama 3.1 70B and staying competitive with Amazon Nova Pro (85.9). However, it falls slightly short of the larger Llama 3.1 405B (88.6) and Claude 3.5 Sonnet (88.9).

On the more challenging MMLU PRO (5-shot, CoT) benchmark, Llama 3.3 improves over Llama 3.1 70B with a score of 68.9, but it remains behind both Llama 3.1 405B (73.4) and Claude 3.5 Sonnet (77.8).

For reasoning tasks, Llama 3.3 scores 50.5 on GPQA Diamond (0-shot, CoT), a slight improvement over Llama 3.1 70B (48.0). While this places it behind some competitors like Claude 3.5 Sonnet (65.0), it demonstrates some progress in structured reasoning.

Instruction following

Llama 3.3 excels at instruction-following tasks, scoring 92.1 on IFEval, which measures how well a model adheres to user instructions. This result puts it ahead of Llama 3.1 405B (88.6), GPT-4o (84.6), and Claude 3.5 Sonnet (89.3). Its performance in this category highlights its alignment capabilities, which are crucial for applications like chatbots and task-specific assistants.

Coding capabilities

Coding benchmarks are a strong point for Llama 3.3. On HumanEval (0-shot), it scores 88.4, slightly behind Llama 3.1 405B (89.0) and on par with Gemini Pro 1.5. Similarly, in MBPP EvalPlus (base), it achieves 87.6, a slight improvement over Llama 3.1 70B (86.0). These results confirm its effectiveness in generating code and solving programming-related tasks.

Math and symbolic reasoning

In symbolic reasoning, Llama 3.3 shows significant progress. It scores 77.0 on the MATH (0-shot, CoT) benchmark, outperforming Llama 3.1 70B (67.8) and Amazon Nova Pro (76.6). However, it trails behind Gemini Pro 1.5 (82.9). While not the leader in this category, it performs well enough for many structured reasoning tasks.

Multilingual capabilities

Llama 3.3 demonstrates significant strength in multilingual reasoning, scoring 91.1 on MGSM (0-shot). This is a substantial improvement over Llama 3.1 70B (86.9) and places it close to Claude 3.5 Sonnet (92.8). Its performance in this category makes it a great choice for multilingual applications like translation and global customer support.

Tool use and long-context performance

For tool use, Llama 3.3 achieves 77.3 on BFCL v2 (0-shot), comparable to Llama 3.1 70B (77.5) but falling short of Llama 3.1 405B (81.1). In handling long-context inputs, it scores 97.5 on NIH/Multi-Needle, matching Llama 3.1 70B and slightly behind Llama 3.1 405B (98.1). These results indicate strong capabilities for tool-assisted workflows and extended input scenarios.

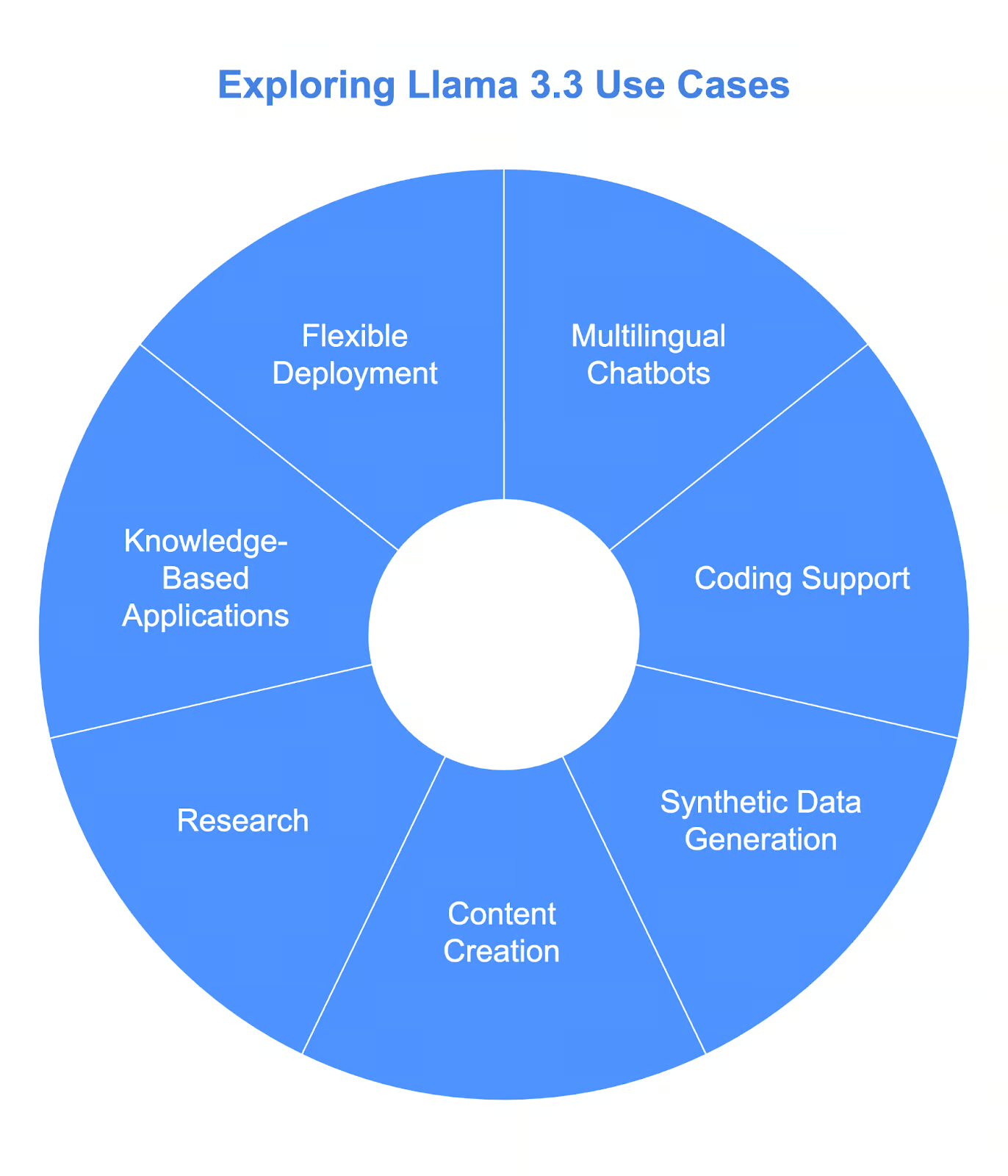

Llama 3.3 Use Cases

Llama 3.3 opens up a wide range of possibilities for developers and researchers, thanks to its balance of strong performance and hardware efficiency. We appreciate how it runs effectively on standard developer workstations, making it an approachable option for those without access to enterprise-level infrastructure. Here are some of the areas where it can be useful.

1. Multilingual chatbots and assistants

One of Llama 3.3’s strengths is its ability to handle multiple languages. With support for eight core languages—including English, Spanish, French, and Hindi—it’s ideal for building multilingual chatbots or virtual assistants.

What stands out to us is that you don’t need a data center to get started. Developers can prototype and deploy these systems on their own hardware, whether for customer support, educational tools, or other conversational applications.

For example, we could use Llama 3.3 to create a customer service chatbot that answers queries in multiple languages, all while running efficiently on a single GPU.
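As a rough illustration of the chatbot idea, the sketch below builds a chat request in the widely used role/content message format, pinning the reply language through the system prompt. The function name, the product name, and the prompt wording are all hypothetical. The list of languages is the one given in the FAQ of this article.

```python
# Languages the article lists as natively supported.
SUPPORTED_LANGUAGES = {
    "English", "French", "German", "Hindi",
    "Italian", "Portuguese", "Spanish", "Thai",
}


def build_support_messages(user_query, language, product_name="ExampleApp"):
    """Return a chat message list asking the model to answer in one language."""
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"Unsupported language: {language}")
    system_prompt = (
        f"You are a customer support assistant for {product_name}. "
        f"Always reply in {language}, keep answers short, "
        "and say so when you do not know the answer."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


messages = build_support_messages("¿Cómo restablezco mi contraseña?", "Spanish")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

A message list like this can then be passed to whichever runtime serves the model. Rejecting unsupported languages up front is a design choice here, since the article notes that other languages require fine-tuning and additional safeguards.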

2. Coding support and software development

With strong scores on coding benchmarks like HumanEval and MBPP EvalPlus, Llama 3.3 is a reliable assistant for generating code, debugging, or even completing partially written scripts.

What we find particularly valuable is how well it works on personal hardware. Instead of relying on expensive cloud systems, you can run Llama 3.3 locally to automate repetitive tasks, generate boilerplate code, or create unit tests. For teams or solo developers, this makes advanced AI coding support both practical and affordable.

3. Synthetic data generation

Another area where Llama 3.3 shines is in generating synthetic datasets. Whether you’re building a chatbot, training a classifier, or working on an NLP project, the ability to generate high-quality, labeled data can save significant time and effort.

We think this use case is especially valuable for smaller teams who need domain-specific data but don’t have the resources to collect it manually. Since Llama 3.3 runs on developer-grade hardware, you can generate data locally, reducing costs and simplifying the workflow.
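One way to approach synthetic data generation is to ask the model for one JSON object per line and then keep only the lines that parse cleanly. The sketch below shows that idea under our own assumptions: the prompt wording, the helper names, and the sample reply are all made up for illustration, and no model is actually called.

```python
import json


def build_generation_prompt(task, labels, n_examples):
    """Build a prompt asking for labeled examples as JSON lines."""
    label_list = ", ".join(labels)
    return (
        f"Generate {n_examples} short examples for this task: {task}.\n"
        f"Allowed labels: {label_list}.\n"
        'Return one JSON object per line with the keys "text" and "label".'
    )


def parse_examples(raw_output, labels):
    """Keep only well-formed records whose label is one of the allowed labels."""
    examples = []
    for line in raw_output.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip chatter or malformed lines
        if not isinstance(record, dict):
            continue
        text, label = record.get("text"), record.get("label")
        if isinstance(text, str) and isinstance(label, str) and label in labels:
            examples.append({"text": text, "label": label})
    return examples


labels = ["positive", "negative"]
print(build_generation_prompt("sentiment of product reviews", labels, 3))

# A made-up model reply, used only to exercise the parser.
sample_reply = """Here are your examples:
{"text": "Battery lasts all day.", "label": "positive"}
{"text": "Stopped working after a week.", "label": "negative"}
{"text": "It is a phone.", "label": "neutral"}
"""
print(parse_examples(sample_reply, labels))
```

Filtering on the label set is a cheap first quality check. In practice you would likely add deduplication and a manual review of a sample before training on the data.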

4. Multilingual content creation and localization

Llama 3.3’s multilingual capabilities also make it a strong candidate for content creation. We see its potential in producing localized marketing materials, translating technical documents, or creating multilingual blogs. Developers can fine-tune the model to adapt its tone or style, ensuring the output matches the intended audience.

For instance, you could use Llama 3.3 to draft a product description in multiple languages, improving the localization process without needing a dedicated team of translators.

5. Research and experimentation

For researchers, Llama 3.3 provides a solid platform for exploring language modeling, alignment, or fine-tuning techniques. What makes it particularly appealing is its efficiency—you don’t need access to massive cloud infrastructure to experiment with advanced AI.

This makes it a great tool for academic projects or industry research, especially when exploring areas like safety alignment or training smaller, specialized models through distillation.

6. Knowledge-based applications

Llama 3.3’s strong text-processing capabilities also lend themselves to applications like question answering, summarization, and report generation. These tasks often require handling large volumes of text efficiently, something Llama 3.3 can manage well even on personal hardware.

For example, we could use it to automatically summarize customer feedback or generate internal documentation, saving time while maintaining accuracy.

7. Flexible deployment for small teams

Finally, we think Llama 3.3 is a great option for startups, solo developers, or small teams who want advanced AI capabilities without the costs of enterprise-grade infrastructure. Its ability to run on local servers or even a single workstation makes it a practical solution for lightweight production systems.

How to Access Llama 3.3

Meta provides access to Llama 3.3 through their dedicated website: Llama Downloads. Here, you’ll find links to the official model card, licensing details, and additional documentation to help you get started.

For in-depth guidance, we recommend exploring the official resources:

- Model card and usage instructions on GitHub: Meta GitHub Repository.

- Hugging Face model page: Llama 3.3-70B on Hugging Face.

These platforms provide detailed documentation and community forums where you can ask questions or troubleshoot issues.
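Once you have access, one common way to try the model is through the Hugging Face `transformers` library. The sketch below is a starting point under several assumptions: the repository ID is our best understanding and should be checked against the model page, access typically requires accepting the license on Hugging Face, `torch` and `transformers` must be installed, and loading at 16-bit precision needs a large amount of GPU memory. The helper names `build_generator` and `ask` are hypothetical.

```python
# Assumed Hugging Face repository ID; verify it on the model page before use.
MODEL_ID = "meta-llama/Llama-3.3-70B-Instruct"


def build_generator(model_id=MODEL_ID):
    """Create a text-generation pipeline (downloads the weights on first use)."""
    # Imported lazily so the heavy dependencies are only needed when loading.
    import torch
    from transformers import pipeline

    return pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",  # spread layers across the available devices
    )


def ask(generator, question, max_new_tokens=256):
    """Send one user message and return the assistant's reply text."""
    messages = [{"role": "user", "content": question}]
    outputs = generator(messages, max_new_tokens=max_new_tokens)
    # With chat-style input, the pipeline returns the conversation with the
    # assistant's reply appended as the last message.
    return outputs[0]["generated_text"][-1]["content"]
```

A typical session would call `generator = build_generator()` once and then `ask(generator, "Explain grouped-query attention in two sentences.")` as often as needed.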

Llama 3.3 Pricing

From our perspective, Llama 3.3's hosted pricing, broken down below per 1 million tokens, makes it particularly attractive for developers working on a budget or scaling projects with high token demands. Its rates are among the lowest of the models we compare here, so high-quality AI is accessible without breaking the bank.

Input token costs

For processing 1 million input tokens, Llama 3.3 is priced at $0.1, which matches its predecessor, Llama 3.1 70B. Compared to other models, this is one of the lowest rates:

- Amazon Nova Pro charges $0.80, making it eight times more expensive for the same volume.

- GPT-4o and Claude 3.5 Sonnet cost $2.5 and $3.0, respectively, placing them significantly higher than Llama 3.3.

This low input cost makes Llama 3.3 particularly suitable for applications that require processing large amounts of text, such as chatbots or text analysis tools.

Output token costs

For 1 million output tokens, Llama 3.3 is priced at $0.4, again matching the cost of Llama 3.1 70B. Here’s how it compares:

- Amazon Nova Pro is priced at $1.8, over four times higher than Llama 3.3.

- GPT-4o charges $10.0, and Claude 3.5 Sonnet charges $15.0, making Llama 3.3 an economical option by a wide margin.

For applications that generate a high volume of text, such as synthetic data creation or content generation, Llama 3.3 offers significant cost savings.
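To compare these rates on a concrete workload, here is a small cost estimate using the per-million-token prices quoted above. The workload (10 million input tokens and 2 million output tokens) and the helper `estimate_cost` are our own illustrative assumptions, and prices change over time, so check current rates before budgeting.

```python
# (input price, output price) in US dollars per 1 million tokens, as quoted above.
PRICES_PER_MILLION = {
    "Llama 3.3 70B": (0.10, 0.40),
    "Amazon Nova Pro": (0.80, 1.80),
    "GPT-4o": (2.50, 10.00),
    "Claude 3.5 Sonnet": (3.00, 15.00),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated cost in US dollars, rounded to cents."""
    input_price, output_price = PRICES_PER_MILLION[model]
    cost = input_tokens / 1e6 * input_price + output_tokens / 1e6 * output_price
    return round(cost, 2)


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${estimate_cost(model, 10_000_000, 2_000_000):.2f}")
```

For this workload, the estimate comes to about $1.80 for Llama 3.3 versus $60.00 for Claude 3.5 Sonnet, which shows how quickly the gap grows with volume.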

Conclusion

We see Llama 3.3 as a compelling example of how large language models can balance capability with accessibility.

As a 70-billion parameter model, it delivers performance comparable to the much larger Llama 3.1 405B but with far lower computational requirements.

For developers without access to enterprise-level hardware, this model represents a practical way to integrate high-quality AI into their workflows.

FAQs

How is Llama 3.3 different from Llama 3.2?

Llama 3.3 improves on Llama 3.2 with better fine-tuning (SFT and RLHF), expanded safety features, multilingual support (8 languages), a longer context window (128k tokens), and stronger benchmark performance. It also adds tool-use capabilities, improved energy efficiency, and a robust responsible AI framework, making it more powerful and versatile.

How is Llama 3.3 different from Llama 3.1 405B?

Llama 3.3 delivers performance comparable to Llama 3.1 405B but is significantly more efficient, requiring far less computational power to run.

What languages does Llama 3.3 support?

Llama 3.3 natively supports English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai. Developers can fine-tune it for other languages, but additional safeguards are required.

What benchmarks does Llama 3.3 excel in?

Llama 3.3 posts its strongest results on IFEval (92.1, instruction following), HumanEval (88.4, coding), and MGSM (91.1, multilingual reasoning). It also scores 86.0 on MMLU, which is competitive but behind Llama 3.1 405B and Claude 3.5 Sonnet.

Can Llama 3.3 run on standard developer hardware?

Yes, Llama 3.3 is designed to run on common GPUs and developer-grade workstations, and 8-bit or 4-bit quantization lowers its memory requirements further. This makes it accessible for developers without enterprise-level infrastructure.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.

I'm a data science writer and editor with contributions to research articles in scientific journals. I'm especially interested in linear algebra, statistics, R, and the like. I also play a fair amount of chess!