Apple has long been a pioneer in technology, consistently setting the bar for innovation. Recently, Apple released a new open-source DCLM-7B large language model (LLM) for the community to use.

It’s encouraging to see a major player like Apple release their new model as open-source, as this move aligns with the growing trend of democratizing AI and making powerful tools accessible to a broader audience.

DCLM-7B: Key Features and Capabilities

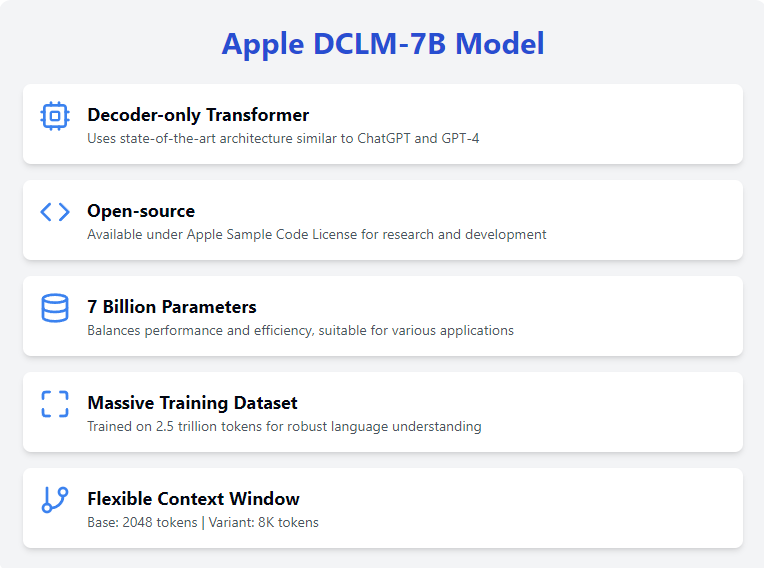

Apple's latest contribution, the DCLM-7B (DataComp for Language Models) base model, stands out as a noteworthy addition to the LLM field. Let’s explore its key features.

Decoder-only transformer

The DCLM-7B model utilizes a decoder-only Transformer architecture, which is a design where the model predicts one token at a time, and each generated token is fed back into the model to generate the next one.

This architecture is optimized for generating coherent and contextually relevant text, making it well suited to a wide range of natural language processing tasks. It is the same general architecture used by the GPT family of models that power ChatGPT, which demonstrates its effectiveness in generating human-like text.
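To make the "one token at a time" idea concrete, here is a minimal sketch of an autoregressive decoding loop. It is not DCLM-7B's actual implementation: `toy_model` is a hypothetical stand-in for a trained network, and the token IDs and `eos_id` are made up for illustration.

```python
from typing import Callable, List


def generate(
    next_token: Callable[[List[int]], int],
    prompt: List[int],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    """Greedy autoregressive decoding: append one predicted token per step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)  # the model sees every token produced so far
        tokens.append(token)        # the new token becomes part of the next input
        if token == eos_id:         # stop early at the end-of-sequence token
            break
    return tokens


def toy_model(tokens: List[int]) -> int:
    """Hypothetical stand-in for a real model: predicts (last token + 1) mod 10."""
    return (tokens[-1] + 1) % 10


result = generate(toy_model, prompt=[3, 4], max_new_tokens=5, eos_id=8)
print(result)
```

A real decoder-only model replaces `toy_model` with a forward pass that returns a probability distribution over the vocabulary, from which the next token is chosen or sampled.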

Open-source

The DCLM-7B model is available for research and development under the Apple Sample Code License. This open-source approach encourages widespread use and collaboration within the AI community.

By making this model accessible, Apple supports the democratization of AI, allowing researchers and developers from all over the world to experiment with and build upon the base model.

7 billion parameters

With 7 billion parameters, the DCLM-7B model strikes a balance between performance and computational efficiency.

This size makes it possible to run the model on machines with plenty of RAM or VRAM and on cloud platforms, making it versatile and accessible for various applications. The substantial number of parameters enables the model to capture complex language patterns, enhancing its capability to perform a wide range of tasks.

Trained on a massive dataset

The model has been trained on an extensive dataset of 2.5 trillion tokens, providing a solid foundation for tackling a wide range of language tasks. This allows the DCLM-7B model to understand and generate text with a high degree of accuracy and relevance. Additionally, this makes the model a good choice for task-specific fine-tuning, as it has a robust base understanding of the English language.

Context window

The base DCLM-7B model has a context window of 2048 tokens, which is relatively small by today’s standards. Apple has also released a variant with an 8K token context window.

This extended context window provides even greater flexibility for handling longer inputs, making the model suitable for applications that require the processing of extended texts or documents, like Retrieval Augmented Generation (RAG).
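When a document is longer than the context window, one common workaround is to split its tokens into overlapping chunks. The sketch below assumes the text is already tokenized into a list of IDs; the function name `split_into_windows` and the overlap of 128 tokens are illustrative choices, not something prescribed by the model.

```python
from typing import List


def split_into_windows(
    token_ids: List[int], window: int = 2048, overlap: int = 128
) -> List[List[int]]:
    """Split a token sequence into chunks of at most `window` tokens.

    Consecutive chunks share `overlap` tokens so that text cut at a chunk
    boundary still appears with some context in the next chunk.
    """
    if not 0 <= overlap < window:
        raise ValueError("overlap must be non-negative and smaller than window")
    stride = window - overlap
    chunks: List[List[int]] = []
    start = 0
    while start < len(token_ids):
        chunks.append(token_ids[start:start + window])
        if start + window >= len(token_ids):
            break  # this chunk already reaches the end of the sequence
        start += stride
    return chunks


ids = list(range(5000))  # stand-in for a tokenized document of 5000 tokens
chunks = split_into_windows(ids)
print([len(chunk) for chunk in chunks])
```

With the 8K variant, the same function can be called with `window=8192`, which reduces the number of chunks a long document is split into.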

Getting Started With DCLM-7B

Apple has made the DCLM-7B model compatible with Hugging Face’s transformers library, making it easy to access and use.

You can find the model’s webpage on Hugging Face, and check out the GitHub repository for more details. To use and access the model, we will need to install the transformers library:

pip install transformers

Additionally, we will need to install the open_lm framework:

pip install git+https://github.com/mlfoundations/open_lm.git

The DCLM-7B model with full precision is quite large, approximately 27.5GB, requiring a significant amount of RAM or VRAM to run. You will need a high-end computer or some kind of cloud environment. I will be using Google Colab’s premium subscription notebook with 50GB of RAM and an L4 GPU.
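The size quoted above follows from simple arithmetic: the number of parameters multiplied by the bytes used to store each one. The sketch below assumes a parameter count of roughly 6.9 billion (an assumed figure, chosen because it is consistent with the approximately 27.5GB quoted for full precision) and counts only the weights, not activations or other runtime overhead.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}

# Assumption: approximate parameter count for a "7B" model.
NUM_PARAMS = 6.9e9


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.1f} GB")
```

This is why loading a model in a 16-bit format roughly halves the memory needed compared with full precision.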

With all the necessary libraries installed, we are ready to start using the model!

DCLM-7B: Example Usage

For this walkthrough, I will run the basic example provided on the model's Hugging Face page. First, we import all the necessary libraries:

from open_lm.hf import *

from transformers import AutoTokenizer, AutoModelForCausalLM

Then, we need to download and initialize both the tokenizer and the model (notice that we are running the model with full precision floats on a CPU for this example):

tokenizer = AutoTokenizer.from_pretrained("apple/DCLM-Baseline-7B")

model = AutoModelForCausalLM.from_pretrained("apple/DCLM-Baseline-7B")

And lastly, we run the example prompt:

inputs = tokenizer(["Machine learning is"], return_tensors="pt")

gen_kwargs = {"max_new_tokens": 50, "top_p": 0.8, "temperature": 0.8, "do_sample": True, "repetition_penalty": 1.1}

output = model.generate(inputs['input_ids'], **gen_kwargs)

output = tokenizer.decode(output[0].tolist(), skip_special_tokens=True)

print(output)

I got the following output:

[Machine learning is not the solution to everything, it just enables you to solve a problem that otherwise would have been impossible. The biggest challenge for me as a manager of an AI team was to identify those problems where machine learning can really add value and be successful.]

Advanced Usage And Fine-Tuning

Fine-tuning the DCLM-7B model can help tailor it to specific tasks, enhancing its performance in your applications. Unfortunately, the DCLM-7B model is not supported by Hugging Face’s peft library, so we need to use the transformers library to fine-tune it.

Without tools like LoRA, fine-tuning a model this large requires immense resources: every one of its 7 billion weights is updated, so the memory and compute needed per training step are comparable to those of training the model from scratch. Therefore, I will only outline the fine-tuning process here without actually running it.
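A rough back-of-the-envelope estimate shows why full fine-tuning is so demanding. The sketch below assumes full-precision (32-bit) training with an Adam-style optimizer, which keeps a gradient and two moment estimates for every weight. It ignores activations and framework overhead, so real usage would be higher still.

```python
from typing import Dict


def full_finetune_memory_gb(num_params: float) -> Dict[str, float]:
    """Approximate training memory in GB, assuming float32 and an Adam-style optimizer."""
    bytes_per_value = 4  # float32
    weights = num_params * bytes_per_value
    gradients = num_params * bytes_per_value             # one gradient per weight
    optimizer_states = 2 * num_params * bytes_per_value  # first and second moments
    total = weights + gradients + optimizer_states
    return {
        "weights": weights / 1e9,
        "gradients": gradients / 1e9,
        "optimizer_states": optimizer_states / 1e9,
        "total": total / 1e9,
    }


estimate = full_finetune_memory_gb(7e9)
for name, gigabytes in estimate.items():
    print(f"{name}: {gigabytes:.0f} GB")
```

Under these assumptions, the total is about four times the size of the weights alone, well beyond a single consumer GPU. Parameter-efficient methods such as LoRA avoid most of this cost by training only a small number of additional weights.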

Preparing the dataset

To download and use an openly available dataset, we will be using Hugging Face’s datasets library. We install it with the following command:

pip install datasets

Once installed, we import and use the load_dataset function. For this example, I will be using the wikitext dataset:

from datasets import load_dataset

dataset = load_dataset('wikitext', 'wikitext-2-raw-v1')

Now, we need to tokenize the dataset:

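# Padding requires a pad token; fall back to the end-of-sequence token if none is defined

if tokenizer.pad_token is None:

    tokenizer.pad_token = tokenizer.eos_token

def tokenize_function(examples):

    # Pad or truncate every example to exactly 512 tokens

    return tokenizer(examples['text'], padding='max_length', truncation=True, max_length=512)

tokenized_datasets = dataset.map(tokenize_function, batched=True)

Now, we are ready to start fine-tuning!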
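To illustrate what `padding='max_length'` and `truncation=True` do to each example, here is a simplified sketch in plain Python. It is not the tokenizer's actual implementation: the helper `pad_or_truncate`, the pad ID of 0, and padding on the right are all assumptions made for illustration.

```python
from typing import Dict, List


def pad_or_truncate(
    token_ids: List[int], max_length: int, pad_id: int
) -> Dict[str, List[int]]:
    """Force a token sequence to exactly `max_length` tokens.

    Longer sequences are cut off; shorter ones are padded on the right.
    The attention mask marks real tokens with 1 and padding with 0.
    """
    ids = token_ids[:max_length]      # truncation
    n_pad = max_length - len(ids)     # number of padding tokens needed
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "attention_mask": [1] * len(ids) + [0] * n_pad,
    }


short = pad_or_truncate([5, 6, 7], max_length=5, pad_id=0)
long = pad_or_truncate(list(range(10)), max_length=5, pad_id=0)
print(short)
print(long)
```

Fixing every example to the same length lets them be stacked into rectangular batches, at the cost of wasted computation on padding tokens for short examples.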

Fine-tuning

For fine-tuning, we need to import and initialize TrainingArguments and Trainer objects and then run the train() function.

from transformers import DataCollatorForLanguageModeling, TrainingArguments, Trainer

# Builds batches for causal language modeling (mlm=False): labels are copied from the input IDs

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

training_args = TrainingArguments(

    report_to="none", # Disable logging to external experiment trackers

    output_dir="./results", # Where checkpoints are saved

    evaluation_strategy="epoch", # Evaluate after every epoch (recent transformers releases name this eval_strategy)

    learning_rate=2e-5, # Controls how much to change the model weights during training

    per_device_train_batch_size=2, # Number of samples per batch per device during training

    per_device_eval_batch_size=2, # Number of samples per batch per device during evaluation

    num_train_epochs=3, # Number of times the entire training dataset will be passed through the model

    weight_decay=0.01, # Regularization technique to prevent overfitting

)

trainer = Trainer(

    model=model,

    args=training_args,

    train_dataset=tokenized_datasets['train'],

    eval_dataset=tokenized_datasets['test'],

    data_collator=data_collator,

    tokenizer=tokenizer,

)

trainer.train()

Conclusion

Overall, Apple's DCLM-7B is a significant addition to the open-source language model landscape, offering researchers and developers a powerful tool for various NLP tasks.

As a decoder-only Transformer model, it is optimized for text generation, providing coherent and contextually relevant outputs. The model's availability under the Apple Sample Code License further encourages collaboration and innovation in the AI community.