
Businesses face challenges when transferring data as they shift workloads to the cloud or manage backups and disaster recovery. Outdated techniques—like manual uploads or custom scripts—lack automation and reliability, resulting in higher costs and operational overhead.

AWS DataSync makes this easier by offering a secure, automated way to transfer datasets between on-premises storage systems and AWS storage services, between AWS services, or across AWS Regions.

In this tutorial, I will guide you through:

- Setting up AWS DataSync – Creating and configuring agents for data transfer.

- Configuring data transfer tasks – Defining source and destination endpoints, filtering data, and scheduling transfers.

- Using DataSync for key use cases – Including cloud migration, backup, and disaster recovery.

What is AWS DataSync?

AWS DataSync is an online data transfer service designed to move large amounts of data between on-premises storage systems and AWS storage services. It streamlines data transfer by handling tasks like data validation and encryption, and it provides scheduling options. This makes it a dependable choice for migrations, data backups, and continuous data replication.

Compared with methods that rely on intricate scripts or manual steps, DataSync speeds up data transfer by automating quick, secure data movement between a variety of sources and destinations. It supports transfers between:

- On-premises storage (NFS, SMB, object storage) and AWS.

- Amazon S3, Amazon EFS, Amazon FSx, and Amazon S3 Glacier.

- AWS services across different regions and accounts.

> If you're new to AWS or want a refresher on key cloud tools, the AWS Cloud Technology and Services course offers a solid overview of core services like EC2, S3, and IAM.

Features of AWS DataSync

- High-speed data transfer: Parallel, optimized data streams let DataSync move data up to 10 times faster than open-source tools, according to AWS.

- Automated data validation: Verifies the accuracy of transferred data through checksum calculations to maintain data integrity.

- Seamless integration with AWS services: Natively supports Amazon S3, Amazon EFS, Amazon FSx for Windows File Server, and Amazon FSx for Lustre as both source and destination.

- Incremental and scheduled transfers: Transfers only changed data (differential sync) to minimize bandwidth usage and costs, and supports scheduling for periodic data synchronization.

- Security and encryption: Transfers are protected with TLS encryption in transit and AES-256 encryption for data at rest, and AWS Identity and Access Management (IAM) provides access control.

- Multi-Region and cross-account transfers: Copies data between AWS Regions and accounts, which supports disaster recovery planning and data sharing.

Now that the context is set, let’s get to the practical section of this tutorial!


Setting Up AWS DataSync

Before transferring data, you need to configure AWS DataSync properly. This section covers the prerequisites and setup steps, including agent installation, IAM roles, and storage configuration.

Prerequisites for AWS DataSync

We will use AWS CloudFormation to automate provisioning so we can focus on data migration. We will create the following resources:

- An AWS VPC with public subnets for network connectivity.

- An NFS server on an Amazon EC2 instance to simulate an on-premises storage system.

- AWS DataSync agents on EC2 instances to facilitate data movement.

- IAM Roles and policies to grant required permissions for DataSync operations.

Log in to the AWS Console

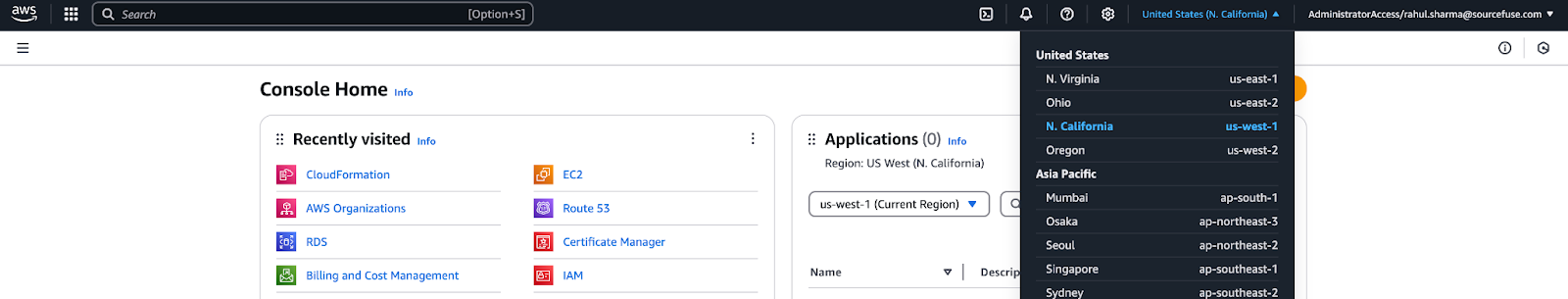

- Log in to the AWS Management Console using your credentials.

- Click on the drop-down menu at the top right corner of the screen, and select the “N. California” region for this tutorial.

Figure 1 - Selecting AWS Region in the AWS Management Console

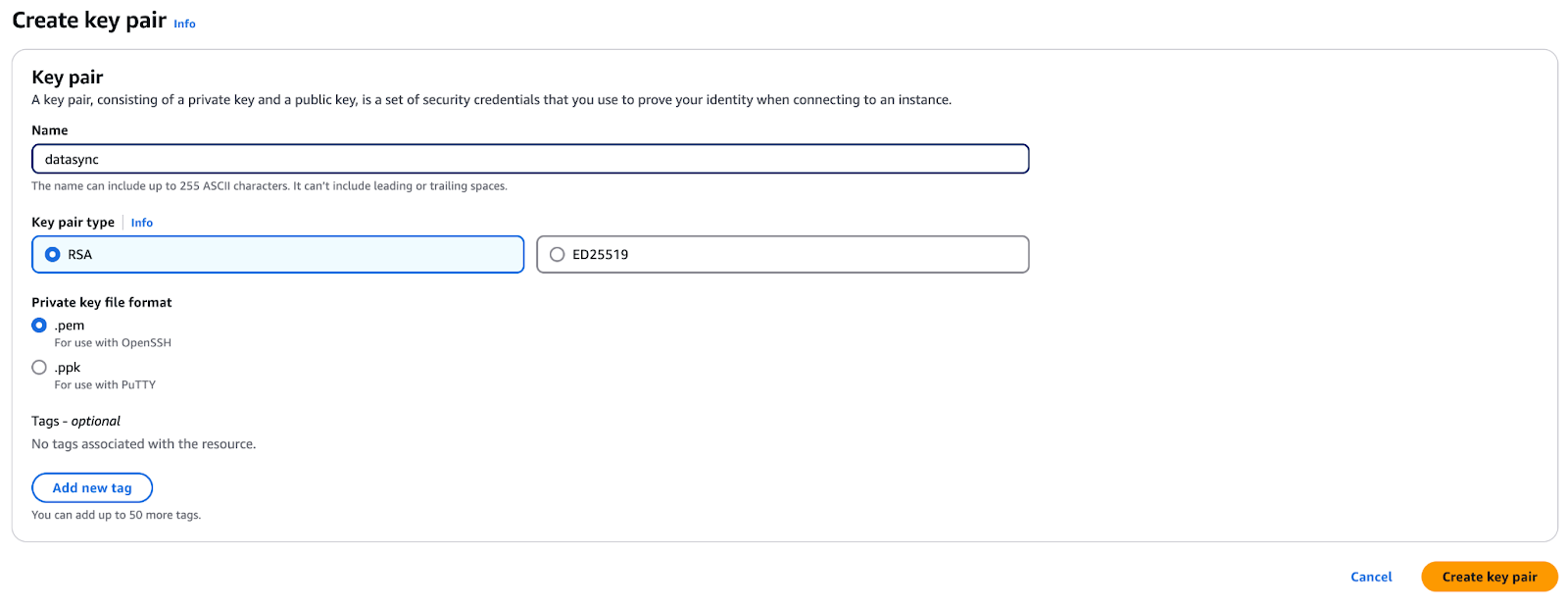

- Go to the EC2 Console and navigate to the Key Pair section. Ensure you’re in the same AWS region as the previous step.

- Click Create Key Pair and name it “datasync”.

Figure 2 - Creating a key pair for secure access in AWS EC2

- Click Create, and your browser will download a datasync.pem file.

- Save this file securely; you’ll need it later in the tutorial.
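If you prefer the terminal, the key pair can also be created with the AWS CLI. The following is a minimal sketch, assuming AWS CLI v2 is installed and configured; the DRY_RUN switch is a hypothetical convenience that only prints the commands unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: create the "datasync" key pair from the CLI instead of the console.
# Assumes AWS CLI v2 with credentials configured. Only prints the commands
# unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"   # N. California
KEY_NAME="${KEY_NAME:-datasync}"

create_key_pair() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ aws ec2 create-key-pair --region $REGION --key-name $KEY_NAME --query KeyMaterial --output text > $KEY_NAME.pem"
    echo "+ chmod 400 $KEY_NAME.pem"
    return 0
  fi
  # Save only the private key material to the .pem file
  aws ec2 create-key-pair --region "$REGION" --key-name "$KEY_NAME" \
    --query KeyMaterial --output text > "$KEY_NAME.pem" || return 1
  # SSH refuses private keys that other users can read
  chmod 400 "$KEY_NAME.pem"
}

create_key_pair
```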

Configure the environment

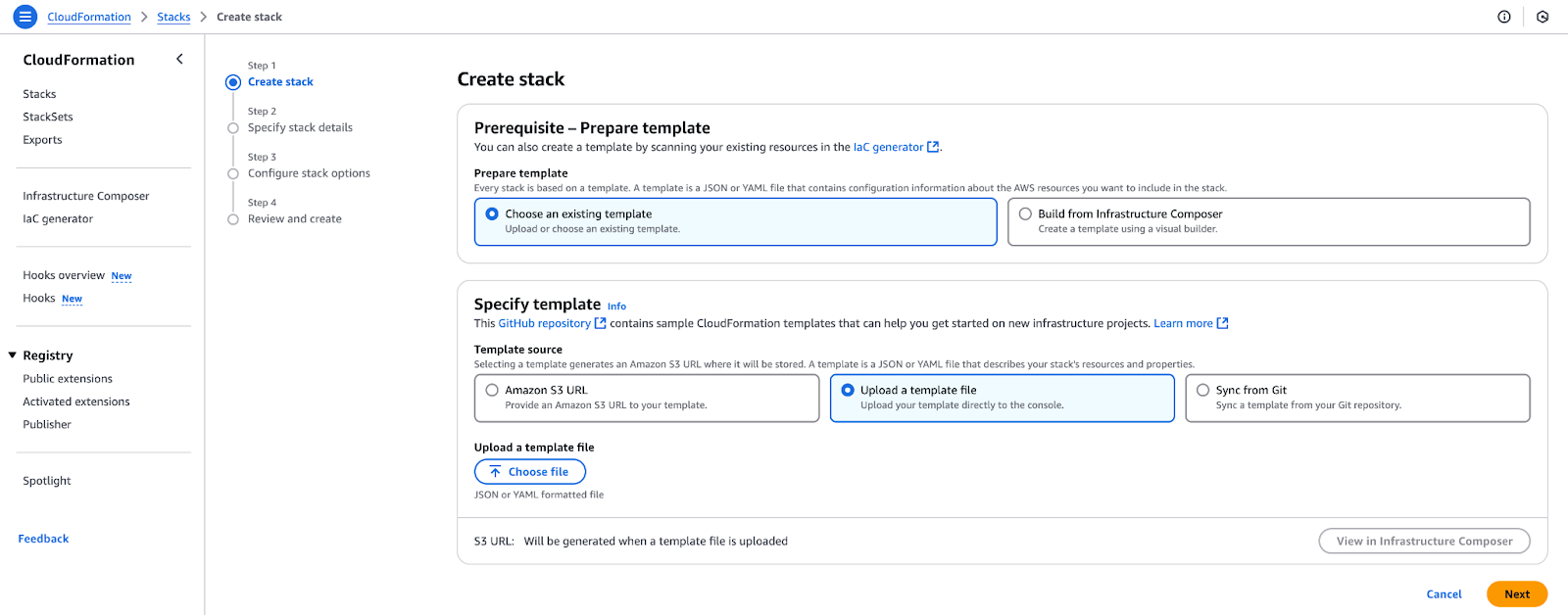

In this step, you'll use a CloudFormation template to set up the AWS infrastructure for DataSync described in the prerequisites above.

- Open the AWS CloudFormation console and click Create stack.

- Select Template is ready, then choose Upload a template file.

Figure 3 - Uploading a CloudFormation template to create a new stack.

- Download the template from GitHub Gist, click Choose file, upload datasync-onprem.yaml, and click Next.

- Under the Parameters section, select the EC2 key pair you created earlier to allow SSH login to instances created by this stack. Leave the AMI ID values unchanged and click Next.

- On the Stack Options page, keep the default settings and click Next.

- Review the configuration and click Create stack.

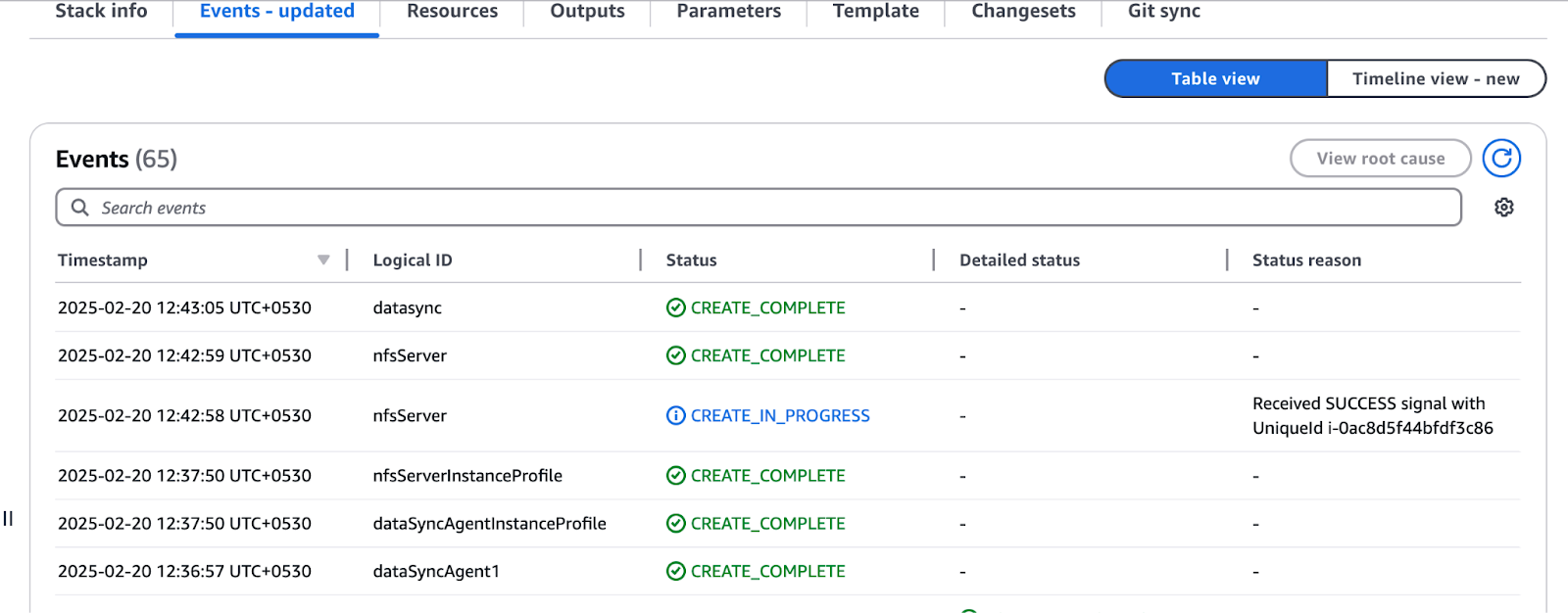

- You'll be redirected to the CloudFormation console, where the status will show “CREATE_IN_PROGRESS”. Wait until it changes to “CREATE_COMPLETE”.

Figure 4 - Reviewing resource creation status in AWS CloudFormation

- Once complete, navigate to the Outputs section and note the values—you'll need them for the rest of the tutorial.

- You need to deploy another stack by following the same steps as outlined earlier. This template does not require additional parameters. Download the template from GitHub Gist and proceed with the deployment.
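Both stacks can also be deployed from the command line. This is a sketch under stated assumptions: the stack names, the second template's file name (datasync-incloud.yaml), and the KeyName parameter name are hypothetical, so match them to the actual templates before setting DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: deploy both tutorial stacks with the AWS CLI.
# Hypothetical: stack names, datasync-incloud.yaml, and the KeyName parameter.
# Only prints the commands unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

deploy_stack() {
  local name="$1" template="$2"
  shift 2
  # CAPABILITY_NAMED_IAM acknowledges that the template may create IAM roles
  run aws cloudformation create-stack --region "$REGION" \
    --stack-name "$name" --template-body "file://$template" \
    --capabilities CAPABILITY_NAMED_IAM "$@" || return 1
  # Blocks until CREATE_COMPLETE; exits non-zero if the stack fails or rolls back
  run aws cloudformation wait stack-create-complete --region "$REGION" --stack-name "$name" || return 1
  # Print the Outputs (agent IPs, bucket, role ARNs) used later in the tutorial
  run aws cloudformation describe-stacks --region "$REGION" --stack-name "$name" \
    --query 'Stacks[0].Outputs' --output table
}

deploy_stack datasync-onprem datasync-onprem.yaml \
  --parameters ParameterKey=KeyName,ParameterValue=datasync
deploy_stack datasync-incloud datasync-incloud.yaml
```

Because the wait command fails when a stack rolls back, a script built this way stops early instead of continuing with missing outputs.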

Setting up the DataSync agent

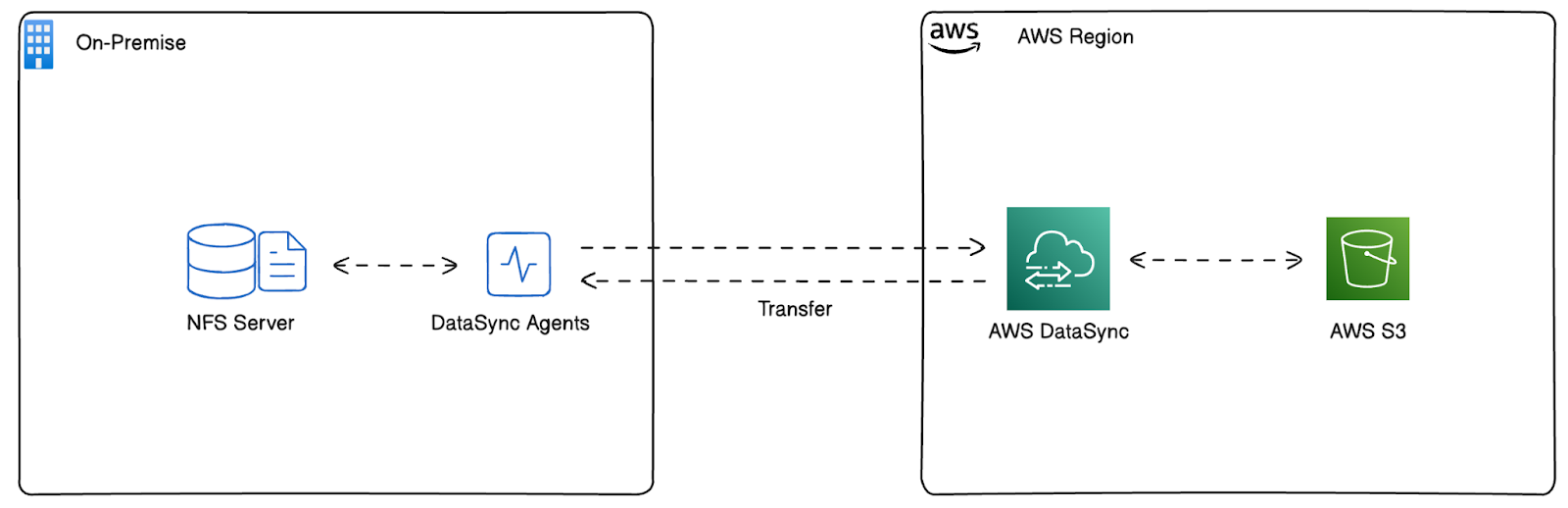

In this tutorial, we replicate an on-premises environment in AWS to emulate real-world data transfer scenarios using AWS DataSync.

Instead of using an actual on-premises infrastructure, we have launched an NFS server on an Amazon EC2 instance, which will be the source storage system. This allows us to test and configure AWS DataSync as if we were moving data from an on-premises location.

We have launched the AWS DataSync Agents on EC2 instances within the same environment to enable data movement. These agents are responsible for linking the NFS server to AWS DataSync, enabling data transfers to cloud storage services like Amazon S3.

This architecture allows us to mimic, configure, and validate AWS DataSync tasks to achieve a seamless workflow before implementing them in a real on-premises environment. In this tutorial, we will use this environment to register the agent, create the DataSync tasks, and move data around efficiently.

Figure 5 - Architecture deployed by AWS CloudFormation

Configuring the NFS server for AWS DataSync

Before setting up AWS DataSync, it's essential to understand the data you’ll be transferring and how it is organized. In this section, we will configure an NFS server deployed in our AWS environment to act as an on-premises storage system. This setup will allow us to simulate data migration to AWS services.

First, log in to the NFS server:

- Open the AWS Management Console and navigate to the “N. California” region.

- Select EC2 from the AWS services list.

- Locate the NFS server instance in the EC2 instance list.

- Click Connect and follow the instructions to connect using Session Manager or SSH.

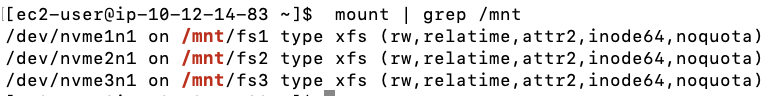

Then, browse the file systems. The NFS server contains three 200 GiB EBS volumes, each formatted with the XFS file system and pre-populated with sample datasets.

- Run the following command to verify the mounted file systems:

```shell
mount | grep /mnt
```

Figure 6 - Expected output of mount command

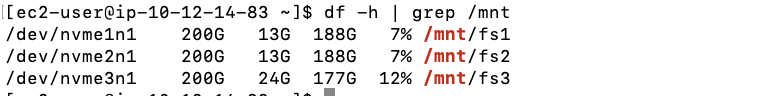

- Next, check the amount of data present in each file system:

```shell
df -h | grep /mnt
```

Figure 7 - Expected output of df command

As you can see, fs1 and fs2 contain 12 GiB of data, and fs3 contains 22 GiB of data.

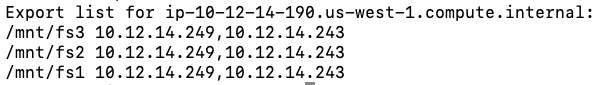

Now, let’s configure the NFS exports. To allow AWS DataSync agents to access the NFS server, configure the /etc/exports file:

- Open the /etc/exports file as root using a text editor:

```shell
sudo nano /etc/exports
```

- Add the following lines to define read-only (ro) exports for the DataSync agents:

```text
/mnt/fs1 10.12.14.243(ro,no_root_squash) 10.12.14.249(ro,no_root_squash)
/mnt/fs2 10.12.14.243(ro,no_root_squash) 10.12.14.249(ro,no_root_squash)
/mnt/fs3 10.12.14.243(ro,no_root_squash) 10.12.14.249(ro,no_root_squash)
```

Replace 10.12.14.243 and 10.12.14.249 with the private IPs of your DataSync agent EC2 instances from the CloudFormation outputs.

- Apply the new export settings by restarting the NFS service:

```shell
sudo systemctl restart nfs
```

- Verify the NFS exports:

```shell
showmount -e
```

Figure 8 - Expected output of showmount command
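Typing the export lines by hand is error-prone once you have several agents. A small helper can generate them instead; this is a sketch that assumes the three /mnt/fs1 to /mnt/fs3 mount points shown above, and make_exports is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: generate the /etc/exports entries for the three tutorial file systems.
# Pass the private IPs of your DataSync agents (from the CloudFormation outputs).
make_exports() {
  local fs ip line
  for fs in /mnt/fs1 /mnt/fs2 /mnt/fs3; do
    line="$fs"
    for ip in "$@"; do
      # Read-only export; no_root_squash lets the agent read root-owned files
      line="$line ${ip}(ro,no_root_squash)"
    done
    printf '%s\n' "$line"
  done
}
```

For example, make_exports 10.12.14.243 10.12.14.249 | sudo tee /etc/exports writes the three entries (overwriting the file), and sudo exportfs -ra reloads the export table without restarting the NFS service.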

Configuring CloudWatch and activating AWS DataSync agents

With the NFS server configured, the next step is to enable AWS CloudWatch logging for DataSync and activate the DataSync agents in the “N. California” region. This ensures all file transfers are logged, providing visibility into errors or failures.

Before AWS DataSync can send logs to CloudWatch, we need to create a resource policy that grants DataSync the required permissions.

- Copy the following JSON policy and save it as datasync-policy.json on your local machine:

```json
{
  "Statement": [
    {
      "Sid": "DataSyncLogsToCloudWatchLogs",
      "Effect": "Allow",
      "Action": [
        "logs:PutLogEvents",
        "logs:CreateLogStream"
      ],
      "Principal": {
        "Service": "datasync.amazonaws.com"
      },
      "Resource": "*"
    }
  ],
  "Version": "2012-10-17"
}
```

- Then, run the following command in your terminal:

```shell
aws logs put-resource-policy --region us-west-1 --policy-name trustDataSync --policy-document file://datasync-policy.json
```

The above command allows AWS DataSync to write logs to CloudWatch, which will help monitor and debug transfer issues.

Now, let’s activate the DataSync agents. The DataSync agent EC2 instances were created in the “N. California” region, and they must be activated in that same region before use.

Note: If you need to install the DataSync agent in VMware or another on-premises environment, refer to the official AWS guide. However, since we are simulating an on-premises setup using EC2 instances, the DataSync agents in this tutorial are deployed following AWS best practices for cloud-based installations.

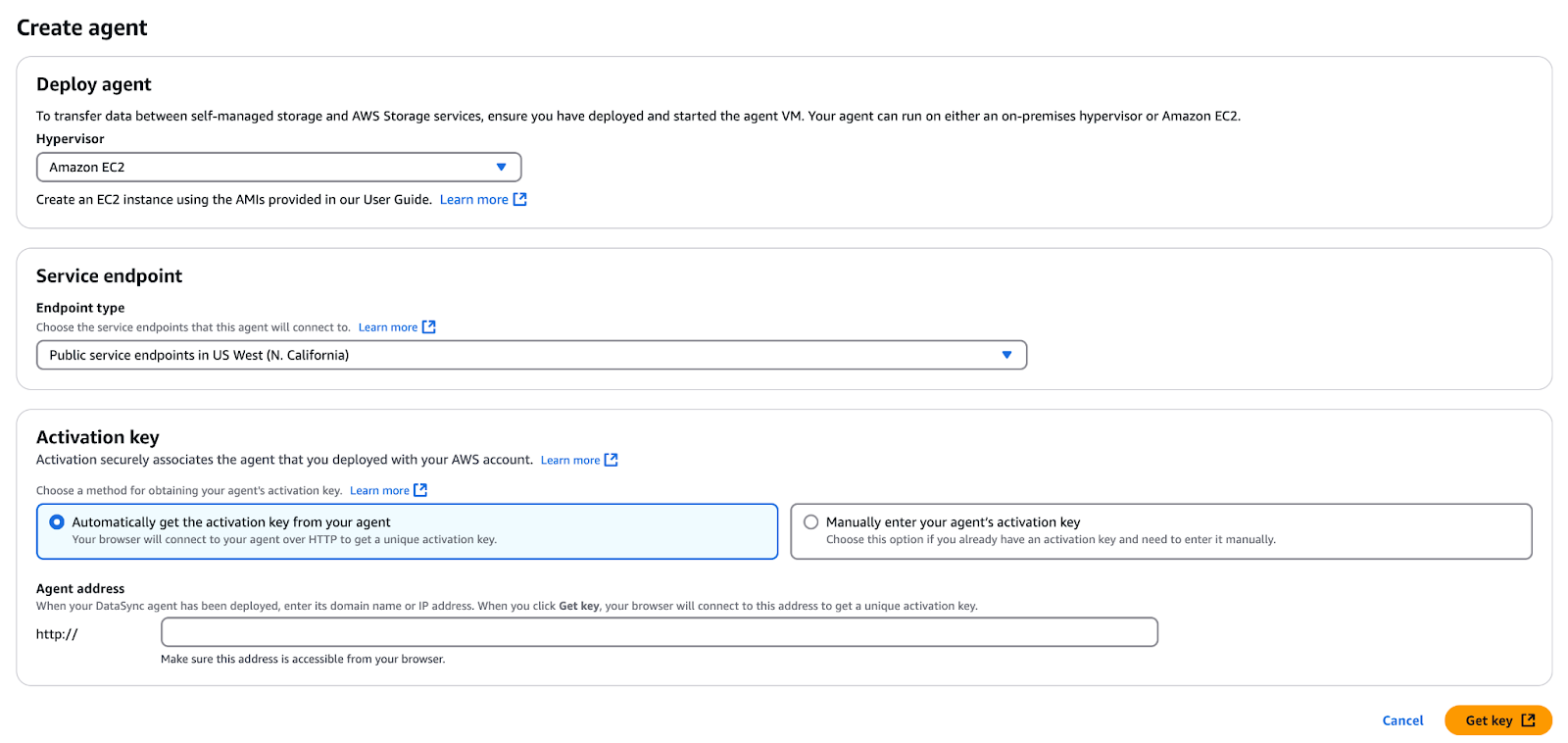

- Navigate to the AWS management console and select the “N. California” region.

- Open the AWS DataSync service.

- If no agents exist, click Get started; otherwise, click Create agent.

- In the Service endpoint section, leave it as “Public service endpoints…”.

- In the Activation key section, enter the public IP address of the first DataSync agent from the CloudFormation outputs:

  - Agent 1 Public IP: <Output from Cloudformation>

  - Agent 2 Public IP: <Output from Cloudformation>

Figure 9 - Create and activate DataSync agent

- Click Get key to retrieve the activation key.

- Once the activation is successful, enter an Agent name (e.g., "Agent 1" or "Agent 2").

- Apply any tags if needed.

- Click Create agent.

- Repeat the above steps for the second DataSync agent.
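The Get key step can also be scripted: an agent returns its activation key over HTTP when queried with the right parameters. The sketch below uses the URL format from the DataSync documentation for public service endpoints, and assumes port 80 on the agent is reachable from your machine and that no extra query parameters are needed for your setup. activation_url and activate_agent are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: fetch an activation key from an agent and register it with DataSync.
# Assumes port 80 on the agent is reachable and AWS CLI v2 is configured.
# Only prints the commands unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

# URL that makes the agent return its activation key instead of redirecting
activation_url() {
  local agent_ip="$1" region="${2:-$REGION}"
  printf 'http://%s/?gatewayType=SYNC&activationRegion=%s&no_redirect\n' \
    "$agent_ip" "$region"
}

activate_agent() {
  local agent_ip="$1" agent_name="$2" key
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ curl -s $(activation_url "$agent_ip")"
    echo "+ aws datasync create-agent --region $REGION --activation-key <key> --agent-name $agent_name"
    return 0
  fi
  key=$(curl -s "$(activation_url "$agent_ip")") || return 1
  aws datasync create-agent --region "$REGION" \
    --activation-key "$key" --agent-name "$agent_name"
}
```

Run it once per agent, for example activate_agent with Agent 1's public IP and the name "Agent 1", then again for Agent 2.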

Creating a DataSync task

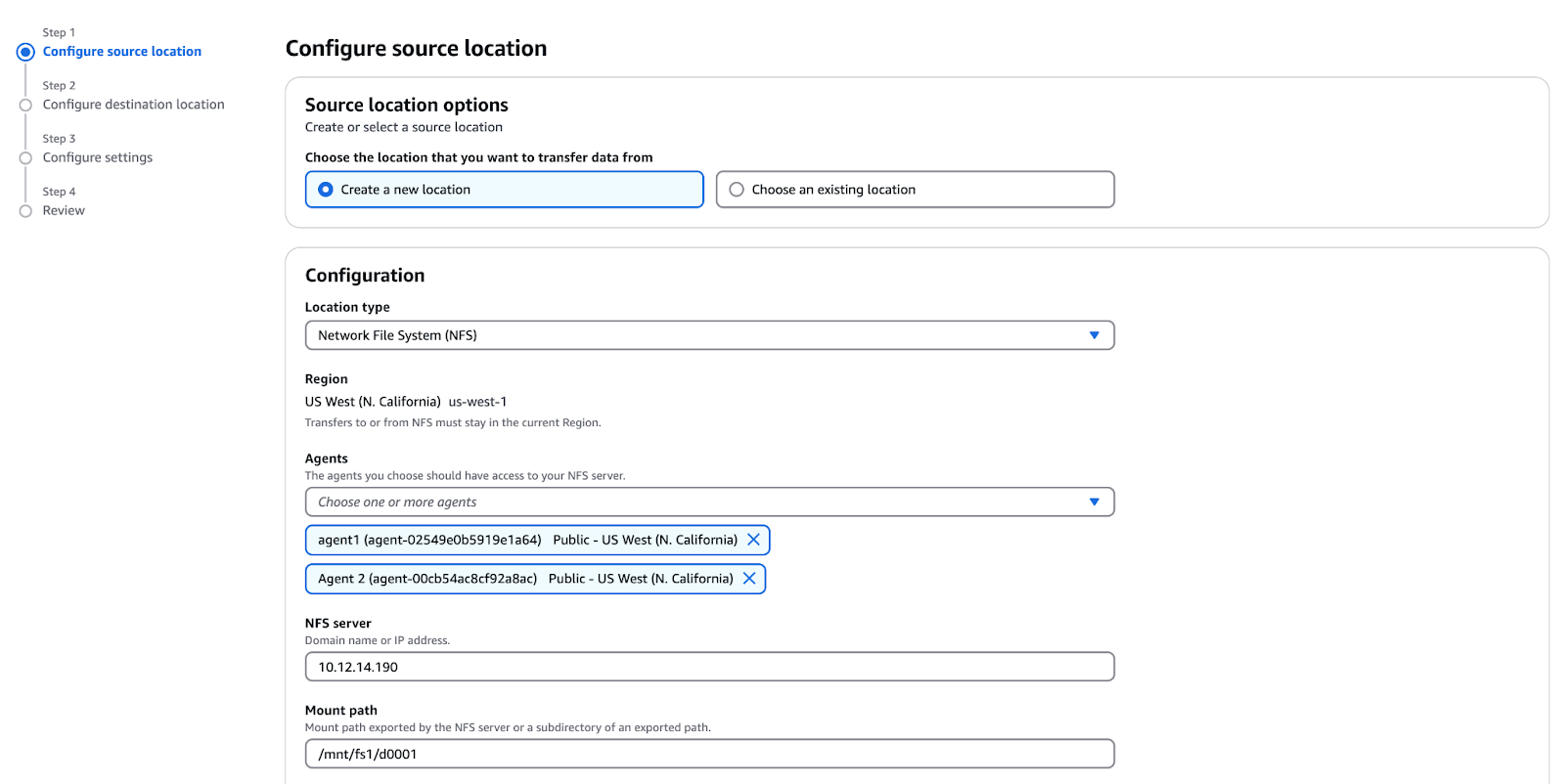

Now, let’s finally create a DataSync task! Follow these steps:

- Go to the AWS DataSync console.

- Click Tasks → Create task.

- Create source location:

- Location type: Network File System (NFS).

- Agents: Select both “Agent 1 private IP” and “Agent 2 private IP”.

- NFS server: Enter the private IP address of the NFS server.

- Mount path: /mnt/fs1/d0001 (copies only the d0001 directory).

Figure 10 - Configuring the source location for AWS DataSync

- Create destination location:

- Location type: Amazon S3.

- Select the S3 bucket from CloudFormation outputs.

- Storage class: Standard.

- IAM Role: Select from CloudFormation outputs
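The same source and destination locations can be created with the CLI. In this sketch, the NFS IP, bucket name, IAM role ARN, and agent ARNs are placeholders for the values in your CloudFormation outputs and DataSync console, and the helper names are hypothetical; commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: create the NFS source and S3 destination locations from the CLI.
# All arguments are placeholders for your own IPs, names, and ARNs.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# DataSync expects a bucket ARN, not a bare bucket name
s3_bucket_arn() {
  printf 'arn:aws:s3:::%s\n' "$1"
}

create_locations() {
  local nfs_ip="$1" bucket="$2" role_arn="$3" agent1="$4" agent2="$5"
  # Source: only the d0001 directory on fs1, reachable through both agents
  run aws datasync create-location-nfs --region "$REGION" \
    --server-hostname "$nfs_ip" --subdirectory /mnt/fs1/d0001 \
    --on-prem-config "AgentArns=$agent1,$agent2" || return 1
  # Destination: the tutorial bucket, written with the S3 Standard class
  run aws datasync create-location-s3 --region "$REGION" \
    --s3-bucket-arn "$(s3_bucket_arn "$bucket")" --s3-storage-class STANDARD \
    --s3-config "BucketAccessRoleArn=$role_arn"
}
```

Each call returns a LocationArn, which is what a task needs as its source or destination.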

Configuring data transfer tasks

Once AWS DataSync is set up, the next step is configuring data transfer tasks. These tasks define how, when, and where data will be transferred. AWS DataSync supports various options for optimizing transfers, including file filtering, scheduling, and monitoring.

- Configure task settings:

- Task name: Test Task

- Exclude patterns: */.htaccess and */index.html

- Transfer mode: Transfer only data that has changed. This setting determines whether DataSync transfers only the data and metadata that differ between the source and the destination.

- Verify data: Select "Verify only the data transferred".

- Enable CloudWatch logging:

- Log group: Select DataSyncLogs-datasync-incloud.

- Click Next, review the settings, and click Create task.
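For reference, the task settings above map onto a single create-task call. This is a sketch assuming the location ARNs and the log group ARN are already known (they are placeholders here): TransferMode=CHANGED corresponds to transferring only data that has changed, VerifyMode=ONLY_FILES_TRANSFERRED to verifying only the data transferred, and multiple exclude patterns are joined with a pipe character.

```shell
#!/usr/bin/env bash
# Sketch: the "Test Task" console settings as one create-task call.
# Location and log group ARNs are placeholders; only prints unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# DataSync filter values separate multiple patterns with "|"
exclude_filter() {
  local IFS='|'
  printf 'FilterType=SIMPLE_PATTERN,Value=%s\n' "$*"
}

create_test_task() {
  local src_arn="$1" dst_arn="$2" log_group_arn="$3"
  run aws datasync create-task --region "$REGION" --name "Test Task" \
    --source-location-arn "$src_arn" --destination-location-arn "$dst_arn" \
    --cloud-watch-log-group-arn "$log_group_arn" \
    --excludes "$(exclude_filter '*/.htaccess' '*/index.html')" \
    --options TransferMode=CHANGED,VerifyMode=ONLY_FILES_TRANSFERRED
}
```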

Performing Data Transfer with AWS DataSync

After configuring a DataSync task, you need to execute the transfer and monitor its progress. This section covers how to start a transfer manually or via the CLI, track its status, and troubleshoot common issues.

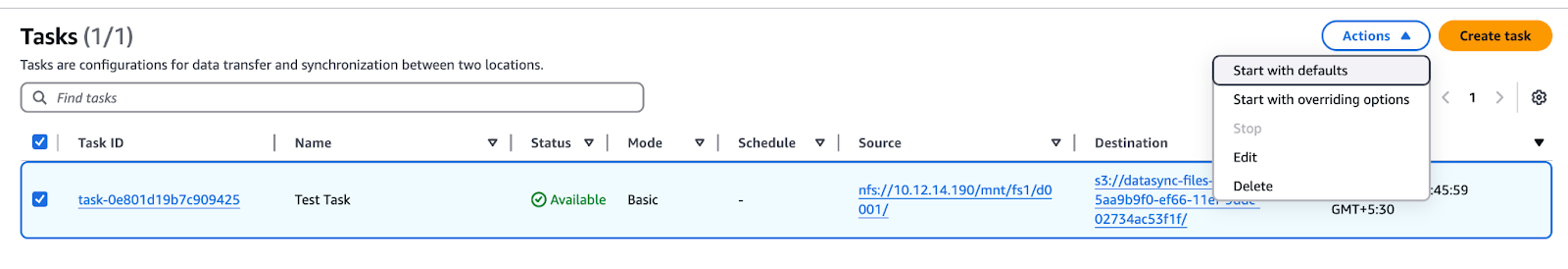

Starting the data transfer

- Wait for the task status to change from "Creating" to "Available."

- Click the Start button, review settings, and click Start.

Figure 11 - AWS DataSync task list showing options to start, edit, or delete the task

- Monitor the task progress:

- It will transition through these statuses: Launching → Preparing → Transferring → Verifying → Success.
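To follow the same status sequence from a script, you can start the execution and poll its status. This is a minimal sketch assuming AWS CLI v2; the 30-second interval and the helper names are arbitrary choices, and it treats SUCCESS and ERROR as the final states.

```shell
#!/usr/bin/env bash
# Sketch: start a task execution and poll its status until it finishes.
# The task ARN is a placeholder; assumes AWS CLI v2 is configured.
REGION="${REGION:-us-west-1}"

# SUCCESS and ERROR are the statuses after which an execution stops changing
is_terminal_status() {
  case "$1" in
    SUCCESS|ERROR) return 0 ;;
    *) return 1 ;;
  esac
}

start_and_wait() {
  local task_arn="$1" exec_arn status
  exec_arn=$(aws datasync start-task-execution --region "$REGION" \
    --task-arn "$task_arn" --query TaskExecutionArn --output text) || return 1
  while :; do
    status=$(aws datasync describe-task-execution --region "$REGION" \
      --task-execution-arn "$exec_arn" --query Status --output text) || return 1
    echo "$(date +%T) $status"   # LAUNCHING, PREPARING, TRANSFERRING, ...
    is_terminal_status "$status" && break
    sleep 30
  done
  [ "$status" = "SUCCESS" ]   # exit status 0 only if the run succeeded
}
```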

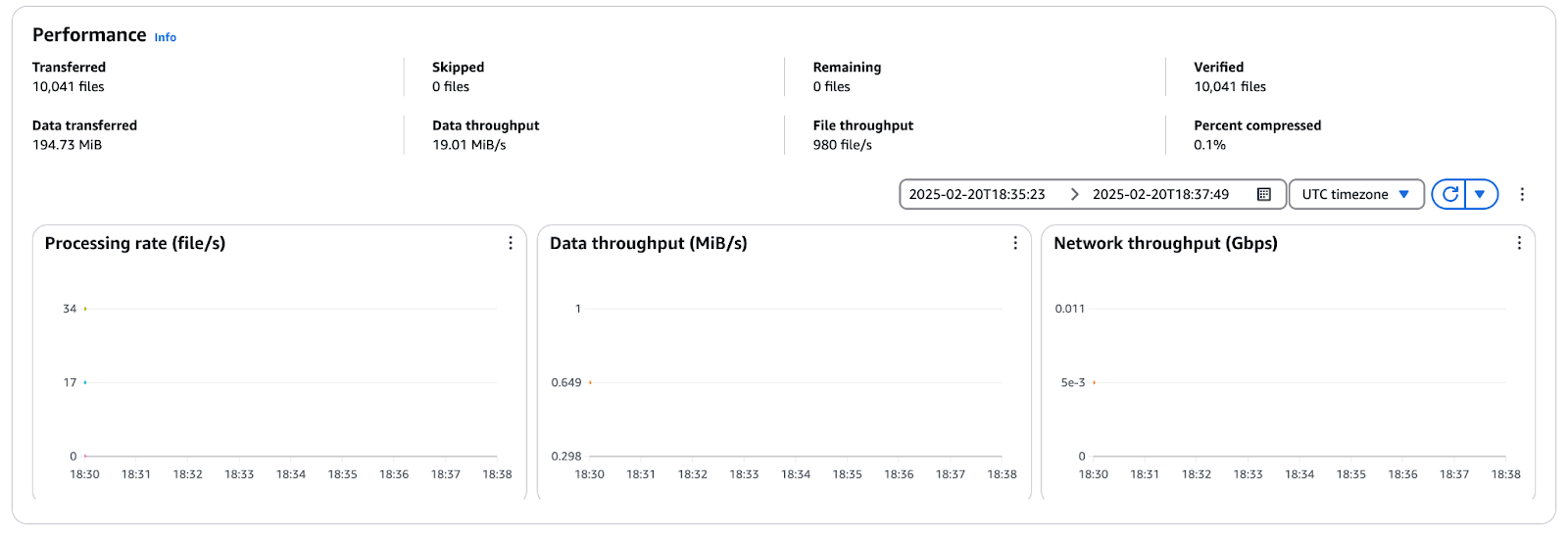

Monitoring the transfer progress

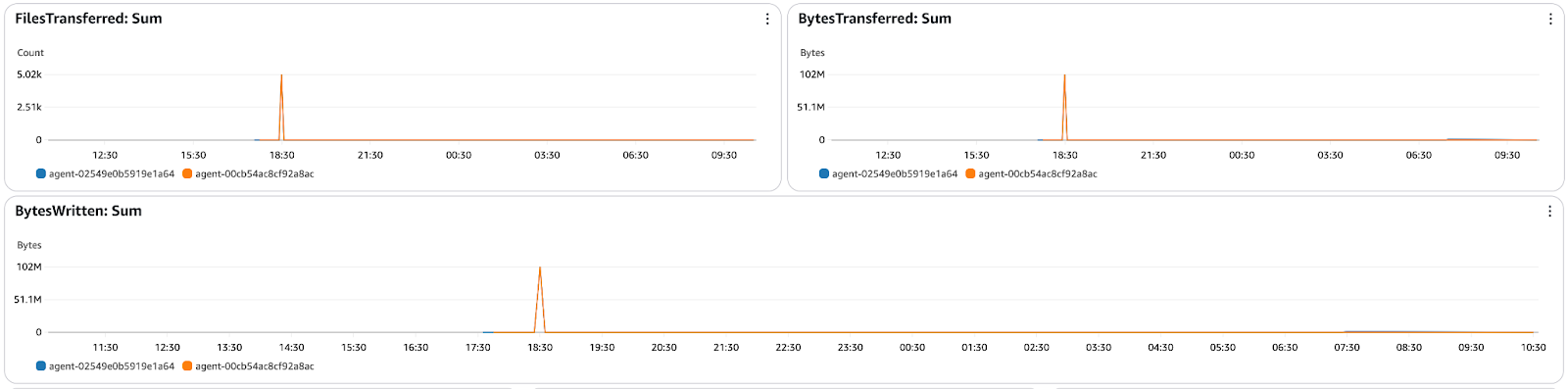

Click on the Task history tab and select the execution object to see transfer statistics in the task.

Figure 12 - AWS DataSync performance metrics showing file throughput, data throughput, and network utilization

Troubleshooting data transfer issues

During AWS DataSync transfers, common issues such as connectivity failures, permission errors, or slow transfer speeds may occur. To troubleshoot:

- Check CloudWatch logs – Identify errors related to file permissions, agent connectivity, or failed transfers.

- Verify network connectivity – Ensure the DataSync agents can reach both the source (NFS/SMB) and the destination (S3, EFS, FSx).

- Review IAM permissions – Confirm the DataSync service role has the necessary permissions to read/write data.

- Inspect agent configuration – Ensure agents are correctly activated, healthy, and able to communicate with the AWS DataSync service.

Post-Transfer Steps and Data Synchronization

Once the data transfer is complete, it's important to verify the integrity of the transferred files and set up ongoing synchronization if needed. This section explains how to check data accuracy, schedule incremental syncs, and clean up unused resources to optimize costs.

Verifying data integrity

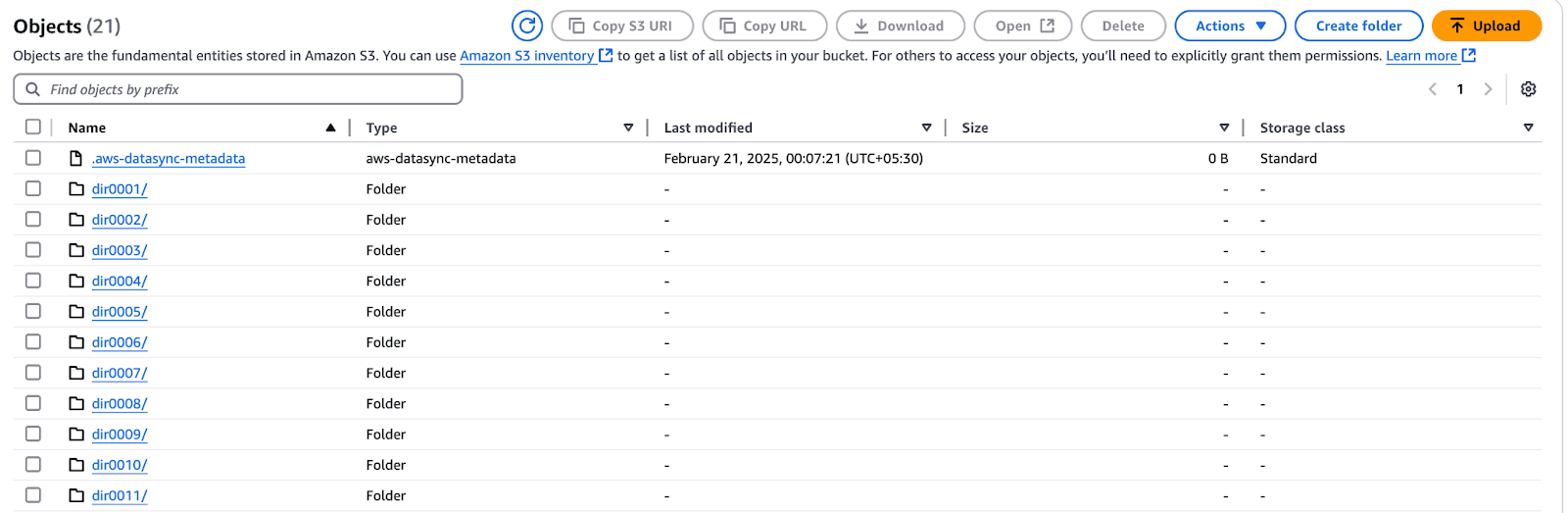

- Open the AWS S3 console and navigate to the bucket starting with “datasync”.

- Browse the bucket and verify that all expected files were transferred.

Figure 13 - AWS S3 bucket showing files transferred via AWS DataSync

- Ensure that .htaccess and index.html files were not copied.

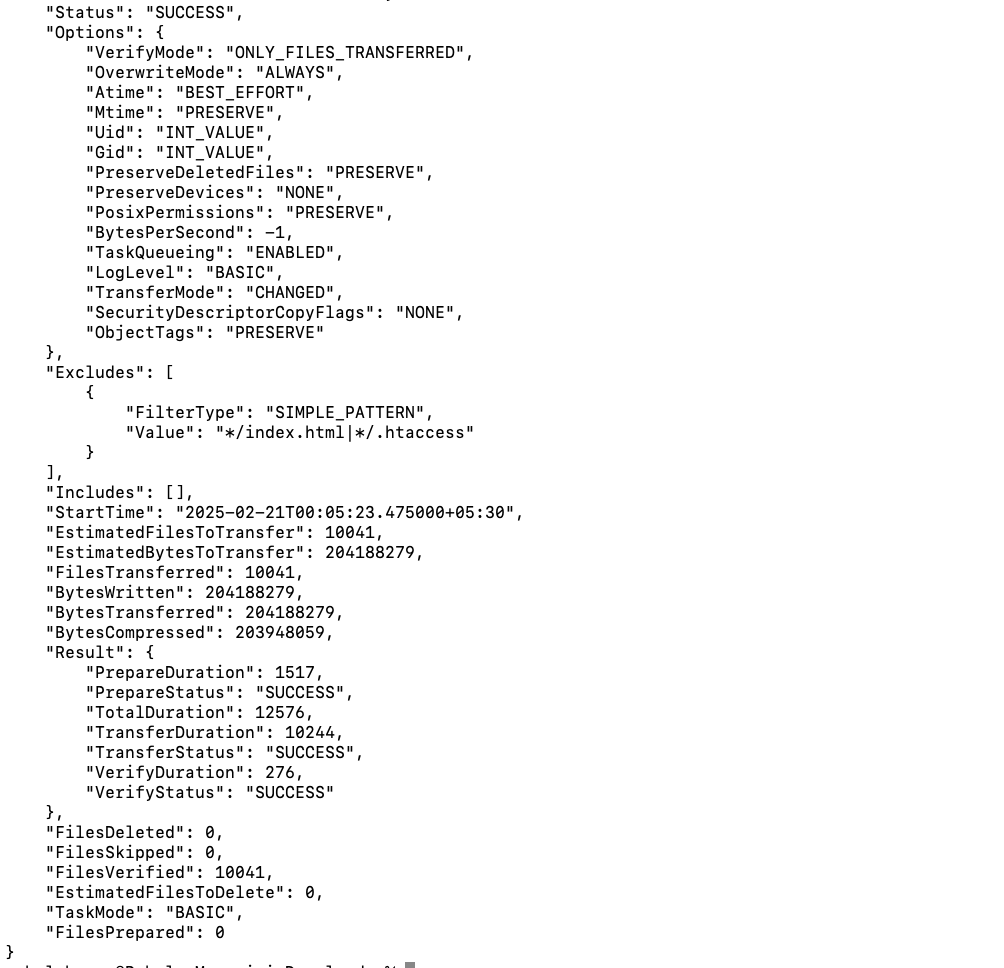

You can also check the task execution details via the AWS CLI:

- Get the task execution ARN:

```shell
aws datasync list-task-executions --region us-west-1 | grep exec-
```

- Describe the task execution:

```shell
aws datasync describe-task-execution --region us-west-1 --task-execution-arn <task-execution-arn>
```

Figure 14 - AWS DataSync task execution details

- Review key performance metrics: EstimatedFilesToTransfer, BytesTransferred, transfer speed, and duration.
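To turn those fields into a single number, here is a rough sketch, assuming the execution has finished. It reads BytesTransferred and Result.TotalDuration (reported in milliseconds) through the CLI's --query option; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: summarize a finished execution as bytes, duration, and MiB/s.
# The execution ARN is a placeholder; assumes AWS CLI v2 is configured.
REGION="${REGION:-us-west-1}"

# Average MiB per second from a byte count and a duration in milliseconds
throughput_mib_s() {
  awk -v b="$1" -v ms="$2" 'BEGIN {
    if (ms + 0 <= 0) { print "n/a"; exit }
    printf "%.2f\n", (b / 1048576) / (ms / 1000)
  }'
}

execution_summary() {
  local exec_arn="$1" bytes ms
  # Text output prints both queried fields on one tab-separated line
  read -r bytes ms <<EOF
$(aws datasync describe-task-execution --region "$REGION" \
    --task-execution-arn "$exec_arn" \
    --query '[BytesTransferred, Result.TotalDuration]' --output text)
EOF
  echo "bytes=$bytes duration_ms=$ms throughput=$(throughput_mib_s "$bytes" "$ms") MiB/s"
}
```

TotalDuration also covers the preparing and verifying phases, so this figure understates raw network speed; Result.TransferDuration isolates the transfer phase if you need a closer estimate.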

Ongoing data synchronization

In real-world scenarios, files continue to be added and modified after an initial transfer. AWS DataSync supports incremental transfers, ensuring only new or changed files are copied to the destination. In this section, we will modify data on fs2, run an incremental transfer, and optimize the process using filters.

Let’s start by modifying files on fs2.

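- Log in to the NFS server via SSH, using the key pair you downloaded earlier:

```shell
ssh -i datasync.pem ec2-user@<NFS-Server-IP>
```

- Create a new file and update the manifest list:

```shell
cd /mnt/fs2/d0001/dir0001
# Create a 1 MiB file of random data
dd if=/dev/urandom of=newfile1 bs=1M count=1
# Record the new file in the manifest (this also modifies manifest.lst)
echo "newfile1" >> manifest.lst
```

This adds one new file (newfile1) and modifies manifest.lst.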

Then, re-run the task to sync changes:

- Go to the AWS DataSync console.

- Click Tasks → Test Task (previously created task).

- Click Start to launch a new execution.

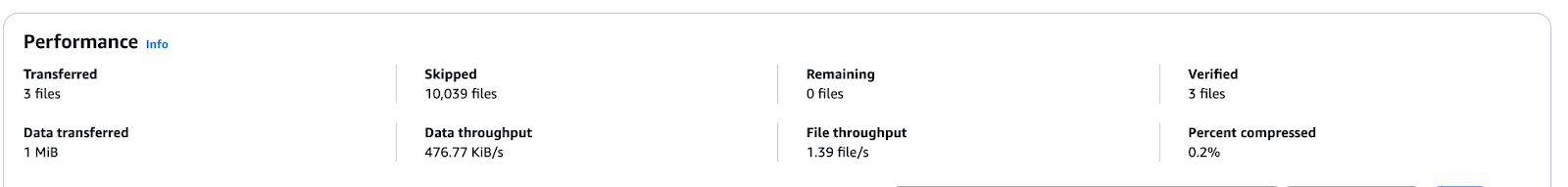

- Monitor progress under the Task history tab.

- Once completed, verify:

- 3 files transferred (newfile1, the updated manifest.lst, and the folder update).

- 1 MiB of data copied to S3.

Figure 15 - AWS DataSync performance metrics showing an incremental transfer

- Validate the transfer in S3:

- Go to the S3 bucket and confirm the presence of newfile1.

- Check manifest.lst for an updated timestamp.

Automating DataSync transfers with scheduled tasks

For environments where data changes frequently, scheduling periodic syncs keeps the destination up to date with minimal manual intervention. AWS DataSync lets you run tasks at regular intervals (e.g., hourly, daily, or weekly), reducing operational overhead.

By setting up a recurring schedule, DataSync will automatically detect and transfer only the new and modified files, optimizing bandwidth usage and transfer efficiency.

To schedule a task:

- Go to the AWS DataSync console and edit an existing task.

- In the Schedule section, select “Daily”, “Weekly”, etc.

- Provide the specific time (optional).

- Save the settings, and DataSync will execute the task at the specified intervals.
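If you manage tasks from the CLI, the schedule is a cron expression attached to the task. The sketch below maps the console choices to example expressions; the 02:00 UTC run time and the Sunday weekly run are arbitrary assumptions, and schedule_expression and update_schedule are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: map schedule choices to DataSync cron expressions (times in UTC).
# The 02:00 run time and the Sunday weekly run are arbitrary examples.
schedule_expression() {
  case "$1" in
    hourly) echo 'cron(0 * * * ? *)' ;;
    daily)  echo 'cron(0 2 * * ? *)' ;;
    weekly) echo 'cron(0 2 ? * SUN *)' ;;
    *) echo "unsupported frequency: $1" >&2; return 1 ;;
  esac
}

# Attach the schedule to an existing task; only prints unless DRY_RUN=0
update_schedule() {
  local task_arn="$1" schedule json
  schedule=$(schedule_expression "$2") || return 1
  # JSON form avoids shorthand-parsing issues with spaces in the expression
  json="{\"ScheduleExpression\":\"$schedule\"}"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ aws datasync update-task --task-arn $task_arn --schedule '$json'"
  else
    aws datasync update-task --task-arn "$task_arn" --schedule "$json"
  fi
}
```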

Cleanup and cost optimization

Once your data transfer is complete, it’s essential to clean up unused resources to avoid unnecessary costs. Follow these steps:

- Stop and delete DataSync tasks:

- Go to the AWS DataSync console, select the task, and choose Stop if it's running.

- Click Actions → Delete to remove completed tasks.

- Delete DataSync agents:

- Navigate to Agents in the DataSync console.

- Select agents and click Delete to remove them.

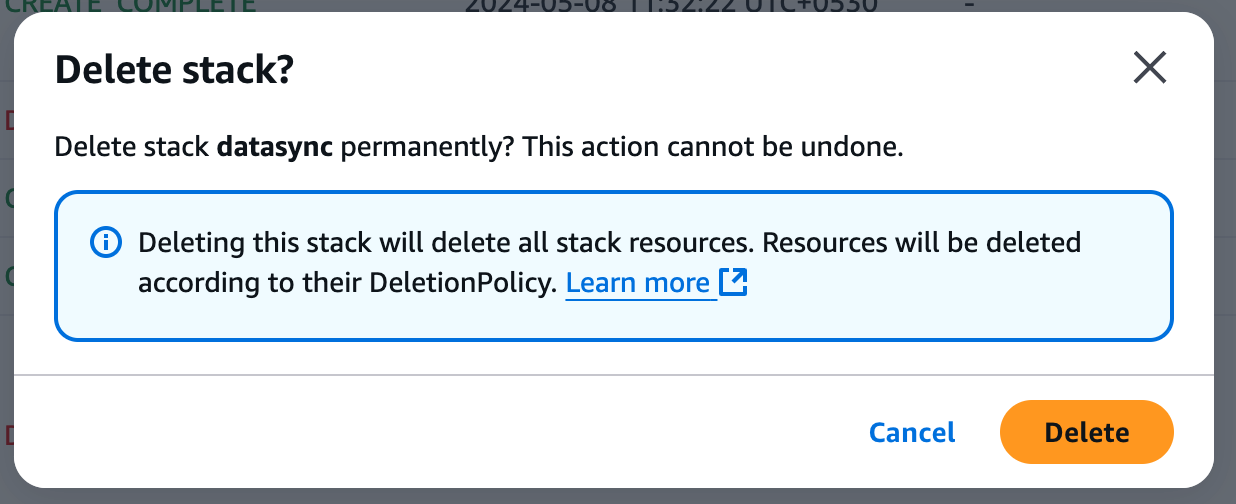

Delete the CloudFormation stack

- Open the AWS CloudFormation Console.

- Select a CloudFormation stack created during the tutorial. You deployed two stacks, so repeat these steps for each one.

- Click Delete (top-right corner).

Figure 15 - Confirming Stack Deletion in AWS

- Confirm the deletion—CloudFormation will automatically remove all associated resources (this may take up to 15 minutes).

- Monitor the CloudFormation console to ensure the stack is fully deleted.
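The cleanup can also be scripted. This sketch assumes you have the task, location, and agent ARNs at hand and that the stack's S3 bucket is not versioned. Emptying the bucket permanently deletes the transferred data, which is needed because CloudFormation cannot delete a non-empty bucket. All names are placeholders, and commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: CLI version of the cleanup steps. All ARNs, the bucket, and the
# stack names are placeholders; only prints unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Delete the task first, then any location and agent ARNs passed after it
delete_datasync_resources() {
  local task_arn="$1" arn
  shift
  run aws datasync delete-task --region "$REGION" --task-arn "$task_arn"
  for arn in "$@"; do
    case "$arn" in
      *:location/*) run aws datasync delete-location --region "$REGION" --location-arn "$arn" ;;
      *:agent/*)    run aws datasync delete-agent --region "$REGION" --agent-arn "$arn" ;;
      *) echo "skipping unrecognized ARN: $arn" >&2 ;;
    esac
  done
}

# Empty the (unversioned) bucket, then delete each stack and wait for it
delete_stacks() {
  local bucket="$1" stack
  shift
  run aws s3 rm "s3://$bucket" --recursive
  for stack in "$@"; do
    run aws cloudformation delete-stack --region "$REGION" --stack-name "$stack"
    run aws cloudformation wait stack-delete-complete --region "$REGION" --stack-name "$stack"
  done
}
```

Passing the stacks in reverse order of creation (the second stack first) is a cautious default in case one depends on resources from the other.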

Optimize AWS DataSync costs

To minimize data transfer expenses, consider these best practices:

- Use incremental transfers – Configure DataSync to transfer only new or modified files to reduce unnecessary data movement.

- Optimize transfer frequency – Schedule tasks based on data change rates rather than running continuous syncs.

- Choose cost-effective storage – Store infrequently accessed data in Amazon S3 Glacier instead of Amazon EFS, which has higher costs.

By properly cleaning up resources and optimizing transfer strategies, you can significantly reduce AWS costs while maintaining efficient data synchronization.

Advanced AWS DataSync Features

Beyond basic transfers, AWS DataSync offers powerful capabilities that improve performance, cost efficiency, and security. This section explores key advanced use cases, including S3 integration and NFS-to-EFS migration.

Using DataSync with Amazon S3

AWS DataSync is a powerful tool for moving large amounts of data to and from S3 and is ideal for backup, archive, and cloud migration tasks. It enhances performance through multipart uploads, which divide large files into smaller parts and then transfer them in parallel. Furthermore, DataSync integration with S3 storage classes helps users save money by migrating less active data to S3 Glacier or S3 Intelligent-Tiering.

In this tutorial, we explained how to configure a DataSync task, set up an NFS source, and move files to an S3 bucket, all while excluding unwanted files. You can use these steps to schedule large data moves with little or no operational impact.

Migrating from NFS to Amazon EFS with DataSync

For organizations that need to migrate on-premises NFS shares to Amazon EFS, AWS DataSync offers an automated, secure, and scalable solution. Whereas Amazon S3 is object storage, Amazon EFS (Elastic File System) is a fully managed file storage service with POSIX compliance, making it a good choice for applications that need shared access and low-latency performance.

Here’s how DataSync helps with NFS to EFS migration:

- Preserves metadata – Retains file permissions, timestamps, and ownership.

- Supports incremental syncs – Copies only changed or new files, reducing transfer costs.

- Automates migration – No need for manual scripting or complex data movement strategies.

Although this tutorial focused on NFS-to-S3 migration, the same DataSync principles apply when migrating to Amazon EFS. The main difference is choosing EFS as the destination location, which suits applications that need a file system that scales dynamically in AWS.
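Switching the destination to EFS mainly changes the location you create. The following sketch assumes an existing EFS file system plus a subnet and security group that can reach one of its mount targets over NFS (TCP port 2049); the account ID and resource IDs are placeholders, and the helpers are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: an EFS destination location instead of S3. Account ID, file system,
# subnet, and security group IDs are placeholders; only prints unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Build the ARNs DataSync expects from plain IDs
efs_arn()    { printf 'arn:aws:elasticfilesystem:%s:%s:file-system/%s\n' "$REGION" "$1" "$2"; }
subnet_arn() { printf 'arn:aws:ec2:%s:%s:subnet/%s\n' "$REGION" "$1" "$2"; }
sg_arn()     { printf 'arn:aws:ec2:%s:%s:security-group/%s\n' "$REGION" "$1" "$2"; }

create_efs_location() {
  local account="$1" fs_id="$2" subnet_id="$3" sg_id="$4"
  # DataSync places network interfaces in this subnet to mount the file system
  run aws datasync create-location-efs --region "$REGION" \
    --efs-filesystem-arn "$(efs_arn "$account" "$fs_id")" \
    --ec2-config "SubnetArn=$(subnet_arn "$account" "$subnet_id"),SecurityGroupArns=$(sg_arn "$account" "$sg_id")"
}
```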

Best Practices for Using AWS DataSync

To get the most out of AWS DataSync, it's essential to follow best practices that enhance speed, security, and cost efficiency. This section covers key strategies to optimize data transfers, ensure data security, and manage monitoring effectively.

Optimize transfer speed and cost

For large-scale data migrations, AWS Direct Connect (DX) is a dedicated private network connection that avoids the public internet and allows faster, more secure transfers with lower latency. If DX is unavailable, other alternatives, like VPN connections or VPC peering, can enhance the transfer speeds while maintaining security.

Moreover, AWS DataSync has built-in compression that lessens the amount of data moved across the network, thus increasing the speeds and minimizing the bandwidth costs. However, since compression uses CPU resources, comparing the performance benefits with the possible system overhead is crucial.

Additionally, task scheduling and configuration can be optimized to improve DataSync performance further. Scheduling transfers for off-peak hours avoids competing with other network traffic and improves bandwidth availability, especially when moving large files. Fine-tuning buffer sizes and the number of parallel transfer streams based on network and storage capacity can significantly improve throughput.

For small file workflows, increasing the level of parallelism reduces the time taken to transfer data. In contrast, effective buffer management enhances performance and reliability for large files.
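DataSync also lets you cap bandwidth through the task's BytesPerSecond option (-1 means unlimited), which keeps a large migration from saturating a shared link during business hours. This sketch assumes you think in MiB/s; the helper names are hypothetical, and commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: cap a task's bandwidth with the BytesPerSecond option (-1 = unlimited).
# The task ARN is a placeholder; only prints unless DRY_RUN=0.
mib_per_s_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

set_bandwidth_limit() {
  local task_arn="$1" bytes
  if [ "$2" = "-1" ]; then
    bytes=-1   # remove the limit
  else
    bytes=$(mib_per_s_to_bytes "$2")
  fi
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ aws datasync update-task --task-arn $task_arn --options BytesPerSecond=$bytes"
  else
    aws datasync update-task --task-arn "$task_arn" --options "BytesPerSecond=$bytes"
  fi
}
```

The update-task-execution command accepts the same BytesPerSecond option if you need to adjust an execution that is already running.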

Ensure data security

All data that AWS DataSync transfers is encrypted in transit with TLS. You should also enable encryption at rest on destination storage services such as Amazon S3, Amazon EFS, and Amazon FSx; for Amazon S3, enable server-side encryption on the bucket.

When assigning IAM roles for DataSync tasks, follow the principle of least privilege: grant DataSync agents and task execution roles only the permissions they need. Rather than relying on tag-based condition keys, use resource-based policies to avoid unintended data changes.
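As an illustration of least privilege, the S3 access role only needs bucket-level listing and object-level read and write actions on the one destination bucket. The policy below is a sketch modeled on the permissions AWS documents for DataSync S3 locations; verify the action list against the current documentation, and treat s3_access_policy as a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: a bucket-scoped policy for the DataSync S3 access role.
# Modeled on AWS's documented S3 location permissions; verify before use.
s3_access_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "BucketLevel",
      "Effect": "Allow",
      "Action": ["s3:GetBucketLocation", "s3:ListBucket", "s3:ListBucketMultipartUploads"],
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Sid": "ObjectLevel",
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload", "s3:DeleteObject", "s3:GetObject",
        "s3:GetObjectTagging", "s3:GetObjectVersion", "s3:GetObjectVersionTagging",
        "s3:ListMultipartUploadParts", "s3:PutObject", "s3:PutObjectTagging"
      ],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}
```

After saving the output to s3-policy.json, you could attach it with aws iam put-role-policy --role-name <role> --policy-name DataSyncS3Access --policy-document file://s3-policy.json; the role and policy names are placeholders.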

For data transfers within a VPC, use VPC endpoints to keep DataSync traffic on the AWS network instead of the public internet. This reduces security risks and can improve performance when moving data between AWS services.
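A DataSync interface endpoint is created with the standard EC2 VPC endpoint API. This is a sketch with placeholder VPC, subnet, and security group IDs. An agent's service endpoint type is chosen when you activate it, so an agent activated with public endpoints (as in this tutorial) would need a new activation to use the VPC endpoint. Check the DataSync network requirements for the ports the endpoint's security group must allow.

```shell
#!/usr/bin/env bash
# Sketch: an interface VPC endpoint for DataSync. VPC, subnet, and security
# group IDs are placeholders; only prints unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Regional service name for the DataSync interface endpoint
datasync_endpoint_service() {
  printf 'com.amazonaws.%s.datasync\n' "${1:-$REGION}"
}

create_datasync_endpoint() {
  local vpc_id="$1" subnet_id="$2" sg_id="$3"
  run aws ec2 create-vpc-endpoint --region "$REGION" \
    --vpc-endpoint-type Interface --vpc-id "$vpc_id" \
    --service-name "$(datasync_endpoint_service)" \
    --subnet-ids "$subnet_id" --security-group-ids "$sg_id"
}
```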

Run the DataSync agent securely, following current recommendations for OS security and network segmentation. Keep the agent up to date, and enforce security group rules that deny unauthorized access.

Monitor and manage transfers effectively

Effective monitoring helps ensure that AWS DataSync transfers succeed and perform well. This tutorial has shown how to integrate with CloudWatch for real-time tracking of task execution, transfer speeds, error rates, and throughput. Reviewing task logs helps identify issues, check file integrity, and troubleshoot failed transfers.

Figure 16 - AWS DataSync CloudWatch monitoring dashboard showing files transferred, bytes transferred

CloudWatch alarms can also notify you when transfers fail or run into performance issues, so you can address problems before they escalate.

Troubleshooting AWS DataSync

While AWS DataSync automates data transfers, you may encounter connectivity issues, permission errors, or data inconsistencies. This section covers solutions to common problems and explains how to debug using logs to keep data synchronization running smoothly.

Common errors and how to fix them

AWS DataSync users may encounter timeouts, permission issues, or data integrity errors during transfers. Here are some common problems and their solutions:

- Task timeout or slow transfers

- Cause: Network congestion, bandwidth limits, or high file counts.

- Solution: Increase bandwidth allocation and schedule transfers during off-peak hours.

- Permission denied errors

- Cause: IAM roles or NFS/SMB access restrictions.

- Solution: Ensure the DataSync IAM role has the correct permissions and verify NFS export or SMB share permissions allow agent access.

- Data integrity issues (corrupt or missing files)

- Cause: Incomplete transfers, interruptions, or verification misconfigurations.

- Solution: Enable data verification mode in task settings, check logs for skipped or failed files, and re-run the task if necessary.

- Agent connection failures

- Cause: Network misconfiguration, firewall blocks, or incorrect activation.

- Solution: Ensure the agent has internet access or configure VPC endpoints for private connectivity. If needed, re-register the agent.
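To spot agent problems quickly across several agents, you can filter the output of list-agents for anything not ONLINE. This is a small sketch; report_offline_agents is a hypothetical helper that reads tab-separated name and status pairs, such as the text output of the command shown in its comment.

```shell
#!/usr/bin/env bash
# Sketch: flag agents that are not ONLINE. Reads "name<TAB>status" lines, e.g.
# the output of:
#   aws datasync list-agents --query 'Agents[].[Name,Status]' --output text
report_offline_agents() {
  local name status bad=0 tab
  tab=$(printf '\t')
  while IFS="$tab" read -r name status; do
    [ -z "$name" ] && continue
    if [ "$status" != "ONLINE" ]; then
      echo "agent '$name' is $status: check network access and activation"
      bad=1
    fi
  done
  return "$bad"   # non-zero if any agent needs attention
}
```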

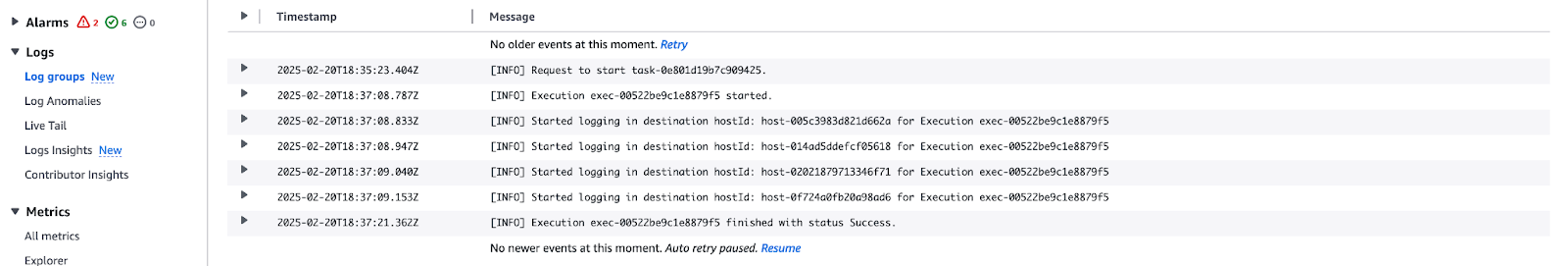

Debugging with logs and CloudWatch

To effectively troubleshoot AWS DataSync tasks, logs provide valuable insights into failed transfers, skipped files, and network errors. This tutorial has shown CloudWatch integration for monitoring and debugging DataSync executions.

Here’s how to check DataSync task logs:

- Navigate to CloudWatch Logs → Open the log group associated with the DataSync task.

- Look for error messages or transfer failures related to permission issues, timeouts, or network errors.

Figure 17 - AWS CloudWatch log stream for DataSync task execution

Then, set up CloudWatch Alarms for failures:

- Go to CloudWatch Metrics → Select AWS/DataSync.

- Create alarms for high failure rates, transfer speed drops, or agent connectivity issues.
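As a concrete example of a transfer-speed alarm, the sketch below alarms when a task moves fewer than a given number of bytes in an hour. The TaskId dimension name, the one-hour period, and the SNS topic are assumptions to confirm against the metrics you see under AWS/DataSync. An incremental run with nothing to copy can also trip a low threshold, so tune it to your change rate.

```shell
#!/usr/bin/env bash
# Sketch: alarm when a task moves fewer than MIN_BYTES in an hour.
# Assumed: the TaskId dimension name, the one-hour period, and the SNS topic.
# Only prints the command unless DRY_RUN=0.
REGION="${REGION:-us-west-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Metric dimensions use the task ID (task-...), i.e. the last ARN segment
task_id_from_arn() {
  printf '%s\n' "${1##*/}"
}

create_low_throughput_alarm() {
  local task_arn="$1" topic_arn="$2" min_bytes="$3" task_id
  task_id=$(task_id_from_arn "$task_arn")
  # notBreaching: hours with no datapoints (idle task) do not trigger the alarm
  run aws cloudwatch put-metric-alarm --region "$REGION" \
    --alarm-name "datasync-${task_id}-low-throughput" \
    --namespace AWS/DataSync --metric-name BytesTransferred \
    --dimensions "Name=TaskId,Value=${task_id}" \
    --statistic Sum --period 3600 --evaluation-periods 1 \
    --threshold "$min_bytes" --comparison-operator LessThanThreshold \
    --treat-missing-data notBreaching --alarm-actions "$topic_arn"
}
```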

Conclusion

AWS DataSync simplifies automated, secure, and efficient data transfers between on-premises environments and AWS storage services like Amazon S3, EFS, and FSx. This tutorial provided a practical, hands-on approach to configuring DataSync, setting up NFS as a source, and transferring data while ensuring security and performance.

We explored incremental transfers, task scheduling, and CloudWatch monitoring to optimize DataSync for cost, speed, and reliability. Additionally, troubleshooting steps and log analysis techniques help diagnose and resolve transfer issues effectively.

If you're new to AWS or want to deepen your understanding of cloud concepts and services, I recommend you check out these related learning resources:

- AWS Concepts

- AWS Cloud Technology and Services

- AWS Security and Cost Management

- AWS Cloud Practitioner (CLF-C02) Certification Track

These courses are a great way to build foundational knowledge and prepare for real-world cloud scenarios using AWS!


Rahul Sharma is an AWS Ambassador, DevOps Architect, and technical blogger specializing in cloud computing, DevOps practices, and open-source technologies. With expertise in AWS, Kubernetes, and Terraform, he simplifies complex concepts for learners and professionals through engaging articles and tutorials. Rahul is passionate about solving DevOps challenges and sharing insights to empower the tech community.