
AWS generative AI represents the future of cloud services because it allows businesses to create automated content and knowledge work through foundation models that produce text, images, and video in real time.

In this article, I provide a comprehensive overview of the essential elements of deployment methods and governance frameworks required to develop production-ready generative AI solutions.

AWS is the leading cloud provider because it offers Amazon Bedrock alongside specialized compute infrastructure and broad integration capabilities that surpass other cloud platforms.

If you're new to AWS, this introductory course provides a solid foundation before diving into generative AI specifics.

Understanding Core Components

In this section, we will explore the building blocks that power AWS generative AI.

Foundation model ecosystem

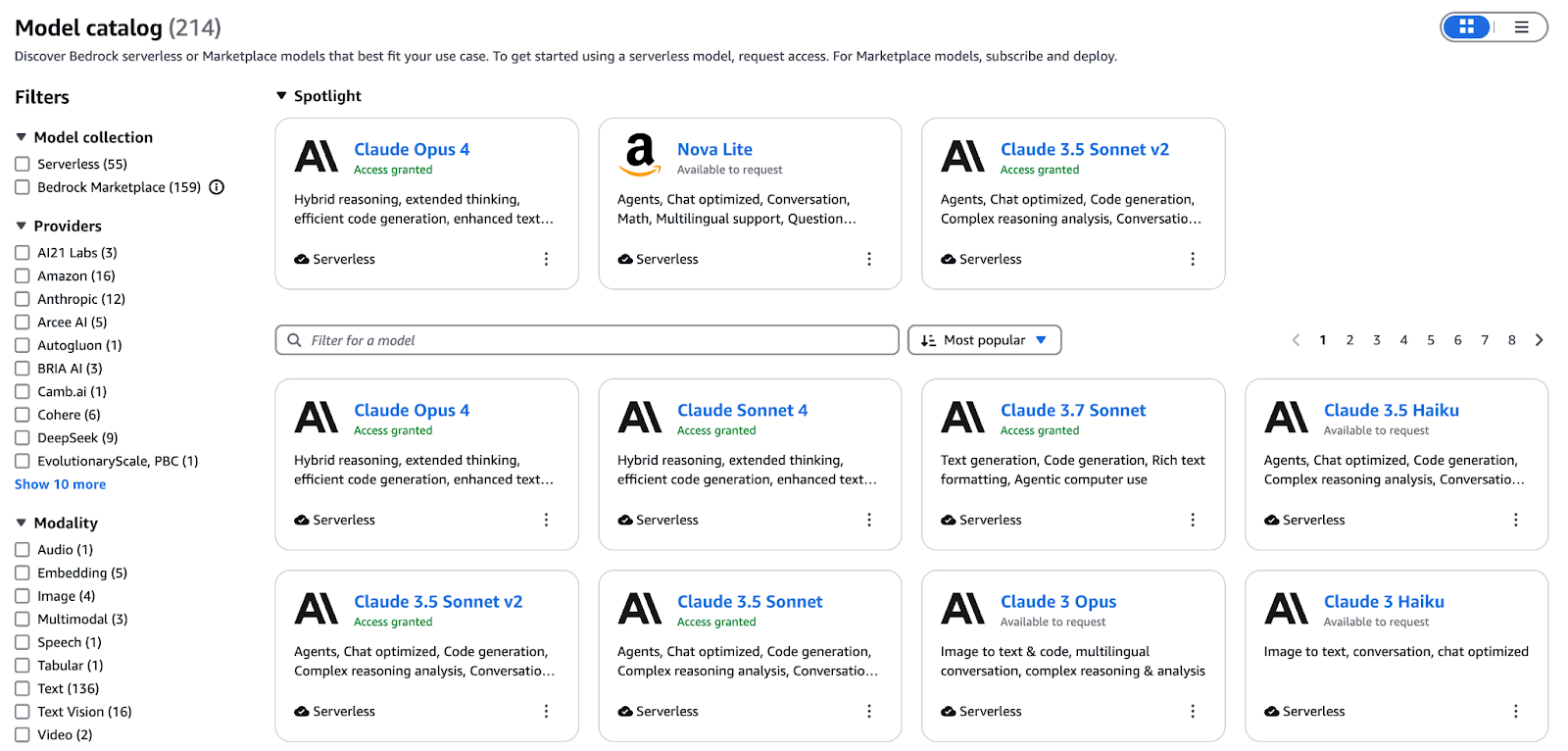

Bedrock serves as the managed control plane for all generative AI on AWS. It exposes a consistent API for deploying, scaling, and securing foundation models from endpoint provisioning to billing, so you can focus on crafting prompts and integrating AI instead of managing infrastructure.

A conceptual diagram illustrating the AWS foundation model ecosystem

The Bedrock catalogue unifies models for text, image, video, and embeddings under a single interface. Key offerings include:

- Titan Text (Premier, Express, Lite): Up to 32K-token context windows for complex dialogues

- Nova Canvas: High-fidelity text-to-image generation and inpainting for brand-safe creative assets

- Nova Reel: Short animated video clips generated from a single prompt

- Titan Multimodal Embeddings G1: Encodes text and images into a shared vector space for similarity search and RAG

Fine-tune models on your labelled data or continue pre-training on domain-specific corpora via the Bedrock console or SDK. Once training is completed, Bedrock’s evaluation suite runs side-by-side comparisons reporting on the accuracy, latency, and cost per token so you can objectively choose the optimal variant for production SLAs.

Beyond AWS’ Titan and Nova families, Bedrock gives you access to partner models from Anthropic, Cohere, Mistral, AI21 Labs, and more. Leverage this breadth to:

- Build RAG-powered knowledge assistants that surface precise answers from internal wikis

- Generate marketing copy and imagery at scale with a consistent brand voice

- Embed AI-driven code completion and review into developer workflows

- Augment training datasets by synthesizing text, images, or video tailored to your domain

To dive deeper into how Bedrock orchestrates these foundation models in production environments, check out this complete guide to Amazon Bedrock.

AI-optimized compute infrastructure

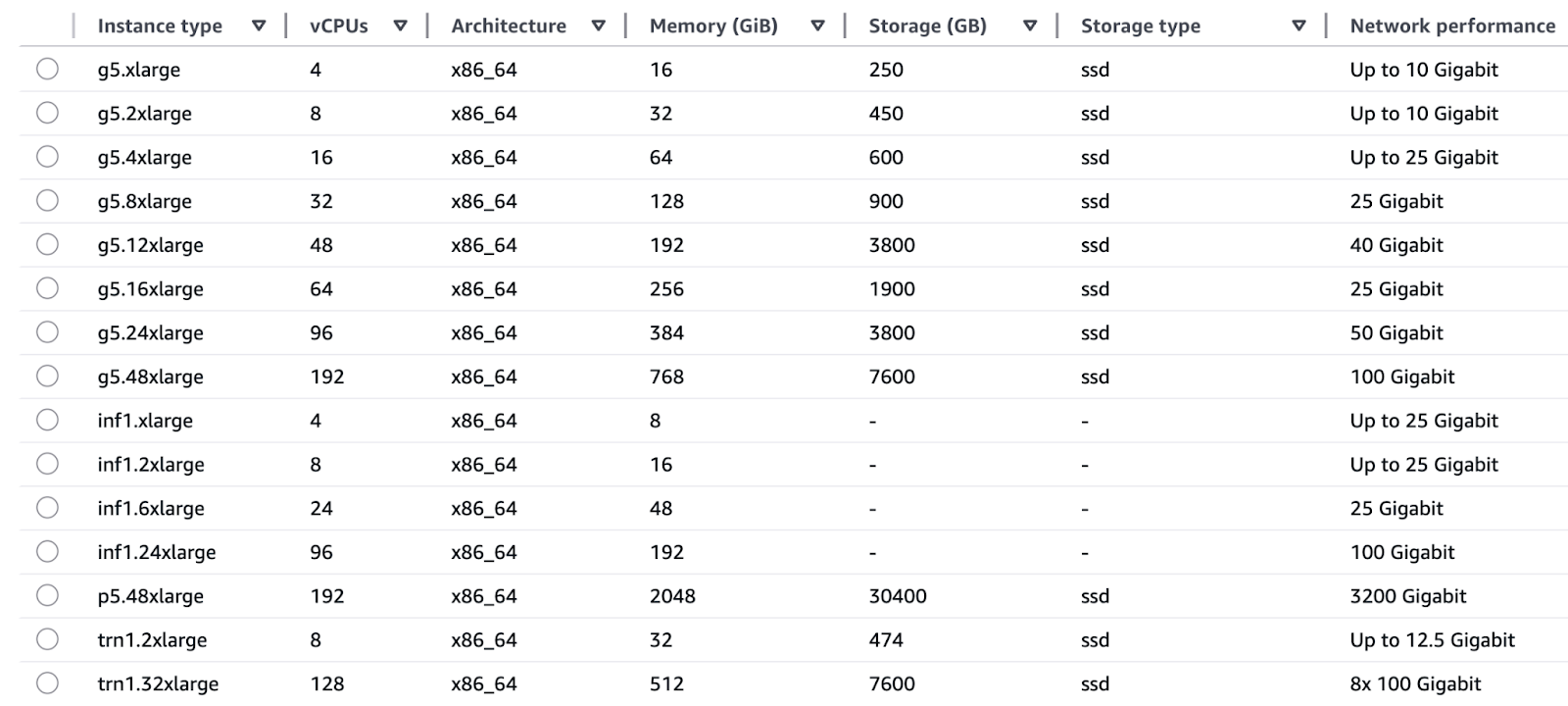

The EC2 instance families provided by AWS exist to meet the distinct needs of training and inference operations.

Training:

- Trn1 instances use AWS Trainium chips for deep-learning training, including LLMs and diffusion models, at up to 3 times the speed and a lower cost per hour than comparable GPU-based instances.

- The P5 and P4 (NVIDIA H100 and A100 GPUs) offer extensive parallel processing capabilities through mixed-precision support, which suits both research-level testing and large-scale production retraining processes.

Inference:

- The AWS Inferentia chips used in Inf1 and Inf2 instances enable real-time inference at single-digit millisecond latencies while providing a 2× lower cost per inference than GPU alternatives.

- G5 and G4dn (NVIDIA A10G and T4 GPUs) provide a cost-effective balance of performance that suits real-time GPU acceleration in chatbot and recommendation engine applications.

An illustrative diagram of AWS's AI-optimized compute infrastructure.

AWS integrates its custom silicon, Trainium for training and Inferentia for inference, directly with its software stack and services. This vertical integration yields:

- The superior price-performance ratio across TensorFlow and PyTorch workloads leads to a lower total cost of ownership.

- The combination of energy efficiency and simplified procurement helps teams escape GPU vendor lock-in.

- SageMaker and Bedrock implement automatic right-sizing features that enable users to select optimal silicon configurations through simple clicks without needing to manage chip-level details.

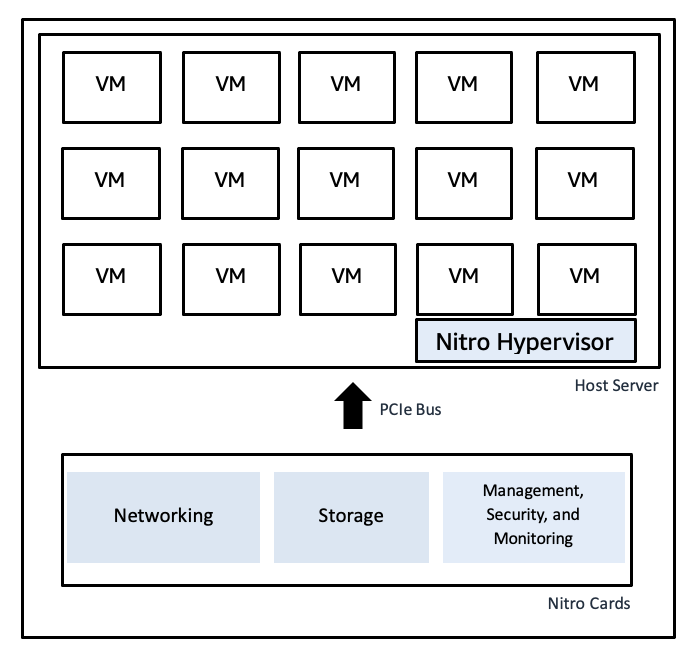

The AWS Nitro System uses dedicated hardware cards to perform virtualization functions, thus providing fast bare-metal speeds together with secure isolation:

- The Nitro Hypervisor maintains a minimal codebase, which reduces vulnerabilities while keeping workloads separate from each other.

- The hardware-accelerated networking and storage paths operate at high I/O throughput and low jitter levels, which prevent latency spikes for delivering consistent peak-load performance.

- The integration of Nitro with CloudTrail, CloudWatch, and AWS Config provides end-to-end compliance monitoring without any performance degradation.

An illustrative diagram of AWS's Nitro Hypervisor


Explore the broader ecosystem of compute, storage, and analytics in this course on AWS Cloud Technology and Services.

Data foundations for robust generative AI

Model training at high velocity and RAG pipelines need access to data that is clean and properly governed:

- Amazon S3 provides petabyte-scale storage and integrates with Lake Formation to ingest, catalogue, and secure raw and curated datasets.

- The data warehousing solution Amazon Redshift operates as a platform for structured tables that provide fast analytics and data retrieval.

- The data transformation pipelines built with AWS Glue and Athena enable both feature and context preparation for generative workloads and ad-hoc query execution.

- Data governance and lineage mechanisms ensure model training uses trusted inputs while enterprise knowledge grounds outputs through access control and provenance tracking.

Enterprise integration framework

AWS generative AI capabilities directly integrate with its broader ecosystem to deliver end-to-end production-grade solutions. This section describes the process of combining Bedrock with its companion services to develop actual generative AI applications.

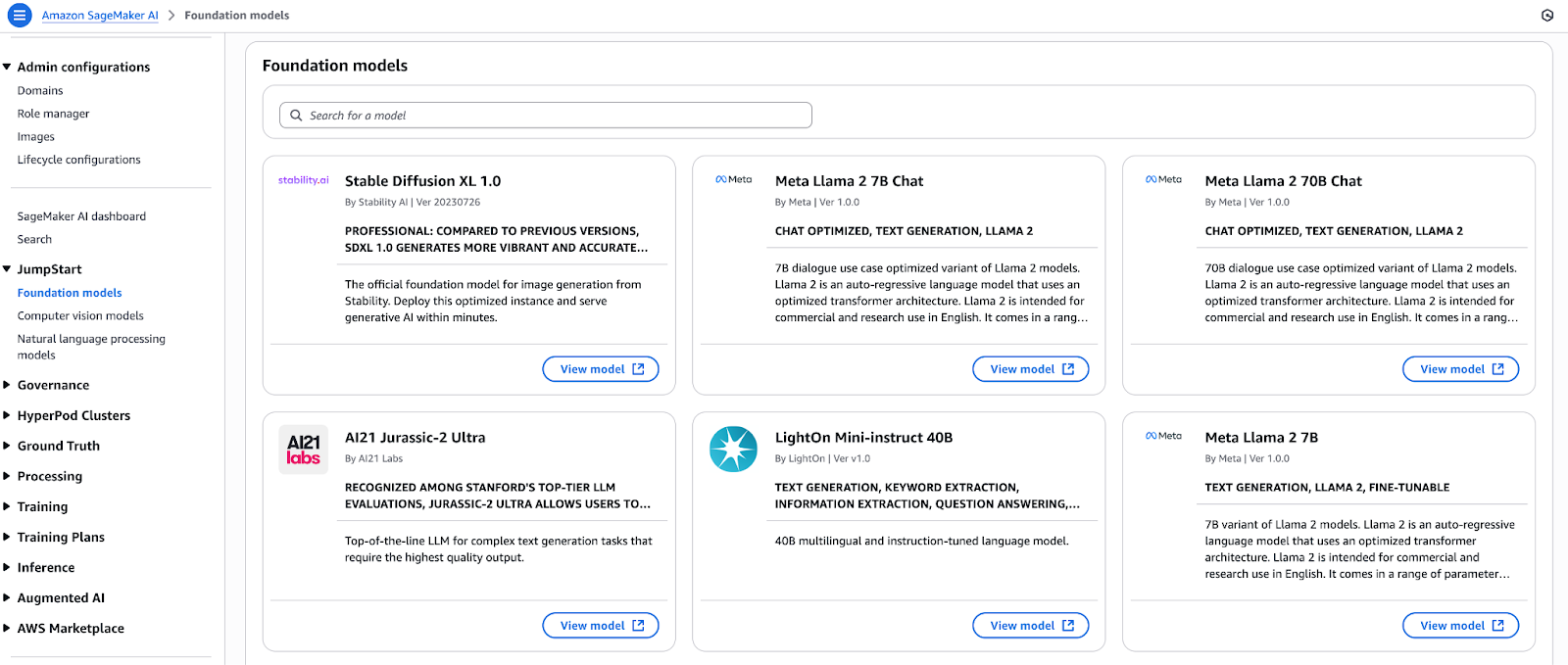

Unified ML lifecycle with SageMaker JumpStart and Bedrock

Select foundation models from SageMaker JumpStart’s model hub and immediately deploy them. You can deploy numerous open-source and proprietary models through a single button before registering them directly into Amazon Bedrock. The models entered into Bedrock gain access to:

- Guardrails for content moderation and policy enforcement.

- Knowledge bases that provide Retrieval-Augmented Generation (RAG) over private data sources.

- The Converse API, an abstraction layer that smooths over model-specific differences.

The integrated architecture allows developers to create prototypes in SageMaker Studio and then enhance them in Bedrock before deploying them through CI/CD pipelines without code modification.
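As a minimal sketch of what that model-agnostic layer looks like from code, the snippet below builds a Converse request and sends it with boto3. The model ID, region, and helper names are assumptions for illustration; the request and response shapes follow the `bedrock-runtime` `converse` operation.

```python
def build_converse_request(model_id, user_text, max_tokens=256, temperature=0.2):
    """Build keyword arguments for the bedrock-runtime `converse` call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def converse(request, region="us-east-1"):
    """Send the request to Bedrock and return the first text block of the reply."""
    import boto3  # imported lazily so the builder works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]


# Swapping models only changes the model ID; the message format stays the same.
request = build_converse_request(
    "amazon.titan-text-express-v1",  # assumed model ID; use one enabled in your account
    "Summarize the benefits of retrieval-augmented generation in two sentences.",
)
```

Calling `converse(request)` requires AWS credentials and model access in the chosen region; the request builder itself can be unit-tested offline.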

This SageMaker tutorial walks through the process of fine-tuning and deploying models using AWS’s flagship ML platform.

An illustrative diagram showing the unified ML lifecycle with SageMaker JumpStart and Bedrock.


Orchestrating workflows with Amazon Bedrock Agents

Through Bedrock Agents, you can integrate generative AI into your operational applications by automatically fetching data and transforming it before executing actions. An Agent requires only basic configuration to:

- Establish secure connections to RESTful APIs, databases, and message queues.

- Ingest documents, metrics, and log data, and preprocess them before passing them to models.

- Run background operations such as enrichment, validation, and notification through sequential function calls.

- Generate structured or conversational outputs for downstream systems.

The plumbing functions of Agents enable developers to concentrate on business logic instead of integration code, thus speeding up time-to-value.

Building conversational assistants with Amazon Q

Amazon Q serves as a fully managed service to develop RAG-powered business assistants.

The service integrates Bedrock multi-model functionality with:

- Preconfigured connectors for data warehouses (Redshift), search engines (OpenSearch), and BI tools.

- Built-in management of multi-turn dialogues, session context, and citation-style responses.

- A low-code UI builder for rapid prototyping.

Through Q, enterprise organizations create customer-facing chatbots along with executive dashboards and domain-specific help desks, which they deploy within weeks instead of months.

The Well-Architected Generative AI Lens implements best practices throughout every step of generative workload development.

Well-Architected Generative AI Lens

The Generative AI Lens extends the six pillars of the AWS Well-Architected Framework to generative workloads. For operational excellence, it covers automated model monitoring, drift detection, retraining triggers, versioned artifact management, and inference metric tracking.

The remaining five pillars translate into the following practices:

- Security: Enforce least-privilege IAM, use PrivateLink for Bedrock endpoints, encrypt data in transit and at rest, and apply content-filtering guardrails.

- Reliability: Deploy endpoints across multiple AZs, and use auto-scaling, circuit breakers, and canary deployments for low-risk rollouts.

- Performance efficiency: Choose optimal instance families, cache frequent prompts and batch requests, and leverage serverless endpoints to scale to zero.

- Cost optimization: Select models based on latency requirements, use Spot or reserved capacity, minimize token usage through prompt engineering, and analyze spend in Cost Explorer.

- Sustainability: Right-size compute, use energy-efficient chips (Trainium, Inferentia), archive cold data in S3 Glacier, and adopt serverless pipelines to reduce idle resources.
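To make the least-privilege recommendation in the Security pillar concrete, here is a hedged sketch of an IAM policy document that allows invoking a single foundation model and nothing else. The region, model ID, statement ID, and function name are illustrative assumptions.

```python
import json


def invoke_only_policy(region, model_id):
    """Return an IAM policy document allowing invocation of one foundation model."""
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleModel",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": [model_arn],
            }
        ],
    }


policy = invoke_only_policy("us-east-1", "amazon.titan-text-express-v1")
print(json.dumps(policy, indent=2))
```

Scoping `Resource` to one model ARN, rather than `*`, keeps an application role from calling models it was never reviewed for.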

For those pursuing certification, the AWS Cloud Practitioner track aligns well with the foundational knowledge covered here.

Accelerating adoption with AWS Partners

The AWS Partner Network (APN) helps businesses adopt AWS services faster through solution accelerators and partner services. Because every enterprise is different, the APN offers generative AI accelerators tailored to specific industries and use cases.

- The AWS Partner Network includes Technology Partners who deliver pre-built applications along with connectors and domain-specific foundation models, which are available through AWS Marketplace.

- The Generative AI Accelerator program serves startups by offering credits along with mentorship and best-practice blueprints to accelerate proof-of-value development.

- The use of APN partners’ frameworks, together with AWS-validated solution patterns, allows teams to decrease risk during pilot projects while deploying generative AI throughout their organization with assurance.

Overview of Supporting AWS AI Services

Generating new content is only part of the picture: real-world applications also need to process and understand data that arrives in many formats.

AWS provides a collection of supporting services that integrate with your generative pipelines to convert unstructured documents into structured data, extract image insights, and enable natural conversations and output delivery in any language or voice.

Here are the most popular ones:

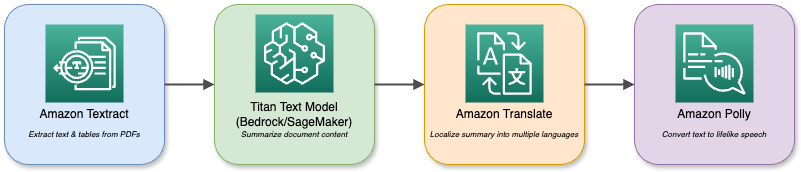

- Amazon Textract

  - Automatically detects printed text, tables, and form data in scanned documents and image files.

  - Feeds domain-specific documents, including contracts, reports, and invoices, into RAG pipelines.

  - Structures unorganized content so it is ready for prompt templates and fine-tuning datasets.

- Amazon Rekognition

  - Analyzes images and video to identify objects, scenes, text, faces, and activities.

  - Generates contextual metadata (labels, confidence scores) for multi-modal generation.

  - Lets you implement conditional logic (e.g., “only describe images containing people”) in end-user applications.

- Amazon Polly

  - Transforms written text into human-like spoken audio in multiple voices and languages.

  - Adds audio output that turns generative chatbots and assistants into interactive voice interfaces.

  - Supports SSML markup to control pronunciation, emphasis, and pauses.

  - To learn how Polly enables human-like voice outputs in real applications, check out this hands-on tutorial.

- Amazon Lex

  - Builds conversational interfaces through automatic speech recognition (ASR) and natural language understanding (NLU).

  - Serves as the front-end interface for back-end generators, handling session management and slot filling.

  - Connects directly to AWS Lambda, which can call Bedrock or SageMaker endpoints to generate dynamic responses.

- Amazon Translate

  - Offers real-time neural machine translation for over 70 languages.

  - Localizes generative outputs to make them accessible to worldwide audiences.

  - Standardizes multilingual inputs so a single model can serve users from different regions.

An illustrative diagram of AWS's various services and interactions

Your foundation models in Bedrock or SageMaker can be combined with these services to build complex multi-modal applications.

A document-analysis assistant, for example, uses Textract to ingest PDFs and then uses Titan Text to summarize them before translating the summary into multiple languages and playing it back through Polly. The composable ecosystem enables you to combine various AI capabilities to meet any enterprise requirement.
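The composition described above can be sketched as a small pipeline. This is an illustration under stated assumptions, not a reference implementation: `lines_from_textract` relies on the `Blocks` / `BlockType` / `Text` fields of a Textract response, while `summarize` and `translate` are caller-supplied callables (for example thin wrappers around Bedrock and Amazon Translate) that are replaced by stand-ins here so the sketch runs without AWS credentials. The Polly playback step is omitted.

```python
def lines_from_textract(response):
    """Collect the text of every LINE block from a Textract response dict."""
    return [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    ]


def document_pipeline(textract_response, summarize, translate, languages):
    """Chain the stages: extracted text -> summary -> one translation per language."""
    text = "\n".join(lines_from_textract(textract_response))
    summary = summarize(text)
    return {lang: translate(summary, lang) for lang in languages}


# Stand-in response and stages (hypothetical data, no AWS calls).
fake_response = {
    "Blocks": [
        {"BlockType": "PAGE"},
        {"BlockType": "LINE", "Text": "Invoice 1001"},
        {"BlockType": "LINE", "Text": "Total due: 250 USD"},
    ]
}
result = document_pipeline(
    fake_response,
    summarize=lambda text: text.splitlines()[-1],       # pretend summary: last line
    translate=lambda text, lang: f"[{lang}] {text}",    # pretend translation: tag only
    languages=["es", "fr"],
)
```

Passing the stages in as callables keeps each service swappable and makes the orchestration logic testable on its own.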

Implementation Patterns and Use Cases

Generative AI delivers its best results when you use well-architected patterns to address actual business problems.

This section presents four specific implementation blueprints, including retrieval-augmented generation and developer and enterprise assistants, as well as additional high-value scenarios that you can implement today.

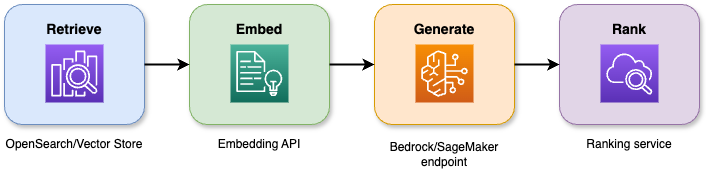

Retrieval-augmented generation (RAG) architectures

The combination of search capabilities with large language models in retrieval-augmented generation produces accurate responses that remain grounded in context. The four-stage pipeline operates as follows:

- Embed: Documents and user queries are converted into semantic embeddings so they can be compared in the same vector space.

- Retrieve: The top N relevant documents or passages are fetched from a vector store or search index.

- Generate: The query and the retrieved context are passed to a foundation model, which produces a response.

- Re-rank: A secondary model or heuristic scores and re-ranks candidate outputs, and the most suitable answer is presented.
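The embedding, retrieval, and prompt-assembly stages can be sketched in a few lines of plain Python. The bag-of-words `embed` function is a deliberately crude stand-in for a real embedding model, and all names and sample documents are hypothetical; generation and re-ranking are left to the model call.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, top_n=2):
    """Return the top_n documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_n]


def build_prompt(query, passages):
    """Combine retrieved context and the question into one grounded prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Reset your password from the account settings page.",
    "Invoices are emailed on the first day of each month.",
    "The VPN client requires multi-factor authentication.",
]
question = "How do I reset my password?"
passages = retrieve(question, docs, top_n=1)
prompt = build_prompt(question, passages)
```

In a production pipeline the `embed` function would call an embedding model and `retrieve` would query a vector store, but the control flow stays the same.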

An illustrative diagram showing a retrieval-augmented generation (RAG) architecture.

Benefits and accuracy gains:

- The system decreases hallucinations by 40 percent when compared to basic generation methods.

- The enterprise knowledge-base pilots demonstrate that answer relevance improves by 20–30 percent.

- The average user interaction time decreases by 25 percent when using virtual support systems.

Business applications:

- Internal wiki content serves as the foundation for chatbots and virtual assistants to provide ticket summaries and troubleshooting hints.

- RAG systems integrated with search user interfaces enable users to explore extensive document collections through natural language searches.

- The system enables compliance, legal, and regulatory assistants to provide source passage citations when users demand them.

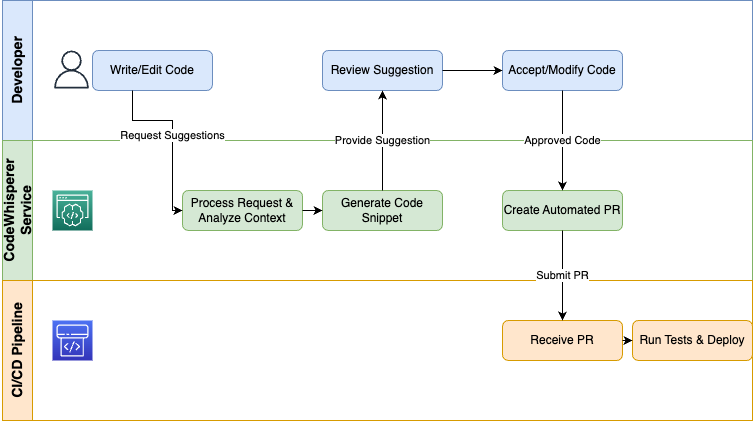

Code generation workflows

Amazon CodeWhisperer brings generative AI directly into developers' IDEs and CI/CD pipelines to automate boilerplate and speed up coding:

- The system provides three main functions, including real-time code suggestions, snippet completions, as well as test generation that adapts to your current coding context.

- Teams deliver features 30 percent faster, and syntax errors in early prototypes drop by 50 percent.

- The CodeCatalyst pipeline enables users to automatically create infrastructure IaC snippets, unit tests, and deployment scripts through CodeWhisperer integration.

- The platform supports multiple programming languages, including Python, Java, JavaScript/TypeScript, C#, Go and additional languages, which provide benefits to your entire technology stack.

An illustrative diagram showing a code generation workflow.

Organizations that use CodeWhisperer as a developer assistant eliminate repetitive work, enforce coding standards, and enable engineers to dedicate their time to valuable design and debugging activities.

Note that CodeWhisperer is now part of Amazon Q Developer.

Enterprise assistants

Amazon Q Business operates as a RAG-powered low-code solution, enabling users to develop conversational assistants that retrieve enterprise data through questions.

Architecture:

- The system retrieves data from Redshift, OpenSearch, SharePoint, and S3 through connectors.

- The RAG engine retrieves appropriate context before activating foundation models.

- The dialog manager controls the management of multi-turn sessions, including slot filling and state operations.

- The UI layer responds to chat widgets, voice channels, and Slack integrations.

Deployment scenarios:

- The automatic transcription and summarization of action items through meeting assistants enables teams to save more than two hours of work each week.

- The implementation of self-service bots deflects routine inquiries, resulting in a 30 percent reduction in handle time.

- Executive dashboards provide natural-language insights into sales, inventory, and KPIs for users who do not require BI training.

The assistants help organizations streamline knowledge work, reduce operational costs, and enable employees to achieve higher productivity levels.

Additional high-value use cases

The value of generative AI extends beyond RAG, code generation, and assistants to various other domains:

- These systems can generate customized product descriptions, email campaigns, and chat interactions based on individual profiles and behavioral analysis.

- The combination of Textract with form-parsing models and generation enables the automatic classification, markup, and routing of invoices, contracts, and claims, as well as downstream tasks (ticket creation, approval routing, and report generation) through model-driven decision logic.

- The analysis of support transcripts, social media feeds, and sales calls through conversational analytics reveals sentiment trends, compliance risks, and actionable recommendations.

Start with these patterns to establish pilots that demonstrate clear ROI before moving on to optimization and scaling across your organization.

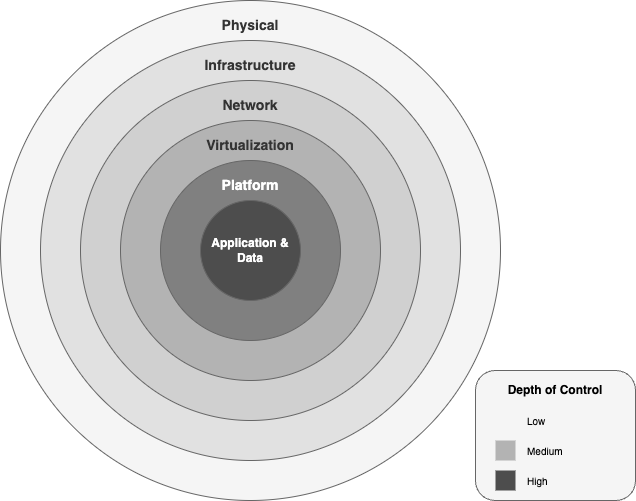

Security and Governance Model

Enterprises need to implement security controls and governance practices at every stage of the AI lifecycle when they adopt generative AI.

AWS provides a comprehensive security and compliance framework that spans from physical data centers to application-level policies, enabling confident innovation.

Data protection architecture

AWS protects generative AI workloads through six concentric security layers:

- The physical layer of data centers features multi-factor access controls, biometric screening, and 24/7 monitoring, which ensures that hardware contact remains restricted to authorized personnel.

- The AWS global network protects AI traffic through private fiber connections and DDoS mitigation, as well as AWS Shield Advanced, while maintaining high network throughput by isolating traffic from the public internet.

- Model endpoints and data pipelines remain secure through the combination of VPCs with granular security groups, network ACLs, and PrivateLink endpoints, which operate within your private networks.

- The Nitro System transfers virtualization operations to specialized hardware components, which provide bare-metal speed and hardware-based protection against unauthorized data sharing between virtual machines.

- The platform layer of Bedrock and SageMaker endpoints integrates with AWS KMS for customer-managed keys, uses TLS 1.2+ encryption for data transmission, and enforces IAM permissions based on the principle of least privilege.

- The application and data layer implements fine-grained resource policies, AES-256 encryption at rest, and TLS encryption in transit, and it integrates audit logging through CloudTrail and CloudWatch to record API calls.

An illustrative diagram of the security layers within the AWS generative AI framework.

Data isolation and compliance:

- Bedrock can be reached privately from your VPC through PrivateLink interface endpoints, and it reads from and writes to S3 buckets that you manage.

- You select customer-managed CMKs through AWS KMS and have the option to use external key stores (XKS/HYOK) to meet sovereign compliance requirements.

- AWS supports multiple compliance programs, including ISO 27001, SOC 1/2/3, FedRAMP High, HIPAA, PCI DSS, and GDPR, which extend to the infrastructure underneath your generative AI workloads without duplicate effort.

Responsible AI framework

The development of trust in generative AI requires implementing ethical and safety protocols at each stage of model invocation. AWS implements responsible AI governance through the following framework:

- The ethical principles of AWS prioritize fairness, privacy, transparency, and accountability to prevent discriminatory bias in models and maintain clear documentation of data lineage and decision logic.

- Bedrock Guardrails provides policy-based filters that check both inputs and outputs against categories such as hate speech, violence, self-harm, and misinformation. You can apply a guardrail as part of a model invocation or call the ApplyGuardrail API to run the same safety checks independently of any model.

- The system logs all requests and guardrail decisions through CloudTrail, while model cards and evaluation reports display performance metrics, potential biases, and cost/latency trade-offs.

- The system provides continuous oversight through SageMaker Clarify's bias detection and human-in-the-loop review workflows for low-confidence outputs, as well as automated drift detection alerts for model retraining or revocation.
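As a rough sketch of the standalone guardrail check mentioned above, the snippet below builds an `apply_guardrail` request for the `bedrock-runtime` client and interprets the response's `action` field. The guardrail ID, version, region, and helper names are placeholders, not values from a real account.

```python
def build_guardrail_check(guardrail_id, version, text, source="INPUT"):
    """Build keyword arguments for the bedrock-runtime `apply_guardrail` call."""
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": version,
        "source": source,
        "content": [{"text": {"text": text}}],
    }


def is_blocked(response):
    """True when the guardrail intervened on the evaluated content."""
    return response.get("action") == "GUARDRAIL_INTERVENED"


def check_text(params, region="us-east-1"):
    """Evaluate text against the guardrail without invoking a model."""
    import boto3  # imported lazily; requires AWS credentials at call time

    client = boto3.client("bedrock-runtime", region_name=region)
    return is_blocked(client.apply_guardrail(**params))


# "gr-example-id" is a hypothetical guardrail identifier.
params = build_guardrail_check("gr-example-id", "1", "User prompt to screen")
```

Running the check with `source="OUTPUT"` applies the same policy to model responses, which is useful when the model is hosted outside Bedrock.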

These layers and practices establish a defense-in-depth posture for enterprise generative AI, enabling you to achieve innovation while maintaining the highest standards of security, compliance, and ethical responsibility.

Cost Optimization Strategies

The process of controlling generative AI budgets demands both complete knowledge of pricing mechanisms and continuous cost management practices. This section explains AWS pricing for AI workloads while presenting a mid-sized deployment TCO example and strategies to reduce expenses.

Pricing model analysis

AWS generative AI pricing falls into four main dimensions:

- Compute costs

  - Endpoint hours: charged per instance-hour, varying by family

    - Inf1.xlarge at $0.228/hr

    - G5.xlarge at $1.006/hr

  - Training jobs: billed per instance-hour on Trn1, P4, or P5 hardware (e.g., Trn1.32xlarge at $21.50/hr)

- Token/request fees

  - Prompt tokens: $0.0008 per 1K tokens

  - Completion tokens: $0.0016 per 1K tokens

- Storage & data transfer

  - Model artifacts: S3 Standard at $0.023/GB-month

  - Data egress: $0.09/GB beyond free tier

- Customization & evaluation

  - Fine-tuning: extra per-instance-hour training fees plus minimal data-processing charges.

  - Evaluation runs: token fees for baseline vs. custom comparisons.
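To see how the token fees add up per request, here is a small worked calculation using the prompt and completion rates quoted in the list above. The request sizes and monthly volume are made-up inputs, and the rates are this article's illustrative figures rather than a current price list.

```python
from decimal import Decimal

# Per-1K-token rates quoted above (illustrative).
PROMPT_RATE = Decimal("0.0008")
COMPLETION_RATE = Decimal("0.0016")


def token_cost(prompt_tokens, completion_tokens):
    """Return the token fee in USD for one request."""
    return (
        Decimal(prompt_tokens) / 1000 * PROMPT_RATE
        + Decimal(completion_tokens) / 1000 * COMPLETION_RATE
    )


# A request with a 1,500-token prompt and a 500-token completion:
per_request = token_cost(1500, 500)   # 0.0012 + 0.0008 = 0.0020 USD
monthly = per_request * 100_000       # assumed volume: 100,000 requests per month
print(f"per request: ${per_request}, monthly: ${monthly:.2f}")
```

`Decimal` is used instead of floats so that sub-cent rates multiply out without rounding noise.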

Cost optimization strategies

- Select the right silicon: Use Inferentia (Inf1/Inf2) for high-volume, low-latency inference and Trainium (Trn1) for large-scale training to lower the cost per accelerator-hour.

- Batch and cache prompts: Group similar requests and cache popular answers to reduce repetitive token spend.

- Multi-model endpoints: Host several smaller models behind one endpoint—trading off peak latency for significantly lower idle-hour costs.

- Spot & reserved capacity: Commit to a fraction of baseline capacity with Reserved Instances or use Spot for non-critical batch workloads.

- Prompt engineering: Streamline instructions to minimize token usage per request without sacrificing quality.
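The prompt-caching idea from the list above might look like the following sketch. `PromptCache` is a hypothetical helper, and the `generate` callable stands in for a paid model invocation; the normalization rule (lowercasing and collapsing whitespace) is an assumption that may be too aggressive for case-sensitive prompts.

```python
import hashlib


class PromptCache:
    """Cache model answers keyed by a hash of the normalized prompt."""

    def __init__(self, generate):
        self._generate = generate  # callable that actually invokes the model
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt):
        # Collapse whitespace and case so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt):
        """Return a cached answer when available; otherwise call the model once."""
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._generate(prompt)
        return self._store[key]


# Stand-in for a paid model call.
cache = PromptCache(generate=lambda p: f"answer to: {p.strip()}")
first = cache.ask("What is your refund policy?")
second = cache.ask("  what is your   refund policy? ")  # served from the cache
```

The hit and miss counters make it easy to estimate how much token spend the cache is actually avoiding.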

Comparing architectures

| Architecture | Compute cost driver | Token cost driver | Best fit |
| --- | --- | --- | --- |
| Real-time chatbots | Many small endpoints running 24×7 | High request rate, small tokens | Customer support, help desks |
| Batch generation | Short-lived large instances | Few large token bursts | Report generation, data augmentation |
| RAG pipelines | Combined search + inference | Retrieval token + generation token | Knowledge-grounded assistants |

Total cost of ownership model

Below is a sample monthly TCO for a mid-sized generative AI pilot:

| Cost item | Unit cost | Usage | Monthly cost |
| --- | --- | --- | --- |
| Inference endpoints (Inf1.xl) | $0.228/hr | 3 endpoints × 24 hr × 30 days | $492.48 |
| Token usage (Titan Express) | $0.0024/1K tokens | 3M tokens/day × 30 days | $216.00 |
| S3 storage (model artifacts) | $0.023/GB-month | 10,000 GB | $230.00 |
| Data egress | $0.09/GB | 1 TB | $90.00 |
| Glue ETL (feature prep) | $0.44/DPU-hour | 20 DPU-hrs | $8.80 |
| Total | | | $1,037.28 |
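The arithmetic behind the table can be reproduced with a short script, which also makes it easy to plug in your own usage figures. The unit costs and quantities are copied from the table; treating 1 TB as 1,000 GB is an assumption that matches the $90 egress line.

```python
from decimal import Decimal

# (item, unit cost in USD, quantity) taken from the table above.
LINE_ITEMS = [
    ("Inference endpoints (Inf1.xl)", Decimal("0.228"), 3 * 24 * 30),  # instance-hours
    ("Token usage (Titan Express)", Decimal("0.0024"), 3_000 * 30),    # thousands of tokens
    ("S3 storage (model artifacts)", Decimal("0.023"), 10_000),        # GB-months
    ("Data egress", Decimal("0.09"), 1_000),                           # GB (1 TB as 1,000 GB)
    ("Glue ETL (feature prep)", Decimal("0.44"), 20),                  # DPU-hours
]

monthly = {name: unit * qty for name, unit, qty in LINE_ITEMS}
total = sum(monthly.values(), Decimal("0"))

for name, cost in monthly.items():
    print(f"{name:<32} ${cost:>9.2f}")
print(f"{'Total':<32} ${total:>9.2f}")
```

Endpoint hours account for close to half of the total, which is why the idle-capacity levers below tend to matter most for a pilot of this shape.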

Key optimization levers:

- Auto-scale to zero: Tear down idle endpoints when traffic slumps.

- Multi-model endpoints: Consolidate models to reduce idle-hour charges by up to 60%.

- Reserved & Spot: Lock in discounted rates for baseline capacity and spin up Spot for non-latency-sensitive jobs.

- Prompt compression: Refactor prompts to trim extraneous tokens, trimming 10–15 % of token spend.

Ongoing cost management:

- Use the AWS Pricing Calculator to model scenarios before launch.

- Monitor real usage in AWS Cost Explorer, setting budgets and alerts for anomalies.

- Tag all AI resources for clear charge-back and showback reporting.

The combination of pricing-aware architecture with targeted optimizations and continuous monitoring enables you to scale generative AI without experiencing excessive costs.


Implementation Roadmap

The process of scaling generative AI from concept to production requires a systematic phased methodology. The roadmap provides a step-by-step approach, starting from initial assessments through proof-of-concept pilots to building an enterprise-grade platform that is resilient.

Assessment and pilot phases

The first step involves checking the feasibility and obtaining stakeholder agreement before proceeding with the full development of the architecture.

- Gap analysis and goal setting

  - Conduct interviews with business owners to obtain use-case requirements, success criteria, and ROI targets.

  - Review the current data sources, security and quality controls, and ML capabilities.

  - Identify compliance or privacy gaps (e.g., PII handling) that your pilot must address.

- Proof of concept (PoC) development

  - Select a high-value use case (e.g., RAG-powered support bot or document summarizer) for the first phase.

  - Set up minimal infrastructure: one Bedrock or SageMaker endpoint connected to a small dataset via S3 or OpenSearch.

  - Define key metrics (accuracy, latency, cost per request) and instrument CloudWatch dashboards.

- Rapid prototyping with step-by-step guides

  - Use AWS Solutions Library sample projects (e.g., RAG Chatbot, CodeWhisperer demo) to build the prototype.

  - Iterate quickly on prompts and retrieval strategies, capturing learnings in a shared wiki.

- Stakeholder validation

  - Show the functional prototype to end users and gather feedback on output quality and UX.

  - Refine scope, add or remove data sources, and adjust model parameters based on real-world usage.

  - Secure executive sponsorship and budget for the next phase.
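The key metrics named in the proof-of-concept step (accuracy, latency, cost per request) can be published as custom CloudWatch metrics so dashboards have something to plot. The sketch below builds the `MetricData` payload for `put_metric_data`; the namespace, metric names, and dimension are hypothetical choices.

```python
def build_metric_data(use_case, latency_ms, cost_usd, correct):
    """Build the MetricData list for CloudWatch `put_metric_data`."""
    dimensions = [{"Name": "UseCase", "Value": use_case}]
    return [
        {"MetricName": "LatencyMs", "Value": latency_ms,
         "Unit": "Milliseconds", "Dimensions": dimensions},
        {"MetricName": "CostPerRequestUsd", "Value": cost_usd,
         "Unit": "None", "Dimensions": dimensions},
        {"MetricName": "AnswerCorrect", "Value": 1.0 if correct else 0.0,
         "Unit": "Count", "Dimensions": dimensions},
    ]


def publish(metric_data, namespace="GenAIPilot", region="us-east-1"):
    """Send the metrics to CloudWatch (requires AWS credentials)."""
    import boto3  # imported lazily so the builder can be tested offline

    client = boto3.client("cloudwatch", region_name=region)
    client.put_metric_data(Namespace=namespace, MetricData=metric_data)


# One record per handled request; averaging AnswerCorrect over time gives accuracy.
metrics = build_metric_data("support-bot", latency_ms=820.0, cost_usd=0.002, correct=True)
```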

Foundation and scale phases

The Proof of Concept results in the development of the fundamental platform before the system can be widely adopted.

- Platform foundation build-out

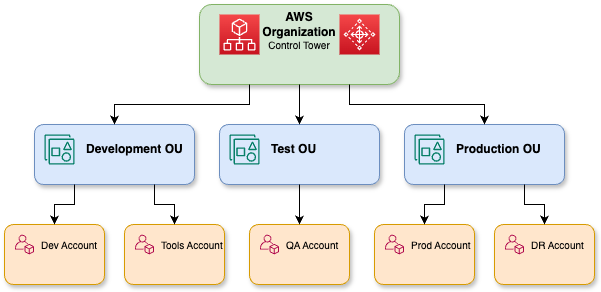

- The multi-account structure of AWS Control Tower needs to be established for dev, test, and prod OU boundaries in the landing zone.

- Implement IAM roles and policies, AWS SSO, and least-privilege controls for model access and data pipelines.

- The VPCs should be configured with PrivateLink endpoints. Bedrock/SageMaker KMS CMKs should be enabled, and GuardDuty monitoring should be deployed.

- Automate ETL with AWS Glue/Athena, catalog assets in Lake Formation, and sync knowledge stores for RAG.

A detailed representation of the AWS organizations.

- MLOps and model governance

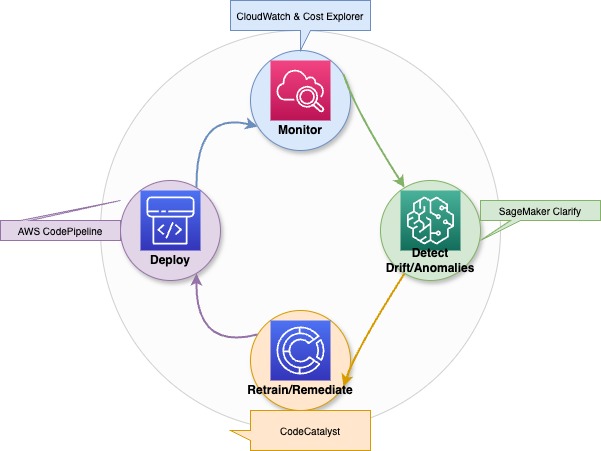

- The model retraining, evaluation, and deployment process should be automated through CI/CD pipelines that use CodeCatalyst or CodePipeline.

- A model registry should be established in SageMaker or a Git-backed catalog to version-based and fine-tuned artifacts.

- The system should automatically detect drift and perform scheduled retraining to ensure performance and compliance.

- Scaling to production

- The variable traffic patterns should be matched through the use of auto-scaling Bedrock or SageMaker endpoints.

- The use of multi-model endpoints allows for hosting several smaller FMs behind one API, resulting in up to a 60 % reduction in idle costs.

- The deployment should be done across multiple Availability Zones, and a canary rollout should be implemented to ensure zero downtime updates.

- AWS Partner Network and Innovation Centers

- Consulting Partners should be engaged for domain-specific accelerators in finance, healthcare, and retail.

- The AWS Marketplace should be used to obtain turnkey connectors and pre-trained domain models from Technology Partners.

- The AWS Innovation Centers and Community Builders should collaborate to access hackathons, architecture reviews, and hands-on workshops for best-practice adoption acceleration.

The phased approach of validation, followed by foundation building and then scaling with governance and partner support, will help you transform your generative AI pilot into an enterprise-wide, robust capability.

Optimize and govern phases

Continuous refinement and strong governance practices, implemented after scaling your generative AI platform, ensure the system performs well while maintaining security and regulatory compliance.

Continuous optimization

- Automated monitoring and alerting

- Bedrock/SageMaker endpoints require CloudWatch metrics (including latency, error rates, and throughput) and Cost Explorer dashboards for instrumentation.

- Set up anomaly detection alarms e.g., sudden token‐spend spikes or inference‐latency regressions and trigger auto‐remediation workflows (scale‐up/down, model rollback).

An illustrative diagram showing continuous optimization, drift detection, and retraining processes for generative AI models.

- Drift detection and retraining

- Use embedding‐based drift detectors or SageMaker Clarify to flag data distribution shifts.

- When a drift threshold is exceeded, trigger an automated retraining pipeline through CodePipeline or CodeCatalyst to maintain both model accuracy and fairness.

- Cost tuning

- Regularly review idle‐hour charges and consolidate models into multi‐model endpoints where feasible.

- Fine‐tune prompts to reduce unnecessary token usage and batch low‐priority requests.
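One simple way to picture the embedding-based drift check mentioned above is to compare the centroid of a baseline embedding sample with the centroid of recent traffic. The sketch below is an illustrative simplification, not the method used by SageMaker Clarify. The function names and the 0.1 threshold are assumptions, and the embeddings are assumed to come from the same model in both windows.

```python
import math
from typing import List, Sequence


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of a non-empty batch of equal-length vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return 1.0 - dot / (norm_a * norm_b)


def drift_score(baseline: Sequence[Sequence[float]],
                recent: Sequence[Sequence[float]]) -> float:
    """Distance between the baseline centroid and the recent centroid."""
    return cosine_distance(centroid(baseline), centroid(recent))


def should_retrain(baseline: Sequence[Sequence[float]],
                   recent: Sequence[Sequence[float]],
                   threshold: float = 0.1) -> bool:
    """True when the drift score exceeds the (assumed) threshold."""
    return drift_score(baseline, recent) > threshold
```

A scheduled job could call `should_retrain` on each new window of embeddings and start the retraining pipeline when it returns `True`. Centroid distance is cheap but coarse: it misses shifts that change the spread of the data without moving its mean, so a production detector would likely combine it with other statistics.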

Governance and compliance audits

- Scheduled audits

- Your organization should conduct quarterly assessments of IAM policies and KMS key usage, along with VPC endpoint configurations and CloudTrail logs, to confirm adherence to least-privilege access and encryption standards.

- AWS Audit Manager and AWS Config rules enable the automated collection of evidence for ISO, HIPAA, or SOC mandates.

- Policy enforcement

- Your organization must update the content policies in Bedrock Guardrails and reassess the category filters with each release.

- Your CI/CD pipeline should implement an approval gate that prevents model version deployment when security or fairness evaluation fails.
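The approval gate described above can be sketched as a small script that a pipeline stage runs after the evaluation job. The metric names and thresholds below are hypothetical. In practice they would come from your own security and fairness evaluation reports.

```python
from typing import Dict, List, Tuple

# Hypothetical gates: ("max", x) means the metric must be <= x,
# ("min", x) means the metric must be >= x.
GATES: Dict[str, Tuple[str, float]] = {
    "toxicity_rate": ("max", 0.01),
    "pii_leak_rate": ("max", 0.0),
    "demographic_parity_gap": ("max", 0.05),
    "task_accuracy": ("min", 0.90),
}


def failed_gates(metrics: Dict[str, float],
                 gates: Dict[str, Tuple[str, float]] = GATES) -> List[str]:
    """Return one message per gate that is missing or violated."""
    failures = []
    for name, (kind, limit) in gates.items():
        if name not in metrics:
            # A missing metric blocks the release rather than passing silently.
            failures.append(f"{name}: missing from evaluation report")
            continue
        value = metrics[name]
        passed = value <= limit if kind == "max" else value >= limit
        if not passed:
            failures.append(f"{name}: {value} violates {kind} limit {limit}")
    return failures


def gate_exit_code(metrics: Dict[str, float]) -> int:
    """Exit code for the pipeline stage: 0 allows deployment, 1 blocks it."""
    failures = failed_gates(metrics)
    for message in failures:
        print("BLOCKED:", message)
    return 1 if failures else 0
```

A CI/CD stage could load the evaluation report as a dictionary and call `sys.exit(gate_exit_code(report))`, so that any failed or missing metric stops the model version from being promoted.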

Ongoing training and skill development

- AWS Skill Builder

- Teams should enroll in the Generative AI learning path on AWS Skill Builder to learn about Bedrock fundamentals, RAG patterns, and secure deployment best practices.

- The hands-on labs cover setting up Trn1/Inf1 instances, configuring Guardrails, and performing bias detection with SageMaker Clarify.

- Certification and community

- Engineers should pursue AWS Certified Machine Learning – Specialty and AWS Certified Security – Specialty credentials.

- The AWS Community Builders and Innovation Center workshops enable you to stay up-to-date on new services, architecture reviews, and accelerator programs.

By integrating ongoing optimization and strict audits with organizational learning practices, you can sustain a resilient, trustworthy, and cost-effective generative AI capability.

Resources and Getting Started

The AWS generative AI journey can be accelerated through learning paths, communities, and hands-on projects that build confidence and drive real business impact.

AWS Skill Builder and training resources

- The Generative AI learning path on AWS Skill Builder offers comprehensive training on foundation models, RAG pipelines, and secure deployment best practices.

- The PartyRock and Bedrock Playground interactive platforms enable users to test prompt engineering, fine-tuning, and inference without requiring infrastructure setup.

- The guided labs show users how to create Trn1 and Inf1 instances and set up KMS-encrypted endpoints before deploying end-to-end RAG workflows.

Developer community and partner ecosystem

- The AWS Generative AI Community and Developer Center provides access to code samples, forums, and curated whitepapers about the Well-Architected Generative AI Lens.

- The AWS Partner Network provides prebuilt accelerators, domain-specific foundation models, and turnkey connectors, all of which are available in the AWS Marketplace.

- The AWS Innovation Centers and Community Builders offer workshops, hackathons, and architecture review sessions that help you speed up your implementation process.

Step-by-step guides and example projects

- The DataCamp platform offers step-by-step tutorials and solution templates that you can fork and customize.

- The end-to-end example repositories demonstrate how to use Textract for data ingestion, SageMaker for RAG orchestration, and Polly for voice output.

- Users can deploy fully functional projects through the platform, which allows them to modify prompts, models, and pipelines for enterprise use cases and performance measurement.
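To give a feel for the ingestion-to-voice flow those example projects cover, here is a minimal sketch under stated assumptions. The `textract` and `polly` arguments are assumed to be `boto3` clients, and `generate` is a hypothetical callable standing in for whatever RAG or model-invocation step your project uses. It is not taken from any specific repository.

```python
from typing import Any, Callable, Dict, List


def textract_lines(response: Dict[str, Any]) -> List[str]:
    """Collect the text of LINE blocks from a DetectDocumentText response."""
    return [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    ]


def document_to_speech(image_bytes: bytes,
                       question: str,
                       textract: Any,
                       polly: Any,
                       generate: Callable[[str, str], str],
                       voice_id: str = "Joanna") -> bytes:
    """OCR a document image, answer a question about it, return MP3 audio."""
    # 1. Ingestion: extract printed text from the document image.
    ocr = textract.detect_document_text(Document={"Bytes": image_bytes})
    context = "\n".join(textract_lines(ocr))

    # 2. Generation: hypothetical hook for the retrieval and model call.
    answer = generate(question, context)

    # 3. Voice output: synthesize the answer with Polly.
    speech = polly.synthesize_speech(
        Text=answer, OutputFormat="mp3", VoiceId=voice_id)
    return speech["AudioStream"].read()
```

Passing the clients and the generation step in as arguments keeps the flow easy to test with stubs and makes it simple to swap the model or retrieval layer when adapting the project to your own use case.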

Conclusion

AWS generative AI provides a complete end-to-end system, including foundation models, optimized compute, governance, integration, and cost controls, to help enterprises innovate faster and reduce operational overhead while building trusted, multimodal applications at scale.

Through experimentation, continuous learning, and community collaboration, you will achieve the full potential of AWS generative AI to drive real business impact.


Rahul Sharma is an AWS Ambassador, DevOps Architect, and technical blogger specializing in cloud computing, DevOps practices, and open-source technologies. With expertise in AWS, Kubernetes, and Terraform, he simplifies complex concepts for learners and professionals through engaging articles and tutorials. Rahul is passionate about solving DevOps challenges and sharing insights to empower the tech community.