
As AI becomes an inseparable part of our everyday lives, we need to take a deeper look at its potential risks and challenges. Issues such as bias, discrimination, privacy breaches, and unintended consequences are among the hurdles we need to overcome.

AI governance emerges as a framework to address these challenges.

The goal of AI governance is to ensure the responsible and ethical development, deployment, and use of AI technologies. Implemented well, it can steer AI toward becoming a beneficial and impactful tool that is accessible to society.

Learn more about how the latest laws and regulations are impacting AI governance with our webinar, Understanding Regulations for AI in the USA, the EU, and Around the World.


What Is AI Governance?

AI governance refers to the measures and systematic methods designed to guide and oversee AI development and applications. It ensures that AI is aligned with ethical principles and the values of society. This includes the laws, regulations, and guidelines that direct both the creators and the users of AI.

To accomplish this objective, AI governance seeks to maintain principles such as:

- Minimizing risks: Avoiding bias and potential harms

- Fairness and transparency: Promoting understandable AI systems

- Accountability: Establishing responsibility for AI outcomes

- Public trust: Building society's confidence in AI technologies

Why Is Fair AI Important?

The development of AI applications is the product of many roles and practices. More often than not, AI applications are built on a foundation of data, and collecting, processing, and handling data is in itself a delicate and sensitive matter.

Meanwhile, AI products are used across many fields and sectors, potentially at very large scales. For instance, according to DemandSage, ChatGPT has over 200 million weekly active users.

Given the large number of people involved in developing AI-based solutions and the broad reach of their user base, it is easy to appreciate the complexity of AI and the many issues that could arise.

To gain perspective, imagine applying for a job where the hiring process relies on an AI system to assess whether you are a suitable candidate. The system reviews candidates based on their resumes and profiles and filters out those it deems unfit for the position.

However, if this AI system has inherent biases or flaws in its algorithm, it might unfairly discriminate against certain groups of people based on factors like age, gender, or ethnicity. If such a system were widely used by companies, the consequences would be significant.

This scenario illustrates why AI fairness is crucial: AI systems that can significantly affect people's lives must be fair, transparent, and accountable.
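One way to check a screening system like this for bias is to compare how often it advances candidates from each group. The sketch below is a minimal illustration under stated assumptions: the data is a hypothetical toy sample, the function names are our own, and the 0.8 threshold is the "four-fifths rule" heuristic from US employment-selection guidance, which flags results for review rather than proving discrimination on its own.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the fraction of candidates advanced, per group.

    `decisions` is an iterable of (group, advanced) pairs, where
    `advanced` is True if the screening system passed the candidate on.
    """
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, passed in decisions:
        totals[group] += 1
        advanced[group] += int(passed)
    return {g: advanced[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical toy screening outcomes, not real data.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'group_a': 0.6, 'group_b': 0.3}
print(round(ratio, 2))  # 0.5
# The four-fifths heuristic flags ratios below 0.8 for closer review.
print("flag for review" if ratio < 0.8 else "ok")  # flag for review
```

A low ratio does not explain *why* the system behaves this way; it tells a team where to look more closely.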

Perhaps surprisingly, such discrimination is not rare among humans either. Researchers have found systematic, illegal discrimination based on people’s names in the job application process: applicants with ethnic minority names are less successful than other candidates, even when their resumes are identical.

So if the humans an AI system would replace can fall into such traps, building an unbiased AI-based decision-making process is challenging, but also potentially very rewarding.

AI Governance: Key Principles and Frameworks

Effective AI governance rests on societal and human values, expressed as principles that guide experts in evaluating whether their systems are aligned. These principles include:

- Transparency: AI systems should provide clear insight into their mechanisms, data collection, and decision-making algorithms.

- Fairness: Ensuring impartial treatment and predictions by identifying and mitigating biases in the development and deployment of AI systems.

- Accountability: Establishing clear responsibilities for AI systems, including protocols for when harm occurs.

- Human-centric design: Prioritizing human values and well-being above all else.

- Privacy: Respecting individual privacy rights with robust data protection measures.

- Safety and security: Protecting users and systems against malicious attacks and unintended consequences.

Leading AI governance frameworks

Several organizations have developed frameworks to guide AI governance practices. Some of the most notable include:

- NIST AI Risk Management Framework: Developed by the U.S. National Institute of Standards and Technology (NIST), this framework offers an approach for identifying, evaluating, and managing the risks associated with AI systems throughout their lifecycle.

- OECD AI Principles: The Organisation for Economic Co-operation and Development (OECD) has established a set of principles for trustworthy artificial intelligence. These principles prioritize human-centered values, fairness, transparency, robustness, and accountability.

- IEEE Ethically Aligned Design: The Institute of Electrical and Electronics Engineers (IEEE) has developed a framework that provides guidelines for the ethical design and implementation of autonomous and intelligent systems.

- EU Ethics Guidelines for Trustworthy AI: The European Union has provided guidelines for technical robustness, privacy, transparency, diversity, non-discrimination, societal welfare, and responsibility.

- Industry-specific frameworks: Various sectors have developed their own AI governance frameworks, tailored to their specific needs and challenges. For example:

- Healthcare: The WHO guidance on ethics and governance of AI for healthcare

- Finance: The Monetary Authority of Singapore's FEAT Principles (Fairness, Ethics, Accountability, and Transparency)

- Automotive: The Safety First for Automated Driving framework

These frameworks provide valuable guardrails for organizations looking to implement AI governance practices, offering both high-level principles and practical recommendations.

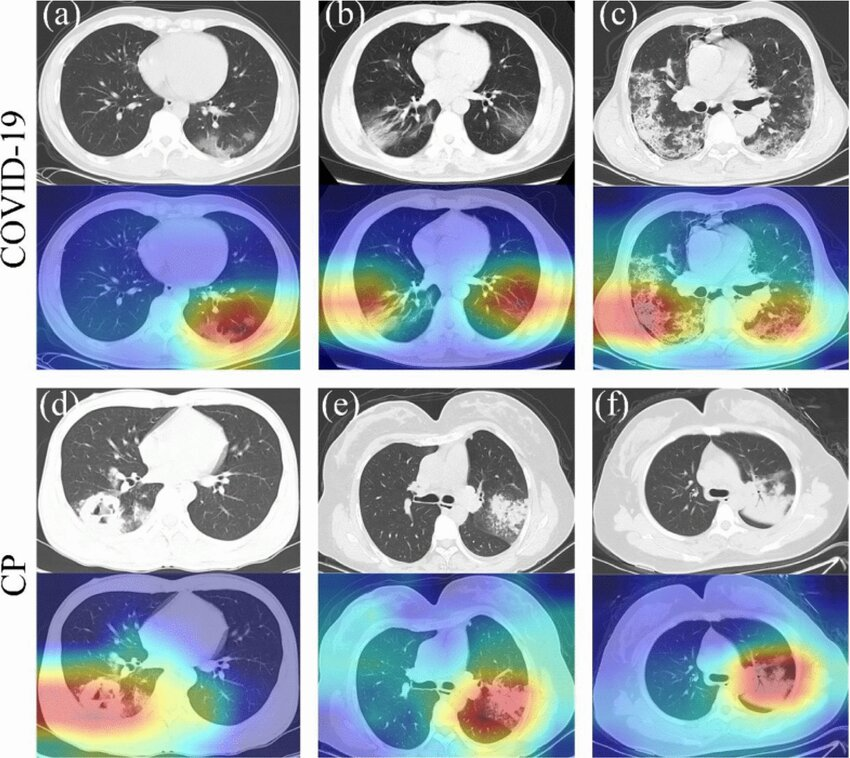

AI has applications in many fields, including healthcare. Interpretability and transparency are two important properties of such systems. The image below is an example of an AI system recognizing COVID-19 infections:

Source: Peng et al., 2022

Best Practices for Implementing AI Governance

Putting AI governance into practice involves taking a holistic approach that requires the collaboration of various stakeholders and multiple aspects of an organization. Here are some best practices for putting AI governance into action:

Leadership and cultural commitment

Leadership and cultural commitment are essential. Leaders should actively support and uphold ethical standards in AI development, ensuring these principles are applied throughout the organization.

This involves creating and maintaining clear, well-documented guidelines for every phase of AI system management, including development, testing, deployment, and monitoring, to ensure that ethical considerations are integrated into the AI lifecycle.

Training and education

Ongoing training and education are just as important. All relevant staff should receive regular training focused on AI ethics and fairness to stay updated on best practices and emerging challenges.

Leadership should also be educated about the impact and significance of AI technologies. For AI developers, specialized training tailored to their specific roles and projects ensures they have the knowledge needed for responsible development and implementation.

Monitoring AI systems

I cannot overstate the importance of monitoring AI systems. Implement continuous monitoring systems to track AI performance and predictions. This helps in identifying and correcting any biases or unintended consequences promptly.

Regular assessment ensures that AI systems operate as intended and meet the required standards.
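As a concrete example of continuous monitoring, the sketch below computes the population stability index (PSI), one common way to detect when a model's live score distribution drifts away from the distribution it was validated on. It is a minimal sketch assuming numeric model scores; the function name, the ten quantile bins, and the simulated scores are illustrative choices, not a requirement of any governance framework.

```python
import numpy as np

def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """Return the PSI between two score samples; larger means more drift."""
    baseline = np.asarray(baseline, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges at baseline quantiles, so each baseline bin
    # holds roughly the same share of observations.
    interior = np.quantile(baseline, np.linspace(0, 1, bins + 1)[1:-1])
    # searchsorted maps each score to a bin index in [0, bins - 1];
    # scores outside the baseline range land in the outermost bins.
    expected = np.bincount(np.searchsorted(interior, baseline, side="right"),
                           minlength=bins) / len(baseline)
    actual = np.bincount(np.searchsorted(interior, current, side="right"),
                         minlength=bins) / len(current)
    # Clip empty bins to eps to avoid log(0) and division by zero.
    expected = np.clip(expected, eps, None)
    actual = np.clip(actual, eps, None)
    return float(np.sum((actual - expected) * np.log(actual / expected)))

# Simulated scores: validation-time scores vs. a shifted live sample.
rng = np.random.default_rng(0)
baseline_scores = rng.normal(0.0, 1.0, 5000)
drifted_scores = rng.normal(1.0, 1.0, 5000)
print(population_stability_index(baseline_scores, baseline_scores))  # no drift
print(population_stability_index(baseline_scores, drifted_scores))   # large drift
```

A commonly cited rule of thumb from credit scoring treats a PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, though teams typically calibrate thresholds to their own systems and run the check on a schedule.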

Documentation

Thorough documentation is essential for transparency and accountability in AI development. Maintain detailed records of each step in the AI development process, including data sources, model architectures, and performance metrics.

Establish a framework for ethical decision-making, either through internal policies or recognized external standards, to support clear and accountable practices throughout the AI lifecycle.
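One lightweight way to keep such records is an append-only log with one JSON entry per development step, including a cryptographic fingerprint of each data file so that reviewers can later verify exactly which version was used. The sketch below assumes this JSON Lines approach; `log_step`, `sha256_of_file`, the file names, and the metric value are all hypothetical placeholders rather than part of any specific tool.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

def sha256_of_file(path):
    """Fingerprint a data file so readers can verify which version was used."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def log_step(log_path, step, **details):
    """Append one JSON record per development step (JSON Lines format)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "step": step,
        **details,
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record

with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "applications.csv"  # hypothetical dataset
    data.write_text("age,income,label\n34,52000,1\n")
    log = Path(tmp) / "audit_log.jsonl"
    log_step(log, "data_ingested", source=data.name, sha256=sha256_of_file(data))
    log_step(log, "model_trained", architecture="logistic_regression",
             metrics={"accuracy": 0.87})  # placeholder metric value
    records = [json.loads(line) for line in log.read_text().splitlines()]
    print([r["step"] for r in records])  # ['data_ingested', 'model_trained']
```

Appending rather than overwriting keeps the history intact, which is what makes such a log useful for accountability.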

Engaging with stakeholders

Engaging with stakeholders is important for aligning AI systems with diverse needs and values. Communicate actively with users, communities, and experts to ensure that AI system designs and values are well-understood and address the needs of all involved.

Valuing diverse perspectives helps prevent unintended harms and ensures that AI systems are inclusive and equitable.

Continuous improvement

Continuous improvement is key to maintaining high standards in AI development. Define and track metrics related to ethics and bias to evaluate AI system performance and make iterative improvements based on this data.

Solicit and incorporate feedback from users and stakeholders to make sure the AI systems align with ethical standards.
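To make "track metrics related to ethics and bias" concrete, the sketch below computes one commonly used fairness metric, the equal-opportunity gap: the largest difference in true-positive rate between groups. It compares two hypothetical model versions on toy labels; the function names, data, and version names are illustrative assumptions, and in practice teams usually track several complementary metrics rather than one.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives (label 1) that the model predicted as positive."""
    hits = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(hits) / len(hits)

def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest true-positive-rate difference between groups that have positives."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        labels = [y_true[i] for i in idx]
        if 1 not in labels:
            continue  # TPR is undefined without positive examples
        rates[g] = true_positive_rate(labels, [y_pred[i] for i in idx])
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical toy evaluation set shared by two model versions.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
groups = ["a"] * 6 + ["b"] * 6
v1_pred = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
v2_pred = [1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1]

history = {}
for version, preds in [("v1", v1_pred), ("v2", v2_pred)]:
    gap, rates = equal_opportunity_gap(y_true, preds, groups)
    history[version] = gap
    print(version, rates, gap)
# v1 {'a': 1.0, 'b': 0.25} 0.75
# v2 {'a': 0.75, 'b': 0.75} 0.0
```

Recording the gap for every release turns "iterative improvement" into something measurable: a regression in a later version shows up as a rising number.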


Tools and Technologies for AI Governance

As AI governance evolves, a growing ecosystem of tools and technologies is being built to support organizations in implementing the right governance practices. These tools target multiple aspects of AI governance, from bias detection to explainability and risk management.

- Bias detection and mitigation:

- IBM AI Fairness 360: An open-source toolkit that “can help examine, report, and mitigate discrimination and bias in machine learning models throughout the AI application lifecycle.”

- Aequitas: An open-source bias and fairness assessment toolkit for ML developers and data analysts.

- Explainability and interpretability:

- LIME (Local Interpretable Model-agnostic Explanations): A technique that explains the predictions of any black-box machine learning model.

- SHAP (SHapley Additive exPlanations): A game-theoretic approach to explaining the output of any machine learning model by attributing to each feature its contribution to a prediction, accounting for how features combine with one another.

- Risk assessment and management:

- AI Risk Management Framework Navigator: Another tool developed by NIST to help organizations identify and mitigate the risks related to the deployment and operation of AI systems

- Privacy:

- OpenMined: An open-source project for building machine learning systems privacy is more crucial than often

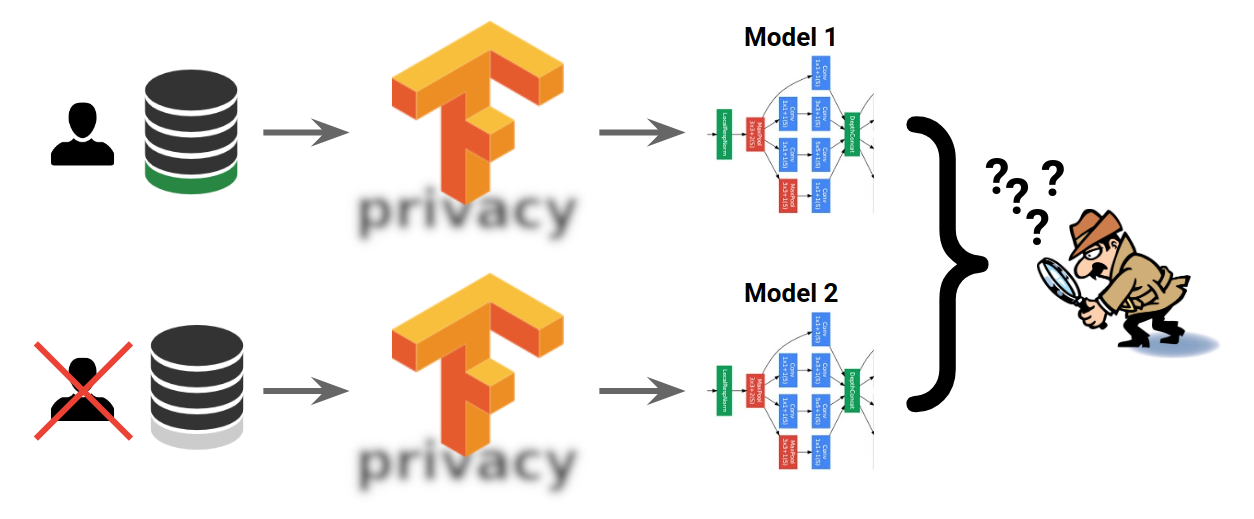

- TensorFlow Privacy: A library for training machine learning models with privacy for training data

- Model documentation:

- Model Cards: A framework by HuggingFace for transparent reporting of AI model information

- FactSheets: IBM's approach to increasing trust and transparency in AI

TensorFlow Privacy. (Source: TensorFlow)
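SHAP, listed above, builds on Shapley values from cooperative game theory. For a model with only a handful of features they can be computed exactly, which makes the idea easy to see: each feature's attribution is its weighted average marginal contribution over every coalition of the other features. The sketch below is a brute-force illustration, not the SHAP library's API; it assumes a hypothetical toy model and replaces "absent" features with a baseline value, which is one of several conventions in use.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every feature coalition.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n_features, so this only suits tiny examples.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical scoring model with an interaction between features 0 and 1.
def toy_model(z):
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[1] - 0.5 * z[2]

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
print(sum(phi), toy_model(x) - toy_model(baseline))
```

By construction, the attributions sum to the difference between the model's output for `x` and for the baseline (here 5 + 5 - 2 = 8), and the interaction term is split evenly between features 0 and 1. Because the cost doubles with every added feature, practical tools rely on approximations or model-specific algorithms instead.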

These tools and technologies help ensure that AI systems are managed and maintained responsibly. Different departments and roles in an organization have their own tools and criteria regarding AI governance. However, it's important to note that tools alone are not sufficient. They must go hand in hand with robust processes and oversight to be effective.
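TensorFlow Privacy, listed above among the privacy tools, is built around differentially private training, most notably DP-SGD. The core of one DP-SGD step can be sketched in plain NumPy: clip each example's gradient to a maximum norm, add Gaussian noise scaled to that bound, then average. This is an illustrative sketch, not TensorFlow Privacy's API; the function name and parameters are our own, and it omits the privacy accounting that turns the noise level into a formal (ε, δ) guarantee.

```python
import numpy as np

def dp_sgd_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Privatize one batch of gradients in the style of DP-SGD.

    per_example_grads has shape (batch_size, n_params).
    """
    grads = np.asarray(per_example_grads, dtype=float)
    # 1. Clip each example's gradient to L2 norm <= clip_norm,
    #    bounding how much any single record can move the model.
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    # 2. Add Gaussian noise calibrated to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grads.shape[1])
    # 3. Average over the batch to get the update direction.
    return (clipped.sum(axis=0) + noise) / grads.shape[0]

rng = np.random.default_rng(42)
batch = np.array([[3.0, 4.0], [0.3, 0.4]])  # hypothetical per-example gradients
noisy = dp_sgd_gradient(batch, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
noiseless = dp_sgd_gradient(batch, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
print(noisy)
print(noiseless)  # first gradient clipped from norm 5 to norm 1 before averaging
```

Clipping is what makes the noise meaningful: without a bound on each record's influence, no fixed amount of noise could hide whether a given person's data was in the training set.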

Case Study: Microsoft's Responsible AI

Various companies have taken practical measures to implement AI governance. One such example is Microsoft’s Responsible AI program. Microsoft is a leading player in today’s AI, but it has had its fair share of failed AI projects, most notably Tay.

Released by Microsoft in 2016, Tay was a chatbot designed to learn from and interact with users on Twitter. Because it lacked safety measures, within only 24 hours Twitter users had taught Tay to become fascist, offensive, and racist: Tay learned from them without any guardrails on what to learn and what to avoid. Tay was eventually shut down, but it became a valuable lesson.

Microsoft has since become a leader in developing and implementing AI governance practices through its Responsible AI program. One notable aspect of the program is its operational structure. The company established an AI ethics committee, known as the Aether Committee (AI, Ethics, and Effects in Engineering and Research), which reviews AI projects for potential ethical concerns. The committee brings together experts from various fields to review the impacts of AI technologies on society and provide guidance.

Additionally, Microsoft has developed and released several tools and frameworks to help developers implement responsible AI practices in their daily work. The Responsible AI Toolbox is one example that helps assess and improve the fairness of AI systems.

The Regulatory and Policy Landscape

Regulation and governance, though distinct concepts, influence each other. Governments and regulatory organizations around the world are developing new policies and regulations to govern AI development and use. Understanding this evolving landscape is essential for organizations implementing AI governance practices in their workflows.

The EU AI Act

One of the most significant developments in AI regulation is the European Union Artificial Intelligence Act (EU AI Act). Key takeaways of this Act include:

- Risk-based approach: AI systems are categorized based on their level of risk, with different requirements for each category.

- Prohibited AI practices: Certain AI applications, such as government social scoring, are prohibited.

- High-risk AI systems: Strict requirements are set for AI systems used in critical areas like healthcare, education, and law enforcement.

- Transparency requirements: Obligations to inform users when and in what form they are interacting with AI systems.

Other emerging regulations

While the EU AI Act is perhaps the most comprehensive AI regulation to date, other bodies are also developing their own approaches.

Canada has proposed the Artificial Intelligence and Data Act (AIDA) as part of a broader privacy reform, while California's AI safety bill, SB 1047, was on its way to becoming law at the time of writing.

Implications for businesses

The evolving regulatory landscape has big implications for organizations developing AI systems:

- Compliance requirements: Organizations will need to ensure their AI systems comply with regulations. These regulations may conflict with current practices, potentially requiring significant changes to development and deployment procedures.

- Documentation and transparency: There is increased emphasis on documenting AI development processes and explaining AI-driven decisions. The extent of these transparency requirements varies from law to law.

- Risk management: Need for robust risk assessment, particularly for AI systems that may be classified as "high-risk."

- Global considerations: Organizations operating in multiple jurisdictions will need to address potentially conflicting regulatory requirements.

- Ethical considerations: Many regulations incorporate ethical principles, requiring organizations to more explicitly consider the ethical implications of their AI systems.

Whether your organization or team is subject to these laws depends on your jurisdiction, the type of product you build, and the risk level associated with that product.

The Debate on AI Regulations: Pros and Cons

Regulation is one of the most debated topics in AI. While most of the AI community agrees that “some” sort of regulation is beneficial, there is little agreement on its nature and extent, or on who should be in charge of it.

The complexity of this issue has led to passionate arguments across industry, academia, government, and civil society, with each side weighing the potential benefits and drawbacks of regulatory frameworks. Understanding these arguments is necessary for developing a balanced perspective.

Arguments in favor of AI regulations

Supporters of AI regulation argue that it is essential for protecting the public and that well-designed rules can keep the potential harms of AI, such as privacy violations, bias, and job displacement, under control. They believe that clear laws and standards can prevent a “race to the bottom” in terms of ethics. Moreover, these standards can play a crucial role in building public trust in AI technologies.

Another argument in favor of regulations is the broad societal impact of AI. As these technologies increasingly influence areas like employment, social interactions, and democratic processes, advocates argue that regulatory oversight is necessary to ensure that these impacts are taken into account and managed responsibly.

Arguments against AI regulations

Critics of AI regulation raise several concerns about its negative impacts. A primary argument against strict regulation is the fear that it could cripple innovation. Critics worry that overly restrictive rules could slow AI down, putting the brakes on beneficial advancements in fields like healthcare, climate change, and scientific research. They also point out that the fast pace of AI development means regulations may quickly become outdated, making them irrelevant or even counterproductive.

Another significant concern is the compliance burden on smaller companies and startups. The cost, manpower, and complexity of complying with extensive regulations could be prohibitive for these entities, reducing competition and innovation in the AI sector. This could concentrate AI development among a few large tech companies with the resources to navigate complex regulatory landscapes.

Many AI experts question whether regulating AI at the technology level, rather than the application level, is the right approach. They argue that, like other technologies such as electricity and the internet, AI is not inherently malicious: it can be used with ill intent or constructively. As pointed out in this article, AI safety is a property of applications rather than of the technology itself.

Developer Responsibility

It’s also important to note that the values that make AI systems safe, such as fairness, freedom from bias, and transparency, are technical matters as well as societal and ethical concerns.

It's crucial to acknowledge that biased AI models are also technically flawed. A model that exhibits bias is, by definition, not accurately representing or processing the full spectrum of data it's meant to handle.

This technical shortcoming can lead to reduced performance, inaccurate predictions, and unreliable outcomes. From this standpoint, addressing bias and promoting fairness in AI models is not just an ethical concern but a fundamental technical challenge that directly impacts the quality of the AI systems we build.

These values must be respected and instilled in AI systems by the developers building them, even without the requirement of laws or regulatory standards. Researchers and developers working on fairness and bias mitigation are often engaged in deeply technical work.

Consider an AI system developed to help bank employees evaluate loan applications. Suppose that, because of historical biases in lending practices, the training data reflects a pattern in which certain racial groups were disproportionately denied loans or offered less favorable terms. If the AI system learns to associate race with applicant scores, it is “technically bad” before it is “ethically unacceptable.” This example shows that addressing bias in AI systems is not just an ethical imperative but also a technical necessity.

Organizations and individuals can use transparency and documentation throughout the AI development lifecycle to address concerns and build public trust. This “algorithmic transparency” includes clearly communicating a model’s purpose, data sources, methodology, and potential limitations. As a developer, you can be transparent by communicating:
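A model does not need an explicit race column to learn this association: other features, such as a postal code, can act as proxies for a protected attribute. The sketch below is one simple, hypothetical check a developer might run before training, measuring how well a single feature predicts the protected attribute compared with always guessing the majority group. The function name and the toy data are illustrative assumptions, not a standard tool.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, protected_values):
    """How well one feature alone predicts a protected attribute.

    For each feature value, guess the most common protected group seen
    with it, and report that accuracy alongside the majority-class baseline.
    Computed in-sample, so high-cardinality features (e.g. unique IDs)
    will look like perfect proxies; use held-out data in practice.
    """
    by_value = defaultdict(Counter)
    for f, p in zip(feature_values, protected_values):
        by_value[f][p] += 1
    n = len(protected_values)
    accuracy = sum(c.most_common(1)[0][1] for c in by_value.values()) / n
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    return accuracy, baseline

# Hypothetical toy records: the postal code never mentions race,
# but it largely determines which group an applicant belongs to.
postal_codes = ["A1"] * 4 + ["B2"] * 4
race = ["group_a", "group_a", "group_a", "group_b"] + ["group_b"] * 4
accuracy, baseline = proxy_strength(postal_codes, race)
print(accuracy, baseline)  # 0.875 0.625
```

A feature that beats the baseline by a wide margin deserves scrutiny: simply dropping the protected column does not stop a model from relearning the association through it.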

- Model's training process

- Details about the dataset used

- Any data preprocessing steps

- The chosen algorithm
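The items above map naturally onto a structured record that can be rendered for readers. The sketch below assumes a minimal model card built as a Python dataclass and rendered to Markdown; the field names and all example values are hypothetical, and this is not the official schema used by Hugging Face or any other platform.

```python
from dataclasses import dataclass, field, fields

@dataclass
class ModelCard:
    """Minimal model card; field names are illustrative, not a standard schema."""
    name: str
    purpose: str
    algorithm: str
    training_process: str
    dataset: str
    preprocessing: list = field(default_factory=list)
    limitations: list = field(default_factory=list)

    def to_markdown(self):
        lines = [f"# Model card: {self.name}"]
        for f in fields(self):
            if f.name == "name":
                continue
            value = getattr(self, f.name)
            lines.append(f"\n## {f.name.replace('_', ' ').capitalize()}")
            if isinstance(value, list):
                lines.extend(f"- {item}" for item in value)
            else:
                lines.append(value)
        return "\n".join(lines)

# Hypothetical example values for the loan scenario above.
card = ModelCard(
    name="loan-screening-v1",
    purpose="Rank loan applications for human review; not for automatic denial.",
    algorithm="Gradient-boosted decision trees",
    training_process="Trained on 80% of records, tuned on a 10% validation split.",
    dataset="Hypothetical internal table of past loan applications.",
    preprocessing=["Dropped applicant race and gender",
                   "Imputed missing income with the median"],
    limitations=["Not evaluated on applicants outside the training region"],
)
print(card.to_markdown())
```

Keeping the card as structured data, rather than free text, makes it easy to check that no required section was left empty before a model ships.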

This transparency not only aids in identifying and correcting technical flaws but also demonstrates a commitment to ethical AI development, thereby increasing public confidence in the technology.

Conclusion

This article has explored the nature of AI governance, ethical considerations, regulatory frameworks, and technical implementations. The importance of AI governance lies in its potential to identify risks before they cause issues, gain public trust, and direct the power of AI for societal benefit and good.

We’ve examined the tools and technologies available for AI governance, corporate initiatives, and the evolving regulatory landscape, and looked into the ongoing debate surrounding AI regulation, presenting arguments both for and against this oversight.

In the end, the responsibility for ethical and responsible AI development falls not just on regulatory bodies but also on the companies and individuals creating these technologies. As we've seen, addressing issues like bias and fairness in AI systems is not merely an ethical concern but a technical necessity.


Master's student of Artificial Intelligence and AI technical writer. I share insights on the latest AI technology, make ML research accessible, and simplify complex AI topics to help keep you at the forefront.