If you never want to deal with inconsistent, redundant data again, database normalization is the way to go.

You know the frustration of updating customer information in one table only to find outdated versions scattered across five others. Your queries return conflicting results, your reports show different numbers depending on which table you pull from, and you spend hours debugging data integrity issues that shouldn't exist. These problems only multiply as your database grows.

Database normalization eliminates these headaches by organizing your data according to proven mathematical principles. The process uses normal forms to make sure each piece of information exists in exactly one place, making your database reliable and efficient.

I'll show you the complete normalization process, from basic concepts to advanced normal forms, with hands-on examples that transform messy data into clean, maintainable database structures.

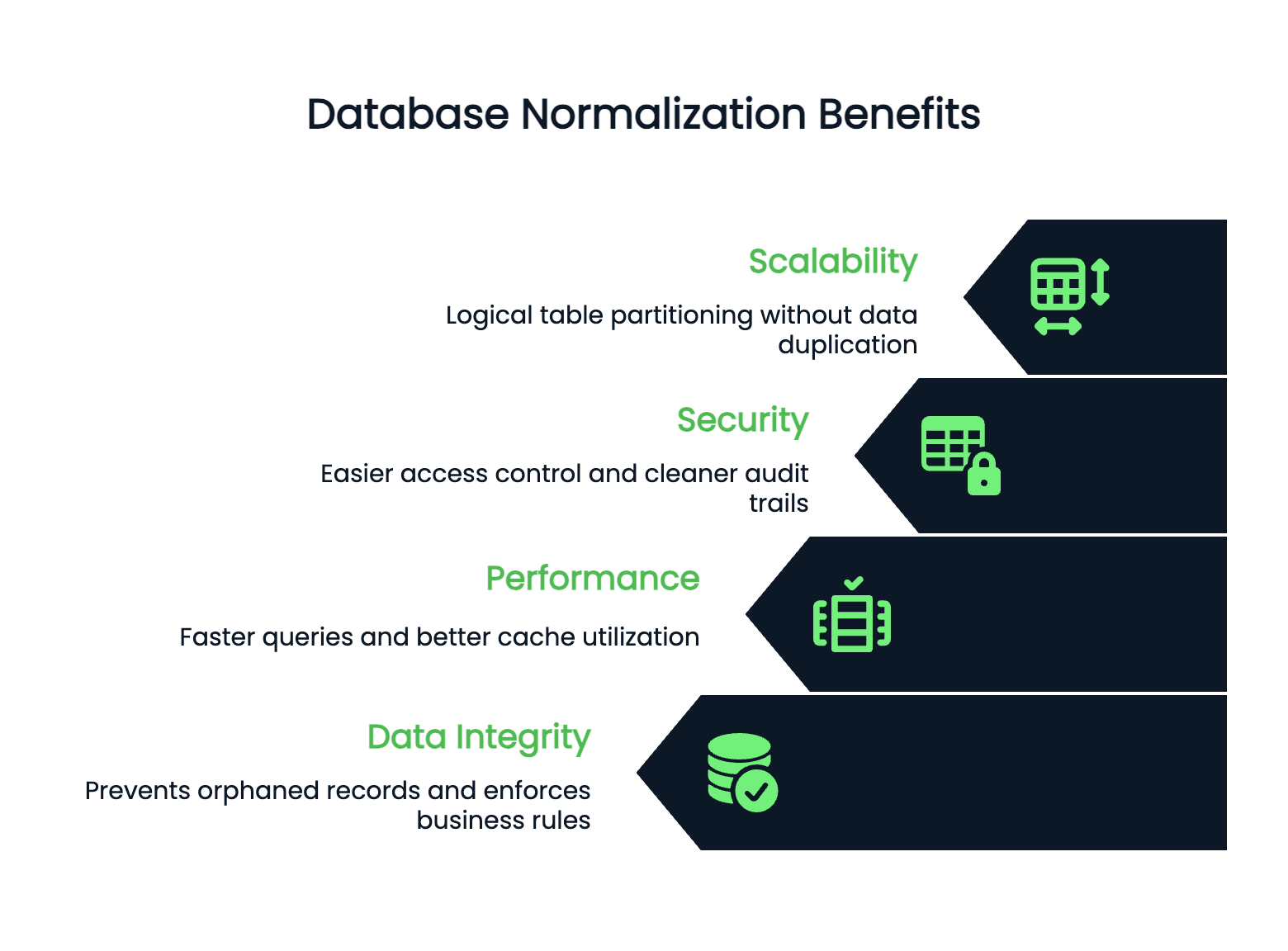

Why Is Normalization Important?

Normalization is what keeps your database from becoming a maintenance nightmare. Let's look at why proper normalization matters for real-world applications.

Data redundancy

Redundancy is the silent killer of database performance. When you store the same information in multiple places, you're not just wasting storage space - you're setting yourself up for inconsistencies that break your application logic.

Without normalization, updating a customer's address means hunting down every table that stores address data. Miss one, and your reports show conflicting information. Your users see different addresses on different screens. Your analytics become unreliable.

Normalization fixes this by making sure each piece of data lives in exactly one place. When you update that customer's address, it changes everywhere automatically because everything references the same source.

Data integrity

Integrity becomes bulletproof when you normalize correctly. Foreign key constraints prevent orphaned records. You can't accidentally delete a customer who still has active orders. Your database enforces business rules at the data level, not just in application code.

This means fewer bugs, cleaner code, and applications that behave predictably even when multiple systems access the same data.

Data anomalies

Modification anomalies disappear with proper normalization. These happen when you insert, update, or delete data and create inconsistencies or require complex workarounds.

Insert anomalies force you to add dummy data just to create a record. Update anomalies require you to change the same information across multiple rows. Delete anomalies remove more information than intended when you delete a single record.

Normalized databases eliminate these problems by organizing data so each fact appears once and only once.
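To make the anomalies concrete, here is a small sketch using Python's built-in sqlite3 module. The `orders_flat` table and its sample rows are hypothetical, made up purely for illustration; the point is only to show how one missed row produces two conflicting "facts", and how deleting an order can erase a customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders_flat (          -- hypothetical denormalized table
    order_id       INTEGER PRIMARY KEY,
    customer_email TEXT,
    customer_city  TEXT
);
INSERT INTO orders_flat VALUES
    (1, 'ana@example.com', 'Austin'),
    (2, 'ana@example.com', 'Austin'),
    (3, 'ben@example.com', 'Boston');
""")

# Update anomaly: the move is recorded on one order row but not the other.
conn.execute("UPDATE orders_flat SET customer_city = 'Denver' WHERE order_id = 1")
cities_for_ana = sorted(
    row[0]
    for row in conn.execute(
        "SELECT DISTINCT customer_city FROM orders_flat WHERE customer_email = ?",
        ("ana@example.com",),
    )
)
print(cities_for_ana)  # ['Austin', 'Denver']

# Delete anomaly: removing Ben's only order removes everything known about Ben.
conn.execute("DELETE FROM orders_flat WHERE order_id = 3")
ben_rows = conn.execute(
    "SELECT COUNT(*) FROM orders_flat WHERE customer_email = 'ben@example.com'"
).fetchone()[0]
print(ben_rows)  # 0
```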

Performance and scalability

Performance and scalability improve when your database structure is clean. Normalized tables are typically smaller, which means faster queries and better cache utilization. Indexes work more effectively on smaller, focused tables.

Your database can scale horizontally because normalized data has clear boundaries. You can partition tables logically without duplicating information across shards.

Security

Security becomes easier to manage in normalized databases. You can control access at the table level with confidence because sensitive data lives in specific, well-defined places. No need to worry about customer credit card numbers hiding in unexpected tables.

Audit trails are cleaner too - you know exactly where changes happen and can track them without hunting through redundant data scattered across your schema.

In summary, normalization transforms chaotic data into a reliable foundation that grows with your application.

Next, let's look at the prerequisites for normalization.

Key Concepts and Prerequisites

Before you start normalizing tables, you need to understand what makes normalization work. Let's cover the essential concepts that'll guide your decisions throughout the process.

Understanding keys in database normalization

Keys are the foundation of relational database design - they identify records and connect tables together.

A primary key uniquely identifies each row in a table. No two rows can have the same primary key value, and it can't be null. Think of it like a social security number for your data - each record gets exactly one, and no duplicates exist.

CREATE TABLE customers (
    customer_id INT PRIMARY KEY,
    email VARCHAR(255),
    name VARCHAR(100)
);

Here, customer_id is the primary key. Every customer gets a unique ID that you'll use to reference that specific customer from other tables.

A candidate key is any column (or minimal combination of columns) that could serve as a primary key. Your customers table might have both customer_id and email as candidate keys since both uniquely identify customers. You pick one as the primary key, and the others remain candidate keys (often called alternate keys), which you can enforce with UNIQUE constraints.

Foreign keys create relationships between tables. They reference the primary key of another table and establish connections that maintain data integrity.

CREATE TABLE orders (
    order_id INT PRIMARY KEY,
    customer_id INT,
    order_date DATE,
    FOREIGN KEY (customer_id) REFERENCES customers(customer_id)
);

The customer_id in the orders table is a foreign key. It must match a customer_id that exists in the customers table. This prevents orphaned orders and makes sure every order belongs to a real customer.

Keys enforce business rules at the database level, which makes your data more reliable than application-only validation.
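The following sketch shows that enforcement in action. It runs the two tables above in an in-memory SQLite database through Python's sqlite3 module, which is an assumption made so the example is runnable (SQLite needs `PRAGMA foreign_keys = ON`; other databases enforce foreign keys by default). The sample rows and the `try_sql` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT UNIQUE,
    name        TEXT
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER,
    order_date  TEXT,
    FOREIGN KEY (customer_id) REFERENCES customers(customer_id)
);
INSERT INTO customers VALUES (1, 'ana@example.com', 'Ana');
INSERT INTO orders VALUES (100, 1, '2024-01-15');
""")

def try_sql(sql):
    """Run one statement; return 'ok', or 'rejected' if a constraint blocks it."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError:
        return "rejected"

# An order for a customer that does not exist would be an orphan.
orphan_order = try_sql("INSERT INTO orders VALUES (101, 999, '2024-01-16')")
# Deleting a customer who still has orders would orphan order 100.
delete_parent = try_sql("DELETE FROM customers WHERE customer_id = 1")
print(orphan_order, delete_parent)  # rejected rejected
```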

Role of functional dependencies

Functional dependencies describe how columns relate to each other within a table. They're the mathematical foundation that drives normalization decisions.

A functional dependency exists when one column's value determines another column's value. We write this as A → B, meaning "A determines B" or "B depends on A."

In a customers table, customer_id → email because each customer ID maps to exactly one email address. If you know the customer ID, you can determine the email with certainty.

Image 1 - Functional dependency example

Here, customer_id → email and customer_id → name because the customer ID determines both the email and name.

Functional dependencies reveal redundancy problems.

Suppose an orders table stores customer_name in every row. You've got redundancy: order_id → customer_id and customer_id → customer_name, so the name really depends on the customer, not on the order. It only needs to be stored once per customer.
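One way to explore dependencies in existing data is to test them against sample rows. The sketch below is a hypothetical helper (`holds` is not a library function), and it can only show that a dependency is not contradicted by the rows you give it; whether the dependency is a real business rule is still your call.

```python
def holds(rows, determinant, dependent):
    """Return True if determinant -> dependent is not contradicted by `rows`."""
    seen = {}
    for row in rows:
        key = tuple(row[col] for col in determinant)
        value = tuple(row[col] for col in dependent)
        if seen.setdefault(key, value) != value:
            return False  # same determinant value, different dependent value
    return True

# Made-up sample rows
orders = [
    {"order_id": 1, "customer_id": 10, "customer_name": "Ana"},
    {"order_id": 2, "customer_id": 10, "customer_name": "Ana"},
    {"order_id": 3, "customer_id": 11, "customer_name": "Ben"},
]

print(holds(orders, ["order_id"], ["customer_name"]))     # True
print(holds(orders, ["customer_id"], ["customer_name"]))  # True
print(holds(orders, ["customer_name"], ["order_id"]))     # False
```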

Dependency preservation means your normalized tables still maintain all the original functional dependencies. When you split a table during normalization, you shouldn't lose the ability to enforce business rules that existed in the original table.

Lossless decomposition guarantees you can reconstruct the original table by joining the normalized tables. You don't lose any information when you split tables - the joins bring back exactly the same data you started with.

These concepts work together: functional dependencies identify what needs to be separated, while dependency preservation and lossless decomposition ensure you don't break anything in the process.

Understanding these relationships helps you make smart normalization decisions that improve your database without losing functionality.
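Lossless decomposition can be checked on a small scale with plain Python sets. This is a sketch with made-up rows: the first split follows the dependency customer_id → customer_name and joins back cleanly, while the second split shares only a non-key column and produces rows that never existed.

```python
# (order_id, customer_id, customer_name); sample rows are made up
flat = {(1, 10, "Ana"), (2, 10, "Ana"), (3, 11, "Ben")}

# Split along customer_id -> customer_name, then join back on customer_id.
orders = {(o, c) for o, c, _ in flat}
customers = {(c, n) for _, c, n in flat}
rejoined = {(o, c, n) for o, c in orders for c2, n in customers if c == c2}
lossless = rejoined == flat
print(lossless)  # True

# Lossy split for contrast: the shared column (customer_name) is not a key.
flat2 = {(1, 10, "Ana"), (2, 12, "Ana")}
order_names = {(o, n) for o, _, n in flat2}
customer_names = {(c, n) for _, c, n in flat2}
bad_join = {(o, c, n) for o, n in order_names for c, n2 in customer_names if n == n2}
spurious = bad_join - flat2
print(sorted(spurious))  # [(1, 12, 'Ana'), (2, 10, 'Ana')]
```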

Step-by-Step Normalization Process

Now let's walk through the actual normalization process, starting with messy data and transforming it step by step. Each normal form builds on the previous one: a table in 3NF is, by definition, also in 2NF and 1NF, so it helps to work through the forms in order.

First normal form (1NF)

The first normal form eliminates repeating groups and makes sure every column contains atomic values. Learn more about it in the First Normal Form (1NF) in-depth guide.

Atomic values mean each cell holds exactly one piece of information - no lists, no comma-separated values, no multiple data points crammed into a single field. This is the foundation that makes everything else possible.

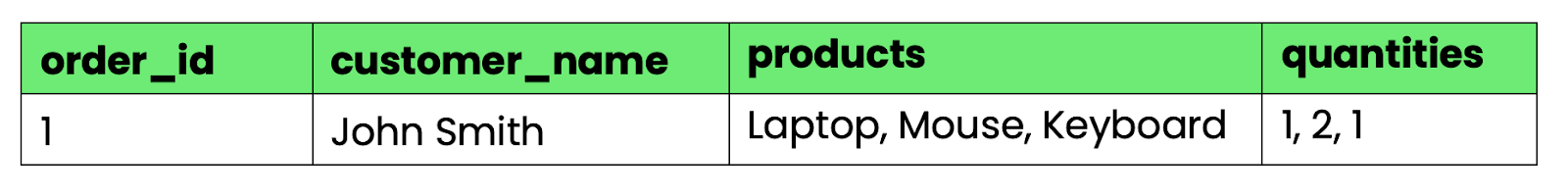

Here's what violates 1NF:

CREATE TABLE orders_bad (
    order_id INT,
    customer_name VARCHAR(100),
    products VARCHAR(500),
    quantities VARCHAR(50)
);

Image 2 - Table that violates 1NF

The products and quantities columns contain multiple values separated by commas. You can't easily query "all orders containing laptops" or calculate total quantities without string parsing.

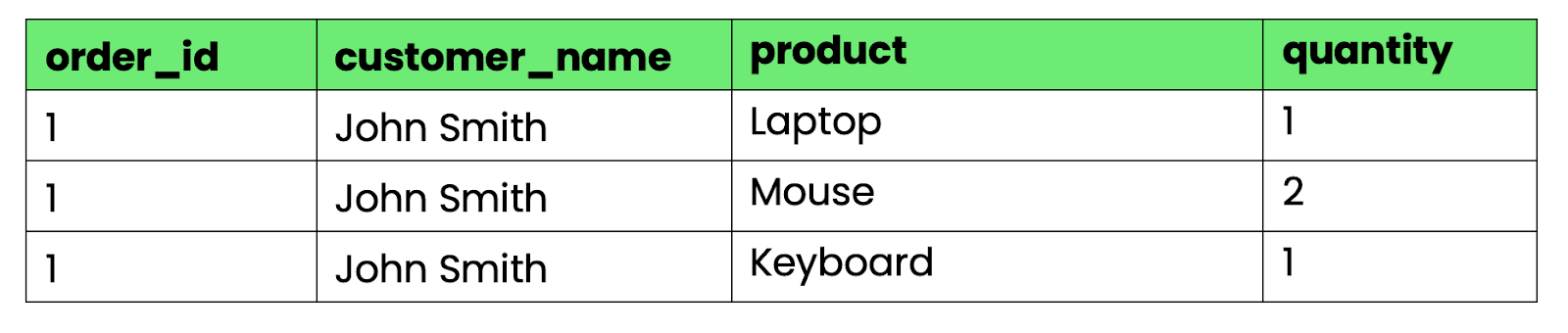

To convert this to 1NF, split the repeating groups into separate rows:

-- First normal form (1NF)
CREATE TABLE orders_1nf (
    order_id INT,
    customer_name VARCHAR(100),
    product VARCHAR(100),
    quantity INT
);

Image 3 - Table that satisfies 1NF

Now, each cell contains exactly one value. You can query, sort, and aggregate the data using standard SQL operations.
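If the comma-separated data already exists, the conversion itself can be scripted. The sketch below assumes SQLite via Python's sqlite3 module and uses made-up sample rows; it splits each list in application code and writes one row per product, after which the "orders containing laptops" question becomes a plain WHERE clause.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders_bad (order_id INT, customer_name TEXT, products TEXT, quantities TEXT);
CREATE TABLE orders_1nf (order_id INT, customer_name TEXT, product TEXT, quantity INT);
INSERT INTO orders_bad VALUES
    (1, 'Ana', 'Laptop,Mouse', '1,2'),
    (2, 'Ben', 'Monitor', '1');
""")

# Split each comma-separated list and emit one row per (order, product) pair.
for order_id, name, products, quantities in conn.execute(
    "SELECT * FROM orders_bad"
).fetchall():
    for product, qty in zip(products.split(","), quantities.split(",")):
        conn.execute(
            "INSERT INTO orders_1nf VALUES (?, ?, ?, ?)",
            (order_id, name, product.strip(), int(qty)),
        )

laptop_orders = [
    r[0] for r in conn.execute("SELECT order_id FROM orders_1nf WHERE product = 'Laptop'")
]
total_quantity = conn.execute("SELECT SUM(quantity) FROM orders_1nf").fetchone()[0]
print(laptop_orders, total_quantity)  # [1] 4
```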

Second normal form (2NF)

Second normal form removes partial dependencies - when non-key columns depend on only part of a composite primary key.

There's more to the Second Normal Form (2NF) than meets the eye. Learn more in our in-depth guide.

A table is in 2NF if it's in 1NF and every non-key column depends on the entire primary key, not just part of it.

Our 1NF table has a problem. If we use order_id and product as a composite primary key, customer_name depends only on order_id, not on the product. This creates redundancy - the customer name repeats for every product in an order.

-- Still has partial dependencies
-- customer_name depends only on order_id, not on (order_id, product)
CREATE TABLE orders_1nf (
    order_id INT,
    customer_name VARCHAR(100), -- Partial dependency!
    product VARCHAR(100),
    quantity INT,
    PRIMARY KEY (order_id, product)
);

To achieve 2NF, split the table based on dependencies:

-- Orders table (customer info depends on order_id)
CREATE TABLE orders (
    order_id INT PRIMARY KEY,
    customer_name VARCHAR(100)
);

-- Order items table (quantity depends on both order_id and product)
CREATE TABLE order_items (
    order_id INT,
    product VARCHAR(100),
    quantity INT,
    PRIMARY KEY (order_id, product),
    FOREIGN KEY (order_id) REFERENCES orders(order_id)
);

Now customer_name appears only once per order, eliminating redundancy. Each table has columns that depend on the entire primary key.
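A quick way to see the benefit is to change a customer name and count the rows touched. The sketch below assumes SQLite via Python's sqlite3 module and made-up sample rows; it also joins the two tables back together to show that the 1NF shape is still recoverable.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INT PRIMARY KEY, customer_name TEXT);
CREATE TABLE order_items (
    order_id INT, product TEXT, quantity INT,
    PRIMARY KEY (order_id, product),
    FOREIGN KEY (order_id) REFERENCES orders(order_id)
);
INSERT INTO orders VALUES (1, 'Ana'), (2, 'Ben');
INSERT INTO order_items VALUES (1, 'Laptop', 1), (1, 'Mouse', 2), (2, 'Monitor', 1);
""")

# Renaming the customer on order 1 touches exactly one row,
# no matter how many items the order contains.
rows_changed = conn.execute(
    "UPDATE orders SET customer_name = 'Ana Diaz' WHERE order_id = 1"
).rowcount

# Joining the two tables reproduces the 1NF shape.
rebuilt = conn.execute("""
    SELECT o.order_id, o.customer_name, i.product, i.quantity
    FROM orders o
    JOIN order_items i ON i.order_id = o.order_id
    ORDER BY o.order_id, i.product
""").fetchall()
print(rows_changed)  # 1
print(rebuilt)
```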

Third normal form (3NF)

The third normal form eliminates transitive dependencies, which occur when non-key columns depend on other non-key columns instead of the primary key. Dive into the Third Normal Form (3NF) beyond the basics.

A transitive dependency exists when “Column A” determines “Column B”, and “Column B” determines “Column C”, creating an indirect dependency from A to C.

Let's expand our orders table with customer address information:

-- Has transitive dependencies
CREATE TABLE orders_2nf (
    order_id INT PRIMARY KEY,
    customer_name VARCHAR(100),
    customer_city VARCHAR(50),
    customer_state VARCHAR(50),
    customer_zip VARCHAR(10)
);

Here's the problem: the city, state, and ZIP code describe the customer, not the order, and in this simplified example the state in turn follows from the city. So order_id determines customer_state only indirectly, through other non-key columns. This creates redundancy - every order from the same city repeats the state information.

To achieve 3NF, remove transitive dependencies by creating separate tables:

-- Cities table (created first so customers can reference it)
-- Simplification: assumes one state and one ZIP code per city
CREATE TABLE cities (
    city_id INT PRIMARY KEY,
    city_name VARCHAR(50),
    state VARCHAR(50),
    zip VARCHAR(10)
);

-- Customers table (removes transitive dependencies)
CREATE TABLE customers (
    customer_id INT PRIMARY KEY,
    customer_name VARCHAR(100),
    city_id INT,
    FOREIGN KEY (city_id) REFERENCES cities(city_id)
);

-- Orders table (now references customer, not customer details)
CREATE TABLE orders (
    order_id INT PRIMARY KEY,
    customer_id INT,
    order_date DATE,
    FOREIGN KEY (customer_id) REFERENCES customers(customer_id)
);

Now geographic information lives in one place. If a city's details change (rare but possible), you update one row instead of hunting through every order from that city.

Each normal form solves specific redundancy problems while maintaining the ability to reconstruct your original data through joins.
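Here is a sketch of that "update one row" claim, assuming SQLite via Python's sqlite3 module. The cities table is trimmed to the columns the example needs, and the sample rows are made up. A single UPDATE on cities is reflected in every order once the tables are joined.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE cities (city_id INT PRIMARY KEY, city_name TEXT, state TEXT);
CREATE TABLE customers (
    customer_id INT PRIMARY KEY,
    customer_name TEXT,
    city_id INT REFERENCES cities(city_id)
);
CREATE TABLE orders (
    order_id INT PRIMARY KEY,
    customer_id INT REFERENCES customers(customer_id),
    order_date TEXT
);
INSERT INTO cities VALUES (1, 'Austin', 'TX');
INSERT INTO customers VALUES (10, 'Ana', 1), (11, 'Ben', 1);
INSERT INTO orders VALUES
    (100, 10, '2024-01-15'), (101, 10, '2024-02-01'), (102, 11, '2024-02-03');
""")

# One-row change to how the state is recorded (sample correction, not real data).
changed = conn.execute("UPDATE cities SET state = 'Texas' WHERE city_id = 1").rowcount

states = conn.execute("""
    SELECT o.order_id, ci.state
    FROM orders o
    JOIN customers cu ON cu.customer_id = o.customer_id
    JOIN cities ci    ON ci.city_id = cu.city_id
    ORDER BY o.order_id
""").fetchall()
print(changed, states)  # 1 [(100, 'Texas'), (101, 'Texas'), (102, 'Texas')]
```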

Advanced Normal Forms

The first three normal forms handle most real-world database problems, but some edge cases require deeper normalization. These advanced forms deal with specific dependency issues that 3NF can't solve.

Boyce-Codd normal form (BCNF)

BCNF fixes a subtle problem that 3NF misses: when a table has overlapping candidate keys.

3NF tolerates a dependency whose determinant is not a superkey, as long as the dependent column is part of some candidate key. BCNF is stricter: every determinant (a column or set of columns that determines another column) must be a superkey, meaning it contains a candidate key.

Here's where 3NF breaks down:

-- Table in 3NF but violates BCNF
CREATE TABLE course_instructors (
    student_id INT,
    course VARCHAR(50),
    instructor VARCHAR(50),
    PRIMARY KEY (student_id, course)
);

The business rules are:

- Each student can take multiple courses

- Each course has exactly one instructor

- Each instructor teaches exactly one course

This creates course → instructor and instructor → course dependencies. Both (student_id, course) and (student_id, instructor) are candidate keys, so every column belongs to some candidate key and 3NF is satisfied. But course and instructor determine each other without being superkeys themselves, which is exactly what BCNF forbids.

The problem shows up when you try to add a new instructor without students. You can't insert "Professor Smith teaches Database Design" without also adding a student to that course.

To achieve BCNF, decompose based on the problematic dependency:

-- BCNF solution
CREATE TABLE course_assignments (
    course VARCHAR(50) PRIMARY KEY,
    instructor VARCHAR(50) UNIQUE
);

CREATE TABLE student_enrollments (
    student_id INT,
    course VARCHAR(50),
    PRIMARY KEY (student_id, course),
    FOREIGN KEY (course) REFERENCES course_assignments(course)
);

Now you can add instructors without students, and the database structure matches the business rules exactly.
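The sketch below exercises both points, assuming SQLite via Python's sqlite3 module and made-up names. An instructor is recorded with zero enrollments, and the UNIQUE constraint on instructor rejects a second course for the same person, which is how the "each instructor teaches exactly one course" rule ends up in the schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # needed for FK enforcement in SQLite
conn.executescript("""
CREATE TABLE course_assignments (
    course     TEXT PRIMARY KEY,
    instructor TEXT UNIQUE
);
CREATE TABLE student_enrollments (
    student_id INT,
    course     TEXT,
    PRIMARY KEY (student_id, course),
    FOREIGN KEY (course) REFERENCES course_assignments(course)
);
-- An instructor can be recorded before anyone enrolls.
INSERT INTO course_assignments VALUES ('Database Design', 'Smith');
""")

enrolled = conn.execute("SELECT COUNT(*) FROM student_enrollments").fetchone()[0]

# instructor -> course is enforced by UNIQUE: Smith cannot take a second course.
try:
    conn.execute("INSERT INTO course_assignments VALUES ('Algorithms', 'Smith')")
    second_course = "accepted"
except sqlite3.IntegrityError:
    second_course = "rejected"

print(enrolled, second_course)  # 0 rejected
```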

Fourth normal form (4NF)

4NF eliminates multi-valued dependencies - when one column determines multiple independent sets of values.

A multi-valued dependency exists when “Column A” determines multiple values in “Column B”, and those values are independent of other columns in the table.

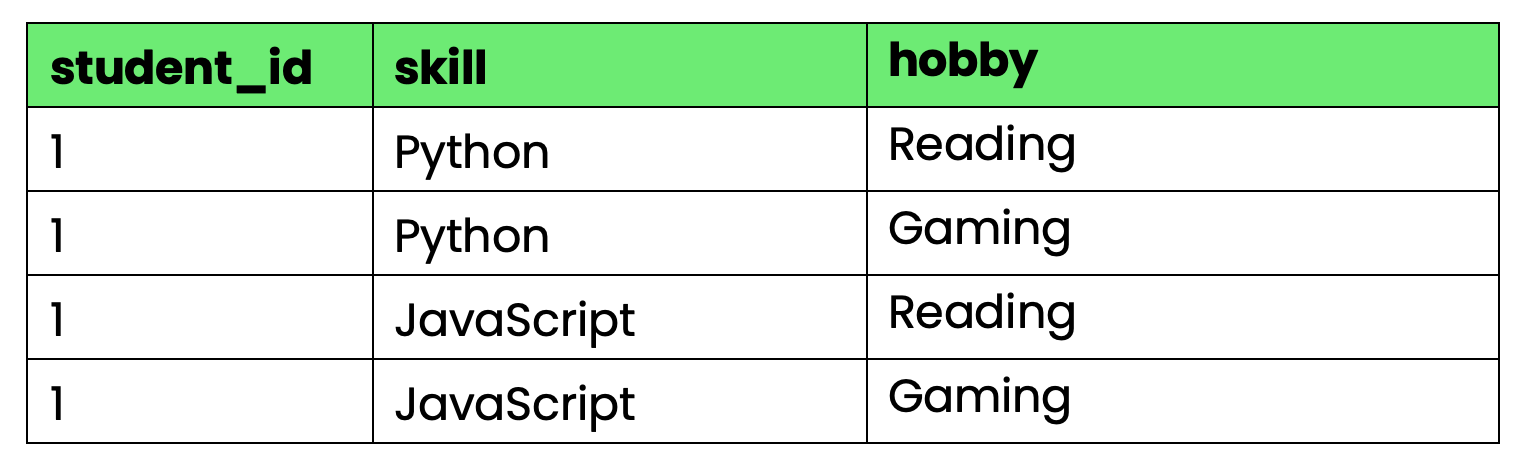

Consider this table tracking student skills and hobbies:

-- Violates 4NF due to multi-valued dependencies
CREATE TABLE student_info (
    student_id INT,
    skill VARCHAR(50),
    hobby VARCHAR(50),
    PRIMARY KEY (student_id, skill, hobby)
);

Image 4 - Table that violates 4NF

The problem: student_id determines both skills and hobbies, but skills and hobbies are independent of each other. When student 1 learns a new skill, you need to create rows for every hobby combination. When they pick up a new hobby, you need rows for every skill combination.

This creates explosive redundancy as the number of skills and hobbies grows.

To achieve 4NF, separate the independent multi-valued dependencies:

-- 4NF solution
CREATE TABLE student_skills (
    student_id INT,
    skill VARCHAR(50),
    PRIMARY KEY (student_id, skill)
);

CREATE TABLE student_hobbies (
    student_id INT,
    hobby VARCHAR(50),
    PRIMARY KEY (student_id, hobby)
);

Now you can add skills and hobbies independently without creating Cartesian product explosions.
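The row counts make the difference easy to see. This is a small arithmetic sketch in Python with made-up skills and hobbies for a single student: the combined table needs one row per skill-hobby pairing, while the 4NF layout needs one row per fact.

```python
from itertools import product

skills = ["SQL", "Python", "Go"]
hobbies = ["Chess", "Hiking"]

# Single table keyed on (student_id, skill, hobby): every pairing becomes a row.
combined_rows = [(1, s, h) for s, h in product(skills, hobbies)]

# 4NF layout: one row per independent fact.
skill_rows = [(1, s) for s in skills]
hobby_rows = [(1, h) for h in hobbies]

print(len(combined_rows))                 # 6
print(len(skill_rows) + len(hobby_rows))  # 5

# Adding one more skill costs len(hobbies) new rows in the combined table,
# but a single new row in student_skills.
extra_combined = len(hobbies)
extra_4nf = 1
print(extra_combined, extra_4nf)  # 2 1
```

With 3 skills and 2 hobbies the gap is small, but the combined table grows with the product of the two counts while the 4NF tables grow with their sum.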

Fifth and sixth normal forms (5NF and 6NF)

5NF (Project-Join Normal Form) eliminates join dependencies: complex relationships that require three or more tables to reconstruct data without loss.

A join dependency exists when you can't reconstruct the original table by joining two decomposed tables, but you can reconstruct it by joining three or more tables.

Consider suppliers, parts, and projects with this rule: "If a supplier supplies a part, the supplier works on a project, and that project uses the part, then the supplier supplies that part to that project."

-- Original table with join dependency
CREATE TABLE supplier_part_project (
    supplier_id INT,
    part_id INT,
    project_id INT,
    PRIMARY KEY (supplier_id, part_id, project_id)
);

To achieve 5NF, decompose into three binary relationships:

-- 5NF decomposition
CREATE TABLE supplier_parts (supplier_id INT, part_id INT);
CREATE TABLE supplier_projects (supplier_id INT, project_id INT);
CREATE TABLE project_parts (project_id INT, part_id INT);

You can only reconstruct valid supplier-part-project combinations by joining all three tables, which enforces the business rule at the schema level.
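The three-way requirement can be checked with Python sets. The rows below are made-up identifiers chosen so that the join dependency holds; joining only two of the projections invents a combination that was never in the original table, and the third projection filters it back out.

```python
# (supplier, part, project); made-up rows that satisfy the join dependency
spj = {
    ("s1", "p1", "j2"),
    ("s1", "p2", "j1"),
    ("s2", "p1", "j1"),
    ("s1", "p1", "j1"),
}

# The three binary projections from the 5NF decomposition.
supplier_parts = {(s, p) for s, p, j in spj}
supplier_projects = {(s, j) for s, p, j in spj}
project_parts = {(j, p) for s, p, j in spj}

# Joining only two projections (on supplier) yields a row that never existed.
two_way = {
    (s, p, j)
    for s, p in supplier_parts
    for s2, j in supplier_projects
    if s == s2
}
spurious = two_way - spj
print(spurious)  # {('s1', 'p2', 'j2')}

# Adding the third projection removes the spurious row.
three_way = {(s, p, j) for s, p, j in two_way if (j, p) in project_parts}
print(three_way == spj)  # True
```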

6NF takes normalization to the extreme: each table holds a key plus at most one other attribute, typically with validity dates attached.

6NF is designed for data warehouses and temporal databases where you need to track how every attribute changes over time independently.

-- 6NF example for temporal data
CREATE TABLE customer_names (
    customer_id INT,
    name VARCHAR(100),
    valid_from DATE,
    valid_to DATE
);

CREATE TABLE customer_addresses (
    customer_id INT,
    address VARCHAR(200),
    valid_from DATE,
    valid_to DATE
);

This allows you to track when each attribute changed without affecting others, but it makes queries complex and is rarely used outside specialized temporal database systems.
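To give a feel for that query complexity, here is a sketch of an "as of" lookup, assuming SQLite via Python's sqlite3 module. Several things here are assumptions rather than part of the schema above: dates are stored as ISO strings, intervals are half-open (valid_from inclusive, valid_to exclusive), '9999-12-31' marks the current row, and `as_of` is a hypothetical helper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer_names (customer_id INT, name TEXT, valid_from TEXT, valid_to TEXT);
CREATE TABLE customer_addresses (customer_id INT, address TEXT, valid_from TEXT, valid_to TEXT);
INSERT INTO customer_names VALUES
    (1, 'Ana Diaz',  '2020-01-01', '2023-06-01'),
    (1, 'Ana Reyes', '2023-06-01', '9999-12-31');
INSERT INTO customer_addresses VALUES
    (1, '12 Oak St',   '2020-01-01', '2022-03-01'),
    (1, '98 Pine Ave', '2022-03-01', '9999-12-31');
""")

def as_of(day):
    """Return (name, address) for customer 1 as of an ISO date string."""
    return conn.execute("""
        SELECT n.name, a.address
        FROM customer_names n
        JOIN customer_addresses a ON a.customer_id = n.customer_id
        WHERE n.customer_id = 1
          AND n.valid_from <= :day AND :day < n.valid_to
          AND a.valid_from <= :day AND :day < a.valid_to
    """, {"day": day}).fetchone()

print(as_of("2021-05-01"))  # ('Ana Diaz', '12 Oak St')
print(as_of("2023-01-01"))  # ('Ana Diaz', '98 Pine Ave')
print(as_of("2024-01-01"))  # ('Ana Reyes', '98 Pine Ave')
```

Every additional attribute table adds another join and another pair of date conditions, which is where the complexity comes from.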

Most applications stop at 3NF or BCNF. These advanced forms solve specific edge cases but add complexity that isn't worth it for typical business applications.

Advantages and Disadvantages of Normalization

Normalization isn't a silver bullet - it solves important problems but creates new challenges, mostly around SQL query complexity. Here's what you gain and what you give up when you normalize your database.

Advantages of normalization

- Reduced redundancy means your database stores each fact exactly once, cutting storage costs and eliminating sync issues. When customer data lives in a single table instead of scattered across dozens, updating an address becomes a one-row operation. No hunting through related tables, worrying about missed updates, or inconsistent data showing up in reports.

- Data consistency becomes automatic when there's only one source of truth. Your application can't display conflicting information because conflicting information can't exist in the first place.

- Updates become fast and reliable because you're changing one row instead of dozens. Insert a new customer once, reference them everywhere else with foreign keys. Delete an order without worrying about orphaned data in related tables.

- Security controls get simpler when sensitive data has clear boundaries. Customer payment information lives in a specific table with specific access controls. You don't need to worry about credit card numbers hiding in unexpected places.

- Scalability improves because normalized tables are smaller and more focused. Indexes work better on smaller tables. You can partition data logically without duplicating information across shards.

- Team collaboration becomes smoother when everyone understands where the data lives. New developers can navigate the schema faster. Database administrators can optimize performance with confidence. Business analysts can write reliable queries without second-guessing data quality.

- Backup and recovery strategies get cleaner because related data doesn't span multiple disconnected tables. Foreign key constraints ensure you can't restore partial data that breaks referential integrity.

Disadvantages and challenges of normalization

- Query complexity increases when simple questions require multiple joins to answer. Want to see a customer's order history with product names? In a denormalized table, that's one query. In a normalized database, you're joining the customers, orders, order items, and products tables. More joins mean more opportunities for mistakes and slower query execution.

- Performance can suffer when you're constantly joining tables instead of reading from single, wide tables. Each join adds overhead, especially when your database needs to access data from different storage locations.

- Development time increases because developers need to understand table relationships before writing queries. What used to be a simple SELECT becomes a multi-table JOIN with proper foreign key handling.

- Over-normalization creates artificial complexity when you split data that naturally belongs together. If you normalize a person's full name into separate first, middle, and last name tables, you've probably gone too far.

Here's a real example: An e-commerce site normalized product categories into six levels of hierarchy. Simple queries like "show all electronics" became seven-table joins that took seconds instead of milliseconds. The theoretical purity wasn't worth the practical pain.

- Read-heavy applications suffer when normalization optimizes for writes, but most operations are reads. Social media feeds, analytics dashboards, and reporting systems often perform better with some strategic denormalization.

- Maintenance overhead grows as the number of tables increases. More tables mean more indexes to maintain, more foreign key constraints to validate, and more complex backup procedures.

The key is finding the right balance for your specific use case - normalize enough to prevent data integrity problems, but not so much that you sacrifice performance and developer productivity.
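One common way to soften the query-complexity cost without touching the schema is to write the join once and wrap it in a view. The sketch below assumes SQLite via Python's sqlite3 module; the table layout, the `customer_order_history` view name, and the sample rows are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INT PRIMARY KEY, name TEXT);
CREATE TABLE products (product_id INT PRIMARY KEY, product_name TEXT);
CREATE TABLE orders (order_id INT PRIMARY KEY, customer_id INT, order_date TEXT);
CREATE TABLE order_items (order_id INT, product_id INT, quantity INT);
INSERT INTO customers VALUES (1, 'Ana');
INSERT INTO products VALUES (1, 'Laptop'), (2, 'Mouse');
INSERT INTO orders VALUES (100, 1, '2024-01-15');
INSERT INTO order_items VALUES (100, 1, 1), (100, 2, 2);

-- The four-table join, written once and wrapped in a view.
CREATE VIEW customer_order_history AS
SELECT c.name, o.order_id, o.order_date, p.product_name, i.quantity
FROM customers c
JOIN orders o      ON o.customer_id = c.customer_id
JOIN order_items i ON i.order_id = o.order_id
JOIN products p    ON p.product_id = i.product_id;
""")

# Callers query the view like a single wide table.
history = conn.execute(
    "SELECT product_name, quantity FROM customer_order_history "
    "WHERE name = 'Ana' ORDER BY product_name"
).fetchall()
print(history)  # [('Laptop', 1), ('Mouse', 2)]
```

A plain view only hides the join; the database still executes it on every query, so this helps readability rather than speed.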

Performance and Optimization

Normalization affects different types of systems in different ways - what helps transactional systems can hurt analytical ones. Here's how to optimize performance based on your workload patterns.

Considerations for OLTP and OLAP systems

OLTP systems benefit from normalization because they handle many small, focused transactions that modify specific records.

In an e-commerce application, when a customer updates their shipping address, you're changing one row in the customers table. Without normalization, you'd need to update address information in customers, orders, shipping addresses, and billing addresses tables, creating multiple writes with higher lock contention.

Normalized tables reduce lock contention because transactions affect smaller, more focused datasets. When “User A” updates their profile while “User B” places an order, they're likely touching different tables entirely. This means better concurrency and faster transaction processing.

Write operations become atomic and predictable in normalized systems. Insert a new order by writing to the orders table and the order_items table. If either operation fails, you can roll back cleanly without worrying about partial updates scattered across denormalized structures.

OLAP systems tell a different story - they need fast reads across large datasets and often aggregate data from multiple related tables.

Consider a sales analytics query: "Show monthly revenue by product category for the last two years." A normalized system requires joining orders, order items, products, and categories tables - potentially millions of rows with expensive aggregations.

A denormalized data warehouse table with pre-calculated monthly totals answers the same question with a simple GROUP BY query. The trade-off is storage space and update complexity for much faster query performance.

Hybrid approaches work well when you need both transactional integrity and analytical performance. Keep your OLTP system normalized for data integrity, then ETL into denormalized OLAP systems for fast reporting.
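A minimal sketch of that hybrid pattern, assuming SQLite via Python's sqlite3 module: the normalized tables stay as the source of truth, and a single statement stands in for the ETL step by flattening the join into a reporting table. The schema, the `monthly_revenue` table name, and the sample rows are hypothetical; a real pipeline would also need a refresh schedule.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE categories (category_id INT PRIMARY KEY, name TEXT);
CREATE TABLE products (product_id INT PRIMARY KEY, category_id INT, price REAL);
CREATE TABLE orders (order_id INT PRIMARY KEY, order_date TEXT);
CREATE TABLE order_items (order_id INT, product_id INT, quantity INT);
INSERT INTO categories VALUES (1, 'Electronics'), (2, 'Books');
INSERT INTO products VALUES (1, 1, 1000.0), (2, 2, 20.0);
INSERT INTO orders VALUES (1, '2024-01-10'), (2, '2024-01-25'), (3, '2024-02-02');
INSERT INTO order_items VALUES (1, 1, 1), (2, 2, 3), (3, 1, 2);

-- "ETL" step: flatten the four-table join into one reporting table.
CREATE TABLE monthly_revenue AS
SELECT strftime('%Y-%m', o.order_date) AS month,
       c.name AS category,
       SUM(i.quantity * p.price) AS revenue
FROM orders o
JOIN order_items i ON i.order_id = o.order_id
JOIN products p    ON p.product_id = i.product_id
JOIN categories c  ON c.category_id = p.category_id
GROUP BY month, category;
""")

# Reports now read the flat table with no joins at all.
report = conn.execute(
    "SELECT month, category, revenue FROM monthly_revenue ORDER BY month, category"
).fetchall()
print(report)
```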

Techniques to mitigate normalization overhead

- Proper indexing strategy transforms join performance in normalized databases. Foreign key columns almost always need indexes. When you join the customers and orders tables on customer ID, both sides of the join should be indexed: the primary key usually is already, but in some databases (PostgreSQL, for example) the foreign key column in orders is not indexed automatically. Without that index, the database may fall back to full table scans that kill performance.

-- Must-have indexes for normalized tables
CREATE INDEX idx_orders_customer_id ON orders(customer_id);
CREATE INDEX idx_order_items_order_id ON order_items(order_id);
-- Assumes order_items references products through a product_id column
CREATE INDEX idx_order_items_product_id ON order_items(product_id);

- Composite indexes help with multi-column queries common in normalized schemas:

-- For queries filtering by customer and date range
CREATE INDEX idx_orders_customer_date ON orders(customer_id, order_date);

- Query result caching eliminates repeated join overhead for commonly accessed data combinations. Redis or Memcached can store pre-computed results for expensive multi-table queries.

- Database connection pooling reduces the overhead of establishing connections for applications that make many small, normalized queries.

- Materialized views pre-compute complex joins and store results as physical tables:

-- Pre-computed customer order summary
-- (PostgreSQL/Oracle syntax; assumes orders has a total_amount column)
CREATE MATERIALIZED VIEW customer_order_summary AS
SELECT
    c.customer_id,
    c.name,
    COUNT(o.order_id) AS total_orders,
    SUM(o.total_amount) AS lifetime_value
FROM customers c
LEFT JOIN orders o ON c.customer_id = o.customer_id
GROUP BY c.customer_id, c.name;

- Horizontal sharding works well with normalized data because table relationships provide natural sharding boundaries. Shard by customer_id and related order data stays together.

- Read replicas handle analytical queries separately from transactional workloads. Route complex reporting queries to read-only replicas while keeping writes on the primary database.

- Database-specific optimizations make a huge difference:

- PostgreSQL: Use EXPLAIN ANALYZE to identify slow joins, tune work_mem for sort operations

- MySQL: Use EXPLAIN to check join order and index usage, tune join_buffer_size for joins that can't use an index (the query cache was removed in MySQL 8.0, so it is only an option on older versions)

- SQL Server: Use query execution plans to identify missing indexes, enable page compression for large tables

The key is measuring before optimizing. Profile your actual queries to find bottlenecks, then apply targeted fixes rather than guessing what might help.
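As a sketch of what measuring can look like, the example below asks SQLite for its query plan before and after adding a foreign key index. It assumes Python's sqlite3 module and made-up data; `plan` is a hypothetical helper, and the exact plan wording varies between SQLite versions, so the code only checks whether the index is mentioned.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INT, order_date TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, order_date) VALUES (?, ?)",
    [(i % 100, "2024-01-01") for i in range(1000)],
)

def plan(sql):
    """Return the detail column of SQLite's EXPLAIN QUERY PLAN output as one string."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

query = "SELECT * FROM orders WHERE customer_id = 42"

before = plan(query)  # no index yet: the plan is a scan of the whole table
conn.execute("CREATE INDEX idx_orders_customer_id ON orders(customer_id)")
after = plan(query)   # the plan now names the new index

print(before)
print(after)
uses_index = "idx_orders_customer_id" in after
print(uses_index)  # True
```

Other databases expose the same idea through their own commands, such as EXPLAIN ANALYZE in PostgreSQL.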

Denormalization: Strategic Trade-Offs

Sometimes breaking normalization rules makes sense - when read performance matters more than perfect data organization. Here's when and how to denormalize without creating a maintenance nightmare.

- Read-heavy applications with expensive joins are prime candidates for strategic denormalization.

- Real-time dashboards and analytics often need denormalized data to hit performance targets. When executives want to see live sales metrics updating every few seconds, you can't afford complex aggregations across normalized tables.

- E-commerce product catalogs frequently denormalize category information. Instead of joining products → subcategories → categories → main_categories, many sites store the full category path directly with each product: "Electronics > Computers > Laptops > Gaming."

- Common denormalization techniques include:

- Storing computed values: Keep running totals, counts, or averages that would otherwise require aggregation queries

- Flattening hierarchies: Store category paths, organizational structures, or nested data as flat fields

- Duplicating frequently accessed data: Copy customer names into order records, product titles into shopping cart items

- Pre-joining related data: Store user profile information with posts, comments, or activity records

The balance comes down to understanding your access patterns. If you read customer order summaries 100 times more often than you update customer information, duplicating the customer name in order records makes sense.

But denormalize selectively. Don't flatten your entire schema because one report runs slowly - fix that one report while keeping the rest normalized.

Start normalized, then denormalize based on real performance problems. Premature denormalization creates update complexity before you know if you actually need the performance boost.
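The update complexity is easiest to see with a stored computed value. The sketch below assumes SQLite via Python's sqlite3 module and a hypothetical `order_count` column on customers; two triggers are the extra machinery needed to keep that duplicate in step with the orders table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT,
    order_count INTEGER NOT NULL DEFAULT 0   -- denormalized, derived from orders
);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INT);

-- Keep the stored count in step with the source rows.
CREATE TRIGGER orders_after_insert AFTER INSERT ON orders
BEGIN
    UPDATE customers SET order_count = order_count + 1
    WHERE customer_id = NEW.customer_id;
END;
CREATE TRIGGER orders_after_delete AFTER DELETE ON orders
BEGIN
    UPDATE customers SET order_count = order_count - 1
    WHERE customer_id = OLD.customer_id;
END;

INSERT INTO customers (customer_id, name) VALUES (1, 'Ana');
INSERT INTO orders VALUES (100, 1), (101, 1), (102, 1);
DELETE FROM orders WHERE order_id = 101;
""")

# The stored value can be read without aggregating...
stored = conn.execute(
    "SELECT order_count FROM customers WHERE customer_id = 1"
).fetchone()[0]
# ...and should always match what the source rows say.
actual = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE customer_id = 1"
).fetchone()[0]
print(stored, actual)  # 2 2
```

This sketch does not handle an order being reassigned to a different customer; covering that case would take a third trigger, which is the kind of hidden cost to weigh before denormalizing.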

Summing up Database Normalization

In plain English, database normalization eliminates data redundancy and ensures consistency.

It comes with trade-offs in query complexity and performance. The key is choosing the right level based on your workload. OLTP systems benefit from full normalization through 3NF, while read-heavy applications often need strategic denormalization for speed.

You don't have to pick just one approach. Keep your transactional database normalized for data integrity, then use denormalized views or separate analytical databases for reporting. This hybrid strategy gives you both reliability and performance where you need them most.

Start with proper normalization, then denormalize selectively based on real performance problems rather than theoretical concerns.

FAQs

What are the main benefits of normalization in database management?

Normalization eliminates data redundancy, which reduces storage costs and prevents inconsistencies across your database. It makes updates faster and more reliable because you only need to change information in one place instead of hunting through multiple tables. Normalized databases also have better data integrity through foreign key constraints, cleaner security controls since sensitive data lives in specific tables, and improved scalability because smaller, focused tables perform better with indexes and partitioning.

How does normalization improve data integrity?

Normalization enforces data integrity at the database level through foreign key constraints and elimination of redundant data. When you can't accidentally delete a customer who still has active orders, or insert an order without a valid customer, your database maintains referential integrity automatically. Since each piece of information exists in only one place, you can't have conflicting versions of the same data scattered across multiple tables, which prevents the inconsistencies that break application logic.

What are the common pitfalls of normalization?

Over-normalization creates unnecessary complexity when you split data that naturally belongs together, like separating a person's name into multiple tables. This leads to excessive joins for simple queries and hurts performance. Another pitfall is normalizing without considering your actual access patterns - if you're constantly joining the same tables for common queries, you might need strategic denormalization. Poor indexing on foreign key columns also kills performance in normalized databases, making joins much slower than they should be.

How does denormalization impact database performance?

Denormalization improves read performance by eliminating joins, which can noticeably speed up common queries in high-traffic applications. However, it makes write operations more complex because updates must maintain consistency across multiple denormalized copies of the same data. This increases the risk of data inconsistencies and requires more application logic to keep everything in sync. Denormalization also uses more storage space since you're duplicating data across tables.

What are the best practices for deciding when to normalize or denormalize a database?

Start with proper normalization to Third Normal Form (3NF) to ensure data integrity, then denormalize selectively based on real performance problems rather than theoretical concerns. Measure your actual query patterns - if you're reading data 100 times more often than updating it, denormalization might make sense for those specific tables. Use hybrid approaches: keep your transactional database normalized for writes, then create denormalized views or separate analytical databases for reporting. Always profile performance before making changes, as proper indexing and query optimization often solve perceived normalization problems without schema changes.