
When I first encountered data modeling, it seemed like just another technical step in working with databases. But as I explored it further, I realized how essential it is for ensuring that data is well-structured, easily accessible, and ready for analysis. Without a solid data model, even the most powerful database can become difficult to manage, leading to inefficiencies and inconsistencies.

Whether you're designing a database from scratch or refining an existing system, understanding data modeling is key to making data work for you.

In this post, we’ll explore fundamental data modeling techniques, best practices, and real-world examples to help you build effective models!

What is Data Modeling?

Data modeling is a detailed process that involves creating a visual representation of data and its relationships. It serves as a blueprint for how data is structured, stored, and accessed to ensure consistency and clarity in data management.

Defining data elements and their relationships helps teams organize information to support efficient storage, retrieval, and analysis—improving both performance and decision-making.

Types of data models

There are three main types of data models. Let’s explore them in this section.

Conceptual data model

A conceptual model provides a high-level view of the data. This model defines key business entities (e.g., customers, products, and orders) and their relationships without getting into technical details.

Logical data model

The logical model defines how the data will be structured. This model focuses on data organization without being tied to any specific database or technology. It includes detailed information about the data's attributes, relationships, and constraints, thus offering a bridge between business requirements and the physical implementation of the data.

Physical data model

A physical data model represents how data is actually stored in a database. This model defines the specific table structures, indexes, and storage mechanisms required to optimize performance and ensure data integrity. It translates the logical design into a format suitable for database systems.
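Here is a minimal sketch of what the physical layer adds. It uses Python's standard-library `sqlite3` module as a stand-in database, which is an assumption since the article does not name an engine. The `Customers` table and the `idx_customers_name` index are hypothetical. Column types, the primary key, and the secondary index are physical-level choices. SQLite's `EXPLAIN QUERY PLAN` lets you confirm that a lookup by name actually uses the index.

```python
import sqlite3


def build_physical_model() -> sqlite3.Connection:
    """Create an in-memory SQLite database with a hypothetical Customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE Customers (
            Customer_ID INTEGER PRIMARY KEY,   -- storage-level key
            Name        TEXT NOT NULL,
            Email       TEXT NOT NULL UNIQUE   -- UNIQUE also creates an index
        )
        """
    )
    # An explicit secondary index: a physical-level performance decision.
    conn.execute("CREATE INDEX idx_customers_name ON Customers (Name)")
    return conn


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple) -> list:
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # 'detail' is the last column


conn = build_physical_model()
conn.execute(
    "INSERT INTO Customers (Name, Email) VALUES (?, ?)",
    ("Alice Smith", "alice@example.com"),
)
plan = query_plan(
    conn, "SELECT Customer_ID FROM Customers WHERE Name = ?", ("Alice Smith",)
)
print(plan)
```

A logical model would only say that a customer has a name and an email. Deciding to index `Name` is a physical trade-off: lookups get faster at some cost in write speed and storage.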

Data Modeling Techniques

Data modeling is not a one-size-fits-all process. Different techniques suit different levels of data complexity and different project goals. In this section, we'll explore some of the most popular data modeling approaches.

Entity-relationship (ER) modeling

ER modeling is one of the most common techniques used to represent data. It’s concerned with defining three key elements:

- Entities (objects or things within the system).

- Relationships (how these entities interact with each other).

- Attributes (properties of the entities).

The ER model provides a clear, visual representation of how data is structured to help map the connections between different data points.

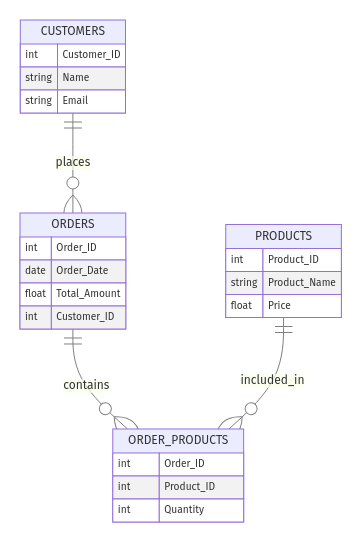

Example: E-commerce store

Consider an online store. You might have the following entities:

- Customers (with attributes like Customer_ID, Name, and Email)

- Orders (with Order_ID, Order_Date, Total_Amount)

- Products (with Product_ID, Product_Name, Price)

The relationships could be:

- "Customers place Orders" (One-to-Many)

- "Orders contain Products" (Many-to-Many)

Here’s what the ER diagram looks like:

Example ER model for e-commerce store. Image by Author
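One way to turn this ER model into tables is sketched below, again using Python's built-in `sqlite3` module as an illustrative target. The one-to-many relationship becomes a `Customer_ID` foreign key on `Orders`. The many-to-many relationship needs a junction table. The `Order_Items` table and its `Quantity` column are assumptions and do not appear in the diagram, and the sample rows are made up.

```python
import sqlite3

SCHEMA = """
CREATE TABLE Customers (
    Customer_ID INTEGER PRIMARY KEY,
    Name        TEXT NOT NULL,
    Email       TEXT NOT NULL
);
CREATE TABLE Orders (
    Order_ID     INTEGER PRIMARY KEY,
    -- One-to-many: each order points at exactly one customer.
    Customer_ID  INTEGER NOT NULL REFERENCES Customers (Customer_ID),
    Order_Date   TEXT NOT NULL,
    Total_Amount REAL NOT NULL
);
CREATE TABLE Products (
    Product_ID   INTEGER PRIMARY KEY,
    Product_Name TEXT NOT NULL,
    Price        REAL NOT NULL
);
-- Hypothetical junction table resolving "Orders contain Products" (many-to-many).
CREATE TABLE Order_Items (
    Order_ID   INTEGER NOT NULL REFERENCES Orders (Order_ID),
    Product_ID INTEGER NOT NULL REFERENCES Products (Product_ID),
    Quantity   INTEGER NOT NULL DEFAULT 1,
    PRIMARY KEY (Order_ID, Product_ID)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO Customers VALUES (1, 'Alice Smith', 'alice@example.com')")
conn.executemany(
    "INSERT INTO Products VALUES (?, ?, ?)",
    [(10, "Laptop", 1200.0), (11, "Mouse", 25.0)],
)
conn.execute("INSERT INTO Orders VALUES (100, 1, '2024-01-15', 1225.0)")
conn.executemany(
    "INSERT INTO Order_Items (Order_ID, Product_ID) VALUES (?, ?)",
    [(100, 10), (100, 11)],
)

# Walk the relationships: customer -> orders -> products.
rows = conn.execute(
    """
    SELECT c.Name, o.Order_ID, p.Product_Name
    FROM Customers c
    JOIN Orders o       ON o.Customer_ID = c.Customer_ID
    JOIN Order_Items oi ON oi.Order_ID = o.Order_ID
    JOIN Products p     ON p.Product_ID = oi.Product_ID
    ORDER BY p.Product_Name
    """
).fetchall()
print(rows)  # [('Alice Smith', 100, 'Laptop'), ('Alice Smith', 100, 'Mouse')]
```

A junction table is the usual way relational databases represent many-to-many relationships. Each row pairs one order with one product.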

Dimensional modeling

Dimensional modeling is widely used in data warehousing and analytics, where data is often represented in terms of facts and dimensions. This technique simplifies complex data by organizing it into a star or snowflake schema, which helps in efficient querying and reporting.

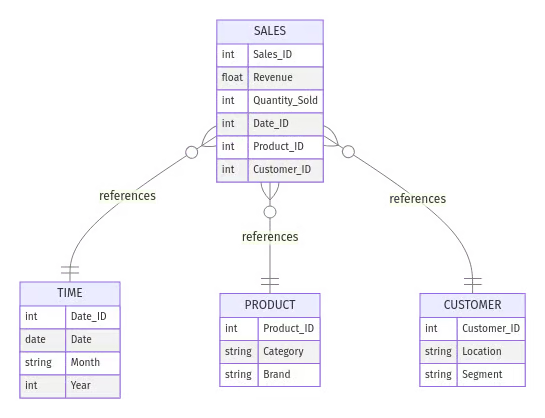

Example: Sales reporting

Imagine you need to analyze sales data. You would structure it as follows:

- Fact table: Sales (stores transactional data, e.g., Sales_ID, Revenue, Quantity_Sold)

- Dimension tables:

  - Time (e.g., Date, Month, Year)

  - Product (e.g., Product_ID, Category, Brand)

  - Customer (e.g., Customer_ID, Location, Segment)

In a star schema, the Sales fact table links directly to the dimension tables, allowing analysts to efficiently generate reports such as total revenue per month or top-selling products by category. Here’s what the schema looks like:

Example star schema for sales reporting. Image by Author
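Below is a minimal sketch of this star schema in Python's built-in `sqlite3`, with made-up sample rows. The `Dim_` and `Fact_` prefixes and the `Time_ID` surrogate key are naming assumptions and do not come from the diagram. The monthly revenue report needs only one join, from the fact table to the time dimension.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: descriptive context for each sale.
    CREATE TABLE Dim_Time     (Time_ID INTEGER PRIMARY KEY, Date TEXT, Month INTEGER, Year INTEGER);
    CREATE TABLE Dim_Product  (Product_ID INTEGER PRIMARY KEY, Category TEXT, Brand TEXT);
    CREATE TABLE Dim_Customer (Customer_ID INTEGER PRIMARY KEY, Location TEXT, Segment TEXT);

    -- Fact table: one row per sale, with a foreign key into every dimension.
    CREATE TABLE Fact_Sales (
        Sales_ID      INTEGER PRIMARY KEY,
        Time_ID       INTEGER REFERENCES Dim_Time (Time_ID),
        Product_ID    INTEGER REFERENCES Dim_Product (Product_ID),
        Customer_ID   INTEGER REFERENCES Dim_Customer (Customer_ID),
        Revenue       REAL,
        Quantity_Sold INTEGER
    );
    """
)
conn.executemany("INSERT INTO Dim_Time VALUES (?, ?, ?, ?)",
                 [(1, "2024-01-05", 1, 2024), (2, "2024-02-10", 2, 2024)])
conn.executemany("INSERT INTO Dim_Product VALUES (?, ?, ?)",
                 [(10, "Electronics", "BrandA"), (11, "Accessories", "BrandB")])
conn.execute("INSERT INTO Dim_Customer VALUES (100, 'New York', 'Retail')")
conn.executemany("INSERT INTO Fact_Sales VALUES (?, ?, ?, ?, ?, ?)",
                 [(1, 1, 10, 100, 1200.0, 1),
                  (2, 1, 11, 100, 50.0, 2),
                  (3, 2, 10, 100, 2400.0, 2)])

# Total revenue per month: join the fact table to a single dimension and aggregate.
monthly = conn.execute(
    """
    SELECT t.Year, t.Month, SUM(f.Revenue) AS Total_Revenue
    FROM Fact_Sales f
    JOIN Dim_Time t ON t.Time_ID = f.Time_ID
    GROUP BY t.Year, t.Month
    ORDER BY t.Year, t.Month
    """
).fetchall()
print(monthly)  # [(2024, 1, 1250.0), (2024, 2, 2400.0)]
```

The "top-selling products by category" report follows the same pattern, swapping `Dim_Time` for `Dim_Product` and grouping by `Category`.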

💡 Want to dive deeper into dimensional data modeling? Check out our Star Schema vs. Snowflake Schema guide to understand when to use each for optimal performance.

Object-oriented modeling

Object-oriented modeling is used to represent complex systems, where data and the functions that operate on it are encapsulated as objects. This technique is useful for modeling applications with complex, interrelated data and behaviors – especially in software engineering and programming.

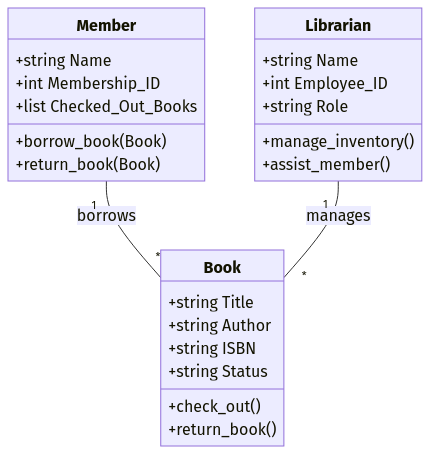

Example: Library management system

Suppose you're designing a library management system. You might define objects like:

- Book (Title, Author, ISBN, Status)

- Member (Name, Membership_ID, Checked_Out_Books)

- Librarian (Name, Employee_ID, Role)

Each object includes both attributes (data fields) and methods (functions). For example, a Book object might have a method .check_out() that updates the book’s status when borrowed.

This approach is particularly beneficial in object-oriented programming (OOP) languages like Java and Python, where data models can be directly mapped to classes and objects.

Example of the object-oriented data model. Image by Author
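Here is a minimal Python sketch of the library objects using `dataclasses`. Attribute names follow the list above, converted to Python's snake_case. The `borrow()` and `return_book()` methods, the status strings, and the placeholder book data are assumptions for illustration. `check_out()` shows the key idea: the method that changes a book's status lives on the same object as the status itself.

```python
from dataclasses import dataclass, field


@dataclass
class Book:
    title: str
    author: str
    isbn: str
    status: str = "available"  # assumed values: "available" or "checked_out"

    def check_out(self) -> None:
        """Mark the book as borrowed, refusing if it is already out."""
        if self.status != "available":
            raise ValueError(f"{self.title!r} is already checked out")
        self.status = "checked_out"

    def return_book(self) -> None:
        """Hypothetical counterpart to check_out()."""
        self.status = "available"


@dataclass
class Member:
    name: str
    membership_id: str
    checked_out_books: list = field(default_factory=list)

    def borrow(self, book: Book) -> None:
        """Update both objects together so their state stays consistent."""
        book.check_out()
        self.checked_out_books.append(book)


@dataclass
class Librarian:
    name: str
    employee_id: str
    role: str


book = Book("Example Title", "Jane Doe", "0000000000")  # placeholder data
member = Member("Alice Smith", "M-001")
member.borrow(book)
print(book.status, len(member.checked_out_books))  # checked_out 1
```

A second `borrow()` call on the same book raises `ValueError`, so the rule "a book can only be out once" is enforced by the object rather than by every caller.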

💡 Want to learn how to implement object-oriented programming in Python? Check out DataCamp’s Object-Oriented Programming in Python course to master and apply OOP concepts in real-world projects.

NoSQL and document-based modeling

NoSQL and document-based modeling techniques are designed for flexible, schema-less databases.

This technique is often used when data structures are less rigid or evolve over time. These models allow storing and managing unstructured or semi-structured data, such as JSON documents, without predefined schemas.

In NoSQL databases like MongoDB, a document-based model organizes data into collections of documents, where each document can have a unique structure. This flexibility allows for faster iteration and scaling, particularly in big data environments or applications requiring high-speed data access.

Example: Storing user profiles in MongoDB

In a relational database, user profiles might be stored across multiple tables. But in a NoSQL document-based model like MongoDB, a user's data can be stored in a single JSON-like document:

```json
{
  "user_id": 123,
  "name": "Alice Smith",
  "email": "alice@example.com",
  "address": {
    "street": "123 Main St",
    "city": "New York",
    "zip": "10001"
  },
  "purchases": [
    { "product_id": 101, "price": 19.99 },
    { "product_id": 202, "price": 49.99 }
  ]
}
```

Matching data models with data modeling techniques

Each data modeling technique aligns with different stages of database design, from high-level planning to physical implementation. Here’s how they connect with the types we saw earlier in the article:

- Conceptual data model → Entity-relationship (ER) modeling

  - Defines high-level business entities and relationships without technical details.

  - Example: An ER diagram showing how Customers, Orders, and Products relate.

- Logical data model → ER, dimensional, and object-oriented modeling

  - Specifies data structure, attributes, and constraints without focusing on storage.

  - Example: A star schema outlining a Sales fact table with dimension tables like Time, Product, and Customer.

- Physical data model → Dimensional, object-oriented, and NoSQL modeling

  - Represents how data is physically stored and optimized in a database.

  - Example: A MongoDB document storing user profiles as flexible JSON objects or an optimized relational schema in PostgreSQL.


Best Practices for Data Modeling

Building an effective data model isn’t just about choosing the right approach—it’s about following best practices that keep your model scalable, efficient, and aligned with business needs. A well-designed model helps prevent common issues like redundancy, performance bottlenecks, and difficulty adapting to future changes.

Below, we’ll cover key best practices to help you create data models that support long-term usability and performance.

Normalize early, denormalize when necessary

Normalization is a foundational concept in data modeling. It structures data into smaller, logically related tables so that redundancy is minimized and each fact is stored in only one place. This reduces the chance of update, insertion, and deletion anomalies, making the data easier to manage and keep consistent.

Example: Normalizing a customer database

Instead of storing customer and order details in one large table:

| Order_ID | Customer_Name | Customer_Email | Product | Price |
|---|---|---|---|---|
| 101 | Alice Smith | alice@email.com | Laptop | 1200 |
| 102 | Alice Smith | alice@email.com | Mouse | 25 |

You normalize by separating customers and orders into two related tables:

- Customers (Customer_ID, Name, Email)

- Orders (Order_ID, Customer_ID, Product, Price)

This avoids redundant customer data, making updates easier.
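To see why this helps, here is a minimal sketch with Python's built-in `sqlite3`. It reuses the sample rows from the table above and assumes Alice gets `Customer_ID` 1. After normalization, changing her email is a single-row update, and every order picks up the new value through a join. The new email address is made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Customers (
        Customer_ID INTEGER PRIMARY KEY,
        Name        TEXT NOT NULL,
        Email       TEXT NOT NULL
    );
    CREATE TABLE Orders (
        Order_ID    INTEGER PRIMARY KEY,
        Customer_ID INTEGER NOT NULL REFERENCES Customers (Customer_ID),
        Product     TEXT NOT NULL,
        Price       REAL NOT NULL
    );
    """
)
conn.execute("INSERT INTO Customers VALUES (1, 'Alice Smith', 'alice@email.com')")
conn.executemany("INSERT INTO Orders VALUES (?, ?, ?, ?)",
                 [(101, 1, "Laptop", 1200), (102, 1, "Mouse", 25)])

# The email is stored once, so changing it touches exactly one row...
conn.execute("UPDATE Customers SET Email = 'alice.smith@email.com' WHERE Customer_ID = 1")

# ...and every order sees the new value through the join.
emails = conn.execute(
    """
    SELECT o.Order_ID, c.Email
    FROM Orders o JOIN Customers c ON c.Customer_ID = o.Customer_ID
    ORDER BY o.Order_ID
    """
).fetchall()
print(emails)  # [(101, 'alice.smith@email.com'), (102, 'alice.smith@email.com')]
```

In the original single-table design, the same change would have to be applied to every order row, and missing one would leave the data inconsistent.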

However, for reporting and analytics, denormalization may be necessary to optimize query performance. Instead of performing multiple joins across tables, a pre-aggregated denormalized table can speed up queries.
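As a rough illustration, the sketch below uses Python's `sqlite3` and a hypothetical `Customer_Sales_Report` table. It materializes the join and the totals once with `CREATE TABLE ... AS SELECT`, so a report query reads one flat table instead of joining at query time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Customers (Customer_ID INTEGER PRIMARY KEY, Name TEXT, Email TEXT);
    CREATE TABLE Orders (Order_ID INTEGER PRIMARY KEY, Customer_ID INTEGER, Product TEXT, Price REAL);
    INSERT INTO Customers VALUES (1, 'Alice Smith', 'alice@email.com');
    INSERT INTO Orders VALUES (101, 1, 'Laptop', 1200), (102, 1, 'Mouse', 25);

    -- Denormalized, pre-aggregated reporting table: the join and SUM run once here.
    CREATE TABLE Customer_Sales_Report AS
    SELECT c.Customer_ID, c.Name,
           COUNT(o.Order_ID) AS Order_Count,
           SUM(o.Price)      AS Total_Spent
    FROM Customers c
    JOIN Orders o ON o.Customer_ID = c.Customer_ID
    GROUP BY c.Customer_ID, c.Name;
    """
)

# Report queries now read a single table with no joins.
report = conn.execute(
    "SELECT Name, Order_Count, Total_Spent FROM Customer_Sales_Report"
).fetchall()
print(report)  # [('Alice Smith', 2, 1225.0)]
```

The trade-off is staleness. The report table does not change when `Orders` changes, so it has to be rebuilt or refreshed on a schedule.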

Knowing when to apply each technique is important!

💡Check out our in-depth tutorial on Normalization in SQL to understand how normalization improves data integrity and optimizes performance.

Future-proofing your data model

As businesses evolve, so do their data requirements. Designing a future-proof data model means creating one that’s flexible and scalable, ready to handle new data sources and changing demands.

Considering potential growth and future technological advancements up front helps you anticipate costly rework and avoid it. Building for scalability, modularity, and maintainability from the outset ensures the model can adapt to changing requirements and continue delivering value over time.

Example: Planning for scalability in an e-commerce database

Imagine you’re designing a database for an online store. Initially, you track only domestic orders, but later you expand globally. If your schema supports only a single currency, you'll need major modifications.

- Instead of a simple Price column, include Currency_Code and Exchange_Rate fields.

- Use a flexible schema that allows new attributes without restructuring the database.
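Here is a minimal sketch of the multi-currency idea using Python's `sqlite3` and `decimal` modules. The sketch stores prices as text and converts them with `Decimal`, which is one way to avoid floating-point rounding in SQLite. That storage choice, the per-order exchange-rate snapshot, and the rate value itself are illustrative assumptions, not real market data.

```python
import sqlite3
from decimal import Decimal

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE Orders (
        Order_ID      INTEGER PRIMARY KEY,
        Price         TEXT NOT NULL,  -- amount in the order's own currency, kept exact as text
        Currency_Code TEXT NOT NULL,  -- ISO 4217 code such as 'USD' or 'EUR'
        Exchange_Rate TEXT NOT NULL   -- assumed: rate to the base currency at order time
    )
    """
)
conn.executemany(
    "INSERT INTO Orders VALUES (?, ?, ?, ?)",
    [(1, "100.00", "USD", "1"), (2, "50.00", "EUR", "1.10")],  # illustrative rate only
)


def total_in_base_currency(conn: sqlite3.Connection) -> Decimal:
    """Sum all orders after converting each price with its stored exchange rate."""
    total = Decimal("0")
    for price, rate in conn.execute("SELECT Price, Exchange_Rate FROM Orders"):
        total += Decimal(price) * Decimal(rate)
    return total


print(total_in_base_currency(conn))  # 155.0000
```

Storing the rate on each order keeps historical totals reproducible even after rates change. The alternative is a separate rates table keyed by date.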

Other ways to future-proof your model:

- Use UUIDs instead of auto-incremented IDs so that records can be created independently across distributed systems without ID collisions.

- Consider schema evolution in NoSQL databases, where documents can have optional fields that change over time.
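The sketch below uses plain Python dictionaries as a stand-in for a document collection such as MongoDB, with no database driver involved. The `loyalty_tier` field is a hypothetical attribute added in a later version of the application, so reading code must tolerate documents that lack it. IDs come from `uuid.uuid4()`, which any service can generate locally without a central counter.

```python
import uuid

# Documents written by two versions of an application share one collection.
# "loyalty_tier" was added later (hypothetical), so older documents lack it.
users = [
    {"_id": str(uuid.uuid4()), "name": "Alice Smith", "email": "alice@example.com"},
    {"_id": str(uuid.uuid4()), "name": "Bob Jones", "email": "bob@example.com",
     "loyalty_tier": "gold"},
]


def loyalty_tier(doc: dict) -> str:
    """Read an optional field with a default instead of assuming it exists."""
    return doc.get("loyalty_tier", "standard")


print([loyalty_tier(u) for u in users])  # ['standard', 'gold']
# uuid4 values are random and generated locally, so no coordination is needed.
print(len({u["_id"] for u in users}))  # 2
```

Defaulting on read lets old and new documents coexist without a migration. The cost is that every reader has to agree on what the default means.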

Ensure data quality and consistency

A data model is only as good as the data it houses. Implementing strong data governance and validation techniques is crucial to maintaining data quality and consistency across the model.

Data validation ensures that each field receives the right type of data and adheres to specific rules, which reduces errors and makes business insights more reliable. This preserves the integrity of the data model, so decision-makers can trust the information it provides.

Example: Using data validation for product listings

Inconsistent product descriptions in an online store can cause reporting errors. To prevent this:

- Use CHECK constraints to enforce allowed values (e.g., Stock_Quantity >= 0).

- Implement foreign keys to ensure referential integrity.

- Automate data validation with tools to catch inconsistencies before they impact reporting.
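Here is a minimal sketch of the first two safeguards using Python's built-in `sqlite3`. The `Categories` table and the sample products are hypothetical. SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`. Both bad inserts are rejected with `sqlite3.IntegrityError`, although the exact message text varies by SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(
    """
    CREATE TABLE Categories (
        Category_ID INTEGER PRIMARY KEY,
        Name        TEXT NOT NULL UNIQUE
    );
    CREATE TABLE Products (
        Product_ID     INTEGER PRIMARY KEY,
        Product_Name   TEXT NOT NULL,
        Category_ID    INTEGER NOT NULL REFERENCES Categories (Category_ID),
        Stock_Quantity INTEGER NOT NULL CHECK (Stock_Quantity >= 0)
    );
    """
)
conn.execute("INSERT INTO Categories VALUES (1, 'Electronics')")
conn.execute("INSERT INTO Products VALUES (1, 'Laptop', 1, 5)")  # a valid row


def try_insert(row: tuple) -> str:
    """Attempt an insert and report whether the database accepted it."""
    try:
        conn.execute("INSERT INTO Products VALUES (?, ?, ?, ?)", row)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


print(try_insert((2, "Mouse", 1, -3)))   # violates CHECK (Stock_Quantity >= 0)
print(try_insert((3, "Cable", 99, 10)))  # violates the foreign key: no category 99
```

Because the rules live in the schema, every application that writes to the table gets the same protection. Tool-based validation then catches problems the schema cannot express.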

💡Check out my Great Expectations tutorial to learn how to automate data validation using Python and ensure consistency in your data pipelines.

Focus on business requirements

A well-designed data model should directly support business goals. Before creating the schema, work closely with stakeholders to define:

- What insights do they need?

- How will the data be accessed and updated?

- What performance trade-offs are acceptable?

Example: Optimizing a retail dashboard for faster insights

A retail company wants to track sales trends in real time. Instead of using a fully normalized schema with multiple joins, you might design a denormalized reporting table that pre-aggregates daily sales, making dashboard queries significantly faster.

Business-aligned data model:

- Real-time data is stored in an OLTP (Online Transaction Processing) system for fast transactions.

- Aggregated sales reports are stored in an OLAP (Online Analytical Processing) database for analysis.
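Here is a rough sketch of this split. Two in-memory SQLite databases stand in for the OLTP system and the OLAP store, which is purely an assumption for illustration since real deployments typically use separate, purpose-built engines. The hypothetical `refresh_daily_sales()` function plays the role of a batch ETL job. It aggregates raw transactions per day and upserts the totals, so dashboard queries read a handful of pre-aggregated rows.

```python
import sqlite3

# Two separate in-memory databases stand in for the OLTP and OLAP systems.
oltp = sqlite3.connect(":memory:")
olap = sqlite3.connect(":memory:")

oltp.execute("CREATE TABLE Transactions (Txn_ID INTEGER PRIMARY KEY, Sale_Date TEXT, Amount REAL)")
oltp.executemany(
    "INSERT INTO Transactions (Sale_Date, Amount) VALUES (?, ?)",
    [("2024-03-01", 20.0), ("2024-03-01", 30.0), ("2024-03-02", 15.0)],  # illustrative rows
)

olap.execute(
    "CREATE TABLE Daily_Sales (Sale_Date TEXT PRIMARY KEY, Total_Amount REAL, Txn_Count INTEGER)"
)


def refresh_daily_sales() -> None:
    """Aggregate OLTP transactions per day and upsert the totals into the OLAP store."""
    rows = oltp.execute(
        "SELECT Sale_Date, SUM(Amount), COUNT(*) FROM Transactions GROUP BY Sale_Date"
    ).fetchall()
    with olap:  # commit the whole batch as one transaction
        olap.executemany("INSERT OR REPLACE INTO Daily_Sales VALUES (?, ?, ?)", rows)


refresh_daily_sales()
# The dashboard reads small pre-aggregated rows: no joins or GROUP BY at query time.
print(olap.execute("SELECT * FROM Daily_Sales ORDER BY Sale_Date").fetchall())
# [('2024-03-01', 50.0, 2), ('2024-03-02', 15.0, 1)]
```

Running the refresh more often brings the dashboard closer to real time. Each run then costs more load on the transactional system.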

💡Explore our OLTP vs. OLAP guide to understand when to optimize for transactions vs. reporting.

By aligning the data model with business needs, you avoid unnecessary complexity and ensure stakeholders get the insights they need without performance issues.

Conclusion

Understanding and implementing the right data modeling techniques is essential if you’re looking to structure, manage, and optimize data effectively. As we’ve explored, the choice of modeling approach depends on business goals, data complexity, and system requirements.

To deepen your understanding of data modeling, data warehousing, and database design, I recommend exploring these resources:

- The Data Warehouse Toolkit – A foundational guide to dimensional modeling by Ralph Kimball, covering best practices for designing data warehouses.

- Introduction to Data Modeling in Snowflake – Learn how to design scalable data models in Snowflake.

- Data Warehousing Concepts – Understand core data warehousing principles, including dimensional modeling and ETL processes.

- Database Design – Master database structuring techniques to ensure efficiency, consistency, and scalability.
