Running large language models comes with real costs. You pay for every token processed, every GPU cycle used, and every layer of complexity added to a model. Even though prices have dropped, the bill still scales fast when you’re working with large applications, long prompts, or frequent updates.

I’ve seen how quickly this becomes a problem. Teams assume costs will stay manageable, only to realize they’re burning budget on oversized models, inefficient prompts, or idle hardware.

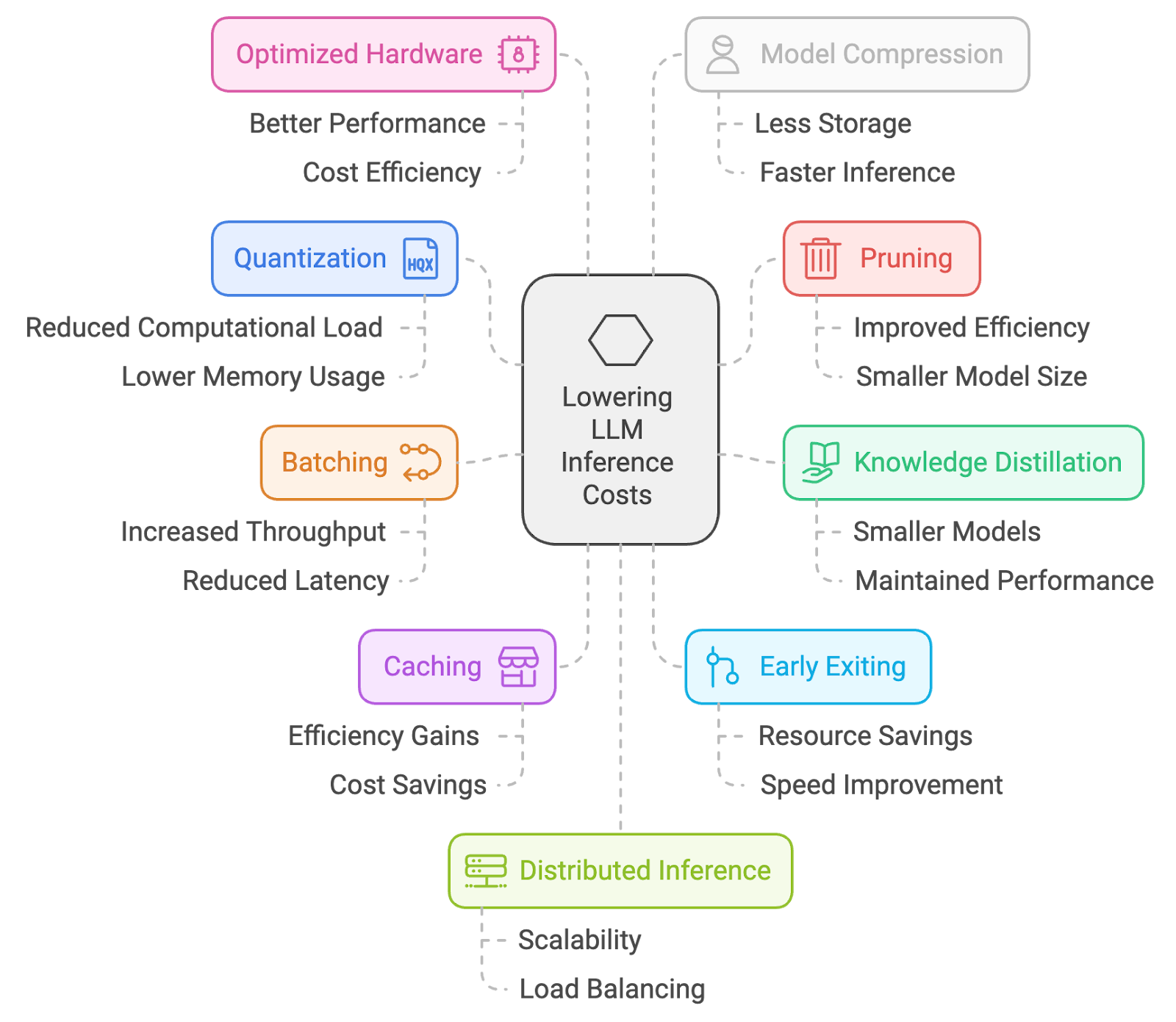

That’s why I’ve pulled together 10 practical ways to lower inference costs. From quantization and pruning to batching, caching, and prompt engineering, these are approaches I actually use to keep LLMs affordable without trading off too much performance.

1. Quantization

Quantization reduces the precision of model weights and activations, typically from 32-bit floating point numbers to lower-bit representations (e.g., 16-bit floats, 8-bit integers, or even 4-bit formats). This shrinks the memory footprint and the compute needed per token, enabling faster inference on resource-constrained devices.

How it's done:

- Post-training quantization (PTQ): Convert pre-trained model weights to lower precision (e.g., 8-bit integers) without retraining. This is relatively simple but may lead to some accuracy loss.

- Quantization-aware training (QAT): Fine-tune the model with quantization techniques during training, resulting in better accuracy preservation.

How it helps:

- Smaller model size: This translates to faster loading times and less memory usage, which can significantly reduce costs, especially in cloud environments where you pay for resources used.

- Faster inference: Lower-precision arithmetic is cheaper, and smaller weights mean less data has to move through memory, so responses come back faster on hardware that supports the reduced precision.

The main trade-off is a potential accuracy loss. While modern quantization techniques are quite good, there's always a chance of a slight drop in model accuracy.
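To make the post-training idea and its accuracy cost concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. The function names and the example weights are made up for illustration; real toolkits typically quantize per channel or per group and run on tensors, not lists.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Float value represented by one integer step (assumption: one scale per tensor).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.12, -0.53, 0.98, -1.27, 0.004]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print("largest round-trip error:", max_error)
```

The round-trip error is bounded by half a quantization step, which is why a single large outlier weight (it inflates the step size) tends to hurt accuracy for every other weight in the tensor.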

2. Pruning

Pruning is a technique of removing less important or redundant weights from a neural network. By eliminating connections that have minimal impact on the model's performance, pruning reduces the model's size and computational complexity, leading to faster inference.

How it's done:

- Unstructured pruning: Removes individual weights based on their magnitude or importance.

- Structured pruning: Removes entire structures such as channels, filters, or attention heads, leading to more regular model shapes that can be efficiently executed on hardware.

How it helps:

- Smaller model size: Similar to quantization, pruning results in a smaller, faster-to-load model.

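- Reduced computations: Fewer connections mean fewer calculations during inference, leading to faster response times. With unstructured pruning, this speedup only materializes when the runtime or hardware can actually skip the zeroed weights.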

Similar to quantization, the main trade-off is a potential accuracy loss. Aggressive pruning can lead to a noticeable drop in performance. It's crucial to find the right balance.
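As a rough illustration of unstructured pruning, the sketch below zeroes out the smallest-magnitude fraction of a weight matrix. It is plain Python with made-up weights and a hypothetical `magnitude_prune` helper; it only shows the selection rule, not the fine-tuning that usually follows pruning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out roughly the smallest-magnitude `sparsity` fraction of weights."""
    magnitudes = sorted(abs(w) for row in weights for w in row)
    num_to_prune = int(len(magnitudes) * sparsity)
    if num_to_prune == 0:
        return [row[:] for row in weights]
    # Every weight at or below this magnitude is removed (ties may prune a few extra).
    threshold = magnitudes[num_to_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


weights = [[0.9, -0.05, 0.3],
           [0.01, -0.7, 0.2]]  # made-up example layer
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

Raising `sparsity` removes progressively larger weights, which is where the accuracy drop from aggressive pruning comes from.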

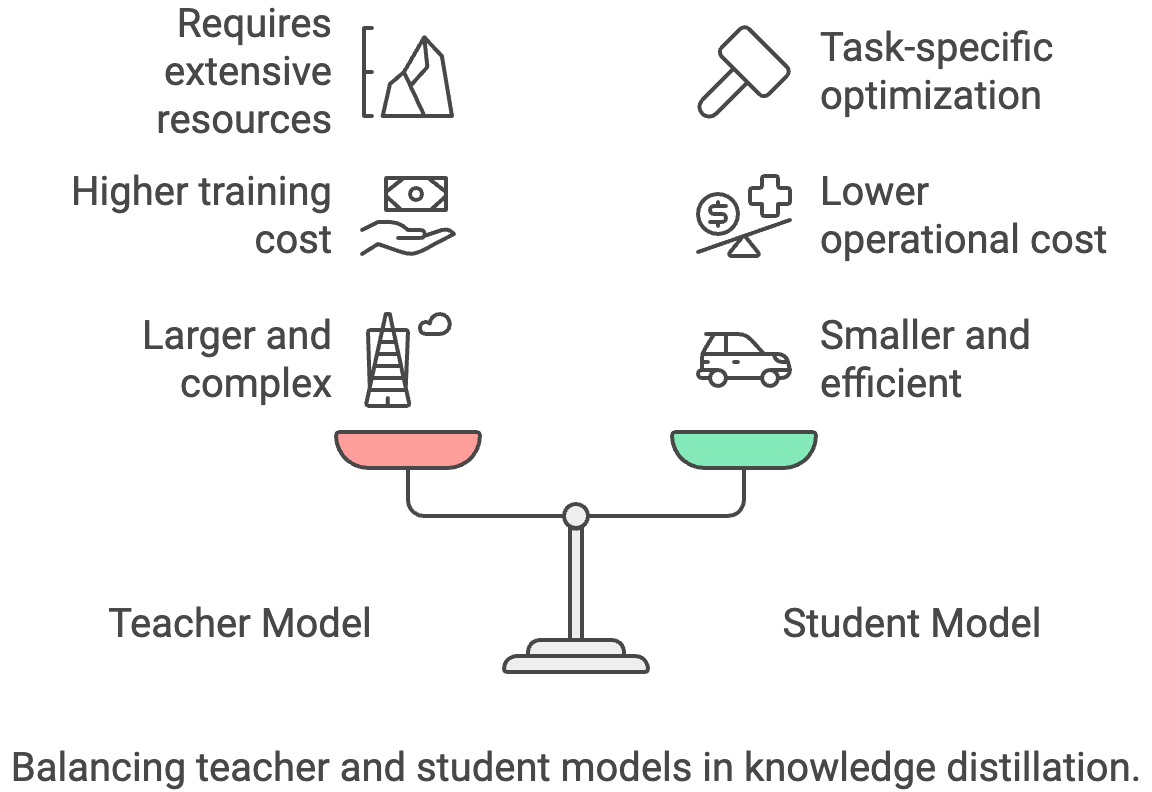

3. Knowledge Distillation

Knowledge distillation is a process of transferring knowledge from a large, complex "teacher" model to a smaller, more efficient "student" model. The student model learns to mimic the teacher's behavior, enabling it to achieve comparable performance with a smaller size and faster inference.

How it's done:

- Train a smaller student model: Use the larger teacher model's outputs (logits or soft labels) as additional training signals for the student model.

- Temperature scaling: Raise the softmax temperature during distillation to soften the teacher's output distribution, so the student also learns from the relative probabilities the teacher assigns to the non-top answers.

How it helps:

- Drastically smaller model: Student models can be significantly smaller and faster than their teachers, leading to major cost savings.

- Task-specific optimization: You can fine-tune the student model for a particular task, making it even more efficient.

The main trade-off is that you need access to a powerful teacher model, which can be expensive to train or use.
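The soft-label and temperature ideas above can be sketched as a loss function. This is a minimal plain-Python version of the commonly used distillation loss (KL divergence between temperature-softened teacher and student distributions, scaled by the square of the temperature); the logits are made up, and in practice this term is usually combined with the ordinary task loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)
    return kl * temperature ** 2


teacher_logits = [4.0, 1.0, 0.5]  # made-up teacher outputs for one example
student_logits = [2.0, 1.5, 0.5]  # made-up student outputs for the same example
loss = distillation_loss(student_logits, teacher_logits)
print("distillation loss:", loss)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge.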

4. Batching

Batching involves processing multiple input samples simultaneously in a batch during inference. This improves efficiency by using the parallel processing capabilities of the hardware, leading to faster overall inference.

How it's done:

- Accumulate requests: Collect multiple inference requests and process them together as a batch.

- Dynamic batching: Adjust batch size dynamically based on incoming request rates to balance latency and throughput.

How it helps:

- Improved throughput: Batching can significantly increase the number of requests processed per second, making your application more efficient.

- Better hardware utilization: GPUs are designed for parallel processing, and batching helps you take full advantage of their capabilities.

However, batching can introduce extra latency for individual requests, since the system may wait to accumulate enough inputs before processing. For real-time or low-latency applications, this added delay can degrade user experience if not carefully tuned. Dynamic batching helps mitigate the issue but adds complexity to the system. There’s also the risk of inefficient batching when traffic is uneven, which can reduce the expected cost savings.
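The latency versus throughput tension is easier to see in a small simulation. The sketch below groups request arrival times into batches using two hypothetical knobs, `max_batch_size` and `max_wait`; it is a simplified, offline model (a real server would use a timer rather than waiting for the next arrival to close a batch).

```python
def form_batches(arrivals, max_batch_size, max_wait):
    """Group sorted arrival times (seconds) into batches.

    A batch is closed when it is full, or when the next request arrives
    more than `max_wait` seconds after the first request in the batch.
    """
    batches, current = [], []
    for t in arrivals:
        if current and (len(current) >= max_batch_size or t - current[0] > max_wait):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches


# Made-up traffic: a burst of four requests, then sparse arrivals.
arrivals = [0.00, 0.01, 0.02, 0.03, 0.20, 0.21, 0.50]
batches = form_batches(arrivals, max_batch_size=3, max_wait=0.05)
for batch in batches:
    print(len(batch), batch)
```

With uneven traffic like this, only the burst fills a batch; the later requests end up in small batches, which is the inefficiency described above.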

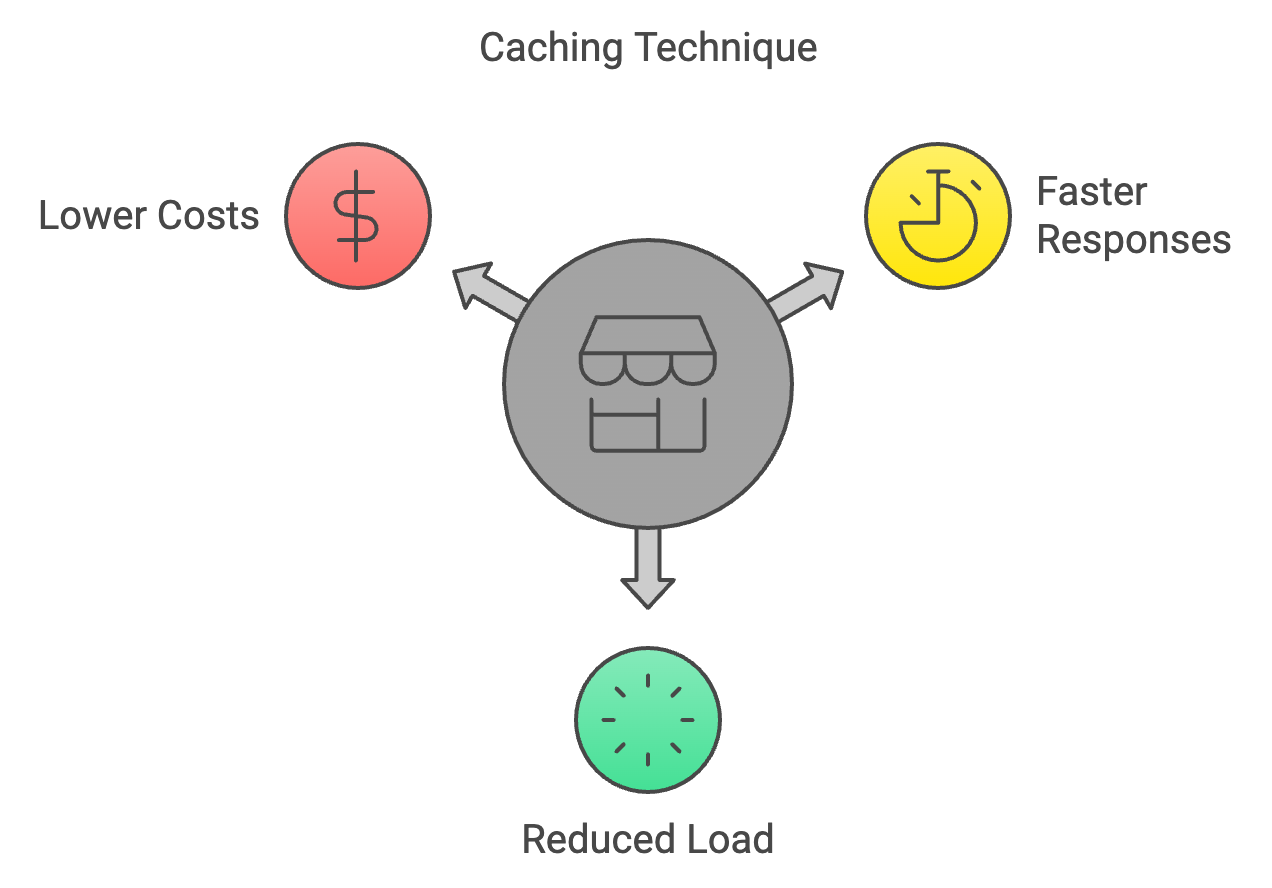

5. Caching

Caching is a technique of storing the results of previous computations and reusing them when encountering the same inputs again during inference. This avoids redundant calculations, speeding up the inference process.

How it's done:

- Store past computations: Maintain a cache of previous inputs and their corresponding outputs.

- Cache eviction policies: Implement strategies to remove less frequently used items from the cache when it reaches capacity.

How it helps:

- Faster responses for repeated requests: Caching is incredibly effective for applications where the same or similar requests are made frequently.

- Reduced computational load: Fewer computations mean lower costs.

However, caching is only useful when inputs repeat or overlap. In highly variable workloads, hit rates may be low, and the overhead of maintaining a cache can outweigh the benefits. Stale or outdated results can also create consistency issues if cached outputs no longer reflect the most relevant data. In addition, implementing and tuning cache eviction strategies adds engineering complexity, especially in distributed systems where cache synchronization becomes a challenge.
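Here is a minimal sketch of an exact-match response cache with an eviction policy. It uses least-recently-used (LRU) eviction, which is a common choice but not the only one, and `fake_llm` is a stand-in for a real model call. Note that it only catches repeated prompts after simple normalization; matching merely similar prompts would need something like embedding-based lookup.

```python
from collections import OrderedDict


class LRUResponseCache:
    """Exact-match prompt cache with least-recently-used eviction."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, prompt, compute):
        # Normalize case and whitespace so trivial variations share one entry.
        key = " ".join(prompt.lower().split())
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = compute(prompt)
        self._store[key] = result
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result


calls = []


def fake_llm(prompt):
    """Stand-in for an expensive model call; records how often it runs."""
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = LRUResponseCache(capacity=2)
cache.get_or_compute("What is quantization?", fake_llm)
cache.get_or_compute("what is   quantization?", fake_llm)  # same key after normalization
cache.get_or_compute("What is pruning?", fake_llm)
cache.get_or_compute("What is batching?", fake_llm)  # pushes the cache past capacity
print("hits:", cache.hits, "misses:", cache.misses, "model calls:", len(calls))
```

Tracking hits and misses like this is a cheap way to check whether the hit rate justifies the cache at all.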

6. Early Exiting

Early exiting means stopping the forward pass partway through the network when the model is already confident enough about its prediction. This saves computational resources and accelerates inference for simpler cases where a full forward pass is unnecessary.

How it's done:

- Confidence threshold: Set a threshold for the model's confidence in its prediction. If the confidence exceeds the threshold at an intermediate layer, stop computation and return the current output.

- Adaptive early exiting: Dynamically adjust the threshold based on the input or model layer to balance speed and accuracy.

How it helps:

- Faster inference for easy cases: If the model can confidently predict the output early on, it saves time and computation.

- Reduced costs: Fewer computations mean lower costs.

The trade-off is that early exiting might lead to slightly lower accuracy in some cases, especially for complex inputs.
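The confidence-threshold rule can be sketched as follows. This assumes a hypothetical model with a small classifier attached after each layer; for illustration, the per-layer probabilities are precomputed lists, whereas a real implementation would compute each layer only if the previous one was not confident enough.

```python
def early_exit_predict(layer_probs, threshold=0.9):
    """Return (predicted_class, layers_used) using a confidence threshold.

    `layer_probs` holds one class-probability list per layer, as produced by
    hypothetical intermediate classifiers.
    """
    if not layer_probs:
        raise ValueError("need at least one layer output")
    for depth, probs in enumerate(layer_probs, start=1):
        confidence = max(probs)
        if confidence >= threshold:
            break  # confident enough: skip the remaining layers
    # If no layer was confident, this falls through to the final layer's output.
    return probs.index(confidence), depth


# Made-up outputs for an easy and a hard input on a three-layer model.
easy_input = [[0.05, 0.92, 0.03], [0.02, 0.97, 0.01], [0.01, 0.98, 0.01]]
hard_input = [[0.40, 0.35, 0.25], [0.45, 0.40, 0.15], [0.30, 0.60, 0.10]]

print(early_exit_predict(easy_input))
print(early_exit_predict(hard_input))
```

Lowering the threshold saves more compute but lets more wrong early guesses through; in the hard example, an exit at the first layer would have returned a different class than the final layer does.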

7. Optimized Hardware

This technique involves utilizing specialized hardware architectures and accelerators designed for efficient AI computations. These include GPUs, TPUs, and other dedicated AI chips that offer significant performance improvements compared to general-purpose CPUs for inference tasks.

How it's done:

- GPUs: Take advantage of the parallel processing capabilities of GPUs for matrix operations and neural network computations.

- TPUs: Use specialized tensor processing units designed specifically for AI workloads.

- Other AI accelerators: Explore emerging hardware options like FPGAs or dedicated AI chips.

How it helps:

- Significantly faster inference: GPUs and specialized chips are much faster than CPUs for the kind of calculations LLMs need.

- Better energy efficiency: This translates to lower energy costs in the long run.

However, specialized hardware often requires significant upfront investment and may lock you into a particular vendor ecosystem. GPUs, TPUs, or custom accelerators can also be harder to provision consistently in the cloud, especially during peak demand. On-premise deployments bring maintenance and scaling challenges, while cloud options may carry higher ongoing rental costs. Finally, adapting software to fully exploit specialized hardware can add engineering complexity and require ongoing optimization.
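When comparing hardware options, a back-of-the-envelope cost per million tokens is a useful common unit. The sketch below is simple arithmetic under stated assumptions: the hourly price, throughput, and utilization figures are invented placeholders, not benchmarks of any real accelerator.

```python
def cost_per_million_tokens(hourly_price_usd, tokens_per_second, utilization=1.0):
    """Estimate the cost of generating one million tokens on rented hardware.

    `utilization` is the fraction of each hour the device spends doing useful work.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical accelerator: 2.00 USD per hour, 1,000 tokens per second at full load.
busy = cost_per_million_tokens(2.0, 1000, utilization=0.9)
idle = cost_per_million_tokens(2.0, 1000, utilization=0.3)
print(f"90% utilized: {busy:.2f} USD per million tokens")
print(f"30% utilized: {idle:.2f} USD per million tokens")
```

The same device is three times more expensive per token when it sits idle most of the time, so a faster chip only pays off if you can keep it busy.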

8. Model Compression

Model compression is the umbrella term for techniques that reduce the size and complexity of a model without significantly compromising its performance. It covers pruning, quantization, knowledge distillation, and other methods, often applied in combination, all aimed at creating a more compact and efficient model for faster inference.

How it's done:

- Combine multiple techniques: Apply a combination of quantization, pruning, knowledge distillation, and other methods to achieve optimal model size reduction.

- Tensor decomposition: Approximate large weight matrices as products of smaller, low-rank factors that take fewer parameters to store and multiply.

How it helps:

- Smaller model size: This translates to faster loading, less memory usage, and potentially lower costs.

Model compression can lead to accuracy degradation if techniques are applied too aggressively or without careful tuning. Combining methods like pruning, quantization, and distillation increases system complexity and may require additional retraining or fine-tuning cycles. Compressed models can also be less flexible for transfer to new tasks, since optimizations often narrow the range of scenarios where the model performs well. In some cases, engineering time and computational overhead spent on compression may offset short-term cost savings.
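For the tensor decomposition route, the saving is easy to estimate before doing any work. If a `rows x cols` weight matrix is approximated by the product of a `rows x rank` and a `rank x cols` matrix, the parameter counts compare as in this sketch (the layer size and rank are illustrative assumptions).

```python
def low_rank_param_counts(rows, cols, rank):
    """Parameters in a dense matrix versus its rank-`rank` factorization."""
    dense = rows * cols
    factored = rank * (rows + cols)
    return dense, factored


def break_even_rank(rows, cols):
    """Largest rank at which the factored form is still smaller than the dense one."""
    return (rows * cols - 1) // (rows + cols)


# Illustrative layer size and rank, not taken from any particular model.
dense, factored = low_rank_param_counts(4096, 4096, rank=256)
print("dense parameters:   ", dense)
print("factored parameters:", factored)
print("compression ratio:  ", dense / factored)
print("break-even rank:    ", break_even_rank(4096, 4096))
```

The count says nothing about accuracy: how low the rank can go before quality degrades depends on the layer and has to be measured.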

9. Distributed Inference

Distributed inference is an approach to dividing the inference workload across multiple machines or devices. This enables parallel processing of large-scale inference tasks, reducing latency and improving throughput.

How it's done:

- Model partitioning: Divide the model across multiple machines, each handling a portion of the computation.

- Load balancing: Distribute incoming requests across available machines to ensure efficient resource utilization.

How it helps:

- Handles larger models and higher traffic: Distributed inference lets you split large workloads across multiple machines, so you can serve models that don't fit on a single device and handle more requests simultaneously. Better hardware utilization and flexible scaling in the cloud can then lower the cost per request.

Distributed inference introduces significant system complexity, as coordinating computation across multiple machines requires robust orchestration, synchronization, and fault tolerance. Network latency and bandwidth constraints can offset the performance gains, especially when large amounts of intermediate data need to be exchanged. It also increases infrastructure costs, since additional hardware and load-balancing mechanisms must be provisioned and maintained. Debugging and monitoring distributed systems can be more difficult, making reliability a challenge compared to single-node setups.
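The two mechanisms above, model partitioning and load balancing, can each be sketched in a few lines. Both helpers below are hypothetical and deliberately simplified: the first splits layers into contiguous stages (as in pipeline-style partitioning), the second routes each request to the currently least-loaded replica.

```python
def partition_layers(num_layers, num_devices):
    """Split layer indices into contiguous (start, end) ranges, one per device."""
    base, extra = divmod(num_layers, num_devices)
    stages, start = [], 0
    for device in range(num_devices):
        size = base + (1 if device < extra else 0)  # spread the remainder evenly
        stages.append((start, start + size))
        start += size
    return stages


def route_requests(request_costs, num_workers):
    """Assign each request to the worker with the least accumulated load."""
    loads = [0] * num_workers
    assignments = []
    for cost in request_costs:
        worker = loads.index(min(loads))  # ties go to the lowest-numbered worker
        loads[worker] += cost
        assignments.append(worker)
    return assignments, loads


stages = partition_layers(num_layers=10, num_devices=3)
assignments, loads = route_requests([5, 3, 2, 4], num_workers=2)  # made-up request costs
print("layer ranges per device:", stages)
print("request -> worker:", assignments, "final loads:", loads)
```

Neither helper accounts for the network transfer between stages or replicas, which is exactly the overhead that can eat into the gains.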

10. Prompt Engineering

Prompt engineering is a process of carefully crafting input prompts to guide large language models (LLMs) towards generating desired outputs. By formulating clear, concise, and specific prompts, users can improve the quality and controllability of LLM responses, making them more relevant and useful for specific tasks.

How it's done:

- Be clear and concise: Use specific and unambiguous language in your prompts.

- Provide context: Include relevant background information or examples to guide the LLM.

- Experiment and iterate: Test different prompt formulations and evaluate the results to find the most effective ones.

How it helps:

- More relevant and concise outputs: Good prompt engineering can reduce the number of tokens the model needs to generate, leading to faster and potentially cheaper inference.

- Improved accuracy: Clear prompts can help the model avoid misunderstandings and generate more accurate responses.

Prompt engineering requires ongoing experimentation and iteration, which can be time-consuming and inconsistent across use cases. Well-crafted prompts may not generalize, forcing you to redesign them when tasks or models change. Improvements in prompt design can also be fragile—small wording changes or model updates can alter results unpredictably. Finally, relying too heavily on prompt engineering without complementary techniques like context management or fine-tuning may limit scalability and long-term cost savings.
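To see how token savings translate into money on a pay-per-token API, here is a small cost calculation. The prices and token counts are hypothetical placeholders; substitute your provider's actual rates and measured token usage.

```python
def request_cost(input_tokens, output_tokens, price_in_per_million, price_out_per_million):
    """Cost in USD of one request under per-token pricing."""
    return (input_tokens * price_in_per_million
            + output_tokens * price_out_per_million) / 1_000_000


# Hypothetical prices: 1.00 USD per million input tokens, 4.00 USD per million output tokens.
PRICE_IN, PRICE_OUT = 1.0, 4.0

verbose = request_cost(1200, 600, PRICE_IN, PRICE_OUT)  # long prompt, rambling answer
concise = request_cost(400, 150, PRICE_IN, PRICE_OUT)   # tight prompt, capped answer length
print(f"verbose: {verbose:.4f} USD, concise: {concise:.4f} USD")
print(f"savings per request: {1 - concise / verbose:.0%}")
```

Because output tokens are priced higher than input tokens in this example, asking for shorter answers saves more than trimming the prompt by the same number of tokens.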

Bonus: Context Engineering

Context engineering is the practice of designing systems that control what information an LLM sees before generating a response. Instead of cramming everything into the context window, you select and organize only the most relevant details—such as user history, retrieved documents, or tool outputs—so the model can reason more efficiently.

How it’s done:

- Context summarization: Compress accumulated conversation history or documents into shorter summaries to preserve meaning while reducing tokens.

- Tool loadout management: Use retrieval systems to select only the most relevant tools or descriptions instead of passing every available option.

- Context pruning: Remove outdated or redundant information as new data arrives, keeping the active context lean and useful.

- Context validation and quarantine: Detect and isolate hallucinations or errors before they poison long-term memory.

How it helps:

- Lower token usage: Shorter and more focused contexts reduce the number of tokens processed, directly cutting costs.

- Improved accuracy: Cleaner context means the model is less likely to be distracted or confused by irrelevant or conflicting details.

- Faster inference: With fewer tokens to process and a stable prompt prefix that lets the KV cache be reused across requests, responses complete more quickly, which saves compute resources.

The trade-off is that building effective context systems takes upfront engineering effort. You need retrieval, summarization, and validation pipelines in place, and poor design can cause context gaps that hurt performance. But when done well, context engineering makes large applications both more reliable and more affordable.
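As a small example of context pruning, the sketch below keeps only the most recent messages that fit a token budget. The whitespace-based token count is a crude assumption made for illustration; a real system would use the model's own tokenizer and would usually combine this with summarization of what gets dropped.

```python
def trim_context(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the newest messages whose combined token count fits the budget.

    `count_tokens` defaults to a whitespace word count, which is only a rough
    stand-in for a real tokenizer.
    """
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "user: my order number is 1234",
    "assistant: thanks, I found your order",
    "user: when will it arrive",
    "assistant: it ships tomorrow",
]
trimmed = trim_context(history, max_tokens=10)
print(trimmed)
```

Here the message containing the order number is the first to be dropped, which is the kind of context gap the trade-off above warns about; pinning key facts or summarizing older turns avoids it.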

Conclusion

Lowering LLM inference costs isn’t about a single trick. It’s about combining approaches that make sense for your workload. Techniques like quantization, pruning, and knowledge distillation cut down model size. Batching, caching, and distributed inference improve how requests are processed. Hardware choices and model compression push efficiency further, while prompt and context engineering reduce unnecessary token usage at the source.

The trade-offs are real: each method brings complexity, potential accuracy loss, or infrastructure overhead. But the gains add up. By applying even a few of these techniques, you can keep models affordable, scale usage without runaway costs, and build systems that remain sustainable as models and applications grow.

Senior GenAI Engineer and Content Creator who has garnered 20 million views by sharing knowledge on GenAI and data science.