
Learning through building projects is the best way to deepen your understanding and practical skills in the field of Large Language Models (LLMs) and AI. By working on projects, you will gain knowledge of new tools, techniques, and models that can optimize your applications and enable you to build highly accurate and fast AI applications.

Troubleshooting these projects will provide valuable experience, enhance your chances of securing your dream job, and enable you to earn additional income by monetizing your projects and creating a marketable product.

In this blog, we will explore LLM projects designed for beginners, intermediates, final-year students, and experts. Each project includes a guide and source material to study and replicate the results.

If you are new to LLMs, please complete the AI Fundamentals skill track before starting the projects below. It will give you actionable knowledge of popular AI topics like ChatGPT, large language models, generative AI, and more.


LLM Projects for Beginners

In the beginner projects, you will learn how to build AI workflows visually with Langflow, use the OpenAI API to generate responses, build AI assistants with multimodal capabilities, and serve your application as an API endpoint using FastAPI.

Prerequisites: A basic understanding of Python is necessary.

1. Building a language tutor with Langflow

Project link: Langflow: A Guide With Demo Project

For many beginners, the biggest barrier to building AI apps is writing complex Python code from scratch. Langflow changes this by letting you build powerful AI workflows using a visual, drag-and-drop interface that connects easily to standard databases.

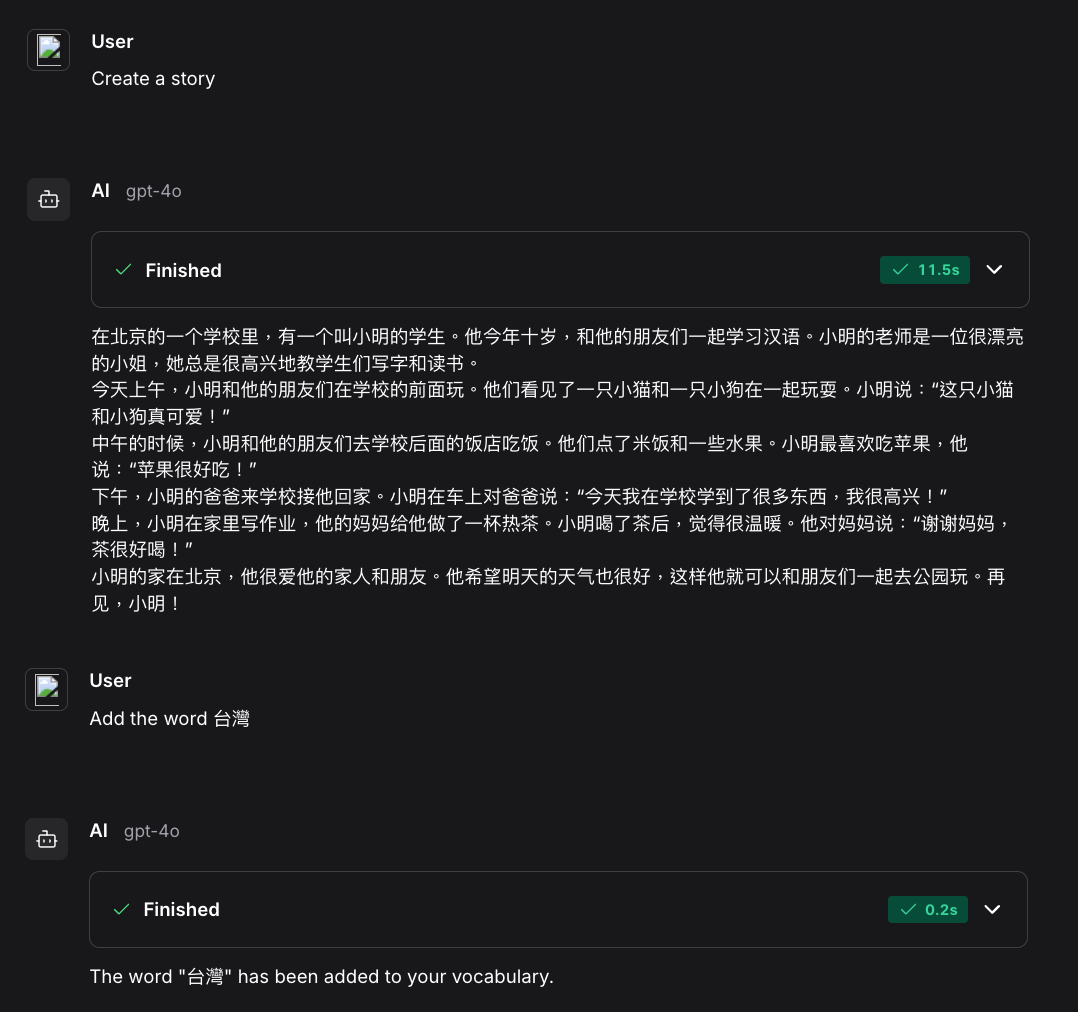

In this project, you will build a language tutor application without needing to be an expert coder. You will use Langflow to visually design an agent that generates reading passages based on a user's vocabulary list (stored in a Postgres database). This is an excellent introduction to "stateful" AI (apps that remember your data) while keeping the technical barrier low.

Source: Langflow: A Guide With Demo Project

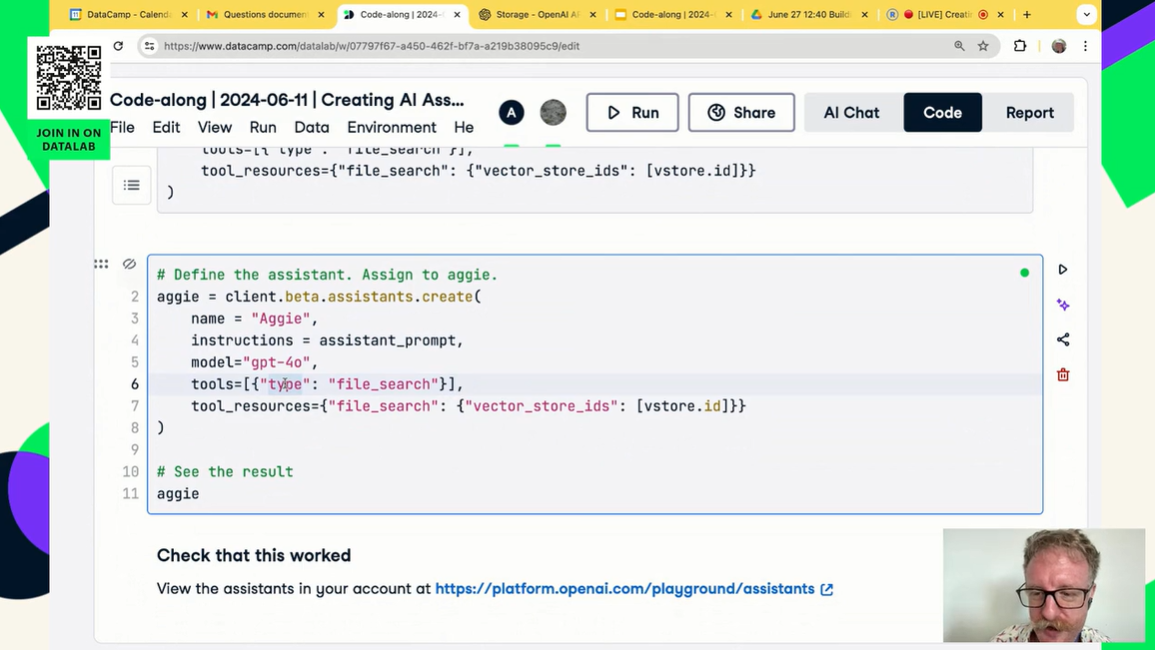

2. Building an AI Assistant for data science with multimodal capabilities

Project link: Creating AI Assistants with GPT-4o

In this project, you will create your own AI assistant similar to ChatGPT. You will use the OpenAI API and GPT-4o to build a specialized assistant for data science tasks, which includes providing custom instructions, handling files and data, and using multimodal capabilities.

The fun part is that the whole project only requires you to understand various functions of the OpenAI Python API. With a few lines of code, you can build your own automated AI that will process the data for you and present you with the analysis.
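To give a feel for how little code is involved, here is a minimal sketch using the Chat Completions endpoint of the OpenAI Python SDK (the tutorial itself may use a different part of the API, such as the Assistants API). The system message holds the custom instructions, and an image is attached as an `image_url` content part so GPT-4o can read it. The names `build_messages` and `ask` are illustrative, and the SDK is imported inside `ask` so the message builder can be tried without it.

```python
from typing import Optional


def build_messages(instructions: str, question: str, image_url: Optional[str] = None) -> list:
    """Assemble a Chat Completions message list with optional image input."""
    # The system message carries the assistant's custom instructions.
    messages = [{"role": "system", "content": instructions}]
    content = [{"type": "text", "text": question}]
    if image_url is not None:
        # GPT-4o accepts images as "image_url" content parts (a URL or base64 data URL).
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    messages.append({"role": "user", "content": content})
    return messages


def ask(messages: list, model: str = "gpt-4o") -> str:
    """Send the messages to the OpenAI API and return the reply text."""
    from openai import OpenAI  # imported here so build_messages() works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

For example, `ask(build_messages("You are a data science assistant.", "What trend does this chart show?", image_url="https://example.com/chart.png"))` would send a chart (the URL is a placeholder) together with the question.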

Source: Creating AI Assistants with GPT-4o

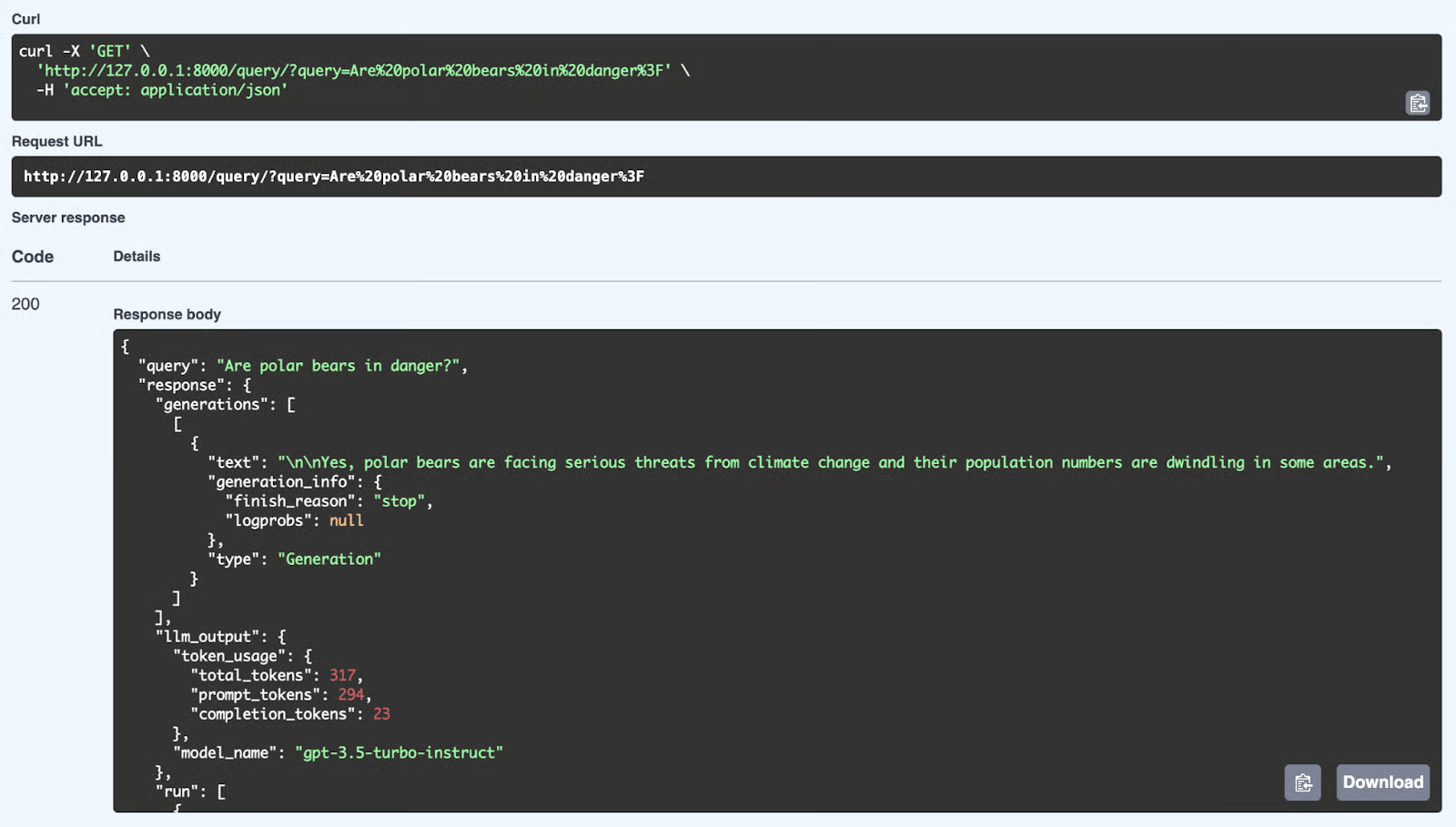

3. Serving an LLM Application as an API endpoint using FastAPI

Project link: Serving an LLM application as an API endpoint using FastAPI in Python

Once you have built an application with the OpenAI API, the next step is to serve it and deploy it to a server. This means wrapping it in a REST API that anyone can call to integrate your AI application into their own systems.

In this project, you will learn how to use FastAPI to build and serve AI applications. You will also learn how APIs work and how to test them locally.
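A minimal sketch of such a service, assuming FastAPI and pydantic are installed: `create_app` wires a single POST route to any function that maps a prompt to a reply, so you can plug in an OpenAI call or a local model. The `/generate` path, the `Query` model, and the helper names are hypothetical, not taken from the tutorial.

```python
from typing import Callable


def handle_query(payload: dict, llm: Callable[[str], str]) -> dict:
    """Validate a request body and return the model's answer as JSON-ready data."""
    prompt = str(payload.get("prompt", "")).strip()
    if not prompt:
        raise ValueError("'prompt' must be a non-empty string")
    return {"prompt": prompt, "response": llm(prompt)}


def create_app(llm: Callable[[str], str]):
    """Build a FastAPI app exposing POST /generate backed by the given LLM function."""
    # Imported here so handle_query() can be unit-tested without FastAPI installed.
    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel

    class Query(BaseModel):
        prompt: str

    app = FastAPI(title="LLM service")

    @app.post("/generate")
    def generate(query: Query) -> dict:
        try:
            return handle_query({"prompt": query.prompt}, llm)
        except ValueError as exc:
            # Report invalid input to the client instead of returning a 500 error.
            raise HTTPException(status_code=422, detail=str(exc)) from exc

    return app
```

Keeping the validation in `handle_query` lets you test it without starting a server. To run the service, you could write `app = create_app(my_llm_function)` in a module such as `main.py`, start it with `uvicorn main:app --reload`, and try the endpoint from FastAPI's interactive docs at `http://127.0.0.1:8000/docs`.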

Source: Serving an LLM application as an API endpoint using FastAPI in Python

Intermediate LLM Projects

In the intermediate LLM projects, you will learn to integrate various LLM APIs like Groq, OpenAI, and Cohere with frameworks such as LlamaIndex and LangChain. You will learn to build context-aware applications and connect multiple external sources to generate highly relevant and accurate responses.

Prerequisites: Experience with Python and an understanding of how AI agents work.

4. Building and serving a RAG system with FastAPI

Project link: Building a RAG System with LangChain and FastAPI: From Development to Production

Building a RAG script in a notebook is a great start, but real-world AI needs to be deployed. To make your application accessible to users or other software, you must wrap it in a high-performance web API.

In this project, you will bridge the gap between data science and web engineering. You will use LangChain to build the logic for retrieving document data and FastAPI to serve that logic as a REST endpoint. You will learn how to handle API requests, process documents, and ship a production-ready backend for your AI application.
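The core of any RAG backend is "retrieve, then prompt." The sketch below shows that pattern in plain Python, with a word-count cosine similarity standing in for the embedding model and vector store that LangChain would normally provide; it illustrates the idea and is not the tutorial's implementation. The prompt string it builds is what a FastAPI endpoint would pass to the LLM.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = _vectorize(question)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, context: list[str]) -> str:
    """Number the retrieved chunks and ask the LLM to answer only from them."""
    joined = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(context))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )
```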

Source: Building a RAG System with LangChain and FastAPI

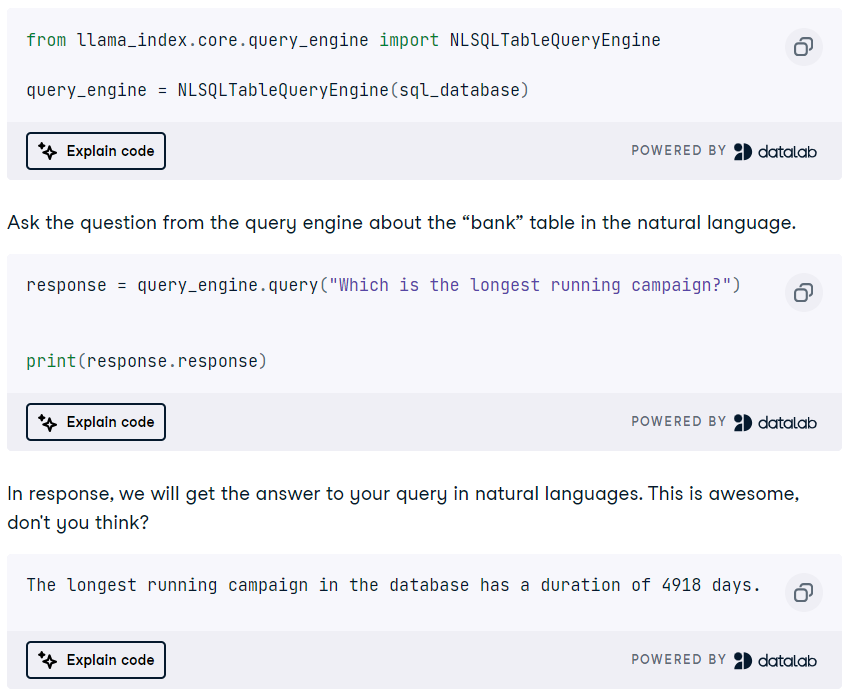

5. Building a DuckDB SQL query engine using an LLM

Project link: DuckDB Tutorial: Building AI Projects

DuckDB is a modern, high-performance, in-process analytical database management system (DBMS) that can run entirely in memory or persist data to a file. It can serve as both a vector store and a SQL query engine.

In this project, you will use the LlamaIndex framework to build a RAG application with DuckDB as the vector store. You will also build a DuckDB SQL query engine that converts natural language into SQL queries and turns the query results into context for the prompt. It is a fun project, and you will learn about many LlamaIndex functions and agents.
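The text-to-SQL half of the project comes down to three steps: prompt the LLM with the table schema, extract the SQL it returns, and run it only if it looks safe. The sketch below illustrates those steps with hypothetical helper names; in the tutorial, LlamaIndex's query engine handles this for you. `run_query` accepts any connection object with an `execute(...).fetchall()` method, such as one created with `duckdb.connect("sales.db")` (the file name is a placeholder).

```python
import re

FENCE = "`" * 3  # three backticks, spelled out so this snippet nests cleanly in Markdown
SQL_BLOCK = re.compile(FENCE + r"(?:sql)?\s*(.*?)" + FENCE, re.DOTALL | re.IGNORECASE)


def build_sql_prompt(schema: str, question: str) -> str:
    """Prompt asking the LLM for a single DuckDB query over the given schema."""
    return (
        "Translate the question into one DuckDB SQL query.\n"
        f"Schema:\n{schema}\n"
        "Return only the SQL.\n"
        f"Question: {question}"
    )


def extract_sql(llm_output: str) -> str:
    """Take the SQL from a fenced code block if present, else use the whole reply."""
    match = SQL_BLOCK.search(llm_output)
    sql = match.group(1) if match else llm_output
    return sql.strip().rstrip(";").strip()


def is_read_only(sql: str) -> bool:
    """Conservative guard: allow one SELECT/WITH statement and reject any semicolon."""
    words = sql.split()
    return bool(words) and words[0].upper() in {"SELECT", "WITH"} and ";" not in sql


def run_query(con, sql: str) -> list:
    """Execute a validated query on an open database connection."""
    if not is_read_only(sql):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql!r}")
    return con.execute(sql).fetchall()
```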

Source: DuckDB Tutorial: Building AI Projects

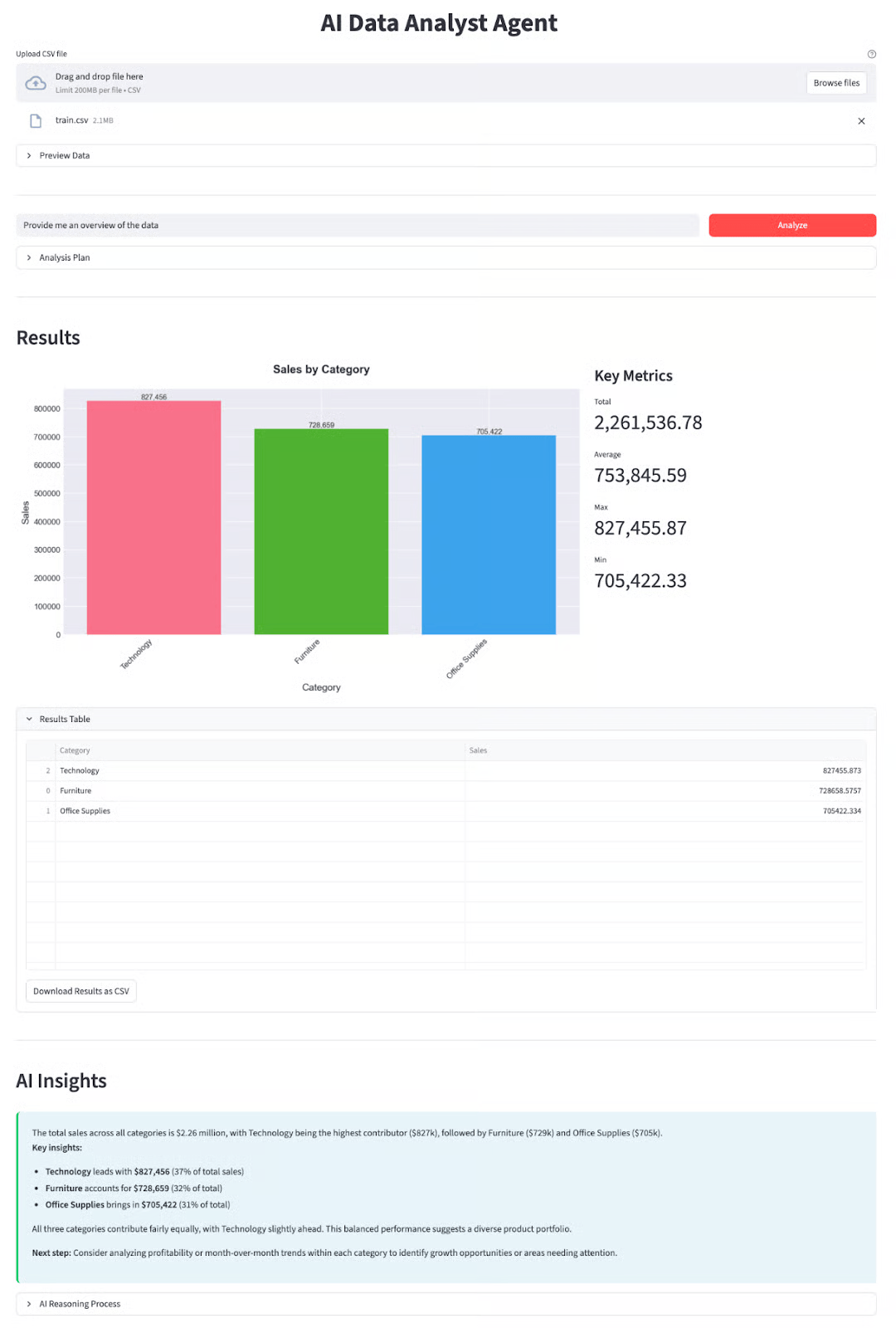

6. Building an autonomous data analyst with DeepSeek-V3.2

Project link: DeepSeek-V3.2-Speciale Tutorial: Build a Data Analyst Agent

DeepSeek’s models have become famous for their reasoning capabilities, making them ideal for complex tasks like data science. Moving beyond simple chats, this project focuses on "agentic" workflows where the AI actively uses tools to solve problems.

In this project, you will build a functional Data Analyst Agent that can ingest datasets, write and execute Python code, and generate insights automatically. You will learn how to leverage the DeepSeek-V3.2-Speciale model to orchestrate multi-step analysis tasks, a critical skill for the next generation of AI development.
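The agentic part rests on a simple loop: the model either requests a tool or gives a final answer, and each tool result is appended to the conversation before the next call. The sketch below uses a made-up reply format (`{"tool": ..., "args": ...}` or `{"answer": ...}`) and two toy tools so the loop can be tested offline. In a real agent, `chat` would wrap the model's API (DeepSeek offers an OpenAI-compatible endpoint) and use its native tool-calling format instead.

```python
import json
import statistics
from typing import Callable


# Hypothetical tools; each receives the dataset (column name -> values) plus keyword args.
def describe_columns(data: dict) -> str:
    return ", ".join(f"{name} ({len(values)} values)" for name, values in data.items())


def column_mean(data: dict, column: str) -> float:
    return statistics.fmean(data[column])


TOOLS = {"describe_columns": describe_columns, "column_mean": column_mean}


def run_agent(task: str, data: dict, chat: Callable[[list], dict], max_steps: int = 5) -> str:
    """Ask the model, run any tool it requests, feed the result back, and repeat."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = chat(messages)  # assumed format: {"tool": ..., "args": {...}} or {"answer": ...}
        if "answer" in reply:
            return reply["answer"]
        result = TOOLS[reply["tool"]](data, **reply.get("args", {}))
        # Record both the request and its result so the model sees the full history.
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "user", "content": f"Tool result: {json.dumps(result)}"})
    raise RuntimeError("agent did not finish within max_steps")
```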

Source: DeepSeek-V3.2-Speciale Tutorial: Build a Data Analyst Agent

LLM Projects for Final-Year Students

Final-year students are ready to build complete LLM applications, so most projects in this section require knowledge of fine-tuning, LLM architectures, multimodal applications, AI agents, and deploying applications on a server.

Prerequisites: A good understanding of Python, LLMs, AI agents, and model fine-tuning.

7. Building multimodal AI applications with LangChain & the OpenAI API

Project link: Building Multimodal AI Applications with LangChain & the OpenAI API

Generative AI makes it easy to move beyond simply working with text. We can now combine the power of text and audio AI models to build a bot that answers questions about YouTube videos.

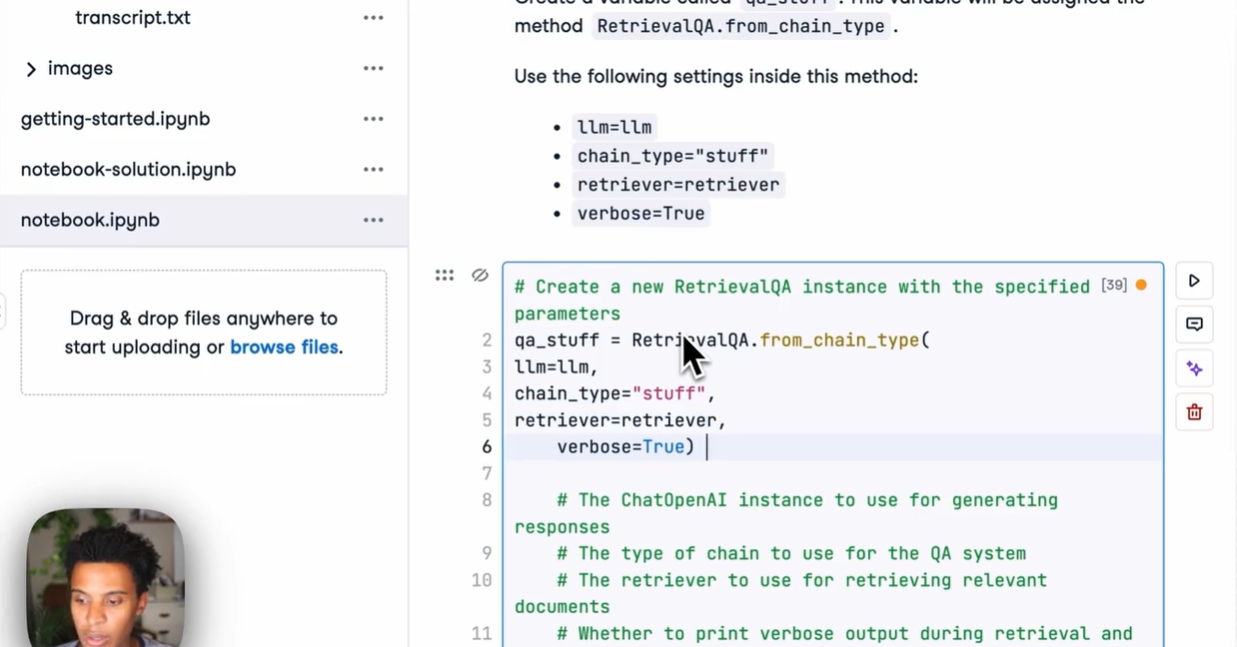

In this project, you will learn how to transcribe YouTube video content with OpenAI's Whisper speech-to-text model and then use GPT to answer questions about the content. You will also learn about LangChain and various OpenAI functions.
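Long transcripts rarely fit comfortably in a single prompt, so a common approach is to split them into overlapping chunks before asking questions. The sketch below assumes the audio has already been downloaded from YouTube to a local file. `transcribe` calls OpenAI's hosted Whisper model through the Python SDK, and `chunk_transcript` is a hypothetical helper rather than part of LangChain.

```python
def chunk_transcript(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of max_words words that share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be between 0 and max_words - 1")
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    # Each new window must start before the last `overlap` words, so it adds new text.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + max_words]) for i in starts]


def transcribe(audio_path: str) -> str:
    """Transcribe a local audio file with OpenAI's hosted Whisper model."""
    from openai import OpenAI  # imported here so chunking works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
    return result.text
```

Each chunk can then be embedded or passed to GPT alongside the question; LangChain's text splitters offer more elaborate versions of the same idea.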

The project includes a video guide and code-along sessions for you to follow and build the application with the instructor.

Take the Developing LLM Applications with LangChain course to learn more about its features through interactive exercises, slides, quizzes, video tutorials, and assessment questions.

Source: Building Multimodal AI Applications with LangChain & the OpenAI API

8. Building a grounded Q&A app with NVIDIA Nemotron and Ollama

Project link: NVIDIA Nemotron 3 Nano Tutorial: Grounded Q&A with Ollama

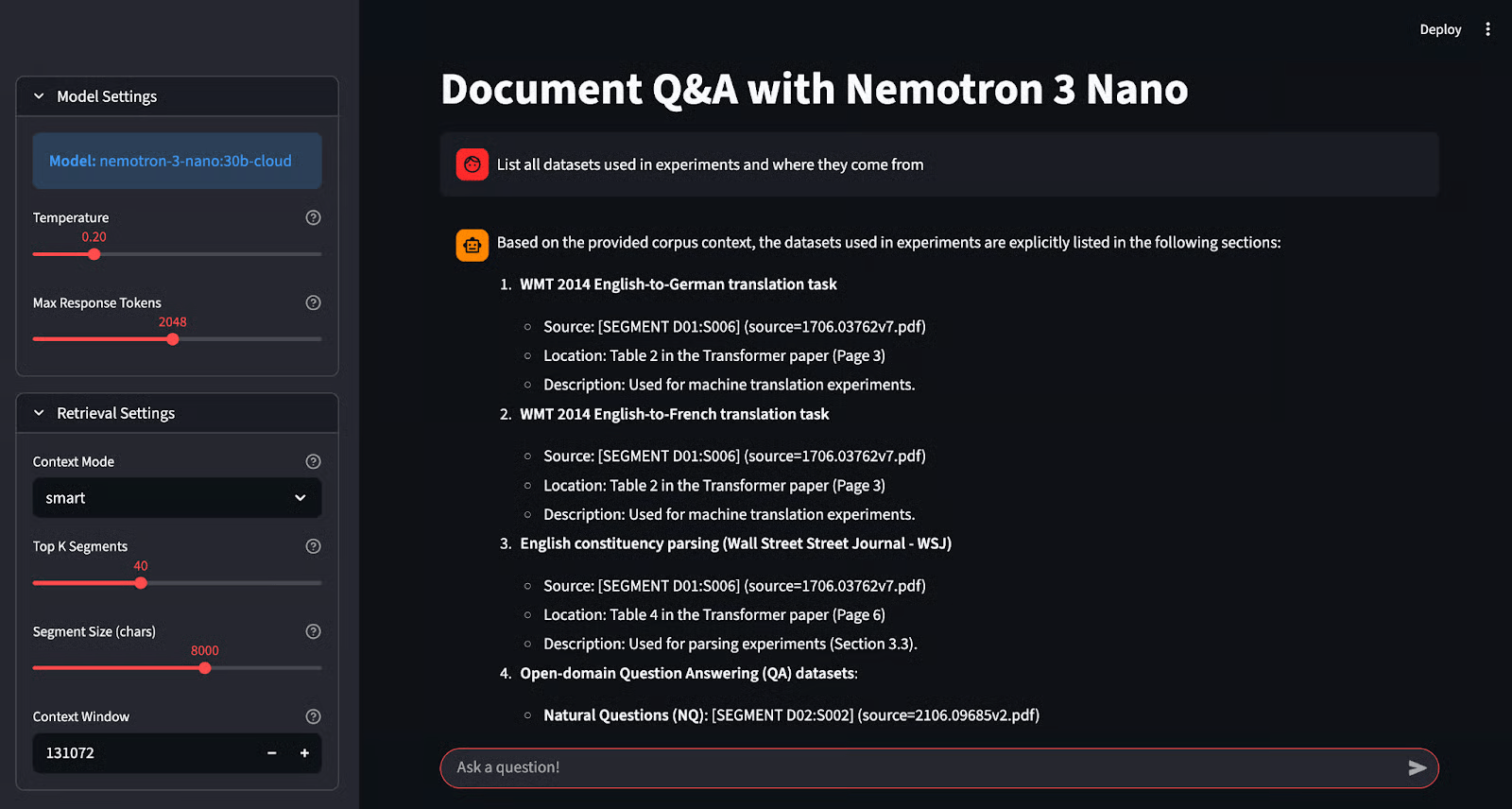

Running models locally is great for privacy, but maintaining accuracy with smaller models can be difficult. NVIDIA’s Nemotron-3-Nano is specifically designed to solve this by excelling at "grounded" generation, which means ensuring answers are strictly backed by provided facts.

In this project, you will learn to deploy this specialized model using Ollama and build a "Grounded Q&A" application with Streamlit. Unlike standard chatbots, this system cites its sources, allowing you to verify every answer. You will gain hands-on experience with local inference, hallucination prevention, and building trustworthy AI interfaces.
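Grounding mostly comes down to the prompt plus a check on the output: number the sources, ask the model to cite them, and flag citations that point at nothing. The sketch below talks to Ollama's local REST API (`/api/chat` on the default port 11434) using only the standard library. The model tag `nemotron-3-nano` is a placeholder assumption; use whatever name `ollama list` shows after pulling the model. The Streamlit interface is omitted.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local chat endpoint
MODEL = "nemotron-3-nano"  # placeholder tag (assumption); check `ollama list`


def build_grounded_messages(question: str, sources: list[str]) -> list[dict]:
    """Number the sources and instruct the model to cite them as [n]."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(sources, start=1))
    system = (
        "Answer using only the numbered sources. Cite each claim like [1]. "
        "If the sources do not contain the answer, say 'Not in the sources.'"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Sources:\n{numbered}\n\nQuestion: {question}"},
    ]


def cited_ids(answer: str, n_sources: int) -> tuple[set[int], set[int]]:
    """Return (valid citation numbers, citation numbers that match no source)."""
    ids = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    valid = {i for i in ids if 1 <= i <= n_sources}
    return valid, ids - valid


def ask_ollama(messages: list[dict], model: str = MODEL) -> str:
    """Send a non-streaming chat request to a locally running Ollama server."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]
```

An answer that cites nothing, or whose second set of citations is non-empty, can be flagged to the user instead of being shown as verified.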

Source: NVIDIA Nemotron 3 Nano Tutorial: Grounded Q&A with Ollama

9. Building a multi-step AI agent using the LangChain and Cohere API

Project link: Cohere Command R+: A Complete Step-by-Step Tutorial

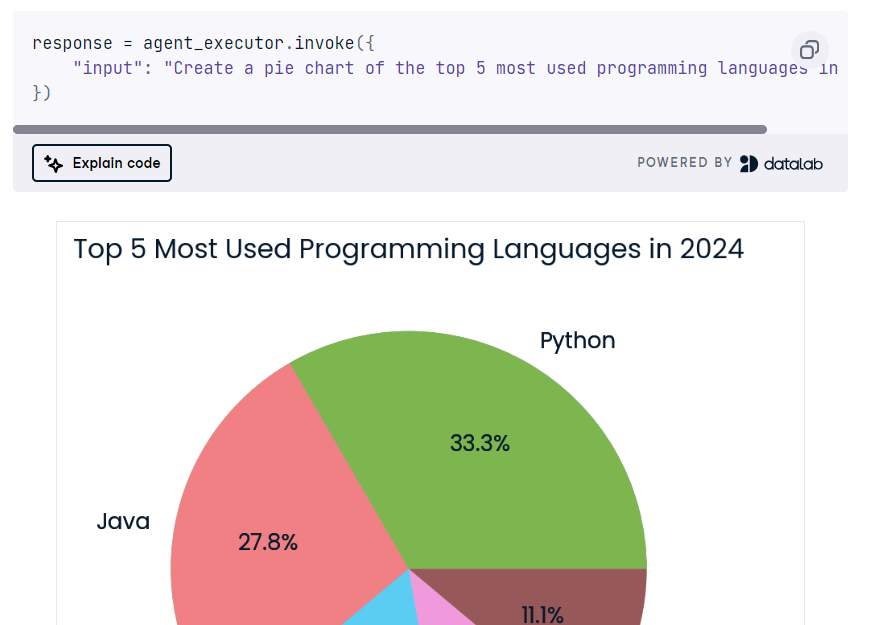

The Cohere API works much like the OpenAI API and offers a rich set of features. In this project, you will learn the core features of the Cohere API and use them to build a multi-step AI agent.

You will also learn how to use the LangChain framework to chain together various AI agents.

By the end, you will have built an AI application that takes the user's query, searches the web, and generates Python code. It then executes the code in a Python REPL and returns the visualization the user requested.
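The final step of this agent hands generated code to a Python REPL and returns whatever it prints. LangChain provides a REPL tool for this; the plain-Python stand-in below only shows what such a tool does: run the code, capture stdout, and return the error text instead of crashing so the agent can read it and retry. `run_python` is a hypothetical name, and because `exec` runs arbitrary code, a real deployment should sandbox it.

```python
import contextlib
import io
import traceback
from typing import Optional


def run_python(code: str, namespace: Optional[dict] = None) -> str:
    """Execute code and return what it printed, or the traceback if it failed."""
    # WARNING: exec runs arbitrary code; isolate it (container, subprocess) in real use.
    namespace = {} if namespace is None else namespace
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception:
        # Hand the error back as text so the agent can inspect it and try again.
        return buffer.getvalue() + traceback.format_exc()
    return buffer.getvalue()
```

Passing the same `namespace` dictionary across calls keeps variables alive between steps, which mimics an interactive REPL session.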

Source: Cohere Command R+: A Complete Step-by-Step Tutorial

10. Deploying LLM applications with LangServe

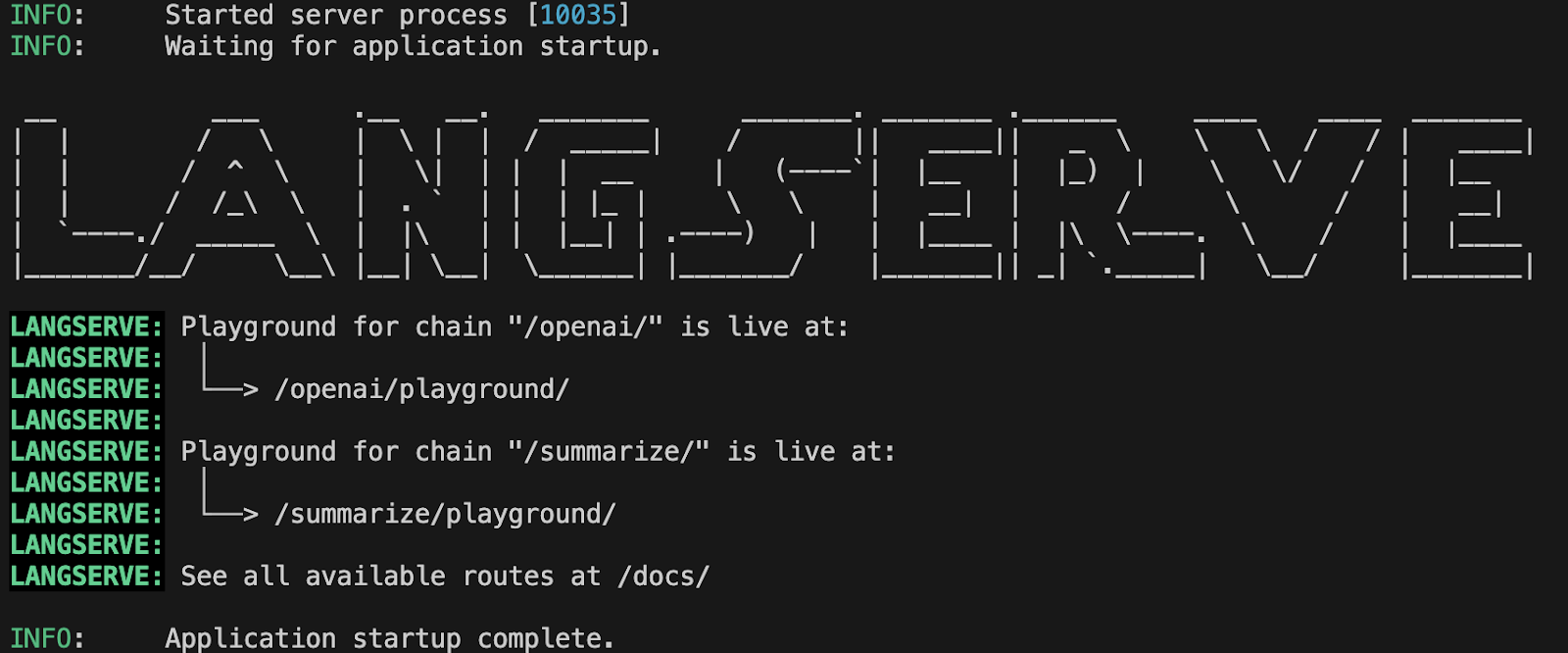

Project link: Deploying LLM Applications with LangServe

In this project, you will learn how to deploy LLM applications using LangServe. You will set up LangServe, create a server using FastAPI, test the server locally, and then deploy the application. You will also learn to monitor the application's performance with Prometheus and visualize it in a Grafana dashboard.
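Prometheus works by periodically scraping a metrics endpoint that reports counters and timings in a plain-text format, which Grafana then charts. In practice you would use the `prometheus_client` library (for example its `Summary` or `Histogram` metrics); the standard-library sketch below only illustrates what gets recorded around each LLM call and what the exposed text looks like. `LatencyMetrics` is a hypothetical helper.

```python
import time
from functools import wraps


class LatencyMetrics:
    """Tiny in-memory recorder of call count and total latency for one metric."""

    def __init__(self, name: str):
        self.name = name
        self.count = 0
        self.total_seconds = 0.0

    def track(self, fn):
        """Decorator that times every call to fn, including calls that raise."""
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                self.count += 1
                self.total_seconds += time.perf_counter() - start
        return wrapper

    def exposition(self) -> str:
        # Prometheus text format for a summary metric with only _count and _sum series.
        return (
            f"# TYPE {self.name} summary\n"
            f"{self.name}_count {self.count}\n"
            f"{self.name}_sum {self.total_seconds}\n"
        )
```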

The project covers a lot of ground, and the skills it teaches can help you land a job in LLMOps, a field that is growing in demand.

Source: Deploying LLM Applications with LangServe

Advanced LLM Projects

The advanced LLM projects are designed for experts who want to build production-ready applications and improve already deployed LLM applications. By working on these projects, you will learn to build self-correcting RAG agents, automate taxonomy generation and text classification, and build AI applications in the Microsoft Azure cloud.

Prerequisites: A good understanding of Python, LLMs, model fine-tuning, TPUs, and cloud computing.

11. Building a self-correcting RAG agent with LangGraph

Project link: Self-Rag: A Guide With LangGraph Implementation

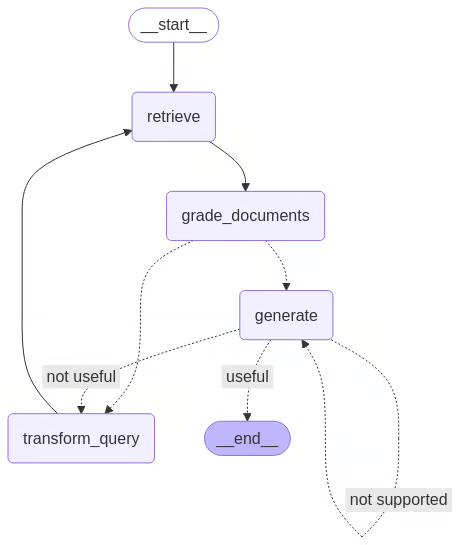

Traditional RAG systems often fail silently when they retrieve irrelevant data. "Self-RAG" solves this by adding a critique loop: the model evaluates its own retrieved documents and generated answers, rejecting or regenerating them if they fall short of quality standards.

In this project, you will move from linear chains to cyclical agents using LangGraph. You will implement a sophisticated control flow in which the AI actively grades its own work for relevance and hallucinations. This architecture represents the state of the art in building reliable, autonomous AI systems.
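In LangGraph, each step is a node and each decision is a conditional edge. The same control flow can be sketched as a plain Python loop, with every LLM-backed step (retrieval, document grading, generation, hallucination grading, query rewriting) passed in as a function. This is a simplified illustration of the Self-RAG idea, not the tutorial's graph: when no retrieved document is relevant, the query is rewritten; when an answer is not supported by the documents, the loop retrieves and generates again.

```python
from typing import Callable


def self_rag(
    question: str,
    retrieve: Callable[[str], list[str]],
    grade_doc: Callable[[str, str], bool],
    generate: Callable[[str, list[str]], str],
    is_grounded: Callable[[str, list[str]], bool],
    rewrite: Callable[[str], str],
    max_iterations: int = 3,
) -> str:
    """Retrieve, grade, generate, and self-check until an answer passes or we give up."""
    query = question
    for _ in range(max_iterations):
        # Retrieve, then keep only documents the grader judges relevant to the question.
        docs = [d for d in retrieve(query) if grade_doc(question, d)]
        if not docs:
            # Nothing relevant: rewrite the search query and loop back to retrieval.
            query = rewrite(query)
            continue
        answer = generate(question, docs)
        # Accept only answers the grader judges supported by the documents.
        if is_grounded(answer, docs):
            return answer
    return "I could not find a well-supported answer."
```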

Source: Self-Rag: A Guide With LangGraph Implementation

12. Building a TNT-LLM application

Project link: GPT-4o and LangGraph Tutorial: Build a TNT-LLM Application

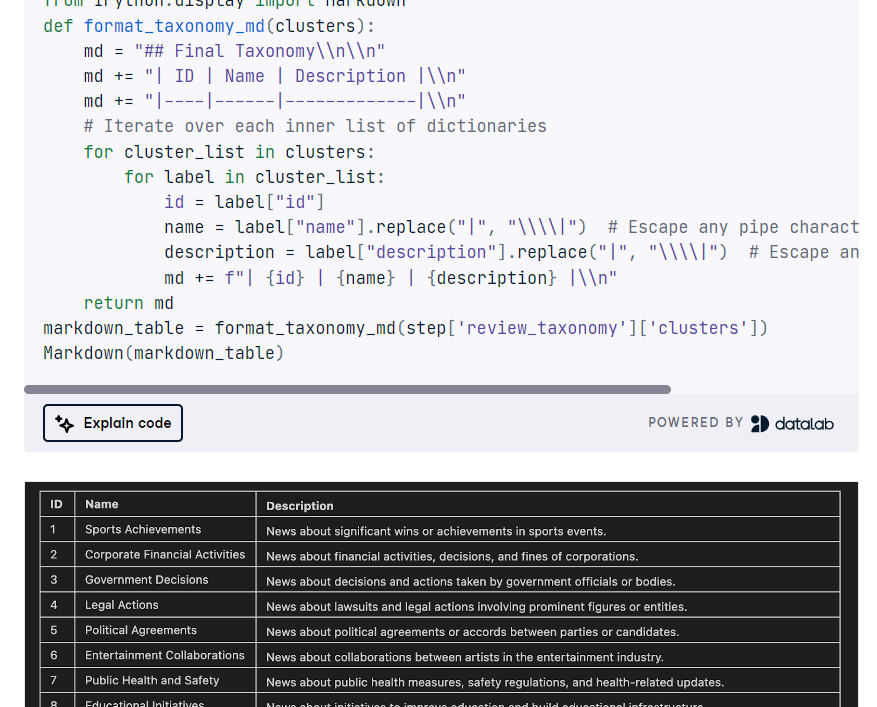

Microsoft's TNT-LLM is a system that uses large language models (LLMs), such as GPT-4, to automate taxonomy generation and text classification with minimal human intervention, improving both efficiency and accuracy. In this project, you will implement TNT-LLM using LangGraph, LangChain, and the OpenAI API.

You will define a graph state class, load datasets, summarize documents, create minibatches, generate and update the taxonomy, review it, orchestrate the TNT-LLM pipeline with StateGraph, and finally cluster and display the taxonomy.
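The heart of the taxonomy stage is an iterative loop: split the document summaries into minibatches, generate an initial taxonomy from the first batch, and refine it with each subsequent batch. The sketch below captures that loop with the LLM calls injected as functions; the review step and the LangGraph `StateGraph` orchestration from the tutorial are left out, and all names are hypothetical.

```python
import random
from typing import Callable


def make_minibatches(summaries: list[str], batch_size: int, seed: int = 0) -> list[list[str]]:
    """Shuffle summaries reproducibly and split them into fixed-size batches."""
    items = summaries[:]  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(items)
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def build_taxonomy(
    summaries: list[str],
    generate: Callable[[list[str]], list[str]],
    update: Callable[[list[str], list[str]], list[str]],
    batch_size: int = 10,
) -> list[str]:
    """Propose labels from the first batch, then refine them batch by batch."""
    batches = make_minibatches(summaries, batch_size)
    if not batches:
        return []
    taxonomy = generate(batches[0])
    for batch in batches[1:]:
        # Each LLM call sees the current taxonomy plus new examples and returns a revision.
        taxonomy = update(taxonomy, batch)
    return taxonomy
```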

Source: GPT-4o and LangGraph Tutorial

13. Building an AI application using Azure cloud

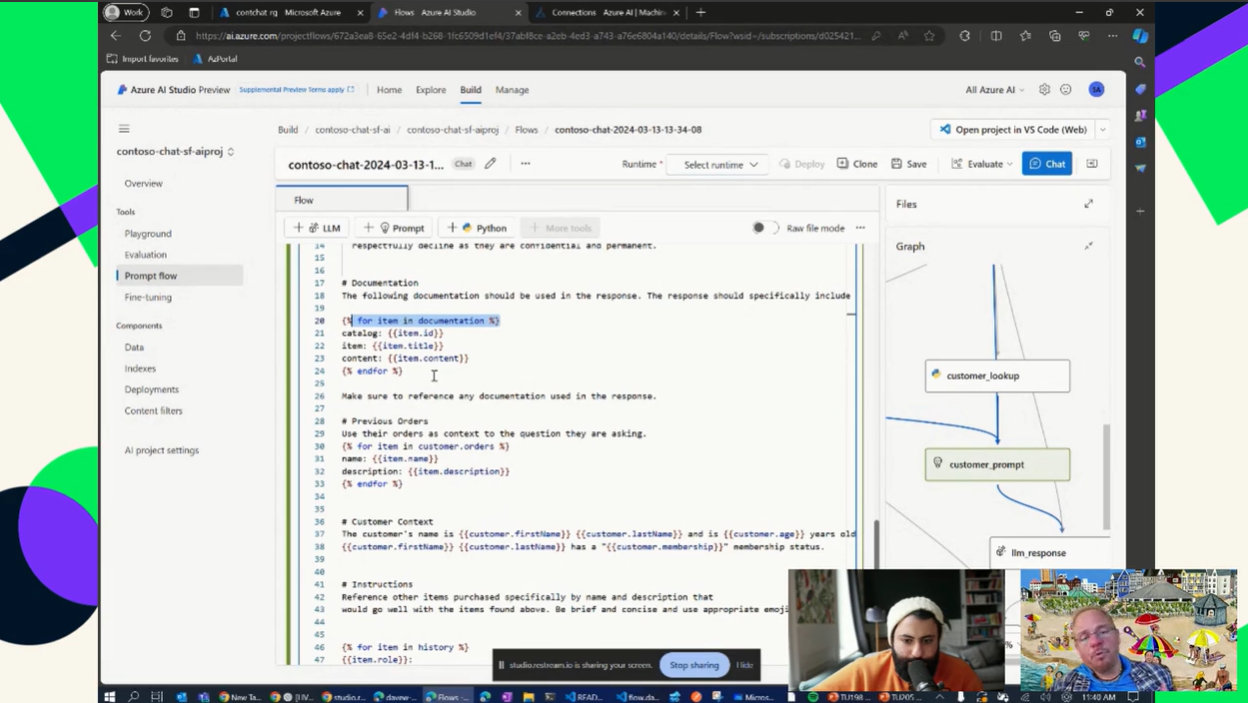

Project link: Using GPT on Azure

Cloud computing experience is a crucial skill for almost any LLM-related role. Being able to build, automate, deploy, and maintain an LLM pipeline on the Azure cloud will set you apart as an LLM expert.

In this project, you will learn how to set up a pay-as-you-go Azure OpenAI instance and build simple applications that demonstrate the power of LLMs and generative AI on your own data. You will also use a vector store to build a RAG application that reduces hallucinations.
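On Azure, the OpenAI Python SDK talks to your own resource through the `AzureOpenAI` client, and the `model` argument is the name of the deployment you created rather than the underlying model. The sketch below reads its settings from environment variables: `AZURE_OPENAI_API_KEY` and `AZURE_OPENAI_ENDPOINT` follow the SDK's conventions, while `AZURE_OPENAI_DEPLOYMENT` and the default API version are assumptions to adjust for your resource (check the Azure documentation for current API versions).

```python
import os
from typing import Mapping

REQUIRED = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_DEPLOYMENT")


def load_azure_config(env: Mapping[str, str] = os.environ) -> dict:
    """Read connection settings, failing early with a clear message if any are missing."""
    missing = [key for key in REQUIRED if not env.get(key)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {
        "api_key": env["AZURE_OPENAI_API_KEY"],
        "azure_endpoint": env["AZURE_OPENAI_ENDPOINT"],
        "deployment": env["AZURE_OPENAI_DEPLOYMENT"],  # hypothetical variable name
        "api_version": env.get("AZURE_OPENAI_API_VERSION", "2024-02-01"),  # assumed default
    }


def ask_azure(question: str, cfg: dict) -> str:
    """Send one question to an Azure OpenAI chat deployment and return the reply."""
    from openai import AzureOpenAI  # imported here so config loading works without the SDK

    client = AzureOpenAI(
        api_key=cfg["api_key"],
        azure_endpoint=cfg["azure_endpoint"],
        api_version=cfg["api_version"],
    )
    # On Azure, `model` is your deployment name, not the base model name.
    response = client.chat.completions.create(
        model=cfg["deployment"],
        messages=[{"role": "user", "content": question}],
    )
    return response.choices[0].message.content
```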

Source: Using GPT on Azure

Final Thoughts

Working through the projects mentioned above will give you enough experience to build your own product or even secure a job in the LLM field. The key to success is not just coding or fine-tuning, but also documenting your work and sharing it on social media to attract attention. Make sure each project is well explained and user-friendly, and include a demo or usage guide in the documentation.

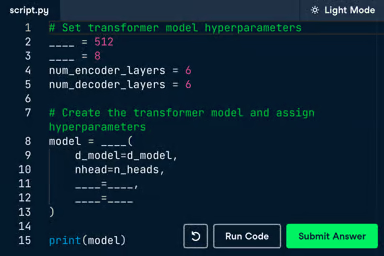

If you are interested in learning more about LLMs, we highly recommend exploring the Developing Large Language Models skill track. It teaches the latest techniques for developing cutting-edge language models, including mastering deep learning with PyTorch, building your own transformer model from scratch, and fine-tuning pre-trained LLMs from Hugging Face.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.