
Data warehousing has become more scalable and less expensive as cloud services have gone mainstream. One of the most common solutions is BigQuery, Google Cloud Platform’s data warehouse.

The Stack Overflow 2024 survey confirms its increasing popularity, reporting an adoption rate of 24.1% among cloud users worldwide. Demand for BigQuery expertise has grown significantly across industries, making a solid grasp of its fundamentals a necessity for data professionals.

In this article, you'll find a compilation of BigQuery questions often asked during job interviews to help you get ready. If you're just beginning to learn about BigQuery, I suggest first checking out the following guide about Data Warehousing on GCP.

Basic BigQuery Interview Questions

Knowing the basics of BigQuery before tackling complex topics is essential. These questions evaluate your grasp of its core concepts, architecture, and functionality. If you are not able to answer the following questions, I encourage you to start from the beginning by checking the following Beginner's Guide to BigQuery and enrolling in our introductory BigQuery course.

What is BigQuery, and how does it differ from traditional databases?

Why it's asked: To evaluate your understanding of modern data warehouses and their advantages over traditional databases.

BigQuery is a fully managed, serverless data warehouse on Google Cloud designed for large-scale data analytics. It allows high-speed SQL queries on massive datasets without management of the infrastructure, and it enables users to focus on insights rather than maintenance.

Unlike traditional on-premises relational databases, which are typically row-based and constrained by hardware limitations, BigQuery is a cloud-native, columnar storage system that offers near-infinite scalability. Its distributed architecture and pay-as-you-go pricing model make it more efficient for handling analytical workloads than conventional databases.

What is a dataset in BigQuery?

Why it's asked: To test your knowledge of the data organization and the structure of BigQuery.

A dataset in BigQuery is a top-level container within a project that organizes tables, views, and other resources. Datasets are the level at which you locate data and control access to it: permissions can be granted per dataset, and each dataset has a location that determines where its data is stored. This makes them a fundamental component of BigQuery's architecture.
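As a rough illustration, a dataset can be created with a DDL statement. This is only a minimal sketch: the project name my_project, the dataset name sales_data, and the option values are hypothetical placeholders, not names taken from this article.

```sql
-- Hypothetical names: my_project and sales_data are placeholders.
-- In GoogleSQL, CREATE SCHEMA creates a dataset.
CREATE SCHEMA IF NOT EXISTS my_project.sales_data
OPTIONS (
  location = 'EU',                        -- where the dataset's data is stored
  description = 'Sales tables and views'  -- free-text description
);
```

Tables and views are then referenced as my_project.sales_data.table_name.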

How do you load data into BigQuery?

Why it's asked: To test your knowledge regarding different data ingestion methods.

BigQuery offers several data ingestion methods intended for different purposes.

- Batch load mode is typically used to upload a large historical dataset into BigQuery by using the BigQuery web UI, the bq command-line tool, or API calls.

- Streaming ingestion enables real-time data processing by pushing individual records or small batches using the BigQuery Streaming API.

- The BigQuery Data Transfer Service simplifies scheduled data imports from Google Cloud Storage, Google Ads, and SaaS sources.

For advanced ETL workflows, Google Cloud Dataflow and other pipeline tools facilitate seamless data movement into BigQuery. The most suitable ingestion method depends on data volume, latency, and processing needs.
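For batch loads, one option besides the web UI and the bq tool is the LOAD DATA SQL statement. The sketch below assumes a hypothetical Cloud Storage bucket (gs://my-bucket) containing CSV files with a header row, and a hypothetical dataset named my_dataset.

```sql
-- Hypothetical bucket, path, and dataset names.
-- Appends the rows from the CSV files to my_dataset.sales_table.
LOAD DATA INTO my_dataset.sales_table
FROM FILES (
  format = 'CSV',
  uris = ['gs://my-bucket/sales/*.csv'],
  skip_leading_rows = 1  -- skip the header row in each file
);
```

Using LOAD DATA OVERWRITE instead of LOAD DATA INTO replaces the table contents rather than appending to them.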

What data types does BigQuery support?

Why it's asked: To test your knowledge regarding BigQuery's data handling capabilities.

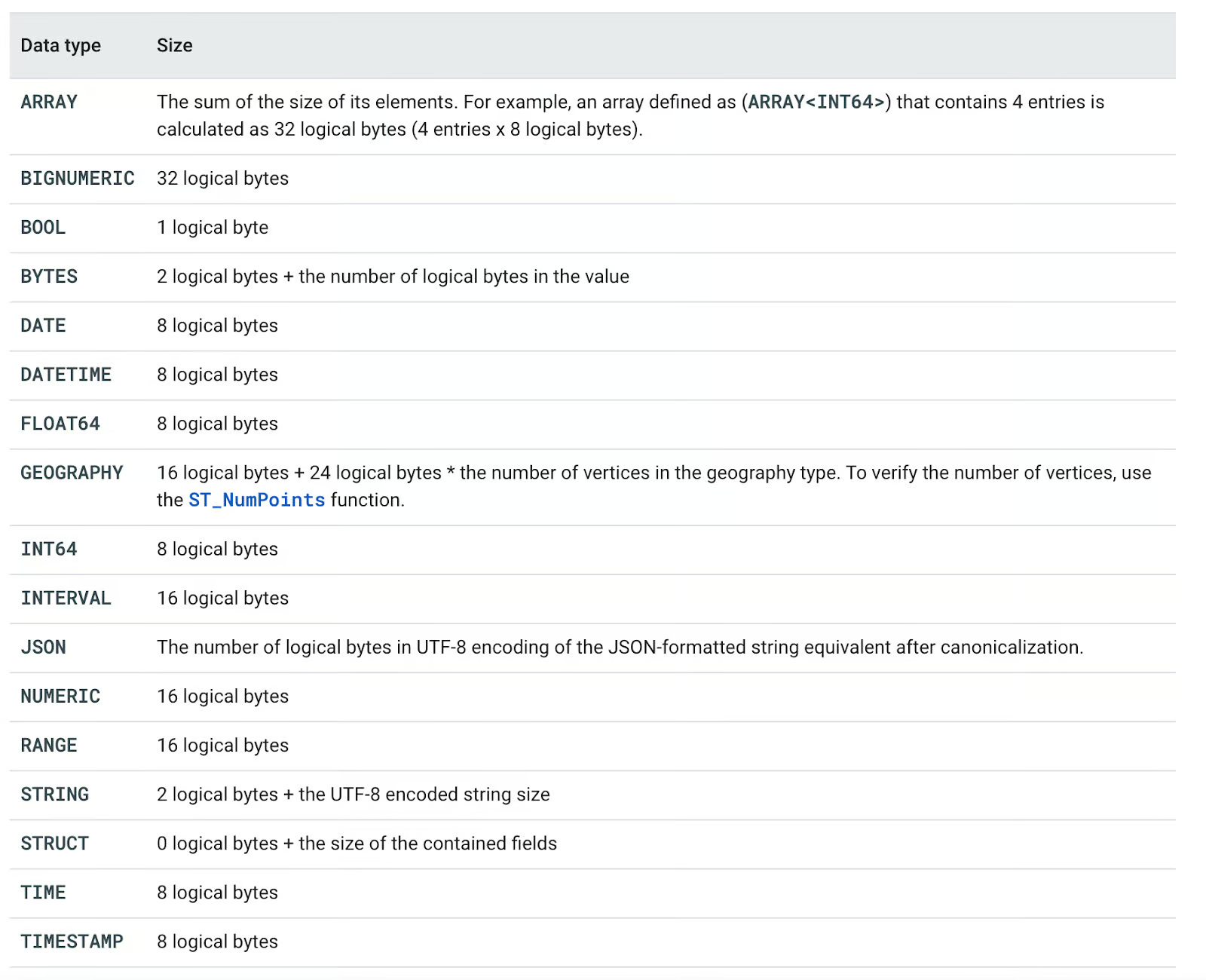

BigQuery supports a variety of data types, categorized into:

- Basic types: BOOL, INT64, FLOAT64, STRING, BYTES, DATE, DATETIME, TIME, TIMESTAMP

- Complex types: ARRAY, STRUCT, JSON

- Specialized types: NUMERIC, BIGNUMERIC, INTERVAL, GEOGRAPHY, RANGE

Each data type has a defined logical storage size, which impacts query performance and cost. For example, STRING storage depends on UTF-8 encoded length, whereas ARRAY<INT64> requires 8 bytes per element. Understanding these types helps in optimizing queries and managing costs efficiently.

You can observe all supported data types in the following table.

BigQuery data types. Image from Google Cloud Documentation.
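To get a feel for these types, you can write literals for several of them in a single query. This is just an illustrative sketch; the column aliases and values are made up.

```sql
-- Each column shows a literal of one BigQuery data type (values are arbitrary).
SELECT
  TRUE AS bool_value,
  42 AS int64_value,
  3.14 AS float64_value,
  'hello' AS string_value,
  NUMERIC '19.99' AS numeric_value,
  DATE '2024-01-31' AS date_value,
  TIMESTAMP '2024-01-31 12:00:00+00' AS timestamp_value,
  [1, 2, 3] AS array_value,                          -- ARRAY<INT64>
  STRUCT('Ada' AS name, 36 AS age) AS struct_value,  -- STRUCT<name STRING, age INT64>
  JSON '{"plan": "pro"}' AS json_value,
  ST_GEOGPOINT(2.17, 41.38) AS geography_value;      -- longitude, latitude
```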

What are the key advantages of using BigQuery?

Why it's asked: To make sure you understand the main perks of using BigQuery as a Data Warehouse.

Using BigQuery services brings five main benefits over traditional self-managed solutions:

- Scalability: No upfront hardware investment is required, and resources can be scaled up or down easily depending on business requirements.

- Flexibility: Infrastructure can be modified according to the business needs.

- High security: Automatic backups and disaster recovery features are default features of cloud solutions like GCP.

- Cost-effectiveness: Pay-as-you-go options allow customers to pay only for the services they are using.

- Data sharing and collaboration: Using cloud-based services fosters data sharing and collaboration.

BigQuery Advantages. Image by Author.

Technical BigQuery Interview Questions

Once you’ve grasped the fundamentals of BigQuery, it's time to dive into more technical aspects that interviewers commonly assess.

These questions go beyond basic definitions and test your ability to optimize performance, manage costs, and work with advanced features like partitioning, clustering, and security.

What is partitioning in BigQuery, and how does it improve performance?

Why it's asked: To assess your knowledge of data organization techniques and their impact on query efficiency.

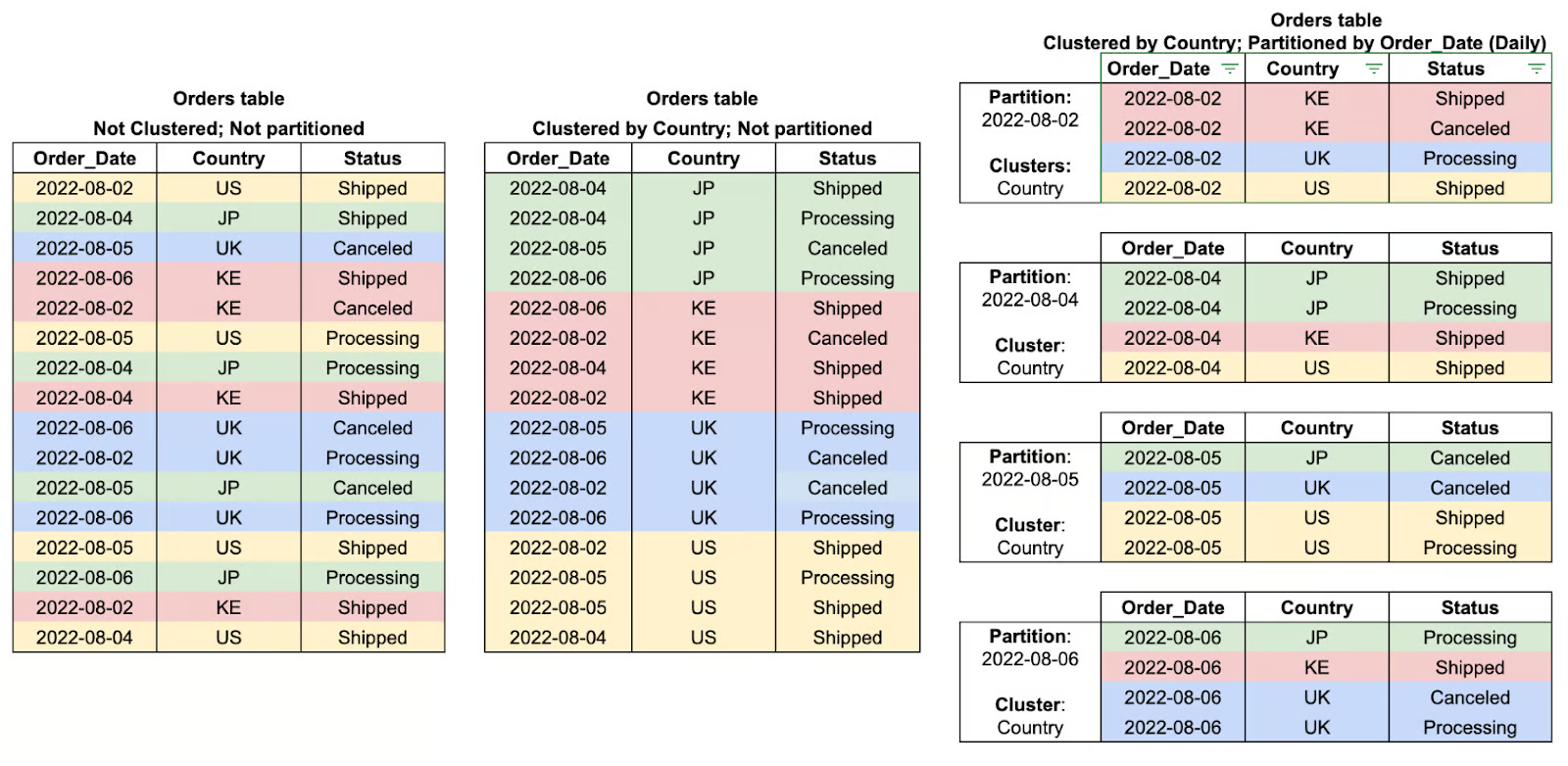

Partitioning in BigQuery subdivides a large table into smaller, more manageable pieces based on a criterion such as a date column, ingestion time, or an integer range. Because the data is segmented into partitions, BigQuery can restrict the amount of data scanned when a query filters on the partitioning column, which improves performance and can cut costs considerably.

For example, a partitioned table storing daily transaction data allows queries to filter specific date ranges efficiently instead of scanning the entire dataset. This makes partitioning particularly beneficial for time-series analysis and large-scale analytical workloads.
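The daily transaction example could be sketched as follows. The dataset, table, and column names are hypothetical, chosen only for illustration.

```sql
-- Hypothetical table: one partition per calendar day of transaction_date.
CREATE TABLE my_dataset.transactions (
  transaction_id STRING,
  amount NUMERIC,
  transaction_date DATE
)
PARTITION BY transaction_date;

-- The filter on the partitioning column lets BigQuery prune partitions,
-- so only the January 2024 partitions are scanned.
SELECT SUM(amount) AS total_amount
FROM my_dataset.transactions
WHERE transaction_date BETWEEN DATE '2024-01-01' AND DATE '2024-01-31';
```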

What is clustering in BigQuery, and how does it improve query performance?

Why it's asked: To evaluate your understanding of data organization strategies that optimize query efficiency and cost.

Clustering in BigQuery organizes the data within a table or partition based on the values of one or more specified columns. Because related rows are stored together, BigQuery can reduce the amount of data scanned, improving query performance and lowering processing costs.

This way of optimizing queries has proved particularly efficient when filtering, sorting, or aggregating data based on clustered columns, where the query engine can skip all irrelevant data altogether rather than doing a full scan of the partition. Clustering works best when combined with partitioning and provides even more performance benefits when working with large datasets.
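Here is a minimal sketch of a table that combines both techniques. All names are hypothetical, and the choice of clustering columns assumes that queries usually filter on region and customer_id.

```sql
-- Hypothetical table: partitioned by day, then clustered within each partition.
CREATE TABLE my_dataset.orders (
  order_id STRING,
  customer_id STRING,
  region STRING,
  order_date DATE
)
PARTITION BY order_date
CLUSTER BY region, customer_id;

-- Filtering on the partitioning column and on the first clustering column
-- lets BigQuery skip data that cannot match.
SELECT order_id, customer_id
FROM my_dataset.orders
WHERE order_date = DATE '2024-01-31'
  AND region = 'EU';
```

The order of the clustering columns matters, so the column that is filtered most often is usually listed first.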

What is the difference between a table and a view in BigQuery?

Why it's asked: To evaluate your understanding of BigQuery’s data structures and their use cases.

In BigQuery, a table is a structured storage unit that physically holds data, while a view is a virtual table that dynamically retrieves data based on a predefined SQL query. The key distinction is that views do not store data themselves. Instead, they serve as building blocks for larger queries and as a way to enforce security rules by exposing a subset of the data without duplicating it. A standard view does not speed up queries by itself, because its underlying query runs each time the view is referenced; materialized views, which store precomputed results, are the option when faster repeated reads are needed. This makes views useful for analytics, data abstraction, and security enforcement.

So, to put it simply:

- A table is a structured storage unit that physically holds data

- A view is a virtual table that dynamically retrieves data based on a predefined SQL query without storing it
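As a small sketch of the difference, the view below exposes only recent rows and a subset of columns from a sales table. It assumes a hypothetical dataset my_dataset containing a sales_table with Id, Product_Id, and a DATE column Purchase_date.

```sql
-- Hypothetical view: stores no data, only the query definition.
CREATE OR REPLACE VIEW my_dataset.recent_sales AS
SELECT
  Id,
  Product_Id,
  Purchase_date
FROM my_dataset.sales_table
WHERE Purchase_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY);

-- Querying the view runs the underlying query against sales_table each time.
SELECT COUNT(*) AS recent_sales_count
FROM my_dataset.recent_sales;
```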

How does BigQuery handle data security?

Why it's asked: To check your knowledge of BigQuery's security features and how they protect sensitive data.

BigQuery takes a layered approach to security, designed to safeguard data at every stage of its lifecycle.

- Data is encrypted at rest using Google-managed encryption keys by default, or using customer-managed keys if you want to control the keys yourself.

- Data is encrypted in transit between clients and BigQuery via HTTPS/TLS.

- Identity and Access Management (IAM) allows fine-grained access control by assigning roles to users and service accounts, so that only authorized parties can access or change data.

- Audit logging tracks user and system activity, providing an audit trail for monitoring and compliance.

These built-in security features help organizations maintain data confidentiality, integrity, and regulatory compliance.
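Access control can also be expressed in SQL. The statement below is a minimal sketch that gives one user read-only access to a dataset; the dataset name and the email address are hypothetical.

```sql
-- Hypothetical dataset and principal.
-- Grants the predefined BigQuery Data Viewer role on the whole dataset.
GRANT `roles/bigquery.dataViewer`
ON SCHEMA my_dataset
TO 'user:analyst@example.com';
```

A matching REVOKE statement removes the role again.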

What is the BigQuery Data Transfer Service, and how does it simplify data ingestion?

Why it's asked: To assess your understanding of automated data movement and integration within BigQuery.

The BigQuery Data Transfer Service (BQ DTS) automates and schedules data imports from various external sources into BigQuery, eliminating the need for manual ETL processes. It natively integrates with Google services like Google Ads, YouTube, and Google Cloud Storage, as well as third-party SaaS applications.

By enabling automated, scheduled data transfers, BQ DTS ensures that data remains up-to-date for analysis without requiring user intervention. This service is particularly useful for organizations managing recurring data ingestion workflows at scale, improving efficiency and reducing operational overhead.

What are nested and repeated fields in BigQuery, and why are they useful?

Why it's asked: To evaluate your understanding of BigQuery’s support for semi-structured data and its advantages over traditional relational models.

BigQuery allows for nested and repeated fields, enabling more efficient storage and querying of hierarchical or array-based data. Nested fields use the STRUCT data type, allowing a column to contain subfields, similar to a JSON object.

Repeated fields use the ARRAY data type, enabling a single column to store multiple values. These structures help eliminate the need for complex JOIN operations, improve query performance, and make BigQuery well-suited for processing semi-structured data such as logs, event streams, and NoSQL-like datasets.
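To make this concrete, here is a sketch of an orders table where the customer is a nested field and the order items are a repeated field. The table and column names are hypothetical.

```sql
-- Hypothetical table: customer is nested (STRUCT), items is repeated (ARRAY of STRUCT).
CREATE TABLE my_dataset.orders_nested (
  order_id STRING,
  customer STRUCT<id STRING, name STRING>,
  items ARRAY<STRUCT<product STRING, quantity INT64, price NUMERIC>>
);

-- UNNEST flattens the repeated field into one row per item,
-- without joining a separate order_items table.
SELECT
  o.order_id,
  o.customer.name AS customer_name,
  item.product,
  item.quantity * item.price AS line_total
FROM my_dataset.orders_nested AS o,
  UNNEST(o.items) AS item;
```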

How can you schedule and automate jobs in BigQuery?

Why it's asked: To assess your knowledge of automating query execution and workflow management in BigQuery.

BigQuery offers multiple methods to schedule and automate jobs, ensuring recurring tasks run without manual intervention.

- Scheduled queries allow users to define recurring query executions directly through the BigQuery web UI or API.

- Cloud Scheduler provides a fully managed cron-like service that triggers queries at predefined intervals.

- For event-driven automation, Cloud Functions can execute BigQuery jobs in response to triggers from other Google Cloud services.

These automation tools help streamline data pipelines, reduce manual workload, and ensure timely data processing for analytics and reporting.

How does BigQuery handle data partitioning and clustering?

Why it's asked: To assess your understanding of data organization strategies in BigQuery and their impact on query performance and cost efficiency.

Partitioning splits large tables into smaller segments, improving query performance by scanning only relevant data.

- Time-based: By DATE, TIMESTAMP, DATETIME (e.g., daily sales_date partitions).

- Integer range: By INTEGER values (e.g., user_id ranges).

- Ingestion-time: By data load timestamp (_PARTITIONDATE).

It is best for time-series data and reducing query costs when filtering by date or numeric ranges.

Clustering organizes data within a table or partition by sorting it based on selected columns, speeding up queries.

It is best for filtering and aggregating by frequently queried fields like region or user_id.

Table cluster and partition examples. Image by Google Cloud.
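The integer-range and ingestion-time options can be sketched like this. Table names, columns, and bucket boundaries are hypothetical and only meant to show the syntax.

```sql
-- Integer-range partitioning: buckets of 10,000 user_id values from 0 to 1,000,000,
-- combined with clustering on event_name.
CREATE TABLE my_dataset.user_events (
  user_id INT64,
  event_name STRING
)
PARTITION BY RANGE_BUCKET(user_id, GENERATE_ARRAY(0, 1000000, 10000))
CLUSTER BY event_name;

-- Ingestion-time partitioning: rows are assigned to a partition by load date.
CREATE TABLE my_dataset.raw_logs (
  message STRING
)
PARTITION BY _PARTITIONDATE;

-- The _PARTITIONDATE pseudocolumn can be used to prune partitions.
SELECT message
FROM my_dataset.raw_logs
WHERE _PARTITIONDATE = DATE '2024-01-31';
```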

BigQuery Architecture Interview Questions

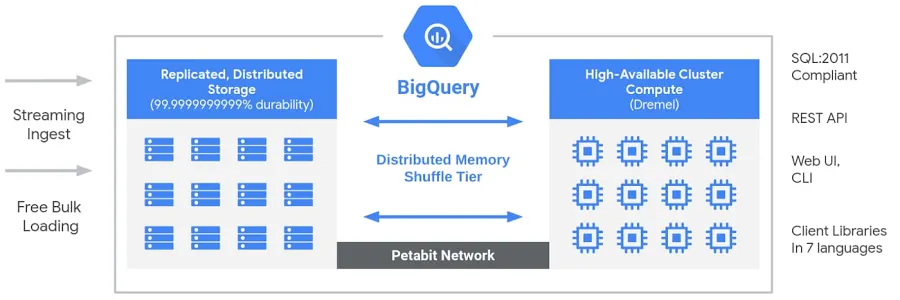

How is Google BigQuery's architecture designed, and what makes it unique?

Why it's asked: To evaluate your understanding of BigQuery’s serverless architecture and how it differs from traditional data warehouses.

BigQuery features a serverless, fully managed architecture that decouples storage and compute, allowing them to scale independently based on demand. Unlike traditional cloud data warehouses or on-premises massively parallel processing (MPP) systems, this separation provides flexibility, cost efficiency, and high availability without requiring users to manage infrastructure. BigQuery’s compute engine is powered by Dremel, a multi-tenant cluster that executes SQL queries efficiently, while data is stored in Colossus, Google’s global distributed storage system. These components communicate through Jupiter, Google’s petabit-scale network, ensuring ultra-fast data transfer. The entire system is orchestrated by Borg, Google’s internal cluster management system and a precursor to Kubernetes. This architecture enables users to run high-performance, scalable analytics on massive datasets without worrying about infrastructure management.

BigQuery architecture. Image by Google.

How does BigQuery separate storage and compute, and why is this beneficial?

Why it's asked: This question assesses your understanding of BigQuery’s architecture, specifically how its decoupled storage and compute model improves scalability, cost efficiency, and performance.

BigQuery follows a serverless, fully managed architecture where storage and compute are completely separated:

- Storage: Data is stored in Colossus, Google’s global distributed storage system. This ensures high availability, durability, and near-infinite scalability without requiring users to manage infrastructure.

- Compute: Query execution is handled by Dremel, a distributed query engine that dynamically allocates computational resources (slots) based on workload demand.

Key Benefits of Storage-Compute Separation:

- Independent scaling: You can scale storage without affecting compute and vice versa.

- Cost efficiency: You only pay for the data scanned during queries, avoiding unnecessary compute costs.

- Optimized performance: Queries run faster since storage doesn’t act as a bottleneck for computation.

- Multi-region storage: Data can be stored across different locations without impacting query speed.

This design allows BigQuery to process petabyte-scale queries efficiently while keeping costs low, making it an ideal choice for cloud-based analytics.

This architectural flexibility is crucial as the industry shifts focus toward massive computational power. As noted in the DataFramed podcast regarding data trends for 2025:

There's definitely a bet all the big players are making. They're building more compute, and so they will be scaling through more compute next year. And then the question is, OK, is there more data? And this is where it gets more nuanced. And I think it's a spectrum. It's not black and white.

Jonathan Cornelissen, Co-founder & CEO of DataCamp

What is Dremel, and how does it enable BigQuery’s fast query performance?

Why it's asked: To evaluate your knowledge of Dremel, BigQuery’s underlying query engine, and how its tree-based, columnar execution model enables high-performance data analytics.

Dremel is a distributed query execution engine that powers BigQuery. Unlike traditional databases that use row-based processing, Dremel employs a columnar storage format and a tree-based query execution model to optimize speed and efficiency.

How Dremel Enables Fast Queries:

- Columnar Storage: Instead of reading entire rows, Dremel scans only the necessary columns, reducing the amount of data processed.

- Tree-Based Execution: Queries are broken down into fragments and executed in parallel across thousands of nodes. Results are aggregated in a hierarchical tree structure, minimizing latency.

- Serverless Resource Allocation: Dremel dynamically assigns compute slots to queries based on complexity, ensuring efficient resource utilization.

Key Benefits of Dremel:

- Blazing-fast queries on petabyte-scale datasets.

- Cost-efficient processing by scanning only relevant columns.

- Automatic parallelization for large workloads without manual tuning.

You can learn more about Dremel in Google’s official documentation.

How does denormalization work in BigQuery, and when should it be used?

Why it's asked: To evaluate your understanding of data modeling strategies and their impact on query performance in analytical databases.

Denormalization in BigQuery intentionally introduces redundancy by merging tables and duplicating data to optimize query performance. Unlike normalization, which minimizes redundancy through smaller, related tables, denormalization reduces the need for complex joins, resulting in faster read times. This approach is especially useful in data warehousing and analytics, where read operations are more frequent than writes. However, denormalization increases storage requirements, which is why BigQuery recommends using nested and repeated fields to efficiently structure denormalized data while minimizing storage overhead.

Comparison of different data normalization strategies. Image by Google Cloud.

SQL-Specific Questions

Once you have a solid grasp of BigQuery’s architecture and optimization techniques, the next critical area to focus on is SQL proficiency. SQL is the backbone of working with BigQuery, and interviewers will often test your ability to write, optimize, and troubleshoot queries. To be better prepared, I encourage you to enroll in the SQL courses of DataCamp.

How do you perform a JOIN operation in BigQuery?

Why it's asked: To assess your ability to combine data from multiple tables using SQL JOIN operations.

BigQuery supports various types of JOINs to combine data from multiple tables based on a shared column. For example, to retrieve product details along with their sales information, you can use an INNER JOIN between the SALES TABLE and the PRODUCT TABLE on Product_Id:

    SELECT
        s.Id AS Sales_Id,
        p.Product,
        p.Price,
        s.Purchase_date
    FROM sales_table s
    JOIN product_table p
        ON s.Product_Id = p.Id;

For additional details, such as delivery dates, you can join the DELIVERY TABLE:

    SELECT
        s.Id AS Sales_Id,
        p.Product,
        p.Price,
        s.Purchase_date,
        d.Deliver_date
    FROM sales_table s
    JOIN product_table p ON s.Product_Id = p.Id
    JOIN delivery_table d ON s.Id = d.Sales_Id;

Write a SQL query to select the top 10 most expensive products.

Why it's asked: To assess your ability to retrieve and sort data efficiently.

To find the top 10 most expensive products, use ORDER BY to sort by price in descending order and LIMIT to restrict the result:

    SELECT
        Product,
        Price
    FROM product_table
    ORDER BY Price DESC
    LIMIT 10;

Write a SQL query to calculate the average number of sales per month.

Why it's asked: To evaluate your ability to perform time-based aggregations.

To compute the average number of sales per month, use the EXTRACT function to group sales by month and COUNT to count the number of sales per month:

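    SELECT
        -- Include the year so that the same month of different years is not merged
        EXTRACT(YEAR FROM Purchase_date) AS Year,
        EXTRACT(MONTH FROM Purchase_date) AS Month,
        COUNT(Id) AS Total_Sales,
        -- Window over all monthly groups: average of the monthly counts
        AVG(COUNT(Id)) OVER () AS Average_Sales
    FROM sales_table
    GROUP BY Year, Month;

Write a SQL query to find the total number of deliveries by product.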

Why it's asked: To assess your ability to aggregate data using GROUP BY and COUNT.

To count deliveries for each product:

    SELECT
        p.Product,
        COUNT(d.Id) AS Total_Deliveries
    FROM delivery_table d
    JOIN product_table p
        ON d.Product_Id = p.Id
    GROUP BY p.Product;

Write a SQL query to get the sales records from the last 30 days.

Why it's asked: To evaluate your ability to filter time-based data.

To get sales records from the last 30 days:

    SELECT *
    FROM sales_table
    WHERE Purchase_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY);

Write a SQL query to count the number of unique products sold in the last week.

Why it's asked: To assess your ability to perform distinct counts on time-based data.

To count distinct products sold in the past 7 days:

    SELECT COUNT(DISTINCT Product_Id) AS Unique_Products_Sold
    FROM sales_table
    WHERE Purchase_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 7 DAY);

Write a SQL query to group sales data by product and calculate total revenue for each.

Why it's asked: To test your ability to use GROUP BY and aggregate sales.

To calculate total revenue per product:

    SELECT
        p.Product,
        SUM(p.Price) AS Total_Revenue
    FROM sales_table s
    JOIN product_table p
        ON s.Product_Id = p.Id
    GROUP BY p.Product;

Write a SQL query to calculate the percentage of total sales contributed by each product.

Why it's asked: To evaluate your ability to compute percentages using SQL.

To determine the percentage of total sales per product:

    SELECT
        p.Product,
        SUM(p.Price) AS Product_Sales,
        -- Divide by the revenue of all sales (window over every product group),
        -- not by the sum of list prices in product_table
        SUM(p.Price) / SUM(SUM(p.Price)) OVER () * 100 AS Sales_Percentage
    FROM sales_table s
    JOIN product_table p
        ON s.Product_Id = p.Id
    GROUP BY p.Product;

Write a SQL query to rank products based on total sales.

Why it's asked: To assess your ability to use window functions for ranking.

To rank products based on total sales:

    SELECT
        p.Product,
        SUM(p.Price) AS Total_Sales,
        RANK() OVER (ORDER BY SUM(p.Price) DESC) AS Sales_Rank
    FROM sales_table s
    JOIN product_table p
        ON s.Product_Id = p.Id
    GROUP BY p.Product;

Write a SQL query to calculate the average delivery time for each product.

Why it's asked: To evaluate your ability to work with date differences in SQL.

To calculate the average delivery time per product:

    SELECT
        p.Product,
        AVG(DATE_DIFF(d.Deliver_date, s.Purchase_date, DAY)) AS Avg_Delivery_Time
    FROM sales_table s
    JOIN delivery_table d
        ON s.Id = d.Sales_Id
    JOIN product_table p
        ON s.Product_Id = p.Id
    GROUP BY p.Product;

Advanced and Scenario-Based Questions

Beyond foundational knowledge and SQL proficiency, real-world problem-solving skills are crucial for working with BigQuery at scale.

Advanced interview questions often focus on handling large datasets, optimizing performance, managing data pipelines, and troubleshooting common challenges in production environments.

Can you describe your experience with implementing data pipelines in BigQuery?

Why it's asked: To evaluate your practical knowledge of building, managing, and optimizing data pipelines in BigQuery, including ingestion, transformation, orchestration, and monitoring.

The interviewer is looking for insights into your hands-on experience with designing and implementing data pipelines in BigQuery. You should discuss:

- Data ingestion: Methods used (BigQuery Data Transfer Service, Cloud Storage, streaming ingestion).

- Data transformation: Use of SQL-based transformations, scheduled queries, or tools like Dataflow and dbt.

- Orchestration: How you manage pipeline workflows using Cloud Composer (Apache Airflow), Cloud Functions, or Cloud Scheduler.

- Monitoring and optimization: How you track pipeline performance, debug issues, and optimize query costs.

A strong response should include real-world examples of challenges you've faced and how you addressed them, such as handling large datasets, optimizing query performance, or ensuring data integrity in production pipelines.

Some good resources to implement such a project are:

- The following YouTube guide about ETL batch pipelines using BigQuery.

- The following end-to-end pipeline GitHub repo.

- The following ETL pipeline with Airflow and BigQuery GitHub repo.

How can you remove duplicate records from a column in a large BigQuery table while preserving the table name?

Why it's asked: To assess your ability to handle duplicate data efficiently in BigQuery without altering the table structure.

When dealing with a table containing millions of rows and duplicate values in a specific column, the best approach is to use the ROW_NUMBER() window function to identify and retain only the first occurrence of each duplicate while removing the rest.

You can achieve this by overwriting the table with a deduplicated version using a CREATE OR REPLACE TABLE statement:

    CREATE OR REPLACE TABLE tableX AS
    SELECT * EXCEPT(row_number)
    FROM (
        SELECT *,
            ROW_NUMBER() OVER (PARTITION BY troubleColumn ORDER BY someOtherColumn) AS row_number
        FROM tableX
    )
    WHERE row_number = 1;

Here’s how it works:

- ROW_NUMBER() assigns a unique row number to each record within the same troubleColumn value, ensuring only one record per duplicate group is retained.

- The PARTITION BY troubleColumn groups rows based on duplicates in that column.

- The ORDER BY someOtherColumn (e.g., Purchase_date) ensures that a specific record is retained.

- The outer query filters only the first occurrence (row_number = 1), effectively removing duplicates.

- The CREATE OR REPLACE TABLE statement ensures the table remains under the same name while replacing it with the cleaned data.

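This approach eliminates duplicates while keeping the same table name and columns. If the table is partitioned or clustered, repeat the PARTITION BY and CLUSTER BY clauses in the CREATE OR REPLACE TABLE statement so that the layout is preserved.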

How do you use BigQuery for data visualization and reporting, and what are the most common tools for it?

Why it's asked: To assess your ability to integrate BigQuery with visualization tools and effectively communicate insights through reporting.

BigQuery serves as a powerful backend for data visualization and reporting, enabling direct connections with tools like Google Looker Studio (formerly Data Studio), Tableau, and Power BI.

These tools allow users to query data in real-time and create custom dashboards, charts, and reports without manual data exports. Key techniques for visualization include:

- Aggregating and transforming data in BigQuery using SQL before visualization.

- Building interactive dashboards with dynamic filters and drill-down capabilities.

- Optimizing queries and materialized views to improve dashboard performance.

A strong response should highlight real-world use cases, such as:

- Tracking business KPIs

- Monitoring trends

- Generating automated reports

To implement your own Data Visualization project, you can follow DataCamp's specialized projects about Analyzing Electric Vehicle Charging Habits and Exploring London's Travel Network with BigQuery.

Questions about BigQuery Best Practices and Optimization

Working efficiently with BigQuery goes beyond writing SQL queries—it requires optimizing performance, managing costs, and implementing best practices to ensure smooth data operations. Since BigQuery operates on a pay-per-use model, understanding how to minimize data scans, leverage partitioning and clustering, and optimize query execution is crucial for both performance and cost efficiency.

What are the best practices for managing BigQuery costs efficiently?

Why it's asked: To evaluate your ability to optimize cost efficiency while working with large datasets in BigQuery.

To manage BigQuery costs effectively, you should:

- Optimize queries to scan only necessary columns and rows, avoiding SELECT *.

- Use partitioned and clustered tables to minimize data scanning and improve query performance.

- Leverage cost monitoring tools such as budgets, alerts, and cost control policies in Google Cloud.

- Take advantage of slot reservations for more predictable pricing and cost savings.

- Regularly clean up unused tables and partitions to optimize storage costs.

A well-structured cost management strategy helps control expenses while maintaining efficient query execution.
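Some of these controls can be set directly on a table. The sketch below assumes an existing date-partitioned table with the hypothetical name my_dataset.transactions; the 90-day retention period is an arbitrary example value.

```sql
-- Hypothetical table name and retention period.
ALTER TABLE my_dataset.transactions
SET OPTIONS (
  partition_expiration_days = 90,  -- partitions older than 90 days are deleted automatically
  require_partition_filter = TRUE  -- queries must filter on the partitioning column
);
```

With require_partition_filter enabled, a query that omits a filter on the partitioning column is rejected instead of scanning the whole table.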

What are the best practices for optimizing query performance in BigQuery?

Why it's asked: To assess your ability to write efficient queries that reduce execution time and cost.

To optimize query performance in BigQuery:

- Design an efficient schema, selecting appropriate data types and avoiding excessive normalization.

- Use partitioning and clustering to reduce the amount of data scanned.

- Avoid SELECT *, retrieving only the necessary columns.

- Optimize JOIN and GROUP BY operations, ensuring they follow best practices for large-scale queries.

- Leverage caching and materialized views to store precomputed results and improve performance.

- Use approximate aggregation functions, like APPROX_COUNT_DISTINCT, when exact precision is not required.

By following these practices, you can speed up queries, reduce compute costs, and enhance overall efficiency.
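A couple of these practices can be combined in one query. The sketch below reuses the sales_table columns from the SQL section and assumes Purchase_date is a DATE column.

```sql
-- Reads only the two columns it needs instead of SELECT *,
-- filters early on the date, and accepts an approximate distinct count.
SELECT
  Purchase_date,
  APPROX_COUNT_DISTINCT(Product_Id) AS approx_unique_products
FROM sales_table
WHERE Purchase_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY)
GROUP BY Purchase_date
ORDER BY Purchase_date;
```

When exact numbers are required, COUNT(DISTINCT Product_Id) can be swapped back in, typically at the cost of more resources on large tables.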

What are BigQuery slots, and how do they impact query performance?

Why it's asked: To evaluate your understanding of BigQuery’s compute resources and their effect on query execution.

BigQuery slots are units of computational capacity used to process SQL queries. They are dynamically allocated based on workload demands, ensuring efficient resource utilization. Organizations can choose between:

- On-demand slots, which automatically scale based on query needs.

- Reserved slots, which provide more predictable performance and cost savings for consistent workloads.

Efficient slot management can reduce query execution time, optimize resource allocation, and lower overall costs.

How can you monitor and optimize BigQuery costs effectively?

Why it's asked: To assess your ability to track and control BigQuery expenses.

To monitor and optimize costs in BigQuery:

- Analyze query execution using the query history and the query execution plan to identify expensive operations.

- Enable audit logs to track usage patterns and detect inefficient queries.

- Set up budgets and cost alerts to prevent unexpected expenses.

- Optimize slot usage, leveraging reserved slots for steady workloads to reduce costs.

- Regularly clean up unused tables and partitions to avoid unnecessary storage fees.

By implementing these strategies, you can ensure cost-efficient data processing while maintaining performance and scalability.
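One way to find expensive queries is to query the jobs metadata views. This is a minimal sketch, assuming the jobs ran in the US multi-region (the region-us qualifier is an assumption to adapt) and that you have permission to list jobs in the project.

```sql
-- Assumption: jobs ran in the US multi-region; change the region qualifier as needed.
-- Lists the ten query jobs from the last 7 days that billed the most bytes.
SELECT
  user_email,
  job_id,
  total_bytes_billed,
  total_slot_ms
FROM `region-us`.INFORMATION_SCHEMA.JOBS
WHERE creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 7 DAY)
  AND job_type = 'QUERY'
ORDER BY total_bytes_billed DESC
LIMIT 10;
```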

Additional Tips for Acing Your BigQuery Interview

Here are some final tips to help you ace your upcoming BigQuery interview.

Master the fundamentals. Understand BigQuery’s architecture, including serverless design, storage-compute separation, and Dremel query engine with our introductory BigQuery course. Learn datasets, tables, views, and materialized views to manage and structure data efficiently. Our SQL materialized view tutorial will help.

Sharpen your SQL & query optimization skills. Practice BigQuery-specific SQL functions (ARRAYs, STRUCTs, window functions) for complex queries with our Getting Started with BigQuery code-along. Optimize queries with partitioning, clustering, and approximate functions to reduce costs and improve performance following Google’s official documentation.

Gain hands-on experience. Work on real-world projects using public datasets and build reports with Looker Studio, Tableau, or Power BI with DataCamp projects. Our Analyzing Electric Vehicle Charging Habits and Exploring London's Travel Network projects are both great ways to improve your SQL skills.


Josep is a freelance Data Scientist specializing in European projects, with expertise in data storage, processing, advanced analytics, and impactful data storytelling.

As an educator, he teaches Big Data in the Master’s program at the University of Navarra and shares insights through articles on platforms like Medium, KDNuggets, and DataCamp. Josep also writes about Data and Tech in his newsletter Databites (databites.tech).

He holds a BS in Engineering Physics from the Polytechnic University of Catalonia and an MS in Intelligent Interactive Systems from Pompeu Fabra University.

FAQs

What is BigQuery, and how does it differ from traditional databases?

BigQuery is a fully managed, serverless data warehouse on Google Cloud designed for large-scale data analytics. Unlike traditional on-premises relational databases, which are typically row-based and constrained by hardware limitations, BigQuery is a cloud-native, columnar storage system that offers near-infinite scalability. Its distributed architecture and pay-as-you-go pricing model make it more efficient for handling analytical workloads than conventional databases.

What are the key advantages of using BigQuery?

BigQuery offers five key advantages: (1) Scalability – automatically scales resources up or down, (2) Flexibility – infrastructure adapts to business needs, (3) High Security – built-in encryption and access control, (4) Cost-effectiveness – pay-as-you-go pricing, and (5) Data sharing and collaboration – cloud-based solutions allow seamless access across teams.

What is partitioning in BigQuery, and how does it improve performance?

Partitioning in BigQuery divides large tables into smaller, manageable pieces based on criteria like date, ingestion time, or numeric values. This improves query performance by scanning only relevant partitions instead of the entire table, reducing query costs and execution time. It is particularly beneficial for time-series analysis and large datasets.

What is the difference between a table and a view in BigQuery?

A table physically stores data, while a view is a virtual table that dynamically retrieves data based on a predefined SQL query. Views help simplify complex queries and enforce data access controls without duplicating data.

How does BigQuery handle data security?

BigQuery employs multiple layers of security: (1) Data encryption at rest and in transit, (2) Identity and Access Management (IAM) for granular access control, (3) Audit logging to monitor usage and compliance, and (4) Customer-managed encryption keys for enhanced security control.