
Businesses depend on accurate data to make decisions—or at least, they should. A failed data pipeline or unreliable data can significantly disrupt organizational processes.

Picture this scenario:

A marketing team uses customer data to create targeted ads, but due to an unnoticed data issue, outdated or incorrect information gets sent out, maybe even to the wrong people.

The error might go unnoticed until customers start filing complaints. By then, it’s too late. The damage is done—time and money have already been wasted, and even more resources will likely be needed to address the fallout.

Data observability seeks to prevent this from happening. In this article, we will discuss how. Let’s get into it!

What is Data Observability?

Data observability refers to the ability to monitor and understand the health of your data as it moves through pipelines and systems. Its primary goal is to ensure data remains accurate, consistent, and reliable—so potential issues can be detected and resolved before they cause larger problems.

For example, if you’re part of a sales team that relies on daily reports to track performance and decide on next steps, any issue with the data feeding those reports could throw off your entire strategy. Data observability helps you catch these issues as they occur, fix them, and keep things running smoothly.

At its core, data observability means monitoring your data as it moves through different systems to confirm that it is accurate and flowing as expected, so you can spot issues before they influence critical decisions.

Specifically, data observability helps:

- Maintain data quality: Automatically detect missing or inaccurate data, enabling teams to fix problems before they lead to poor decisions.

- Prevent data downtime: Monitor pipelines for anomalies (e.g., drops in volume, broken schemas) and alert teams before disruptions escalate.

- Support governance and compliance: Identify data quality issues or security breaches, helping meet privacy regulations like GDPR or CCPA.

- Build trust in data: Provide transparency into how data is collected, processed, and managed, fostering confidence in decision-making.


The Pillars of Data Observability

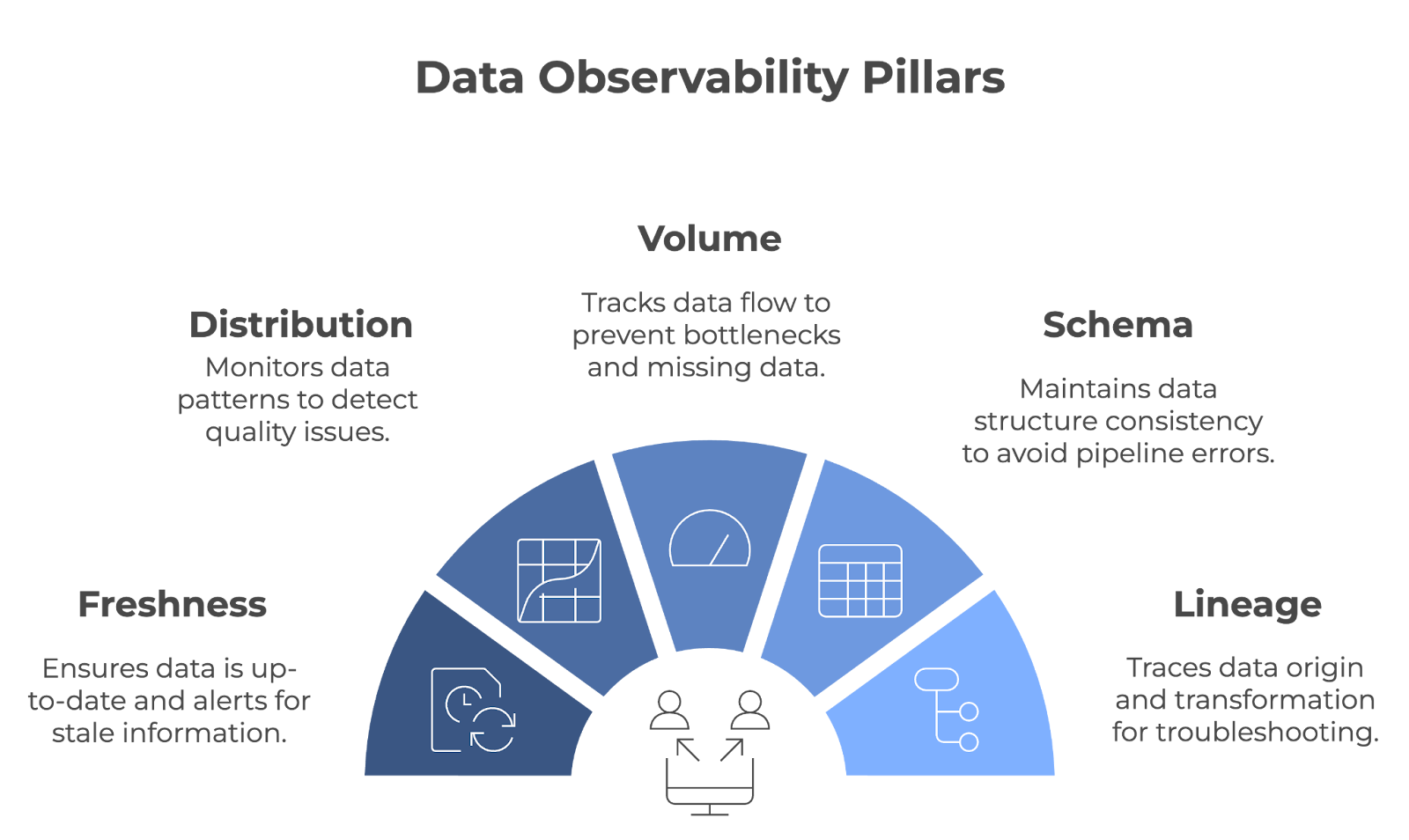

Data observability is built upon five key pillars: Freshness, distribution, volume, schema, and lineage. Together, these pillars ensure data pipelines run smoothly and that data remains accurate, complete, and reliable.

The five pillars of data observability. Image by Author (created with napkin.ai)

Let’s examine each pillar in detail!

Freshness

Freshness tells you how up-to-date your data is. It tracks when data was last updated and alerts you if it becomes stale. This pillar is important for teams relying on real-time or near-real-time data to make decisions.

- Example: Imagine a retailer adjusting inventory levels based on live sales data. If that data isn't updated on time, the company might end up with too much or too little stock. Freshness helps prevent this by making sure decisions are based on the latest information.
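A freshness check can be as simple as comparing the last update time against an allowed age. The snippet below is a minimal sketch, assuming you can already read a last-updated timestamp for the table; the function name `is_stale`, the 30-minute limit, and the timestamps are illustrative assumptions rather than part of any particular tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_stale(last_updated: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Return True when the newest update is older than the allowed age."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated > max_age


# Hypothetical values: the sales table was last loaded at 09:00 UTC,
# and the team expects a refresh at least every 30 minutes.
last_load = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
check_time = datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc)

if is_stale(last_load, timedelta(minutes=30), now=check_time):
    print("Sales data is stale; last update was", check_time - last_load, "ago")
```

Passing `now` explicitly keeps the check easy to test; in a scheduled job you would likely omit it and let the function use the current time.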

Distribution

Distribution refers to tracking the patterns and summary statistics of your data (e.g., mean, median, and standard deviation). Monitoring these values helps you spot when something looks off, which may be a sign of data quality issues. Regular distribution checks help confirm that data remains consistent over time.

- Example: An online platform would likely analyze customer engagement metrics. To check for consistency, they may monitor data distributions for metrics like session duration, conversion rates, etc. A sudden spike or drop in these metrics could signal issues with the data collection process or an underlying system error.
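One simple way to express a distribution check is a z-score test: compare today's value with the mean and standard deviation of recent history. This is only a sketch of one possible technique, not how any specific platform works; the threshold of 3 and the session-duration numbers are made-up assumptions.

```python
from statistics import mean, stdev


def distribution_shift(history: list[float], current: float,
                       z_threshold: float = 3.0) -> bool:
    """Flag `current` when it is more than `z_threshold` standard
    deviations away from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No historical variation: any different value counts as a shift.
        return current != mu
    return abs(current - mu) / sigma > z_threshold


# Hypothetical daily average session durations, in seconds.
past_days = [182.0, 175.5, 190.2, 185.1, 178.9, 181.4, 187.3]

print(distribution_shift(past_days, 184.0))  # close to the usual range
print(distribution_shift(past_days, 95.0))   # a sharp drop
```

A z-score assumes the metric is roughly stable and symmetric; for skewed or seasonal metrics, a median-based or seasonality-aware check may be a better fit.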

Volume

Data volume refers to the amount of data flowing through a system. This metric is tracked to ensure the expected amount of data is being ingested and processed without any unexpected drops or spikes. Data volume is monitored to detect issues like missing data or pipeline bottlenecks quickly.

- Example: A logistics company relying on GPS data from a fleet of vehicles would monitor the volume of location data being transmitted. If the volume of data drops unexpectedly, it could indicate communication failures with certain vehicles, resulting in tracking gaps.
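A volume check typically compares the latest record count with a baseline. The sketch below assumes the baseline is the average of recent counts and uses a 30% tolerance; both choices, along with the GPS ping counts, are illustrative assumptions.

```python
def volume_status(recent_counts: list[int], actual: int,
                  tolerance: float = 0.3) -> str:
    """Classify `actual` as 'drop', 'spike', or 'ok' relative to the
    average of `recent_counts`, allowing +/- `tolerance` deviation."""
    baseline = sum(recent_counts) / len(recent_counts)
    if actual < baseline * (1 - tolerance):
        return "drop"
    if actual > baseline * (1 + tolerance):
        return "spike"
    return "ok"


# Hypothetical hourly GPS ping counts from the vehicle fleet.
recent_hours = [12000, 11800, 12150, 11950]

print(volume_status(recent_hours, 12100))
print(volume_status(recent_hours, 7000))
```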

Schema

Schema observability tracks the structure of the data—it’s important that it remains consistent over time. When your schema changes unexpectedly, it can break your data pipelines and lead to errors in processing.

- Example: Imagine your marketing team segments customers by age and location. To keep the data pipeline from breaking, the team needs to make sure that schema changes (e.g., renamed columns or altered data types) do not cause errors in its reporting system. Any unnoticed change could lead to flawed customer segmentation.
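To make schema drift concrete, here is a minimal sketch that compares an expected schema with the one actually observed. It assumes schemas are available as plain column-to-type mappings; the column names and type labels are hypothetical.

```python
def diff_schema(expected: dict[str, str],
                observed: dict[str, str]) -> dict[str, list[str]]:
    """Report columns that are missing, newly added, or retyped."""
    shared = set(expected) & set(observed)
    return {
        "missing": sorted(set(expected) - set(observed)),
        "added": sorted(set(observed) - set(expected)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


# Hypothetical schemas: `location` was renamed to `region`,
# and `age` changed from an integer to a string.
expected = {"customer_id": "int", "age": "int", "location": "str"}
observed = {"customer_id": "int", "age": "str", "region": "str"}

changes = diff_schema(expected, observed)
print(changes)
```

Note that a rename shows up as one missing column plus one added column, so a real check would probably need extra context to tell renames apart from unrelated changes.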

Lineage

Lineage provides a clear view of where your data comes from, how it’s transformed, and where it ends up. This is key for troubleshooting and maintaining data quality in complex systems. When issues arise, lineage allows you to trace data points back through the pipeline to find the source of the problem.

- Example: In a bank, data lineage might be used to trace a specific data point, such as an account balance, back to its source. If discrepancies arise, lineage allows data engineers to identify where in the pipeline the issue occurred.
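Lineage is naturally modeled as a graph. The sketch below assumes lineage is stored as a mapping from each dataset to the datasets it is built from, and walks that mapping to list everything upstream of a report. The dataset names are invented for illustration.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to its direct upstream sources.
LINEAGE = {
    "account_balance_report": ["daily_balances"],
    "daily_balances": ["transactions_clean", "accounts"],
    "transactions_clean": ["transactions_raw"],
    "transactions_raw": [],
    "accounts": [],
}


def trace_upstream(dataset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Return every upstream dataset in breadth-first order (nearest first)."""
    seen: list[str] = []
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.append(node)
        queue.extend(lineage.get(node, []))
    return seen


print(trace_upstream("account_balance_report", LINEAGE))
```

Listing the nearest sources first gives engineers a sensible order in which to inspect datasets when a balance looks wrong.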

How Data Observability Works

So far, we have described data observability as actively monitoring data pipelines, sending alerts when issues arise, and helping teams quickly find the root cause of problems.

Let’s explore how each component works in practice!

Monitoring data pipelines

Data observability tools detect errors by continuously monitoring data pipelines. They can track many aspects of a pipeline but are typically configured to focus on the metrics or areas most critical to the organization’s needs.

These tools often integrate with technologies like Apache Kafka, Apache Airflow, or cloud-based services such as AWS Glue or Google Cloud Dataflow to gather insights at various stages of the pipeline. For example:

- Schema validation: Tools like Great Expectations can enforce schema rules, checking that incoming data adheres to predefined formats (e.g., data types, column presence, and length restrictions).

- Anomaly detection: Platforms like Monte Carlo or Databand.ai use machine learning to identify unusual patterns in data volume, processing times, or distributions that could signal an issue.

- Lineage tracking: Tools such as OpenLineage or Apache Atlas visualize how data flows through systems, making it easier to trace the impact of changes or errors.
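The idea of configuring a monitor to focus on a few critical checks can be sketched in plain Python. This is not the API of Great Expectations or any other tool mentioned above; the helper names (`has_columns`, `min_rows`, `no_nulls`, `run_checks`) and the sample batch are hypothetical.

```python
from typing import Callable

Batch = list[dict]
Check = Callable[[Batch], bool]


def has_columns(*columns: str) -> Check:
    """Every row must contain all of the given columns."""
    return lambda batch: all(all(c in row for c in columns) for row in batch)


def min_rows(n: int) -> Check:
    """The batch must contain at least `n` rows."""
    return lambda batch: len(batch) >= n


def no_nulls(column: str) -> Check:
    """The given column must never be None."""
    return lambda batch: all(row.get(column) is not None for row in batch)


def run_checks(batch: Batch, checks: dict[str, Check]) -> dict[str, bool]:
    """Run each named check and record whether it passed."""
    return {name: check(batch) for name, check in checks.items()}


# Hypothetical batch of order records with one null amount.
batch = [{"order_id": 1, "amount": 20.0}, {"order_id": 2, "amount": None}]
checks = {
    "required_columns": has_columns("order_id", "amount"),
    "at_least_one_row": min_rows(1),
    "amount_not_null": no_nulls("amount"),
}

results = run_checks(batch, checks)
print(results)
```

Keeping checks as named, composable functions makes it easy to enable only those that matter for a given pipeline.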

Alerts and automation

What is the point of detecting issues fast if nobody is informed? Data observability tools automatically alert the right people and sometimes take action based on predefined configurations.

These alerts are often configured based on thresholds or triggers, such as missing records, schema changes, or unexpected data delays. For instance:

- If a pipeline in Apache Airflow fails, an observability tool might detect a sudden drop in data volume and automatically notify the data engineering team via Slack.

- Some teams pair platforms like Datafold or Soda with automated remediation. For example, a system could roll back a dataset to its last verified state or reroute incoming data to a backup storage location using cloud tools like AWS Lambda.
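Threshold-based alerting can be sketched as a set of rules evaluated against the latest pipeline metrics. The example below only builds the alert messages and does not send anything; the `AlertRule` class, metric names, thresholds, and channel name are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    threshold: float
    direction: str  # "below" or "above"
    channel: str    # hypothetical destination, e.g. a chat channel


def triggered(rule: AlertRule, value: float) -> bool:
    """Return True when `value` crosses the rule's threshold."""
    if rule.direction == "below":
        return value < rule.threshold
    return value > rule.threshold


def build_alerts(rules: list[AlertRule], metrics: dict[str, float]) -> list[dict]:
    """Create one message per rule whose threshold was crossed."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and triggered(rule, value):
            text = f"{rule.metric}={value} is {rule.direction} threshold {rule.threshold}"
            alerts.append({"channel": rule.channel, "text": text})
    return alerts


RULES = [
    AlertRule("row_count", 1000, "below", "#data-eng"),
    AlertRule("delay_minutes", 60, "above", "#data-eng"),
]

# Hypothetical metrics from the latest pipeline run.
latest = {"row_count": 120, "delay_minutes": 15}

alerts = build_alerts(RULES, latest)
print(alerts)
```

In practice, each message would then be handed to whatever notification channel the team uses, such as a chat webhook or a paging service.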

Root cause analysis

Root cause analysis is a systematic process to identify the fundamental reason behind a problem or issue. In the context of data observability, it involves tracing a data problem back to its origin rather than just addressing its symptoms.

Data observability platforms often provide rich diagnostic capabilities, integrating with log management systems like Elasticsearch or monitoring platforms like Prometheus and Grafana to surface granular details. For example:

- A tool might trace a failed transformation in dbt back to a schema mismatch in a source database.

- By analyzing lineage data, the platform can highlight which downstream reports or dashboards are affected, enabling teams to prioritize fixes accordingly.
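Both ideas, finding the origin of a failure and listing what it affects, can be sketched on top of a lineage graph. The code below assumes lineage is a mapping from each dataset to its direct upstream sources and that you know which datasets are currently failing; all names are hypothetical.

```python
# Hypothetical lineage: each dataset maps to its direct upstream sources.
UPSTREAM = {
    "crm_source": [],
    "stg_customers": ["crm_source"],
    "dim_customers": ["stg_customers"],
    "sales_dashboard": ["dim_customers"],
    "churn_report": ["dim_customers"],
    "orders_source": [],
    "finance_dashboard": ["orders_source"],
}


def root_causes(failing: set[str], upstream: dict[str, list[str]]) -> set[str]:
    """Failing datasets whose direct upstream sources are all healthy."""
    return {
        d for d in failing
        if not any(parent in failing for parent in upstream.get(d, []))
    }


def downstream_of(dataset: str, upstream: dict[str, list[str]]) -> set[str]:
    """Every dataset that directly or indirectly depends on `dataset`."""
    impacted: set[str] = set()
    frontier = [dataset]
    while frontier:
        current = frontier.pop()
        for node, parents in upstream.items():
            if current in parents and node not in impacted:
                impacted.add(node)
                frontier.append(node)
    return impacted


failing = {"stg_customers", "dim_customers", "sales_dashboard"}
print(root_causes(failing, UPSTREAM))
print(downstream_of("stg_customers", UPSTREAM))
```

Here the staging table is the most likely origin because nothing upstream of it is failing, while the downstream set shows which reports to flag as unreliable until the fix lands.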

Tools for Data Observability

Data engineers often implement observability features in-house. However, several powerful tools available today let teams adopt data observability out of the box. Let’s take a look at the most popular ones!

Monte Carlo

Monte Carlo is a powerful data observability platform designed to help teams maintain the health of their data systems. Created by a team of former engineers from companies like LinkedIn and Facebook, the platform was built out of a desire to solve the growing challenge of data downtime. It provides:

- Automated data quality checks: Tracks schema changes, freshness, volume, and distribution to detect anomalies.

- Root cause analysis: Helps teams pinpoint the source of issues, such as broken pipelines or missing data.

- End-to-end data lineage: Visualizes data flow to understand the impact of changes or disruptions.

Monte Carlo integrates with tools like Snowflake, dbt, and Looker, making it ideal for teams working in modern data ecosystems.

Bigeye

Bigeye was founded in 2020 by a group of data engineers. It's a data observability platform designed to maintain the quality and reliability of data throughout its lifecycle.

The platform focuses on automating data quality monitoring, anomaly detection, and data issue resolution to keep data pipelines flowing smoothly and ensure that stakeholders can rely on accurate data for their decision-making. Its features include:

- Customizable monitoring: Allows teams to set specific thresholds for metrics like data completeness, freshness, and accuracy.

- Anomaly detection: Uses machine learning to identify outliers or unexpected changes in data.

- Collaboration tools: Provides alerts and reports that integrate with Slack and Jira to streamline issue resolution.

Databand

Databand is a data observability platform that helps teams proactively manage data health by providing visibility into data pipelines. Founded in 2019, Databand was created to address the increasing complexity and scale of data environments.

The platform was built to help data engineers detect issues in real time, understand the flow of data, and verify the accuracy of datasets across the organization. Its features include:

- Pipeline monitoring: Tracks metrics such as runtime, data volume, and errors across tools like Apache Airflow and Spark.

- Data impact analysis: Highlights downstream dependencies to prioritize critical fixes.

- Integration capabilities: Works with platforms like BigQuery, Kafka, and Kubernetes for end-to-end visibility.

Datadog

Datadog is a cloud-based monitoring and analytics platform that provides real-time observability. Founded in 2010 by Olivier Pomel and Alexis Lê-Quôc, Datadog was created to address the complexity of modern cloud applications.

Datadog is ideal for companies that need comprehensive monitoring across various systems. The platform is especially useful for cloud-native companies or those with complex, distributed systems. Its features include:

- Unified monitoring: Tracks metrics, logs, and traces across applications, infrastructure, and data pipelines.

- Real-time alerting: Sends customizable notifications via email, Slack, or PagerDuty.

- Integration ecosystem: Supports over 500 integrations, including AWS, Google Cloud, and Docker.

Data Observability Tools Comparison

| Tool | Focus areas | Integrations | Ideal for |
| --- | --- | --- | --- |
| Monte Carlo | Data quality, anomaly detection, data lineage | Snowflake, dbt, Looker | Modern data ecosystems needing automated observability |
| Bigeye | Data quality monitoring, anomaly detection, user-friendly UI | Slack, Jira, major data lakes, and warehouses | Industries with high data reliability needs (e.g., finance, healthcare) |
| Databand | Pipeline monitoring, data impact analysis, real-time alerts | Apache Airflow, Spark, BigQuery, Kafka | Teams managing high-volume, complex data streams |
| Datadog | Unified monitoring, real-time alerting, cloud-native support | AWS, Google Cloud, Docker, Kubernetes | Companies with distributed systems or cloud-native setups |

Best Practices for Implementing Data Observability

To effectively implement data observability, focus on these best practices:

Start with high-impact pipelines

Focus on monitoring the most critical data pipelines first—those with the most significant business impact. You can mitigate risk by making sure these pipelines are healthy while gradually building a broader observability strategy across other pipelines.

Set clear metrics and KPIs

Clearly defined metrics are key to evaluating data health: you must know what success (and failure) looks like. These metrics must align with overall company goals so that they reflect what truly matters. This clarity will help you stay focused and evaluate your progress effectively.
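One way to make such metrics explicit is to write them down as targets that can be evaluated automatically. The sketch below is only an illustration; the `Kpi` class, the metric names, and the target values are assumptions, not recommended numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    target: float
    higher_is_better: bool

    def met(self, observed: float) -> bool:
        """Return True when the observed value satisfies the target."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical data health targets.
KPIS = [
    Kpi("check_pass_rate", 0.99, higher_is_better=True),
    Kpi("minutes_to_detect_incident", 30, higher_is_better=False),
    Kpi("hours_of_data_downtime_per_month", 4, higher_is_better=False),
]

# Hypothetical values observed this month.
observed = {
    "check_pass_rate": 0.995,
    "minutes_to_detect_incident": 45,
    "hours_of_data_downtime_per_month": 2,
}

report = {kpi.name: kpi.met(observed[kpi.name]) for kpi in KPIS}
print(report)
```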

Automate data quality monitoring

Automated monitoring and alerting systems reduce manual intervention and improve response times. They can detect issues early and trigger immediate actions to prevent data downtime. Make automation a priority.

Integrate observability with existing processes

Embedding observability into your daily processes enables you to respond faster and more effectively, thus making data management more proactive and less reactive.

Conclusion

Data observability is key for building high-quality, reliable data pipelines. By continuously monitoring critical aspects of data, teams can identify and address issues before they impact business outcomes. As data continues to drive business decisions, observability will remain a core element for managing and optimizing data pipelines.

To fully grasp the importance of observability and effectively implement it, it’s necessary to understand related concepts like data architecture, governance, and management. If you're looking to deepen your knowledge:

- Understanding Modern Data Architecture provides insights into how data is structured and managed within organizations.

- Explore Data Governance Concepts to understand how policies and processes ensure data quality, security, and compliance.

- Learn about the broader principles of managing data effectively with Data Management Concepts.

Finally, if you’re new to the field, Understanding Data Engineering is a great starting point to explore how data pipelines are designed and maintained!


FAQs

What’s the difference between data observability and data monitoring?

Data monitoring focuses on tracking specific metrics or systems, while data observability gives you a deeper understanding of how data behaves across its lifecycle. In particular, data observability provides insights into the root cause of data issues, offering a more comprehensive view than monitoring alone.

What industries benefit the most from data observability?

Industries with high data reliability needs—such as finance, healthcare, e-commerce, and logistics—benefit significantly from data observability. These industries rely heavily on accurate and timely data for compliance, customer satisfaction, and operational efficiency.

How do data observability tools handle real-time vs. batch data pipelines?

Data observability tools can monitor both real-time and batch pipelines. For real-time data, they track metrics like latency, throughput, and anomalies as they occur. For batch pipelines, they ensure data completeness, validate schema compliance, and check for anomalies after each run.
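To illustrate the difference, here is a rough sketch of the kinds of metrics involved. It assumes streaming events carry both an event time and a processing time, and that a batch run knows how many rows it expected; the function names and sample values are hypothetical.

```python
from datetime import datetime, timedelta


def stream_metrics(events: list[tuple[datetime, datetime]],
                   window: timedelta) -> dict[str, float]:
    """Compute throughput and worst-case latency for the
    (event_time, processed_time) pairs seen within `window`."""
    latencies = [(done - created).total_seconds() for created, done in events]
    return {
        "throughput_per_sec": len(events) / window.total_seconds(),
        "max_latency_sec": max(latencies, default=0.0),
    }


def batch_completeness(expected_rows: int, loaded_rows: int) -> float:
    """Fraction of expected rows that a batch run actually loaded."""
    return loaded_rows / expected_rows if expected_rows else 1.0


# Hypothetical streaming events observed in a one-minute window.
base = datetime(2024, 1, 1, 12, 0, 0)
events = [
    (base, base + timedelta(seconds=2)),
    (base + timedelta(seconds=10), base + timedelta(seconds=11)),
    (base + timedelta(seconds=20), base + timedelta(seconds=25)),
]

print(stream_metrics(events, timedelta(seconds=60)))
print(batch_completeness(expected_rows=10_000, loaded_rows=9_500))
```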

What are some challenges in implementing data observability?

Some common challenges include selecting the right tools for your data stack, aligning observability practices with business goals, and managing the additional overhead of monitoring complex pipelines. Ensuring team buy-in and allocating resources for setup and maintenance are also critical for successful implementation.