DevOps is more than just a methodology. It is a cultural shift that blends software development (Dev) with IT operations (Ops) to create a more efficient, collaborative workflow.

The goal is to break down traditional silos between teams, enabling shared responsibility for software performance, security, and delivery.

Having adopted DevOps principles myself, I can say they really transform how teams build and deploy software. That’s why, in this guide, I’ll walk you through a step-by-step roadmap to help you master DevOps.

> To get started with foundational DevOps concepts, check out the DevOps Concepts course.

Understanding the DevOps Mindset

To understand the DevOps mindset, it is essential to first define DevOps and recognize how it differs from traditional approaches.

What is DevOps?

DevOps is a philosophy that integrates development and operations teams to improve software delivery speed, reliability, and efficiency.

Traditional software development models, such as the Waterfall approach, often led to bottlenecks, slow deployments, and miscommunication between teams.

DevOps emerged as a solution to these challenges, enabling organizations to adopt a more agile and responsive approach to software delivery.

Unlike traditional models where developers write code and pass it off to operations for deployment, DevOps encourages close collaboration, shared responsibilities, and automation.

DevOps can help teams continuously integrate and deliver software with minimal disruptions.

This, in turn, ensures that applications remain scalable, secure, and high-performing.

> To better understand how DevOps compares with MLOps, check out MLOps vs DevOps: Differences, Overlaps, and Use Cases. You can also explore how automation scales in machine learning with this course on Fully Automated MLOps.

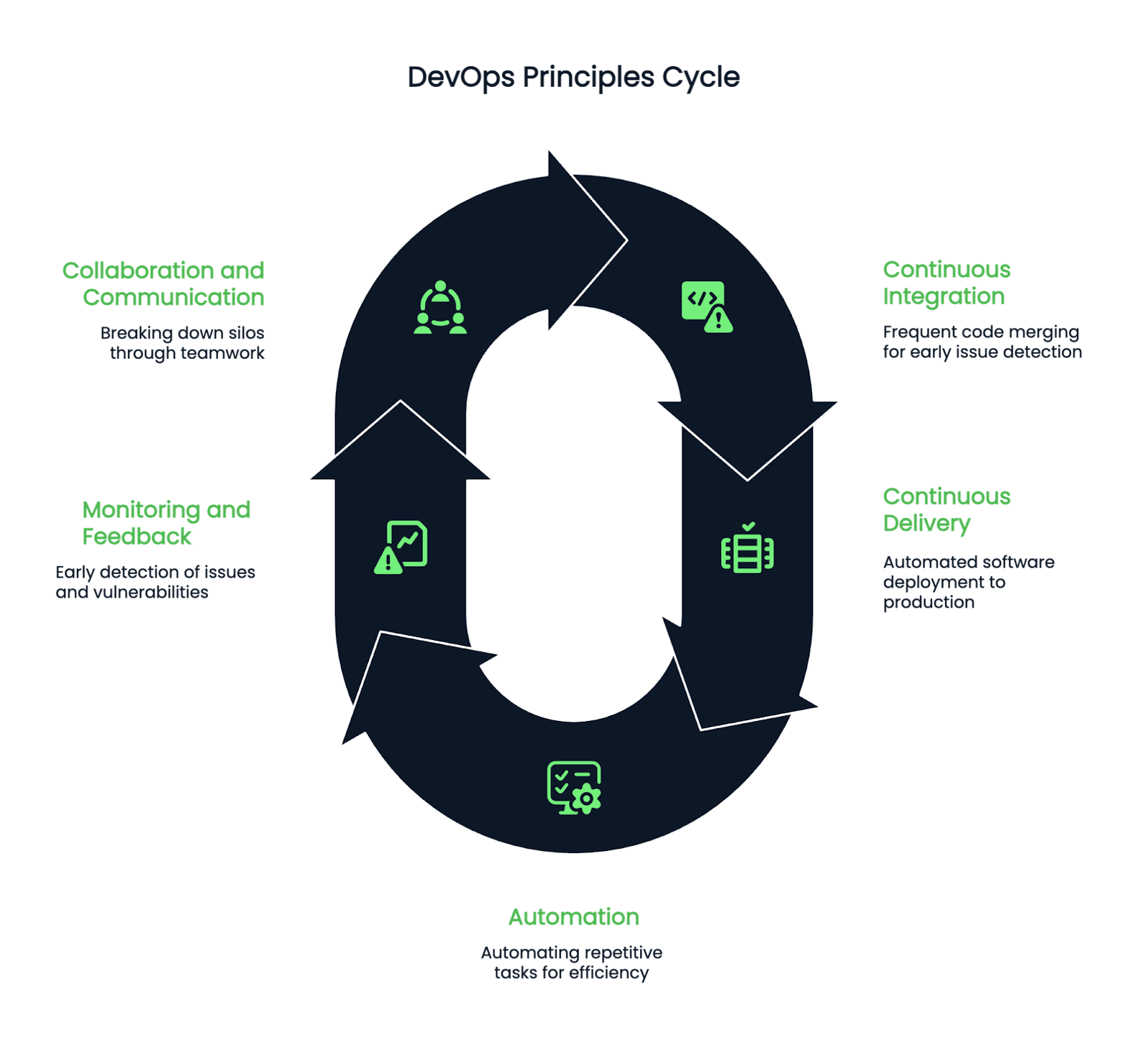

Key principles of DevOps

DevOps is guided by several fundamental principles that define its core practices:

- Continuous Integration (CI): Developers frequently merge code changes into a shared repository, which allows for early detection of integration issues and reduces potential deployment failures.

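- Continuous Delivery (CD): Software is built, tested, and prepared for release automatically, which ensures that updates can be pushed to production environments with minimal human intervention. When the production release itself is also automated, the practice is usually called continuous deployment.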

- Automation: Repetitive tasks such as testing, configuration management, and infrastructure provisioning are automated to improve efficiency and reduce human errors.

- Monitoring and feedback: Observability tools enable teams to detect performance issues, security vulnerabilities, and system anomalies early in the development process.

- Collaboration and communication: Strong teamwork, transparency, and knowledge-sharing help break down silos between development and operations teams.

Image containing the five stages of the DevOps lifecycle. Created using Napkin AI.

Benefits of adopting DevOps

Adopting DevOps in your workflow can benefit both your development and operations teams.

- One key benefit is faster release cycles. Automating CI/CD pipelines helps teams deploy updates quickly and efficiently without extensive delays.

- Additionally, DevOps promotes higher software quality, as automated testing and continuous monitoring help catch bugs early in the development cycle.

- Another key advantage is reduced failure rates. Since DevOps emphasizes incremental changes and automated rollbacks, teams can swiftly recover from errors, minimizing downtime and operational risks.

- Finally, DevOps fosters better team alignment, as developers, testers, security engineers, and operations teams work collaboratively to ensure a smooth and efficient development process.

Prerequisite Knowledge and Skills

To succeed in DevOps, foundational knowledge in scripting, operating systems, and version control systems is important.

Basic programming/scripting

A foundational knowledge of programming and scripting is crucial for anyone looking to enter the DevOps field.

Scripting languages like Python, Bash, and PowerShell are widely used to automate repetitive tasks, such as software deployments, server configurations, and log analysis.

Python, in particular, is a powerful and versatile language commonly used for infrastructure automation, configuration management, and API integrations.

Bash scripting is equally important, especially for Linux-based environments.

Understanding how to write shell scripts, manage system processes, and automate command-line tasks can significantly enhance your efficiency as a DevOps engineer.

PowerShell is beneficial for those working in Windows environments. It provides automation capabilities for managing servers, Active Directory, and cloud services.
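As a small illustration of the kind of automation described in this section, here is a minimal Python sketch of log analysis. The log format (`<date> <time> <LEVEL> <message>`), the sample lines, and the function name `count_log_levels` are assumptions made for the example, not a standard.

```python
from collections import Counter


def count_log_levels(lines):
    """Count how often each log level appears in an iterable of log lines.

    Assumes a hypothetical format: "<date> <time> <LEVEL> <message>".
    Lines with fewer than three fields are skipped.
    """
    counts = Counter()
    for line in lines:
        parts = line.split(maxsplit=3)
        if len(parts) >= 3:
            counts[parts[2]] += 1
    return counts


# Hypothetical sample data standing in for a real log file
sample_lines = [
    "2024-01-01 12:00:00 INFO service started",
    "2024-01-01 12:00:05 ERROR database connection refused",
    "2024-01-01 12:00:09 INFO retrying connection",
]

summary = count_log_levels(sample_lines)
print(summary.most_common())
```

In practice, the same function could be fed an open file object instead of a list, since it only needs an iterable of lines.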

Understanding of operating systems

Since DevOps professionals manage infrastructure and cloud environments, a strong grasp of operating systems is essential.

Linux is the preferred operating system in most DevOps workflows due to its flexibility, performance, and extensive support for open-source tools.

Understanding Linux file systems, process management, user permissions, networking, and package management will help you navigate complex infrastructure challenges.

Knowledge of networking concepts such as DNS, HTTP, firewalls, and load balancing is also needed.

DevOps engineers often work with cloud-based and on-premises networks, which makes it essential to understand network security, IP addressing, and troubleshooting network-related issues.
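To make IP addressing a little more concrete, the following sketch uses Python's standard `ipaddress` module to split a network into subnets and check which subnet an address belongs to. The `10.0.0.0/24` range and the helper name `find_subnet` are assumptions chosen for illustration.

```python
import ipaddress

# Hypothetical private network, split into four equally sized /26 subnets
network = ipaddress.ip_network("10.0.0.0/24")
subnets = list(network.subnets(new_prefix=26))


def find_subnet(address, candidates):
    """Return the first subnet that contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for subnet in candidates:
        if ip in subnet:
            return subnet
    return None


print([str(s) for s in subnets])
print(find_subnet("10.0.0.70", subnets))
```

Here `10.0.0.70` falls into `10.0.0.64/26`, while an address outside the `/24` range returns `None`.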

Version control systems

Version control is an essential part of modern software development, and Git is the most widely used version control system.

DevOps engineers must be proficient in Git to manage source code efficiently, track changes, and collaborate with teams using platforms like GitHub, GitLab, or Bitbucket.

Key concepts to master include branching strategies, merging, pull requests, and resolving conflicts.

Learning GitOps, an extension of DevOps that uses Git repositories as the single source of truth for infrastructure automation, can also be beneficial.

> If you are curious about integrating GitOps into your workflow, check out the MLOps Concepts course.

Infrastructure and Configuration Management

Managing infrastructure, configurations, and secure access to sensitive information effectively is crucial for successful DevOps practices.

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is a DevOps practice that allows teams to define and manage infrastructure using code rather than manual configurations.

This approach ensures consistency, scalability, and repeatability across environments.

Popular IaC tools include Terraform and AWS CloudFormation. Terraform is a cloud-agnostic tool that allows engineers to provision infrastructure across multiple cloud providers using declarative configuration files.

AWS CloudFormation, on the other hand, is specifically designed to manage AWS resources through JSON or YAML templates.

Implementing IaC can help teams automate infrastructure provisioning, reduce configuration drift, and enhance collaboration through version-controlled infrastructure definitions.
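The idea behind configuration drift can be sketched in a few lines of Python. This is a toy model, not how Terraform or CloudFormation work internally: the dictionaries, keys, and the `detect_drift` function are all hypothetical stand-ins for a version-controlled definition and the state actually observed in an environment.

```python
def detect_drift(desired, actual):
    """Compare desired and actual settings and return the differences.

    Returns a dict mapping each drifted key to a (desired, actual) tuple.
    Keys missing on one side are reported with None on that side.
    """
    drift = {}
    for key in sorted(set(desired) | set(actual)):
        want = desired.get(key)
        have = actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical values: "desired" plays the role of the definition kept in Git
desired_state = {"instance_type": "small", "instance_count": 3, "port": 443}
actual_state = {"instance_type": "small", "instance_count": 2, "port": 443, "debug": True}

print(detect_drift(desired_state, actual_state))
```

The result flags `instance_count` (3 wanted, 2 found) and the unexpected `debug` setting, which is the kind of mismatch IaC tooling is meant to surface and correct.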

Configuration management

Configuration management tools help maintain system consistency by automating software installations, system updates, and environment configurations.

Popular tools in this category include Ansible, Chef, and Puppet.

Ansible is a lightweight, agentless automation tool that uses YAML-based playbooks to configure servers and deploy applications.

Chef and Puppet, while more complex, provide powerful automation capabilities for managing large-scale infrastructure with declarative configurations.

Secrets and access management

Security is a critical aspect of DevOps, and managing sensitive information such as API keys, passwords, and certificates is essential.

HashiCorp Vault is a widely used tool for securely storing and managing secrets, which allows teams to control access to sensitive data through authentication policies.

AWS Secrets Manager provides similar functionality for cloud-native environments, helping organizations manage credentials securely.

Additionally, supplying secrets through environment variables at runtime (rather than hardcoding them in source code) and integrating role-based access control (RBAC) can further enhance security within DevOps pipelines.
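As a minimal sketch of the environment-variable approach, the snippet below reads a secret at runtime and fails fast if it is missing. The variable name `DEMO_API_KEY`, its demo value, and both helper functions are assumptions for the example. A real deployment would have the value injected by the platform or a secrets manager rather than set in code.

```python
import os


def get_required_secret(name):
    """Read a secret from the environment and fail fast if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required secret: {name}")
    return value


def mask(secret, visible=2):
    """Return a masked form of the secret that is safer to write to logs."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return secret[:visible] + "*" * (len(secret) - visible)


# Demo only: a real value would be injected by the deployment environment
os.environ.setdefault("DEMO_API_KEY", "abc12345")

api_key = get_required_secret("DEMO_API_KEY")
print(mask(api_key))
```

Failing at startup when a secret is absent tends to be easier to diagnose than an authentication error deep inside a pipeline run.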

Continuous Integration and Continuous Delivery (CI/CD)

Implementing effective CI/CD practices requires understanding core principles, leveraging suitable tools, and integrating automated testing.

CI/CD fundamentals

CI/CD is at the heart of DevOps: it ensures that code changes are continuously integrated, tested, and deployed.

Continuous Integration (CI) involves automating code integration from multiple developers, running automated tests, and generating build artifacts.

Continuous Delivery (CD) extends CI by automating software deployment to staging and production environments.

An effective CI/CD pipeline consists of several stages: source code integration, automated testing, build and packaging, artifact storage, and deployment.

This pipeline helps teams release software quickly, safely, and reliably.
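The stage-by-stage flow described above can be modeled with a short Python sketch. It is only a conceptual illustration: the stage names mirror the list in this section, while `run_pipeline` and the placeholder steps are hypothetical and do not correspond to any specific CI/CD tool.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure.

    Each callable returns True on success. Returns a tuple of the names of
    the stages that completed and the name of the failed stage (or None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None


# Placeholder steps: a real pipeline would invoke a test runner, build tool, etc.
stages = [
    ("source", lambda: True),
    ("test", lambda: True),
    ("build", lambda: True),
    ("store_artifact", lambda: True),
    ("deploy", lambda: True),
]

print(run_pipeline(stages))
```

The key property is that a failing stage halts everything after it, so a broken build or failed test never reaches deployment.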

> For an in-depth look at automating your CI/CD workflows, explore the CI/CD for Machine Learning course.

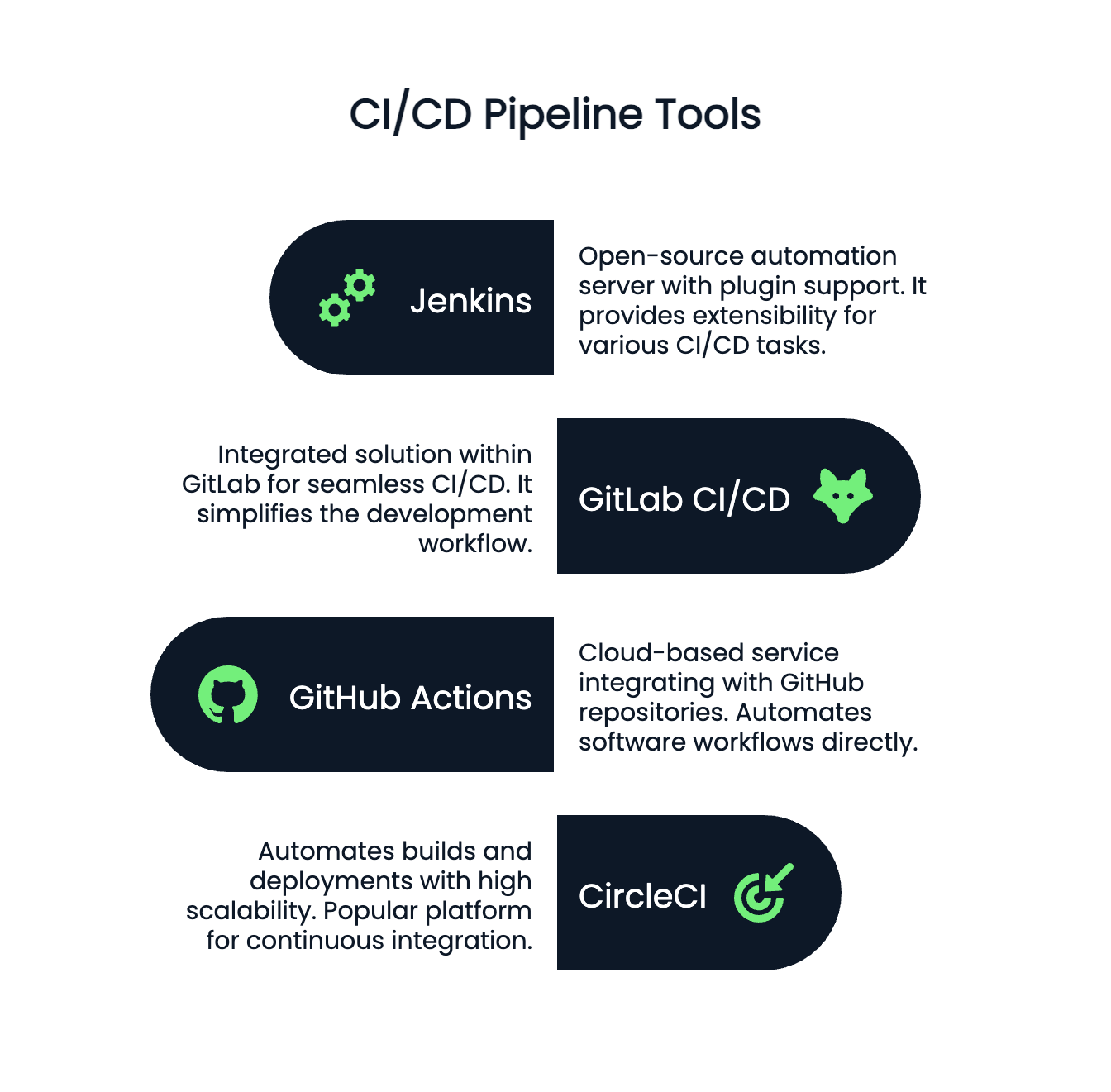

CI/CD tools

Several tools are commonly used to implement CI/CD pipelines:

- Jenkins: An open-source automation server that supports plugin-based extensibility.

- GitLab CI/CD: A built-in solution within GitLab that provides a seamless integration experience.

- GitHub Actions: A cloud-based CI/CD service that integrates directly with GitHub repositories.

- CircleCI: A popular CI/CD platform that automates builds and deployments with high scalability.

Image highlighting Jenkins, GitHub Actions, GitLab CI/CD, and CircleCI as key tools for automation and continuous integration. Created using Napkin AI.

Building and testing automation

Automated testing ensures that code changes do not introduce bugs or vulnerabilities.

Unit tests, integration tests, and functional tests should be integrated into the CI/CD pipeline to catch errors early.
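For a sense of what a unit test in such a pipeline might look like, here is a minimal sketch using Python's built-in `unittest` module. The function under test, `parse_version`, and the helper `run_tests` are made up for the example.

```python
import unittest


def parse_version(text):
    """Parse a "major.minor.patch" string into a tuple of integers."""
    parts = text.strip().split(".")
    if len(parts) != 3:
        raise ValueError(f"expected major.minor.patch, got {text!r}")
    return tuple(int(p) for p in parts)


class ParseVersionTests(unittest.TestCase):
    def test_parses_valid_version(self):
        self.assertEqual(parse_version("1.4.2"), (1, 4, 2))

    def test_rejects_incomplete_version(self):
        with self.assertRaises(ValueError):
            parse_version("1.4")


def run_tests():
    """Run the test case and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParseVersionTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print(run_tests())
```

A CI job would typically run such tests on every push and mark the build as failed if any of them do not pass.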

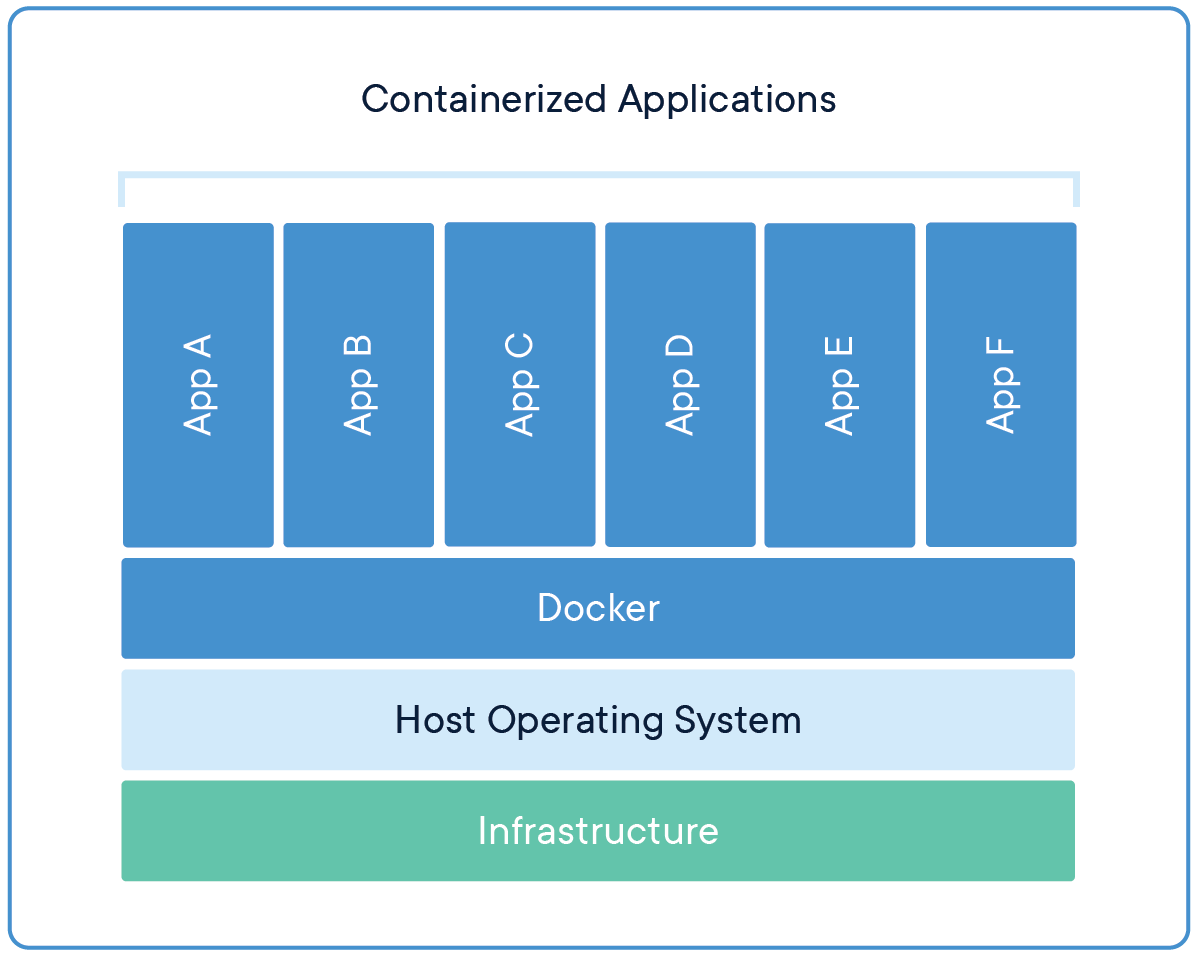

Containerization and Orchestration

Effectively managing containerized applications requires proficiency with Docker, orchestration through Kubernetes, and leveraging tools like Helm and Operators.

Docker basics

Containerization is a crucial part of the modern DevOps landscape.

It allows developers to package applications and their dependencies into portable containers that can run consistently across various environments.

Docker is the most popular containerization tool, which enables teams to build, ship, and run applications in isolated environments.

Image containing Docker architecture showing containerized applications running on Docker. Source: Docker Resources

A Docker image is a lightweight, standalone package that includes everything the software needs to run: the application code, runtime, system libraries, and settings. A container is a running instance of an image.

Docker images are defined using a Dockerfile, which outlines the steps to build the image.

Once the image is built, it can be stored in a Docker registry and shared with others.

Mastering Docker involves understanding how to create Docker images, write Dockerfiles, and run containers locally and in cloud environments.

Docker Compose, a tool for defining and running multi-container Docker applications, also helps manage complex systems with several interconnected services.

> For a step-by-step breakdown of how to learn Docker, this beginner's guide is a helpful resource.

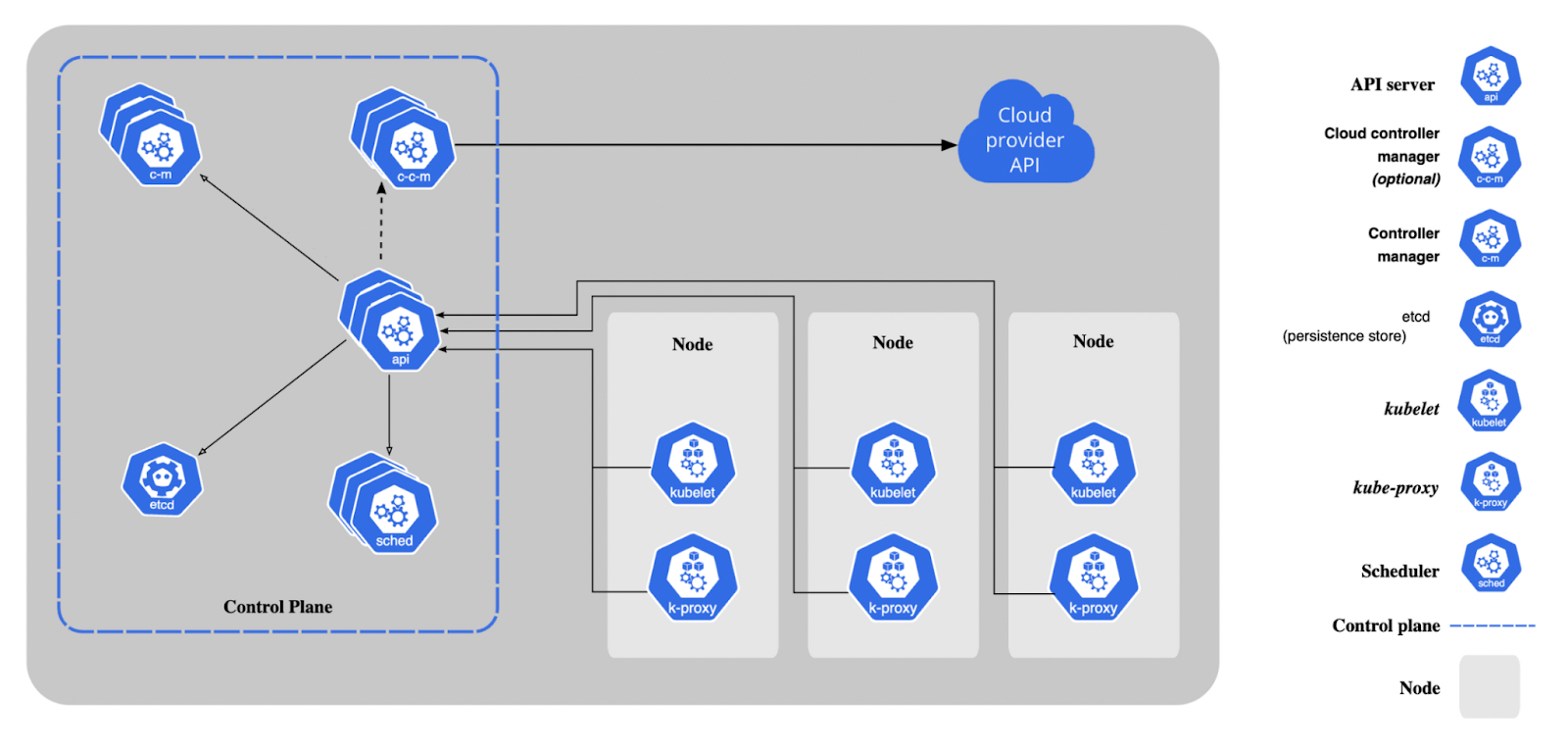

Kubernetes fundamentals

While Docker creates and manages individual containers, Kubernetes (K8s) is the orchestration platform that helps scale and manage containerized applications in production.

Kubernetes automates the deployment, scaling, and management of containerized applications, which makes it easier to handle large, distributed systems.

> If you're new to Kubernetes, this beginner-friendly course offers a hands-on introduction to container orchestration.

Kubernetes organizes containers into pods, which are the smallest deployable units in Kubernetes.

Each pod can contain one or more containers, and Kubernetes manages the pods across a cluster of machines.

Key concepts include services (to expose containers), deployments (to manage the lifecycle of applications), and namespaces (to organize resources within a cluster).

Image containing Kubernetes architecture showing the control plane components. Source: Kubernetes Docs

Kubernetes also provides advanced features like auto-scaling, self-healing, and rolling updates.

Learning Kubernetes is essential for DevOps engineers working with microservices architectures, as it ensures that applications are highly available, scalable, and resilient.

> If you want to understand how Kubernetes compares with Docker, read Kubernetes vs Docker: Differences Every Developer Should Know.

Helm and operators

For teams managing complex Kubernetes environments, Helm and Kubernetes Operators offer solutions to simplify and automate application management.

Helm is a package manager for Kubernetes, which allows you to define, install, and upgrade Kubernetes applications using Helm charts.

These charts are reusable configurations that define how Kubernetes resources should be deployed.

On the other hand, Kubernetes Operators are custom controllers that automate the management of complex, stateful applications in Kubernetes.

Operators extend Kubernetes to manage specific application states, such as deploying a database, handling backups, or scaling an application based on custom metrics.

Helm and Operators are both essential for managing sophisticated workloads and maintaining high availability and consistency in containerized environments.
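Controllers, including Operators, are generally built around a reconciliation loop: compare the desired state with the observed state and act on the difference. The sketch below models one pass of that idea in plain Python. It does not use the Kubernetes API, and the `reconcile` function and the `app-N` naming scheme are assumptions for illustration.

```python
def reconcile(desired_replicas, running):
    """Return the actions needed to move the running set to the desired size.

    `running` is a list of instance names. Missing instances are started
    under the lowest unused "app-N" names; surplus instances are stopped
    from the end of the list.
    """
    actions = []
    missing = desired_replicas - len(running)
    if missing > 0:
        index = 0
        while missing > 0:
            name = f"app-{index}"
            if name not in running:
                actions.append(("start", name))
                missing -= 1
            index += 1
    else:
        for name in running[desired_replicas:]:
            actions.append(("stop", name))
    return actions


print(reconcile(3, ["app-0", "app-2"]))
print(reconcile(1, ["app-0", "app-1", "app-2"]))
```

A real controller would run this comparison repeatedly, reacting to changes in the cluster, which is what gives Kubernetes its self-healing behavior.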

Cloud Platforms and Services

Navigating modern cloud environments involves understanding major cloud providers, mastering core cloud services, and effectively managing multi-cloud or hybrid strategies.

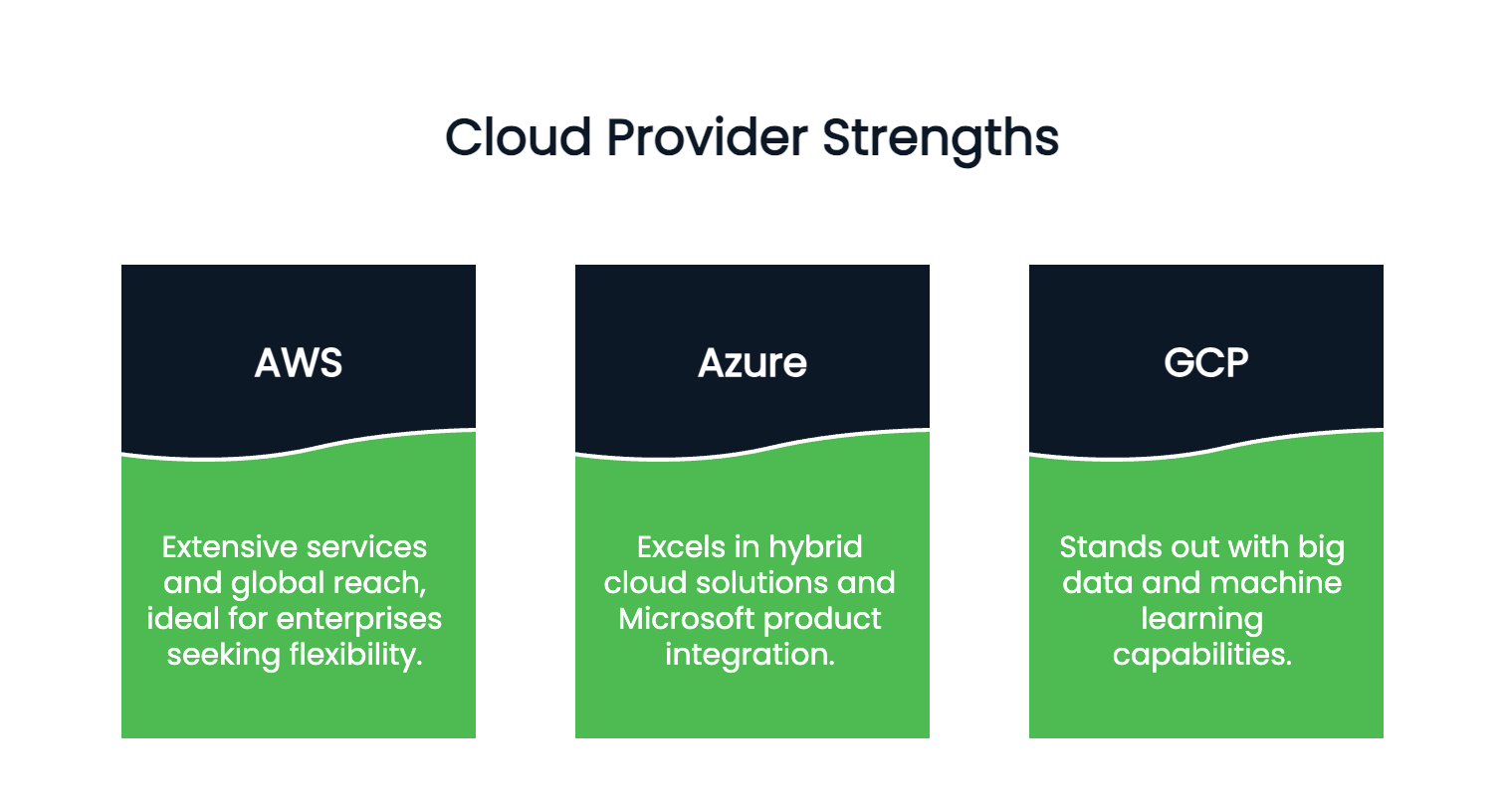

Cloud providers

The rise of cloud computing has transformed how organizations develop, deploy, and scale applications.

Popular cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer a wide range of services that support DevOps practices, from compute power and storage to networking and security.

Each cloud provider has its unique offerings and strengths.

- AWS is known for its extensive suite of services and global reach, making it ideal for enterprises looking for flexibility and scalability.

- Azure excels in hybrid cloud solutions and integration with Microsoft products.

- GCP stands out in big data and machine learning capabilities.

Image contrasting the strengths of the main cloud providers. Created with Napkin AI.

As a DevOps engineer, it's important to have a solid understanding of at least one cloud provider and its core services.

Having familiarity with multiple cloud platforms can also be beneficial, especially for organizations adopting multi-cloud strategies.

> Learn to build and deploy apps with Azure DevOps in this step-by-step tutorial.

Core cloud services to know

Cloud providers offer a variety of services that support DevOps workflows, including compute, storage, networking, and more.

Here are some essential cloud services you should be familiar with:

- Compute services: These services allow you to run applications and workloads in the cloud. AWS EC2, Azure Virtual Machines, and Google Compute Engine are examples of compute services that provide scalable virtual machines.

- Storage services: Cloud storage services like AWS S3, Azure Blob Storage, and Google Cloud Storage offer scalable and durable storage solutions for data. Understanding how to manage and secure data in the cloud is crucial for any DevOps engineer.

- Networking services: Cloud networking services, such as VPC (Virtual Private Cloud) in AWS, Azure Virtual Network, and Google Cloud VPC, help configure and manage networking resources.

You will need to be familiar with configuring subnets, load balancers, and VPNs to ensure seamless communication between services.

Multi-cloud and hybrid cloud strategies

Many organizations today adopt multi-cloud or hybrid cloud strategies to avoid vendor lock-in and take advantage of the unique strengths of each cloud provider.

In a multi-cloud environment, organizations use services from multiple cloud providers, while in a hybrid cloud setup, they combine on-premises infrastructure with cloud-based resources.

DevOps engineers need to understand how to manage and monitor multi-cloud environments effectively.

Tools like Terraform and Kubernetes can help automate infrastructure provisioning and orchestration across cloud platforms, ensuring consistent deployments and configurations.

Monitoring, Logging, and Alerting

Maintaining reliable and high-performing systems requires mastering observability practices, utilizing effective monitoring tools, and centralizing logs for analysis.

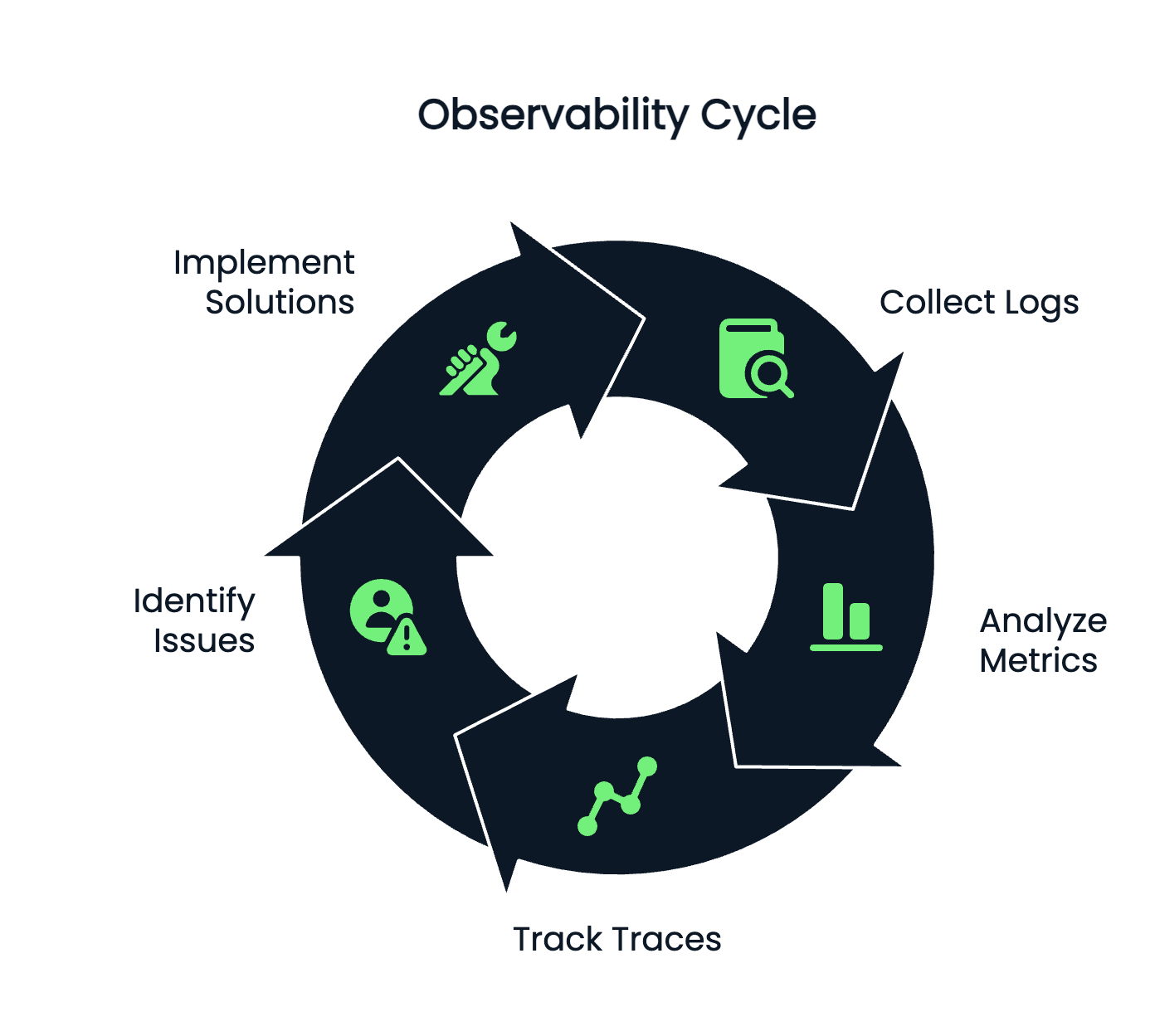

Observability concepts

Monitoring, logging, and alerting are critical aspects of DevOps that ensure the health and performance of applications and infrastructure.

Observability is the practice of gaining insights into the internal workings of a system based on external outputs, such as logs, metrics, and traces.

- Logs capture detailed information about system events and errors. They are essential for troubleshooting and debugging issues.

- Metrics provide quantitative data about the performance and behavior of applications, such as CPU usage, memory utilization, and response times.

- Traces offer a detailed view of the flow of requests through distributed systems, helping teams track the performance of individual services and identify bottlenecks.

Together, these three pillars (logs, metrics, and traces) form the foundation of observability.

They allow teams to proactively detect and resolve issues, which ensures a smooth and reliable user experience.
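To show how a log entry and a metric can be produced together, here is a minimal sketch that times a function call and emits a structured JSON log line. The field names (`event`, `status`, `duration_ms`) and the `timed_call` helper are assumptions, not a required schema.

```python
import json
import time


def timed_call(name, func, *args):
    """Call func, measure how long it takes, and return (result, log_line).

    The log line is a JSON string carrying a metric (duration_ms) and a
    status field, so a log aggregator could parse it without regexes.
    """
    start = time.perf_counter()
    status = "ok"
    result = None
    try:
        result = func(*args)
    except Exception as exc:
        status = f"error: {exc}"
    duration_ms = round((time.perf_counter() - start) * 1000, 3)
    record = {"event": name, "status": status, "duration_ms": duration_ms}
    return result, json.dumps(record, sort_keys=True)


result, line = timed_call("add", lambda a, b: a + b, 2, 3)
print(line)
```

Structured output like this is easier to index and query than free-form text, which matters once logs from many services are centralized.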

Image showing the observability lifecycle. Created with Napkin AI.

Monitoring tools

Monitoring tools enable teams to track the health and performance of systems in real-time.

Popular monitoring tools used in DevOps include:

- Prometheus: An open-source tool for monitoring and alerting, commonly used with Kubernetes. It collects and stores time-series data, allowing you to track system performance.

- Grafana: Often used alongside Prometheus, Grafana is a visualization tool that allows you to create real-time dashboards to monitor application metrics.

- Datadog: A cloud-based monitoring and analytics platform that provides infrastructure and application monitoring, log management, and user-experience monitoring.

- AWS CloudWatch: A monitoring service provided by AWS that helps you track the performance of AWS resources, set up alarms, and create custom metrics.
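Alerting rules in tools like these often boil down to "fire when a metric stays above a threshold for some time". The following sketch expresses that logic in plain Python. The readings, the threshold, and the `should_alert` function are hypothetical, and real tools express such rules in their own configuration formats.

```python
def should_alert(samples, threshold, min_consecutive):
    """Return True if `min_consecutive` samples in a row exceed the threshold."""
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False


# Hypothetical CPU readings in percent, one per scrape interval
cpu_percent = [42, 91, 95, 60, 92, 93, 97]

print(should_alert(cpu_percent, threshold=90, min_consecutive=3))
```

Requiring several consecutive breaches is a common way to avoid paging someone for a single short spike.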

> To ensure efficient data pipeline deployment, take a look at the CI/CD in Data Engineering: A Guide for Seamless Deployment.

Log aggregation tools

Log aggregation tools help collect and centralize logs from various sources, which makes it easier to search, analyze, and visualize log data.

Some of the most widely used log aggregation tools include:

- ELK Stack (Elasticsearch, Logstash, Kibana): A powerful suite of tools that allows you to collect, store, and analyze log data. Elasticsearch is used for storing logs, Logstash for processing them, and Kibana for visualizing and exploring the data.

- Fluentd: An open-source data collector that unifies log data collection and transport across multiple sources. It is often used alongside Elasticsearch and Kibana for centralized logging.

Effective logging and monitoring are essential for maintaining system reliability, reducing downtime, and improving performance.

Security and Compliance

Ensuring robust security and compliance in DevOps requires integrating security practices throughout the pipeline, proactively identifying vulnerabilities, and automating adherence to regulatory standards.

DevSecOps

Security is a top priority in DevOps, and DevSecOps integrates security into every phase of the DevOps pipeline.

Rather than treating security as a separate function handled by a different team, DevSecOps encourages developers, operations engineers, and security experts to work together to ensure that security is embedded in the development process.

Automating security testing, code reviews, and vulnerability scanning enables DevSecOps teams to catch issues early, which reduces the risk of breaches and vulnerabilities in production.

Vulnerability scanning

DevOps engineers must implement security best practices, such as vulnerability scanning, to identify weaknesses in code and infrastructure.

Tools like Snyk, Trivy, and OWASP Dependency-Check help scan codebases and container images for known vulnerabilities, enabling teams to fix security issues before they reach production.
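Conceptually, dependency scanning compares what a project uses against a list of known-affected versions. The sketch below is a deliberately simplified model of that comparison. The package names, versions, and advisory data are entirely made up, and real scanners rely on maintained vulnerability databases and version-range matching rather than exact lookups.

```python
def find_vulnerable(dependencies, advisories):
    """Return sorted (package, version) pairs that appear in the advisories.

    `dependencies` maps package name to pinned version; `advisories` maps
    package name to a set of versions known to be affected.
    """
    hits = []
    for package, version in dependencies.items():
        if version in advisories.get(package, set()):
            hits.append((package, version))
    return sorted(hits)


# Entirely hypothetical package names and versions, for illustration only
dependencies = {"examplelib": "1.2.0", "demo-http": "2.0.1", "toyparser": "0.9.3"}
advisories = {"examplelib": {"1.1.0", "1.2.0"}, "toyparser": {"0.8.0"}}

print(find_vulnerable(dependencies, advisories))
```

Running a check like this as a pipeline stage means a flagged dependency can block a release before it reaches production.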

Compliance automation

Compliance is an important consideration in regulated industries, and DevOps teams must ensure that applications and infrastructure meet industry standards and legal requirements.

Compliance automation tools help track and enforce policies, conduct audits, and generate reports.

Frameworks like the CIS Benchmarks (from the Center for Internet Security) and SOC 2 (System and Organization Controls 2) provide guidelines for secure and compliant DevOps practices.

Soft Skills and Collaboration Tools

Succeeding in DevOps requires strong collaboration, effective communication, proficiency with Agile practices, and the ability to clearly document processes and knowledge.

Agile and Scrum practices

DevOps is closely tied to Agile methodologies, as both emphasize continuous improvement, collaboration, and iterative development.

Understanding Agile practices like Scrum and Kanban can significantly enhance your ability to manage development cycles and prioritize work effectively.

Scrum focuses on short, time-boxed iterations (called sprints) that deliver incremental improvements.

DevOps teams benefit from Agile principles by working in shorter cycles, ensuring faster delivery and more frequent releases.

Collaboration tools

DevOps is all about collaboration, and having the right tools for communication and project management is essential.

Popular collaboration tools include:

- Slack: A team messaging platform that helps facilitate communication between developers, operations, and other stakeholders.

- Jira: A project management tool used for tracking tasks, user stories, and issues in Agile workflows.

- Confluence: A collaboration platform for creating and sharing documentation, such as runbooks, deployment guides, and incident reports.

- Notion: A flexible workspace tool for organizing documentation, meeting notes, and project timelines.

Documentation and communication

Clear and effective documentation is key to a successful DevOps culture.

Whether it’s writing runbooks for incident response or documenting system architectures, detailed documentation ensures that teams can respond quickly to issues and share knowledge effectively.

Additionally, strong communication skills are critical in DevOps, as teams must collaborate across departments to achieve common goals.

Creating Your DevOps Learning Plan

Creating an effective DevOps learning plan involves structuring your skill development, engaging in practical hands-on projects, and pursuing relevant certifications.

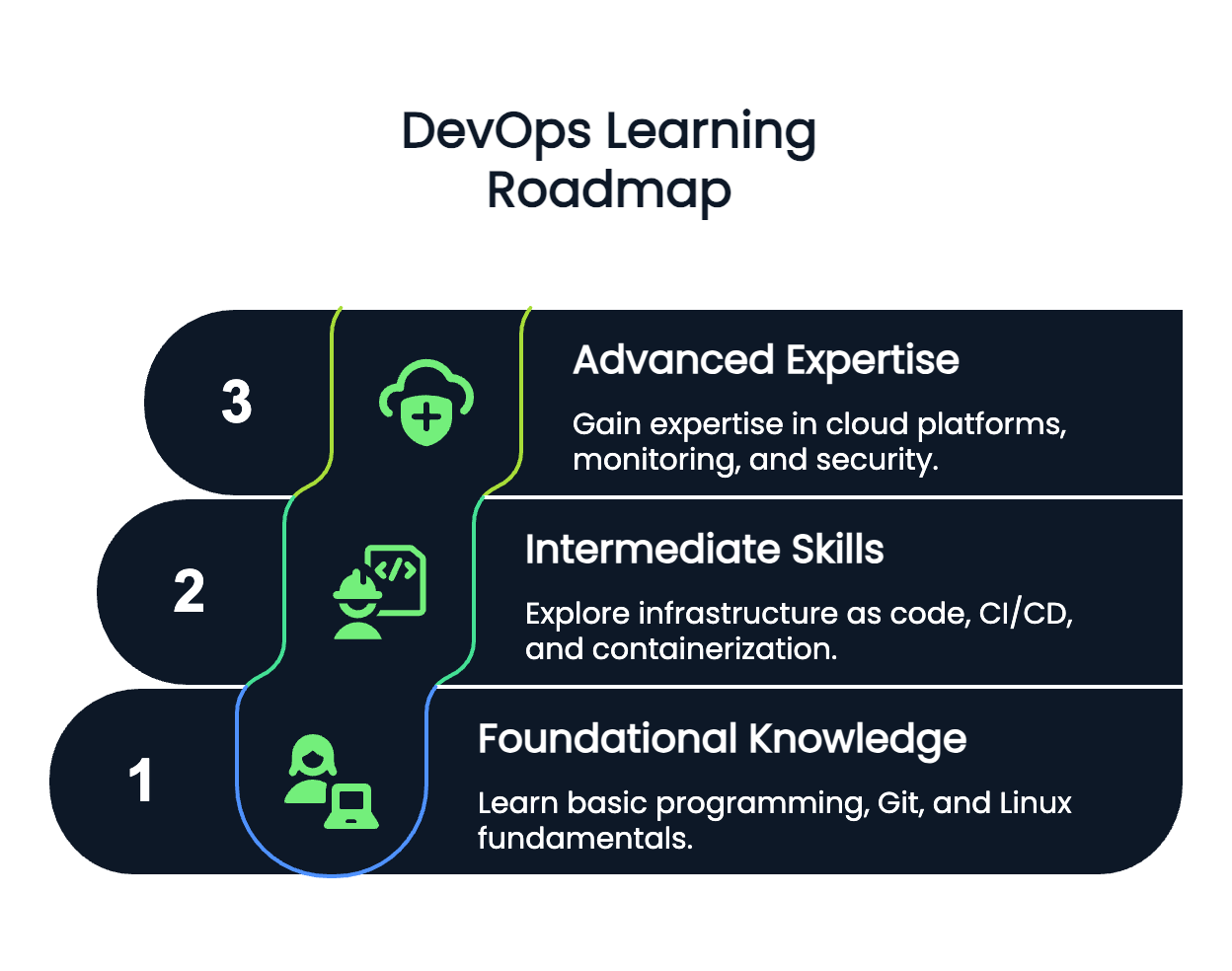

Learning paths and progression

DevOps is a broad field, and mastering it requires a structured approach.

As a beginner, focus on foundational knowledge such as scripting, version control, and understanding operating systems.

After you become comfortable with the basics, you can start learning more advanced topics like containerization, cloud platforms, and CI/CD pipelines.

Here is a suggested progression for your DevOps journey:

- Beginner: Learn basic programming/scripting, Linux fundamentals, and version control with Git.

- Intermediate: Explore infrastructure as code, CI/CD pipelines, containerization with Docker, and Kubernetes.

- Advanced: Gain expertise in cloud platforms, monitoring, logging, and security practices.

Image showing a suggested DevOps learning roadmap. Created with Napkin AI.

Hands-on projects

The best way to learn DevOps is through hands-on experience. Build and deploy your own CI/CD pipelines, experiment with containerization, and automate cloud infrastructure using tools like Terraform.

Practice with real-world projects, such as deploying a microservices-based application or automating the deployment of a web application.

Certification options

Certifications can help validate your skills and provide structure to your learning path.

Some of the popular certifications for DevOps professionals include:

- AWS Certified DevOps Engineer - Professional

- Certified Kubernetes Administrator (CKA)

- Docker Certified Associate

> Prepare for your certification with this guide: AWS Certified DevOps Engineer: Study Plan, Tips, and Resources.

Conclusion

The DevOps journey is one of continuous learning and growth.

After understanding the DevOps roadmap, I think you will agree that it is not just about mastering tools and technologies, but also about embracing a mindset that drives collaboration, efficiency, and innovation.

With this, you should be well-equipped to take on the challenges of a modern DevOps role using the skills and practices mentioned in this guide.

If you have an interview coming up, brush up on common DevOps questions with 31 Top Azure DevOps Interview Questions or the Top 24 AWS DevOps Interview Questions.

FAQs

What is DevOps, and why is it important?

DevOps is a set of practices that combine software development (Dev) and operations (Ops) to improve collaboration, automation, and continuous delivery of applications. It helps organizations deliver software faster and with higher quality.

What tools should I learn first as a DevOps beginner?

As a beginner, focus on learning basic programming or scripting (Python, Bash), Git for version control, and understanding the fundamentals of operating systems, especially Linux.

Why is cloud computing important in DevOps?

Cloud computing provides scalable resources for application deployment, storage, and computing power. It is integral to DevOps because it enables rapid provisioning, automation, and scaling of applications in a flexible and cost-effective manner.

What is Infrastructure as Code (IaC), and why should I learn it?

Infrastructure as Code (IaC) allows you to define and manage your infrastructure using code, which makes it reproducible and automatable. Learning IaC tools - such as Terraform and AWS CloudFormation - is essential for managing cloud infrastructure efficiently in a DevOps pipeline.

How can I gain hands-on experience in DevOps?

To gain hands-on experience, start by working on projects like setting up a CI/CD pipeline, deploying applications with Docker, and automating infrastructure with tools like Terraform. Practical experience is key to mastering DevOps.

Are DevOps certifications worth pursuing?

Yes, DevOps certifications such as AWS Certified DevOps Engineer - Professional, Certified Kubernetes Administrator (CKA), and Docker Certified Associate can validate your skills and help you advance your career by demonstrating your expertise in the field.

How long does it take to become a DevOps engineer?

It typically takes 6 to 18 months to become job-ready, depending on your starting point, time commitment, and practical project experience.

Do I need to know programming for DevOps?

Yes, a solid understanding of scripting (especially in Python, Bash, or PowerShell) is essential for automating tasks and managing infrastructure.

Is DevOps only for developers?

No. DevOps involves collaboration across development, operations, QA, and security. It's ideal for engineers with an interest in automation and systems.

Can I learn DevOps without a computer science degree?

Absolutely. Many successful DevOps engineers come from non-CS backgrounds. What matters most is practical knowledge, curiosity, and consistent learning.