
If you are a machine learning engineer, chances are you have used Docker in one of your projects—whether for deploying a model's inference endpoint or automating the machine learning pipeline for training, evaluation, and deployment. Docker simplifies these processes, ensuring consistency and scalability in production environments.

But here's the question: Are you aware of all the Docker images available on Docker Hub and GitHub? These pre-built images can save you time by eliminating the need to build images locally. Instead, you can pull and use them directly in your Dockerfiles or Docker Compose setups, accelerating development and deployment.

In this blog, we will explore the top 12 Docker container images designed for machine learning workflows. These include tools for development environments, deep learning frameworks, machine learning lifecycle management, workflow orchestration, and large language models.

If you are new to Docker, please take this Introduction to Docker Course to understand the basics.

Why use Docker Containers for Machine Learning?

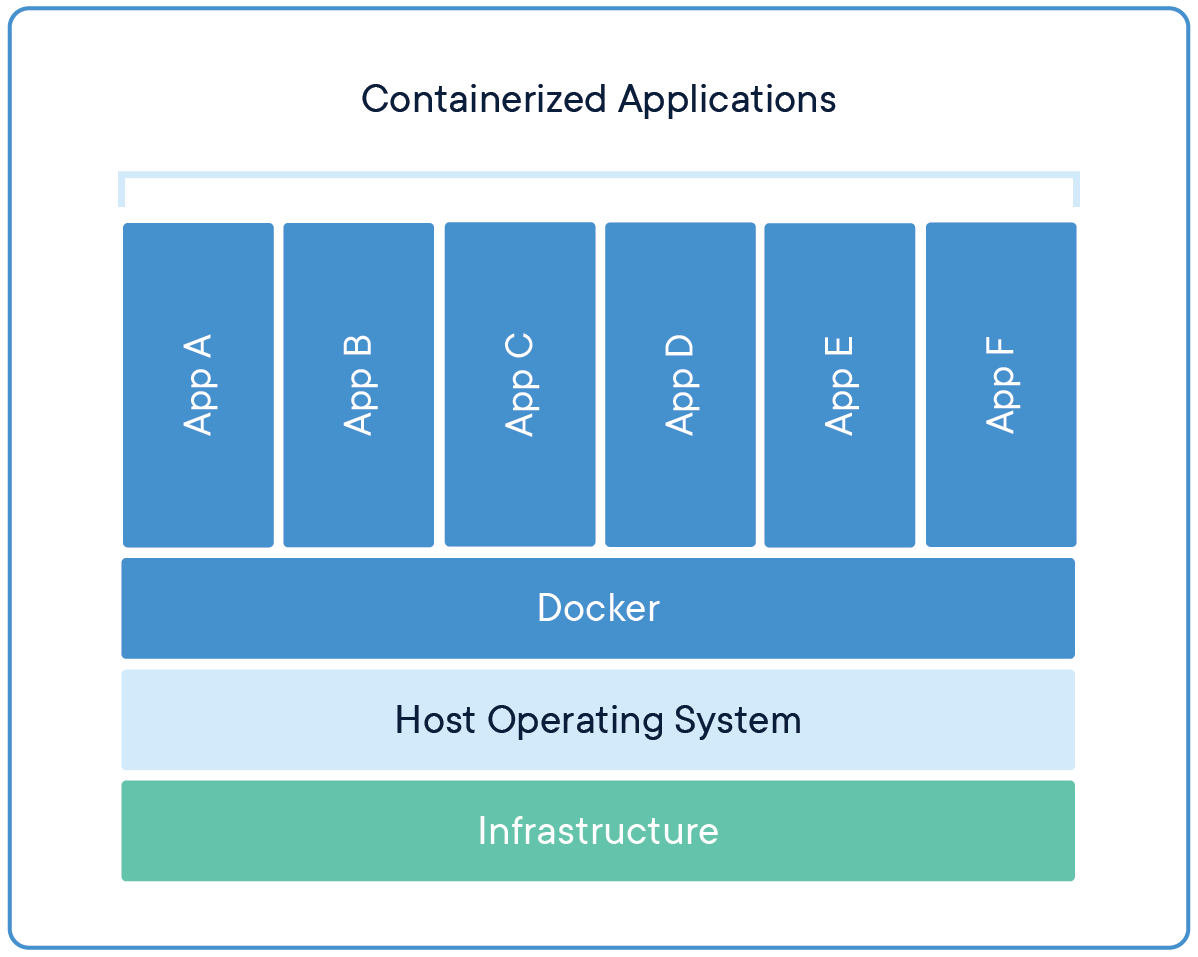

If you have ever heard the phrase "it works on my machine," then you understand the pain of maintaining a consistent developer environment across multiple platforms and stages. This is where Docker containers come in, as they encapsulate code, dependencies, and configurations into a portable image, ensuring consistency across different environments. The models and endpoint API behave identically during development, testing, and production deployments, regardless of variations in the underlying infrastructure.

By isolating dependencies—such as specific versions of Python, PyTorch, or CUDA—Docker ensures the reproducibility of experiments and reduces conflicts between libraries.

Source: Docker

You can share the Docker image with your team, and they can simply pull the image to create multiple instances. This process also streamlines deployment by packaging models into containers with APIs, enabling seamless integration with orchestration tools like Kubernetes for scalable production serving.
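One way to confirm that two containers started from the same image really share an environment is to fingerprint the interpreter and installed packages inside each one and compare the results. The sketch below uses only the Python standard library; the function names `environment_fingerprint` and `fingerprint_digest` are illustrative and not part of Docker or any library.

```python
import hashlib
import json
import platform
from importlib import metadata


def environment_fingerprint() -> dict:
    """Collect the interpreter version and every installed package version."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that lack a name
            packages[name.lower()] = dist.version
    return {"python": platform.python_version(), "packages": packages}


def fingerprint_digest(fingerprint: dict) -> str:
    """Hash the fingerprint so two environments can be compared at a glance."""
    canonical = json.dumps(fingerprint, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


fp = environment_fingerprint()
print("Python:", fp["python"])
print("Packages found:", len(fp["packages"]))
print("Digest:", fingerprint_digest(fp))
```

If the digest printed inside two containers matches, both are running the same Python version and package set. A mismatch points to a different image or to packages installed after the container started.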

In short, as a machine learning engineer, you must know how to handle Docker images and deploy Docker containers in the cloud.

Understand the core concepts of containerization by enrolling in the short Containerization and Virtualization Concepts course.

Development Environment Docker Containers

To set up a seamless machine learning development workflow, you need a reliable environment with essential tools and libraries. These Docker container images provide pre-configured data science, coding, and experimentation setups.

1. Python

Python is the backbone of most machine learning projects, as nearly all major frameworks and applications, such as TensorFlow, PyTorch, and scikit-learn, are built around it. Its popularity extends to deployment, where Python is commonly used in Docker images to create consistent environments for model inference. For example:

FROM python:3.8

RUN pip install --no-cache-dir numpy pandas

Python's simplicity, extensive library ecosystem, and compatibility with tools like Docker make it a go-to choice for machine learning development and deployment.
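To make the idea of a model inference endpoint concrete, here is a minimal sketch of a script that such a Python image could run, written with the standard library only. The `predict` function is a hypothetical stand-in for a trained model, and the port 8000 and the `features` field name are assumptions chosen for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: returns the mean of the inputs."""
    return sum(features) / len(features)


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body, e.g. {"features": [1.0, 2.0, 3.0]}
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        features = payload.get("features") if isinstance(payload, dict) else None
        if not features:
            self.send_error(400, "Expected a non-empty 'features' list")
            return
        body = json.dumps({"prediction": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Bind to 0.0.0.0 so the port is reachable from outside the container."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Calling `serve()` at the end of the file and publishing the port with `-p 8000:8000` would make the endpoint reachable from the host. In a real project, `predict` would load and call an actual model.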

2. Jupyter Notebook data science stack

The Jupyter Docker Stacks provide a comprehensive environment for data science and machine learning, including Jupyter Notebook and a suite of popular data science libraries. You can easily pull the image from Docker Hub and use it locally. Additionally, you can deploy this instance on the cloud and share it with your team.

To run the Jupyter Data Science Notebook, use the following command:

docker run -it --rm -p 8888:8888 jupyter/datascience-notebook

It can also be used with a Dockerfile, so you don't have to install Python packages using pip. You only need to run the application or execute a Python script. Here's a sample Dockerfile:

FROM jupyter/datascience-notebook:latest

This image includes popular libraries such as NumPy, pandas, matplotlib, and scikit-learn, along with Jupyter Notebook for interactive computing. It's ideal for data exploration, visualization, and machine learning experiments, making it a staple in the data science community.

For more information, check out the blog Docker for Data Science: An Introduction, where you can learn about Docker commands, dockerizing machine learning applications, and industry-wide best practices.

3. Kubeflow Notebooks

Kubeflow Notebooks images are designed to run inside Kubernetes pods as part of Kubeflow, a platform for machine learning workflows on Kubernetes. You can choose from three types of notebooks: JupyterLab, RStudio, and Visual Studio Code (code-server).

Test it locally by running the following command:

docker run -it --rm -p 8888:8888 kubeflownotebookswg/jupyter-pytorch

Kubeflow Notebooks images are particularly useful in environments where Kubernetes is the underlying infrastructure. They integrate with Kubernetes to support collaborative, scalable, and reproducible machine learning workflows.

Learn about Docker and Kubernetes by taking the Containerization and Virtualization with Docker and Kubernetes skill track. This interactive track will allow you to build and deploy applications in modern environments.

Deep Learning Framework Docker Containers

Deep learning frameworks require optimized environments for training and inference. These container images come pre-packaged with the necessary dependencies, saving time on installation and setup.

4. PyTorch

PyTorch is one of the leading deep learning frameworks known for its modular approach to building deep neural networks. You can create a Dockerfile and run your model inference easily without installing the PyTorch package using the pip command.

FROM pytorch/pytorch:latest

WORKDIR /app

COPY main.py .

CMD ["python", "main.py"]

Here, main.py is copied into the image and executed when the container starts, rather than during the image build. The PyTorch Docker image is extensively used for training and deploying models in research and production settings. It provides an optimized environment for developing and deploying deep learning models, particularly in natural language processing and computer vision tasks.

5. TensorFlow

TensorFlow is another leading deep learning framework widely adopted in academia and industry. It works well with the Google ecosystem and with the supporting TensorFlow packages for experiment tracking and model serving.

FROM tensorflow/tensorflow:latest

WORKDIR /app

COPY main.py .

CMD ["python", "main.py"]

The TensorFlow Docker image includes the TensorFlow Python package and its dependencies. GPU-enabled variants are published under separate tags such as latest-gpu. This makes it ideal for building and deploying machine learning models, especially in large-scale production environments.

6. NVIDIA CUDA deep learning runtime

The NVIDIA CUDA deep learning runtime is essential for accelerating deep learning computation on GPUs. You can easily add this to your Dockerfile, eliminating the need to manually set up CUDA for running GPU-accelerated machine learning tasks.

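FROM nvidia/cuda:12.4.1-cudnn-runtime-ubuntu22.04

RUN apt-get update && apt-get install -y python3

COPY main.py .

CMD ["python3", "main.py"]

The nvidia/cuda image has to be referenced with an explicit tag and does not include Python, so this example pins a tag (shown for illustration; check Docker Hub for the tags currently available) and installs Python before running the script. NVIDIA CUDA Docker images offer a runtime environment optimized for deep learning applications using NVIDIA GPUs, significantly enhancing the performance of deep learning models.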
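A GPU is only visible inside a container when the host has the NVIDIA Container Toolkit installed and the container is started with a flag such as `--gpus all`. The following sketch is a small check that could be run inside the container to see what is actually visible. The `gpu_report` function name is illustrative, and the PyTorch check is skipped when PyTorch is not installed.

```python
import importlib.util
import shutil
import subprocess


def gpu_report() -> dict:
    """Report what GPU tooling is visible from inside the current environment."""
    report = {"nvidia_smi": None, "torch_cuda": None}

    # nvidia-smi is normally made available in the container by the NVIDIA runtime.
    smi = shutil.which("nvidia-smi")
    if smi:
        result = subprocess.run(
            [smi, "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
        )
        report["nvidia_smi"] = result.stdout.strip().splitlines()

    # Import PyTorch only if it is installed, then ask it whether CUDA is usable.
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch_cuda"] = torch.cuda.is_available()

    return report


print(gpu_report())
```

A value of `None` means the corresponding tool was not found. An empty list or `False` suggests the tool exists but no GPU was passed through to the container.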

You can learn more about how GPUs accelerate data science workflows in the Polars GPU acceleration blog post.

Machine Learning Lifecycle Management Docker Containers

Managing the machine learning lifecycle—from experimentation to deployment—requires specialized tools. These Docker images streamline versioning, tracking, and deployment.

7. MLflow

MLflow is an open-source platform for managing the machine learning lifecycle, including experimentation, reproducibility, and deployment. You can start an MLflow tracking server with the following command. The server stores and versions experiments, models, and artifacts, and it provides an interactive dashboard where you can view the experiments and models.

docker run -it --rm -p 5000:5000 ghcr.io/mlflow/mlflow mlflow server --host 0.0.0.0 --port 5000

8. Hugging Face Transformers

Hugging Face Transformers is widely used for various applications, from fine-tuning large language models to building image generation models. It works on top of PyTorch, TensorFlow, and other major deep learning frameworks. This means you can use it to load pretrained models, fine-tune them, track performance, and save your work to the Hugging Face Hub.

FROM huggingface/transformers-pytorch-gpu

WORKDIR /app

COPY main.py .

CMD ["python3", "main.py"]

Hugging Face Transformers Docker images are popular for fine-tuning and deploying large language models quickly and efficiently. Overall, the modern AI ecosystem heavily depends on this package, and you can incorporate it into your Dockerfile to take advantage of all its features without worrying about dependencies.

Workflow Orchestration Docker Containers

Machine learning projects often involve complex workflows that require automation and scheduling. These workflow orchestration tools help streamline machine learning pipelines.

9. Airflow

Apache Airflow is an open-source platform for programmatically creating, scheduling, and monitoring workflows.

docker run -it --rm -p 8080:8080 apache/airflow standalone

The standalone command starts an all-in-one Airflow instance that is suitable for local testing. Airflow is widely used for orchestrating complex machine learning pipelines. It represents workflows as directed acyclic graphs (DAGs), making it indispensable in machine learning engineering to automate and manage data workflows.
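To illustrate what a directed acyclic graph means for a pipeline, the sketch below orders a few tasks by their dependencies using `graphlib` from the Python standard library (Python 3.9+). It does not use Airflow's API. The task names and the `run_pipeline` helper are hypothetical and only show the ordering idea that an orchestrator relies on.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
pipeline = {
    "train": {"extract"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline(dag):
    """Run hypothetical tasks in dependency order and return that order."""
    order = list(TopologicalSorter(dag).static_order())
    for task in order:
        print(f"running {task}")
    return order


executed = run_pipeline(pipeline)
```

Because every task depends on exactly one predecessor, the only valid order is extract, train, evaluate, deploy. An orchestrator such as Airflow adds scheduling, retries, and monitoring on top of this ordering.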

10. n8n

n8n is an open-source workflow automation tool gaining popularity due to its flexibility and ease of use, particularly in automating machine learning workflows. You can run it locally and build your own RAG application using the drag-and-drop interface.

docker run -it --rm -p 5678:5678 n8nio/n8n

n8n provides a user-friendly interface for creating workflows integrating various services and APIs. It is especially useful for automating repetitive tasks and connecting different systems and services in machine learning projects.

To learn more about creating machine learning workflows in Python, consider taking the Designing Machine Learning Workflows in Python course.

Large Language Models Docker Containers

As LLMs become more prevalent, specialized Docker containers help deploy and scale these models efficiently.

11. Ollama

Ollama is designed to deploy and run large language models locally. Using the Ollama Docker image, you can download and serve LLMs and integrate them into your applications. It is one of the easiest ways to run both quantized and full-precision large language models.

docker run -it --rm -p 11434:11434 ollama/ollama

12. Qdrant

Qdrant is a vector search engine for building vector similarity search applications. It comes with a dashboard and a server that you can connect to in order to store and retrieve vector data quickly.

docker run -it --rm -p 6333:6333 qdrant/qdrant

Qdrant provides tools for efficient similarity search and clustering of high-dimensional data. It is particularly useful in applications like recommendation systems, image and text retrieval, and semantic search, which are increasingly important in machine learning projects involving large language models.
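To show what vector similarity search computes, here is a brute-force sketch in plain Python that ranks stored vectors by cosine similarity to a query. It is not Qdrant's API or its indexing method. The `top_k` helper and the tiny 3-dimensional vectors are assumptions for illustration, and zero-length vectors are not handled.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, points, k=2):
    """Brute-force search: score every stored vector and keep the k best."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in points.items()]
    return [name for _, name in sorted(scored, reverse=True)[:k]]


# Hypothetical 3-dimensional embeddings; real ones have hundreds of dimensions.
points = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.8, 0.3, 0.1],
    "doc_cars": [0.0, 0.2, 0.9],
}
print(top_k([1.0, 0.0, 0.0], points))
```

A vector search engine answers the same kind of question, but uses indexes so that it does not need to score every stored vector for each query.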

Conclusion

In this blog, we explored 12 essential Docker container images tailored for machine learning projects. These images provide a comprehensive toolkit, from development environments to tools for large language models. By leveraging these containers, data scientists and engineers can build consistent, reproducible, and scalable project environments, streamlining workflows and enhancing productivity.

The next step in the learning process is to build your own project using these Docker container images!

If you want to learn how to build your own AI applications with the latest AI developer tools, check out the Developing AI Applications track.


FAQs

Is Docker good for machine learning?

Yes, Docker is highly beneficial for machine learning projects. It provides a flexible way to manage environments and dependencies, ensuring consistency and reproducibility. By containerizing your machine learning models, you can streamline deployment and avoid issues caused by differences in system configurations.

What is the Docker Hub?

Docker Hub is a cloud-based repository where you can find and share container images. It offers a vast library of pre-built images, which can significantly accelerate development workflows and reduce setup time. Additionally, you can build your own Docker images and push them to your Docker Hub account, making them accessible to your team for collaboration.
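Image names such as `python:3.8` and `ghcr.io/mlflow/mlflow` resolve to different registries because Docker fills in defaults for any missing parts. The sketch below is a simplified illustration of those rules. The `parse_image_reference` function is hypothetical, not part of Docker, and it does not handle digests (`name@sha256:...`).

```python
def parse_image_reference(ref: str) -> dict:
    """Split an image reference into registry, repository, and tag."""
    # A tag is a ":" that appears after the last "/", so a registry port
    # such as localhost:5000 is not mistaken for a tag.
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    # The first path component is a registry only if it looks like a hostname.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            # Official images live under the "library" namespace on Docker Hub.
            repository = "library/" + repository

    return {"registry": registry, "repository": repository, "tag": tag}


for ref in ["python:3.8", "qdrant/qdrant", "ghcr.io/mlflow/mlflow"]:
    print(ref, "->", parse_image_reference(ref))
```

In this sketch, a name without a registry host falls back to Docker Hub (`docker.io`), and a name without a tag falls back to `latest`.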

Where can I find Docker containers?

Docker containers and images are stored in specific locations depending on your operating system:

- Ubuntu, Fedora, Debian: /var/lib/docker/

- Windows: C:\ProgramData\DockerDesktop

- macOS: ~/Library/Containers/com.docker.docker/Data/vms/0/

What is the most popular use of Docker?

Docker is widely used for developing, testing, and deploying web applications. It simplifies handling complex integrations and dependencies, making it a go-to tool for modern software development.

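Is Docker faster than a VM?

Yes, Docker is generally faster than traditional virtual machines. Docker uses OS-level virtualization, which allows containers to share the host operating system's kernel. As a result, a container does not have to boot a full guest operating system, so it starts faster and uses fewer resources than a virtual machine.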

What is Alpine in Docker?

Alpine is a lightweight Linux distribution often used in Docker containers. It is known for its small size, security, and efficiency, making it ideal for creating minimal Docker images that reduce resource usage and improve performance.
