
Retrieval-augmented generation (RAG) is quickly becoming one of the most practical evolutions in using large language models. It's not a new model or a replacement for LLMs; instead, it's an innovative system that helps them reason with facts pulled from real data sources. At its core, RAG solves some of the biggest challenges in generative AI: hallucinations, limited memory, and outdated knowledge. Combining retrieval and generation into a single pipeline lets models ground their answers in a current, relevant context, often specific to a business or domain.

This shift matters more than ever. In enterprise settings, LLMs are expected to answer with accuracy and explainability. Developers want outputs reflecting product documentation, internal wikis, or support tickets, not generic web knowledge. RAG makes that possible.

This guide explains how the RAG framework works, where it fits in your AI stack, and how to use it with real tools and data.

What Is a RAG Framework?

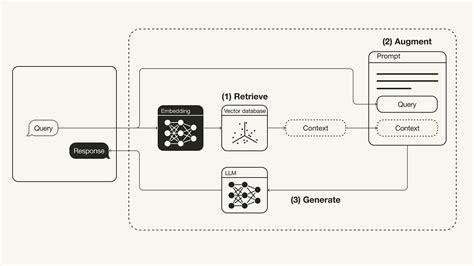

RAG stands for retrieval-augmented generation, a name that maps onto three steps: retrieve, augment, generate. It's a smart way to give large language models (LLMs) access to external knowledge without retraining them.

Here’s the idea: when a user asks a question, instead of throwing that question straight at the model and hoping for a good answer, RAG first retrieves relevant information from a knowledge base. Then, it augments the original prompt with this extra context. Finally, the LLM generates a response using the question and the added information.
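
The three steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the knowledge base, the bag-of-words similarity, and the function names `retrieve`, `augment`, and `generate` are all assumptions made for the example, and `generate` is a placeholder where a real LLM call would go.

```python
import math
import re
from collections import Counter

# Toy knowledge base (hypothetical); in practice these are chunks of your own documents.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "The mobile app supports offline mode on iOS and Android.",
    "Support is available by email from 9am to 5pm on weekdays.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=2):
    """Step 1: rank documents by similarity to the question and keep the top k."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def augment(question, context):
    """Step 2: build a prompt that places the retrieved context before the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {question}"

def generate(prompt):
    """Step 3: placeholder for an LLM call; swap in your model client here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"

question = "How long do refunds take?"
context = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = augment(question, context)
answer = generate(prompt)
print(prompt)
```

A real system would replace the word-count similarity with embeddings stored in a vector database, but the shape of the flow stays the same.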

This approach helps solve key LLM limitations—like hallucinations, short memory (context windows), and knowledge cutoffs—by plugging in real, current, and relevant data on the fly.

Why Use a RAG Framework?

Standalone LLMs are impressive, but they have blind spots. They hallucinate, forget things, and know nothing newer than their last training run. RAG changes that. By combining LLMs with real-time retrieval, you get:

- Factual accuracy: Responses are based on your actual data, not guesses.

- Domain relevance: You can inject niche or internal knowledge.

- No retraining is needed: Just update your knowledge base—no fine-tuning is required.

- Scalability: Great for chatbots, search tools, support assistants, and more.

In short, RAG lets you build smarter, more trustworthy AI systems with far less effort.

The Best Open-Source RAG Frameworks

The RAG ecosystem is growing fast, with dozens of open-source projects helping developers build smarter, retrieval-augmented applications. Whether you're looking for something pluggable and straightforward or a full-stack pipeline with customizable components, there’s likely a tool for you.

Below, we'll review the top open-source RAG frameworks. Each entry highlights its GitHub traction, deployment style, unique strengths, primary use cases, and a visual to give you a quick feel for the project.

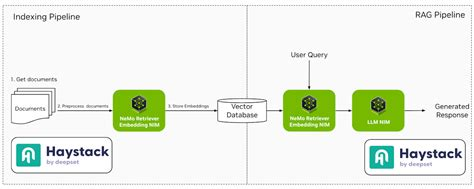

1. Haystack

Haystack is a robust, modular framework designed for building production-ready NLP systems. It supports various components like retrievers, readers, and generators, allowing seamless integration with tools like Elasticsearch and Hugging Face Transformers.

- GitHub stars: ~13.5k

- Deployment: Docker, Kubernetes, Hugging Face Spaces

- Standout features: Modular components, strong Hugging Face integration, multilingual support

- Use cases: Enterprise-grade QA systems, chatbots, internal document search

2. LlamaIndex

LlamaIndex is a data framework that connects custom data sources to large language models. It simplifies the process of indexing and querying data, making it easier to build applications that require context-aware responses.

- GitHub stars: ~13k

- Deployment: Python-based, runs anywhere with file or web data

- Standout features: Simple abstractions for indexing, retrieval, and routing

- Use cases: Personal assistants, knowledge bots, RAG demos

3. LangChain

LangChain is a comprehensive framework that enables developers to build applications powered by language models. It offers tools for chaining together different components like prompt templates, memory, and agents to create complex workflows.

- GitHub stars: ~72k

- Deployment: Python, JavaScript, supported on all major clouds

- Standout features: Tool chaining, agents, prompt templates, integrations galore

- Use cases: End-to-end LLM applications, data agents, dynamic chat flows

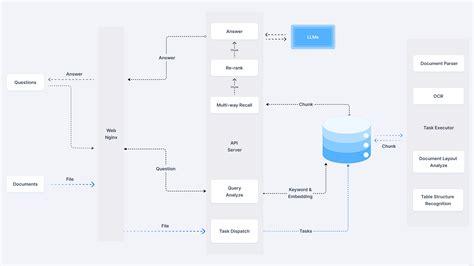

4. RAGFlow

RAGFlow is an open-source engine focused on deep document understanding. It provides a streamlined workflow for businesses to implement RAG systems, emphasizing truthful question-answering backed by citations from complex data formats.

- GitHub stars: ~1.1k

- Deployment: Docker; supports FastAPI-based microservices

- Standout features: Chunk visualizer, Weaviate integration, flexible configs

- Use cases: Lightweight RAG backends, enterprise prototypes

5. txtAI

txtAI is an all-in-one AI framework that combines semantic search with RAG capabilities. It allows for building applications that efficiently search, index, and retrieve information, supporting various data types and formats.

- GitHub stars: ~3.9k

- Deployment: Python-based; runs with just a few lines of code.

- Standout features: Extremely lightweight, offline mode, scoring/ranking support

- Use cases: Embedding-based search engines, chat-with-PDFs, metadata Q&A

6. Cognita

Cognita is a modular RAG framework designed for easy customization and deployment. It offers a frontend interface to experiment with different RAG configurations, making it suitable for both development and production environments.

- GitHub stars: Notable

- Deployment: Docker + TrueFoundry integrations

- Standout features: API-driven, UI-ready, easy to scale

- Use cases: Business-facing AI assistants, data-backed chatbots

7. LLMWare

LLMWare provides a unified framework for building enterprise-grade RAG applications. It emphasizes using small, specialized models that can be deployed privately, ensuring data security and compliance.

- GitHub stars: ~2.5k

- Deployment: CLI tool, APIs, customizable project templates

- Standout features: No-code pipelines, document parsing tools

- Use cases: Document agents, knowledge assistants

8. STORM

STORM is a research assistant that extends the concept of outline-driven RAG. It focuses on generating comprehensive articles by synthesizing information from various sources, making it ideal for content creation tasks.

- GitHub stars: Niche

- Deployment: Source-code installation

- Standout features: Co-STORM reasoning engine, graph-based exploration

- Use cases: Custom RAG setups, research QA pipelines

9. R2R

R2R (Reason to Retrieve) is an advanced AI retrieval system supporting RAG with production-ready features. It offers multimodal content ingestion, hybrid search, and knowledge graph integration, catering to complex enterprise needs.

- GitHub stars: Niche

- Deployment: REST API, supports hybrid and graph search

- Standout features: Multimodal RAG, document validation

- Use cases: AI research tools, academic agents

10. EmbedChain

EmbedChain is a Python library that simplifies the creation and deployment of AI applications using RAG models. It supports various data types, including PDFs, images, and web pages, making it versatile for different use cases.

- GitHub stars: ~3.5k

- Deployment: Python lib, also available as a hosted SaaS

- Standout features: Single-command app setup, API-first

- Use cases: Fast prototyping, building knowledge bots for any domain

11. RAGatouille

RAGatouille integrates advanced retrieval methods like ColBERT into RAG pipelines. It allows for modular experimentation with different retrieval techniques, enhancing the flexibility and performance of RAG systems.

- GitHub stars: Niche but growing

- Deployment: Python package

- Standout features: Retrieval experimentation, modular inputs

- Use cases: Evaluating retrieval techniques in RAG pipelines

12. Verba

Verba is a customizable personal assistant that uses RAG to query and interact with your data. It is built on Weaviate's vector database, which handles storage and retrieval.

- GitHub stars: Modest

- Deployment: Local and cloud supported

- Standout features: Contextual memory, personal data Q&A

- Use cases: Build-your-own assistant, data exploration

13. Jina AI

Jina AI offers tools for building multimodal AI applications with scalable deployment options. It supports various communication protocols, making it suitable for developers aiming to build and scale AI services.

- GitHub stars: ~18k (main org)

- Deployment: Docker, REST/gRPC/WebSocket APIs

- Standout features: Multimodal pipelines, hybrid search

- Use cases: Enterprise-grade apps that combine text, image, or video

14. Neurite

Neurite is an emerging RAG framework that simplifies building AI-powered applications. Its emphasis on developer experience and rapid prototyping makes it an attractive option for experimentation.

- GitHub stars: Small but promising

- Deployment: Source setup

- Standout features: Neural-symbolic fusion

- Use cases: AI research experiments, prototype systems

15. LLM-App

LLM-App is a framework for building applications powered by large language models. It provides templates and tools to streamline the development process, making integrating RAG capabilities into various applications easier.

- GitHub stars: Emerging

- Deployment: Git-based deployment and CLI tools

- Standout features: App starter templates, OpenAI-ready

- Use cases: Personal RAG projects, hackathon tools

Each framework offers unique features tailored to different use cases and deployment preferences. Depending on your specific requirements, such as ease of deployment, customization, or integration capabilities, you can choose the framework that best aligns with your project's goals.

Choosing the Right RAG Framework

Selecting the appropriate RAG framework depends on your specific needs, whether that's legal document analysis, academic research, or lightweight local development. Use this table to quickly compare popular open-source RAG frameworks by deployment model, customizability, support for advanced retrieval, integration capabilities, and best-fit use case.

| Framework | Deployment | Customizability | Advanced Retrieval | Integration Support | Best For |
| --- | --- | --- | --- | --- | --- |
| Haystack | Docker, K8s, Hugging Face | High | Yes | Elasticsearch, Hugging Face | Enterprise search & QA |
| LlamaIndex | Python (local/cloud) | High | Yes | LangChain, FAISS | Document-aware bots |
| LangChain | Python, JS, Cloud | High | Yes | OpenAI, APIs, DBs | LLM agents & pipelines |
| RAGFlow | Docker | Medium | Yes | Weaviate | Legal or structured docs |
| txtAI | Local (Python) | Medium | Basic | Transformers | Lightweight local dev |
| Cognita | Docker + UI | High | Yes | TrueFoundry | GUI-based business tools |
| LLMWare | CLI, APIs | High | Yes | Private LLMs | Private enterprise RAG |
| STORM | Source install | High | Yes | LangChain, LangGraph | Research assistants |
| R2R | REST API | High | Yes | Multimodal | Academic & hybrid RAG |
| EmbedChain | Python, SaaS | Medium | Basic | Web, PDF, Images | Rapid prototyping |
| RAGatouille | Python | High | Yes | ColBERT | Retriever experimentation |
| Verba | Cloud/Local | Medium | Basic | Weaviate | Contextual assistants |
| Jina AI | Docker, REST/gRPC | High | Yes | Multimodal APIs | Scalable multimodal apps |
| Neurite | Source setup | Medium | No | N/A | Experimentation |
| LLM-App | CLI, Git | Medium | No | OpenAI | Hackathon LLM apps |

Common Pitfalls When Implementing RAG

RAG systems can be powerful, but they also come with sharp edges. If you're not careful, your model's answers might end up worse than if you'd used no retrieval at all. Here are four common issues to avoid, and what to do instead.

1. Indexing too much junk

Not everything needs to go into your vector store. Dumping in every document, blog post, or email thread might feel thorough, but it just pollutes your search. The retriever pulls in low-value context, which the model then has to sort through and often misuses. Instead, be selective. Only index content that's accurate, well-written, and useful. Clean up before you store.
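
One way to "clean up before you store" is a small filter that runs ahead of indexing. The sketch below is only an illustration: the function name `select_for_index`, the five-word minimum, and the duplicate check are assumptions, and real quality criteria will depend on your content.

```python
import hashlib

def normalize(text):
    """Collapse whitespace and lowercase so trivial variants hash identically."""
    return " ".join(text.lower().split())

def select_for_index(docs, min_words=5):
    """Keep documents that are long enough and not duplicates of earlier ones."""
    seen = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to carry useful context
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(doc)
    return kept

# Hypothetical raw documents: one near-duplicate and one low-value snippet.
raw_docs = [
    "To reset your password, open Settings and choose Security.",
    "to reset your password,   open settings and choose security.",
    "Thanks!",
    "Invoices are emailed on the first day of each month.",
]
clean_docs = select_for_index(raw_docs)
print(len(clean_docs))
```

Length and duplication are only proxies for quality; accuracy still needs a human or a review process behind it.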

2. Ignoring token limits

LLMs have limited context windows. If your prompt plus all the retrieved chunks exceeds the model's token limit, something gets cut off, and that "something" might be the part that mattered. Instead, keep prompts tight. Limit the number of retrieved chunks or summarize them before sending them to the model.
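
Limiting retrieved chunks to a token budget might look like the sketch below. It assumes a crude whitespace word count as a stand-in for real tokenization, and `fit_to_budget` and its parameters are hypothetical names; in practice you would count tokens with the tokenizer that matches your model.

```python
def count_tokens(text):
    """Rough estimate: whitespace-separated words (assumption, not a real tokenizer)."""
    return len(text.split())

def fit_to_budget(question, chunks, max_tokens, reserved_for_answer=0):
    """Add chunks in ranked order until the next one would exceed the budget."""
    budget = max_tokens - reserved_for_answer - count_tokens(question)
    selected = []
    used = 0
    for chunk in chunks:
        cost = count_tokens(chunk)
        if used + cost > budget:
            break  # stop here instead of letting the prompt be silently truncated
        selected.append(chunk)
        used += cost
    return selected

# Hypothetical chunks, already ordered from most to least relevant.
ranked_chunks = [
    "Refunds are processed within five business days.",
    "Refund requests must include the original order number.",
    "Our office is closed on public holidays.",
]
question = "How long do refunds take?"
selected = fit_to_budget(question, ranked_chunks, max_tokens=30, reserved_for_answer=8)
print(selected)
```

Because chunks are added in ranked order, whatever gets dropped is the least relevant material rather than an arbitrary tail of the prompt.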

3. Optimizing for recall, not precision

It's tempting to retrieve more documents to ensure you "cover everything." But if the extra results are loosely related, you crowd the prompt with fluff. The model gets distracted or, worse, confused. Instead, aim for high precision. A few highly relevant chunks are better than a long list of weak matches.
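
Favoring precision can be as simple as a score threshold combined with a small cap on the number of chunks. The sketch below assumes the retriever returns `(chunk, similarity)` pairs; the threshold of 0.75, the cap of 3, and the function name `select_precise` are illustrative values to tune against your own data, not recommendations.

```python
def select_precise(scored_chunks, min_score=0.75, max_chunks=3):
    """Keep at most max_chunks chunks whose similarity score meets min_score."""
    strong = [(text, score) for text, score in scored_chunks if score >= min_score]
    strong.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in strong[:max_chunks]]

# Hypothetical (chunk, similarity) pairs, as a retriever might return them.
results = [
    ("Refunds are processed within five business days.", 0.91),
    ("Refund requests need the original order number.", 0.82),
    ("Our loyalty program offers seasonal discounts.", 0.48),
    ("Shipping times vary by region.", 0.41),
]
print(select_precise(results))
```

If nothing clears the threshold, the function returns an empty list, which is a useful signal to fall back to "I don't know" rather than padding the prompt with weak matches.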

4. Flying blind without logs

When the model gives a bad answer, do you know why? You're debugging in the dark if you're not logging the query, retrieved documents, and the final prompt. Instead, log the full RAG flow. That includes user input, retrieved content, what was sent to the model, and the model's response.
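
A minimal sketch of logging the full flow, using only Python's standard library, is shown below. The record fields and the helper names `build_trace` and `log_trace` are assumptions for illustration; adapt them to whatever logging or tracing stack you already run.

```python
import json
import logging
import time
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("rag")

def build_trace(query, retrieved, prompt, response):
    """Collect everything needed to replay one RAG request."""
    return {
        "trace_id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "query": query,
        "retrieved": retrieved,
        "prompt": prompt,
        "response": response,
    }

def log_trace(trace):
    """Emit the trace as a single JSON line so it can be searched later."""
    line = json.dumps(trace, ensure_ascii=False)
    logger.info(line)
    return line

# Hypothetical values standing in for one request through the pipeline.
trace = build_trace(
    query="How long do refunds take?",
    retrieved=["Refunds are processed within five business days."],
    prompt="Context: Refunds are processed within five business days.\nQuestion: How long do refunds take?",
    response="Refunds take up to five business days.",
)
line = log_trace(trace)
```

With one JSON line per request, a bad answer can be traced back to whether the retriever, the prompt assembly, or the model itself was at fault.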

Conclusion

RAG isn't a magic fix for everything, but when used correctly, it's one of the most effective ways to make LLMs more accurate, practical, and grounded in the data that matters to your business.

The key is knowing what you're working with. Choosing the right framework is part of it, but success comes down to how well you understand your data and how carefully you tune your retrieval pipeline. Garbage in, garbage out still applies, especially when you're building systems that generate language, not just retrieve facts.

Get your indexing right, watch your token usage, and monitor the flow end-to-end. When all those pieces click, RAG can transform your AI applications from impressive to genuinely helpful.

Tech writer specializing in AI, ML, and data science, making complex ideas clear and accessible.

FAQs

What is a RAG framework used for?

RAG frameworks help LLMs answer questions using external, up-to-date data such as documents, websites, or internal wikis.

Do I need to fine-tune my model to use RAG?

No. RAG frameworks work by retrieving relevant data and adding it to the prompt. You don’t have to retrain the base model.

What kinds of data can RAG frameworks use?

That depends on the framework. Many support PDFs, websites, markdown files, databases, and more.

Can RAG frameworks work with OpenAI or Hugging Face models?

Yes, most frameworks are model-agnostic and integrate easily with APIs from OpenAI, Cohere, Anthropic, and Hugging Face.

What’s the difference between vector search and keyword search in RAG?

Vector search uses embeddings to find semantically relevant text, while keyword search relies on exact word matches.
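
The difference can be seen in a toy comparison. In the sketch below, the three-dimensional vectors are hand-written stand-ins for real embeddings (an assumption for illustration only; a real system would compute them with an embedding model), and the function names are hypothetical.

```python
import math
import re

# Hand-written vectors stand in for real embeddings (illustrative assumption).
DOCS = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Shipping rates by country": [0.0, 0.1, 0.9],
    "Updating your billing address": [0.1, 0.9, 0.2],
}

def tokens(text):
    """Lowercase word set used for exact-match keyword comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_search(query, docs):
    """Return documents that share at least one exact word with the query."""
    q = tokens(query)
    return [d for d in docs if q & tokens(d)]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def vector_search(query_vec, docs, k=1):
    """Return the k documents whose vectors are closest to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, docs[d]), reverse=True)
    return ranked[:k]

query = "I forgot my login credentials"
query_vec = [0.8, 0.2, 0.1]  # hypothetical embedding of the query

keyword_hits = keyword_search(query, DOCS)
vector_hits = vector_search(query_vec, DOCS)
print(keyword_hits, vector_hits)
```

The query shares no words with any document, so keyword search finds nothing, while the vector comparison still surfaces the password article because its vector points in a similar direction.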