Course

Databricks SQL is a powerful tool designed for data management and analytics within the Databricks Lakehouse platform. This platform integrates data engineering, data science, and business analytics into a unified experience. Therefore, Databricks SQL is important for data professionals looking to streamline their data workflows, query execution, and BI tasks without the complexity of traditional infrastructure management.

In this article, I will explore the components, features, and tools of Databricks SQL and show practical examples of creating and using Databricks SQL warehouse. As we get started, I highly recommend taking DataCamp’s Introduction to Databricks course to learn about Databricks as a data warehousing solution for Business Intelligence (BI), leveraging SQL-optimized capabilities to create queries and analyze data.

What is Databricks SQL?

Databricks SQL is a robust analytics tool within the Databricks Lakehouse platform that allows data professionals to run SQL queries, analyze data, and create interactive dashboards. Designed with a serverless architecture, Databricks SQL combines the flexibility of data lakes with the governance and performance capabilities of data warehouses.

The key components of Databricks SQL include SQL Warehouses, SQL Editors, and SQL Dashboards. Don't worry if you are not familiar with each of these because I'll go into the meaning of each down below.

Databricks SQL vs. SQL Server and Others

Unlike other SQL dialects like MySQL, PostgreSQL, or SQL Server, which are designed for transactional databases, Databricks SQL is optimized for large-scale analytics in a Lakehouse environment. Traditional SQL databases focus on structured data storage, whereas Databricks SQL integrates with data lakes while preserving the performance of a data warehouse. With serverless SQL warehouses, there’s no need to manage infrastructure, as resources are provisioned automatically and scale elastically.

Also, I want to say, in Databricks SQL, query execution is faster because it is accelerated by the Photon engine, which supports vectorized processing. Last thing to say: Databricks SQL is built for BI and collaboration; it offers native support for Power BI, Tableau, and Looker.

Core Components of Databricks SQL

Databricks SQL core components facilitate efficient querying, data visualization, and collaboration among users. Below is an overview of the primary tools and features available within Databricks SQL.

SQL Warehouses

SQL Warehouses serve as the computational resources for executing SQL queries in Databricks SQL. They are designed to handle varying workloads and provide performance optimizations based on the type of warehouse selected. There are three main types of SQL Warehouses:

- Classic: This type supports basic functionalities and is suitable for entry-level performance. It utilizes the existing compute layer in your cloud account but lacks advanced features like Intelligent Workload Management (IWM) and Predictive IO.

- Pro: Pro SQL Warehouses offer enhanced performance with support for Photon, a vectorized query engine that speeds up SQL operations and improves security options.

- Serverless Eliminates infrastructure management by automatically provisioning resources, scaling elastically, and ensuring low-latency query execution. They are ideal for environments with fluctuating workloads and offer cost-effective performance.

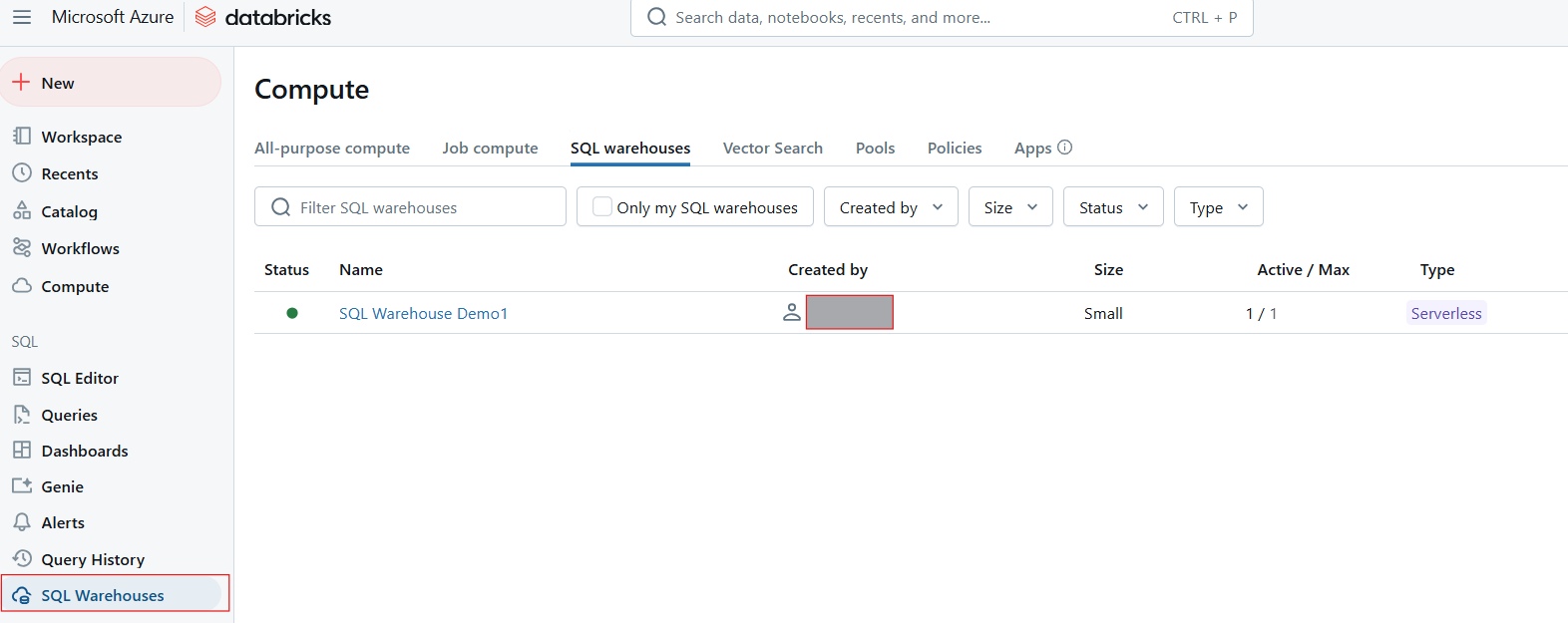

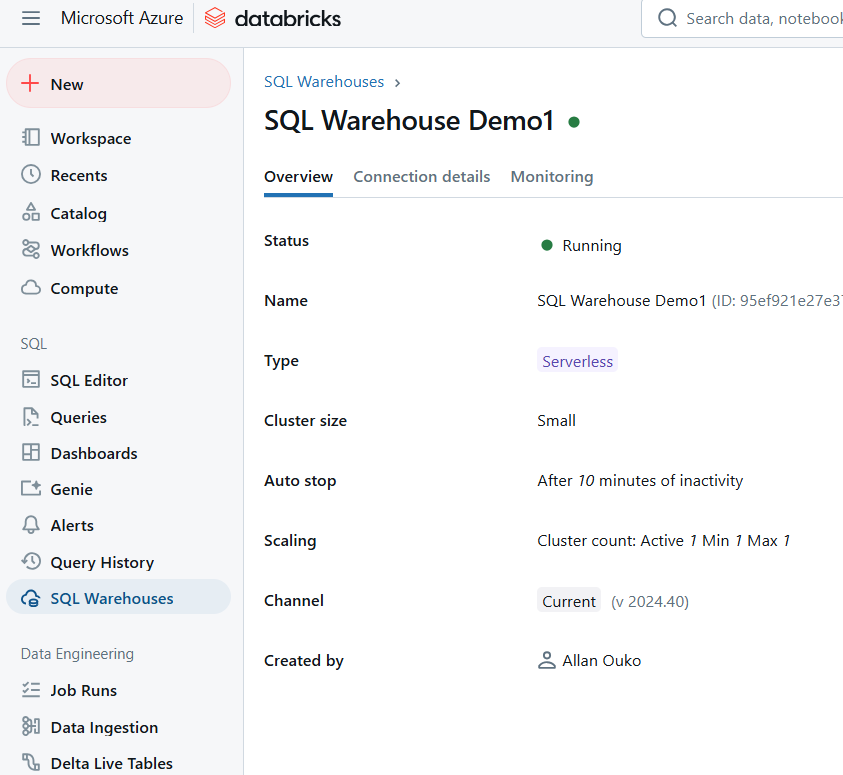

SQL Warehouse in Databricks SQL. Image by Author.

SQL Editor

The SQL Editor is a user-friendly interface that enables users to write, execute, and manage SQL queries. Key features of the SQL Editor include:

- Intuitive Querying: Supports syntax highlighting, autocomplete, and error detection for efficient query writing.

- Data Preview: Users can preview data directly within the editor, making it easier to understand datasets before executing complex queries.

- Query Result Caching: This feature enhances performance by caching previous query results, allowing for faster response times on repeated queries.

- Parameterized Queries: Users can create dynamic queries by incorporating parameters, which simplifies the process of running similar queries with different values.

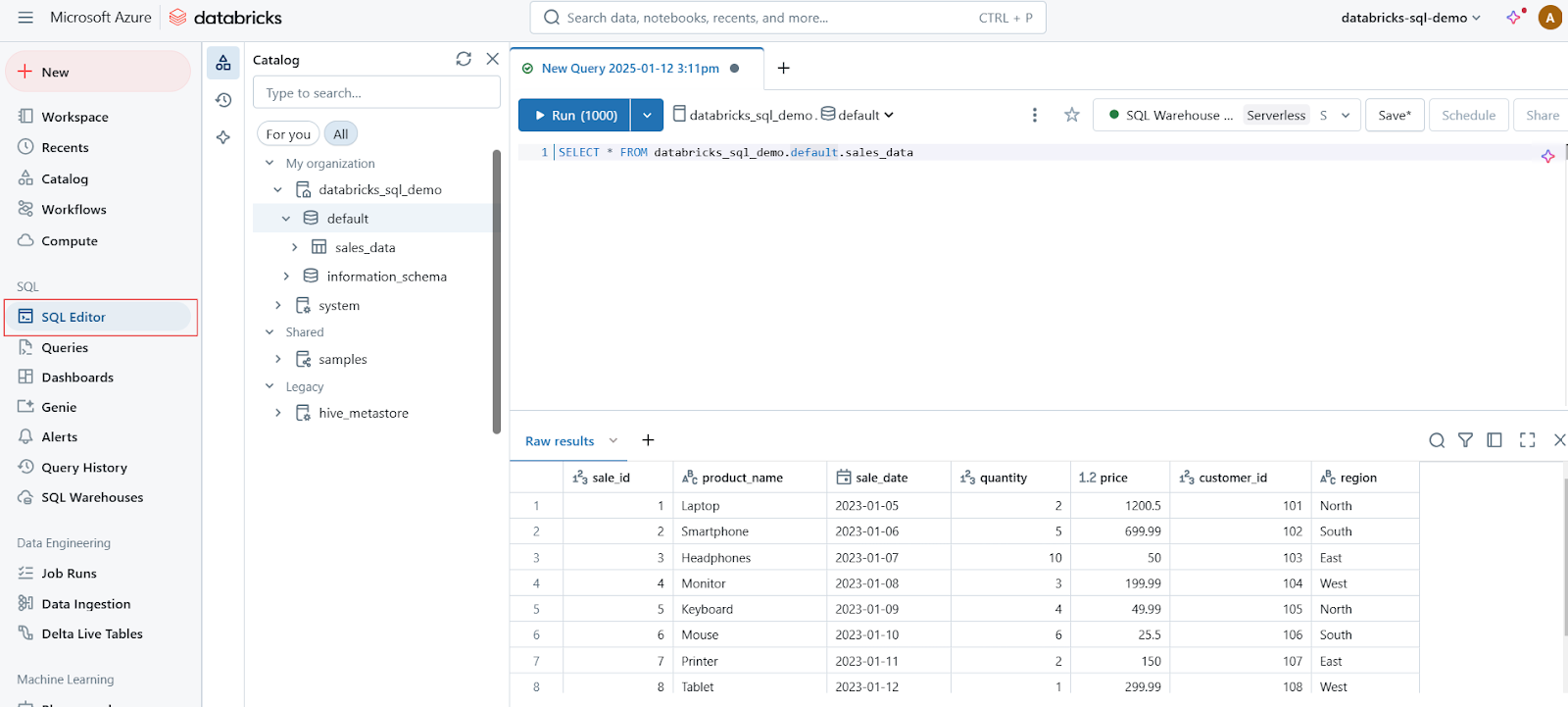

SQL Editor in Databricks SQL. Image by Author.

Dashboards

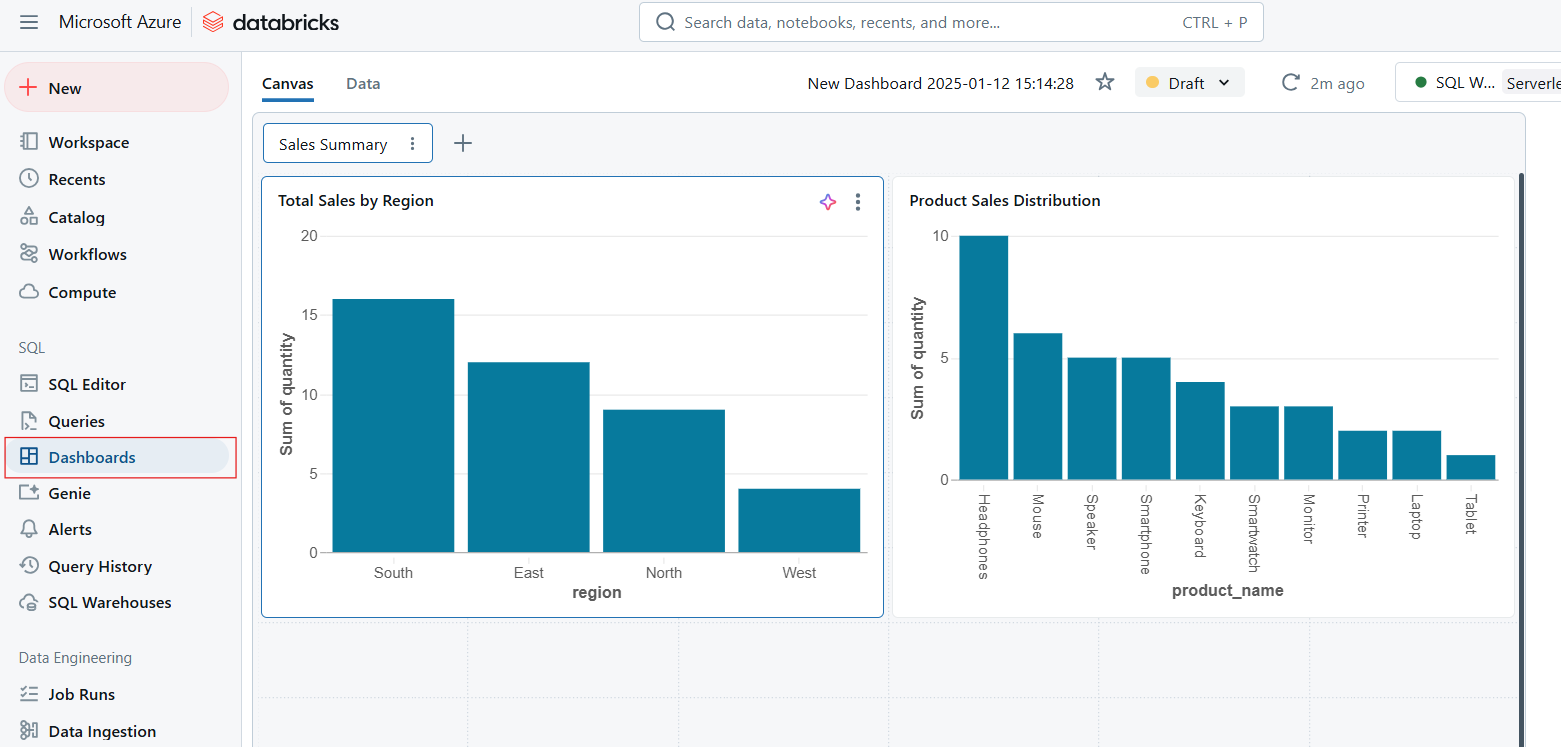

Dashboards enable data visualization by transforming query results into interactive charts, graphs, and widgets. Dashboards are ideal for monitoring key metrics and communicating insights effectively across an organization. The key capabilities of dashboards include the following:

- Real-Time Analytics: Automatically refresh dashboards based on live query results.

- Collaboration: Share dashboards across teams to promote data-driven decisions.

- Customization: Choose from a variety of visualization types and layout options to suit specific needs.

Dashboards in Databricks SQL. Image by Author.

Alerts

Alerts in Databricks SQL provide a way to monitor data changes and trigger notifications when specified conditions are met. Alerts are essential for maintaining data quality and ensuring timely responses to important changes. The alert functionality works in the following way.

- Set Conditions: Define thresholds or rules based on query results.

- Trigger Notifications: Receive alerts via email, Slack, or other integrations when conditions are satisfied.

- Proactive Monitoring: Stay informed about critical events, such as data anomalies or system issues, in real-time.

Query history and profiling

Finally, I want to say that Databricks SQL offers tools for tracking query history and profiling:

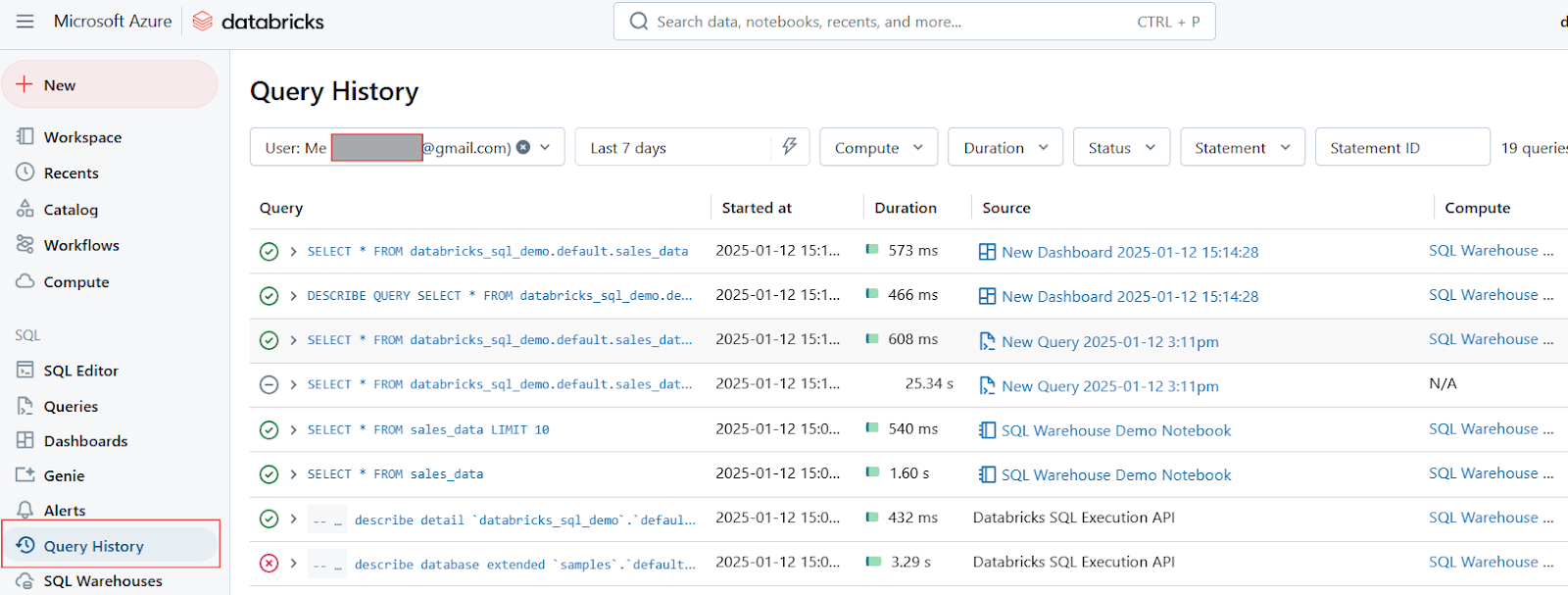

- Query History: This feature allows users to review previously executed queries, providing insights into their performance over time. It helps identify trends or recurring issues.

- Query Profiling: Query profiling tools analyze the efficiency of executed queries, highlighting bottlenecks and suggesting optimizations. This capability is important for improving overall query performance and resource utilization.

Query History in Databricks SQL. Image by Author.

Key Features and Tools in Databricks SQL

Databricks SQL is packed with advanced features and tools. These features are designed to enhance performance, streamline workflows, and enable seamless integration with other tools. Let us look at some of the key things that make Databricks SQL such a great choice.

Photon Engine

The Photon Engine is a high-performance, vectorized query engine developed by Databricks to accelerate SQL query execution significantly. The key benefits of the Photon Engine include the following:

- Vectorized Execution: Unlike traditional row-by-row processing, Photon utilizes vectorized execution, which processes data in batches. This approach allows it to leverage advanced CPU instructions like SIMD (Single Instruction, Multiple Data) for better efficiency.

- Integration with Existing Workloads: Photon is fully compatible with Apache Spark APIs, meaning that users can enable it without modifying existing code. This seamless integration allows organizations to benefit from enhanced performance without the need for extensive rework.

- Caching and Optimization: The engine includes an advanced query optimizer and a caching layer that intelligently selects which data to cache, further improving query performance.

CloudFetch and Async I/O

CloudFetch and Async I/O are features designed to enhance data transfer speeds and improve the handling of small files during query execution. These features work in the following ways:

- CloudFetch: This feature optimizes how results are written back to cloud storage by allowing parallel writes across all compute nodes. It significantly reduces the time taken to transfer large datasets back to clients, achieving up to 10 times faster performance in real-world scenarios.

- Async I/O: This capability enhances the handling of small files by reading multiple blocks of data simultaneously while processing current blocks. This approach can boost overall query performance by up to 12 times when dealing with small files (e.g., 1MB). It is particularly effective for scenarios involving many small files or "cold data" that isn't cached.

Integration with BI tools

Databricks SQL integrates seamlessly with popular Business Intelligence (BI) tools, making it easier for teams to analyze and visualize data. The supported integrations include the following:

- Power BI: Users can connect their Databricks workspace directly with Power BI, allowing for easy synchronization of data models. For example, analysts can publish reports directly from Databricks into Power BI, maintaining defined relationships between tables without manual intervention.

- Tableau: Users can leverage live connections to visualize data stored in Databricks SQL. This integration allows for real-time analytics and interactive dashboards that reflect the latest data updates.

- dbt: Databricks SQL also integrates with dbt (data build tool), enabling teams to manage their transformations directly within the Databricks environment. This integration supports incremental models and materialized views, streamlining the process of building and maintaining robust data pipelines.

Serverless architecture

Databricks SQL’s serverless architecture eliminates the need for manual infrastructure management by automatically provisioning and scaling resources based on workload demands. The serverless architecture will automatically adjust resources to handle varying query loads without downtime. This feature ensures that users do not need to configure or maintain clusters manually. The serverless architecture is also cost-efficient, as users pay only for the resources used during query execution.

For example, a retail company experiences high query loads during peak business hours. The serverless architecture automatically scales up resources to ensure consistent performance and scales down during off-hours to save costs.

Step-by-Step Guide to Creating and Using Databricks SQL Warehouses

Databricks SQL Warehouses are essential for executing SQL queries efficiently within the Databricks environment. Now, in this section, I will walk you through the process of creating SQL warehouse and using it with notebooks. If you need to refresh your knowledge about data warehouses, I recommend taking our Data Warehousing Concepts course to learn the fundamentals of data modeling and data transformation.

Creating a Databricks SQL Warehouse

Follow these steps to create a SQL Warehouse using the Databricks web UI:

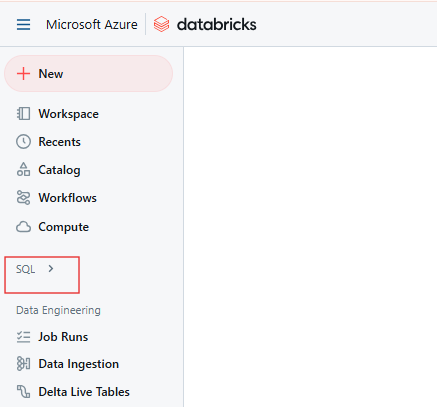

Step 1: Navigate to the Databricks SQL page

From the Databricks workspace, click on the SQL icon in the sidebar.

Databricks SQL page. Image by Author.

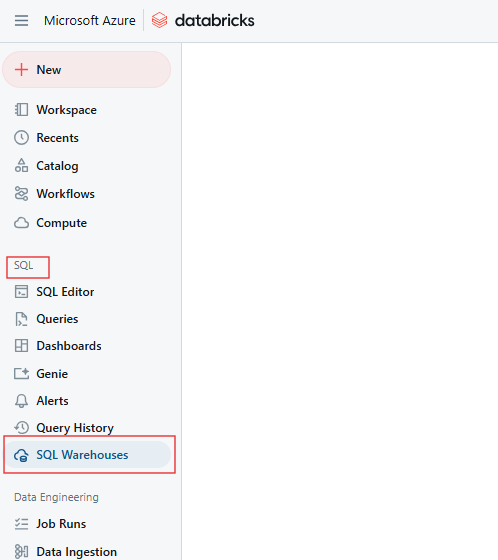

Step 2: Open the SQL Warehouses tab

On the SQL page, navigate to the SQL Warehouses tab.

Databricks SQL Warehouse tab. Image by Author.

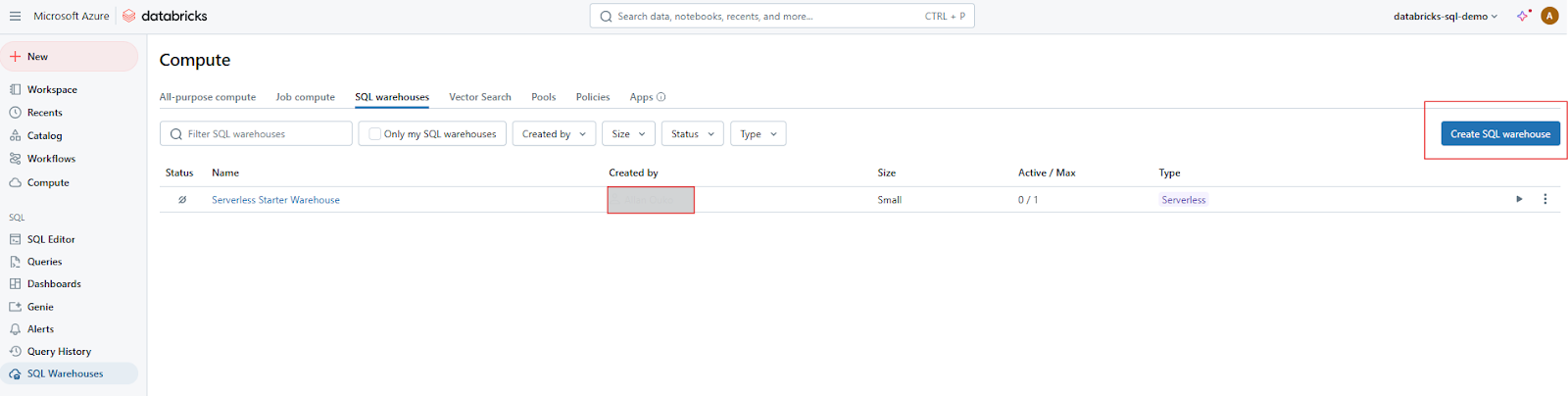

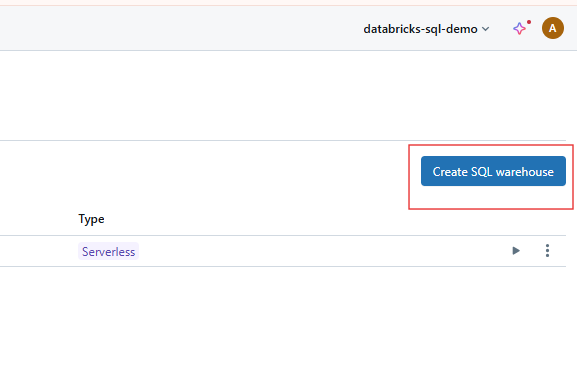

Step 3: Click on "Create Warehouse"

Click the Create Warehouse button to start configuring your new SQL Warehouse.

Creating SQL Warehouse on Databricks SQL. Image by Author.

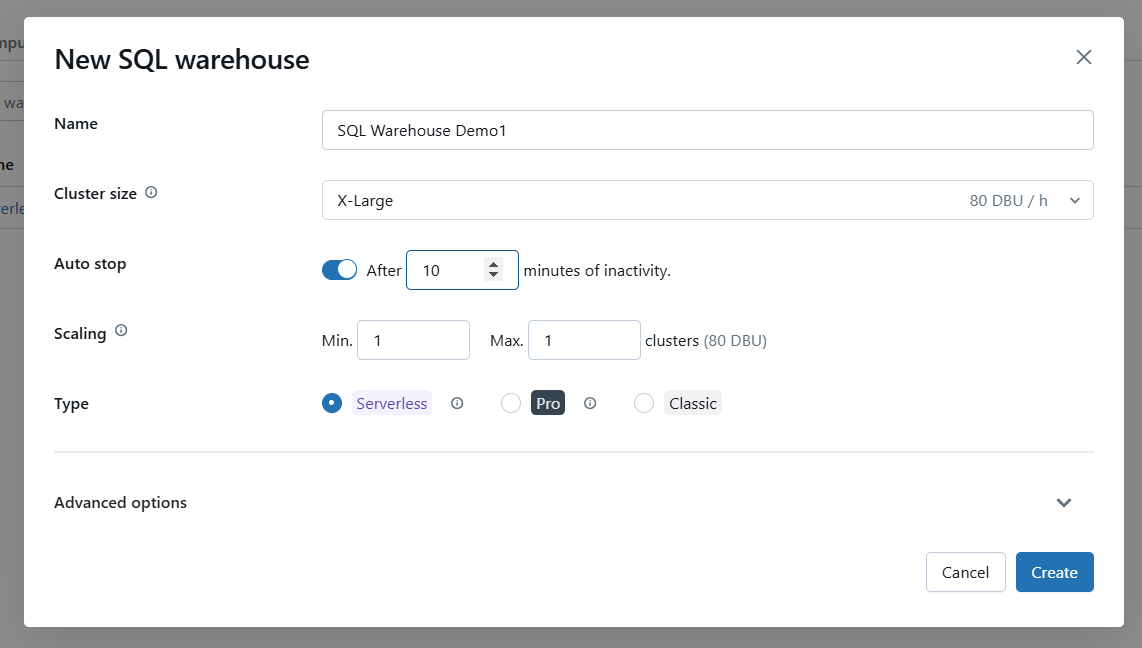

Step 4: Configure basic settings

Configure the warehouse settings using the following options.

- Name: Provide a unique and descriptive name for your SQL Warehouse.

- Cluster Size: Choose the size of the cluster based on your workload requirements. It goes without saying that larger clusters handle more concurrent queries and larger datasets.

- Auto-Stop: Set the idle timeout period to automatically stop the warehouse when it’s not in use. Ten minutes seems reasonable to me. This saves costs by releasing resources when idle.

- Scaling: Enable Auto-Scaling to adjust resources dynamically based on the query load. Specify the minimum and maximum number of clusters within which to scale.

- Serverless Option: If available in your account, choose Serverless SQL Warehouse for simplified management and automatic scaling.

Configure basic settings for Databricks SQL Warehouse. Image by Author.

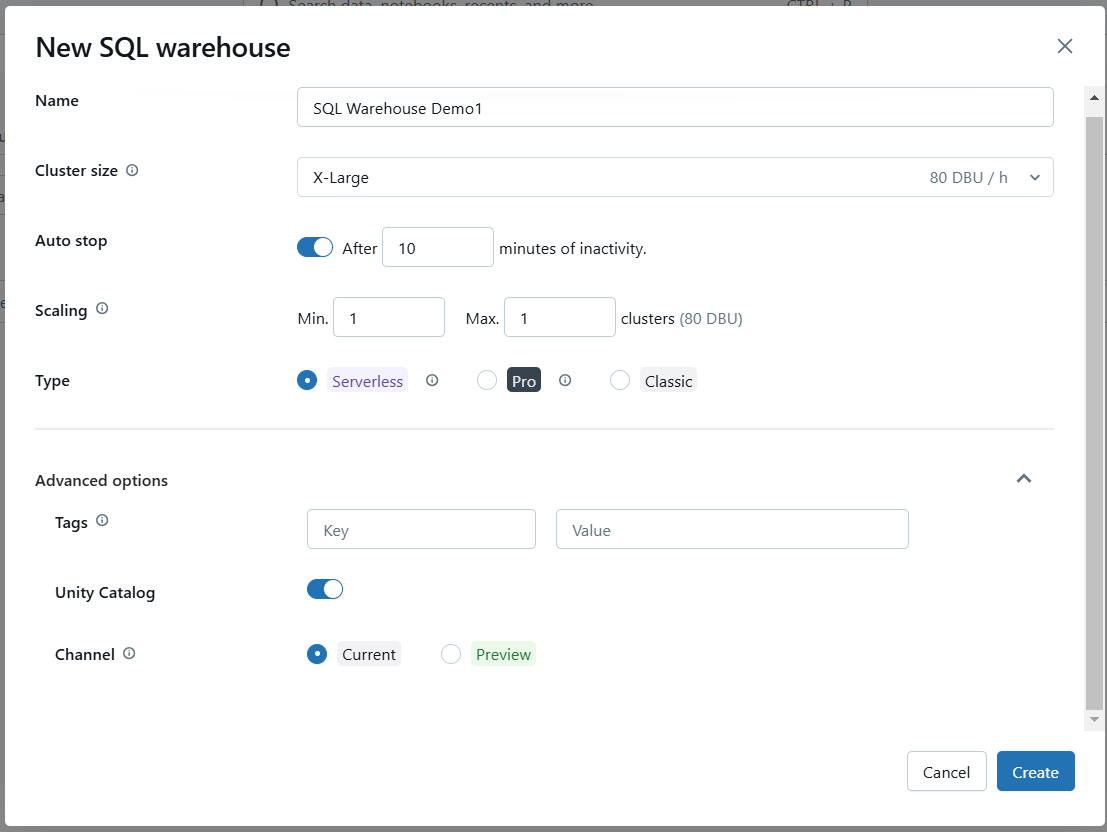

Step 5: Advanced options (Optional)

If needed, configure advanced options such as enabling Photon or setting specific SQL configurations.

Configure advanced settings for Databricks SQL Warehouse. Image by Author.

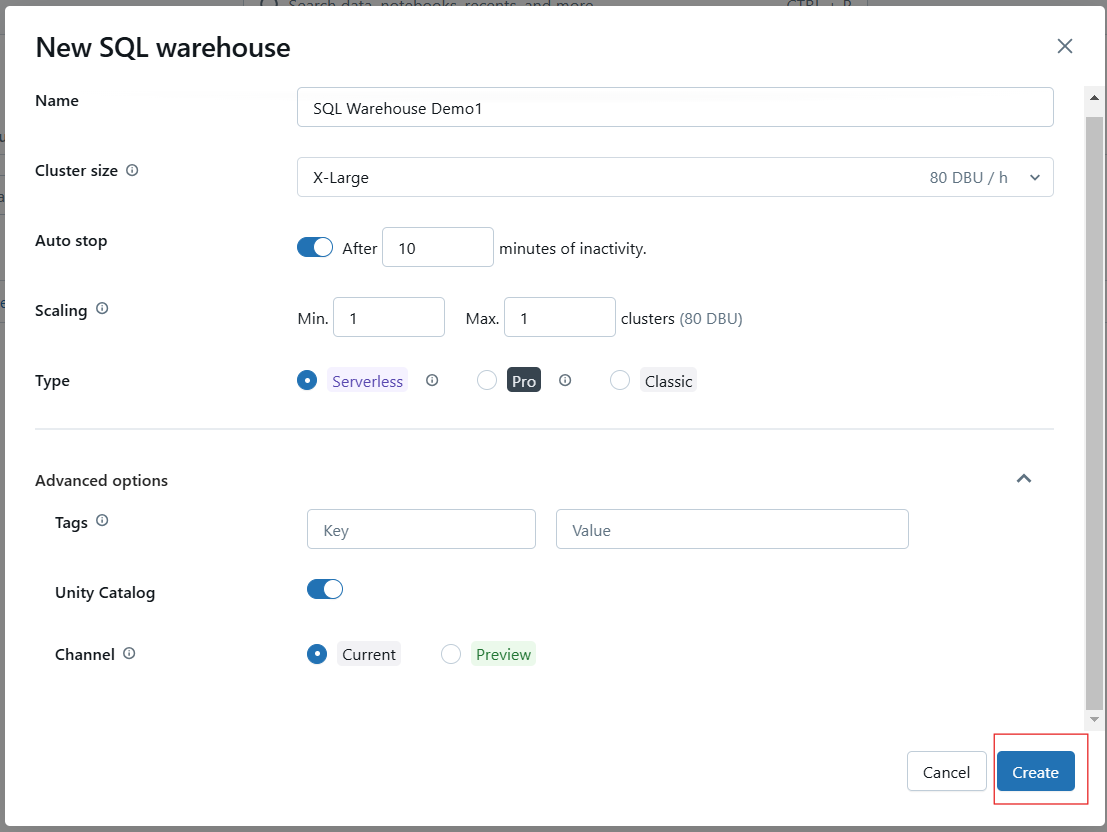

Step 6: Save and start the warehouse

Click Create to save your configuration. Once created, start the warehouse to make it operational.

Creating the Databricks SQL Warehouse. Image by Author.

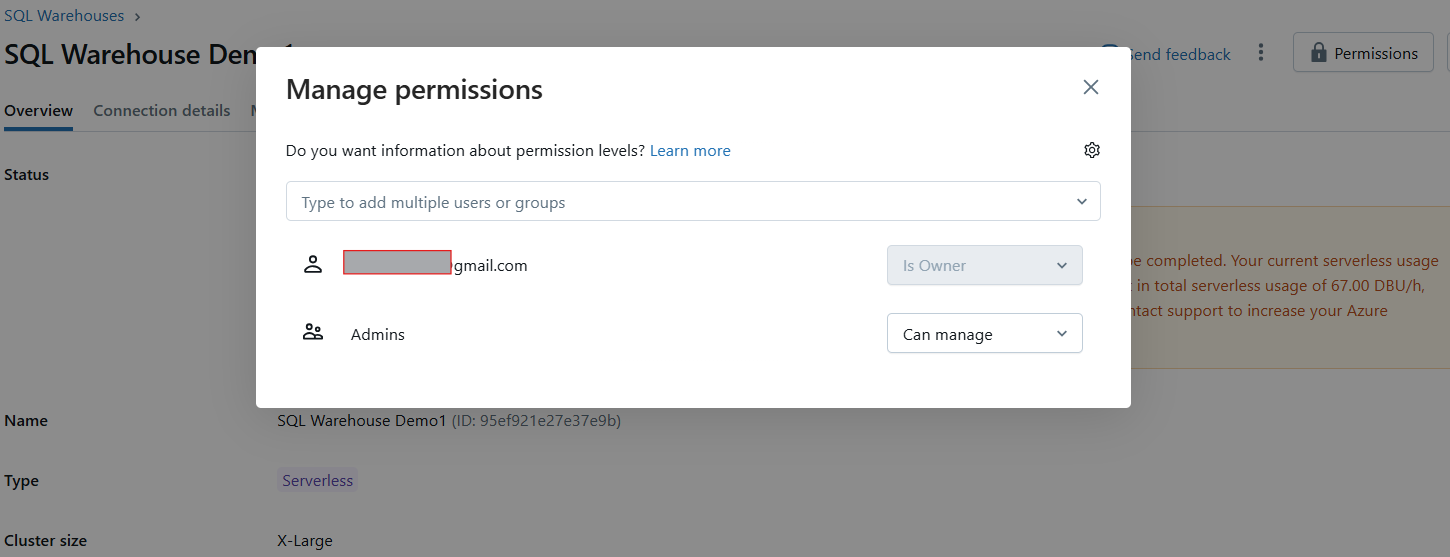

Step 7: Set permissions (Optional)

After creation, a permissions modal will appear where you can grant users or groups access to the warehouse.

Managing permissions of Databricks SQL Warehouse. Image by Author.

Once created, your SQL warehouse will start automatically, allowing you to execute queries immediately.

Example of Databricks SQL Warehouse. Image by Author.

Using a Notebook with a Databricks SQL Warehouse

To execute SQL queries in a notebook using your newly created SQL warehouse, follow these steps:

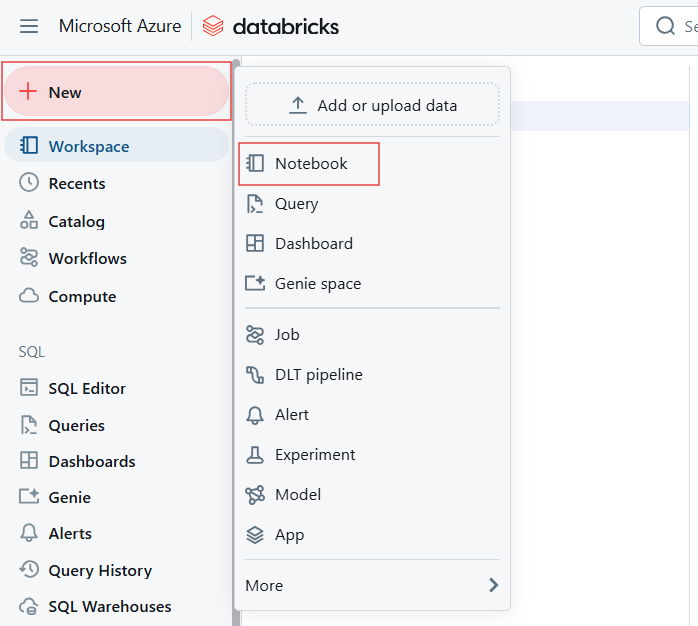

Step 1: Open or Create a Notebook

From the Databricks workspace, create a new notebook or open an existing one.

Creating a new Notebook to create Databricks SQL Warehouse. Image by Author.

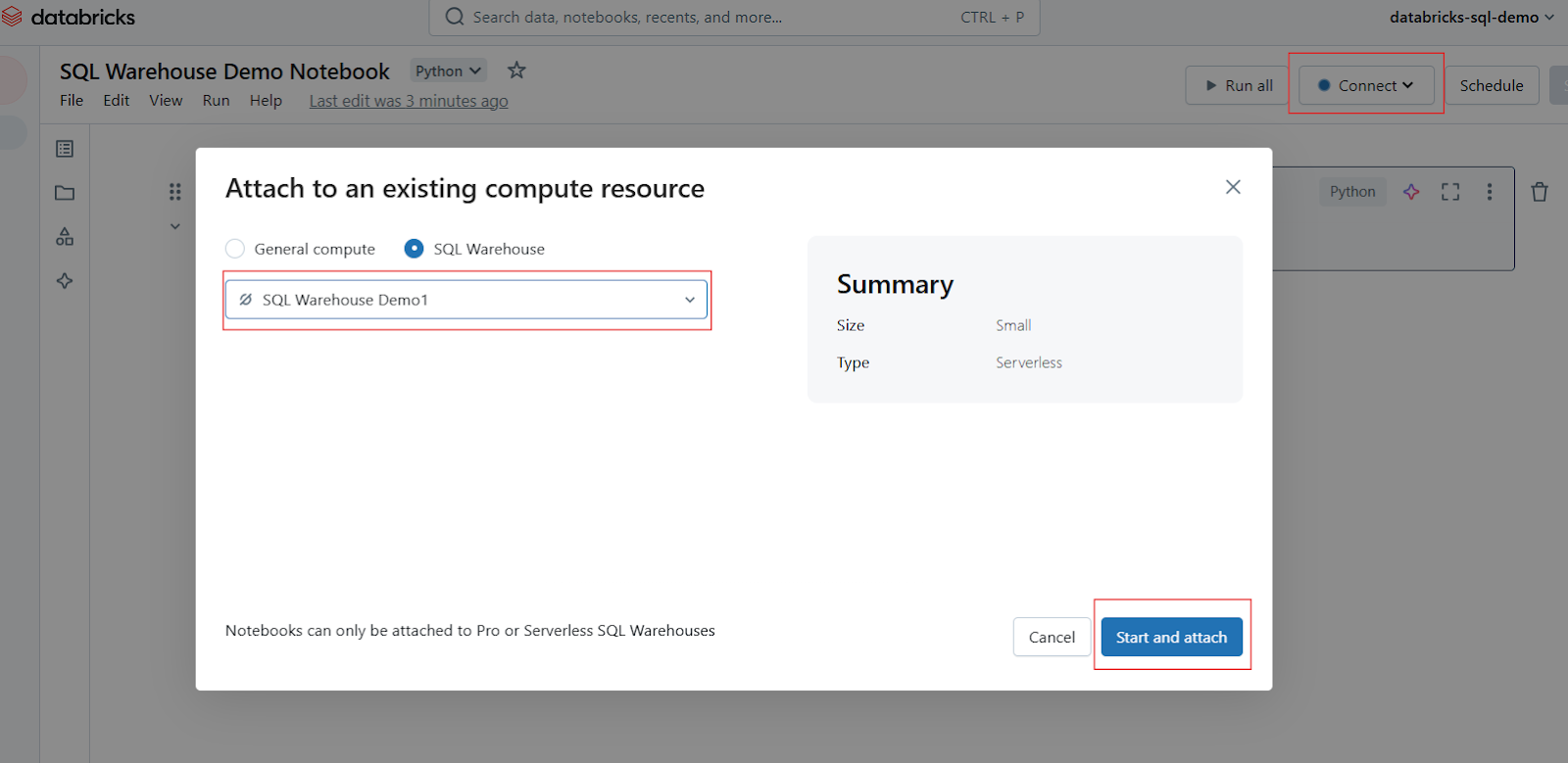

Step 2: Attach the SQL Warehouse

In the Notebook toolbar, locate the compute selector or connect (usually displayed at the top). Click on it to open a dropdown menu showing available compute resources. Select your SQL warehouse from the list. If it’s not visible, click on More… to view all available warehouses. Click on the desired SQL warehouse and then select Start and attach.

Attach Notebook in Databricks SQL Warehouse. Image by Author.

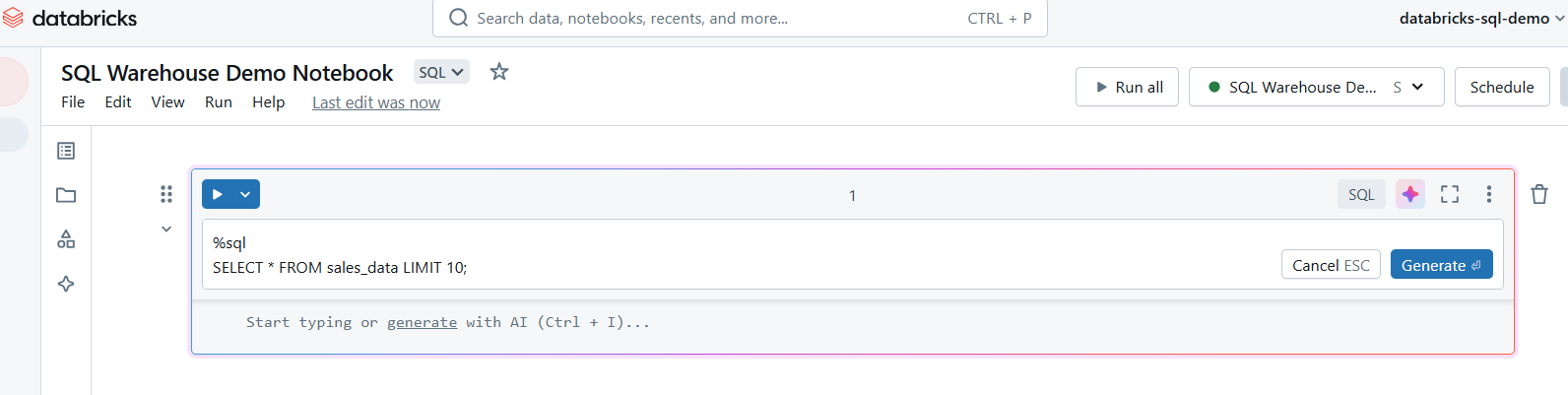

Step 3: Write SQL queries

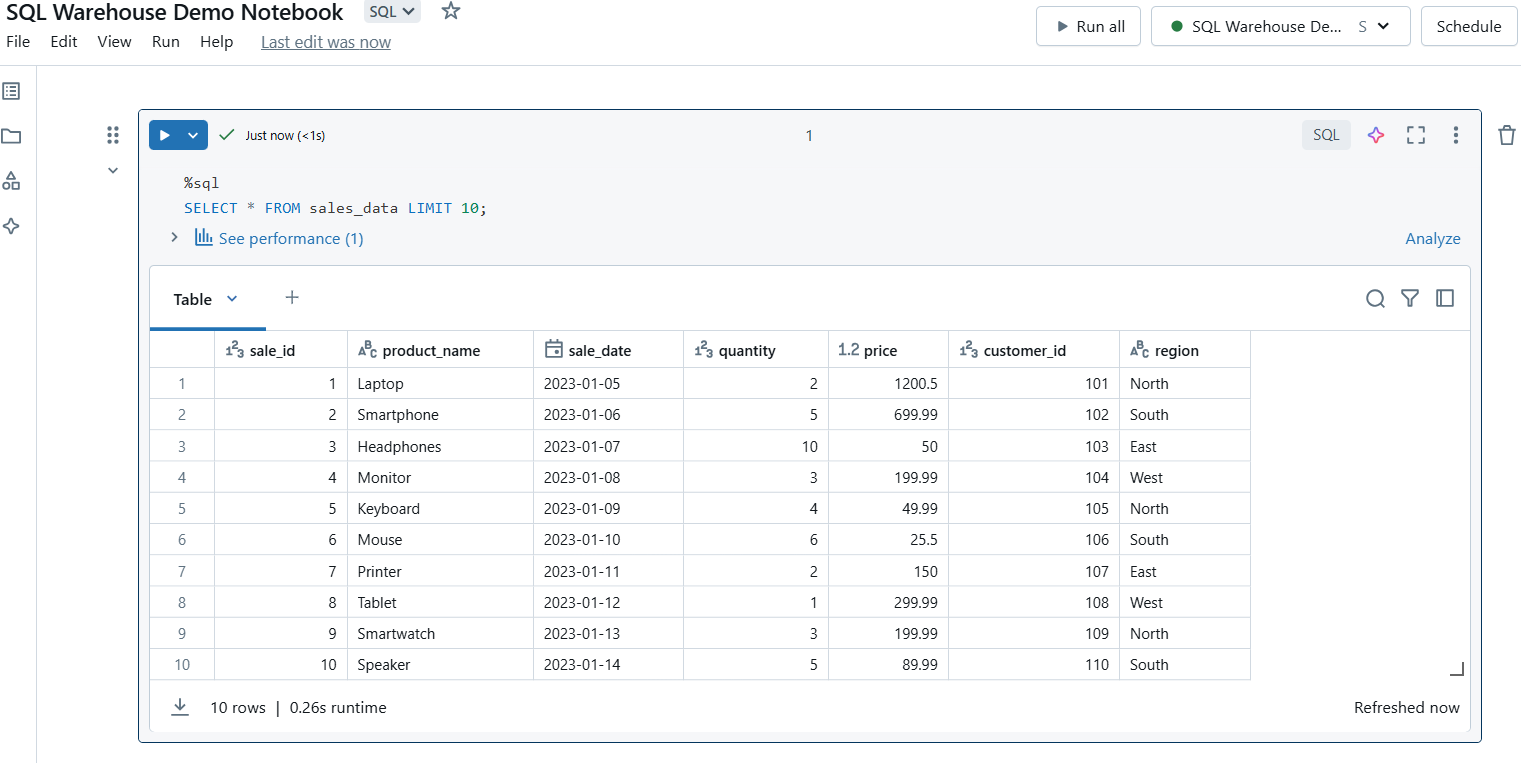

Once attached, you can create cells in your notebook for SQL queries. Use the %sql magic command to execute SQL queries in the notebook.

Write SQL queries in Databricks SQL Warehouse. Image by Author.

Step 4: Execute the queries

Run the cell to execute your query. The results will be displayed directly below the cell, making it easy to preview data.

Execute SQL queries in Databricks SQL Warehouse. Image by Author.

Considerations for using a Notebook with a SQL Warehouse

The following are some considerations when using notebooks with SQL Warehouses:

-

Query Limits: SQL Warehouses are optimized for analytical queries, not for frequent, low-latency transactional queries.

-

Data Preview: For large datasets, consider limiting your query results, such as using the

LIMITclause to avoid performance bottlenecks. -

Query Caching: Take advantage of result caching to speed up repeated queries.

-

Resource Usage: Monitor the SQL Warehouse’s utilization to ensure the cluster size and scaling settings meet your workload needs.

Conclusion

Databricks SQL is a powerful platform that bridges the gap between data lakes and data warehouses, providing a unified solution for modern data analytics and business intelligence. Whether you're building dashboards, optimizing queries, or monitoring data with alerts, Databricks SQL offers the flexibility and performance needed to handle today’s data challenges. I encourage you to explore the features and capabilities of Databricks SQL to enhance your data workflows, empower collaboration, and drive smarter, faster decision-making.

If you are interested in becoming a professional data engineer, I highly recommend taking our Understanding Data Engineering course to learn how data engineers store and process data to facilitate collaboration with data scientists. I also recommend taking our Data-Driven Decision-Making in SQL course to learn how to use SQL to support decision-making using real-world projects. Also, of course, don't forget to look at our great assortment of cloud courses.

Cloud Courses

Databricks SQL FAQs

What is Databricks SQL, and how does it differ from traditional SQL databases?

Databricks SQL is a serverless analytics tool designed for the Databricks Lakehouse platform, combining the scalability of data lakes with the performance of data warehouses.

How do I optimize performance in Databricks SQL?

Use the Photon engine for faster query execution, enable query result caching, and configure auto-scaling in SQL Warehouses to handle workloads efficiently.

Can Databricks SQL be integrated with existing BI tools like Power BI and Tableau?

Yes, Databricks SQL seamlessly integrates with popular BI tools, enabling real-time data visualization and enhanced collaboration across teams.

What are the different types of SQL warehouses in Databricks SQL, and how do I choose the right one?

SQL Warehouses come in Classic, Pro, and Serverless options. Choose based on workload needs: Classic for basic queries, Pro for advanced use cases, and Serverless for simplicity and scalability.

How does Databricks SQL handle data governance and security?

Databricks SQL supports fine-grained access controls row and column-level security, and integrates with identity providers for robust governance.