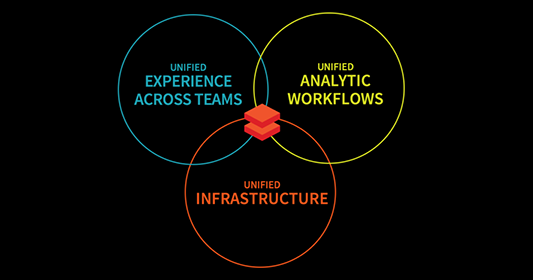

Databricks is an open analytics platform for building, deploying, and maintaining data, analytics, and AI solutions at scale. It is built on Apache Spark and runs on any of the three major cloud providers (AWS, Azure, or GCP), managing and deploying cloud infrastructure on your behalf while supporting a wide range of data science applications.

Today I want to walk you through a comprehensive introduction to Databricks, covering its core features, practical applications, and a structured learning path. From setting up your environment to mastering data processing, orchestration, and visualization, you’ll find everything you need to get started with Databricks.

Why Learn Databricks?

A solid foundation in Databricks offers several advantages:

Databricks has many applications

Databricks has broad applications: it helps businesses clean, transform, process, and optimize large datasets for insights. It enables advanced analytics for better decision-making through data exploration and visualization, and it supports the development and deployment of predictive models and AI solutions.

Databricks will give you an edge

Databricks offers a competitive edge with its cloud compatibility. Built on Apache Spark, it integrates with the major cloud platforms: AWS, Azure, and Google Cloud. Mastery of Databricks helps you stand out in any industry that cares about data.

Key Features of Databricks

Databricks is a comprehensive platform for data engineering, analytics, and machine learning, combining five main features that enhance scalability, collaboration, and workflow efficiency.

Key features of Databricks. Image by Author.

Unified data platform

Databricks combines data engineering, data science, and machine learning workflows into a single platform. This simplifies data processing, model training, and deployment. By unifying these domains, Databricks accelerates AI initiatives, helping businesses transform siloed data into actionable insights while fostering collaboration.

Apache Spark integration

Apache Spark, the leading distributed computing framework, is deeply integrated with Databricks. This allows Databricks to handle Spark configuration automatically. Therefore, users can focus on building data solutions without worrying about the setup.

Furthermore, Spark’s distributed processing power is ideal for big data tasks, and Databricks enhances it with enterprise-level security, scalability, and optimization.

Delta Lake

Delta Lake is the backbone of Databricks’ Lakehouse architecture. It adds features like ACID transactions, schema enforcement, and real-time data consistency to data lake storage. This ensures data reliability and accuracy, making Delta Lake a critical tool for managing both batch and streaming data.
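To make schema enforcement concrete, here is a minimal plain-Python sketch of the idea: a batch of rows is checked against a declared schema and rejected as a whole if any row does not match, so a bad batch never leaves the table half-written. This is an illustration only, not Delta Lake's API; `SCHEMA`, `validate`, `append_rows`, and the sample rows are hypothetical names chosen for the example.

```python
# Illustrative only: mimics the *idea* of schema enforcement with
# all-or-nothing appends. This is NOT the Delta Lake API; all names
# here are hypothetical.

SCHEMA = {"order_id": int, "amount": float, "country": str}


def validate(row, schema):
    """Raise ValueError if the row's columns or types differ from the schema."""
    if set(row) != set(schema):
        raise ValueError(f"column mismatch: {sorted(row)} vs {sorted(schema)}")
    for column, expected_type in schema.items():
        if not isinstance(row[column], expected_type):
            raise ValueError(f"{column!r} should be {expected_type.__name__}")


def append_rows(table, rows, schema=SCHEMA):
    """Append every row or none: validate the whole batch before writing."""
    for row in rows:
        validate(row, schema)
    table.extend(rows)  # only reached when every row passed validation


table = []
append_rows(table, [{"order_id": 1, "amount": 9.5, "country": "ES"}])

bad_batch = [
    {"order_id": 2, "amount": 3.0, "country": "FR"},
    {"order_id": "3", "amount": 4.0, "country": "DE"},  # wrong type for order_id
]
try:
    append_rows(table, bad_batch)
    rejected = False
except ValueError:
    rejected = True
```

Because the whole batch is validated before anything is written, the valid first row of `bad_batch` is not added either, which is the all-or-nothing behavior that transactional writes are meant to give you.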

MLflow

MLflow is an open-source platform for managing the entire machine learning lifecycle. From tracking experiments to managing model deployment, MLflow simplifies the process of building and operationalizing ML models.

Additionally, with the latest integrations for generative AI tools like OpenAI and Hugging Face, MLflow extends its capabilities to include cutting-edge applications such as chatbots, document summarization, and sentiment analysis.

Collaboration tools

Databricks fosters collaboration through:

- Interactive Notebooks: Combine code, markdown, and visuals to document workflows and share insights seamlessly.

- Real-Time Sharing: Collaborate live on notebooks for instant feedback and streamlined teamwork.

- Version Control Features: Track changes and integrate with Git for secure and efficient project management.

How to Start Learning Databricks

Getting started with Databricks can be both exciting and overwhelming. That’s why the first step in learning any new technology is to have a clear understanding of your goals—why you want to learn it and how you plan to use it.

Set clear goals

Before diving in, define what you want to achieve with Databricks.

Are you looking to streamline big data processing as a data engineer? Or are you focused on harnessing its ML capabilities to build and deploy predictive models?

By defining your main objectives, you can create a focused learning plan accordingly. Here are some tips depending on your main aspiration:

- If your focus is data engineering, prioritize learning about Databricks' tools for data ingestion, transformation, and management, as well as its seamless integration with Apache Spark and Delta Lake.

- If your focus is machine learning, concentrate on understanding MLflow for experiment tracking, model management, and deployment, as well as leveraging the platform’s built-in support for libraries like TensorFlow and PyTorch.

Start with the basics

Getting started with Databricks can be easier than you might think. I will guide you through a step-by-step approach so you can familiarize yourself with the platform’s essentials.

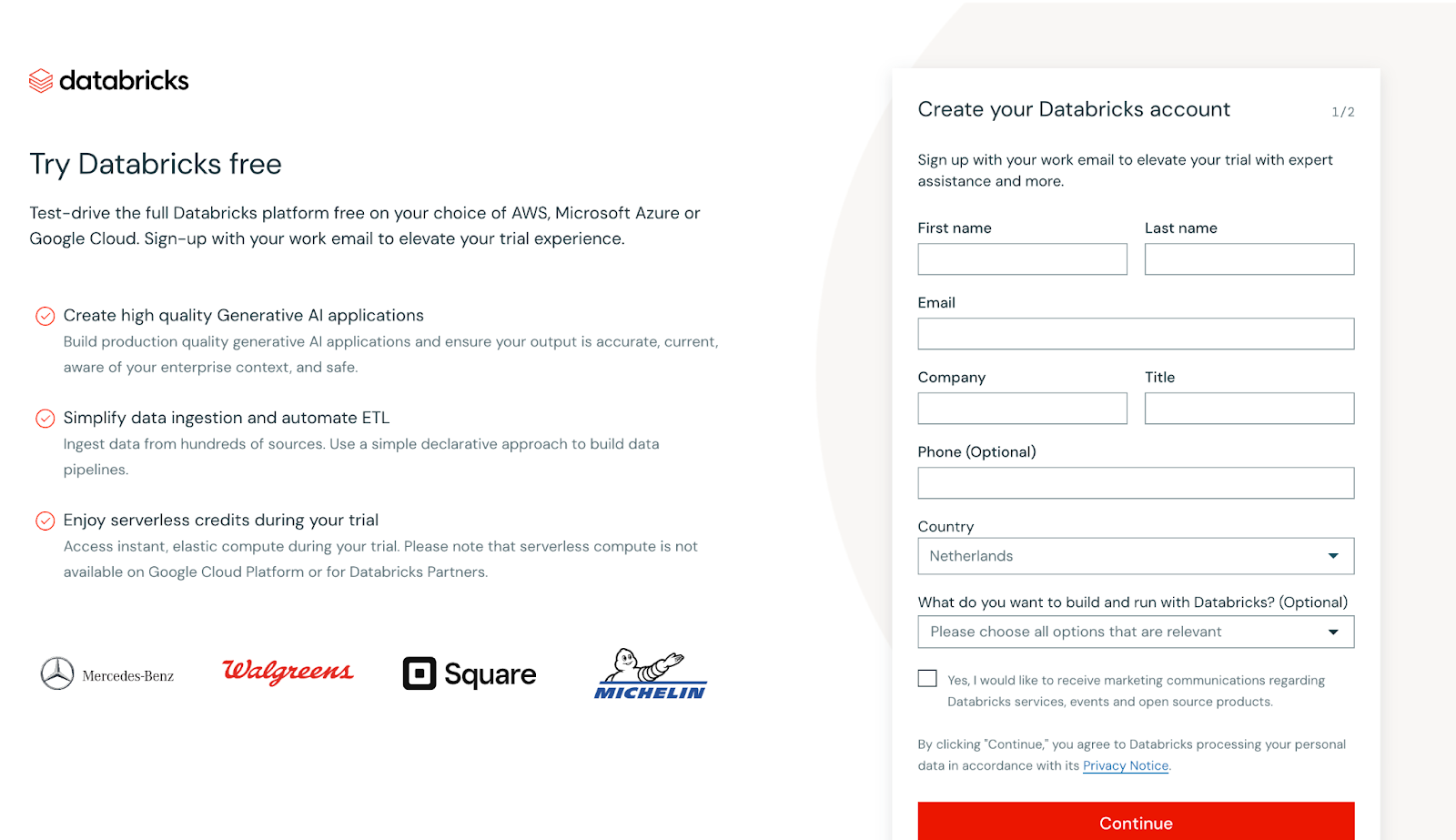

Sign up for free

Begin by creating a free account on Databricks Community Edition, which provides access to core features of the platform at no cost. This edition is perfect for hands-on exploration, allowing you to experiment with Workspaces, Clusters, and Notebooks without needing a paid subscription.

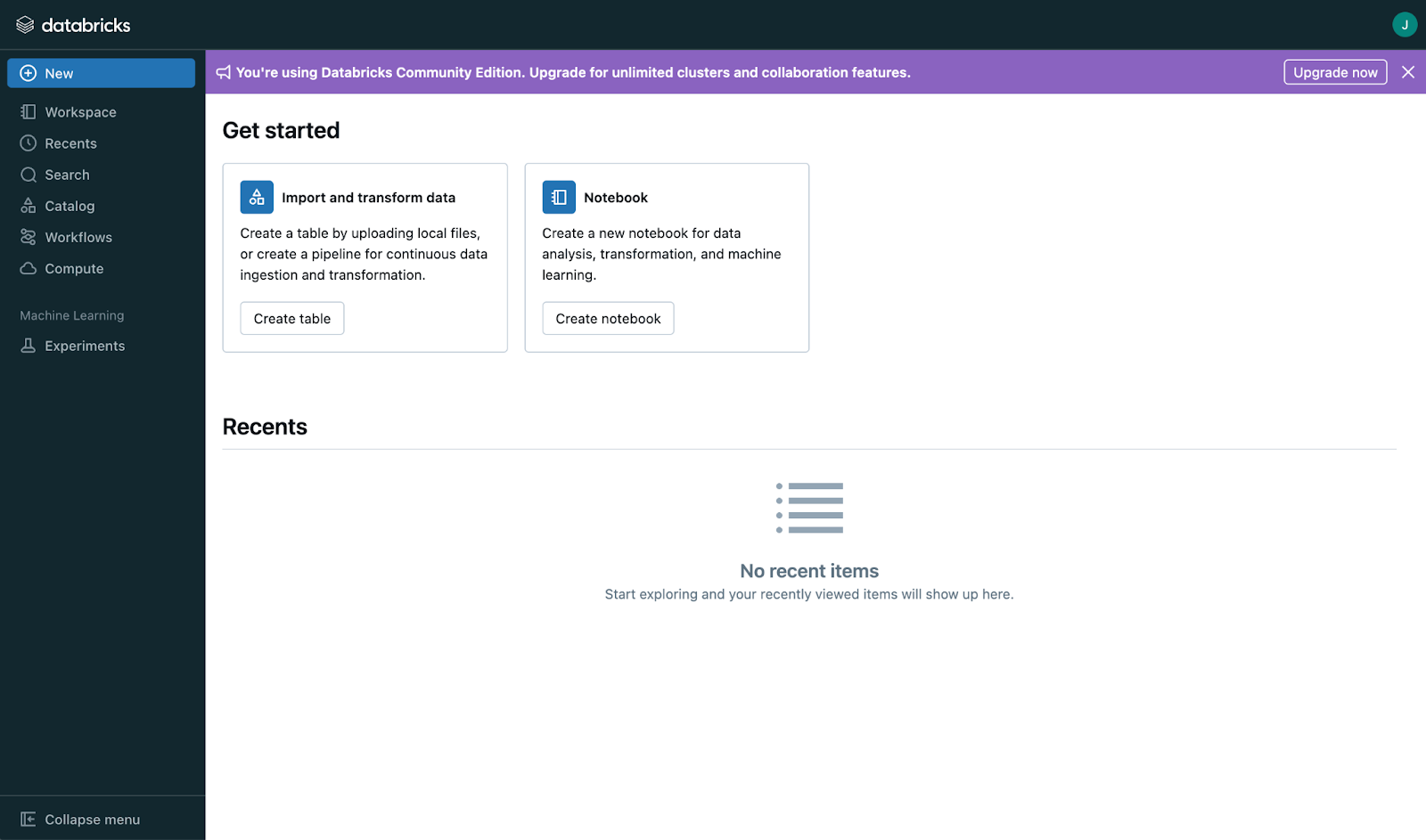

Screenshot of Databricks signing up main view. Image by Author

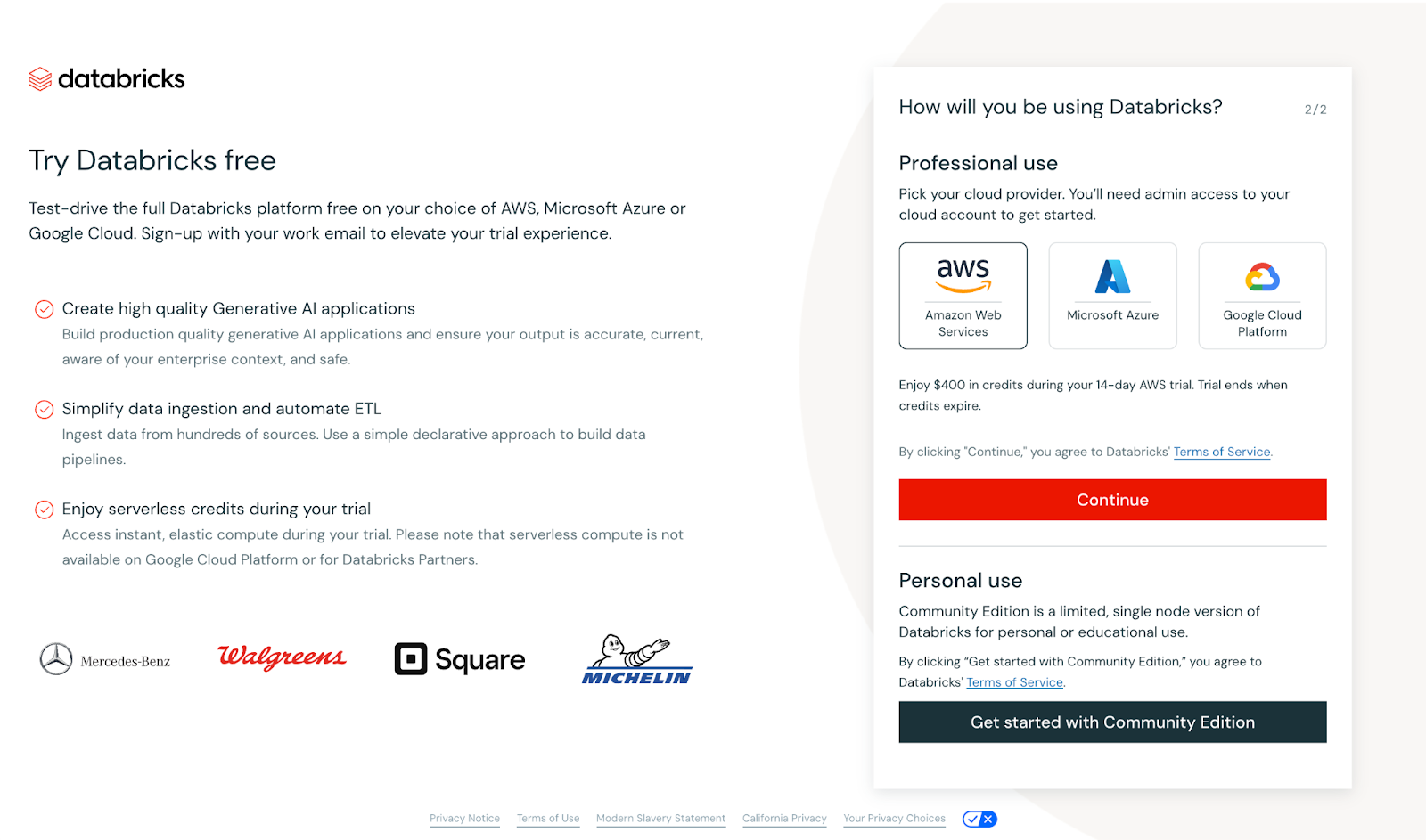

Once you give your details, the following view will appear.

Screenshot of Databricks signing up main view. Image by Author

At this point, you’ll be prompted to either set up a cloud provider or proceed with the Community Edition. To keep things accessible, we’ll use the Community Edition. While it offers fewer features than the Enterprise version, it’s ideal for smaller use cases like tutorials and doesn’t require a cloud provider setup.

After selecting the Community Edition, verify your email address. Once verified, you’ll be greeted with a main dashboard that looks like this:

Screenshot of Databricks main page. Image by Author

Begin with the interface

Once logged in, take time to understand the layout. At first glance, the interface might seem basic, but once you explore further or upgrade your account, you’ll uncover lots of great features:

- Workspaces: This is where you organize your projects, notebooks, and files. Think of it as the hub for all your work.

- Notebooks: Write and execute code in various programming languages within the same notebook.

- Cluster Management: Clusters are groups of virtual machines that handle data processing. They provide the computing power you’ll need for tasks like data transformations and machine learning.

- Table Management: Organize and analyze structured data efficiently.

- Dashboard Creation: Build interactive dashboards directly in the workspace to visualize insights.

- Collaborative Editing: Work on notebooks in real-time with teammates for seamless collaboration.

- Version Control: Track notebook changes and manage versions effortlessly.

- Job Scheduling: Automate notebook and script execution at specified intervals for streamlined workflows.

Learn core concepts

Databricks has three core concepts that anyone who wants to master the platform needs to understand:

- Clusters: The backbone of Databricks, clusters are computing environments that execute your code. Learn how to create, configure, and manage them to suit your processing needs.

- Jobs: Automate repetitive tasks by creating jobs that run your notebooks or scripts on a schedule, streamlining workflows.

- Notebooks: These are interactive documents where you write and execute code, visualize results, and document findings. Notebooks support multiple languages like Python, SQL, and Scala, making them versatile for various tasks.

Databricks Learning Plan

Here is my idea of a three-step learning plan.

Step 1: Master Databricks fundamentals

Start your journey by building a strong foundation in the Databricks essentials.

Data management

Managing data effectively is at the core of any data platform, and Databricks simplifies this process with robust tools for loading, transforming, and organizing data at scale. Here's an overview of the key aspects of data management in Databricks:

Loading data

Databricks supports a variety of data sources, making it easy to ingest data from structured, semi-structured, and unstructured formats.

- Supported Data Formats: CSV, JSON, Parquet, ORC, Avro, and more.

- Data Sources: Cloud storage systems like AWS S3, Azure Data Lake, and Google Cloud Storage, as well as relational databases and APIs.

- Auto Loader: A feature of Databricks that simplifies loading data from cloud storage in a scalable, incremental manner, perfect for handling continuously growing datasets.
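The incremental pattern behind Auto Loader can be sketched in plain Python: keep a record of the files already processed and, on each run, pick up only the new ones. This is a simplified illustration using the standard library, not the Auto Loader API; the function `discover_new_files`, the checkpoint file, and the directory layout are assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path


def discover_new_files(source_dir, checkpoint_path):
    """Return files in source_dir not seen in earlier runs, then record them."""
    checkpoint = Path(checkpoint_path)
    seen = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()
    current = {p.name for p in Path(source_dir).glob("*.csv")}
    new_files = sorted(current - seen)
    # Persist everything seen so far so the next run skips these files.
    checkpoint.write_text(json.dumps(sorted(seen | current)))
    return new_files


# Demo in a throwaway directory (hypothetical file names).
workdir = Path(tempfile.mkdtemp())
landing = workdir / "landing"
landing.mkdir()
checkpoint_file = workdir / "checkpoint.json"

(landing / "day1.csv").write_text("id,value\n1,10\n")
first_run = discover_new_files(landing, checkpoint_file)

(landing / "day2.csv").write_text("id,value\n2,20\n")
second_run = discover_new_files(landing, checkpoint_file)

third_run = discover_new_files(landing, checkpoint_file)
```

Each run returns only the files that arrived since the previous one, which is what makes this style of ingestion a good fit for continuously growing datasets.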

Transforming data

Once your data is ingested, Databricks provides powerful tools for cleaning and transforming it to prepare it for analysis or machine learning workflows.

- DataFrames: These provide an intuitive way to perform transformations, similar to SQL or pandas in Python. You can filter, aggregate, and join datasets with ease.

- SparkSQL: For those familiar with SQL, Databricks allows you to query and manipulate your data directly using SQL commands.

- Delta Lake: Enhance data transformations with Delta Lake’s support for schema enforcement, versioning, and real-time consistency.
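As a rough illustration of the filter, aggregate, and join operations mentioned above, the sketch below runs one SQL query that does all three. It uses Python's built-in `sqlite3` module so it runs without a cluster; in a Databricks notebook, SQL of this kind would typically go in a SQL cell or be passed to `spark.sql(...)`, and dialect details may differ. The table and column names are made up for the example.

```python
import sqlite3

# In-memory database with two small, made-up tables.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, country TEXT);
    INSERT INTO orders VALUES (1, 10, 20.0), (2, 10, 5.0), (3, 11, 50.0), (4, 12, 1.0);
    INSERT INTO customers VALUES (10, 'ES'), (11, 'FR'), (12, 'ES');
    """
)

query = """
    SELECT c.country, COUNT(*) AS n_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON o.customer_id = c.customer_id   -- join
    WHERE o.amount >= 5.0                                   -- filter
    GROUP BY c.country                                      -- aggregate
    ORDER BY c.country
"""
rows = conn.execute(query).fetchall()
conn.close()
```

The query keeps orders of at least 5.0, attaches each order's country through the join, and then totals orders and revenue per country.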

Managing data

Databricks enables seamless management of your data across different stages of the workflow.

- Data Lakehouse: Databricks combines the best of data lakes and data warehouses, providing a single platform for all data needs.

- Partitioning: Optimize performance and storage by partitioning your datasets for faster queries and processing.

- Metadata Handling: Databricks automatically tracks and updates metadata for your datasets, simplifying data governance and query optimization.
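To see why partitioning speeds up queries, consider this small plain-Python sketch. Rows are written into one folder per value of a partition column, using the `column=value` folder naming that Spark also uses for partitioned tables, so a query for one value only has to open that folder. The function names and sample data are hypothetical, and on Databricks the engine handles this layout for you.

```python
import csv
import tempfile
from pathlib import Path


def write_partitioned(rows, root, partition_col):
    """Write rows to root/<partition_col>=<value>/part.csv, one folder per value."""
    for row in rows:
        folder = Path(root) / f"{partition_col}={row[partition_col]}"
        folder.mkdir(parents=True, exist_ok=True)
        target = folder / "part.csv"
        is_new = not target.exists()
        with target.open("a", newline="") as handle:
            writer = csv.DictWriter(handle, fieldnames=list(row))
            if is_new:
                writer.writeheader()
            writer.writerow(row)


def read_partition(root, partition_col, value):
    """Read only the folder for one partition value; other folders stay unopened."""
    target = Path(root) / f"{partition_col}={value}" / "part.csv"
    with target.open(newline="") as handle:
        return list(csv.DictReader(handle))


events = [
    {"event_id": "1", "country": "ES", "clicks": "3"},
    {"event_id": "2", "country": "FR", "clicks": "7"},
    {"event_id": "3", "country": "ES", "clicks": "1"},
]
root = tempfile.mkdtemp()
write_partitioned(events, root, "country")

partitions = sorted(p.name for p in Path(root).iterdir())
spanish_events = read_partition(root, "country", "ES")
```

Reading the `ES` events touches a single folder no matter how many other countries exist, which is the effect a query engine exploits when it skips irrelevant partitions.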

Apache Spark basics

Familiarize yourself with Spark’s core concepts, including:

- RDDs (Resilient Distributed Datasets): The foundational data structure for distributed computing.

- DataFrames: High-level abstractions for structured data, enabling efficient processing and analysis.

- SparkSQL: A SQL interface for querying and manipulating data in Spark.

Suggested resources

- Databricks Academy: Start with their Fundamentals learning path for guided lessons.

- Follow DataCamp’s Introduction to Databricks course to get started and understand the platform's basics.

- Your cloud provider’s documentation is another good starting point. For instance, Azure has some good introductory content on Databricks.

Step 2: Get hands-on with Databricks

The best way to learn any new tool, including Databricks, is through hands-on practice. By applying the concepts you’ve learned to real-world scenarios, you’ll not only build confidence but also deepen your understanding of the platform’s powerful capabilities.

Engage in mini-projects

Practical projects are an excellent way to explore Databricks’ features while developing key skills. Here are a few starter projects to consider:

- Build an End-to-End Data Engineering Project

- Follow a complete data engineering workflow by implementing an ETL process on Databricks.

- Extract data from a cloud storage service, such as AWS S3 or Azure Blob Storage.

- Transform the data using Spark for tasks like cleansing, deduplication, and aggregations.

- Load the processed data into a Delta table for efficient querying and storage.

- Add value by creating a dashboard or report that visualizes insights from the processed data.

- Reference: Follow this project here.
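The extract, transform, and load steps listed above can be sketched end to end in a few lines. This version uses only the Python standard library and an in-memory CSV string so it runs anywhere; in a real Databricks project, the extract step would read from cloud storage, the transform step would use Spark, and the load step would write a Delta table. All names and data are illustrative.

```python
import csv
import io
from collections import defaultdict

# Made-up raw data with a duplicate row, inconsistent casing, and a missing value.
RAW_CSV = """order_id,country,amount
1,ES,10.0
2,FR,5.5
2,FR,5.5
3,es,4.5
4,FR,
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows, normalize, deduplicate, then aggregate."""
    seen_ids = set()
    totals = defaultdict(float)
    for row in rows:
        if not row["amount"]:                # cleansing: skip rows missing an amount
            continue
        if row["order_id"] in seen_ids:      # deduplication on order_id
            continue
        seen_ids.add(row["order_id"])
        country = row["country"].upper()     # cleansing: normalize country codes
        totals[country] += float(row["amount"])
    return dict(totals)                      # aggregation: revenue per country


def load(totals, target):
    """Load: write the aggregated result into the target store (a dict here)."""
    target.update(totals)
    return target


warehouse = {}
load(transform(extract(RAW_CSV)), warehouse)
```

Keeping the three stages as separate functions mirrors how the project is structured: each stage can be swapped for its Databricks counterpart without changing the others.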

You can find many other projects (from intermediate to advanced) on the following website about learning based on projects. These projects not only help reinforce core concepts but also prepare you for more complex workflows in data engineering and machine learning.

Use Databricks Community Edition labs

The Databricks Community Edition provides a free, cloud-based environment perfect for experimentation. Use it to access:

- Prebuilt Labs: Hands-on labs designed to teach you key skills like working with clusters, notebooks, and Delta Lake.

- Interactive Notebooks: Practice coding directly in Databricks notebooks, which support Python, SQL, Scala, and R.

- Collaborative Features: Experiment with real-time collaboration tools to simulate team-based projects.

Showcase your skills

Creating a diverse portfolio is an excellent way to showcase your expertise. Documenting and presenting your projects is just as important as building them. Use GitHub or a personal website to:

- Provide detailed descriptions of each project, including objectives, tools used, and outcomes.

- Share code repositories with clear documentation to make them accessible to others.

- Include visuals such as screenshots, diagrams, or dashboards to illustrate results.

- Write a blog post or project summary to highlight challenges, solutions, and key learnings.

By combining hands-on experience with a polished portfolio, you’ll effectively demonstrate your expertise and stand out in the competitive Databricks ecosystem.

Step 3: Deepen your skills in specialized areas

Once you’ve mastered the fundamentals of Databricks and gained hands-on experience, the next step is to focus on specialized areas that align with your career goals. Whether you’re interested in data engineering, machine learning, or gaining certifications to validate your skills, this step will help you build advanced expertise:

- Data Engineering: Focus on Delta Lake and stream processing.

- Machine Learning: Study MLflow for model tracking and deployment.

- Certifications: Validate your skills with certifications like Databricks Certified Associate Developer for Apache Spark and Databricks Certified Professional Data Scientist.

Data engineering

Data engineering is at the heart of building scalable data pipelines, and Databricks offers powerful tools to support this work. To advance your skills in this area:

| Focus Area | Key Activities and Learning Goals |
|---|---|
| Learn Delta Lake | Understand Delta Lake’s role in ensuring data reliability and consistency with features like ACID transactions and schema enforcement. Explore how Delta Lake facilitates batch and streaming data processing on a unified platform. Practice creating, querying, and optimizing Delta tables for high-performance analytics. |
| Dive into Stream Processing | Study Spark Structured Streaming to build real-time data pipelines. Experiment with use cases like event stream processing, log analytics, and real-time dashboards. Learn how to integrate streaming data with Delta Lake for continuous data flow management. |

Machine learning

Databricks’ machine learning ecosystem speeds up model development and deployment. To specialize in this area:

| Focus Area | Key Activities and Learning Goals |
|---|---|
| Master MLflow | Use MLflow to track experiments, log parameters, and evaluate metrics from your models. Learn about the MLflow Model Registry to manage models throughout their lifecycle, from development to production. Explore advanced features like GenAI support for creating applications such as chatbots and document summarization. |
| Focus on Deployment | Practice deploying machine learning models as REST APIs or using frameworks like Azure ML and Amazon SageMaker. Experiment with Databricks Model Serving to scale deployments efficiently. |
| Incorporate Advanced Libraries | Use TensorFlow, PyTorch, or Hugging Face Transformers to build sophisticated models within Databricks. |

Get certified

Certifications validate your expertise and make you stand out in the job market. Databricks offers several certifications tailored to different roles and skill levels:

| Certification | Description |
|---|---|
| Databricks Certified Associate Developer for Apache Spark | Designed for developers, this certification focuses on Spark programming concepts, DataFrame APIs, and Spark SQL. |
| Databricks Certified Data Engineer Associate | Covers key skills like building reliable data pipelines using Delta Lake and optimizing data storage for analytics. |
| Databricks Certified Professional Data Scientist | For advanced practitioners, this certification tests your ability to build, deploy, and monitor machine learning models using Databricks tools. |

Databricks Learning Resources

Courses and tutorials

- Databricks Academy: Offers official training paths covering core topics like data engineering, machine learning, and Databricks SQL. Perfect for gaining certifications and mastering Databricks tools.

- DataCamp: Provides beginner-friendly introductions to Databricks and advanced guides focused on getting certifications.

- Coursera and edX: Feature courses from leading institutions and industry experts focusing on Databricks workflows and Spark programming.

- Udemy: Offers cost-effective courses on topics like ETL pipelines, Delta Lake, and Databricks fundamentals.

- Community Resources: Explore blogs, YouTube channels (like Databricks or TheSeattleGuy), and tutorials from experienced users for practical, hands-on learning.

Documentation and community support

- Databricks Documentation: A comprehensive resource for understanding platform features, APIs, and best practices. Includes tutorials, FAQs, and guides to help beginners and advanced users alike.

- Databricks Community Edition: A free environment for testing and learning Databricks without needing a cloud account.

- User Forums: Join Databricks’ community forums or participate in discussions on platforms like Stack Overflow for troubleshooting and knowledge sharing.

- GitHub Repositories: Access open-source projects, example notebooks, and code snippets shared by Databricks engineers and the community, such as the 30 days of Databricks challenge.

Cheat sheets and reference guides

- Spark Command Cheat Sheets: Use Stanford’s Spark commands cheat sheet to enhance your learning experience.

- Delta Lake Quick Start: Downloadable guides to set up Delta Lake for efficient data storage and transactions.

- Databricks CLI and API Guides: Use these for managing your Databricks environment programmatically.

To master Databricks effectively, it’s essential to leverage a mix of structured courses, official documentation, and quick-reference materials. The resources listed above can guide your learning journey and support you as you build expertise.

Staying Updated with Databricks

Continuous learning

Staying current with Databricks is essential as the platform frequently evolves with new features and enhancements.

- Refresh Your Skills: Regularly revisit foundational concepts like Spark, Delta Lake, and MLflow, and explore updates to stay ahead.

- Experiment with New Features: Utilize the free Community Edition or sandbox environments to test recently introduced tools and capabilities.

- Explore Certification Options: Databricks offers certification programs that validate your skills and help you stay aligned with the latest industry practices.

Following blogs and news

- Databricks Official Blog: Stay informed about product announcements, best practices, and success stories on the official Databricks blog.

- Tech News Platforms: Follow sources like TechCrunch, InfoWorld, and Medium to learn how Databricks is driving innovation across industries.

Engaging with the community

- Join User Groups: Participate in Databricks-focused groups on Meetup, LinkedIn, or Discord to share knowledge and learn collaboratively.

- Attend Conferences: Events like the Data + AI Summit, Databricks’ annual conference, are excellent for networking and exploring cutting-edge use cases.

- Participate in Forums: Engage in discussions on platforms like Stack Overflow, Databricks community forums, and Reddit’s data engineering subreddits.

Subscribing to DataFramed

Subscribe to the DataFramed podcast for insights into the latest trends and to hear expert interviews. One great Databricks-related episode I recommend even features the Field CTO of Databricks: [AI and the Modern Data Stack] How Databricks is Transforming Data Warehousing and AI with Ari Kaplan, Head Evangelist & Robin Sutara, Field CTO at Databricks.

Conclusion

Databricks empowers professionals to solve challenges and unlock career opportunities. I'm happy I learned Databricks, and I encourage you to keep studying. Remember to:

- Define Your Goals: Align your learning path with specific objectives, whether in data engineering, AI, or analytics.

- Utilize Resources: Leverage structured courses, community editions, and hands-on projects to gain experience.

- Stay Engaged: Follow updates, participate in the Databricks community, and embrace continuous learning to stay ahead.

Josep is a freelance Data Scientist specializing in European projects, with expertise in data storage, processing, advanced analytics, and impactful data storytelling.

As an educator, he teaches Big Data in the Master’s program at the University of Navarra and shares insights through articles on platforms like Medium, KDNuggets, and DataCamp. Josep also writes about Data and Tech in his newsletter Databites (databites.tech).

He holds a BS in Engineering Physics from the Polytechnic University of Catalonia and an MS in Intelligent Interactive Systems from Pompeu Fabra University.

Frequently Asked Questions When Learning Databricks

What is Databricks, and why is it important for data professionals?

Databricks is an open analytics platform for building, deploying, and maintaining data, analytics, and AI solutions at scale. Its integration with major cloud providers (AWS, Azure, GCP) and robust tools make it a game-changer for data professionals by streamlining big data processing and enabling advanced analytics and machine learning.

What are the three main advantages of learning Databricks?

1) Career Growth: High demand and shortage of skilled professionals; 2) Broad Applications: Enables data transformation, advanced analytics, and machine learning; 3) Competitive Edge: Offers compatibility with AWS, Azure, GCP, and integrates seamlessly with Apache Spark.

What key features make Databricks a comprehensive platform?

Key features include a unified data platform combining data engineering, analytics, and machine learning, Apache Spark integration for distributed processing, Delta Lake for data consistency, MLflow for managing machine learning lifecycles, and collaborative tools like interactive notebooks.

How can beginners get started with Databricks?

Beginners can start by defining their learning goals, signing up for the free Databricks Community Edition, exploring the interface (workspaces, clusters, notebooks), and working on hands-on projects such as building ETL pipelines or experimenting with MLflow.

How can professionals stay updated with Databricks?

Stay updated by regularly revisiting core concepts like Delta Lake and MLflow, experimenting with new features in the Community Edition, following the Databricks Official Blog, and engaging with the Databricks community through forums, conferences, and user groups.