Gone are the days when we were happy with large language models that can only process text. We now demand multimodal LLMs capable of understanding and interacting with text, images, and videos.

Enter Llama 3.2 11B & 90B vision models, Meta AI’s first open-source multimodal models, capable of processing both text and image inputs.

In this hands-on guide, I will take you through the process of creating a multimodal customer support assistant with the help of Llama 3.2 and Gradio. By the end of this tutorial, you will have a fully functional web application that can analyze textual descriptions and uploaded images to generate helpful solutions - just like a support ticket assistant would!

If you need a quick introduction to Llama 3.2 before we get started, I recommend reading this Llama 3.2 guide.

Initial Setup

In this hands-on demo, we’ll be using the multimodal Llama-3.2-11B-Vision-Instruct model. Before starting to code, let’s make sure we have all the necessary dependencies.

We need a few libraries to make everything work. The key ones are:

- Transformers: The core library for working with models like Llama 3.2.

- Torch: The deep learning library that powers our model.

- Gradio: For building our user interface.

Run the following commands to install the necessary dependencies:

!pip3 install -U transformers bitsandbytes accelerate peft -q

!pip3 install gradio -q

Load the Llama 3.2 Model and Processor

Now, let’s load the Llama 3.2 model and processor. We’ll make use of Hugging Face’s transformers library to load the model and processor, making sure the model runs on GPU if it’s available, or defaults to CPU otherwise. Being an 11B parameter model, it works well on an A100 GPU in Google Colab.
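As a rough back-of-the-envelope check on why a large GPU helps, the memory needed just for the weights is approximately the parameter count times the bytes per parameter. The sketch below assumes a round 11 billion parameters and ignores activations, the KV cache, and framework overhead, so the numbers are lower bounds rather than measurements. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 11e9  # assumption: a round 11B parameters

# Bytes per parameter for a few common precisions.
for label, nbytes in [("float32", 4), ("float16", 2), ("4-bit", 0.5)]:
    print(f"{label:>8}: ~{weight_memory_gb(NUM_PARAMS, nbytes):.1f} GB")
```

Under these assumptions, float16 weights take about 22 GB, which is why a GPU in the A100 class is a comfortable fit and why smaller GPUs may need offloading or quantization.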

In the code block below:

- We set up the required imports.

- We load both the model and the processor with GPU support, if available. This ensures the app runs efficiently, especially when processing large amounts of data.

- An important piece of code to notice here is tie_weights(), which ties the input and output embedding weights so that both layers share the same parameters. This reduces memory consumption and can improve performance.

    import torch
    from PIL import Image
    import gradio as gr
    from transformers import MllamaForConditionalGeneration, AutoProcessor

    def load_model():
        model_id = "meta-llama/Llama-3.2-11B-Vision-Instruct"
        device = "cuda" if torch.cuda.is_available() else "cpu"  # Check if GPU is available
        model = MllamaForConditionalGeneration.from_pretrained(
            model_id,
            torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
            device_map="auto",  # Automatically map to available device
            offload_folder="offload",  # Offload to disk if necessary
        )
        model.tie_weights()  # Tying weights for efficiency
        processor = AutoProcessor.from_pretrained(model_id)
        print(f"Model loaded on: {device}")
        return model, processor

Why Gradio?

Gradio is a lightweight Python library that allows us to quickly build machine-learning apps with web-based interfaces. Instead of writing complex HTML or JavaScript, we can define our app’s components (like text boxes, buttons, or images) directly in Python.
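To make this concrete, here is a minimal sketch of wrapping a plain Python function in a Gradio interface. The `greet` function and the `build_demo` helper are hypothetical names used only for illustration; the string shortcuts "text" for inputs and outputs are assumed to map to simple textbox components.

```python
def greet(name: str) -> str:
    """Plain Python function that the interface will wrap."""
    return f"Hello, {name}!"


def build_demo():
    """Build a one-textbox-in, one-textbox-out Gradio app around greet()."""
    # Imported here so greet() can be used and tested without Gradio installed.
    import gradio as gr

    return gr.Interface(fn=greet, inputs="text", outputs="text")
```

Calling `build_demo().launch()` in a notebook or script should start a local web server that serves the form.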

Here’s what a basic Gradio UI looks like:

Gradio has a few benefits:

- No installation hassle: Gradio apps are hosted and shared with a few lines of Python code. Built-in sharing allows access via a public link.

- Collaborative interface: Allows live demos of models shared with collaborators or the public. Multiple users can interact simultaneously.

- Supports multiple input and output types: Offers a range of input/output components beyond text and images.

- Minimal configuration for cloud hosting: Easy deployment on platforms like Hugging Face Spaces or other cloud services.

- Cross-platform integration: Interfaces can be embedded into other web applications, Jupyter Notebooks, or blog posts.

- API auto-generation: Automatically generates an API for the app.

- Built-in security: Includes file size limits and other measures to prevent malicious use.

For this demo, Gradio makes it simple for users to input both text and images and see the output (analysis of text and image, in this case) in real time. It’s perfect for showcasing the power of models like Llama 3.2 in a user-friendly environment.

Building the Llama 3.2 Multimodal App

Now that the imports are in place and the model loads successfully, let’s move on to the main part of the app: processing the inputs (text and image) and generating the response.

Text-to-text generation

We start by defining a function that takes in user text and, optionally, an image. This function then uses the Llama 3.2 model to generate a response.

    def process_ticket(text, image=None):
        # Note: this reloads the model on every call; load it once and reuse it in practice
        model, processor = load_model()
        try:
            if image is not None:
                # Resize the image for consistency
                image = image.convert("RGB").resize((224, 224))
                prompt = f"<|image|><|begin_of_text|>{text}"
                # Process both the image and text input
                inputs = processor(images=[image], text=prompt, return_tensors="pt").to(model.device)
            else:
                prompt = f"<|begin_of_text|>{text}"
                # Process text-only input
                inputs = processor(text=prompt, return_tensors="pt").to(model.device)
            # Generate response (restrict token length for faster output)
            outputs = model.generate(**inputs, max_new_tokens=200)
            # Decode the response from tokens to text
            response = processor.decode(outputs[0], skip_special_tokens=True)
            return response
        except Exception as e:
            print(f"Error processing ticket: {e}")
            return "An error occurred while processing your request."

This function handles two types of input:

- Text-only: If no image is provided, the model generates a response based on the text input.

- Text + Image: If an image is provided, the model processes both the text and image before generating the response.

Once the input type is identified, it is passed to the processor from the transformers library, which converts it into model-ready tensors. The model then generates a response of at most max_new_tokens tokens.
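As an alternative to writing the special tokens by hand, instruction-tuned checkpoints on Hugging Face usually ship a chat template. The sketch below only builds the message structure; it assumes the result would then be passed to `processor.apply_chat_template(messages, add_generation_prompt=True)` to produce the prompt string. The helper name `build_messages` is hypothetical and not part of the tutorial's code.

```python
from typing import Any


def build_messages(text: str, has_image: bool) -> list[dict[str, Any]]:
    """Build a single-turn user message in the chat format used by Hugging Face processors."""
    content: list[dict[str, str]] = []
    if has_image:
        # Placeholder entry; the actual image is passed to the processor separately.
        content.append({"type": "image"})
    content.append({"type": "text", "text": text})
    return [{"role": "user", "content": content}]


messages = build_messages("My screen shows this error. What should I do?", has_image=True)
print(messages)
```

Keeping the prompt construction in one small function also makes the text-only and text-plus-image branches easier to test without loading the model.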

Creating the Gradio Interface

The Gradio interface binds everything together and enables us to run tests in a web-based format. This interface allows users to submit text and images of an issue they are facing and see the AI-generated solution.

Let’s take a look at the code and then explain it.

    def create_interface():
        text_input = gr.Textbox(
            label="Describe your issue",
            placeholder="Describe the problem you're experiencing",
            lines=4,
        )
        image_input = gr.Image(label="Upload a Screenshot (Optional)", type="pil")
        # Output element
        output = gr.Textbox(label="Suggested Solution", lines=5)
        # Create the Gradio interface
        interface = gr.Interface(
            fn=process_ticket,  # Function to process inputs
            inputs=[text_input, image_input],  # User inputs (text and image)
            outputs=output,  # AI-generated output
            title="Multimodal Customer Support Assistant",
            description="Submit a description of your issue, along with an optional screenshot, and get AI-powered suggestions.",
        )
        # Launch the interface with debug mode
        interface.launch(debug=True)

    create_interface()  # Build and launch the app

In the code above, we:

- Define two inputs: text_input for text and image_input for image.

- Specify an output box to display the response.

- Create the interface, passing parameters such as:

  - Inputs

  - Function to process input

  - Generated output from the model

  - Title of the interface

  - Description (if required)

- Set up the basic user interface.

- Launch the interface with debug=True to debug errors. Once the code works fine, switch it back to False.

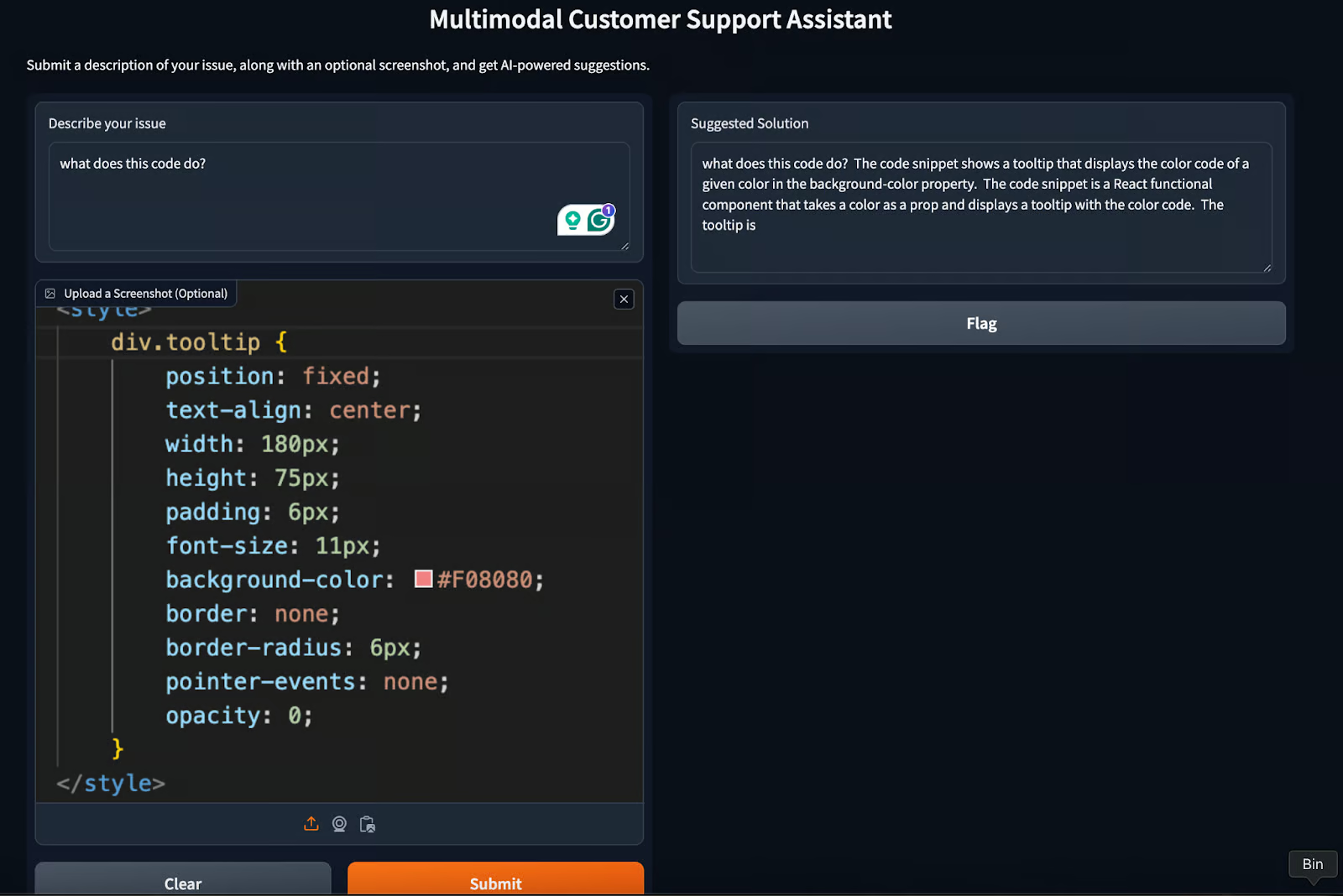

The final interface will look like this:

Our multimodal customer support assistant Gradio application is ready! To get the desired response, try changing the max_new_tokens parameter or playing around with the prompt a bit.

Use cases for Llama 3.2 and Gradio

In addition to the demo we created in this tutorial, there are a few other use cases that require minimal effort. These include:

- Education and tutoring: Students can upload visual aids like graphs or diagrams alongside their questions, and the model can generate comprehensive responses incorporating both visual and textual information.

- Content creation: Improve the creation of captions, blog posts, and social media content by generating text based on images.

- Real estate virtual assistance: Assist agents and clients by processing property images and answering related questions. Generate property descriptions or analyze visual details from photos.

Best Practices for Developing with Llama 3.2 and Gradio

Whatever the use case, here are a few best practices to adopt when building apps like this one with models such as Llama 3.2.

Handling latency

Since multimodal tasks can be resource-intensive, reducing latency is key. Consider optimizing the model for faster responses by using caching, model pruning, or limiting the number of tokens generated.
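One simple form of caching is to make sure the model is loaded only once, since process_ticket as written calls load_model() on every request. The sketch below uses `functools.lru_cache` with a stand-in loader; `get_model` and the placeholder dictionary are hypothetical and stand in for the real, expensive load.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def get_model():
    """Load the model once; later calls return the cached object."""
    print("Loading model (slow, happens once)...")
    # Stand-in for an expensive call such as load_model(); hypothetical placeholder.
    return {"name": "placeholder-model"}


first = get_model()   # triggers the load
second = get_model()  # served from the cache
print(first is second)
```

In the app, the same decorator could be placed on load_model() so that repeated requests reuse the already loaded model and processor.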

Error handling

It’s important to put mechanisms in place to handle errors. In cases where the model fails to generate a meaningful response (e.g., due to poor image quality), we can provide fallback responses or error messages. We can also collect human feedback, which in turn helps to improve the model.
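A minimal sketch of such a fallback is shown below. It wraps any generation function and returns a fixed message when the call raises or the response is too short to be useful. The names `with_fallback` and `FALLBACK_MESSAGE`, and the 10-character threshold, are assumptions chosen for illustration.

```python
FALLBACK_MESSAGE = "Sorry, I couldn't analyze that. Please try a clearer image or add more detail."


def with_fallback(generate, text, image=None, min_chars=10):
    """Call generate(text, image); return a fallback if it raises or returns too little."""
    try:
        response = generate(text, image)
    except Exception as exc:  # broad on purpose: any failure should reach the user gracefully
        print(f"Generation failed: {exc}")
        return FALLBACK_MESSAGE
    if not response or len(response.strip()) < min_chars:
        return FALLBACK_MESSAGE
    return response


def stub_generate(text, image=None):
    """Stand-in for the real model call, used only to demonstrate the wrapper."""
    return "Restart the router and try again."


print(with_fallback(stub_generate, "My internet is down"))
```

With this in place, the Gradio interface could call `with_fallback(process_ticket, text, image)` instead of calling the model function directly.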

Performance monitoring

Tracking the app's performance, such as response times and user interaction data, can help optimize the interface and improve the user experience. Once response times are measured, we can try to reduce the model's memory footprint and latency with quantization libraries like bitsandbytes.
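Response times can be collected with a small decorator, sketched below using only the standard library. The `timed` decorator, the `response_times` list, and the fake handler are hypothetical; a production app would more likely send these measurements to a logging or metrics system.

```python
import functools
import time

response_times = []  # seconds per call; a real app might send these to a metrics system


def timed(fn):
    """Record the wall-clock duration of each call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Runs even if fn raises, so failed calls are measured too.
            response_times.append(time.perf_counter() - start)
    return wrapper


@timed
def fake_ticket_handler(text):
    time.sleep(0.01)  # stand-in for model inference
    return f"Received: {text}"


fake_ticket_handler("My app crashes on login")
print(f"Calls: {len(response_times)}, last: {response_times[-1]:.3f}s")
```

Applying the same decorator to process_ticket would give a per-request latency record to compare before and after any optimization.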

Conclusion

In this guide, we learned how to combine Llama 3.2's multimodal capabilities and Gradio's intuitive interface. From customer support to education and content creation, the potential applications are vast and varied.

By adhering to best practices like latency management, error handling, and performance monitoring, we can ensure our Llama 3.2 and Gradio applications are robust, efficient, and user-friendly.

To learn more, I recommend these tutorials:

I am a Google Developers Expert in ML (Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.