Whether you're just starting out or looking to level up your cloud data engineering career, understanding how to answer technical questions with confidence is key.

In this guide, you’ll find a curated list of common Azure data engineer interview questions—covering everything from data ingestion and transformation to storage, security, and real-time analytics.

Use this as a go-to resource to review core concepts, brush up on Azure best practices, and get comfortable with real-world scenarios you’ll likely face during technical interviews.

What Is Azure Data Engineering, and Why Is It Important?

Azure data engineering focuses on designing, building, and managing data pipelines using Microsoft Azure. It ensures efficient data ingestion, processing, storage, and analysis for business intelligence, machine learning, and analytics.

Mastering data engineering in Azure not only strengthens your technical foundation but also opens the door to in-demand roles like Data Engineer, Cloud Architect, or AI/ML Engineer—especially as more organizations move their data infrastructure to the cloud.

To build a strong foundation before diving into interviews, consider exploring the fundamentals in Understanding Microsoft Azure Architecture and Services. With that, let’s get started with the questions you may encounter in your interview!

Basic Azure Data Engineer Interview Questions

To showcase your expertise in Azure data engineering, you should have a solid grasp of core Azure services and data pipeline concepts. Questions like the following will assess your ability to design, optimize, and manage a basic cloud-based data workflow.

What are the core Azure data services, and how do they differ in functionality?

Azure provides core data services for managing, processing, and analyzing data, including:

- Azure Data Factory (ADF) – A cloud-based ETL service for orchestrating and automating data movement.

- Azure Synapse Analytics – A data warehousing and analytics service for querying large datasets with SQL and big data processing.

- Azure Databricks – A big data and AI/ML platform on Apache Spark for large-scale transformations, real-time analytics, and machine learning.

There are many others, but the above are the most important ones for data engineers.

How can you create a simple data pipeline using Azure Data Factory?

Azure Data Factory (ADF) creates pipelines to move and transform data. A basic pipeline includes:

- Create a data factory – Set up an ADF instance in the Azure portal.

- Define a pipeline – Use a Copy Data Activity to transfer data.

- Configure source and destination – Connect sources (e.g., Azure Blob Storage) and destinations (e.g., Azure SQL Database).

- Trigger and monitor – Run and track the pipeline execution.

For a step-by-step walkthrough, check out our Azure Data Factory tutorial.
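ADF stores each pipeline as a JSON definition, so the steps above can also be sketched in code. The example below builds such a definition as a Python dictionary. The pipeline, activity, and dataset names are hypothetical, and the source and sink types assume a delimited-text file in Blob Storage copied into Azure SQL Database; other connectors use different type names.

```python
import json

def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return an ADF-style pipeline definition with a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyBlobToSql",  # hypothetical activity name
                    "type": "Copy",
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }

# Dataset names are placeholders for datasets defined elsewhere in the factory.
pipeline = build_copy_pipeline("DailySalesCopy", "SalesCsvInBlob", "SalesTableInSql")
print(json.dumps(pipeline, indent=2))
```

In practice the datasets and linked services referenced here would be defined separately, and the portal's authoring UI generates equivalent JSON for you.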

What is ETL, and how does it work?

ETL (Extract, Transform, Load) is a data integration process that gathers, processes, and stores data for analysis. Its three stages are:

- Extract – Data is collected from databases, APIs, and cloud storage sources.

- Transform – Data is cleaned, standardized, and aggregated through filtering, deduplication, and format conversion.

- Load – Processed data is stored in a data warehouse, lake, or analytical database for reporting.
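The three stages can be illustrated with a minimal, self-contained sketch. It assumes a small inline CSV as the source and uses an in-memory SQLite database as a stand-in for the warehouse; the column names and cleaning rules are invented for illustration.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount
1, alice ,120.50
2,BOB,80
2,BOB,80
3,carol,
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop incomplete rows, standardize names, remove duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        if not row["amount"]:
            continue  # filter out rows with a missing amount
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # deduplicate on order_id
        seen.add(order_id)
        cleaned.append((order_id, row["customer"].strip().title(), float(row["amount"])))
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse
load(transform(extract(RAW_CSV)), conn)
etl_rows = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(etl_rows)  # [(1, 'Alice', 120.5), (2, 'Bob', 80.0)]
```

Only clean, deduplicated rows ever reach the target table, which is the defining property of ETL.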

What is the difference between ETL and ELT?

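ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are both data-processing approaches, but they differ in when and where the transformation occurs.

With ETL, data is transformed before it is loaded into the target system; with ELT, data is loaded first and transformed afterward inside the target system.

ETL is the better fit when structured data must be cleaned and validated before it reaches business intelligence (BI) dashboards. ELT suits large or raw datasets that land in a scalable warehouse or data lake, where the target system's own compute handles the transformation.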

You can learn more about the practical trade-offs in our ETL vs ELT guide.
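To make the ELT ordering concrete, here is a minimal sketch that lands raw records first and only then cleans them with SQL inside the target system. An in-memory SQLite database stands in for the warehouse, and the table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse

# Load: land the raw records unchanged in a staging table.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", " alice ", "120.50"), ("2", "BOB", "80"), ("2", "BOB", "80"), ("3", "carol", "")],
)

# Transform: clean the data with SQL, using the target system's own engine.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT
        CAST(order_id AS INTEGER) AS order_id,
        UPPER(TRIM(customer))     AS customer,
        CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE amount <> ''
    """
)

elt_rows = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(elt_rows)   # [(1, 'ALICE', 120.5), (2, 'BOB', 80.0)]
print(raw_count)  # 4: the raw data stays available for reprocessing
```

Because the raw table is kept, the transformation can be rewritten and rerun later without going back to the source systems.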

What are the key differences between Azure Blob Storage, Azure Data Lake Storage, and Azure SQL Database?

Azure provides these different options to meet various data storage and processing needs. Here’s a comparison of the most popular options:

| Feature | Azure Blob Storage | Azure Data Lake Storage (ADLS) | Azure SQL Database |
| --- | --- | --- | --- |
| Purpose | General-purpose object storage for unstructured data | Optimized for big data analytics on top of Blob storage | Fully managed relational database service |
| Data type | Unstructured (e.g., text, images, video, logs) | Structured, semi-structured, and unstructured | Structured (tables, rows, columns) |
| Use cases | Backup files, media content, logs, raw data storage | Big data processing, analytics, data lake architecture | OLTP (transactional systems), reporting, business apps |
| Integration | Integrates with most Azure services and SDKs | Integrates with big data tools like Azure Synapse, HDInsight | Integrates with Power BI, Azure Data Factory, Logic Apps |
| Performance tiers | Hot, Cool, Archive tiers for cost optimization | Optimized for throughput and parallel processing | Built-in performance tiers (General Purpose, Business Critical) |
| Security | Role-based access control (RBAC), encryption at rest | RBAC, POSIX-style ACLs for granular permissions | Advanced threat protection, encryption, auditing |
| Query support | Limited (via Azure Data Lake or custom logic) | Hierarchical namespace; queried through engines such as Synapse, Databricks, or HDInsight over formats like Parquet | Full SQL query support |
| Cost model | Pay-as-you-go based on storage and access tier | Similar to Blob, but may incur higher processing costs | Pay per DTU/vCore and storage tier |

When should you use Azure Data Lake Storage instead of Azure Blob Storage?

As the comparison above shows, both Azure Blob Storage and Azure Data Lake Storage (ADLS) store data, but ADLS is built on top of Blob Storage and optimized for big data analytics.

Choose ADLS when:

- You need a hierarchical namespace: ADLS Gen2 supports directories and subdirectories, making it easier to organize and manage massive datasets—especially in data lake architectures.

- You require big data analytics performance: ADLS is optimized for high-throughput and parallel processing with tools like Azure Synapse Analytics, Azure Databricks, and HDInsight.

- You want granular access control: ADLS supports POSIX-style access control lists (ACLs) in addition to Azure RBAC, allowing for fine-grained security at the file and folder level.

- You work with structured or semi-structured formats: ADLS integrates well with formats like Parquet, Avro, and ORC, commonly used in analytics and machine learning pipelines.

- Scalability and performance are critical: For enterprise-scale data processing and distributed compute environments, ADLS provides the necessary architecture and throughput.

What are the fundamental SQL commands for data extraction in Azure, and how do they work?

In Azure, SQL is widely used for querying data from services like Azure SQL Database, Azure Synapse Analytics, and Azure Data Explorer.

Understanding core SQL commands is essential for tasks like data exploration, transformation, and reporting. Key SQL commands:

- SELECT – Retrieves data from tables.

- WHERE – Filters records by condition.

- ORDER BY – Sorts results.

- GROUP BY – Groups rows with the same values.

- HAVING – Filters grouped records.

- JOIN – Combines data from multiple tables.

Example: Extracting Insights with SQL in Azure Synapse:

```sql
SELECT d.name AS department, AVG(e.salary) AS avg_salary
FROM employees e
JOIN departments d ON e.dept_id = d.id
WHERE e.status = 'active'
GROUP BY d.name
HAVING AVG(e.salary) > 80000
ORDER BY avg_salary DESC;
```

This query might be used in Azure Synapse Analytics to analyze average salaries by department, filtering for only active employees and high-paying departments.

If you’re new to Synapse, start with this Azure Synapse beginner’s guide to build confidence.

How can you transform data using SQL in Azure, and what are some standard transformation techniques?

In Azure, data transformation using SQL is commonly performed in services like Azure SQL Database, Azure Synapse Analytics (Dedicated or Serverless SQL pools), or via mapping data flows in Azure Data Factory.

These transformations help shape raw data into clean, structured, and insightful formats for reporting and analytics.

Here are some widely used SQL operations for data transformation:

- Aggregation (SUM, AVG, COUNT) – Summarizes data.

- CASE statements – Applies conditional logic.

- String functions (UPPER, LOWER, CONCAT) – Modifies text.

- Date functions (YEAR, MONTH, DATEDIFF) – Extracts date details.

- Window functions (RANK, ROW_NUMBER, LEAD, LAG) – Enables analytics.

Example: Transformation Query in Azure Synapse SQL

```sql
SELECT
    customer_id,
    UPPER(TRIM(customer_name)) AS cleaned_name,
    SUM(order_amount) AS total_spent,
    RANK() OVER (ORDER BY SUM(order_amount) DESC) AS spending_rank,
    CASE
        WHEN SUM(order_amount) > 5000 THEN 'High Value'
        WHEN SUM(order_amount) > 1000 THEN 'Medium Value'
        ELSE 'Low Value'
    END AS customer_segment
FROM sales_data
GROUP BY customer_id, customer_name;
```

This kind of SQL transformation could be part of a Synapse pipeline used to power dashboards or feed into machine learning models.

What is the difference between batch data ingestion and real-time data ingestion in Azure?

Data ingestion moves data from sources to storage or processing systems in Azure. It generally falls into two types, depending on the timeliness and frequency of data processing:

- Batch ingestion: Collects data over time and loads it at intervals (e.g., hourly, daily). Used for reports, data warehousing, and ETL. Common Azure services:
  - Azure Data Factory
  - Azure Synapse Pipelines
  - Azure SQL Data Sync

- Real-time ingestion: Continuously processes incoming data for instant analysis. It is used for fraud detection, IoT, and real-time analytics. Common Azure services:
  - Azure Event Hubs
  - Azure IoT Hub
  - Azure Stream Analytics
  - Azure Data Explorer

To understand when to use each ingestion method, see our Batch vs Stream Processing breakdown.
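The difference between the two ingestion styles can be sketched in plain Python. This is an illustration of the processing models only, not of any Azure service; the event shape and sensor names are made up.

```python
from collections import defaultdict

events = [
    {"sensor": "a", "value": 3},
    {"sensor": "b", "value": 5},
    {"sensor": "a", "value": 4},
]

def batch_totals(collected):
    """Batch: process the full set of accumulated events in one run."""
    totals = defaultdict(int)
    for event in collected:
        totals[event["sensor"]] += event["value"]
    return dict(totals)

def stream_totals(source):
    """Streaming: update state per event and emit a result immediately."""
    totals = defaultdict(int)
    for event in source:
        totals[event["sensor"]] += event["value"]
        yield event["sensor"], totals[event["sensor"]]

print(batch_totals(events))               # {'a': 7, 'b': 5}
print(list(stream_totals(iter(events))))  # [('a', 3), ('b', 5), ('a', 7)]
```

The batch function produces one answer after all data has arrived, while the streaming generator produces an updated answer for every event, which is what makes low-latency alerting possible.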

How does Azure Data Factory handle batch ingestion?

Azure Data Factory is an ETL (Extract, Transform, Load) service that helps move and transform large volumes of data. How it works:

- Connects to various data sources (SQL databases, blob storage, on-premises files).

- Schedules and orchestrates batch data movement at specific intervals (e.g., hourly, nightly) or in response to events.

- Applies transformations using Mapping Data Flows, stored procedures or custom scripts (via Azure Databricks, HDInsight, or Azure Functions).

Intermediate Azure Data Engineer Interview Questions

Having covered the basics, let's move on to some intermediate-level Azure data engineer interview questions that explore your technical proficiency in implementing and using these fundamental concepts.

How does Azure Databricks handle large-scale data transformation using Apache Spark?

Azure Databricks is a cloud-based analytics platform optimized for Apache Spark. It enables efficient large-scale data processing through distributed computing. It uses Spark’s model for large data transformations, partitioning data and processing it in parallel across cluster nodes. Features for large-scale transformation:

- Distributed computing: Spark divides large datasets into partitions and distributes them across a cluster of nodes.

- Lazy evaluation: Transformations in Spark (e.g., map, filter, groupBy) are not executed immediately. Instead, Spark builds a logical execution plan and waits until an action (e.g., count, collect, save) is called.

- Resilient Distributed Datasets (RDDs): RDDs are the core data abstraction in Spark, allowing fault-tolerant, parallel operations.

- High-level APIs: In addition to RDDs, Azure Databricks supports DataFrames and Spark SQL, which are more performant and easier to use for most data engineering tasks.

- Delta Lake support: Azure Databricks also supports Delta Lake, a storage layer that adds ACID transactions and schema enforcement on top of Parquet files.

You can explore how Delta Lake enables ACID transactions in our Delta Lake tutorial.

What are the key differences between transformations and actions in Apache Spark within Azure Databricks?

In Azure Databricks, Spark operations are classified into Transformations and Actions. Here are their differences:

| Aspect | Transformations | Actions |
| --- | --- | --- |
| Definition | Operations that define a new dataset from an existing one | Operations that trigger the execution of transformations |
| Execution | Lazy – they build a logical plan but don't run immediately | Eager – they force Spark to execute the DAG and compute results |
| Result | Returns a new RDD or DataFrame (transformed dataset) | Returns a value to the driver or writes data to storage |
| Purpose | Used to define what should be done | Used to specify when to execute and retrieve data |
| Examples | map(), filter(), select(), groupBy(), withColumn() | count(), collect(), show(), write.save() |
| Optimization | Enables Spark to optimize the execution plan before running | Executes the optimized plan |
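The lazy/eager split can be illustrated without a cluster. The toy class below is a plain-Python analogy, not Spark's API: transformations only extend a recorded plan, and nothing runs until an action is called. The class and method names are chosen to mirror Spark's but are otherwise hypothetical.

```python
class LazyDataset:
    """Toy dataset that records transformations and runs them only on an action."""

    def __init__(self, data, plan=()):
        self._data = data
        self._plan = plan  # tuple of (kind, function) steps, not yet executed

    # Transformations: return a new dataset with a longer plan.
    def map(self, fn):
        return LazyDataset(self._data, self._plan + (("map", fn),))

    def filter(self, fn):
        return LazyDataset(self._data, self._plan + (("filter", fn),))

    def _run(self):
        for item in self._data:
            keep = True
            for kind, fn in self._plan:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    # Actions: execute the plan and return a value to the caller.
    def collect(self):
        return list(self._run())

    def count(self):
        return sum(1 for _ in self._run())


calls = []  # records every element the map function actually touches
ds = LazyDataset(range(6)).map(lambda x: calls.append(x) or x * 10).filter(lambda x: x >= 30)
print(len(calls))    # 0: defining transformations executed nothing
print(ds.collect())  # [30, 40, 50]: the action runs the whole plan
```

Real Spark goes further than this sketch: because it sees the whole plan before running it, the optimizer can reorder and combine steps.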

How does Azure Data Factory orchestrate data workflows, and what are its key components?

Azure Data Factory (ADF) is a cloud-based data integration service for orchestrating and automating workflows across various sources and destinations. Key ADF components for orchestration:

- Pipelines – Containers defining data movement and transformation.

- Activities – Tasks within pipelines (e.g., Copy, Data Flow, Stored Procedure).

- Datasets – References to data in Blob Storage, SQL, Data Lakes, etc.

- Linked services – Connections to data sources and destinations.

What are the different triggers in Azure Data Factory, and how do they work?

Triggers in Azure Data Factory automate pipeline runs based on schedules or events. The three main types are:

- Schedule trigger – Runs pipelines at set intervals (e.g., hourly, daily). Example: Loads sales data from an API nightly.

- Tumbling window trigger – Executes in fixed time windows with no overlap. Example: Processes sensor data in hourly batches.

- Event-based trigger – Fires when an event occurs (e.g., file upload). Example: Starts pipeline when a new CSV is added to Blob Storage.
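What sets a tumbling window trigger apart is that its windows are fixed-size, contiguous, and non-overlapping. The sketch below generates such windows in plain Python to show the idea; it does not call ADF, and the dates are arbitrary.

```python
from datetime import datetime, timedelta

def tumbling_windows(start, end, size):
    """Yield contiguous, non-overlapping (window_start, window_end) pairs."""
    current = start
    while current + size <= end:
        yield current, current + size
        current += size

windows = list(
    tumbling_windows(datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 3), timedelta(hours=1))
)
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Each pipeline run then processes exactly one window, so every record is handled once and a failed window can be rerun without touching its neighbours.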

How do Azure Synapse Analytics and Azure Databricks differ in architecture and primary use cases?

While both Azure Synapse Analytics and Azure Databricks are designed for large-scale data processing, they serve different purposes, follow different architectural models, and cater to distinct user personas.

Here are their main differences:

| Category | Azure Synapse Analytics | Azure Databricks |
| --- | --- | --- |
| Architecture | Tightly integrated SQL engines (dedicated + serverless) | Apache Spark-based distributed clusters |
| Primary interface | Synapse Studio (SQL Editor, Data Explorer, Pipelines) | Collaborative notebooks (Python, Scala, SQL, R) |
| Best for | Data warehousing, BI, reporting, and batch analytics | Big data processing, data science, ML, streaming workloads |
| Language support | Primarily T-SQL, with limited support for Spark | Python, Scala, SQL, R, and full Spark support |
| Data formats | Structured and semi-structured (Parquet, CSV, JSON) | Structured, semi-structured, and unstructured (text, images, video) |
| Integration | Native Power BI, Data Factory, and SQL tooling | MLflow, Delta Lake, AutoML, advanced ML frameworks (TensorFlow, etc.) |
| Processing type | Optimized for batch and interactive SQL queries | Optimized for distributed, in-memory, real-time & iterative workloads |
| User personas | Data analysts, BI developers, SQL developers | Data engineers, data scientists, ML engineers |

For a deeper breakdown, read our Azure Synapse vs. Databricks comparison.

When should you use Azure Synapse versus Databricks for real-time data processing?

Azure Synapse and Databricks both support real-time processing but differ in approach.

Use Azure Synapse for real-time BI and event ingestion when:

- You need structured, near-real-time insights for dashboards and reporting.

- Your data is coming from sources like Event Hubs or IoT Hub and landing in Synapse via Data Flows or serverless SQL pools.

- You’re building a low-complexity analytics layer with T-SQL and pushing results to Power BI.

- Latency on the order of seconds to minutes is acceptable.

Use Azure Databricks for low-latency streaming and ML pipelines when:

- You need millisecond-to-second latency for use cases like fraud detection, anomaly detection, or real-time recommendations.

- You’re processing large-scale or semi-structured/unstructured data using Spark Structured Streaming.

- You plan to run AI/ML models inline with your real-time pipeline.

- You require fine-grained control over streaming logic and scalability.

What is Role-Based Access Control (RBAC) in Azure, and how does it help secure data?

Role-Based Access Control (RBAC) is an Azure security model that limits resource access based on user roles. Instead of full access, RBAC grants only the permissions needed for each role.

RBAC assigns roles to users, groups, or apps at various scopes—like subscriptions, resource groups, or specific resources. Common roles include:

- Owner

- Contributor

- Reader

- Data-plane roles such as Storage Blob Data Reader and Storage Blob Data Contributor

Key benefits:

- Prevents unauthorized data access

- Minimizes risk by enforcing least privilege

- Enables auditing to track access and changes
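The way roles and scopes combine can be shown with a deliberately simplified model. The sketch below is not the Azure authorization engine: the action names, principals, and resource IDs are hypothetical, and real role definitions contain far more granular permissions.

```python
# Simplified view of what the built-in roles allow.
ROLE_ACTIONS = {
    "Reader": {"read"},
    "Contributor": {"read", "write", "delete"},
    "Owner": {"read", "write", "delete", "assign_roles"},
}

# Hypothetical assignments: (principal, role, scope)
ASSIGNMENTS = [
    ("alice", "Reader", "/subscriptions/sub1"),
    ("bob", "Contributor", "/subscriptions/sub1/resourceGroups/data-rg"),
]

def is_allowed(principal, action, resource):
    """Allow the action if any assignment at or above the resource's scope grants it."""
    for who, role, scope in ASSIGNMENTS:
        in_scope = resource == scope or resource.startswith(scope + "/")
        if who == principal and in_scope and action in ROLE_ACTIONS[role]:
            return True
    return False

lake = "/subscriptions/sub1/resourceGroups/data-rg/providers/Microsoft.Storage/storageAccounts/lake"
print(is_allowed("alice", "read", lake))   # True: Reader is inherited from the subscription
print(is_allowed("alice", "write", lake))  # False: Reader cannot modify resources
print(is_allowed("bob", "write", lake))    # True: Contributor on the resource group
```

The key point for an interview answer is inheritance: an assignment at a broad scope applies to everything beneath it, so least privilege means assigning roles at the narrowest scope that works.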

How does Azure ensure data security using encryption, and what is the role of Azure Purview in governance?

Azure secures data through encryption at rest and in transit:

- Encryption at rest: Data is automatically encrypted using Azure Storage Service Encryption (SSE).

- Encryption in transit: Data is protected during transfer using TLS (Transport Layer Security).

- Column-level encryption: Sensitive database columns (e.g., in Azure SQL) can be individually encrypted. Example: Encrypting credit card numbers for compliance.

Azure Purview (now part of Microsoft Purview) is a unified data governance solution that helps:

- Discover and classify sensitive data across cloud and on-prem systems.

- Track data lineage to visualize flow through pipelines.

- Ensure compliance with regulations like GDPR and HIPAA.

What is data partitioning, and how does it improve performance in Azure data processing?

Data partitioning divides large datasets into smaller, manageable chunks (partitions) based on criteria like time, region, or ID.

Example: Partitioning in Azure Data Lake Storage.

A retail company storing sales data in Azure Data Lake Storage (ADLS) can organize it by year, month, and day instead of using one large file:

```
/sales_data/year=2023/month=12/day=01/
/sales_data/year=2023/month=12/day=02/
/sales_data/year=2023/month=12/day=03/
```

This structure lets queries target only relevant partitions, greatly improving performance.
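Partition pruning can be sketched in a few lines of Python. The example builds folder paths in the layout shown above and then selects only those a query filtered on year and month would need to read. The 60-day listing is invented for illustration; a real engine reads the folder structure from the lake itself.

```python
from datetime import date, timedelta

def partition_path(day):
    """Build the partition folder for one day of sales data."""
    return f"/sales_data/year={day.year}/month={day.month:02d}/day={day.day:02d}/"

# Hypothetical lake contents: one folder per day for 60 days.
all_partitions = [partition_path(date(2023, 11, 1) + timedelta(days=i)) for i in range(60)]

def prune(partitions, year, month):
    """Keep only the partitions a query filtered on year and month needs to read."""
    prefix = f"/sales_data/year={year}/month={month:02d}/"
    return [p for p in partitions if p.startswith(prefix)]

december = prune(all_partitions, 2023, 12)
print(len(all_partitions), "partitions in total,", len(december), "scanned")
```

Here a filter on December 2023 skips half of the folders without opening a single file, which is where the performance gain comes from.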

How can indexing and caching optimize query performance in Azure Synapse Analytics?

In Azure Synapse Analytics, large-scale queries can be optimized with indexing and caching to improve speed and efficiency.

- Indexes reduce the amount of data scanned and speed up queries. Synapse dedicated SQL pools support clustered columnstore indexes (the default) as well as clustered and non-clustered rowstore indexes. Example: Creating a non-clustered index on CustomerID enables faster lookups, avoiding full table scans.

- Caching stores frequently accessed query results so they do not have to be recomputed. Example: Using a materialized view on the SalesData table enables instant retrieval of precomputed aggregations.

Advanced Azure Data Engineer Interview Questions

Let's explore some advanced interview questions for those seeking more senior roles or aiming to demonstrate a deep knowledge of specialized or complex Azure data engineering.

How does Azure Stream Analytics process real-time data from Event Hubs, and what performance optimizations are possible?

Azure Stream Analytics (ASA) is a real-time processing engine that ingests, analyzes, and transforms data from sources like Azure Event Hubs, IoT Hub, and Blob Storage. It lets users filter, aggregate, and route data to destinations such as Azure SQL, Power BI, or Data Lake Storage.

How ASA works with Event Hubs:

- Ingestion: Event Hubs buffers real-time data streams.

- Processing: ASA uses SQL-like queries to process events in real time.

- Output: Results are sent to destinations like Data Lake, Cosmos DB, or Power BI.

Performance optimizations:

- Parallel processing: Use Streaming Units (SUs) to scale for high event volume.

- Partitioning: Configure Event Hub partitions to distribute processing load efficiently.
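Partitioning works because events with the same key always land in the same partition, so each partition can be processed independently and in order. The sketch below illustrates that idea with a stable hash; it is not the hashing algorithm Event Hubs uses, and the device IDs and partition count are hypothetical.

```python
import zlib
from collections import defaultdict

def assign_partition(partition_key, partition_count):
    """Map a key to a partition with a stable hash (illustrative only)."""
    return zlib.crc32(partition_key.encode("utf-8")) % partition_count

events = [("device-1", 20.5), ("device-2", 19.0), ("device-1", 21.0), ("device-3", 18.2)]

partitions = defaultdict(list)
for device_id, reading in events:
    partitions[assign_partition(device_id, 4)].append((device_id, reading))

for partition_id, items in sorted(partitions.items()):
    print(partition_id, items)
```

When the Stream Analytics query is partitioned on the same key as the Event Hub, each partition can be handled in parallel without reshuffling data between nodes.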

How can you implement an event-driven architecture using Azure Event Hubs and Azure Functions for real-time data processing?

Event-driven architecture enables systems to respond instantly to events, ideal for real-time analytics, monitoring, and automation. Azure Event Hubs, a scalable event ingestion service, works seamlessly with Azure Functions for processing.

Workflow with Event Hubs and Azure Functions:

- Event producer: Apps, IoT devices, or logs send events to Event Hubs.

- Processing: Azure Function listens to the stream and triggers custom logic.

- Output: Processed events go to Cosmos DB, SQL, Data Lake, or Power BI.

Example architecture using Azure Event Hubs and Azure Functions to process large volumes of data in near real time. Source: Microsoft Learn.

How can you integrate Azure Synapse Analytics with Apache Spark for advanced big data analytics, and what are the key benefits?

Azure Synapse Analytics natively supports Apache Spark pools, allowing you to run Spark-based big data and machine learning workloads directly within the Synapse environment—without deploying external infrastructure.

This tight integration bridges the gap between big data engineering, machine learning, and enterprise analytics, making it easy to work with both structured and unstructured data at scale.

How the integration works:

- Built-in Spark pools: You can create Apache Spark pools within Synapse Studio to run notebooks in PySpark, Scala, SQL, or .NET for Spark.

- Access to SQL pools: Spark jobs can directly read from and write to Synapse SQL dedicated pools and serverless pools, enabling seamless data movement between warehousing and big data workloads.

- Unified workspace: Notebooks, SQL scripts, pipelines, and datasets live in the same Synapse workspace, simplifying collaboration between data engineers, data scientists, and BI developers.

- Data Lake and Delta integration: Spark can process data stored in Azure Data Lake Storage Gen2, and supports Delta Lake for ACID transactions and schema enforcement.

- Integration with pipelines: Spark notebooks can be orchestrated in Synapse Pipelines, allowing you to automate complex ETL workflows that span both SQL and Spark.

How can you optimize the cost of an Azure data engineering solution while maintaining performance and scalability?

Cost optimization in Azure means selecting the right services, minimizing resource use, and using automation—all while preserving performance and scalability. Key strategies:

- Choose efficient storage and compute – Use Azure Blob Storage for raw data instead of costly databases.

- Streamline pipelines – Enable auto-scaling in Azure Data Factory’s Integration Runtime to pay only for what you use.

- Reduce compute costs – Use Spot VMs for interruptible Databricks workloads to save up to 90%.

What are the best practices for performance tuning in Azure data engineering workflows?

Performance tuning involves optimizing data processing, query execution, and scaling to ensure efficient resource use. Here are some best practices:

- Storage and file formats
  - Use Parquet or Delta Lake for faster, columnar reads.
  - Partition data by commonly filtered fields (e.g., date) to reduce scan time.
  - Enable compression to lower storage costs and improve I/O.

- Query tuning (Azure Synapse)
  - Use materialized views to precompute joins or aggregations.
  - Keep table stats and indexes up to date.
  - Avoid SELECT * and retrieve only the columns you need.

- Pipeline optimization (ADF)
  - Use Copy Activity for simple data movement; Data Flows for transformations.
  - Parameterize pipelines for reusability.
  - Monitor and scale integration runtimes efficiently.

- Spark tuning (Databricks)
  - Cache reused data selectively.
  - Use broadcast joins with small tables to avoid shuffles.
  - Tune Spark configs (e.g., spark.sql.shuffle.partitions) based on workload.
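Of the Spark techniques above, the broadcast join is the one interviewers most often ask candidates to explain. The plain-Python sketch below shows the idea rather than Spark's implementation: the small table is copied to every worker as a lookup, so each partition of the large table is joined locally and no rows move between workers. The table contents are invented.

```python
# Small dimension table: cheap to copy to every worker.
departments = {10: "Engineering", 20: "Finance"}

# Large fact table, already split into partitions across workers.
employee_partitions = [
    [("ana", 10), ("ben", 20)],
    [("cho", 10), ("dev", 30)],
]

def broadcast_join(partition, lookup):
    """Join one partition locally against the broadcast lookup table (inner join)."""
    return [(name, lookup[dept_id]) for name, dept_id in partition if dept_id in lookup]

joined = [row for part in employee_partitions for row in broadcast_join(part, departments)]
print(joined)  # [('ana', 'Engineering'), ('ben', 'Finance'), ('cho', 'Engineering')]
```

In PySpark the equivalent hint is `pyspark.sql.functions.broadcast` applied to the small DataFrame; it only pays off when that table fits comfortably in each executor's memory.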

How can you integrate Azure Machine Learning (ML) with Azure Data Factory for automated model training and inference?

Integrating Azure ML with Azure Data Factory (ADF) enables automated model training, deployment, and inference within a data pipeline. Integration steps:

- Prepare data: Use ADF to ingest raw data from sources like Blob Storage, SQL, or a Data Lake.

- Train and deploy model: Create an Azure ML pipeline to train and register the model.

- Run inference: Connect ADF to Azure ML Batch Endpoints for large-scale inference.

- Automate retraining: Schedule ADF pipelines to retrain models regularly.

How can Azure Databricks and Azure Machine Learning work together for scalable machine learning training and deployment?

Azure Databricks and Azure Machine Learning (Azure ML) can be integrated for scalable data processing, model training, and deployment in the cloud. Integration steps:

- Data prep in Databricks: Use Apache Spark to clean and transform large datasets.

- Train and register model: Train models (e.g., MLlib, Scikit-learn) in Databricks and register them in Azure ML.

- Deploy via Azure ML: Use Azure ML Managed Online Endpoints for real-time inference.

- Automate with Pipelines: Schedule workflows using Azure ML Pipelines or Databricks Jobs.

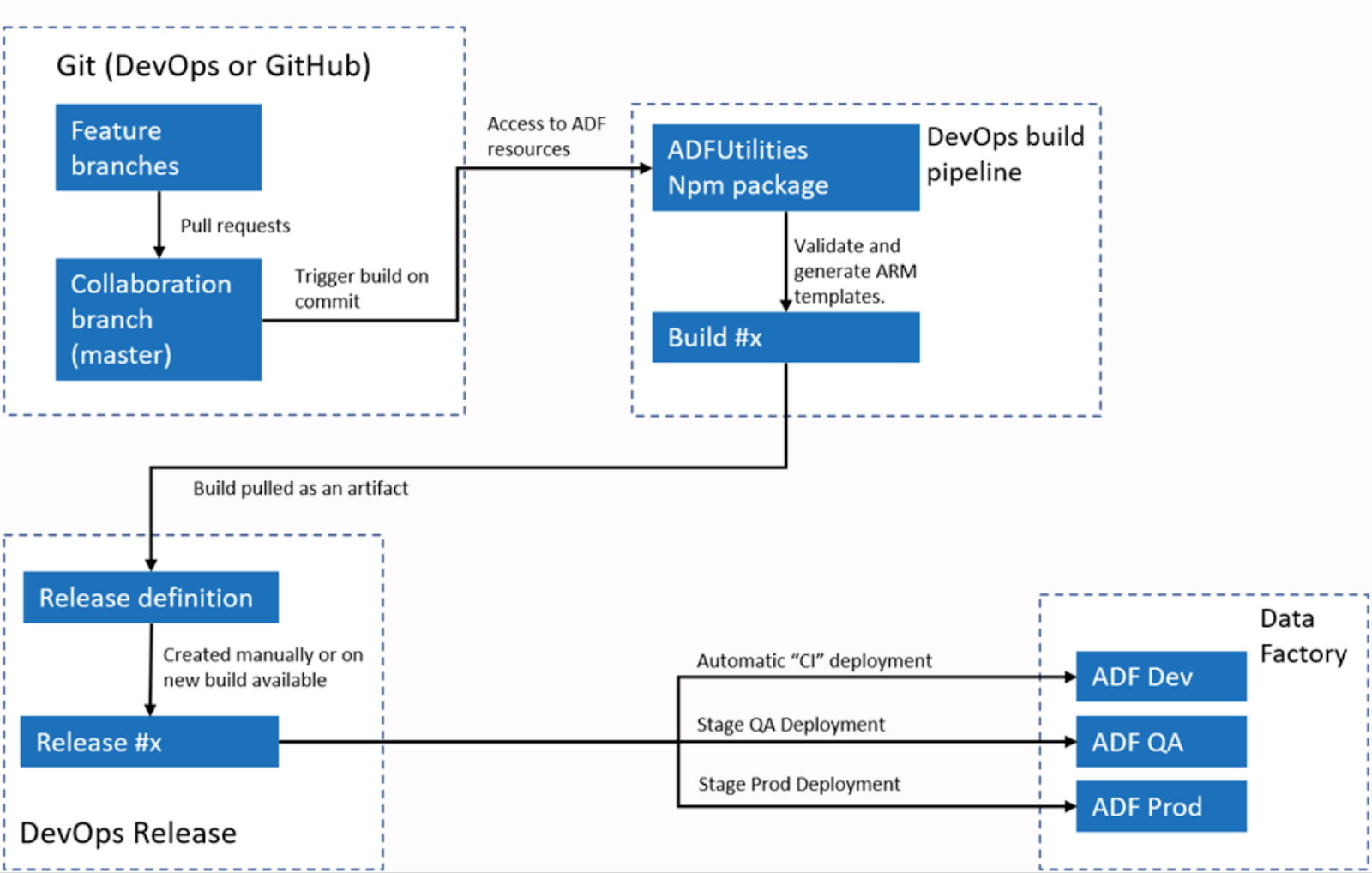

How can you implement CI/CD for Azure Data Factory pipelines using Azure DevOps?

CI/CD (Continuous Integration/Deployment) for Azure Data Factory (ADF) automates pipeline deployment, minimizing manual effort and ensuring consistency across environments.

Steps to implement CI/CD with Azure DevOps:

- Enable Git integration: Link ADF to Azure Repos (Git) for version control.

- Set up a build pipeline: Export ADF pipelines as ARM (Azure Resource Manager) templates.

- Create a release pipeline: Deploy pipelines to staging/production using ARM templates.

- Automate testing: Use validation scripts to check pipeline integrity before deployment.

Example architecture of automated publishing for CI/CD in Azure with ADF. Source: Microsoft Learn.
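The "automate testing" step can be as simple as a script that checks the exported pipeline JSON before release. The sketch below is one hypothetical set of checks, not an official ADF validation tool; the pipeline shown is made up and contains a deliberate typo in a dependency name.

```python
def validate_pipeline(pipeline):
    """Return a list of problems found in an ADF-style pipeline definition."""
    problems = []
    activities = pipeline.get("properties", {}).get("activities", [])
    if not activities:
        problems.append("pipeline has no activities")
    names = [a.get("name") for a in activities]
    for activity in activities:
        label = activity.get("name") or "<unnamed>"
        if not activity.get("name") or not activity.get("type"):
            problems.append(f"{label}: missing name or type")
        for dep in activity.get("dependsOn", []):
            if dep.get("activity") not in names:
                problems.append(f"{label}: depends on unknown activity {dep.get('activity')!r}")
    if len(set(names)) != len(names):
        problems.append("activity names are not unique")
    return problems

pipeline = {
    "name": "DailySalesLoad",  # hypothetical pipeline
    "properties": {
        "activities": [
            {"name": "CopySales", "type": "Copy"},
            {
                "name": "TransformSales",
                "type": "ExecuteDataFlow",
                # Typo on purpose: "CopySale" does not exist.
                "dependsOn": [{"activity": "CopySale", "dependencyConditions": ["Succeeded"]}],
            },
        ]
    },
}
print(validate_pipeline(pipeline))
```

A build pipeline could run a script like this against every JSON file in the repository and fail the build when the returned list is not empty.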

To go further, explore how CI/CD principles apply to ML pipelines in our CI/CD for Machine Learning course.

How can Terraform automate infrastructure deployment for data pipelines in Azure?

Terraform is an Infrastructure-as-Code (IaC) tool that automates and standardizes the provisioning of Azure resources like Data Factory, Data Lake, Synapse, and Databricks—ensuring consistency, scalability, and repeatability.

Steps to use Terraform for Azure data engineering pipelines:

- Set up Terraform: Install Terraform CLI and authenticate with Azure CLI.

- Define infrastructure: Write Terraform scripts for required components like Data Factory, Storage, and Databricks.

- Deploy resources: Use terraform plan and apply to provision infrastructure automatically.

- Automate with Azure DevOps: Store scripts in Azure Repos and integrate with CI/CD pipelines.
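Terraform configurations are usually written in HCL, but Terraform also accepts an equivalent JSON syntax, which makes it possible to sketch the "define infrastructure" step in Python. The example below generates a minimal configuration for a resource group and a data factory. The resource names, prefix, and region are placeholders, and the provider settings are the bare minimum; treat it as a starting point under those assumptions rather than a production layout.

```python
import json

def terraform_config(prefix, location):
    """Build a Terraform JSON configuration for a resource group and a data factory."""
    return {
        "terraform": {"required_providers": {"azurerm": {"source": "hashicorp/azurerm"}}},
        "provider": {"azurerm": {"features": {}}},
        "resource": {
            "azurerm_resource_group": {
                "data": {"name": f"{prefix}-rg", "location": location}
            },
            "azurerm_data_factory": {
                "adf": {
                    "name": f"{prefix}-adf",
                    # References keep the factory in the same group and region.
                    "location": "${azurerm_resource_group.data.location}",
                    "resource_group_name": "${azurerm_resource_group.data.name}",
                }
            },
        },
    }

config = terraform_config("demo", "westeurope")  # hypothetical prefix and region
print(json.dumps(config, indent=2))
```

Saved as a file ending in `.tf.json`, output like this can be fed to `terraform plan` and `terraform apply` in the same way as an HCL file.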

Get up and running quickly with our Terraform getting started guide.

Scenario-Based Data Engineer Interview Questions

Demonstrating your Azure data engineering knowledge is essential, but showing that you know when and how to apply it will make you stand out in your interview. This section applies that knowledge to practical scenarios.

Your company must process large volumes of transactional data from multiple sources daily. How would you design a scalable batch data pipeline in Azure for daily transactional processing?

To process large daily volumes of transactional data, design an Azure-based batch pipeline as follows:

- Data ingestion: Use Azure Data Factory (ADF) to ingest data from SQL databases, CSV files, and APIs. ADF automates and schedules data movement.

- Storage: Store raw data in Azure Data Lake Storage (ADLS) in Parquet format for efficient queries.

- Processing: Perform batch transformations and aggregations using Azure Synapse or Databricks.

- Serving: Load processed data into Azure SQL Database or Synapse for BI and reporting.

- Automation: Schedule daily runs with ADF Triggers.

Your team must process real-time IoT sensor data to detect anomalies and trigger alerts. How would you design a scalable streaming data pipeline in Azure?

Design a low-latency, event-driven streaming pipeline as follows:

- Ingestion: Use Azure Event Hubs or IoT Hub to capture high-frequency sensor data.

- Processing: Analyze data in real-time using Azure Stream Analytics or Databricks with Spark Structured Streaming.

- Storage and alerts: Store data in Azure Cosmos DB for fast access or Data Lake for historical analysis, and trigger alerts (for example, through Azure Functions or Logic Apps) when an anomaly is detected.

Example architecture for real-time insights through data stores in Azure. Source: Microsoft Learn.
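The anomaly-detection logic inside the processing step can be sketched independently of the streaming engine. The example below flags readings that deviate strongly from a rolling baseline. The window size, threshold, and temperature values are hypothetical, and a production pipeline would express similar logic in Stream Analytics SQL or Spark Structured Streaming.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the mean of the preceding window."""
    recent = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield index, value
                continue  # keep the outlier out of the baseline window
        recent.append(value)

temperatures = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.2, 19.8]
anomalies = list(detect_anomalies(temperatures))
print(anomalies)  # [(6, 35.0)]
```

Excluding flagged values from the baseline is a design choice: it stops a single spike from inflating the window's spread and masking the anomalies that follow it.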

Your company processes e-commerce order data. How would you design a hybrid pipeline for e-commerce data processing?

Use a hybrid pipeline combining real-time and batch processing:

- Real-time (fraud detection): Ingest order events via Azure Event Hubs.

- Batch (financial reporting): Store raw transactions in Azure Data Lake Storage (ADLS).

- Orchestration: Use Azure Logic Apps to trigger real-time alerts and integrate with fraud detection services.

You are building a scalable data pipeline that needs to process large-scale log data. How would you design a scalable log data pipeline for real-time and historical insights?

Use a Lambda architecture combining real-time and batch processing:

- Real-time insights: Ingest logs using Azure Event Hubs.

- Historical analysis: Store logs in Azure Data Lake (ADLS) in Parquet format.

- Scalability: Monitor pipeline performance with Azure Monitor and Log Analytics.

Your team is experiencing slow query performance when running analytics on large datasets. How would you optimize slow query performance on large datasets in Azure Data Lake?

Slow queries often stem from poor data organization, oversized files, or missing indexes. Optimize with these strategies:

- Efficient partitioning: Partition by date, category, or region to limit scanned data.

- Columnar formats: Use Parquet or Delta Lake over CSV/JSON for faster queries.

- File size optimization: Minimize numerous small files to reduce metadata overhead.

- Caching and indexing: Use Synapse materialized views to cache results.

- Query pushdown: Apply Spark SQL predicate pushdown to filter data before loading.

Learn why Parquet is ideal for big data pipelines in our Apache Parquet tutorial.

Your organization stores petabytes of data in Azure Data Lake, but your analytics costs are increasing. How would you optimize storage costs in Azure Data Lake without sacrificing performance?

To reduce costs while maintaining performance, apply a layered strategy:

- Use Hierarchical Namespace (HNS): Enables directory-level access and boosts metadata performance.

- Optimize file formats: Convert CSV/JSON to Parquet or Delta Lake to cut costs.

- Lifecycle management: Move rarely accessed data to cool or archive tiers.

- Enable compression: Use Snappy or Gzip to compress Parquet files.

- Leverage Delta Lake: Auto-compacts small files and removes redundant data with VACUUM.
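Lifecycle management boils down to a rule that maps how recently data was accessed to an access tier. The sketch below models such a rule in Python. The thresholds and file paths are hypothetical, and in Azure the rule would be configured as a storage lifecycle management policy rather than run as code.

```python
from datetime import date

# Hypothetical thresholds (days since last access); tune them to your access patterns.
COOL_AFTER_DAYS = 30
ARCHIVE_AFTER_DAYS = 180

def choose_tier(last_accessed, today):
    """Pick an access tier from the number of days since the file was last read."""
    age = (today - last_accessed).days
    if age >= ARCHIVE_AFTER_DAYS:
        return "Archive"
    if age >= COOL_AFTER_DAYS:
        return "Cool"
    return "Hot"

today = date(2024, 6, 30)
files = {
    "sales/2024/06/part-0.parquet": date(2024, 6, 28),
    "sales/2024/03/part-0.parquet": date(2024, 4, 2),
    "sales/2022/01/part-0.parquet": date(2022, 2, 1),
}
for path, last_accessed in files.items():
    print(path, "->", choose_tier(last_accessed, today))
```

Keep in mind that archived data must be rehydrated before it can be read, so only data that analytics jobs no longer touch belongs in that tier.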

How can you fix slow queries caused by tiny files in Azure Data Lake?

Tiny files create metadata overhead and slow down queries. To optimize:

- Use Databricks Auto Optimize: Enable Delta Lake Auto Compaction to merge small files automatically.

- Improve ingestion strategy: In Azure Data Factory, use larger batch sizes to avoid generating many small files.

- Use OPTIMIZE with Z-ordering: Periodically compact Delta tables and cluster data to speed up scans.

- Leverage Synapse managed tables: Store pre-aggregated data in dedicated SQL pools to avoid repeatedly reading raw small files.
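To illustrate what compaction achieves, the following sketch groups many small files into batches close to a target size. It is a simplified stand-in for what Delta Lake's compaction does internally, not its actual algorithm. The 128 MiB target and the file names are assumptions.

```python
TARGET_BYTES = 128 * 1024 * 1024  # assumed target size per compacted file


def plan_compaction(file_sizes: dict[str, int], target: int = TARGET_BYTES) -> list[list[str]]:
    """Group small files into batches of roughly `target` bytes each.

    Uses a simple first-fit-decreasing pass over the file sizes.
    """
    batches: list[list[str]] = []
    remaining: list[int] = []  # free space left in each batch
    for name, size in sorted(file_sizes.items(), key=lambda kv: kv[1], reverse=True):
        for i, free in enumerate(remaining):
            if size <= free:
                batches[i].append(name)
                remaining[i] -= size
                break
        else:
            # No existing batch has room, so start a new one.
            batches.append([name])
            remaining.append(max(target - size, 0))
    return batches


# 1,000 hypothetical 1 MiB files produced by a chatty ingestion job.
small_files = {f"part-{i:04d}.parquet": 1024 * 1024 for i in range(1000)}
plan = plan_compaction(small_files)
print(f"{len(small_files)} files -> {len(plan)} compacted files")
```

Fewer, larger files mean fewer listing and open operations per query, which is the metadata overhead the strategies above try to remove.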

Your organization is experiencing slow performance when joining multiple large datasets. How can you improve join performance on large datasets in Azure Data Lake?

Slow joins often result from data shuffling, poor formats, or inefficient execution. To optimize:

- Partition and cluster on join keys: Reduces data movement during joins.

- Use optimized formats: Convert CSV/JSON to Parquet or Delta Lake for better performance.

- Enable bucketing in Spark: Pre-bucket tables on join keys to reduce shuffling.

- Optimize queries in Synapse: Apply HASH DISTRIBUTION on large fact tables for faster joins.
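The idea behind bucketing and hash distribution can be shown with a small, engine-independent sketch. Both tables are split on the same hash of the join key, so matching rows always land in the same bucket and each bucket can be joined on its own. The bucket count, table contents, and helper names are hypothetical.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 4  # assumed bucket / distribution count for the sketch


def bucket_of(key: str, n: int = NUM_BUCKETS) -> int:
    """Deterministically map a join key to a bucket."""
    return zlib.crc32(key.encode("utf-8")) % n


def bucketize(rows: list[dict], key: str) -> dict[int, list[dict]]:
    """Split rows into buckets based on the hash of the join key."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[bucket_of(row[key])].append(row)
    return buckets


orders = [{"customer_id": f"c{i % 5}", "amount": i * 10} for i in range(10)]
customers = [{"customer_id": f"c{i}", "name": f"name-{i}"} for i in range(5)]

order_buckets = bucketize(orders, "customer_id")
customer_buckets = bucketize(customers, "customer_id")

# Join bucket by bucket: no row ever needs to be compared across buckets.
joined = []
for b, cust_rows in customer_buckets.items():
    by_id = {c["customer_id"]: c for c in cust_rows}
    for o in order_buckets.get(b, []):
        joined.append({**o, "name": by_id[o["customer_id"]]["name"]})

print(f"joined {len(joined)} rows bucket by bucket")
```

In a distributed engine each bucket lives on a different node, so co-locating rows this way avoids moving data across the network at join time.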

Your company handles highly sensitive financial data and is building a secure data pipeline in Azure. How can you secure a data pipeline in Azure to meet compliance requirements?

Protect data through encryption at rest and in transit:

- At rest: Azure encrypts stored data using Storage Service Encryption (SSE) with Microsoft-managed keys.

- In transit: Use TLS 1.2+ for secure transfers via Data Factory, Synapse, or Event Hubs.

- For Databricks: Enable SSL when accessing Data Lake with Spark.

These steps ensure end-to-end data protection across the pipeline.
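On the client side, the "TLS 1.2+" requirement can also be enforced in code. The sketch below uses only Python's standard `ssl` module. The helper name is hypothetical, and Azure services apply their own minimum TLS settings independently of this.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # verifies certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = strict_tls_context()
print(ctx.minimum_version.name, ctx.verify_mode.name, ctx.check_hostname)
```

A context like this can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`, so that a connection fails rather than silently downgrading.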

Your organization is building a data pipeline in Azure that processes sensitive customer information. How can you design secure, fine-grained access control in an Azure data pipeline?

To protect sensitive customer data, combine RBAC and Managed Identities:

- RBAC for granular permissions: Assign least-privilege roles in Storage, Synapse, and Data Factory.

- Managed identities for authentication: Avoid storing credentials; use Managed Identities for service access.

- Row-Level Security (RLS): Apply RLS in Synapse or SQL Database to restrict access by user role.

This approach ensures secure, role-based access across the pipeline.
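Row-level security boils down to a predicate that is evaluated for every row on behalf of the calling user. The following sketch mimics that behavior in plain Python. The roles, regions, and sample rows are made up for illustration, and in Synapse or SQL Database the equivalent logic would live in a security policy rather than application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    role: str    # hypothetical roles: "analyst" or "auditor"
    region: str


ROWS = [
    {"customer": "A", "region": "eu", "balance": 100},
    {"customer": "B", "region": "us", "balance": 250},
    {"customer": "C", "region": "eu", "balance": 75},
]


def can_see(user: User, row: dict) -> bool:
    """Security predicate: auditors see all rows, analysts only their region."""
    return user.role == "auditor" or row["region"] == user.region


def query(user: User, rows: list[dict]) -> list[dict]:
    """Apply the predicate to every row before returning results."""
    return [r for r in rows if can_see(user, r)]


analyst = User("dana", "analyst", "eu")
auditor = User("lee", "auditor", "us")
print([r["customer"] for r in query(analyst, ROWS)])
print([r["customer"] for r in query(auditor, ROWS)])
```

Enforcing the predicate in the database, rather than in each client, means every tool that queries the table gets the same filtered view.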

Tips for Preparing for an Azure Data Engineering Interview

Preparing for an Azure data engineering interview can be daunting, but a structured approach helps. Here are my recommendations:

Understand the big picture

Azure Data Engineers don't just move data—they design systems that power analytics, machine learning, and real-time decision-making. Be ready to explain how your work supports data analysts, scientists, and business goals. Demonstrating this context-awareness sets you apart.

Example: "How would you design a pipeline to support both real-time fraud detection and daily reporting?"

Focus on problem-solving, not just tools

You don’t need to be an expert in every Azure service—but you do need to show how you break down problems and reason through solutions. Focus on:

- Identifying the problem clearly

- Choosing relevant tools or services

- Explaining trade-offs and scalability

Practice talking through open-ended architecture questions aloud.

Tailor prep to the job description

Pay attention to the specific technologies and responsibilities mentioned in the posting. Are they using Synapse? Emphasizing streaming? Mentioning DevOps or CI/CD?

Align your preparation accordingly and have relevant project examples ready.

Use the STAR method for behavioral questions

Prepare 2–3 stories using the STAR format (Situation, Task, Action, Result) to show:

- Collaboration with teams

- Handling data quality or performance issues

- Making design decisions under constraints

Know how to monitor and tune systems

Interviewers love when candidates talk about observability and performance. Make sure you’re comfortable discussing:

- Metrics like latency, throughput, or data freshness

- Azure Monitor and Log Analytics

- How you'd debug a slow pipeline or failed job
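If you want to be precise about these metrics in an interview, it helps to know how they are computed. The sketch below derives average latency, throughput, and data freshness from a list of pipeline runs. The run records are hypothetical and would normally come from pipeline run logs.

```python
from datetime import datetime, timedelta

# Hypothetical run records, e.g. exported from pipeline run logs.
RUNS = [
    {"start": datetime(2024, 1, 1, 0, 0), "end": datetime(2024, 1, 1, 0, 10), "rows": 600_000},
    {"start": datetime(2024, 1, 1, 1, 0), "end": datetime(2024, 1, 1, 1, 20), "rows": 900_000},
]


def run_metrics(runs: list[dict], now: datetime) -> dict:
    """Summarize latency, throughput, and freshness for a set of runs."""
    durations = [(r["end"] - r["start"]).total_seconds() for r in runs]
    total_rows = sum(r["rows"] for r in runs)
    return {
        "avg_latency_s": sum(durations) / len(durations),      # mean run duration
        "throughput_rows_per_s": total_rows / sum(durations),  # rows per second of run time
        "freshness": now - max(r["end"] for r in runs),        # age of the newest data
    }


metrics = run_metrics(RUNS, now=datetime(2024, 1, 1, 2, 0))
for name, value in metrics.items():
    print(name, value)
```

Being able to say which of these numbers degraded, and since when, is usually the first step in debugging a slow pipeline.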

Practice with hands-on labs and projects

The best way to build confidence is through practice. Use:

- DataCamp’s Data Engineer Career Track for guided learning

- Azure’s free-tier services to run pipelines and Spark jobs

- GitHub sample projects to simulate real-world scenarios

For quick reference during your hands-on prep, the Azure CLI Cheat Sheet is a handy resource.

Demonstrate curiosity and a growth mindset

You don’t need to know everything—but show that you're eager to learn. If asked about something unfamiliar, describe how you'd go about investigating or testing it.

Example response: "I haven’t worked with Azure Stream Analytics directly, but I’d start by reviewing the official docs, checking integration options with Event Hub, and testing a simple pipeline in a dev workspace."

Ask smart questions at the end

Wrap up interviews with thoughtful questions that show engagement, such as:

- "How does your team decide between Synapse and Databricks for analytics workloads?"

- "What are the biggest bottlenecks you're trying to solve right now?"

- "How do new team members usually ramp up in their first 90 days?"

Azure data engineering technical interview prep checklist

| Category | What to focus on |
| --- | --- |
| Core concepts | Understand ETL vs ELT, batch vs streaming, data pipelines, schema design. |
| Azure services | Know how to use Azure Data Factory, Synapse, Databricks, and Data Lake. |
| Data ingestion and movement | Learn how to connect to multiple data sources, move and transform data efficiently. |
| Data storage | Choose between Blob Storage, ADLS, and Azure SQL based on use case. |
| Data transformation | Practice using Mapping Data Flows, Spark, and SQL for transformation. |
| Performance and monitoring | Know how to tune pipelines and monitor performance using Azure Monitor and Log Analytics. |
| ML and analytics integration | Be ready to integrate with Power BI, ML models, and real-time dashboards. |
| Security and governance | Brush up on RBAC, managed identities, data encryption, and data lineage. |
| Scenario design | Practice designing end-to-end solutions for real business cases. |

Conclusion

Preparing for an Azure Data Engineering interview means more than just knowing the tools—it’s about understanding how to design reliable, scalable, and efficient data solutions using the Azure ecosystem. In this guide, we covered essential topics from core services like Azure Data Factory, Synapse Analytics, and Databricks, to advanced concepts like real-time processing, performance tuning, and end-to-end pipeline design.

To deepen your knowledge and gain hands-on practice, check out DataCamp's Azure courses and tutorials. With the right preparation, you'll be ready to tackle both technical and scenario-based interview questions with confidence!

If you're preparing for entry-level certifications, the Microsoft Azure Fundamentals (AZ-900) track is a great place to start.


I am a data scientist with strong expertise in Python, Machine Learning, and AWS, complemented by a master's degree focused on Machine Learning using Python. My interests span artificial intelligence, data science, machine learning, and Python programming. I am passionate about exploring the mathematical foundations of machine learning algorithms. I regularly write blogs on Python and machine learning topics, sharing insights and knowledge to help others in the field.