
Batch and stream processing are two fundamental approaches to handling and analyzing data. Understanding both methods is important for leveraging the strengths of each approach in different data-driven scenarios, from historical analysis to real-time decision-making.

A data professional should understand the strengths and weaknesses of each approach and where each one fits best in their ETL and ELT processes.

In this article, we will define batch and stream processing, explain their differences, and show how to choose the right approach for your specific use case.

What Is Batch Processing?

Batch processing is a method in which large volumes of collected data are processed in chunks or batches.

This approach is especially effective for resource-intensive jobs, repetitive tasks, and managing extensive datasets where real-time processing isn’t required. It is ideal for applications like data warehousing, ETL (Extract, Transform, Load), and large-scale reporting.

Due to its versatility in meeting various business needs, batch processing remains a widely adopted choice for data processing.
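To make "processed in chunks or batches" concrete, here is a minimal sketch using only the Python standard library. The function names `read_in_batches`, `process_batch`, and `run_batch_job`, the `amount` field, and the batch size of 1,000 are hypothetical choices for illustration. A real job would read from files or a database rather than an in-memory list.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def read_in_batches(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield successive fixed-size chunks from any iterable of records."""
    it = iter(records)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def process_batch(batch: List[dict]) -> float:
    """Hypothetical transformation: total the 'amount' field of one chunk."""
    return sum(r["amount"] for r in batch)


def run_batch_job(records: Iterable[dict], batch_size: int = 1000) -> float:
    """Process all collected records chunk by chunk and combine the results."""
    total = 0.0
    for batch in read_in_batches(records, batch_size):
        total += process_batch(batch)
    return total


if __name__ == "__main__":
    data = [{"amount": i} for i in range(2500)]  # 2,500 records -> 3 chunks
    print(run_batch_job(data, batch_size=1000))  # 3123750.0
```

Because only one chunk is held in memory at a time, the same loop works whether the input holds thousands or billions of records.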

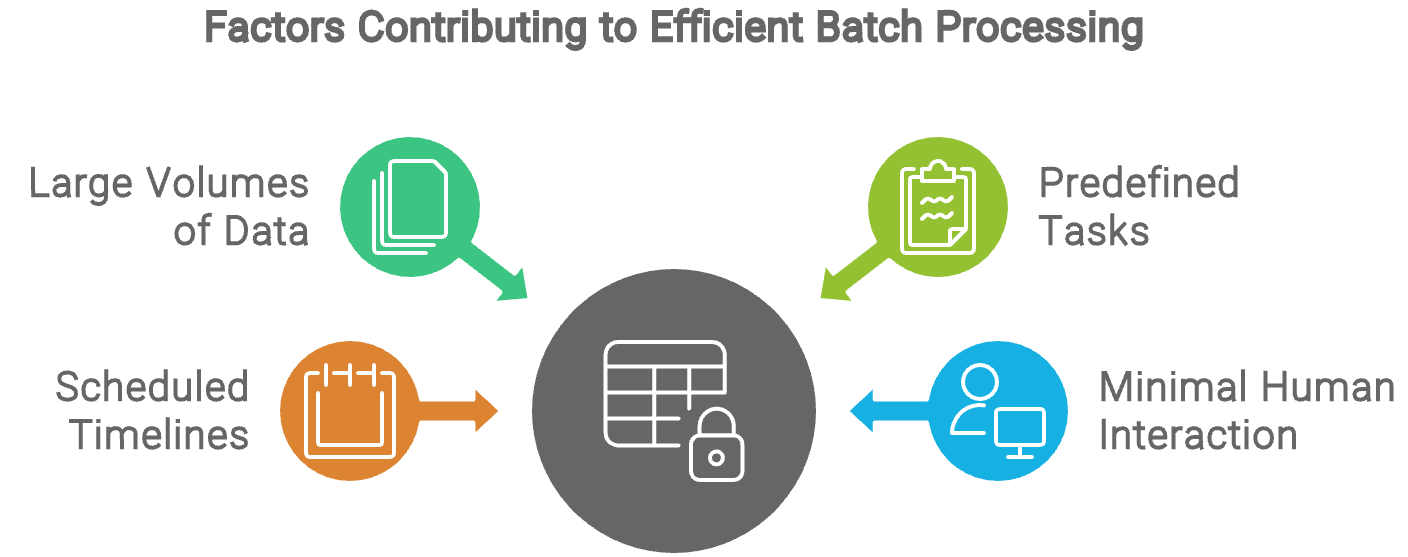

Batch processing is largely automated, requiring minimal human interaction once the process is set up. Tasks are predefined, and the system executes them on a schedule, typically during off-peak hours when computing resources are readily available.

Human involvement is usually limited to configuring the initial parameters, troubleshooting errors if they arise, and reviewing the output, making batch processing a highly efficient and hands-off approach to managing large-scale data tasks.
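In practice a scheduler such as cron or Airflow computes run times for you. The sketch below shows only the underlying idea of a daily off-peak schedule. The 02:00 start time and the `next_run` function are hypothetical assumptions.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time = time(hour=2)) -> datetime:
    """Return the next datetime at which a daily batch job should start.

    If today's run time is still ahead, run today; otherwise run tomorrow.
    """
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print(next_run(datetime(2024, 5, 1, 14, 30)))  # 2024-05-02 02:00:00
    print(next_run(datetime(2024, 5, 1, 1, 15)))   # 2024-05-01 02:00:00
```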

There are a variety of tools for batch processing. A common choice is Apache Airflow, an orchestration tool that lets users build data pipelines that run on a set schedule and offers simple monitoring through its web interface. Explore different tools to find the one that best fits your business needs!
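Orchestrators such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and start each task only after its upstream tasks finish. The sketch below imitates that idea with Python's standard-library `graphlib` (Python 3.9+). It is not Airflow's API, and the `run_pipeline` function and task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 upstream: Dict[str, Set[str]]) -> List[str]:
    """Run every task after all of its upstream tasks; return the execution order."""
    sorter = TopologicalSorter(upstream)
    for name in tasks:
        sorter.add(name)  # include tasks that have no dependencies at all
    order = list(sorter.static_order())  # raises CycleError if the graph has a cycle
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    steps = ["extract", "transform", "load", "report"]
    tasks = {name: (lambda n=name: print("running", n)) for name in steps}
    # Each key lists the tasks that must finish before it can start.
    upstream = {"transform": {"extract"}, "load": {"transform"}, "report": {"load"}}
    run_pipeline(tasks, upstream)
```

A real orchestrator adds what this sketch omits: scheduling, retries, logging, and the monitoring view mentioned above.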

What Is Stream Processing?

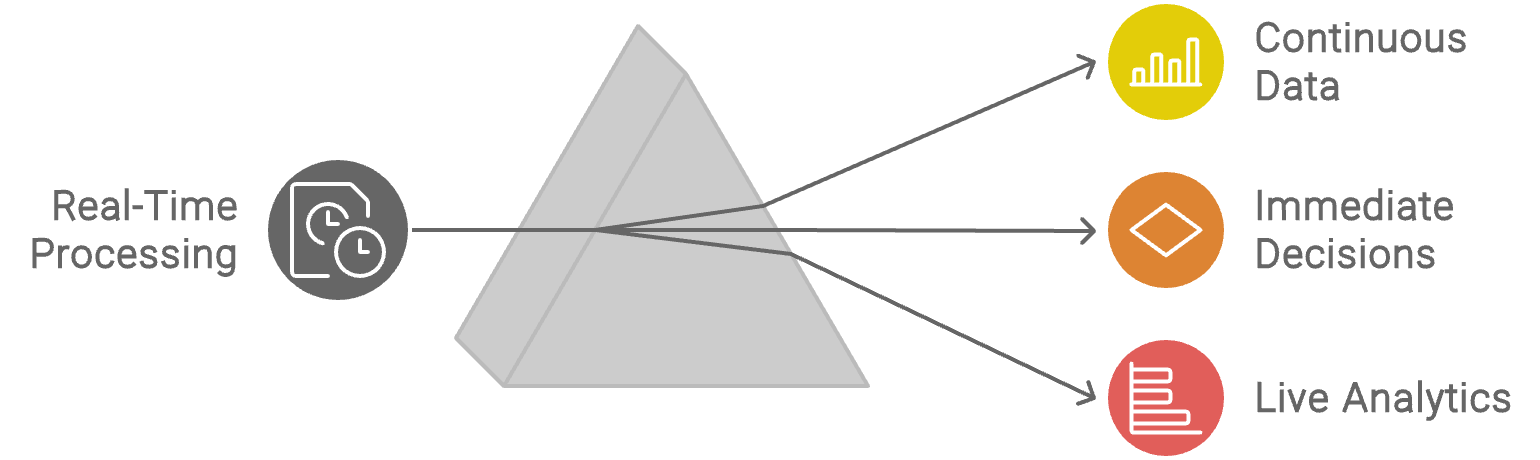

Stream processing, sometimes called streaming processing or real-time data processing, is a data processing approach designed to handle and analyze data in real time as it flows through a system.

Unlike batch processing, which involves collecting and processing data in large, discrete chunks at scheduled intervals, stream processing deals with data continuously and incrementally.

Data is collected from various sources such as sensors, logs, transactions, social media feeds, or other live data sources.

Data streams are then processed as they are received, through a series of operations such as filtering, transforming, and aggregating the data. This enables real-time applications such as live analytics, alerting, real-time dashboards, or feeding other systems for further action. These insights are often used to inform immediate decisions.
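The filter, transform, and aggregate steps above can be sketched with chained Python generators. Because generators are lazy, each event passes through every stage as soon as it arrives instead of waiting for a full batch. The sensor events, the Fahrenheit-to-Celsius transform, and all function names are hypothetical. The in-memory list stands in for a real source such as Kinesis or Kafka.

```python
from typing import Iterable, Iterator


def filter_valid(events: Iterable[dict]) -> Iterator[dict]:
    """Filter: drop events without a numeric 'value' field."""
    for event in events:
        if isinstance(event.get("value"), (int, float)):
            yield event


def to_celsius(events: Iterable[dict]) -> Iterator[dict]:
    """Transform: convert a Fahrenheit reading to Celsius."""
    for event in events:
        yield {**event, "value": round((event["value"] - 32) * 5 / 9, 2)}


def running_average(events: Iterable[dict]) -> Iterator[dict]:
    """Aggregate: emit an updated per-sensor average after every event."""
    totals: dict = {}
    counts: dict = {}
    for event in events:
        sensor = event["sensor"]
        totals[sensor] = totals.get(sensor, 0.0) + event["value"]
        counts[sensor] = counts.get(sensor, 0) + 1
        yield {"sensor": sensor, "avg": totals[sensor] / counts[sensor]}


if __name__ == "__main__":
    source = [{"sensor": "a", "value": 212}, {"sensor": "a", "value": "n/a"},
              {"sensor": "a", "value": 32}]
    for update in running_average(to_celsius(filter_valid(source))):
        print(update)  # {'sensor': 'a', 'avg': 100.0}, then {'sensor': 'a', 'avg': 50.0}
```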

Streaming processing applications include real-time analytics for financial markets, fraud detection, network traffic monitoring, recommendation engines, and more.

Streaming systems often include capabilities for continuously monitoring and managing data flows and processing pipelines to support high-velocity data. This includes tracking the system's performance, the health of the data streams, and the outcomes of the processing tasks.
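As a rough illustration of that kind of monitoring, the sketch below counts successes and failures and tracks lag, meaning how far processing runs behind each event's own timestamp. The `StreamMonitor` class, the `run_monitored` function, and the `ts` field (epoch seconds) are hypothetical. Managed streaming services typically expose comparable metrics out of the box.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class StreamMonitor:
    """Hypothetical health counters for a streaming job."""
    processed: int = 0
    failed: int = 0
    max_lag_seconds: float = 0.0

    def record(self, event_ts: float, ok: bool, now: Optional[float] = None) -> None:
        """Count one event and track lag = processing time - event timestamp."""
        now = time.time() if now is None else now
        self.max_lag_seconds = max(self.max_lag_seconds, now - event_ts)
        if ok:
            self.processed += 1
        else:
            self.failed += 1

    @property
    def error_rate(self) -> float:
        total = self.processed + self.failed
        return self.failed / total if total else 0.0


def run_monitored(events: Iterable[dict], handler: Callable[[dict], object],
                  monitor: StreamMonitor, now: Optional[float] = None) -> None:
    """Apply `handler` to each event, recording success or failure instead of crashing."""
    for event in events:
        try:
            handler(event)
            ok = True
        except Exception:
            ok = False
        monitor.record(event["ts"], ok, now)
```

A rising `max_lag_seconds` suggests the pipeline is falling behind its input, and a rising `error_rate` points to bad data or a faulty handler.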

One popular combination is Amazon Kinesis with AWS Lambda. Kinesis is a managed AWS service for collecting, processing, and analyzing streaming data in real time, while Lambda runs serverless functions that can be triggered by new records in a stream to apply custom processing logic and automation.
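A minimal sketch of a Python Lambda function consuming a Kinesis batch follows. The `Records` / `kinesis` / `data` structure and the base64-encoded payload follow the event format AWS documents for Kinesis-triggered functions. The JSON `amount` field, the 10,000 threshold, and the returned summary dict are hypothetical and included only so the function is easy to test.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode each Kinesis record in the batch and flag large transactions."""
    alerts = []
    for record in event["Records"]:
        # Kinesis delivers each record's payload base64-encoded.
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        if payload.get("amount", 0) > 10_000:  # hypothetical alert threshold
            alerts.append(payload)
    # A real function might publish alerts to another service or write them to a table.
    return {"processed": len(event["Records"]), "alerts": len(alerts)}
```

For a local check, you can build a fake event by base64-encoding JSON strings into the `data` field of each record.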

Differences Between Batch Processing and Streaming Processing

Now that we have defined batch and streaming processes, let’s highlight some of their differences.

Data latency

Batch and streaming methods have distinct differences when considering how quickly data can be processed and analyzed.

- Streaming processing: Low.
  - Streaming processing handles data as it arrives, enabling near real-time analysis and decision-making. This is ideal for applications where immediate responses are crucial.
- Batch processing: High.
  - Data is collected over a period and processed in chunks at scheduled intervals. This approach is suitable for scenarios where the timing of the analysis is less critical.
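A small worked example helps quantify "high" batch latency. Suppose a job runs every `interval_hours` and takes `runtime_hours` to finish. A record that arrives just after a run starts waits almost a full interval for the next run, plus that run's runtime. Assuming arrivals are spread evenly over time, the average wait is half an interval plus the runtime. The function name and the numbers below are hypothetical. For a streaming pipeline, latency is instead closer to the per-event processing time.

```python
def batch_latency_hours(interval_hours: float, runtime_hours: float) -> dict:
    """Data freshness for a job that runs every `interval_hours` and takes `runtime_hours`.

    Assumes events arrive uniformly over time and each run picks up everything
    that arrived before it started.
    """
    worst = interval_hours + runtime_hours        # arrived just after a run started
    average = interval_hours / 2 + runtime_hours  # mid-interval arrival on average
    return {"worst": worst, "average": average}


if __name__ == "__main__":
    print(batch_latency_hours(24, 1))  # {'worst': 25, 'average': 13.0}
```

So a nightly job that takes an hour can leave data up to about a day old, which is fine for a monthly report but not for fraud alerts.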

Data volume

The amount of data each method can manage at any given time also varies significantly.

- Streaming processing: Continuous flows.
  - It can handle high volumes of continuous data, but scalability depends on the system’s design and infrastructure. Managing massive volumes of real-time data requires robust and scalable systems.
- Batch processing: Large chunks.
  - Typically better suited for huge volumes of data that can be processed in chunks. Batch processing systems can aggregate vast amounts of data before processing.

Complexity

The complexity involved in setting up and maintaining these processing methods is another important difference.

- Streaming processing: High.
  - Requires complex infrastructure to manage continuous data streams, ensure real-time processing, and handle state management and fault tolerance issues.
- Batch processing: Low.
  - Batch processing systems are generally more straightforward to implement and manage, as data processing is done in predefined intervals and can be optimized for large-scale operations.
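To illustrate why state management and fault tolerance add complexity, here is a minimal sketch of a streaming counter that periodically checkpoints its state together with its read offset. After a restart it resumes where it left off without double counting past events. The `CheckpointedCounter` class, the JSON checkpoint file, and the checkpoint interval are hypothetical. The Python list stands in for a durable log such as a Kafka partition. Frameworks like Apache Flink provide far more robust versions of this mechanism.

```python
import json
import os
import tempfile
from typing import Dict, List


class CheckpointedCounter:
    """Counts events per key and checkpoints (offset, counts) to a JSON file."""

    def __init__(self, path: str):
        self.path = path
        self.offset = 0
        self.counts: Dict[str, int] = {}
        if os.path.exists(path):
            # Recover state from the last checkpoint after a restart.
            with open(path) as f:
                saved = json.load(f)
            self.offset, self.counts = saved["offset"], saved["counts"]

    def process(self, log: List[str], checkpoint_every: int = 2) -> None:
        """Consume the log from the saved offset, checkpointing periodically."""
        while self.offset < len(log):
            key = log[self.offset]
            self.counts[key] = self.counts.get(key, 0) + 1
            self.offset += 1
            if self.offset % checkpoint_every == 0:
                self.checkpoint()
        self.checkpoint()

    def checkpoint(self) -> None:
        # Save offset and counts together, via temp file + rename, so they never disagree.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"offset": self.offset, "counts": self.counts}, f)
        os.replace(tmp, self.path)


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "state.json")
    events = ["click", "view", "click"]
    CheckpointedCounter(path).process(events)
    events.append("view")                # new data arrives after a restart
    resumed = CheckpointedCounter(path)  # reloads offset 3 and the counts
    resumed.process(events)
    print(resumed.counts)                # {'click': 2, 'view': 2}
```

A batch job can usually just rerun from scratch after a failure. A streaming job must carry this kind of state forward indefinitely, which is a large part of the extra complexity.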

Use cases

Different processing methods lend themselves to different types of applications and use cases.

- Streaming processing: Scenarios requiring real-time insights and immediate action.
  - Examples include monitoring social media for brand sentiment, real-time traffic management, or live streaming analytics.
- Batch processing: Scenarios where data can be processed in intervals without immediate action.
  - Examples include periodic reporting, data warehousing, and large-scale data transformations.

Infrastructure and cost

The infrastructure requirements and associated costs also differ between batch and streaming processing.

- Streaming processing:
  - Infrastructure: Requires specialized infrastructure to handle continuous data streams, including high-throughput data pipelines, real-time processing engines, and often complex distributed systems.
  - Cost: Potentially higher due to the need for high-performance computing resources, continuous monitoring, and scaling to manage real-time data efficiently.
- Batch processing:
  - Infrastructure: Typically requires infrastructure that supports periodic data processing and storage, such as data warehouses or Hadoop clusters. The infrastructure can be less complex compared to streaming systems.
  - Cost: Large-scale processing costs are generally lower, as it can leverage existing storage and computing resources without requiring continuous operations.

| Aspect | Batch processing | Stream processing |
|---|---|---|
| Data latency | High latency; processes data on set schedules | Low latency; processes data in real time |
| Data volume | Processes large chunks at once and handles vast, well-scheduled volumes of data | Handles large, continuous volumes that need careful management |
| Complexity | Lower complexity because data is predictable; easier to manage | Higher complexity due to the higher velocity, volume, and variety of data |
| Use cases | Data analyzed periodically, such as monthly reports or weekly performance metrics | Continuous analysis for things such as fraud alerts, live streaming analytics, and IoT processing |
| Infrastructure and cost | Less complex infrastructure focused on supporting parallel processes; lower cost because resources can be shared more easily | Very complex infrastructure that demands constant attention and flexibility; significant costs due to constant scaling |

Batch vs streaming processing: Summary of differences

Common Use Cases for Batch Processing

Batch processing has diverse applications that cater to different business needs, especially in situations involving large datasets or routine operations. Below are several examples of scenarios where batch processing serves as a practical solution:

Data warehousing and ETL

Batch processing is commonly used in data warehousing environments for ETL processes. It keeps the data warehouse refreshed on a regular schedule while minimizing the impact on operational systems.

It focuses on aggregating data from various sources, transforming it into a suitable format, and efficiently loading it into a centralized data warehouse at scheduled intervals.
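The extract, transform, and load steps can be sketched in a few lines, with Python's built-in `sqlite3` standing in for a real warehouse such as BigQuery or Snowflake. The `sales` table, its columns, the two in-memory "sources", and the cleanup rules are hypothetical.

```python
import sqlite3
from typing import Iterable, List, Tuple


def extract(sources: Iterable[List[dict]]) -> List[dict]:
    """Extract: gather raw rows from several (here in-memory) sources."""
    return [row for source in sources for row in source]


def transform(rows: List[dict]) -> List[Tuple[str, str, float]]:
    """Transform: normalize region names and cast amounts to float."""
    return [(r["order_id"], r["region"].strip().upper(), float(r["amount"])) for r in rows]


def load(conn: sqlite3.Connection, rows: List[Tuple[str, str, float]]) -> None:
    """Load: upsert into the warehouse table in a single transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id TEXT PRIMARY KEY, region TEXT, amount REAL)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO sales VALUES (?, ?, ?)", rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    crm = [{"order_id": "1", "region": " east ", "amount": "10.5"}]
    shop = [{"order_id": "2", "region": "West", "amount": 4}]
    load(conn, transform(extract([crm, shop])))
    print(conn.execute("SELECT * FROM sales ORDER BY order_id").fetchall())
```

Keying the upsert on `order_id` makes the load idempotent, so rerunning a failed nightly batch does not create duplicates.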

Periodic reporting

Many organizations use batch processing to generate periodic reports, such as monthly sales summaries or quarterly performance reviews.

By collecting and processing data at regular intervals, businesses can efficiently produce comprehensive reports that provide valuable insights into their operations.
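A monthly sales summary is essentially a group-by over a period's data. The sketch below assumes sales arrive as hypothetical `(date, amount)` pairs. In a warehouse, the same job would usually be a scheduled SQL `GROUP BY` query.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple


def monthly_summary(sales: Iterable[Tuple[date, float]]) -> Dict[str, float]:
    """Total sales per calendar month, keyed as 'YYYY-MM' and sorted by month."""
    totals: Dict[str, float] = defaultdict(float)
    for day, amount in sales:
        totals[day.strftime("%Y-%m")] += amount
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    sales = [(date(2024, 1, 5), 100.0), (date(2024, 1, 20), 50.0), (date(2024, 2, 1), 75.0)]
    print(monthly_summary(sales))  # {'2024-01': 150.0, '2024-02': 75.0}
```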

Historical data analysis

Batch processing is well-suited for analyzing historical data, as it can regularly process and examine large datasets accumulated over extended periods.

Businesses can analyze years of sales data, customer interactions, or operational metrics in a single batch job. This comprehensive analysis can help identify trends and anomalies indicating operational inefficiencies or risks.
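One simple way a batch job can surface anomalies in historical data is a z-score test: flag any period whose total lies more than a chosen number of standard deviations from the mean. This is a sketch under that assumption only. The `flag_anomalies` function and the threshold of 2 are hypothetical, and real analyses often account for seasonality and trends first.

```python
from statistics import mean, stdev
from typing import Dict, List


def flag_anomalies(period_totals: Dict[str, float], threshold: float = 2.0) -> List[str]:
    """Return periods whose total is more than `threshold` standard deviations from the mean."""
    values = list(period_totals.values())
    if len(values) < 2:
        return []
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all periods identical: nothing stands out
    return [period for period, v in period_totals.items() if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    totals = {f"2023-{m:02d}": 100.0 for m in range(1, 12)}
    totals["2023-12"] = 400.0
    print(flag_anomalies(totals))  # ['2023-12']
```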

Large-scale data migrations

Batch processing can efficiently move large volumes of data from one system to another. By processing the migration in batches, organizations can minimize downtime and ensure a smoother transition while maintaining data integrity.
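A minimal sketch of a batched migration follows, using two `sqlite3` databases to stand in for the old and new systems. The hypothetical `customers` table is copied in primary-key order, one transaction per batch. Keyset pagination (`WHERE id > last_id`) keeps each query cheap, and starting from the highest id already in the target lets an interrupted run resume where it stopped. This assumes positive integer ids.

```python
import sqlite3


def migrate_in_batches(src: sqlite3.Connection, dst: sqlite3.Connection,
                       batch_size: int = 1000) -> int:
    """Copy rows from src.customers to dst.customers in id order; return rows copied."""
    dst.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT)")
    # Resume after the highest id already migrated (0 on a fresh target).
    last_id = dst.execute("SELECT COALESCE(MAX(id), 0) FROM customers").fetchone()[0]
    copied = 0
    while True:
        rows = src.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        with dst:  # each batch commits atomically
            dst.executemany("INSERT OR IGNORE INTO customers VALUES (?, ?)", rows)
        last_id = rows[-1][0]
        copied += len(rows)
```

Small, independently committed batches keep locks short on the source system, and a failure costs at most one batch of rework.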

Common Use Cases for Streaming Processing

Streaming processing is particularly well-suited for scenarios where timely insights and immediate responses are critical. Here are some specific examples of where streaming processing excels.

Real-time analytics and monitoring

Streaming processing enables the real-time analysis of incoming data, providing instant insights into trends, customer behavior, and potential issues.

For instance, a sudden spike in negative mentions on social media can be detected immediately, allowing the company to respond quickly.
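Detecting such a spike can be as simple as counting events in a sliding time window. The sketch assumes an upstream step has already classified each mention as negative and that timestamps arrive in order. The `SpikeDetector` class, window length, and threshold are hypothetical.

```python
from collections import deque


class SpikeDetector:
    """Alert when more than `threshold` negative mentions arrive within `window_seconds`."""

    def __init__(self, window_seconds: float = 60.0, threshold: int = 100):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.timestamps: deque = deque()

    def observe(self, timestamp: float) -> bool:
        """Record one negative mention; return True if the window now exceeds the threshold."""
        self.timestamps.append(timestamp)
        # Evict mentions that have slid out of the time window.
        while self.timestamps[0] <= timestamp - self.window_seconds:
            self.timestamps.popleft()
        return len(self.timestamps) > self.threshold


if __name__ == "__main__":
    detector = SpikeDetector(window_seconds=10, threshold=3)
    print([detector.observe(t) for t in [0, 1, 2, 3, 20]])  # [False, False, False, True, False]
```

Each event does a constant amount of work on average, so the check keeps pace with the stream instead of waiting for a nightly report.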

Fraud detection

By analyzing transaction patterns in real time, the system can identify anomalies or suspicious behavior (such as unusual spending patterns or transactions from unexpected locations) and trigger alerts or block transactions to prevent fraud. This protects both consumers and the business by lowering the risk of fraudulent transactions.
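A simplified, rule-based version of those checks can be sketched as follows. The system keeps a running profile per user, updated with each transaction, and flags amounts far above the user's average or purchases from countries the user has not used before. The `FraudScreener` class, the 5x multiplier, and the three-transaction warm-up are hypothetical. Production systems typically combine many more signals, often with machine learning models.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class UserProfile:
    count: int = 0
    mean_amount: float = 0.0
    countries: Set[str] = field(default_factory=set)


class FraudScreener:
    """Rule-based screen that updates each user's profile as transactions stream in."""

    def __init__(self, amount_multiplier: float = 5.0, min_history: int = 3):
        self.amount_multiplier = amount_multiplier
        self.min_history = min_history
        self.profiles: Dict[str, UserProfile] = {}

    def check(self, user: str, amount: float, country: str) -> List[str]:
        """Return the reasons (if any) this transaction looks suspicious, then learn from it."""
        p = self.profiles.setdefault(user, UserProfile())
        reasons = []
        if p.count >= self.min_history:  # only judge users with some history
            if amount > self.amount_multiplier * p.mean_amount:
                reasons.append("unusual amount")
            if country not in p.countries:
                reasons.append("unexpected location")
        # Update the running mean incrementally; a real system might skip confirmed fraud.
        p.count += 1
        p.mean_amount += (amount - p.mean_amount) / p.count
        p.countries.add(country)
        return reasons
```

The running mean is updated in constant time without storing past transactions, which suits a stream where each decision must be made as the transaction arrives.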

Live data feeds and event processing

Television networks use streaming processing to provide live updates and information during broadcasts.

A great example is sporting events. Real-time data streams (such as scores, player statistics, and play-by-play actions) are processed to deliver up-to-date information and enhance viewer engagement with live commentary and interactive features.

IoT data processing

In smart cities, streaming processing manages data from sensors embedded in traffic lights, parking meters, and public transport systems. Real-time analysis of this data helps optimize traffic flow, monitor air quality, and manage public transportation systems efficiently.

By detecting anomalies in travel patterns that could indicate potential issues, city officials can make proactive adjustments and reduce congestion.
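One lightweight way to spot such anomalies in sensor streams is to keep a per-sensor exponentially weighted moving average (EWMA) as a baseline and flag readings that deviate sharply from it. The sketch assumes hypothetical vehicle-count readings per intersection sensor. The `TrafficBaseline` class, the smoothing factor `alpha`, and the 50% tolerance are illustrative choices, not values from any real deployment.

```python
from typing import Dict, Optional


class TrafficBaseline:
    """Per-sensor exponentially weighted moving average (EWMA) of vehicle counts."""

    def __init__(self, alpha: float = 0.2, tolerance: float = 0.5):
        self.alpha = alpha          # weight given to the newest reading
        self.tolerance = tolerance  # allowed relative deviation from the baseline
        self.baseline: Dict[str, float] = {}

    def update(self, sensor_id: str, count: float) -> bool:
        """Fold a new reading into the sensor's baseline; return True if it is anomalous."""
        prev: Optional[float] = self.baseline.get(sensor_id)
        if prev is None:
            self.baseline[sensor_id] = count  # first reading seeds the baseline
            return False
        anomalous = abs(count - prev) > self.tolerance * prev
        self.baseline[sensor_id] = self.alpha * count + (1 - self.alpha) * prev
        return anomalous


if __name__ == "__main__":
    t = TrafficBaseline()
    print([t.update("intersection-1", c) for c in [100, 110, 20]])  # [False, False, True]
```

An EWMA needs only one number per sensor, so it scales to thousands of sensors while still adapting to gradual daily changes in traffic.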

Choosing the Right Approach: Factors to Consider

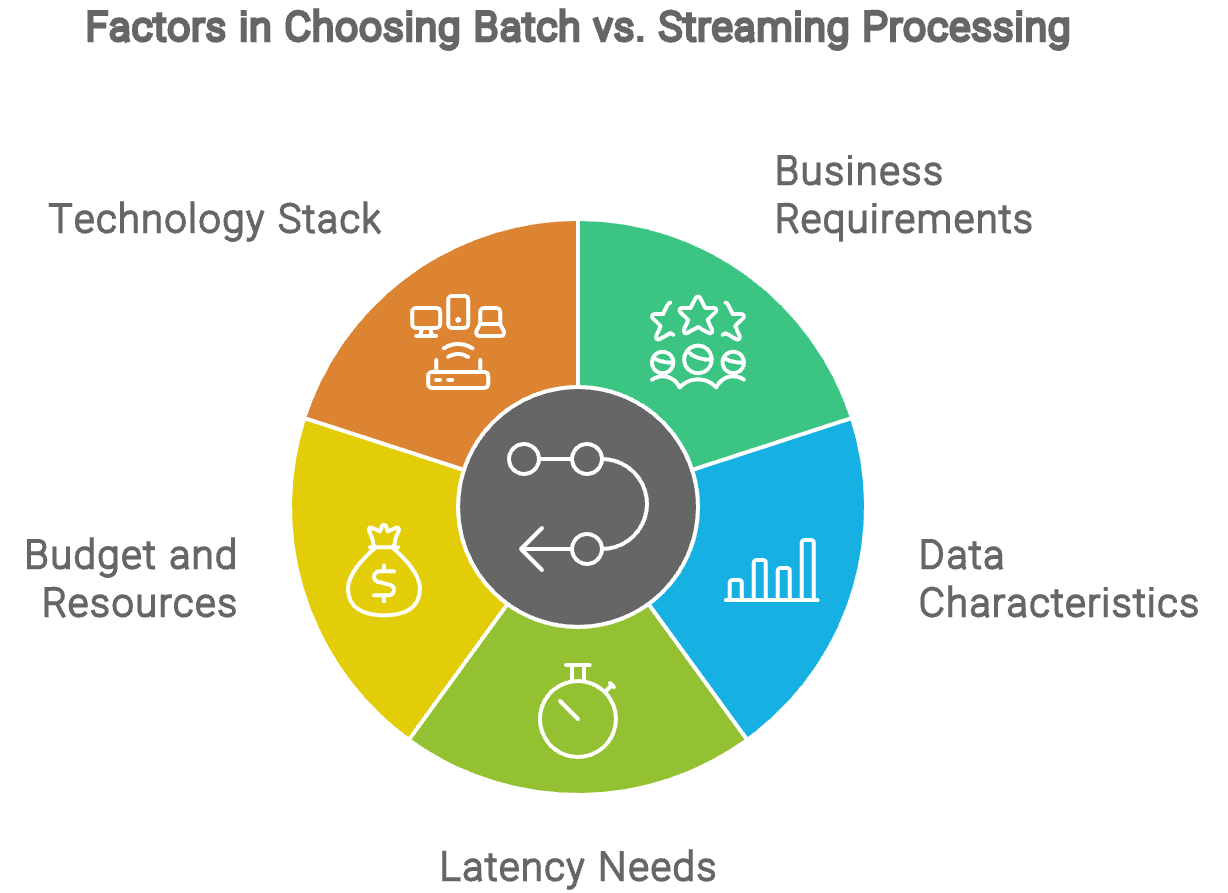

When deciding between batch and streaming processing, consider many factors such as business requirements, data characteristics, latency needs, budget, resources, and technology stack. These components determine the ideal approach for your business and its data needs.

Business requirements

Each business has unique needs, and understanding how the data workflow impacts business goals is vital to implementing the proper processing framework.

- Batch processing: Choose batch processing if your business needs involve generating periodic reports, analyzing historical data, or performing large-scale data transformations where immediate responses are not crucial. This approach suits scenarios like monthly financial reporting or end-of-day data aggregation.

- Stream processing: Opt for streaming processing if your business requires real-time insights and immediate action. This is ideal for applications such as fraud detection, live traffic management, or real-time customer engagement, where timely data analysis is critical for decision-making and operational efficiency.

Data characteristics

Know which processing methods best suit different kinds of data. Batch processing works best with predictable, bounded datasets, whereas stream processing is designed for unbounded data that arrives continuously and at variable rates.

- Batch processing is best suited for large volumes of historical or aggregated data that need not be analyzed immediately. If your data is collected in bulk and processed periodically, batch processing will efficiently handle this workload.

- Stream processing is ideal for continuous, high-velocity data that needs to be processed and analyzed as it arrives. Streaming processing will meet these demands if your data constantly flows and requires real-time processing, such as data from IoT sensors or live social media feeds.

Latency needs

Understanding your business needs is important, but understanding how much data delay is acceptable is crucial. Whether data must be in real time or can be handled periodically will be the deciding factor in batch vs. streaming.

- Batch processing: If your application can tolerate some delay and doesn’t require immediate insights, batch processing is appropriate. The higher latency of batch processing is acceptable for generating reports or analyzing trends where real-time analysis is not essential.

- Stream processing: Choose streaming processing if your application demands low latency and real-time responses. This approach is necessary for scenarios requiring immediate feedback, such as monitoring live transactions or detecting real-time anomalies.

Budget and resources

Budget and resource constraints may limit your choice. Your organization may prioritize using existing infrastructure, in which case your pipelines must fit into it.

- Batch processing: Generally, batch processing is less complex and often more cost-effective for handling large volumes of data. It typically requires less ongoing maintenance and can be implemented with less specialized infrastructure, making it a more budget-friendly option for large-scale data operations.

- Stream processing: Streaming processing can be more expensive due to the need for specialized infrastructure and technology to handle continuous data flows. It may involve higher costs for real-time processing engines and scaling resources, so ensure your budget can accommodate these needs.

Technology stack

Different technology stacks have varying capabilities, and the choice of tools can significantly impact whether batch or streaming processing better suits your needs.

- Batch processing: If your existing technology stack includes modern data warehousing solutions like Google BigQuery, Amazon Redshift, or Snowflake, you might lean towards batch processing. Tools like Apache Spark (in batch mode) or Azure Data Factory are often employed to handle large-scale batch operations. These platforms allow you to process vast amounts of data at scheduled intervals, making them ideal for tasks like ETL/ELT pipelines, periodic reporting, and data aggregation.

- Stream processing: If your technology stack includes real-time processing tools like Apache Kafka, Apache Flink, or Amazon Kinesis, and your infrastructure is designed to handle continuous data flows, streaming processing might be more appropriate. These technologies are designed to support low-latency, real-time data analytics and decision-making. Cloud-native services like Google Cloud Dataflow and AWS Lambda can facilitate seamless real-time data processing in modern infrastructures.

Conclusion

Aligning your choice with business requirements, data characteristics, latency needs, budget, and existing technology will ensure you select the most effective approach for your data processing needs.

Choose batch processing if you need to handle large volumes of historical data with periodic analysis and have budget constraints. Opt for streaming processing if real-time data analysis and immediate actions are crucial and you have the necessary budget and resources for more complex and high-performance infrastructure.

In my experience, streaming is needed only in very few scenarios. Most of the time, you can get away with batch processing.


FAQs

What is the main difference between batch processing and stream processing?

Batch processing collects and processes data in large chunks at scheduled intervals, ideal for tasks like generating reports and analyzing historical data. In contrast, stream processing handles data in real time as it flows through the system, making it suitable for applications that need immediate insights, such as fraud detection or live monitoring.

How do I decide whether to use batch or stream processing?

Your choice depends on several factors. Streaming processing is the way to go if you need real-time insights and immediate actions. If your project involves large volumes of data where real-time analysis is not critical, batch processing may be more appropriate. Consider your data characteristics, latency requirements, budget, and the existing technology stack.

What are some common use cases for batch processing?

Batch processing is commonly used for tasks that don’t require immediate results, such as generating monthly financial reports, performing large-scale data transformations, and managing data warehousing. It works well for periodic analysis and data aggregation, where processing can be scheduled during off-peak hours.

What challenges are associated with stream processing?

Streaming processing can be complex and costly. It requires specialized infrastructure to handle continuous data streams, high-performance computing resources, and ongoing monitoring. Additionally, managing real-time data flows and ensuring system reliability can be challenging, particularly when dealing with very high volumes of data.

How does the choice between batch and stream processing affect cost?

Batch processing is generally more cost-effective because it leverages existing infrastructure and runs jobs during off-peak hours, reducing the need for high-cost resources. Stream processing, however, often incurs higher costs due to the need for specialized, high-performance infrastructure and continuous data handling, which can be more expensive to maintain and scale.

I am a data scientist with experience in spatial analysis, machine learning, and data pipelines. I have worked with GCP, Hadoop, Hive, Snowflake, Airflow, and other data science/engineering processes.