Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service tailored for modern organizations. It empowers users to design, manage, and automate workflows that handle data movement and transformation tasks at an enterprise scale.

ADF stands out for its user-friendly, no-code interface, which allows both technical and non-technical users to build data pipelines easily. Its extensive integration capabilities support over 90 native connectors, enabling data flow across diverse sources, including on-premises systems and cloud-based services.

In this guide, I offer a comprehensive introduction to Azure Data Factory, covering its components and features and providing a hands-on tutorial to help you create your first data pipeline.

What is Azure Data Factory?

Azure Data Factory (ADF) is a cloud-based data integration service designed to orchestrate and automate data workflows.

It is used to collect, transform, and deliver data, ensuring that insights are readily accessible for analytics and decision-making.

With its scalable and serverless architecture, ADF can handle workflows of any size—from simple data migrations to complex data transformation pipelines.

ADF bridges the gap between data silos, enabling users to move and transform data between on-premises systems, cloud services, and external platforms. Whether you’re working with big data, operational databases, or APIs, Azure Data Factory provides the tools to connect, process, and unify data efficiently.

Features of Azure Data Factory

Here are some of the most important features that ADF offers.

1. Data integration

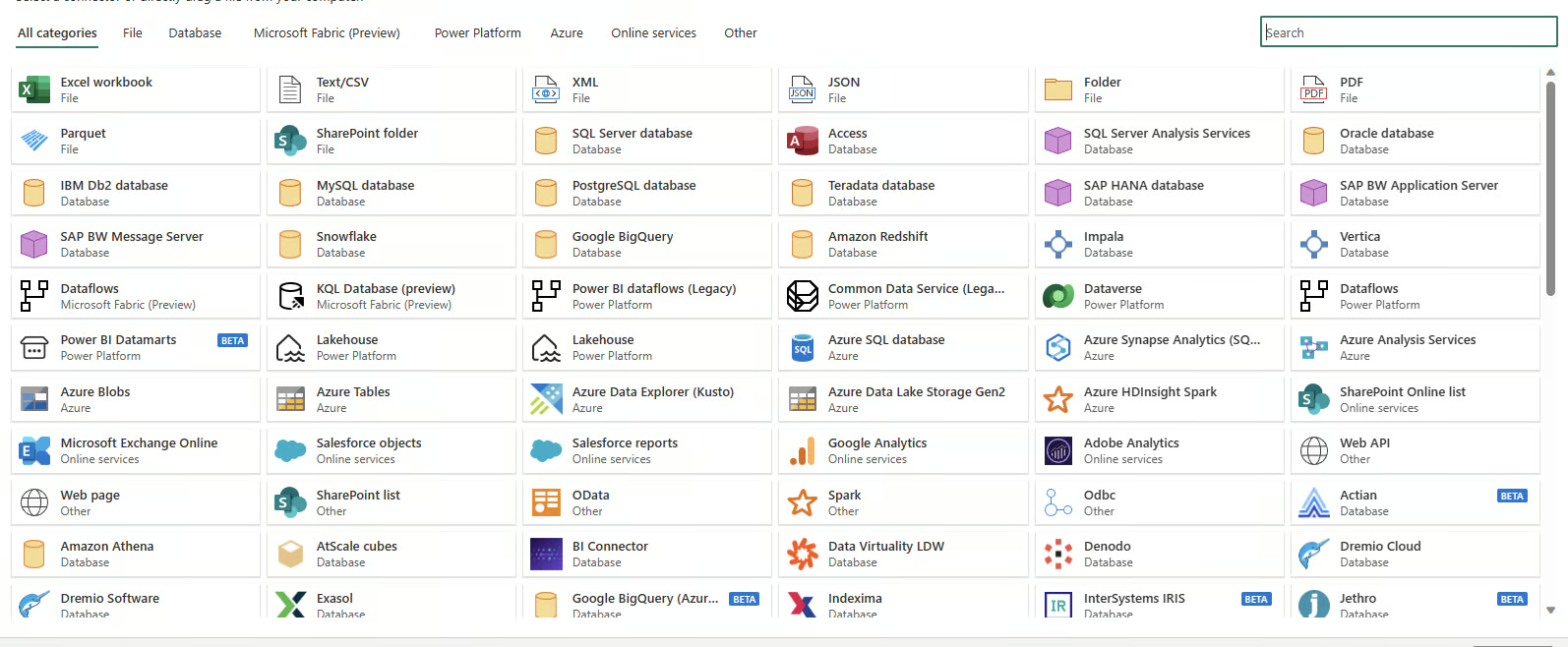

Azure Data Factory supports integration with over 90 native connectors, including cloud-based and on-premises systems. It includes support for SQL databases, NoSQL systems, REST APIs, and file-based data sources, allowing you to unify data workflows regardless of the source or format. It is the foundational engine that also powers the data integration capabilities in Microsoft Fabric, Microsoft's unified data platform.

Data connectors available in Azure Data Factory

2. No-code pipeline authoring

ADF’s drag-and-drop interface simplifies how users create data pipelines. With prebuilt templates, guided configuration wizards, and an intuitive visual editor, even users with no coding expertise can design comprehensive end-to-end workflows.

No-code authoring experience in Azure Data Factory

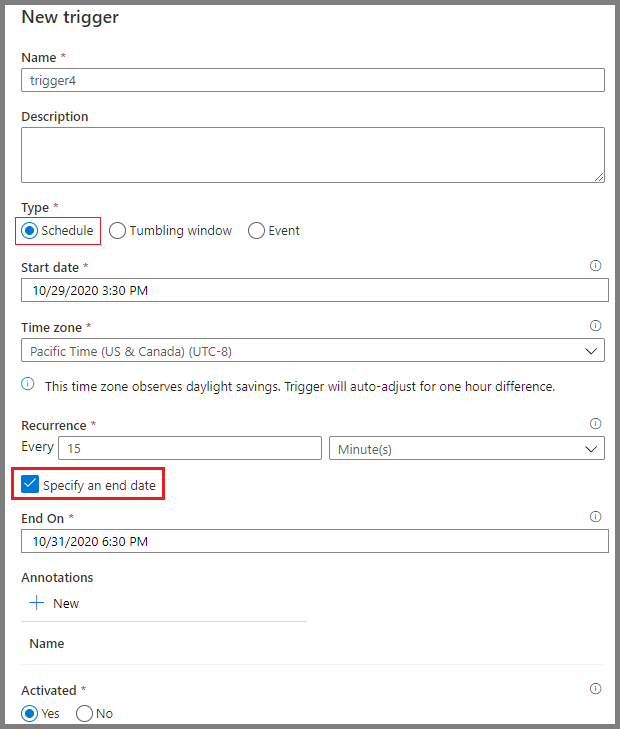

3. Scheduling

Azure Data Factory’s scheduling tools offer workflow automation. Users can set up triggers based on specific conditions, such as a file’s arrival in cloud storage or scheduled time intervals. These scheduling options eliminate the need for manual interventions and ensure workflows are executed consistently and reliably.

Scheduling pipelines in Azure Data Factory

Core Components of Azure Data Factory

Understanding the core components of Azure Data Factory is essential to building efficient workflows.

1. Pipelines

Pipelines are the backbone of Azure Data Factory. They represent data-driven workflows that define the steps required to move and transform data.

Each pipeline serves as a container for one or more activities, executed sequentially or in parallel, to achieve the desired data flow.

These pipelines enable data engineers to create end-to-end processes, such as ingesting raw data, transforming it into a usable format, and loading it into target systems.

Example of a simple pipeline in Azure Data Factory

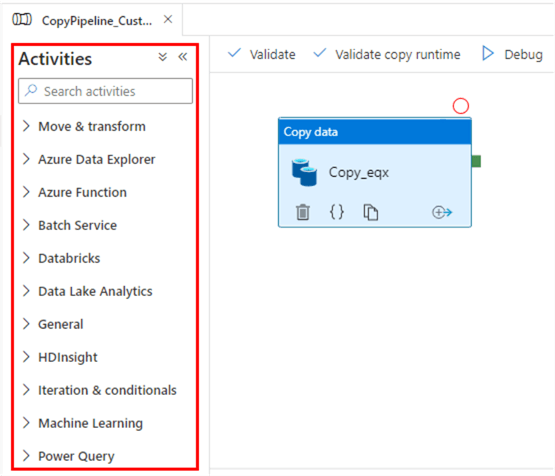
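
Behind the visual editor, a pipeline is stored as a JSON document. The sketch below builds a minimal definition in Python to show how ordering works: activities without a `dependsOn` entry can start right away (so they run in parallel), while `dependsOn` forces a sequence. The pipeline and activity names are hypothetical, and the `Wait` activities are only stand-ins for real work.

```python
import json


def wait_activity(name, seconds, depends_on=()):
    """Build a Wait activity; depends_on lists activities that must succeed first."""
    return {
        "name": name,
        "type": "Wait",
        "dependsOn": [
            {"activity": dep, "dependencyConditions": ["Succeeded"]}
            for dep in depends_on
        ],
        "typeProperties": {"waitTimeInSeconds": seconds},
    }


# Hypothetical pipeline: two ingest steps can run in parallel, and the final
# step starts only after both of them have succeeded.
pipeline = {
    "name": "DemoPipeline",
    "properties": {
        "activities": [
            wait_activity("IngestOrders", 5),
            wait_activity("IngestCustomers", 5),
            wait_activity(
                "BuildReport", 1, depends_on=["IngestOrders", "IngestCustomers"]
            ),
        ]
    },
}

print(json.dumps(pipeline, indent=2))
```

In the ADF interface, you can compare this with the JSON of your own pipelines using the code view button in the pipeline editor.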

2. Activities

Activities are the functional building blocks of pipelines, each performing a specific operation. They are broadly categorized into:

- Data movement activities: These activities facilitate data transfer between different storage systems. For example, the "Copy data" activity moves data from Azure Blob Storage to an Azure SQL Database.

- Data transformation activities: These activities allow you to manipulate or process data. For instance, data flows or custom scripts can be used to transform data formats, aggregate values, or cleanse datasets.

- Control flow activities: These manage the logical flow of execution within pipelines. Examples include conditional branching, loops, and parallel execution, which provide flexibility in handling complex workflows.

Activities in Azure Data Factory
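
As a rough illustration of a control flow activity, the following Python sketch builds the JSON for a `ForEach` loop that runs an inner activity once per item in a pipeline parameter. The parameter name `fileNames` and the activity names are made up for this example, and the inner `Wait` is a placeholder for a Copy data or transformation activity.

```python
import json


def for_each(name, items_expression, inner_activities, parallel=True, batch_count=4):
    """Build a ForEach control flow activity around the given inner activities."""
    type_props = {
        "isSequential": not parallel,
        "items": {"value": items_expression, "type": "Expression"},
        "activities": inner_activities,
    }
    if parallel:
        # batchCount caps how many iterations run at the same time.
        type_props["batchCount"] = batch_count
    return {"name": name, "type": "ForEach", "typeProperties": type_props}


# Placeholder inner activity (hypothetical name).
placeholder = {
    "name": "ProcessOneFile",
    "type": "Wait",
    "typeProperties": {"waitTimeInSeconds": 1},
}

loop = for_each("ForEachFile", "@pipeline().parameters.fileNames", [placeholder])
print(json.dumps(loop, indent=2))
```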

3. Datasets

Datasets are representations of the data utilized in activities. They define the schema, format, and location of the data being ingested or processed.

For instance, a dataset might describe a CSV file in Azure Blob Storage or a table in an Azure SQL Database. Datasets are the intermediary layer connecting activities to the actual data sources and destinations.

Datasets in Azure Data Factory

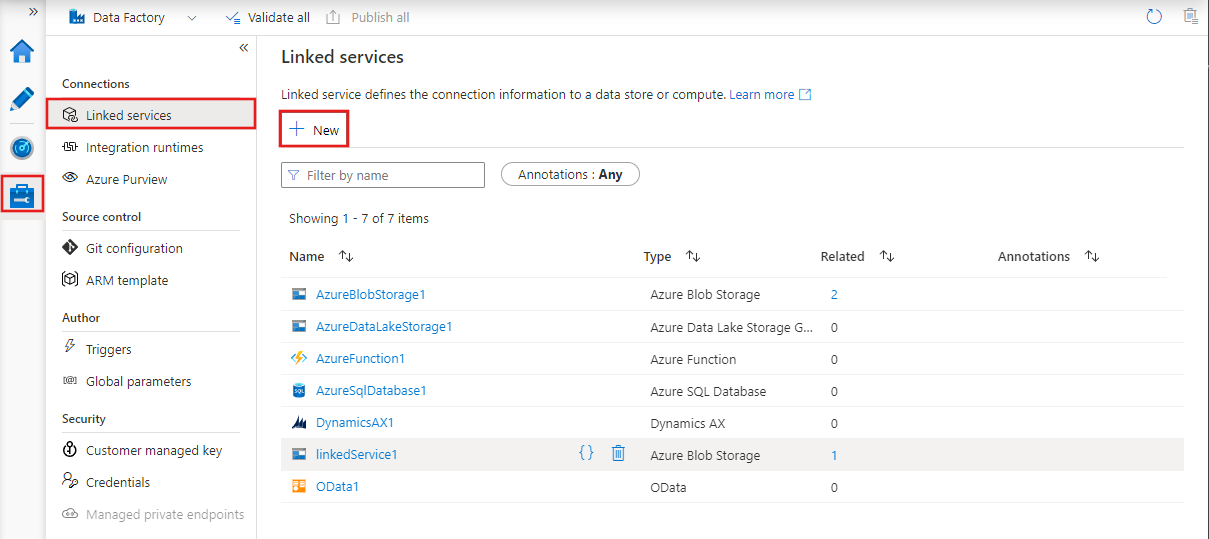

4. Linked services

Linked services work much like connection strings: they hold the connection information that activities and datasets need to access external systems and services.

They act as bridges between Azure Data Factory and the external resources it interacts with, such as databases, storage accounts, or compute environments.

For example, a linked service can connect to an on-premises SQL Server or a cloud-based data lake.

Linked services in Azure Data Factory

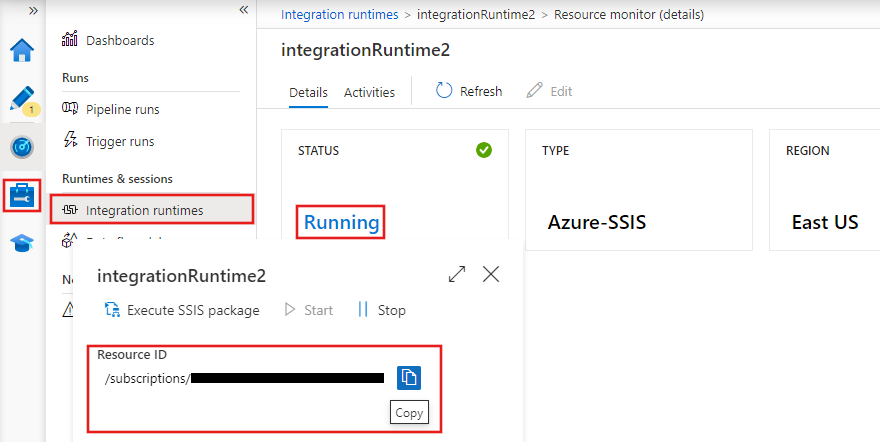

5. Integration runtimes

Integration runtimes (IRs) are the compute environments that power data movement, transformation, and activity execution within Azure Data Factory. ADF provides three types of integration runtimes:

- Azure IR: Handles cloud-based data integration tasks and is managed entirely by Azure.

- Self-hosted IR: Supports data movement between on-premises systems and the cloud, making it ideal for hybrid scenarios.

- SSIS IR: Enables the execution of SQL Server Integration Services (SSIS) packages within Azure, allowing you to reuse existing SSIS workflows in the cloud.

Integration runtimes in Azure Data Factory
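
To make the relationship between linked services and integration runtimes more concrete, here is a hedged sketch of how a linked service can be pinned to a specific runtime through its `connectVia` property. The runtime name `OnPremIR`, the linked service name, and the connection string placeholders are all hypothetical.

```python
import json

# Definition of a self-hosted integration runtime (the name is hypothetical).
self_hosted_ir = {
    "name": "OnPremIR",
    "properties": {
        "type": "SelfHosted",
        "description": "Runs on a machine inside the corporate network",
    },
}


def on_prem_sql_linked_service(name, ir_name):
    """Linked service for an on-premises SQL Server, routed through a self-hosted IR."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                # Placeholder values only; real credentials belong in a secret store.
                "connectionString": (
                    "Server=<server>;Database=<database>;"
                    "User ID=<user>;Password=<password>"
                )
            },
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


linked_service = on_prem_sql_linked_service("OnPremSqlServer", self_hosted_ir["name"])
print(json.dumps(linked_service, indent=2))
```

When `connectVia` is left out, the linked service falls back to the default Azure IR.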

Setting Up Azure Data Factory

Now, let’s move to the practical section of this guide!

1. Pre-requisites

1. An active Azure subscription.

2. A resource group for managing Azure resources.

2. Creating an Azure Data Factory instance

1. Log in to the Azure portal.

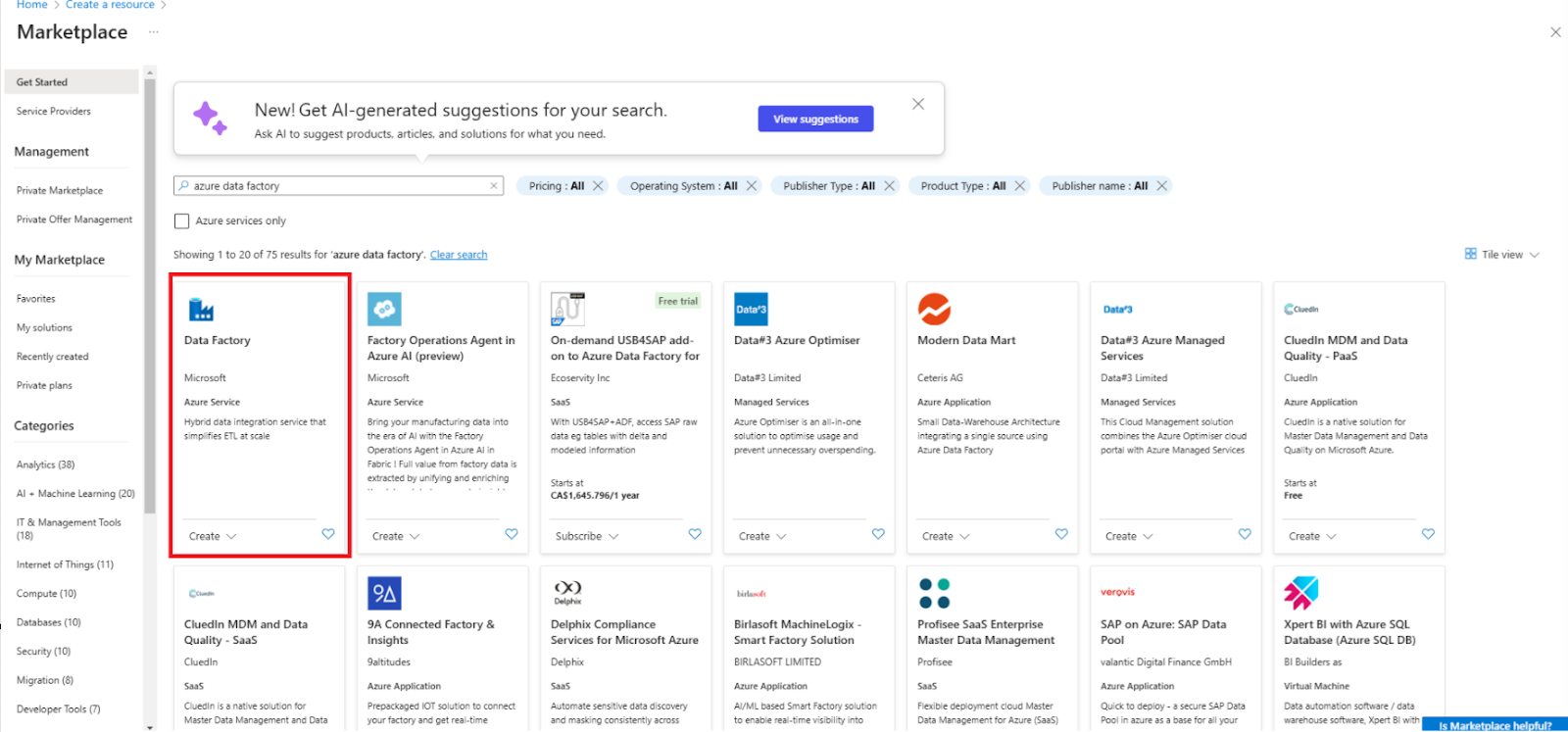

2. Navigate to Create a resource and select Data Factory.

Create a new Data Factory resource

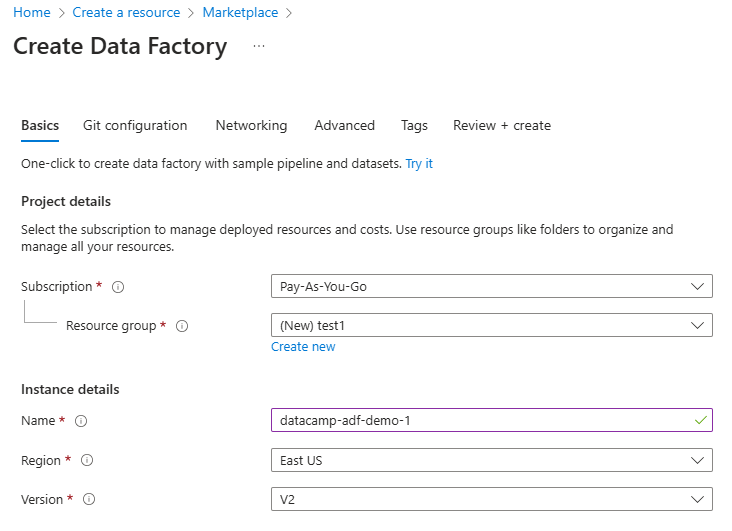

3. Fill out the required fields, including subscription, resource group, and region.

Configure Data Factory resource

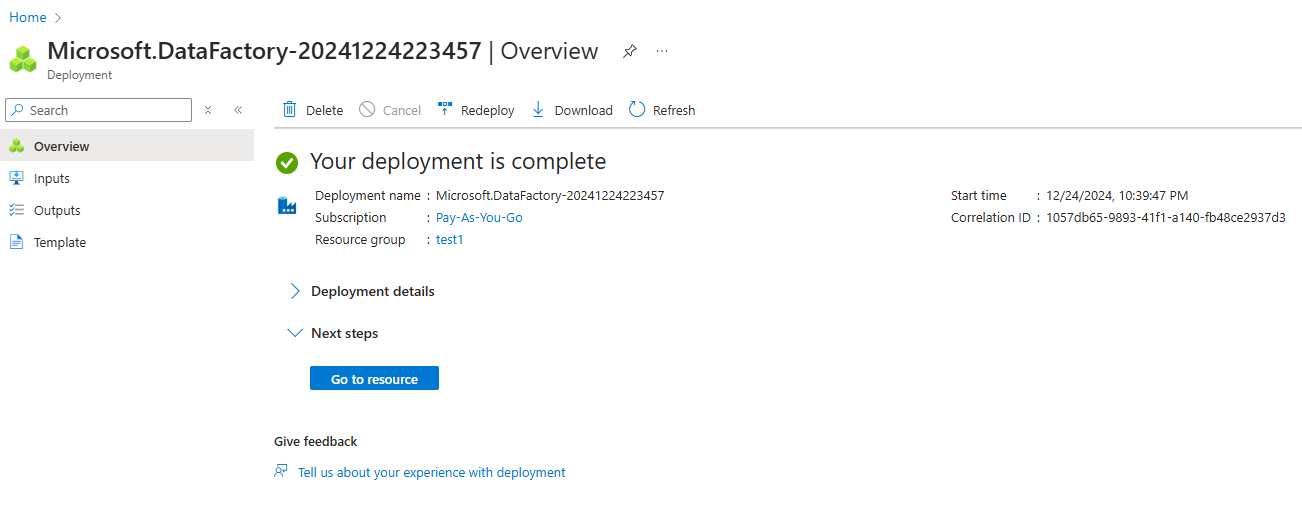

4. Review and create the instance.

Azure Data Factory instance created

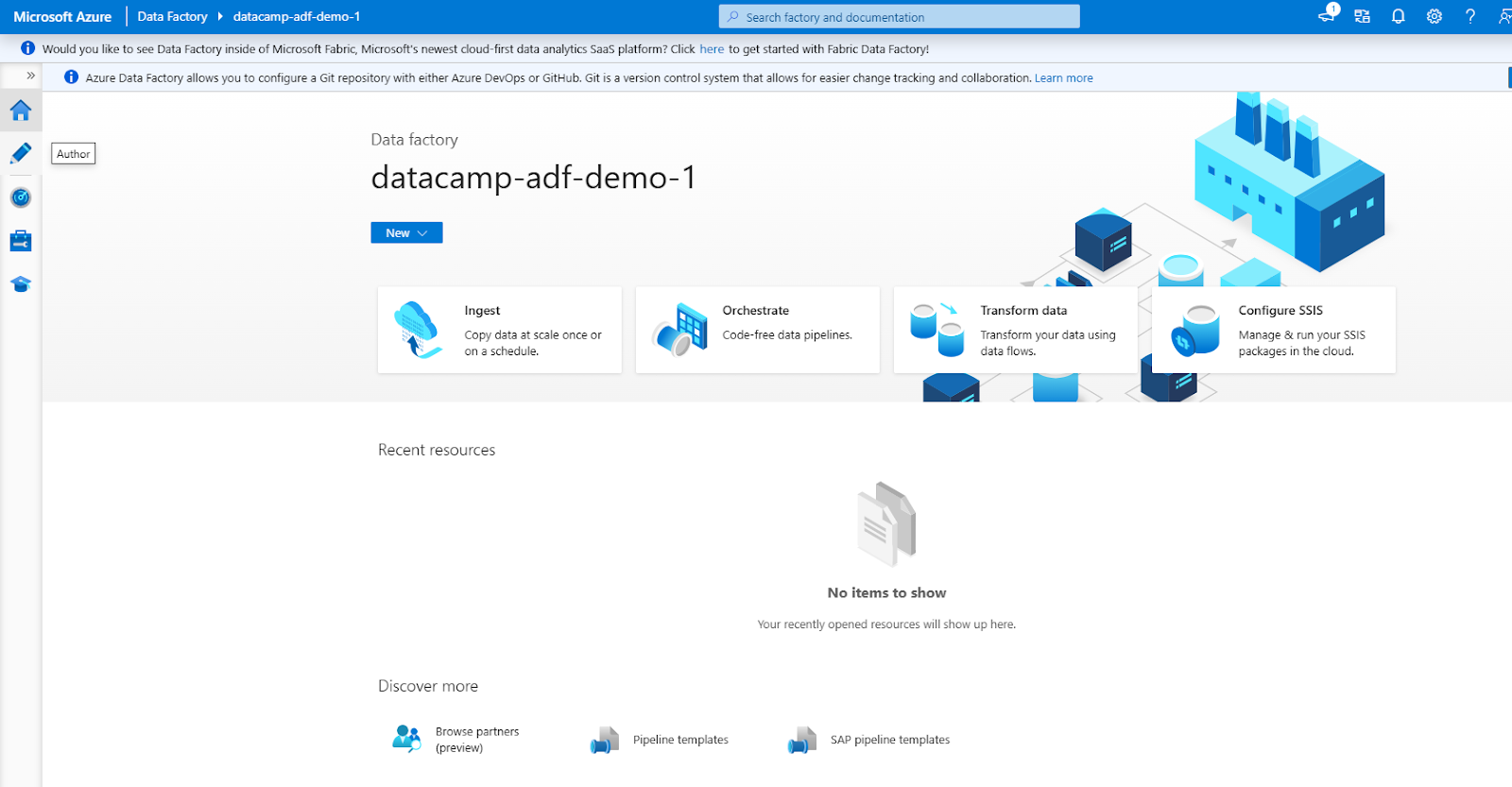
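
The portal steps above can also be captured as infrastructure-as-code. As a sketch, the Python snippet below assembles a minimal Azure Resource Manager (ARM) template for a Data Factory. The factory name and region are placeholder values, and a real deployment would typically add tags, networking, and Git configuration.

```python
import json


def data_factory_template(factory_name, location):
    """Return a minimal ARM template that declares one Data Factory resource."""
    return {
        "$schema": (
            "https://schema.management.azure.com/schemas/"
            "2019-04-01/deploymentTemplate.json#"
        ),
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.DataFactory/factories",
                "apiVersion": "2018-06-01",
                "name": factory_name,
                "location": location,
                # A system-assigned managed identity lets the factory authenticate
                # to other Azure services without stored credentials.
                "identity": {"type": "SystemAssigned"},
                "properties": {},
            }
        ],
    }


# Placeholder name and region for illustration.
template = data_factory_template("my-demo-adf", "eastus")
print(json.dumps(template, indent=2))
```

Saved to a file, a template like this could be deployed into the resource group from the prerequisites, for example with `az deployment group create`.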

3. Navigating the ADF interface

The ADF interface consists of the following main sections, accessible via the left-hand navigation menu:

1. Author: For creating and managing pipelines.

2. Monitor: To track pipeline runs and troubleshoot issues.

3. Manage: For configuring linked services and integration runtimes.

Azure Data Factory Interface

Building Your First Pipeline in Azure Data Factory

Let’s walk through the steps to create a simple data pipeline.

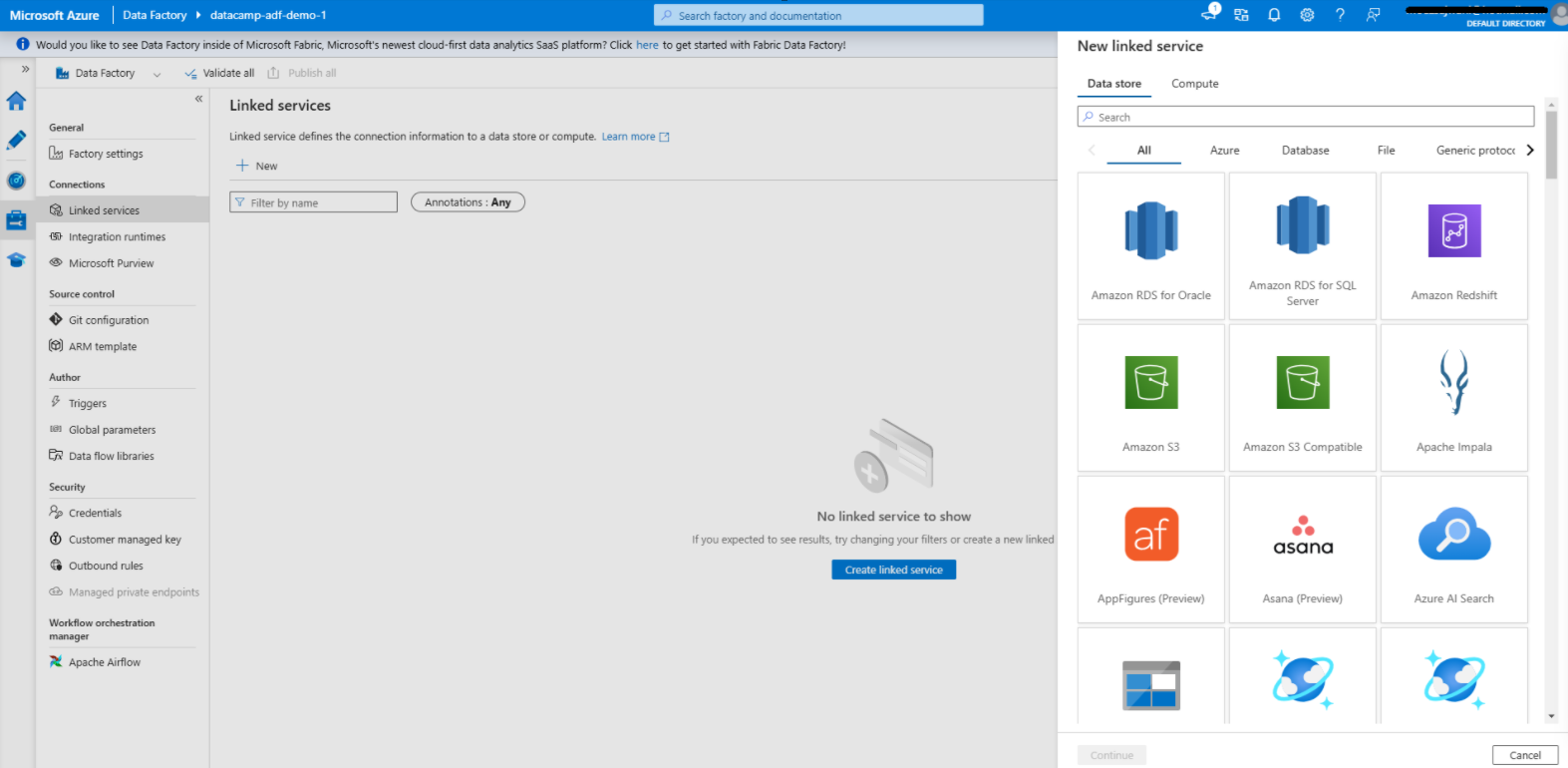

Step 1: Create linked services

Creating a linked service in Azure Data Factory

1. Navigate to the Manage tab

- Open your Azure Data Factory instance, and go to the Manage tab in the ADF interface. This is where you define linked services, which connect your data sources and destinations.

2. Add a linked service for the data source

- Click on Linked services under the Manage tab.

- Select + New to create a new linked service.

- From the list of available options, select the data source you want to connect to, such as Azure Blob Storage.

- Provide the required connection details, such as the storage account name and authentication method (e.g., account key or managed identity).

- Test the connection to ensure everything is set up correctly, and click Create.

3. Add a linked service for the data destination

- Repeat the process for the data destination, such as Azure SQL Database.

- Select the appropriate destination type, configure the connection settings (e.g., server name, database name, and authentication method), and test the connection.

- Once verified, save the linked service.

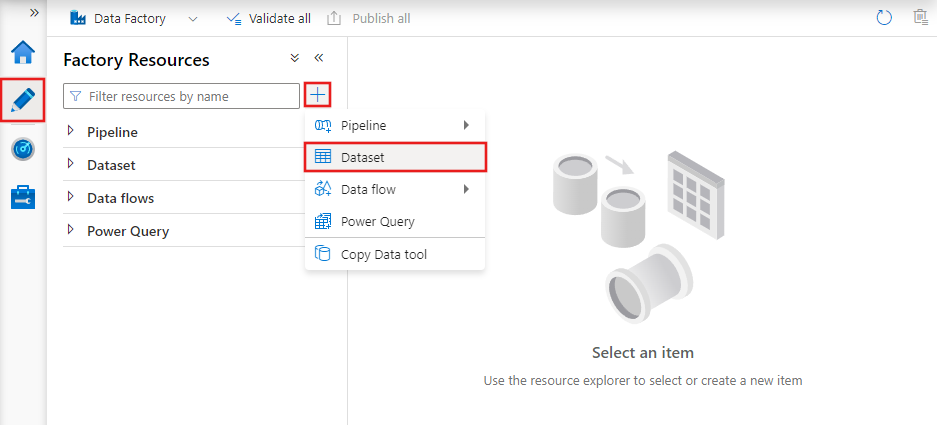

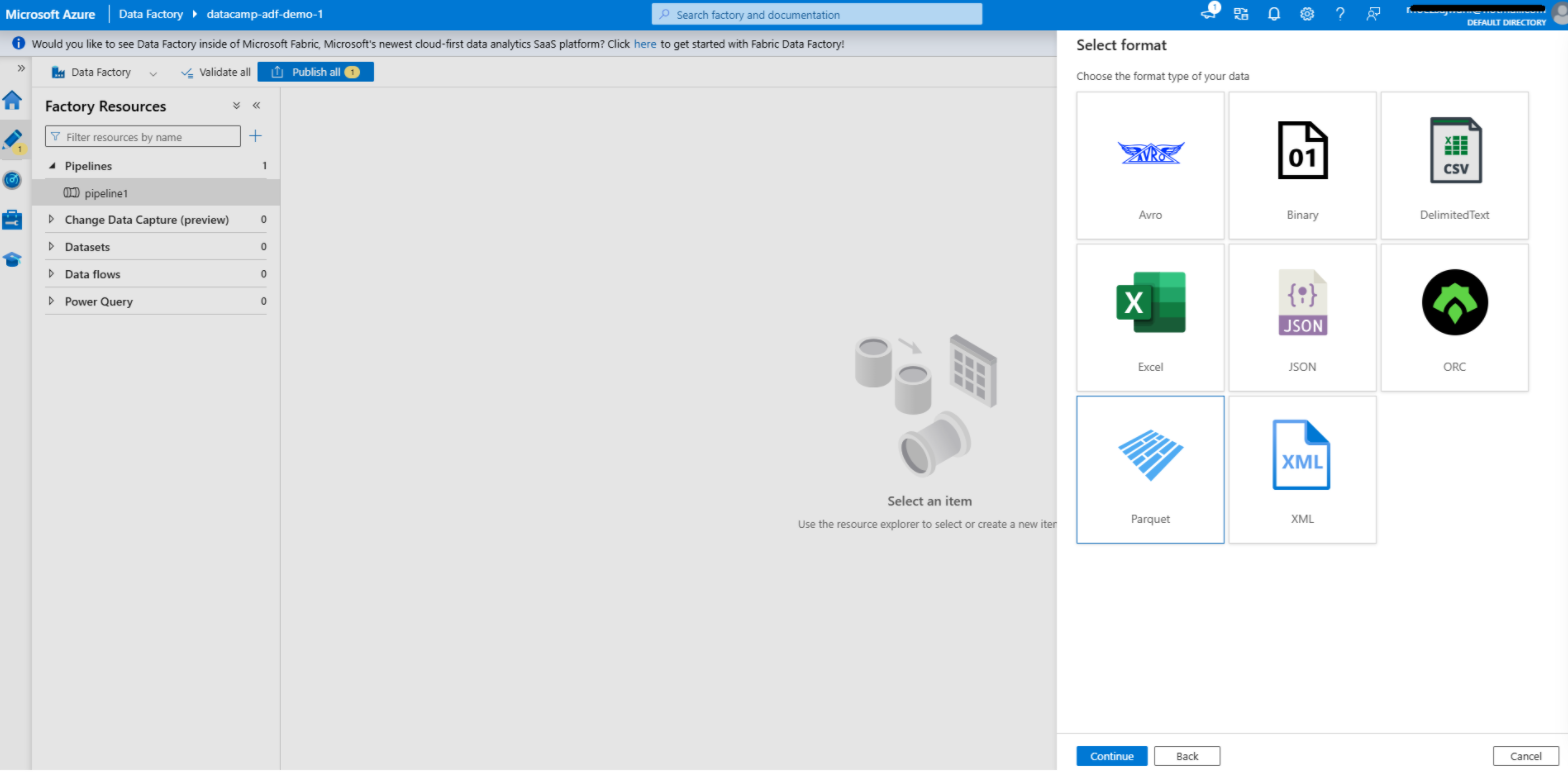
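
For reference, the two linked services from this step could look roughly like the JSON built below. This is a sketch that assumes managed identity authentication for both stores; the linked service names, storage account, server, and database are hypothetical, and the exact properties vary with the connector version and authentication method you pick.

```python
import json


def blob_linked_service(name, account_name):
    """Azure Blob Storage linked service using the factory's managed identity."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                "serviceEndpoint": f"https://{account_name}.blob.core.windows.net/"
            },
        },
    }


def azure_sql_linked_service(name, server, database):
    """Azure SQL Database linked service; no credentials in the connection string."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                "connectionString": (
                    f"Data Source=tcp:{server}.database.windows.net,1433;"
                    f"Initial Catalog={database};Connection Timeout=30"
                )
            },
        },
    }


# Hypothetical resource names for illustration.
source_ls = blob_linked_service("BlobStorageLS", "mystorageaccount")
sink_ls = azure_sql_linked_service("AzureSqlLS", "myserver", "salesdb")
print(json.dumps([source_ls, sink_ls], indent=2))
```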

Step 2: Create a dataset

Creating a dataset in Azure Data Factory

1. Navigate to the Author tab

- Open the Author tab in your Azure Data Factory interface. This is where you design and manage your pipelines, datasets, and other workflow components.

2. Add a dataset for the source

- Click on the + button and select Dataset from the dropdown menu.

- Choose the data store that matches your source linked service, then pick the file format. For example, if your source is Azure Blob Storage, select Azure Blob Storage and then a format such as DelimitedText or Parquet.

- Configure the dataset:

  - Linked service: Select the linked service you created earlier for the data source.

  - File path: Specify the path or container where your source data resides.

  - Schema and format: Define the data format (e.g., CSV, JSON) and import the schema if applicable. This allows ADF to understand the structure of your data.

- Click OK to save the dataset.

3. Add a dataset for the destination

- Repeat the process for the destination dataset.

- Choose the data store that matches your destination linked service. For example, if your destination is Azure SQL Database, select Azure SQL Database; the dataset then points to a table in that database.

- Configure the dataset:

  - Linked service: Select the linked service you created for the destination.

  - Table name or path: Specify the table or destination path where the data will be written.

  - Schema: Optionally define or import the schema for the destination dataset to ensure compatibility with the source data.

- Save the dataset.

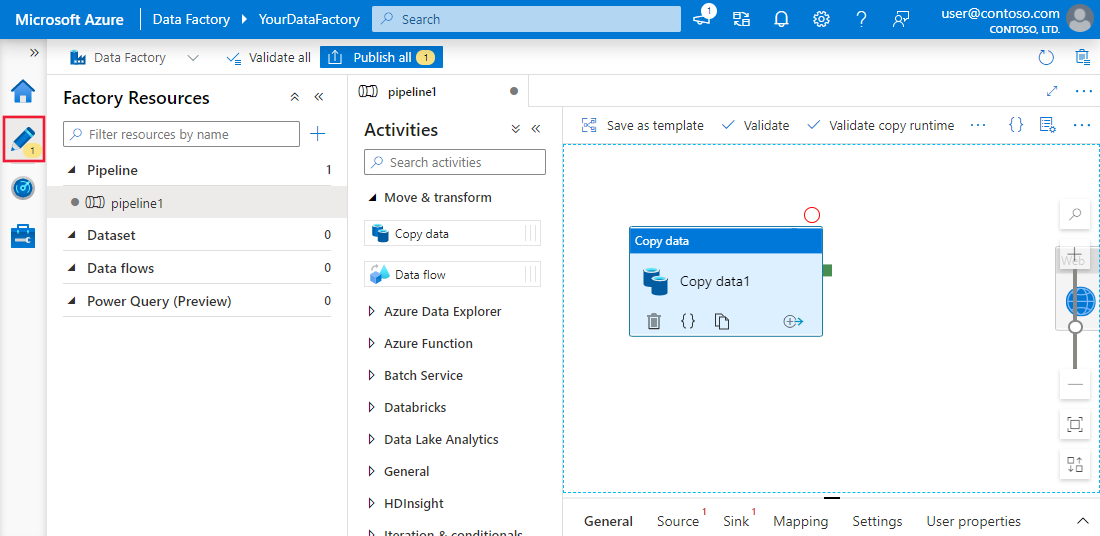

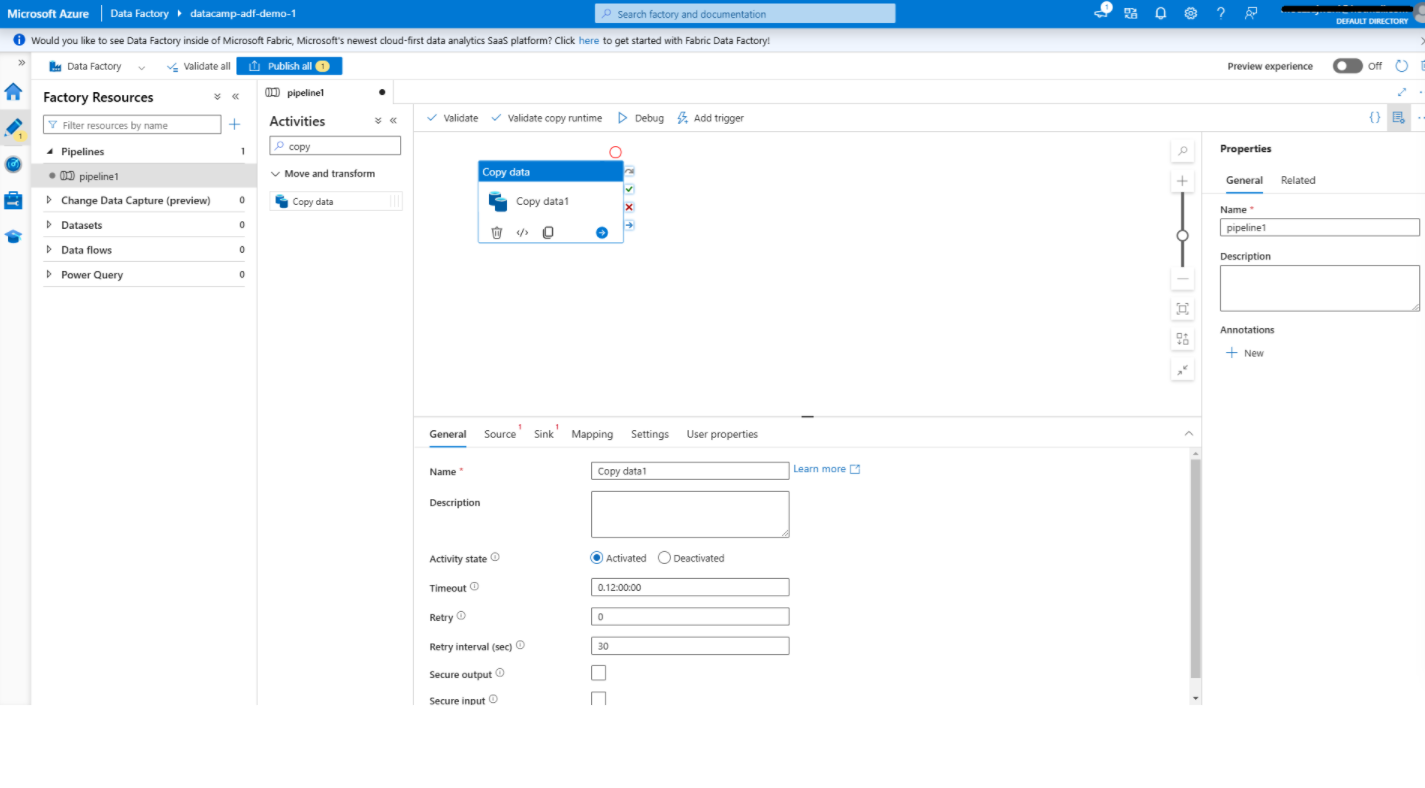
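
The datasets configured in this step translate into small JSON definitions that point at a linked service and describe where the data lives. The sketch below assumes a CSV file in Blob Storage as the source and an Azure SQL table as the destination; the dataset names, linked service names, container, file, and table are hypothetical.

```python
import json


def linked_service_ref(name):
    """Reference to an existing linked service by name."""
    return {"referenceName": name, "type": "LinkedServiceReference"}


# Source: a delimited text (CSV) file in a blob container.
source_dataset = {
    "name": "SourceCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": linked_service_ref("BlobStorageLS"),
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "input",
                "fileName": "sales.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

# Destination: a table in Azure SQL Database.
sink_dataset = {
    "name": "SinkTable",
    "properties": {
        "type": "AzureSqlTable",
        "linkedServiceName": linked_service_ref("AzureSqlLS"),
        "typeProperties": {"schema": "dbo", "table": "Sales"},
    },
}

print(json.dumps([source_dataset, sink_dataset], indent=2))
```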

Step 3: Add activities

Adding a copy data activity in Azure Data Factory

1. Open the Pipeline editor

- In the Author tab, create a new pipeline by clicking + and selecting Pipeline.

- This will open the pipeline editor, a visual interface where you can design your data workflows.

2. Add the copy data activity

- From the toolbox on the left, locate the Copy data activity under the Move & Transform category.

- Drag the Copy data activity onto the canvas. This activity moves data from the source to the destination.

3. Configure the copy data activity

- Click on the Copy data activity to open its settings pane.

- Under the Source tab:

  - Select the source dataset you created earlier.

  - Configure additional options such as file or folder filters if needed.

- Under the Sink tab:

  - Select the destination dataset.

  - Specify any additional settings, such as how to handle existing data in the destination (e.g., overwrite or append).

- Use the Mapping tab to align the fields or columns from the source to the destination, ensuring data compatibility.

- Save your configuration.

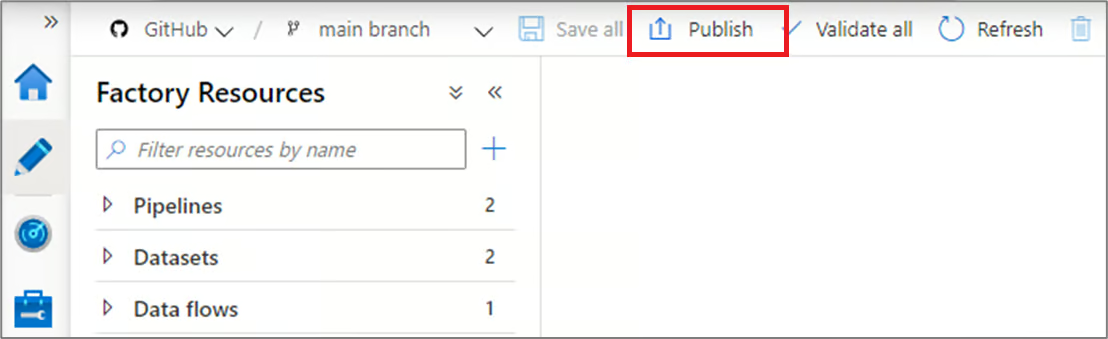
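
The Source, Sink, and Mapping tabs described above each correspond to a part of the Copy activity's JSON. The following sketch shows one plausible shape for a CSV-to-SQL copy; the activity, dataset, and column names are hypothetical, and the source and sink types assume a delimited text source and an Azure SQL sink.

```python
import json


def copy_activity(name, source_dataset, sink_dataset, column_map):
    """Build a Copy activity that maps source columns to sink columns."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Source tab
            "source": {"type": "DelimitedTextSource"},
            # Sink tab: "insert" appends rows to the destination table.
            "sink": {"type": "AzureSqlSink", "writeBehavior": "insert"},
            # Mapping tab
            "translator": {
                "type": "TabularTranslator",
                "mappings": [
                    {"source": {"name": src}, "sink": {"name": dst}}
                    for src, dst in column_map.items()
                ],
            },
        },
    }


# Hypothetical dataset and column names.
activity = copy_activity(
    "CopyCsvToSql",
    "SourceCsv",
    "SinkTable",
    {"order_id": "OrderId", "amount": "Amount"},
)
pipeline = {"name": "CopySalesPipeline", "properties": {"activities": [activity]}}
print(json.dumps(pipeline, indent=2))
```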

Step 4: Publish and run the pipeline

Publishing pipelines in Azure Data Factory

1. Publish your pipeline

- Once your pipeline is configured, click Publish in the toolbar.

- This saves your pipeline to the Data Factory service and makes it available to triggers. Unpublished changes remain drafts: you can still test them with Debug, but triggered runs use only the published version.

2. Run the pipeline

- To test your pipeline, click Add Trigger at the top and select Trigger Now for a manual run. This allows you to verify that the pipeline is functioning as expected.

- Alternatively, set up an automated schedule:

  - Open Triggers under the Manage tab (or choose Add Trigger and then New/Edit in the pipeline editor) and create a new trigger.

  - Define the trigger conditions, such as a time-based schedule (e.g., every day at 8:00 AM) or an event-based condition (e.g., file arrival in Azure Blob Storage).

  - Associate the trigger with your pipeline to enable automation.
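
To show what the two trigger types look like once saved, here is a sketch of a daily 8:00 AM schedule trigger and a blob-created event trigger. The trigger names, pipeline name, start time, container path, and storage account resource ID are placeholders I made up for illustration.

```python
import json


def pipeline_ref(pipeline_name):
    """Attach a trigger to a pipeline by name."""
    return [{"pipelineReference": {"referenceName": pipeline_name,
                                   "type": "PipelineReference"}}]


def daily_trigger(name, pipeline_name, hour, minute=0,
                  start_time="2025-01-01T00:00:00Z"):
    """Schedule trigger that fires once a day at hour:minute (UTC)."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,  # placeholder start date
                    "timeZone": "UTC",
                    "schedule": {"hours": [hour], "minutes": [minute]},
                }
            },
            "pipelines": pipeline_ref(pipeline_name),
        },
    }


def blob_created_trigger(name, pipeline_name, storage_account_id, path_prefix):
    """Event trigger that fires when a blob is created under path_prefix."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "blobPathBeginsWith": path_prefix,
                "ignoreEmptyBlobs": True,
                "events": ["Microsoft.Storage.BlobCreated"],
                "scope": storage_account_id,
            },
            "pipelines": pipeline_ref(pipeline_name),
        },
    }


schedule = daily_trigger("DailyAt8", "CopySalesPipeline", hour=8)
on_arrival = blob_created_trigger(
    "OnNewSalesFile", "CopySalesPipeline",
    "<storage-account-resource-id>", "/input/blobs/sales/",
)
print(json.dumps([schedule, on_arrival], indent=2))
```

Keep in mind that a trigger only fires after it has been published and started.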

Azure Data Factory Integration and Transformation Capabilities

Azure Data Factory offers powerful data integration and transformation features that simplify complex workflows and enhance productivity. In this section, we will review these features.

1. Data flows

Data flows provide a visual environment for defining transformation logic, making it easier for users to manipulate and process data without needing to write complex code. Common tasks performed with data flows include:

- Aggregations: Summarize data to extract meaningful insights, such as calculating total sales or average performance metrics.

- Joins: Combine data from multiple sources to create enriched datasets for downstream processes.

- Filters: Select specific subsets of data based on defined criteria, helping to focus on relevant information.

Data flows also support advanced operations like column derivations, data type conversions, and conditional transformations, making them versatile tools for handling diverse data requirements.

2. Integration with Azure Synapse Analytics

ADF integrates seamlessly with Azure Synapse Analytics, providing a unified platform for big data processing and advanced analytics. This integration enables users to:

- Orchestrate end-to-end data workflows that include data ingestion, preparation, and analytics.

- Leverage Synapse’s powerful query engine to process large datasets efficiently.

- Create data pipelines that feed directly into Synapse Analytics for machine learning and reporting use cases.

This synergy between ADF and Synapse helps streamline workflows and reduces the complexity of managing separate tools for data integration and analysis.

3. Scheduling and monitoring pipelines

- Scheduling: As mentioned, ADF's scheduling capabilities offer robust automation features. Users can define triggers based on time intervals (e.g., hourly, daily) or events (e.g., the arrival of a file in Azure Blob Storage).

- Monitoring: The Monitor tab in Azure Data Factory, combined with Azure Monitor, provides real-time tracking and diagnostics for pipeline executions. Users can view detailed logs, track progress, and quickly identify bottlenecks or failures. Alerts and notifications can also be configured easily.

Azure Data Factory Use Cases

After an in-depth review of ADF’s features and components, let’s see what we could use it for.

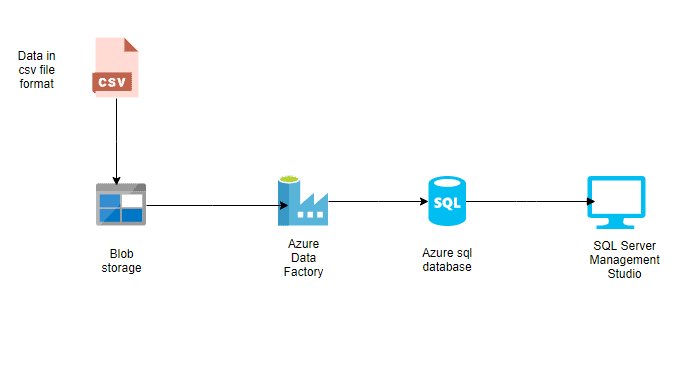

1. Data migration

ADF is a powerful tool for migrating data from on-premises systems to cloud-based platforms. It simplifies complex migrations by automating data movement, ensuring data integrity, and minimizing downtime.

For instance, you can use ADF to migrate data from an on-premises SQL Server to an Azure SQL Database with minimal manual intervention. By leveraging built-in connectors and integration runtimes, ADF ensures a secure and efficient migration process, accommodating both structured and unstructured data.

2. ETL for data warehousing

Extract, transform, and load (ETL) processes are at the heart of modern data warehousing. Azure Data Factory streamlines these workflows by integrating data from multiple sources, applying transformation logic, and loading it into a data warehouse.

For example, ADF can consolidate sales data from different regions, transform it into a unified format, and load it into Azure Synapse Analytics. This streamlined process enables you to maintain up-to-date, high-quality data for reporting and decision-making.

Check out 23 Best ETL Tools in 2024 and why to choose them.

3. Data integration for data lakes

Data lakes serve as a central repository for diverse datasets, enabling advanced analytics and machine learning. ADF facilitates ingesting data from various sources into Azure Data Lake Storage. Its pipelines are batch-oriented, so near-real-time scenarios are usually handled with event-based triggers or frequent schedules rather than true streaming.

For instance, you can use ADF to collect log files, social media feeds, and IoT sensor data into a single data lake. By providing data transformation and integration tools, ADF ensures the data lake is well-organized and ready for downstream analytics and AI workloads.

Best Practices for Using Azure Data Factory

Lastly, it’s worth reviewing some best practices for using ADF effectively.

1. Modular pipeline design

To create maintainable and scalable workflows, design pipelines with reusable components. Modular design allows for easier debugging, testing, and updating of individual pipeline sections. For example, instead of embedding data transformation logic in every pipeline, create a reusable pipeline that can be invoked across multiple workflows. This reduces redundancy and enhances consistency across projects.
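
One way to apply this idea is the Execute Pipeline activity, which lets a parent pipeline call a shared child pipeline with different parameters. The sketch below assumes a hypothetical reusable pipeline named `CleanseData` that accepts a `tableName` parameter; all names are illustrative.

```python
import json


def execute_pipeline(name, child_pipeline, parameters=None, depends_on=()):
    """Build an Execute Pipeline activity that invokes a reusable child pipeline."""
    return {
        "name": name,
        "type": "ExecutePipeline",
        "dependsOn": [
            {"activity": dep, "dependencyConditions": ["Succeeded"]}
            for dep in depends_on
        ],
        "typeProperties": {
            "pipeline": {"referenceName": child_pipeline, "type": "PipelineReference"},
            "parameters": parameters or {},
            # Wait for the child to finish before the parent continues.
            "waitOnCompletion": True,
        },
    }


# The same child pipeline is reused for two tables instead of duplicating logic.
parent = {
    "name": "NightlyLoad",
    "properties": {
        "activities": [
            execute_pipeline("CleanseOrders", "CleanseData", {"tableName": "Orders"}),
            execute_pipeline(
                "CleanseCustomers",
                "CleanseData",
                {"tableName": "Customers"},
                depends_on=["CleanseOrders"],
            ),
        ]
    },
}

print(json.dumps(parent, indent=2))
```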

2. Optimize data movement

- Use compression: To minimize data transfer times and reduce network bandwidth usage, compress large datasets before moving them. For instance, using gzip or similar methods can significantly speed up the movement of large files.

- Select the right integration runtime: The choice of integration runtime (Azure IR, Self-hosted IR, or SSIS IR) is critical in optimizing performance. For example, self-hosted IR can be used for on-premises data movement to ensure secure and efficient transfers, while Azure IR is ideal for cloud-native operations.
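
To get a feel for the compression tip above, this small standalone experiment gzips a synthetic CSV payload and compares sizes. The data is made up and deliberately repetitive, so real-world ratios will differ; the point is only to show how you might measure the effect before moving files.

```python
import gzip

# Synthetic, highly repetitive CSV data (illustrative only).
rows = ["order_id,region,amount"]
rows += [f"{i},EMEA,{i % 100}.00" for i in range(10_000)]
raw = "\n".join(rows).encode("utf-8")

compressed = gzip.compress(raw)
ratio = len(compressed) / len(raw)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes, ratio: {ratio:.2f}")

# Compression is lossless: decompressing returns the original bytes.
restored = gzip.decompress(compressed)
```

In ADF itself, compression is normally configured on file-based datasets (for example, a compression type on a delimited text dataset) rather than in custom code.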

3. Implement robust error handling

- Retry policies: Configure retry policies for transient errors, such as temporary network disruptions or server timeouts. This ensures pipelines can recover and complete successfully without manual intervention.

- Set up alerts: Implement alerts and notifications to proactively inform your team of pipeline failures or performance issues. Use tools like Azure Monitor to configure custom alerts based on specific error types or execution delays, ensuring quick resolution and minimal downtime.
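
The retry policy mentioned above is set per activity. As a sketch, the helper below attaches a `policy` block to an existing activity definition; the retry count, interval, and timeout are arbitrary example values, and the activity itself is a placeholder.

```python
import json


def with_retry(activity, retries=3, interval_seconds=60, timeout="0.01:00:00"):
    """Return a copy of the activity with a retry policy attached."""
    updated = dict(activity)  # shallow copy so the input is left unchanged
    updated["policy"] = {
        "retry": retries,                            # attempts after the first failure
        "retryIntervalInSeconds": interval_seconds,  # pause between attempts
        "timeout": timeout,                          # d.hh:mm:ss, here one hour
    }
    return updated


# Placeholder activity (hypothetical name, no real source or sink configured).
copy_step = {"name": "CopyCsvToSql", "type": "Copy", "typeProperties": {}}
resilient_step = with_retry(copy_step, retries=2, interval_seconds=30)

print(json.dumps(resilient_step, indent=2))
```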

So, how is Azure Data Factory different from Databricks? If you are curious about the differences, check out the blog post Azure Data Factory vs Databricks: A Detailed Comparison.

Azure Data Factory vs. Microsoft Fabric

As you master Azure Data Factory, it is critical to understand its evolution: Microsoft Fabric.

While Azure Data Factory (ADF) remains a robust, standalone Platform-as-a-Service (PaaS) solution widely used in enterprises, Microsoft has introduced Fabric as the future of its data ecosystem. Fabric is an all-in-one SaaS platform that unifies Data Factory, Synapse Analytics, and Power BI into a single environment.

Should you use ADF or Fabric?

- Stick with ADF if: You need a mature, highly customizable PaaS solution with deep integration into legacy on-premises systems, or if you require granular control over infrastructure (like dedicated Integration Runtimes).

- Look at Fabric if: You are building a new modern data platform and want a unified experience where data engineering, data warehousing, and Power BI visualization happen in one workspace without moving data (thanks to OneLake).

Note: ADF pipelines and Fabric Data Factory pipelines are highly similar, so the skills you learn in ADF today are directly transferable to Fabric. You can take our Introduction to Microsoft Fabric course to learn more.

Conclusion

Azure Data Factory simplifies the process of building, managing, and scaling data pipelines in the cloud. It provides an intuitive platform that caters to both technical and non-technical users, enabling them to integrate and transform data from various sources efficiently.

By leveraging its features, such as code-free pipeline authoring, integration capabilities, and monitoring tools, users can easily create scalable and reliable workflows.

To learn more about Azure Data Factory, I recommend checking out the Top 27 Azure Data Factory Interview Questions and Answers.

If you want to explore Azure's backbone, including topics like containers, virtual machines, and more, my recommendation is this amazing free course on Understanding Microsoft Azure Architecture and Services.

Azure Data Factory FAQs

Is Azure Data Factory an ETL or ELT tool?

It supports both. ADF is traditionally used for ELT (Extract, Load, Transform) where raw data is loaded into a cloud destination before processing. However, with Mapping Data Flows, it provides full visual ETL capabilities, allowing you to transform data in-flight without writing code.

What is the difference between Azure Data Factory and Microsoft Fabric?

Azure Data Factory (ADF) is a standalone PaaS (Platform as a Service) tool focused purely on data integration. Microsoft Fabric is a unified SaaS (Software as a Service) platform that includes Data Factory capabilities alongside Power BI, Synapse, and Data Science tools in a single environment.

Do I need coding skills to use Azure Data Factory?

No. ADF is primarily a low-code/no-code platform with a drag-and-drop interface for building pipelines. However, knowing SQL is highly recommended for database interactions, and Python is useful if you plan to use advanced orchestration features like Airflow.

How does Azure Data Factory pricing work?

ADF uses a pay-as-you-go consumption model. You are not charged a monthly flat fee; instead, costs are calculated based on the number of activity runs, data movement hours, and the duration of data flow execution. This makes it cost-effective for both small and large workloads.
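
As a back-of-the-envelope illustration of this model, the function below adds up the three cost drivers. It is a simplified sketch: I assume data movement is metered in DIU-hours and data flows in vCore-hours, and the rates are placeholders, not real Azure prices, so always check the official pricing page for current figures.

```python
def estimate_monthly_cost(activity_runs, diu_hours, data_flow_vcore_hours, rates):
    """Sum orchestration, data movement, and data flow charges for one month."""
    orchestration = activity_runs / 1000 * rates["per_1000_activity_runs"]
    movement = diu_hours * rates["per_diu_hour"]
    data_flows = data_flow_vcore_hours * rates["per_vcore_hour"]
    return round(orchestration + movement + data_flows, 2)


# Placeholder rates for illustration only; these are NOT actual Azure prices.
example_rates = {
    "per_1000_activity_runs": 1.00,
    "per_diu_hour": 0.25,
    "per_vcore_hour": 0.30,
}

# Hypothetical month: 3,000 activity runs, 10 DIU-hours, 20 vCore-hours.
cost = estimate_monthly_cost(3000, 10, 20, example_rates)
print(f"Estimated monthly cost: {cost}")
```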

Can Azure Data Factory connect to on-premises data?

Yes. You can securely connect to on-premises servers (like SQL Server, Oracle, or file systems) by installing the Self-Hosted Integration Runtime on a local machine within your network. It acts as a secure gateway to the cloud that relies on outbound connections, so you do not need to open inbound firewall ports.

What is the difference between ADF and Databricks?

ADF is an orchestrator designed to schedule and manage workflows. Databricks is a compute engine optimized for heavy data processing using Spark and Python. In many architectures, ADF triggers Databricks notebooks to perform complex transformations.
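
For instance, a pipeline can hand work to Databricks through a notebook activity. The sketch below shows roughly what such an activity looks like; the linked service name, notebook path, and the `runDate` parameter are hypothetical.

```python
import json

# Hypothetical activity that runs a Databricks notebook from an ADF pipeline.
notebook_activity = {
    "name": "TransformWithDatabricks",
    "type": "DatabricksNotebook",
    "linkedServiceName": {
        "referenceName": "DatabricksLS",
        "type": "LinkedServiceReference",
    },
    "typeProperties": {
        "notebookPath": "/Shared/transform_sales",
        # Values passed to the notebook; here taken from a pipeline parameter.
        "baseParameters": {
            "run_date": {
                "value": "@pipeline().parameters.runDate",
                "type": "Expression",
            }
        },
    },
}

print(json.dumps(notebook_activity, indent=2))
```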

How does ADF handle security?

Azure Data Factory offers enterprise-grade security, including Managed Identity for seamless authentication without managing credentials, support for Azure Key Vault to store secrets, and Private Endpoints (via Azure Private Link) to ensure data traffic never enters the public internet.
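
As an example of the Key Vault integration, a linked service can reference a secret instead of embedding a password. The sketch below assumes SQL authentication for an Azure SQL Database and a Key Vault linked service named `KeyVaultLS`; the names and the connection string placeholders are hypothetical.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Reference to a secret stored in Azure Key Vault."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


sql_linked_service = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # No password here: it is resolved from Key Vault at run time.
            "connectionString": (
                "Data Source=tcp:<server>.database.windows.net,1433;"
                "Initial Catalog=<database>;User ID=<user>;"
            ),
            "password": key_vault_secret("KeyVaultLS", "sql-password"),
        },
    },
}

print(json.dumps(sql_linked_service, indent=2))
```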