
cloud-based storage and computation frameworks to provide flexible and high-performance data storage and analytics capabilities.

Businesses can now consolidate data into centralized cloud storage and perform analytics within the same environment. Cloud data warehousing is crucial for modern data analytics as it can support real-time data processing, advanced analytics, and machine learning applications.

Snowflake and Google BigQuery are two of the main options when it comes to cloud data warehouses. Snowflake has a unique architecture that separates storage and compute resources, and it is cloud-agnostic, which allows flexibility in any environment.

Google BigQuery is a fully-managed, serverless solution within Google Cloud Platform which excels at analyzing massive complex datasets and has integrated machine learning capabilities. Both products offer powerful features for data storage, processing, and analytics which allow data professionals to more efficiently and effectively answer business questions with their data.

As organizations continue to accumulate vast amounts of data, learning these platforms plays a crucial role in starting a career in data.

This guide will examine Snowflake vs BigQuery, exploring their features, similarities, and key differences. We will introduce both products, showcase their advantages and disadvantages, and make some key comparisons on critical features such as performance, security, and cost.

What is Snowflake?

Snowflake is a cloud data warehouse service with its own architecture focused on flexible resource scaling, cost management, and low latency. If you are interested in learning more, take a look at our Introduction to Snowflake for Beginners article.

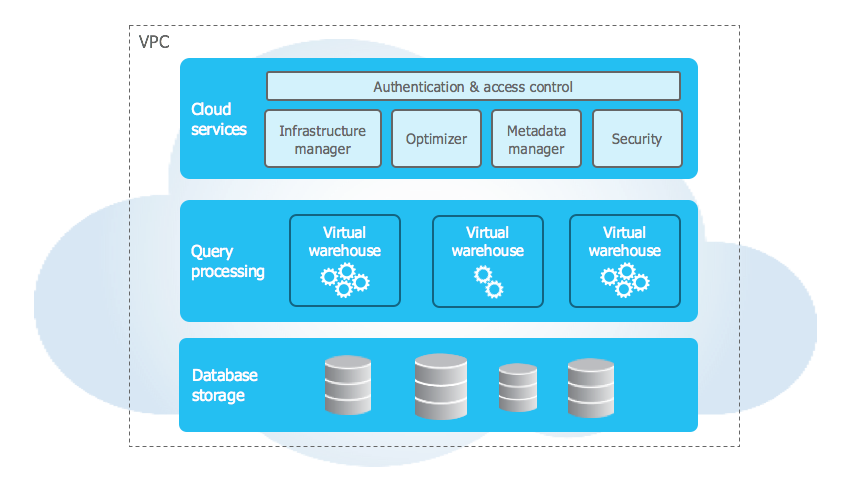

Snowflake’s architecture

Snowflake's architecture has three main principles: multi-cluster computing, shared data, and separation of storage and compute resources.

Multi-cluster computing

Multi-cluster computing is a scalable architecture where multiple independent compute clusters, known as virtual warehouses, provide computational resources to meet demand. A great feature is Snowflake’s auto-scaling where more copies of the same cluster will be created to meet compute demand.
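As a rough illustration of the auto-scaling idea, the sketch below estimates how many copies of a cluster a multi-cluster warehouse would run for a given number of concurrent queries. The per-cluster concurrency limit and the minimum and maximum cluster counts are hypothetical parameters chosen for this example; Snowflake's actual scheduling logic is more sophisticated than this toy model.

```python
import math


def clusters_needed(concurrent_queries: int,
                    queries_per_cluster: int = 8,
                    min_clusters: int = 1,
                    max_clusters: int = 4) -> int:
    """Toy model: how many identical clusters to run for the current load.

    All default values are assumptions made for illustration only.
    """
    if concurrent_queries < 0 or queries_per_cluster <= 0:
        raise ValueError("load must be >= 0 and queries_per_cluster must be > 0")
    # Enough clusters to serve every query, rounded up.
    wanted = math.ceil(concurrent_queries / queries_per_cluster)
    # Never go below the configured minimum or above the configured maximum.
    return max(min_clusters, min(wanted, max_clusters))


for load in (3, 20, 100):
    print(f"{load} concurrent queries -> {clusters_needed(load)} cluster(s)")
```

In this model, demand above the maximum cluster count is simply capped, which mirrors the idea that scaling out is bounded by how the warehouse is configured.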

Data layer

Snowflake's data layer is a crucial component of its architecture, designed for efficient data management and storage. It has both a central repository and a distributed data network. The central data repository holds the entire enterprise’s data and is accessible to all virtual warehouses. For better performance and scaling, each virtual warehouse also stores the portion of the data set that it uses most often.

Storage and compute separation

Snowflake separates storage from compute, allowing each compute cluster to scale independently from the volume of storage. The storage layer uses cloud storage to persistently hold all data, while the compute layer consists of virtual warehouses that process queries. This separation enables more flexible resource management and cost control.

Snowflake key features

Snowflake offers several key features that make it a powerful and flexible data warehousing solution:

Virtual warehouses

Virtual warehouses are Snowflake's MPP (massively parallel processing) compute clusters that execute SQL queries. They can be scaled up or down independently, allowing users to match computing power to specific workload needs.

Additionally, each virtual warehouse is completely independent and does not share resources with others, so the workload on one warehouse does not affect the performance of another. This flexibility enables efficient resource allocation and cost management, as users can adjust compute capacity on demand without affecting data storage.

Time travel

Time Travel is a feature that allows users to access historical data at any point within a set period. This is useful for data recovery, auditing, and analyzing data changes over time. Users can query data as it existed at specific points in the past, or even restore tables, schemas, or databases to a previous state.
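To make the idea of querying past data concrete, here is a minimal sketch that builds a Snowflake Time Travel query using the `AT(OFFSET => ...)` clause, where the offset is the number of seconds to look back. The helper name and the table name `sales.orders` are hypothetical, and the sketch only builds the SQL text; running it would require a Snowflake connection and a retention period that covers the requested offset.

```python
import re

# Accept only simple dotted identifiers such as "sales.orders" (a hypothetical name).
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*$")


def time_travel_query(table: str, seconds_ago: int) -> str:
    """Build a SELECT that reads `table` as it existed `seconds_ago` seconds ago."""
    if not _IDENTIFIER.match(table):
        raise ValueError(f"unexpected table name: {table!r}")
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    # A negative offset, in seconds, is relative to the current time.
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago})"


# Read the table as it looked one hour ago.
print(time_travel_query("sales.orders", 3600))
```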

Secure data sharing

Snowflake's secure data sharing feature allows organizations to share live, governed data with other Snowflake accounts without moving or copying the data. This seamless sharing occurs directly within Snowflake, maintaining data integrity and security. It enables real-time data access for partners, suppliers, or customers, fostering data collaboration while ensuring data governance and control.

Snowflake’s primary architecture (Source: docs.snowflake.com)

Snowflake advantages and disadvantages

Snowflake offers many advantages over other cloud data warehouse solutions with its architecture design and cloud-agnostic foundation. However, it has its weaknesses including potentially high costs and complexity.

Advantages

Snowflake has a number of advantages due to its ability to integrate easily with any cloud system, scale flexibly, and handle diverse workloads.

- Cloud-agnostic: Connecting to multiple cloud providers, including AWS, Azure, and Google Cloud, is straightforward. Companies can choose their preferred cloud infrastructure or even adopt a multi-cloud strategy.

- Flexible scaling: The architecture allows users to adjust their compute capacity (via virtual warehouses) up or down based on immediate needs. This flexibility enables efficient resource utilization and can help optimize costs for varying workloads.

- Diverse workload handling: The multi-cluster shared data architecture excels at managing various types of workloads simultaneously. Whether it's data loading, transformation, ad-hoc querying, or complex analytics, Snowflake can efficiently handle diverse data processing needs.

Disadvantages

There are a few disadvantages with Snowflake tied to its scaling costs and management complexity. The greater flexibility adds some extra complexity with management and the pricing can be somewhat challenging to predict.

- Higher costs at scale: While Snowflake's pricing model can be cost-effective for many use cases, compute is billed per second, so costs can escalate quickly for large-scale operations when not managed carefully.

- Complexity in management: Effectively managing and optimizing a Snowflake deployment can be complex, especially for larger organizations. Configuring virtual warehouses, tuning performance, and managing data sharing and security policies all require significant expertise.

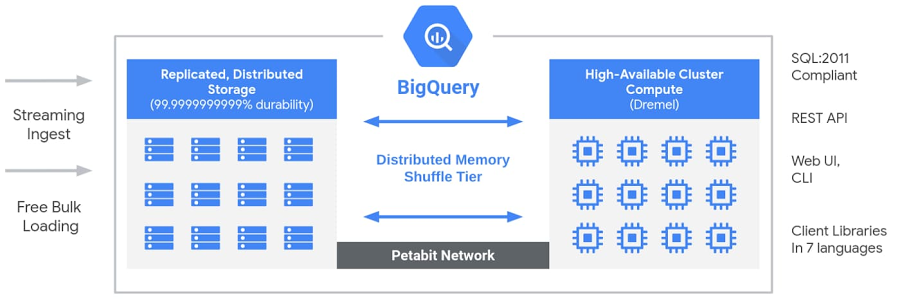

What is BigQuery?

BigQuery is Google’s enterprise data warehouse with powerful analytical and machine learning functionality within the Google Cloud Platform. Because BigQuery is part of the Google Cloud ecosystem, it can interact with other Google services such as Google Drive, Sheets, and Cloud Storage. This allows users to quickly and easily transfer, analyze, and share data within the Google Cloud Platform.

BigQuery Architecture (Source: Google Cloud Blog)

BigQuery architecture

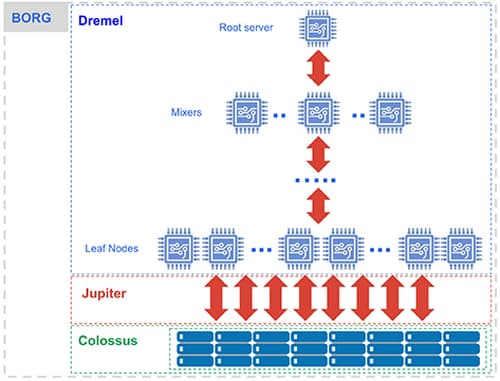

BigQuery is built upon Google’s Dremel engine, which focuses on scalable computational resources to allow for real-time analysis of read-only columnar data.

To use BigQuery, users work through a SQL-based interface where they can run optimized GoogleSQL. The engine operates similarly to MapReduce in that it uses a query execution engine, logical planner, and query parser for optimization.

The main difference is that Dremel utilizes aggregator trees for query execution which allows for real-time analysis whereas the MapReduce framework naturally has some latency.
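The aggregator-tree idea can be sketched in a few lines: leaf workers each scan one shard and return a partial result, and intermediate nodes combine those partials level by level until a single value reaches the root. This is a simplified, single-process model for illustration only; the function names and the fan-in value are assumptions, not part of Dremel or BigQuery.

```python
from typing import List


def leaf_scan(rows: List[int]) -> int:
    """A leaf worker scans one shard and returns a partial sum."""
    return sum(rows)


def tree_aggregate(partials: List[int], fan_in: int = 2) -> int:
    """Combine partial sums level by level, as a tree of aggregators would."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    if not partials:
        return 0
    level = partials
    while len(level) > 1:
        # Each aggregator node merges up to `fan_in` results from the level below.
        level = [sum(level[i:i + fan_in]) for i in range(0, len(level), fan_in)]
    return level[0]


shards = [[1, 2, 3], [4, 5], [6], [7, 8, 9, 10]]
partials = [leaf_scan(shard) for shard in shards]  # in a real system these run in parallel
print(tree_aggregate(partials))  # 55
```

Because each level only handles small partial results rather than raw rows, the final answer can be assembled quickly even when the underlying data is spread over many workers.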

BigQuery Dremel (Source: Google Cloud Blog)

BigQuery Key Features

BigQuery SQL

Running SQL in BigQuery comes with the advantage of getting to use GoogleSQL, Google’s own SQL dialect. It provides many of the same features as other SQL dialects with a few extra bells and whistles. A recent and useful GoogleSQL feature is the GROUP BY ALL clause.

Additionally, GoogleSQL offers the ability to do things like iterative loops and usage of variables more easily than some other SQL languages. Even without a deep knowledge of GoogleSQL, users can still easily leverage BigQuery with standard SQL. Make sure to check out this article on how to get started in BigQuery.

BigQuery ML

Creating machine learning models natively in BigQuery is quite easy. You can use SQL syntax to CREATE models of all kinds. It offers simple models like linear regression and logistic regression, as well as complex models such as boosted trees and neural networks. Models are then stored in BigQuery itself and can be easily used to generate results that are then saved as tables. This occurs natively in BigQuery without the need to import external packages.
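As a hedged sketch of what this looks like in practice, the helpers below assemble a BigQuery ML `CREATE MODEL` statement and a matching `ML.PREDICT` query as plain strings. The helper names and the dataset, model, table, and column names are all hypothetical; the statements would still need to be submitted through the BigQuery console or a client library to do anything.

```python
def create_model_sql(model: str, model_type: str,
                     label_column: str, training_table: str) -> str:
    """Build a CREATE MODEL statement that trains on every column of a table."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', "
        f"input_label_cols = ['{label_column}']) AS\n"
        f"SELECT * FROM `{training_table}`"
    )


def predict_sql(model: str, input_table: str) -> str:
    """Build a query that scores the rows of a table with a trained model."""
    return f"SELECT * FROM ML.PREDICT(MODEL `{model}`, TABLE `{input_table}`)"


# All names below are made up for illustration.
print(create_model_sql("demo.price_model", "linear_reg", "price", "demo.listings"))
print(predict_sql("demo.price_model", "demo.new_listings"))
```

Swapping `linear_reg` for another supported model type, such as `logistic_reg`, is the only change needed to train a different kind of model on the same data.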

BigQuery advantages

BigQuery has a number of advantages that make it a powerful tool due to its integration with GCP and the Dremel system:

- Integrated machine learning capability: BigQuery makes it very easy to get started training and predicting with the built-in models. The data lives natively in BigQuery and can be wrangled inside the ecosystem easily.

- Cost-effective structure for data mining: Because BigQuery focuses on the amount of data processed, highly complex and computationally intensive analysis does not add extra cost. This allows for more opportunities to perform extensive analysis on data to extract meaningful patterns.

- Automatic scaling: Because it is built on Google Cloud, BigQuery easily scales up and down based on the needs of the user.

BigQuery disadvantages

Even with these advantages, there are a few weaknesses. Some of these are due to the fact that BigQuery was designed to exist in the Google Cloud. Working with the data outside of that ecosystem can be challenging for a less technically inclined data professional:

- Limited external integration: Because it lives in the Google Cloud Platform, there is limited native integration outside of the Google ecosystem. Performing data egress will require additional technical knowledge in other languages such as Python, Java, or usage of the command line to extract data from BigQuery.

- Unoptimized query cost: If queries are not written with data volume in mind, they can incur higher costs. Being mindful of the volume of data processed is key to managing costs.
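To see why query shape matters for cost, consider a rough estimate based on the article's on-demand rate of $5 per TB processed. Because data is stored by column, a query is charged for the columns it reads, so selecting only what is needed can shrink the bill considerably. The column names and sizes below are invented for illustration, and real billing has details (such as minimum charges) that this sketch ignores.

```python
from typing import Dict, Iterable

BYTES_PER_TB = 10**12

# Hypothetical table: bytes stored per column.
COLUMN_BYTES: Dict[str, int] = {
    "order_id": 200 * 10**9,
    "customer_id": 200 * 10**9,
    "amount": 100 * 10**9,
    "notes": 1500 * 10**9,
}


def bytes_scanned(columns: Iterable[str]) -> int:
    """Bytes read when a query touches only the given columns."""
    return sum(COLUMN_BYTES[name] for name in columns)


def on_demand_cost(scanned: int, price_per_tb: float = 5.0) -> float:
    """Estimated on-demand cost in USD at the article's quoted rate."""
    return scanned * price_per_tb / BYTES_PER_TB


select_star = on_demand_cost(bytes_scanned(COLUMN_BYTES))           # reads every column
narrow = on_demand_cost(bytes_scanned(["order_id", "amount"]))      # reads two columns
print(f"SELECT *: ${select_star:.2f}, two columns: ${narrow:.2f}")
```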

Snowflake vs BigQuery Performance and Scalability

Both BigQuery and Snowflake offer similar performance and scalability with slight nuances to both. In simpler situations, Snowflake is a better choice. As your data grows and becomes more complex, BigQuery gains an advantage with its more efficient cost-structure and near limitless scaling.

Snowflake

On the TPC-H benchmark, Snowflake outperforms BigQuery. This suggests that on most standard business questions, Snowflake will perform better. Due to its multi-cluster computing and shared data architecture, Snowflake easily scales up to meet the demands of any enterprise.

BigQuery

While BigQuery may not be as efficient as Snowflake on some simpler queries, it excels with complex analysis. The native automatic scaling requires very little management to increase computational resources. While there are some limits due to shared resources within an organization, writing efficient queries that process the minimum amount of data will keep performance optimal.

BigQuery vs Snowflake: Integration and Ecosystem

Snowflake

Snowflake runs in a cloud-agnostic ecosystem that is not tied to any particular cloud platform. That means it can be integrated with other cloud platforms easily using the appropriate connectors. This creates a smooth experience for creating ETL and analytical pipelines that feed into other parts of the cloud enterprise.

Around Snowflake exists an ecosystem of 60+ third-party tools and technologies that provide native connectivity to Snowflake. These integrations make it easier to prepare, migrate, and visualize data with these third-party tools. For more advanced BI functionality, Snowflake also has the Snowflake Marketplace, which provides access to unique datasets, apps, and AI products built specifically for the Snowflake environment.

BigQuery

Since it is built in the Google Cloud Platform, BigQuery is more suited for folks who are heavily invested in GCP. If most of your processes run on Google Cloud services such as Google Compute Engine, Cloud Storage, and Cloud Run, then it may be beneficial to use BigQuery as a means of keeping your data pipelines within the same environment. By keeping everything inside the environment, costs and computational time can be optimized. Additionally, the native integration with AI/ML tools in BigQuery makes it well suited to organizations that utilize AI-driven analytics. Data pipelines can feed data straight to BigQuery, which can then train models and predict outcomes based on that data.

Security and Compliance

Security is a top concern for users of cloud services, and ensuring proper compliance with government standards is important. Luckily, cloud providers tend to provide ample guidance on security and compliance, which allows users to configure their data in a way that meets their security standards. Both Snowflake and BigQuery have similar security features with role-based access management and data recovery features.

Snowflake

Snowflake offers features for creating a secure and reliable experience for organizations. One of these is the Time Travel feature, which allows data recovery in the event of accidental deletion.

Another data protection feature is Fail-safe, which retains historical data seven days after the Time Travel retention period expires. This data is not directly accessible but can be recovered by Snowflake which ensures data resilience.
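The two protection periods stack one after the other, which a little date arithmetic makes clear. In this sketch the Time Travel retention period is a parameter, since it depends on how an account is configured, while the seven-day Fail-safe period comes from the description above. The function name and example dates are made up for illustration.

```python
from datetime import datetime, timedelta
from typing import Tuple

FAIL_SAFE_DAYS = 7  # Fail-safe period described above


def recovery_windows(changed_at: datetime,
                     time_travel_days: int) -> Tuple[datetime, datetime]:
    """Return (end of Time Travel, end of Fail-safe) for data changed at `changed_at`."""
    if time_travel_days < 0:
        raise ValueError("time_travel_days must be >= 0")
    # Users can restore the data themselves until Time Travel expires...
    time_travel_end = changed_at + timedelta(days=time_travel_days)
    # ...after which only Snowflake can recover it, until Fail-safe expires.
    fail_safe_end = time_travel_end + timedelta(days=FAIL_SAFE_DAYS)
    return time_travel_end, fail_safe_end


deleted = datetime(2024, 1, 1)
tt_end, fs_end = recovery_windows(deleted, time_travel_days=1)
print(f"Self-service recovery until {tt_end:%Y-%m-%d}, Fail-safe until {fs_end:%Y-%m-%d}")
```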

For access control, Snowflake combines discretionary access control and role-based access control into a single robust access control system. This allows organizations to precisely manage access privileges in a simple role-based manner.

Snowflake also prioritizes security by employing strong encryption standards to protect data both in transit and at rest. For data at rest, Snowflake encrypts all stored data with a hierarchical key model; the keys are rotated and managed automatically by Snowflake without any need for customer intervention.

BigQuery

BigQuery also offers some data recovery thanks to its time travel capabilities, which allow users to recover deleted tables within the seven-day time travel window. However, if the entire dataset is deleted, users are unable to recover the lost data, unlike in Snowflake. That means deletion needs to be handled a little more carefully within BigQuery.

BigQuery’s security is primarily managed by Google for data at rest. Google provides IAM which offers comprehensive access control and monitoring. This access can be managed at any level from entire projects down to the specific tables within a particular project. All data-at-rest within the Google cloud environment is also encrypted using Google Cloud-managed keys. If extra security is required, users can provide their own private keys which can further encrypt data within the Google Cloud.

Snowflake vs BigQuery: Cost Comparison

Both Snowflake and BigQuery have two components to their cost: storage and compute. Storage is the cost of storing data within the warehouse regardless of usage. Compute costs are calculated either based on the amount of compute utilized or the amount of data processed.

Storage Costs

- Snowflake: Operates on a pay-as-you-go model. On-demand storage pricing is $23 per TB per month. Capacity storage pricing starts at the same rate as on-demand storage, but the rate decreases based on the account’s average contract value (ACV).

- BigQuery: Offers 10 GB free each month. Afterwards, logical storage is $20 per TB per month for active storage and $10 per TB per month for long-term storage. Physical storage is $40 per TB per month for active storage and $20 per TB per month for long-term storage.
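The storage figures above translate into a simple back-of-the-envelope comparison. The sketch below uses only the list prices quoted in this article (Snowflake on-demand and BigQuery logical storage); the function names are hypothetical, and applying the 10 GB free allowance to active storage first is an assumption made to keep the example short. Actual bills depend on region, contract, and compression.

```python
SNOWFLAKE_ON_DEMAND_PER_TB = 23.0      # USD per TB per month
BIGQUERY_ACTIVE_LOGICAL_PER_TB = 20.0  # USD per TB per month
BIGQUERY_LONG_TERM_LOGICAL_PER_TB = 10.0
BIGQUERY_FREE_TB = 0.01                # 10 GB free each month


def snowflake_storage_cost(stored_tb: float) -> float:
    """Monthly on-demand storage cost in USD."""
    return stored_tb * SNOWFLAKE_ON_DEMAND_PER_TB


def bigquery_logical_storage_cost(active_tb: float, long_term_tb: float) -> float:
    """Monthly logical storage cost in USD (free allowance applied to active data)."""
    billable_active = max(0.0, active_tb - BIGQUERY_FREE_TB)
    return (billable_active * BIGQUERY_ACTIVE_LOGICAL_PER_TB
            + long_term_tb * BIGQUERY_LONG_TERM_LOGICAL_PER_TB)


# Hypothetical workload: 15 TB in total, 5 TB of it actively modified.
print(f"Snowflake: ${snowflake_storage_cost(15):.2f} per month")
print(f"BigQuery:  ${bigquery_logical_storage_cost(5, 10):.2f} per month")
```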

Compute Costs

- Snowflake: Utilizes credits for charging for compute based on compute time and performance levels. Credits are priced based on the edition of Snowflake, and the credits consumed per hour depends on the level of virtual warehouse chosen (X-Small uses 1 credit/hour, Medium uses 4 credits/hour, etc.).

- BigQuery: Charges based on data processed per query (on-demand at $5 per TB) or capacity compute. Capacity compute is more predictable as it charges by the slot hour and leverages the autoscaler.
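A small calculator shows how differently the two compute models behave. The credits-per-hour table follows the doubling pattern mentioned above (X-Small at 1 credit, Medium at 4), and the 60-second minimum reflects Snowflake's per-second billing rule for a running warehouse. The price per credit is a placeholder value, since it depends on the Snowflake edition and region, and the BigQuery side uses the on-demand rate quoted in this article.

```python
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8}


def snowflake_compute_cost(size: str, seconds_running: float,
                           price_per_credit: float) -> float:
    """Cost in USD of running one warehouse; `price_per_credit` is an assumption."""
    # Billing is per second, with a 60-second minimum each time a warehouse starts.
    billed_seconds = max(60.0, seconds_running)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return credits * price_per_credit


def bigquery_on_demand_cost(tb_processed: float, price_per_tb: float = 5.0) -> float:
    """Cost in USD of on-demand queries, charged by data processed."""
    return tb_processed * price_per_tb


# A Medium warehouse running for 30 minutes at a hypothetical $3 per credit,
# compared with queries that process 2 TB on demand.
print(f"Snowflake: ${snowflake_compute_cost('MEDIUM', 1800, price_per_credit=3.0):.2f}")
print(f"BigQuery:  ${bigquery_on_demand_cost(2.0):.2f}")
```

The Snowflake cost depends on how long a warehouse runs regardless of how much data it reads, while the BigQuery on-demand cost depends on data processed regardless of how long the query takes.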

BigQuery vs Snowflake: Which to use

Now that we’ve gone through some major components of both tools, let's review their key highlights. This should help in deciding which tool to utilize for your enterprise:

| Components | Snowflake | BigQuery |
| --- | --- | --- |
| Performance | Designed for usual business tasks and big data jobs | Designed for complex analytics, including ML built natively into the query engine |
| Scalability | Can automatically scale using virtual warehouses with its multi-cluster architecture | Scales natively based on needs |
| Integration and Ecosystem | Cloud-agnostic, integrates with other cloud platforms through various connectors | Primarily focused on the Google Cloud Platform; data can be transferred out but is easiest to manage in GCP |
| Security and Compliance | User-based security protocols and recovery on an organizational and role level. Has data recovery protocols within seven days | Google IAM with Google Cloud-managed keys or user-managed keys. Also provides a seven-day recovery window |
| Storage Cost | On-demand storage at $23/TB/month, with lower contract-based pricing | Based on type of storage: $20/TB for active and $10/TB for long-term |
| Compute Cost | Based on compute time used, tiered based on performance levels | Based on data processed at $5/TB |

Conclusion

Both Snowflake and BigQuery have their unique advantages and disadvantages. If your business is primarily interested in straightforward analysis, large volumes of data, and quick queries then Snowflake may be a better option with its auto-scaling virtual warehouses and compute-based pricing.

If your business goals involve more complex analytics with machine learning or heavy integration into the Google Cloud Platform space, then BigQuery is the go-to option with its auto-scaling compute management, native ML integration, and pricing based on data processed.

Learning about both is the best option for getting a job as a data professional and DataCamp offers a multitude of resources to learn more.

Snowflake vs BigQuery FAQs

Are there certifications available for either Snowflake or BigQuery?

Yes! There are a few Snowflake certifications and some Google Cloud certifications that require BigQuery knowledge.

Do these cloud data warehouses support SQL transactions?

Yes, they support many of the same SQL procedures and protocols that other SQL languages do.

How does Snowflake support data egress?

It utilizes connectors to different cloud platforms such as GCP, AWS, and Azure. The egress is controlled by permissions and API connections.

How does Google BigQuery handle data?

BigQuery stores data in a columnar format.

What are some options for controlling ETL into/out of Snowflake and BigQuery?

Tools like Apache Airflow and NiFi are great ways to control ETL pipelines for data flow into and out of Snowflake and BigQuery.

I am a data scientist with experience in spatial analysis, machine learning, and data pipelines. I have worked with GCP, Hadoop, Hive, Snowflake, Airflow, and other data science/engineering processes.