As applications increasingly rely on interconnected services, APIs, and microservices, I have found that integration testing is a crucial part of ensuring high software quality.

Early in my development journey, I realized that relying solely on unit tests was not enough. Integration testing became the glue that confirmed individual components worked together to create a good user experience.

Understanding integration testing is important for both junior and seasoned data practitioners. This guide explores the fundamentals of integration testing, best practices, tools, and how to incorporate it within CI/CD pipelines.

Understanding Integration Testing

Integration testing is a strategic process that verifies whether your entire system can function smoothly when its individual pieces come together.

In an age where applications rely on multiple interdependent components, from internal modules to third-party services, integration testing is a safety net that catches issues that unit testing cannot.

Ensuring that the different components of your system communicate and collaborate as expected is crucial regardless of whether you are building an e-commerce site, a machine learning pipeline, or a SaaS platform.

Integration testing helps mitigate risk, reduce post-deployment issues, and deliver a seamless user experience.

Definition and core objectives

Integration testing is the process of combining two or more software modules and testing them as a group.

It evaluates whether the modules interact correctly according to specified requirements. This includes testing interfaces, data exchanges, and communication protocols that bind the modules together.

It goes beyond unit testing, which focuses on verifying the functionality of isolated components such as functions, methods, or classes. While unit testing tells us if a component works on its own, integration testing reveals whether it works properly when combined with others.

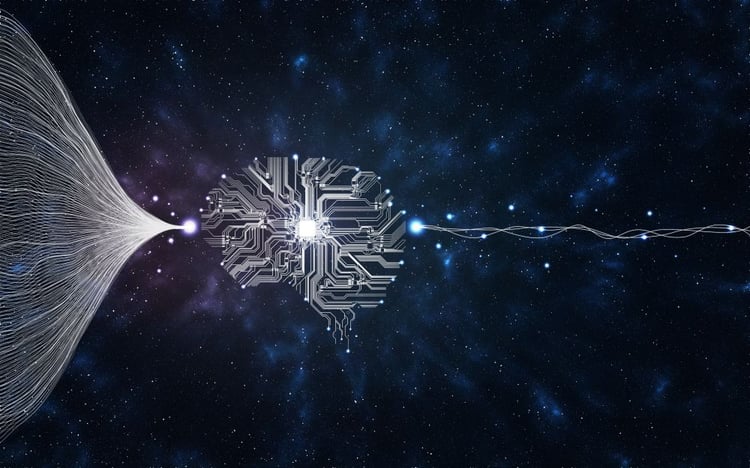

The main goals of integration testing include:

- Detecting interface mismatches between software components: These could include inconsistent method signatures, incorrect data types, or improper use of API endpoints.

- Verifying data integrity and consistency across modules: Data passed between modules must be accurately transformed and remain consistent throughout the process.

- Identifying errors in inter-component communication: Integration testing helps uncover failures in message-passing systems, event-driven architectures, or API-based communications.

- Validating workflows and business logic across modules: Many business processes span multiple modules, so integration testing is needed to ensure they behave as expected end-to-end.

- Evaluating behavior under real dependencies: Integration tests simulate how the application will behave in a production-like environment, often using real services or virtualized proxies.

Image showcasing key objectives of integration testing.

To understand this better, we can look at a practical example of an e-commerce application.

Consider an online shopping platform. The following components must work together:

- A product catalog service

- A shopping cart module

- A payment gateway

- An order fulfillment system

While unit testing might validate that the cart calculates totals correctly, integration testing checks whether:

- The product catalog provides accurate item details to the cart

- The cart passes the correct total and tax data to the payment gateway

- The payment response triggers the correct logic in the fulfillment system

If even one link in this chain is broken (the payment gateway expects a currency code in a different format, for example), the system could fail.

Integration testing makes sure that the chain remains unbroken.
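
The currency-format mismatch described above can be sketched as a small integration test. This is only an illustration: `Cart`, `CartItem`, and `PaymentGateway` are hypothetical classes invented for this example, and the rule that the gateway accepts only upper-case three-letter codes is an assumption, not the behavior of any real payment provider.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class CartItem:
    sku: str
    unit_price: Decimal
    quantity: int


class Cart:
    """Hypothetical cart module: totals items and builds a payment request."""

    def __init__(self, currency, tax_rate):
        self.currency = currency
        self.tax_rate = tax_rate
        self.items = []

    def add(self, item):
        self.items.append(item)

    def payment_request(self):
        subtotal = sum((i.unit_price * i.quantity for i in self.items), Decimal("0"))
        tax = (subtotal * self.tax_rate).quantize(Decimal("0.01"))
        return {"amount": subtotal + tax, "tax": tax, "currency": self.currency}


class PaymentGateway:
    """Hypothetical gateway: accepts only upper-case three-letter currency codes."""

    def charge(self, request):
        code = request["currency"]
        if not (len(code) == 3 and code.isalpha() and code.isupper()):
            raise ValueError(f"unsupported currency format: {code!r}")
        return {"status": "approved", "charged": request["amount"], "currency": code}


def test_cart_total_reaches_gateway_intact():
    cart = Cart(currency="USD", tax_rate=Decimal("0.10"))
    cart.add(CartItem("book-1", Decimal("20.00"), 2))
    response = PaymentGateway().charge(cart.payment_request())
    assert response["status"] == "approved"
    assert response["charged"] == Decimal("44.00")  # 40.00 subtotal + 4.00 tax


def test_gateway_rejects_mismatched_currency_format():
    cart = Cart(currency="usd", tax_rate=Decimal("0.10"))  # lower-case code
    cart.add(CartItem("book-1", Decimal("20.00"), 1))
    try:
        PaymentGateway().charge(cart.payment_request())
    except ValueError:
        return
    raise AssertionError("expected the gateway to reject the currency code")
```

Each class could pass its own unit tests here. The second test only fails or passes because the two modules are exercised together.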

> To build a solid foundation in software testing principles, explore the Introduction to Testing in Python course.

Importance in modern software development

With the increasing complexity and modularity of modern applications, integration testing has become non-negotiable.

The systems we use are rarely monolithic. They are often composed of semi-independent services and components, sometimes built by different teams and even in different programming languages or environments.

Here are a few architectural trends that have increased the relevance of integration testing:

Microservices architecture

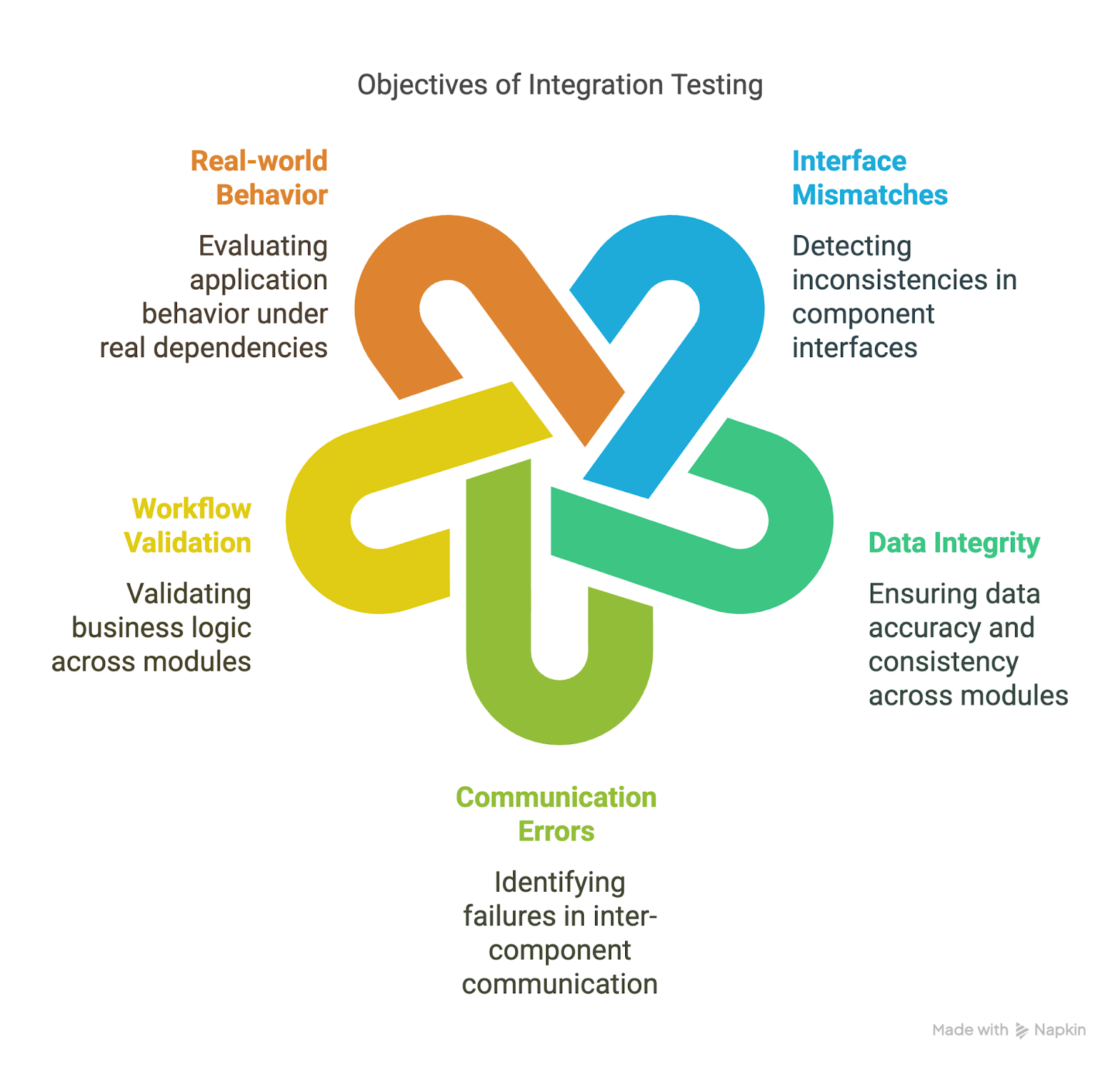

Image displaying a microservices architecture. Source: Microsoft Azure

In microservices, functionality is broken down into independent services that communicate over APIs or message queues.

Each service can be updated, deployed, and scaled individually. However, with this flexibility comes the challenge of integration:

- How do you ensure the authentication service integrates properly with the user management system?

- What happens if a new version of the inventory service changes its API signature?

Integration testing helps catch these issues before they impact users.
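
One lightweight way to notice a changed API signature is to check a response against the fields the consumer depends on. The sketch below assumes a hypothetical inventory response with `sku`, `quantity`, and `warehouse` fields; the field names and the helper `contract_violations` are made up for illustration and do not belong to any particular contract-testing library.

```python
# Fields and types the (hypothetical) order service relies on.
EXPECTED_INVENTORY_FIELDS = {"sku": str, "quantity": int, "warehouse": str}


def contract_violations(payload, expected):
    """Return human-readable problems; an empty list means the contract holds."""
    problems = []
    for field, expected_type in expected.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


# Response shape from the current version of the service.
v1_response = {"sku": "A-100", "quantity": 7, "warehouse": "east"}
# A later version renames `quantity` and returns the count as a string.
v2_response = {"sku": "A-100", "qty": "7", "warehouse": "east"}

print(contract_violations(v1_response, EXPECTED_INVENTORY_FIELDS))
print(contract_violations(v2_response, EXPECTED_INVENTORY_FIELDS))
```

In a real suite, the payloads would come from the running service rather than from literals, so the check fails as soon as a new version drifts from what consumers expect.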

APIs and third-party services

Image showing third-party API integration in software systems.

Whether you are consuming third-party APIs (e.g., Stripe, Twilio, Google Maps) or exposing your own, integration testing verifies that these connections behave as expected, even after the underlying services are updated.

Distributed systems

Image showing distributed systems with interconnected components.

With data spread across systems like cloud databases, microservices, and event-driven pipelines, integration testing verifies that all parts of the system interact reliably across a distributed network.

Agile and DevOps support

Image of Agile and DevOps practices.

Modern development practices like Agile, CI/CD, and DevOps emphasize continuous feedback and fast iterations.

Integration testing supports these goals by:

- Providing early detection of inter-component failures

- Ensuring stable builds for deployment

- Enabling test automation in CI/CD pipelines

Ultimately, integration testing helps developers avoid the issue of “works on my machine” by ensuring their code works with everything else it depends on.

> To understand how integration testing fits within CI/CD workflows, check out the CI/CD for Machine Learning course.

Contrasting with unit testing

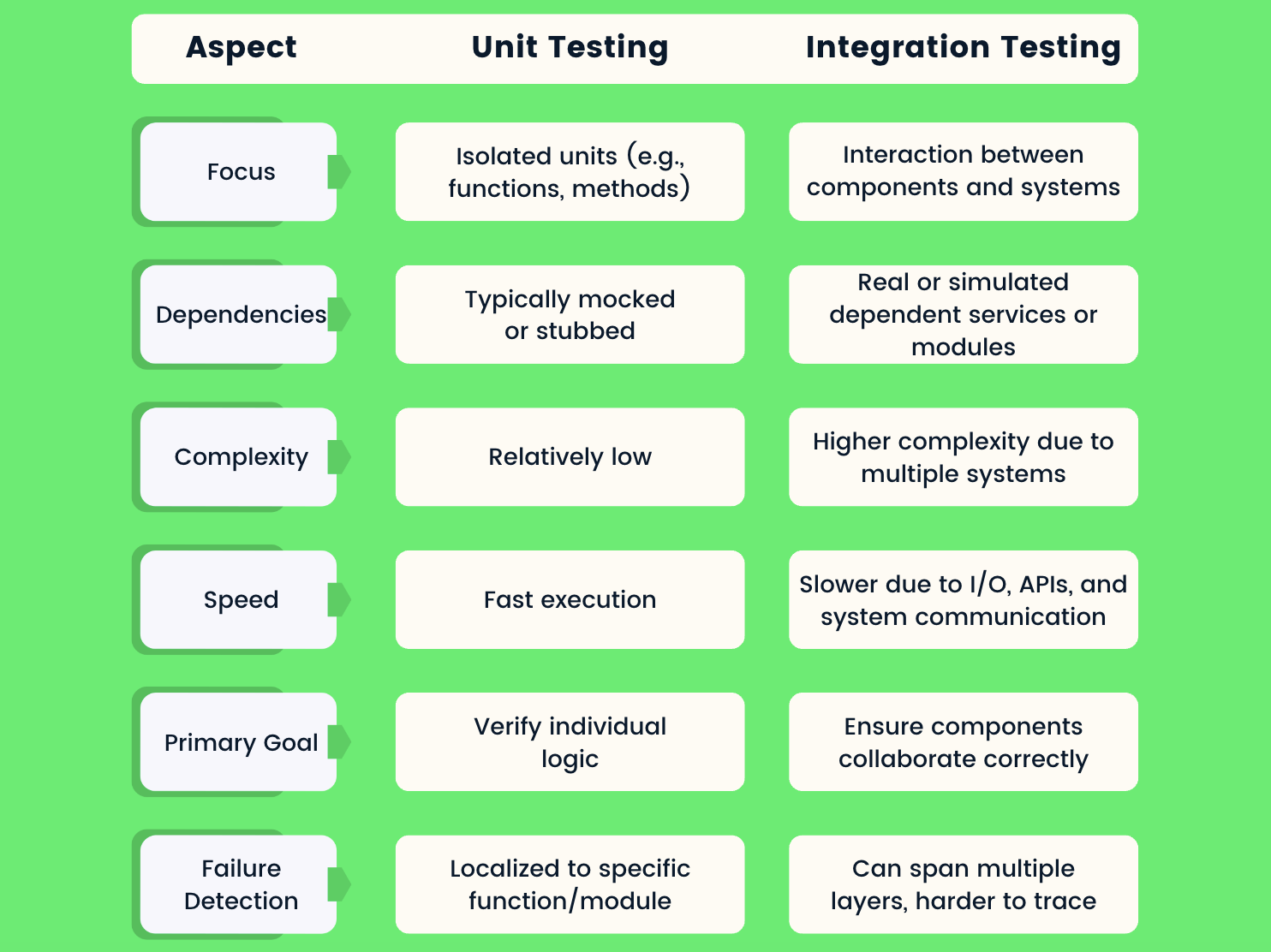

While both unit testing and integration testing are essential, they serve different purposes and are executed at different stages of the development cycle.

The image below compares different aspects of unit tests and integration tests.

Image comparing unit tests and integration tests.

It should be noted that both are necessary:

- Unit tests act as the foundation, which ensures that individual blocks are solid.

- Integration tests act as the mortar, which ensures the blocks hold together when assembled.

Skipping integration testing is like building a house with perfect bricks but no mortar: you might get it standing, but it won’t stand for long.

Strategies and Methodologies for Integration Testing

Integration testing is not a one-size-fits-all process.

Choosing the right strategy depends on several factors, including the architecture of your application, the structure of your development team, the maturity of your components, and the frequency of changes.

It is crucial that you carefully select the integration strategy that works best for you, as it can streamline the testing process, reduce defect leakage, and enable faster feedback loops in Agile and DevOps environments.

Integration testing strategies broadly fall into two categories: non-incremental (e.g., big-bang) and incremental (e.g., top-down, bottom-up, and hybrid).

Each has its benefits, limitations, and ideal use cases.

Non-incremental approaches

Non-incremental approaches involve integrating all components at once, without any phased or staged process, which can simplify planning but increase the risk of integration failures.

Big-bang approach

The big-bang integration strategy is one of the most straightforward but also the riskiest.

In this approach, all or most individual modules are developed independently and then integrated at once to test the entire system as a whole.

How it works:

- Developers complete all modules and only begin integration testing after all units are available.

- All modules are "plugged in" simultaneously.

- System-level tests are executed to assess whether the integrated system functions correctly.

The table below contains the benefits and challenges of this approach:

| Benefits | Challenges |
| --- | --- |
| Simple implementation: Requires minimal planning or coordination across modules during development. | Difficult to isolate defects: When an error occurs, it can be challenging to trace the root cause, as multiple modules are involved. |
| Time-efficient for small systems: If the system is small and has limited interactions between components, this approach may be faster. | Late defect detection: Interface or integration issues are discovered late in the cycle, often during a critical phase close to release. |
| | Complex debugging: When many modules are involved, debugging becomes time-consuming and labor-intensive. |

When to use it:

- Suitable for small-scale projects or early-stage prototypes with few interdependencies.

- May be appropriate when all modules are relatively simple and developed by a small, tightly coordinated team.

- Not recommended for large systems, mission-critical applications, or projects with frequent updates.

It should be noted that in practice, the big-bang approach can become a bottleneck if used in modern CI/CD environments, where continuous integration and frequent testing are critical to success.

Incremental approaches

Incremental integration testing addresses many of the pitfalls of the big-bang strategy by introducing modules one at a time or in small groups, testing as they are integrated.

This allows issues to be detected early and traced more easily, which reduces the scope of debugging and rework.

There are several variations of the incremental approach, each with its own workflow, tooling considerations, and benefits.

Top-down integration testing

In top-down integration, testing begins with higher-level (or parent) modules, often those responsible for user-facing functionality, and gradually integrates lower-level (or child) modules.

Until the lower-level components are available, stubs are used to simulate their behavior.

How it works:

- The topmost modules in the software hierarchy are integrated and tested first.

- Stubs are created to mimic the responses of yet-to-be-integrated lower-level modules.

- Lower modules are replaced with actual components as they become ready.
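
The steps above can be sketched with a stub standing in for a lower-level module. This is a minimal illustration, assuming a hypothetical top-level `OrderService` that depends on an inventory module that has not been built yet; `InventoryStub` and its `available` method are invented names.

```python
class InventoryStub:
    """Stub for a lower-level inventory module that is not built yet.

    It returns canned answers so the higher-level module can be exercised.
    """

    def __init__(self, stock):
        self._stock = stock

    def available(self, sku):
        return self._stock.get(sku, 0)


class OrderService:
    """Hypothetical top-level module under test."""

    def __init__(self, inventory):
        self._inventory = inventory

    def place_order(self, sku, quantity):
        if quantity <= 0:
            return {"status": "rejected", "reason": "invalid quantity"}
        if self._inventory.available(sku) < quantity:
            return {"status": "rejected", "reason": "insufficient stock"}
        return {"status": "accepted", "sku": sku, "quantity": quantity}


def test_order_accepted_when_stock_is_sufficient():
    service = OrderService(InventoryStub({"A-100": 5}))
    assert service.place_order("A-100", 3)["status"] == "accepted"


def test_order_rejected_when_stock_is_short():
    service = OrderService(InventoryStub({"A-100": 1}))
    result = service.place_order("A-100", 3)
    assert result == {"status": "rejected", "reason": "insufficient stock"}
```

When the real inventory module is ready, it can be passed to `OrderService` in place of the stub, and the same tests can be rerun against it.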

The table below contains the benefits and challenges of this approach:

| Benefits | Challenges |
| --- | --- |
| Early validation of critical workflows: Prioritizes top-level logic, which often includes business rules and user interfaces. | Stub development can be time-consuming: Creating realistic and functional stubs may require considerable effort, especially for complex behaviors. |
| Supports prototype testing: Useful for building early UI demos or business logic validations, even when all backend services aren’t ready. | Delayed testing of lower-level components: Foundational services like data storage, logging, or infrastructure components may be validated late in the process. |
| Early bug discovery in key modules: Since top modules usually direct the system’s behavior, this approach allows you to catch significant design or flow errors early. | |

When to use it:

- Ideal for user-centric applications where front-end logic and business rules need to be validated early.

- Recommended when upper layers are being developed first and backend services are still under construction.

Bottom-up integration testing

The bottom-up approach starts by testing the lowest-level modules, those that often provide core services or utility functions, and incrementally integrates higher-level components.

Drivers, which simulate calling behavior from higher modules, are used to test the lower-level components.

How it works:

- Integration begins with foundational components, such as database access layers, utility libraries, or core APIs.

- These modules are tested using custom drivers that emulate requests from higher modules.

- Once lower modules are validated, higher-level components are introduced incrementally.
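
A driver can be as simple as a function that calls the low-level module the way a higher-level module eventually would. The sketch below assumes a hypothetical `OrderRepository` backed by an in-memory SQLite database from Python's standard `sqlite3` module; the table layout and method names are illustrative only.

```python
import sqlite3


class OrderRepository:
    """Hypothetical low-level data access module under test."""

    def __init__(self, connection):
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            "id INTEGER PRIMARY KEY, sku TEXT NOT NULL, quantity INTEGER NOT NULL)"
        )

    def add(self, sku, quantity):
        cursor = self._conn.execute(
            "INSERT INTO orders (sku, quantity) VALUES (?, ?)", (sku, quantity)
        )
        self._conn.commit()
        return cursor.lastrowid

    def total_quantity(self, sku):
        row = self._conn.execute(
            "SELECT COALESCE(SUM(quantity), 0) FROM orders WHERE sku = ?", (sku,)
        ).fetchone()
        return row[0]


def run_driver():
    """Driver: plays the role of the not-yet-integrated order service."""
    conn = sqlite3.connect(":memory:")
    try:
        repo = OrderRepository(conn)
        first_id = repo.add("A-100", 2)
        second_id = repo.add("A-100", 3)
        repo.add("B-200", 1)
        return {
            "ids_increase": second_id > first_id,
            "a100_total": repo.total_quantity("A-100"),
            "unknown_total": repo.total_quantity("Z-999"),
        }
    finally:
        conn.close()
```

Once the real order service exists, it replaces `run_driver` as the caller, and the repository has already been validated on its own.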

The table below contains the benefits and challenges of this approach:

| Benefits | Challenges |
| --- | --- |
| Early validation of core functionality: Ensures that the system’s foundation is solid before layering on more complex logic. | Drivers can be hard to build and maintain: Especially for complex interactions, drivers may require significant development effort. |
| Helps catch data-related bugs early: Particularly useful in applications with complex data transformations or backend logic. | User interface and end-to-end logic tested late: Delays full system validation until much of the system is already in place. |
| Minimizes risk of downstream failures: If lower modules are flawed, higher modules will likely fail too. This approach prevents that cascade. | |

When to use it:

- Excellent for data-intensive applications, backend-heavy systems, or when the core infrastructure is prioritized over presentation logic.

- Commonly used in microservice architectures, where core services are tested in isolation first.

Sandwich (hybrid) integration testing

The sandwich (or mixed) strategy combines both top-down and bottom-up approaches.

Testing is performed simultaneously on both ends of the module hierarchy.

High-level modules are tested using stubs, and low-level modules are tested using drivers.

Integration proceeds toward the middle layer from both directions.

How it works:

- Top and bottom modules are integrated and tested in parallel.

- Middle modules are gradually introduced to connect the two layers.

- Stubs and drivers are used where modules are missing.

The table below contains the benefits and challenges of this approach:

| Benefits | Challenges |
| --- | --- |
| Comprehensive and balanced: Provides both early validation of business logic and solid testing of core infrastructure. | Requires careful coordination: Development teams must synchronize their progress on both ends of the system. |
| Reduces integration risks: By integrating and validating multiple parts of the system in parallel, defects can be detected faster. | Increased complexity: Managing both stubs and drivers, along with overlapping dependencies, can introduce technical overhead. |
| Supports continuous delivery models: Well-suited for Agile teams working in parallel on different layers of the stack. | |

When to use it:

- Large-scale or layered applications, especially those with critical high-level workflows and low-level services.

- Ideal for enterprise systems, multi-team projects, or when simultaneous development of front-end and backend services is ongoing.

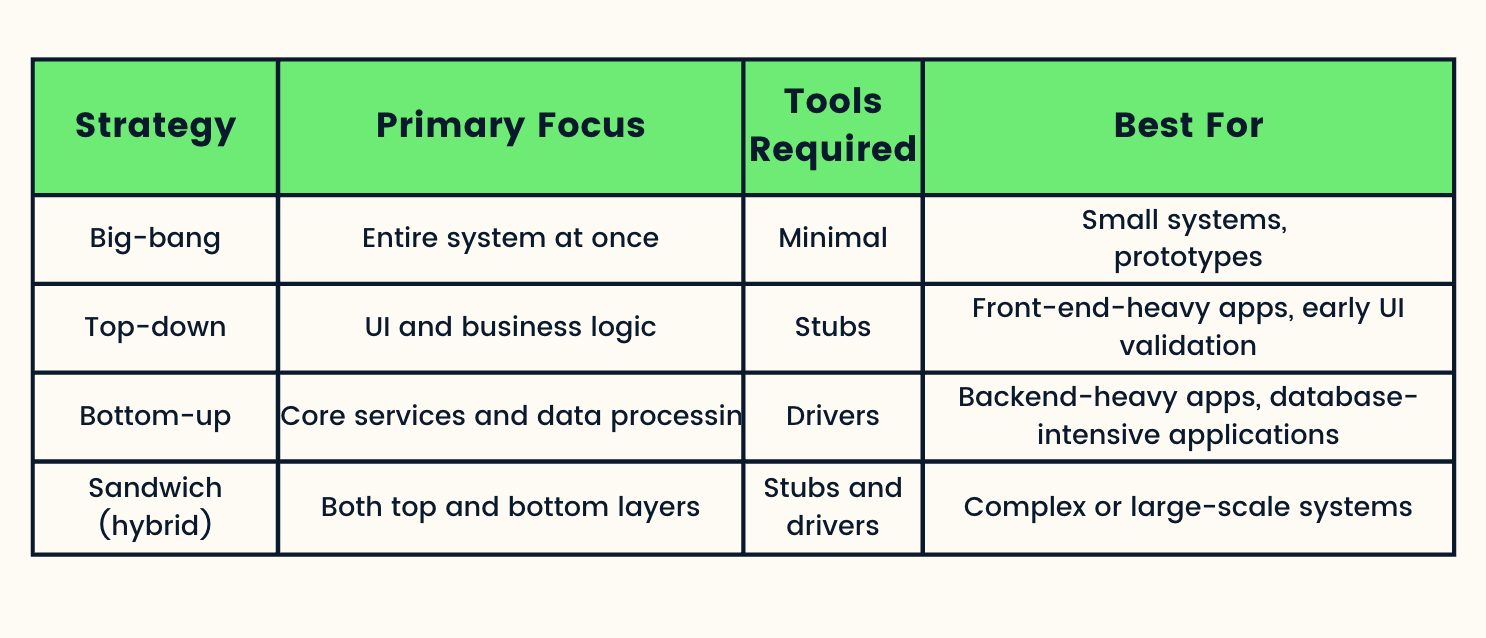

Summary of Integration Testing Strategies

The following image provides a high-level overview of the main integration testing strategies, highlighting their structure, flow, and typical use cases.

Image summarizing integration testing strategies.

Choosing the right integration testing strategy depends on team workflows, system architecture, and project timelines.

In many real-world scenarios, teams blend multiple strategies to balance test coverage with speed, maintainability, and confidence in delivery.

Best Practices for Effective Integration Testing

Integration testing, when done right, becomes a powerful safeguard against system failures and regressions.

However, simply performing integration tests is not enough. How and when they are executed greatly influences their effectiveness.

Adopting strategic best practices can help teams ensure consistency, scalability, and faster feedback loops, all while reducing costs and risks.

Below are some of the key best practices every team should consider when designing and implementing integration tests.

Early and continuous testing

"Test early, test often" is one of the most fundamental principles in modern software testing.

Delaying integration testing until the end of development is a high-risk strategy, often leading to late-stage surprises, expensive debugging, and missed delivery timelines.

Here is why early testing matters:

- Catches defects when they are cheaper to fix: The cost of fixing a bug generally rises the later it is found in the development cycle, so detecting integration issues early can save both time and money.

- Accelerates feedback loops: In Agile and DevOps workflows, continuous feedback is essential. Early integration tests help identify regressions and misalignments quickly.

- Improves developer confidence: When developers have immediate feedback on integration points, they are more confident in making iterative changes.

- Supports test-driven development (TDD): Starting integration testing early supports test-first or behavior-driven practices, especially in microservice or API-first development.

Here is how to implement it:

- Introduce integration testing alongside unit testing as part of your feature branch workflows.

- Integrate with your CI pipelines using tools like Jenkins, GitHub Actions, or GitLab CI/CD.

- Trigger automated integration tests on every merge, pull request, or deployment to staging.
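
One way to let a pipeline decide when integration tests run is to gate them behind a switch that the CI job sets. The sketch below uses the standard `unittest` module; the `RUN_INTEGRATION` environment variable, the test classes, and `apply_discount` are assumptions made for this example, not a convention of any CI tool.

```python
import os
import unittest

# Hypothetical switch; a CI job would export RUN_INTEGRATION=1.
RUN_INTEGRATION = os.environ.get("RUN_INTEGRATION") == "1"


def apply_discount(total, percent):
    """Stand-in for logic that spans the cart and pricing modules."""
    return round(total * (100 - percent) / 100, 2)


class PricingUnitTest(unittest.TestCase):
    def test_discount_math(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)


@unittest.skipUnless(RUN_INTEGRATION, "set RUN_INTEGRATION=1 to run integration tests")
class CheckoutIntegrationTest(unittest.TestCase):
    def test_discounted_total_is_never_negative(self):
        # In a real suite this would call the deployed cart and pricing services.
        self.assertGreaterEqual(apply_discount(50.0, 100), 0.0)


def run_suite():
    """Load both test classes and run them, returning the unittest result."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite([
        loader.loadTestsFromTestCase(PricingUnitTest),
        loader.loadTestsFromTestCase(CheckoutIntegrationTest),
    ])
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

With this layout, unit tests run everywhere, while the integration class is skipped locally and executed in pipeline stages that export the variable.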

As someone who has worked in this space, my advice is: do not wait until all the components are finished. Start testing early by using mocks or stubs to simulate missing pieces. This enables faster feedback and reduces integration risk.

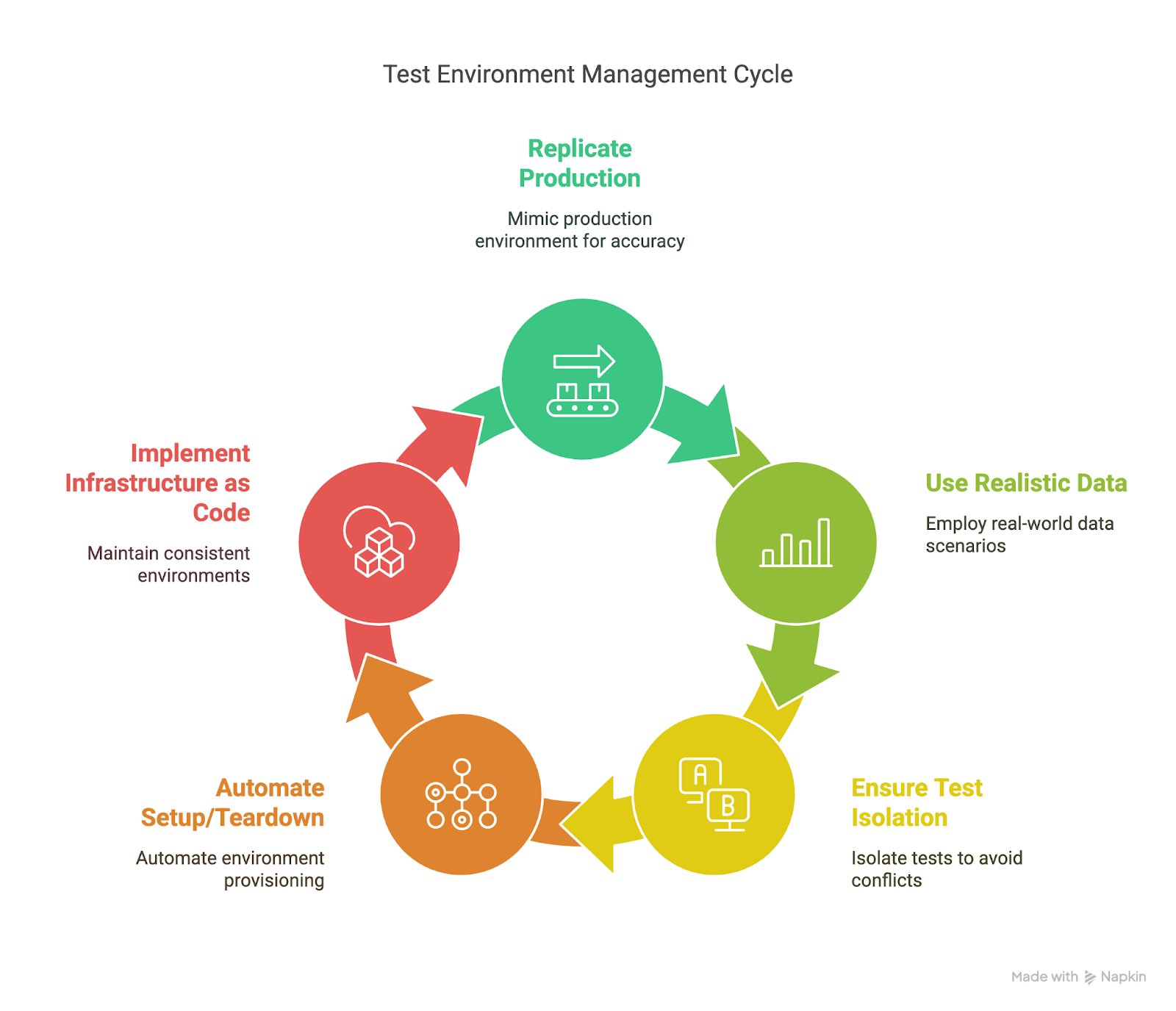

Environment and data management

An often-overlooked but crucial aspect of reliable integration testing is the quality of the environment and test data.

Unstable or inconsistent environments lead to false positives, flaky tests, and wasted debugging time.

Here are some key considerations:

- Replicate production as closely as possible: Test environments should mimic production in terms of OS, software versions, network configurations, and data flows.

- Use realistic test data: Test data should represent real-world scenarios, including edge cases, unusual values, and large datasets.

- Ensure test isolation: Tests should not share mutable data unless explicitly coordinated. Data isolation avoids conflicts and reduces flakiness.

- Automate environment setup and teardown: Use containerization (e.g., Docker), virtualization, or cloud-based environments to automate provisioning and cleanup.

- Use Infrastructure as Code (IaC): Tools like Terraform, Pulumi, or Ansible help maintain consistent environments across development, testing, and production.
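
Test isolation and automated setup and teardown can be sketched with a helper that gives every test its own freshly seeded database. This example assumes an in-memory SQLite database and a made-up `inventory` table; in a pytest suite the same idea would typically be written as a fixture.

```python
import sqlite3
from contextlib import contextmanager

SEED_ROWS = [("A-100", 5), ("B-200", 0)]  # hypothetical known starting data


@contextmanager
def isolated_inventory_db():
    """Setup: fresh in-memory database with seed data. Teardown: close it."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, stock INTEGER)")
    conn.executemany("INSERT INTO inventory VALUES (?, ?)", SEED_ROWS)
    conn.commit()
    try:
        yield conn
    finally:
        conn.close()


def stock_of(conn, sku):
    return conn.execute(
        "SELECT stock FROM inventory WHERE sku = ?", (sku,)
    ).fetchone()[0]


def test_reserving_stock_reduces_inventory():
    with isolated_inventory_db() as conn:
        conn.execute("UPDATE inventory SET stock = stock - 2 WHERE sku = 'A-100'")
        assert stock_of(conn, "A-100") == 3


def test_seed_data_is_untouched_by_other_tests():
    # Passes in any order because each test builds its own database.
    with isolated_inventory_db() as conn:
        assert stock_of(conn, "A-100") == 5
```

Because no test sees another test's writes, the suite gives the same result regardless of execution order.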

Image showcasing best practices for managing test environments and data in integration testing.

Mocking and service virtualization

Real-world systems often rely on external services, such as payment gateways, third-party APIs, and authentication providers, that may not always be available or controllable in a test environment.

This is where mocking and service virtualization become essential.

Mocks are lightweight simulations of real services. They return fixed responses and are usually used in unit tests or basic integration tests.

Service virtualization is a more advanced approach that emulates the actual behavior of complex services, including latency, errors, rate limits, and various response types.

Some of the benefits of mocking and service virtualization include:

- Decouple testing from availability of third-party services: Teams can continue testing even when external APIs are down or not yet implemented.

- Control test scenarios: Simulate edge cases and error conditions that may be difficult or unsafe to produce with real services.

- Improve test stability: Eliminate variables such as rate limits, slow responses, or unexpected updates in third-party systems.
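
As a rough illustration of controlling test scenarios, the sketch below uses `Mock` from Python's standard `unittest.mock` module to simulate an approval, a decline, and a timeout from a payment gateway. The `checkout` function and the gateway's `charge` method are hypothetical; a real gateway client will have a different interface.

```python
from unittest.mock import Mock


def checkout(gateway, amount_cents):
    """Hypothetical checkout step that talks to an external payment gateway."""
    try:
        response = gateway.charge(amount_cents)
    except TimeoutError:
        return {"status": "retry_later"}
    if response.get("approved"):
        return {"status": "paid", "reference": response["reference"]}
    return {"status": "declined"}


def test_checkout_reports_paid_on_approval():
    gateway = Mock()
    gateway.charge.return_value = {"approved": True, "reference": "ref-001"}
    assert checkout(gateway, 4400) == {"status": "paid", "reference": "ref-001"}
    gateway.charge.assert_called_once_with(4400)


def test_checkout_handles_gateway_timeout():
    gateway = Mock()
    # side_effect makes the mock raise, simulating a slow or unreachable service.
    gateway.charge.side_effect = TimeoutError("simulated slow gateway")
    assert checkout(gateway, 4400) == {"status": "retry_later"}


def test_checkout_handles_decline():
    gateway = Mock()
    gateway.charge.return_value = {"approved": False}
    assert checkout(gateway, 4400) == {"status": "declined"}
```

The timeout case is the kind of scenario that is hard to trigger on demand against a real service but trivial to reproduce with a mock.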

> If you are interested in advanced testing automation, learn how to automate machine learning testing in this DeepChecks Tutorial on DataCamp.

Cross-functional collaboration

Integration testing should not be siloed within QA or development teams.

Effective testing requires collaboration between multiple roles, which ensures that both business requirements and technical integrations are verified thoroughly.

Some of the key stakeholders in integration testing include:

- Developers: Ensure interfaces are implemented and consumed correctly.

- QA/Test Engineers: Design comprehensive test scenarios and validate outcomes.

- DevOps Engineers: Maintain consistent environments, integrate tests into CI/CD, and monitor test performance.

- Product Owners/Business Analysts: Validate that test cases align with real-world workflows and user expectations.

Effective collaboration involves several key practices.

Teams should conduct test case reviews with cross-functional participants before implementation to align expectations and uncover edge cases early.

Using a shared test management system like TestRail, Zephyr, or Xray promotes visibility and accountability across roles.

Collaborative tools such as Confluence and Jira help link user stories directly to integration tests, ensuring traceability.

Additionally, scheduling regular integration demos enables stakeholders to observe how components interact and whether they align with intended user flows.

> If you want to learn how DevOps tools streamline collaboration, take a look at this Azure DevOps Tutorial.

Tools and Technologies for Integration Testing

Selecting the right tool can greatly enhance your integration testing efforts.

Testsigma

Testsigma is a low-code, AI-powered testing platform ideal for teams aiming for faster development cycles. It supports:

- Cross-browser and mobile testing

- Integration with CI/CD tools

- Automated test creation in plain English

It is beginner-friendly and ideal for teams working on web and mobile apps with limited coding bandwidth.

Selenium and Citrus

Selenium is a well-known tool for browser-based automation, and while it is typically associated with UI testing, it also facilitates integration testing for web apps.

Citrus, on the other hand, excels in message-based testing, such as:

- REST and SOAP APIs

- JMS and Kafka messaging

- Mail servers

Together, they provide robust coverage for both web and backend systems.

Tricentis Tosca

Tricentis Tosca offers a model-based testing approach and is ideal for enterprises dealing with complex integrations, such as:

- SAP environments

- Mainframes

- APIs and microservices

Challenges and Mitigation Strategies in Integration Testing

Integration testing is critical for validating system behavior, but it comes with its own set of challenges.

Below are three common issues and how you can effectively address them:

Environment configuration inconsistencies

Differences between development, testing, and production environments can lead to hard-to-diagnose bugs that only appear after deployment.

To mitigate this, use containerization tools like Docker to create consistent, reproducible environments.

Infrastructure-as-code and configuration management tools like Terraform or Ansible can help automate setup across environments.

Incorporating CI/CD practices ensures that environment provisioning is consistent and version-controlled.

Flaky tests and data dependencies

Flaky tests can produce inconsistent results due to timing issues, shared state, or unstable data sources.

You can reduce this flakiness by isolating test data using fixtures or mocks, which ensures each test runs with a known and controlled dataset.

Reset scripts can restore the system to a clean state before each run, which enhances test repeatability.
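
For the timing-related side of flakiness, a complementary technique (not one of the mitigations listed above) is to poll for a condition with a deadline instead of sleeping for a fixed duration. The `wait_until` helper below is a hypothetical utility written for this example, and the background timer merely stands in for an asynchronous component.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()


def test_background_job_eventually_completes():
    finished = threading.Event()
    # Stand-in for an asynchronous component, such as a queue consumer.
    worker = threading.Timer(0.05, finished.set)
    worker.start()
    try:
        assert wait_until(finished.is_set, timeout=2.0)
    finally:
        worker.join()
```

The test returns as soon as the condition holds, so it stays fast on a quick machine and still tolerates a slow one up to the timeout.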

Third-party service reliability

External services can introduce latency or failures that disrupt test reliability.

To minimize this impact, use service virtualization or mock APIs to simulate these services during test runs.

You can also implement fallback logic in your application to handle service outages gracefully, both in testing and production environments.
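
Fallback logic of this kind might look like the sketch below, which returns the last known value, or a flat default, when an external call fails. `ShippingQuoteClient` and the shipping-rate scenario are assumptions made for illustration; the right fallback depends on what is acceptable for your application.

```python
class ShippingQuoteClient:
    """Hypothetical wrapper around an external shipping-rate service."""

    def __init__(self, fetch_quote, default_quote):
        self._fetch_quote = fetch_quote  # callable that hits the real service
        self._default_quote = default_quote
        self._last_good = {}

    def quote(self, postcode):
        try:
            value = self._fetch_quote(postcode)
        except (ConnectionError, TimeoutError):
            # Outage: prefer the last known value, else a flat default.
            return self._last_good.get(postcode, self._default_quote), "fallback"
        self._last_good[postcode] = value
        return value, "live"


def test_quote_falls_back_during_outage():
    calls = {"n": 0}

    def flaky_service(postcode):
        # Succeeds on the first call, then simulates an outage.
        calls["n"] += 1
        if calls["n"] > 1:
            raise ConnectionError("simulated outage")
        return 7.5

    client = ShippingQuoteClient(flaky_service, default_quote=9.99)
    assert client.quote("10001") == (7.5, "live")
    assert client.quote("10001") == (7.5, "fallback")   # cached value
    assert client.quote("94105") == (9.99, "fallback")  # no cache, flat default
```

Returning the source alongside the value lets tests, and production monitoring, tell a live answer from a degraded one.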

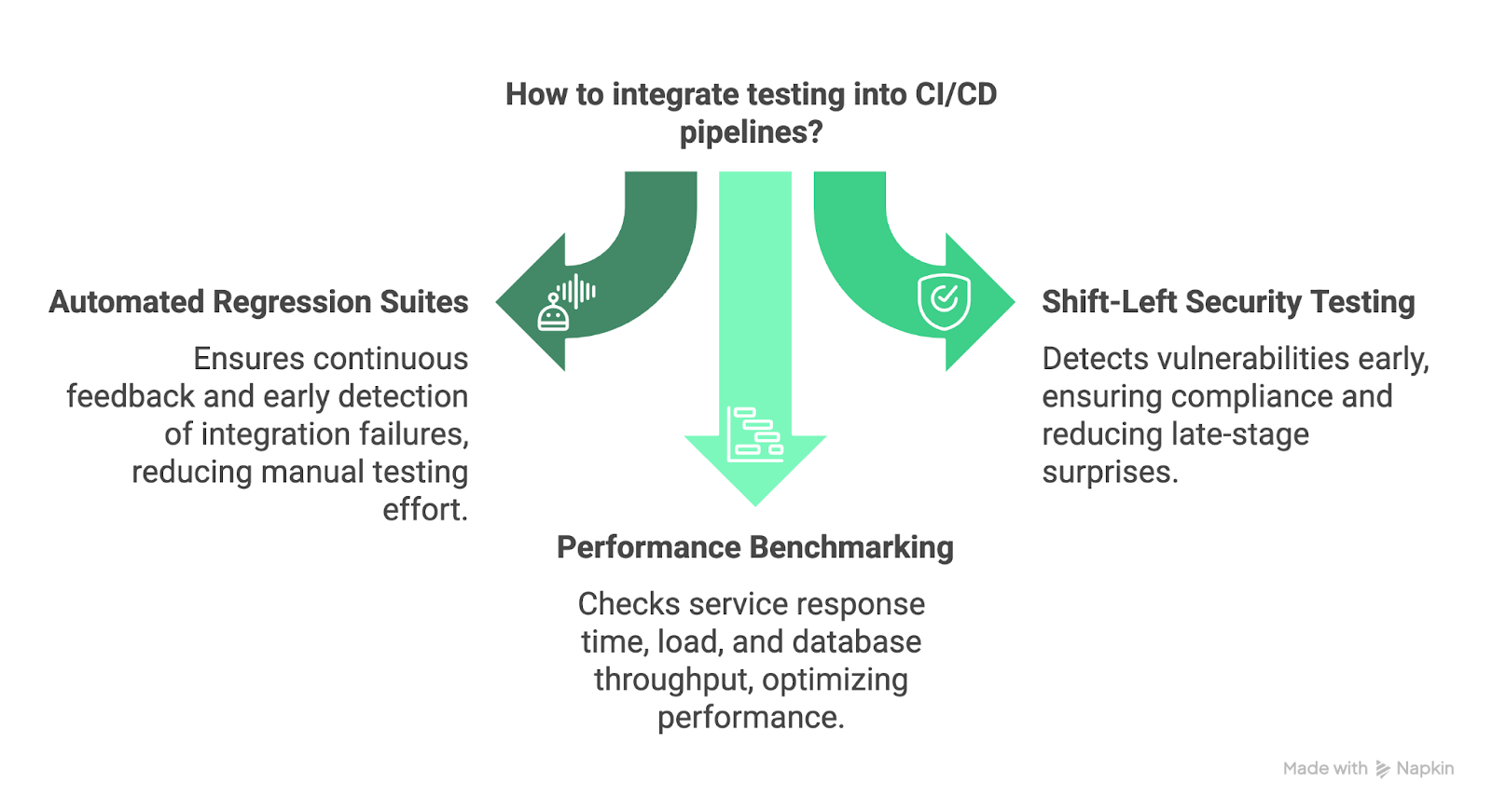

Integration Testing in CI/CD Pipelines

CI/CD has redefined how we build and release software. In this section, we will discuss how integration testing fits perfectly into this paradigm.

Image on how to include integration testing into CI/CD pipelines.

Automated regression suites

Automated integration tests should be part of your regression suite and executed with every build or merge.

Some of the benefits of this include:

- Continuous feedback

- Early detection of integration failures

- Reduced manual testing effort

Integrate tools like Jenkins, GitHub Actions, or GitLab CI/CD for seamless execution.

Shift-left security testing

Incorporating security tests within integration suites helps detect vulnerabilities early.

Examples include:

- Authentication/authorization checks

- Injection attack simulations

- API security tests using OWASP ZAP or Postman

Shifting security left ensures compliance and reduces late-stage surprises.
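
Authentication and authorization checks can be expressed as ordinary integration tests. The sketch below calls a small WSGI application in-process using helpers from Python's standard `wsgiref` package. The endpoint, the token table, and the `call` helper are all hypothetical and exist only to show the shape of such tests.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical credentials for a test environment.
TOKENS = {"admin-token": "admin", "viewer-token": "viewer"}


def admin_report_app(environ, start_response):
    """Hypothetical WSGI endpoint that only admins may read."""
    header = environ.get("HTTP_AUTHORIZATION", "")
    role = TOKENS.get(header[len("Bearer "):]) if header.startswith("Bearer ") else None
    if role is None:
        status, body = "401 Unauthorized", b"authentication required"
    elif role != "admin":
        status, body = "403 Forbidden", b"admin role required"
    else:
        status, body = "200 OK", b"report"
    start_response(status, [("Content-Type", "text/plain"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(app, headers=None):
    """Invoke a WSGI app in-process and return (status_code, body)."""
    environ = {}
    setup_testing_defaults(environ)
    environ.update(headers or {})
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        captured["status"] = int(status.split()[0])

    body = b"".join(app(environ, start_response))
    return captured["status"], body


def test_missing_or_unknown_credentials_are_rejected():
    assert call(admin_report_app)[0] == 401
    assert call(admin_report_app, {"HTTP_AUTHORIZATION": "Bearer nope"})[0] == 401


def test_authenticated_non_admin_is_forbidden():
    assert call(admin_report_app, {"HTTP_AUTHORIZATION": "Bearer viewer-token"})[0] == 403


def test_admin_can_read_report():
    headers = {"HTTP_AUTHORIZATION": "Bearer admin-token"}
    assert call(admin_report_app, headers) == (200, b"report")
```

Dedicated scanners such as OWASP ZAP go much further than this, but checks like these are cheap enough to run on every build.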

Performance benchmarking

Integration tests within CI/CD pipelines can also run performance checks:

- Response time of services

- Load and stress testing

- Database throughput and latency

Tools like JMeter, Locust, and k6 can benchmark performance as part of your pipeline.
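
Before reaching for a dedicated load-testing tool, a simple response-time check can live alongside other integration tests. The sketch below uses only the standard library; `lookup_order` is a stand-in for a real cross-service call, and the 200 ms budget is an arbitrary figure chosen for the example.

```python
import statistics
import time


def measure_latencies(operation, runs=50):
    """Call `operation` repeatedly and return each duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        durations.append((time.perf_counter() - start) * 1000)
    return durations


def p95(samples):
    """95th percentile: the last of the 19 cut points for n=20 quantiles."""
    return statistics.quantiles(samples, n=20)[-1]


def lookup_order():
    """Stand-in for a call that crosses a service boundary."""
    time.sleep(0.001)


def test_order_lookup_stays_within_latency_budget():
    budget_ms = 200  # hypothetical budget; tune to the service's real target
    latencies = measure_latencies(lookup_order, runs=20)
    assert p95(latencies) < budget_ms
```

Checking a percentile rather than the mean keeps a single slow outlier from hiding behind many fast calls, though shared CI runners can still add noise, so budgets usually need some headroom.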

> For a deep dive into applying CI/CD pipelines in a real-world setting, check out the CI/CD in Data Engineering tutorial.

Conclusion

As software systems grow more modular and interconnected, integration testing plays a critical role in making sure everything works together as intended.

You can minimize bugs, enhance system reliability, and accelerate delivery cycles with the right strategies in place.

To continue building your expertise in software testing, explore DataCamp’s Introduction to Testing in Python course, which lays a strong foundation in essential testing practices.

If you are aiming to integrate robust testing strategies into modern deployment pipelines, have a look at the CI/CD for Machine Learning course, which offers practical insights into automating workflows and maintaining high software quality.

FAQs

What is the main goal of integration testing?

The primary goal of integration testing is to verify that different modules or components of a software application work together as expected, which ensures smooth data flow and interface compatibility.

How does integration testing differ from unit testing?

While unit testing checks individual functions or methods in isolation, integration testing focuses on the interaction between modules to catch issues that occur when components are combined.

When should integration testing be performed in the development lifecycle?

Integration testing should start as early as possible, ideally right after unit testing, and continue throughout the development lifecycle to catch issues early and often.

What are the most common integration testing strategies?

The most common strategies include big-bang, top-down, bottom-up, and sandwich (hybrid) integration testing, each suitable for different system structures and testing goals.

How can I handle flaky tests in integration testing?

Flaky tests can be mitigated by stabilizing environments, isolating test data, mocking unreliable services, and improving test design to reduce dependency on timing and external factors.

Why is mocking or service virtualization important in integration testing?

Mocking and service virtualization allow teams to simulate unavailable or unstable external services, ensuring that integration tests can run reliably and continuously without real dependencies.

How does integration testing fit into a CI/CD pipeline?

Integration tests are incorporated into CI/CD pipelines as part of automated regression suites, enabling continuous feedback, early bug detection, and performance/security validation.

What are the biggest challenges in integration testing?

Common challenges include inconsistent test environments, managing test data, dependency on third-party services, and debugging failures across multiple integrated components.

Can integration testing improve security and performance?

Yes, by including security scans and performance benchmarks in integration test suites, teams can identify vulnerabilities and bottlenecks before deployment.