
The quality of your data directly impacts the accuracy of your analysis and model performance. Why? Because raw data often contains inconsistencies, errors, and irrelevant information that can distort results and lead to flawed insights. Data preprocessing mitigates this problem: it is the process of transforming raw data into a clean, structured format.

In this blog post, I will cover:

- What is data preprocessing?

- Steps in data preprocessing

- Techniques for data preprocessing with examples

- Tools for data preprocessing

- Best practices for data preprocessing

Let’s get into it!

What is Data Preprocessing?

Data preprocessing is a key aspect of data preparation. It refers to any processing applied to raw data to ready it for further analysis or processing tasks.

Traditionally, data preprocessing has been an essential preliminary step in data analysis. More recently, however, these techniques have been adapted for training machine learning and AI models and for making inferences with them.

Thus, data preprocessing may be defined as the process of converting raw data into a format that can be processed more efficiently and accurately in tasks such as:

- Data analysis

- Machine learning

- Data science

- AI


Steps in Data Preprocessing

Data preprocessing involves several steps, each addressing specific challenges related to data quality, structure, and relevance.

Let’s take a look at these key steps, which generally go in the following order:

Step 1: Data cleaning

Data cleaning is the process of identifying and correcting errors or inconsistencies in the data to ensure it is accurate and complete. The objective is to address issues that can distort analysis or model performance.

For example:

- Handling missing values: Using strategies like mean/mode imputation, deletion, or predictive models to fill in or remove missing data.

- Removing duplicates: Eliminating duplicate records to ensure each entry is unique and relevant.

- Correcting inconsistent formats: Standardizing formats (e.g., date formats, string cases) to maintain consistency.

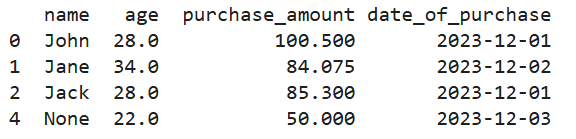

Here’s how it looks in Python:

```python
import pandas as pd
from sklearn.impute import SimpleImputer

# Creating a manual dataset
data = pd.DataFrame({
    'name': ['John', 'Jane', 'Jack', 'John', None],
    'age': [28, 34, None, 28, 22],
    'purchase_amount': [100.5, None, 85.3, 100.5, 50.0],
    'date_of_purchase': ['2023/12/01', '2023/12/02', '2023/12/01', '2023/12/01', '2023/12/03']
})

# Handling missing values using mean imputation for 'age' and 'purchase_amount'
imputer = SimpleImputer(strategy='mean')
data[['age', 'purchase_amount']] = imputer.fit_transform(data[['age', 'purchase_amount']])

# Removing duplicate rows
data = data.drop_duplicates()

# Correcting inconsistent date formats by converting the strings to datetime values
data['date_of_purchase'] = pd.to_datetime(data['date_of_purchase'], errors='coerce')

print(data)
```

Output of code above
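The snippet above standardizes dates only, but inconsistent string formats matter too, because they can hide duplicates from `drop_duplicates()`. Here is a minimal sketch on a hypothetical dataset (the names and cities are invented for illustration) that trims whitespace and normalizes letter case before deduplicating:

```python
import pandas as pd

# Hypothetical records: the same customer entered with different spacing and casing
data = pd.DataFrame({
    'name': ['John', ' john', 'JOHN ', 'Jane'],
    'city': ['Paris', 'paris', 'Paris', 'Rome'],
})

print(data.duplicated().sum())  # → 0 (no two rows match exactly yet)

# Standardize string formats: strip surrounding whitespace, then use title case
for col in ['name', 'city']:
    data[col] = data[col].str.strip().str.title()

deduplicated = data.drop_duplicates()
print(len(deduplicated))  # → 2
```

Title case is an arbitrary choice here. Any single convention works, as long as it is applied consistently before comparing records.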

Step 2: Data integration

Data integration involves combining data from multiple sources to create a unified dataset. This is often necessary when data is collected from different source systems.

Some techniques used in data integration include:

- Schema matching: Aligning fields and data structures from different sources to ensure consistency.

- Data deduplication: Identifying and removing duplicate entries across multiple datasets.

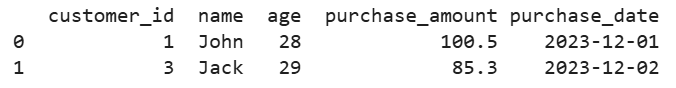

For example, let’s say we have customer data from multiple databases. Here’s how we would merge it into a single view:

```python
import pandas as pd

# Creating two manual datasets
data1 = pd.DataFrame({
    'customer_id': [1, 2, 3],
    'name': ['John', 'Jane', 'Jack'],
    'age': [28, 34, 29]
})

data2 = pd.DataFrame({
    'customer_id': [1, 3, 4],
    'purchase_amount': [100.5, 85.3, 45.0],
    'purchase_date': ['2023-12-01', '2023-12-02', '2023-12-03']
})

# Merging datasets on a common key 'customer_id'
merged_data = pd.merge(data1, data2, on='customer_id', how='inner')

print(merged_data)
```

Output of code above

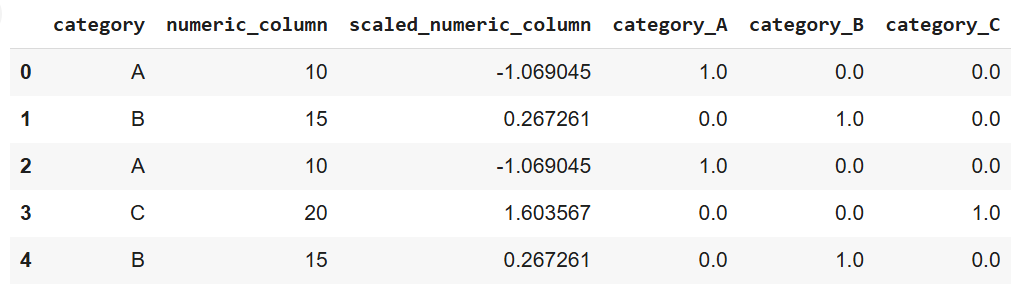
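The merge above assumes that both sources already share a `customer_id` column. When they don't, schema matching has to come first. Below is a minimal sketch with two hypothetical exports whose column names differ (`CustID`, `Name`, and `full_name` are illustrative, not taken from any real system). It renames the columns to a shared schema, stacks the sources, and then drops cross-source duplicates:

```python
import pandas as pd

# Hypothetical exports of customer records from two systems with different schemas
crm = pd.DataFrame({'customer_id': [1, 2], 'full_name': ['John', 'Jane']})
shop = pd.DataFrame({'CustID': [2, 3], 'Name': ['Jane', 'Jack']})

# Schema matching: map the second source's column names onto the first one's schema
shop = shop.rename(columns={'CustID': 'customer_id', 'Name': 'full_name'})

# Stack the aligned sources, then keep one record per customer_id
customers = (
    pd.concat([crm, shop], ignore_index=True)
    .drop_duplicates(subset='customer_id')
)
print(customers)
```

Also note that the `how='inner'` merge above keeps only the customers present in both tables (1 and 3). Using `how='outer'` would keep all four customers, with missing values wherever one source has no record.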

Step 3: Data transformation

Data transformation converts data into formats suitable for analysis, machine learning, or mining.

For example:

- Scaling and normalization: Adjusting numeric values to a common scale is often necessary for algorithms that rely on distance metrics.

- Encoding categorical variables: Converting categorical data into numerical values using one-hot or label encoding techniques.

- Feature engineering and extraction: Creating new features or selecting important ones to improve model performance.

Here’s how it looks in Python, using scikit-learn:

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler, OneHotEncoder

# Creating a manual dataset
data = pd.DataFrame({
    'category': ['A', 'B', 'A', 'C', 'B'],
    'numeric_column': [10, 15, 10, 20, 15]
})

# Scaling numeric data to mean 0 and standard deviation 1
scaler = StandardScaler()
data['scaled_numeric_column'] = scaler.fit_transform(data[['numeric_column']])

# Encoding categorical variables using one-hot encoding
# (sparse_output requires scikit-learn 1.2+; older versions use sparse=False)
encoder = OneHotEncoder(sparse_output=False)
encoded_data = pd.DataFrame(encoder.fit_transform(data[['category']]),
                            columns=encoder.get_feature_names_out(['category']))

# Concatenating the encoded data with the original dataset
data = pd.concat([data, encoded_data], axis=1)

print(data)
```

Output of code above

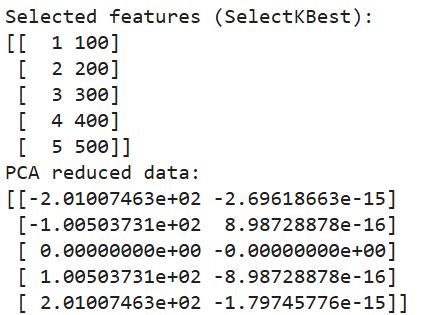
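The example above covers scaling and encoding but not the third bullet, feature engineering. Below is a minimal sketch on hypothetical purchase data (the `items` column is invented for illustration) that derives two new features from existing columns:

```python
import pandas as pd

# Hypothetical purchase records
data = pd.DataFrame({
    'purchase_date': pd.to_datetime(['2023-12-01', '2023-12-02', '2023-12-03']),
    'purchase_amount': [100.5, 85.3, 45.0],
    'items': [3, 2, 1],
})

# Feature engineering: derive new columns from existing ones
data['day_of_week'] = data['purchase_date'].dt.dayofweek  # Monday = 0, Sunday = 6
data['amount_per_item'] = data['purchase_amount'] / data['items']

print(data)
```

Whether such derived features actually help depends on the model and the problem, so it is worth checking them against a baseline.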

Step 4: Data reduction

Data reduction simplifies the dataset by reducing the number of features or records while preserving the essential information. This helps speed up analysis and model training, ideally with little or no loss of accuracy.

Techniques for data reduction include:

- Feature selection: Choosing the most important features contributing to the analysis or model's performance.

- Principal component analysis (PCA): A dimensionality reduction technique that transforms data into a lower-dimensional space.

- Sampling methods: Reducing the size of the dataset by selecting representative samples is useful for handling large datasets.

And here’s how we implement feature selection and dimensionality reduction in Python:

```python
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, chi2

# Creating a manual dataset
data = pd.DataFrame({
    'feature1': [10, 20, 30, 40, 50],
    'feature2': [1, 2, 3, 4, 5],
    'feature3': [100, 200, 300, 400, 500],
    'target': [0, 1, 0, 1, 0]
})

# Feature selection using SelectKBest (chi2 requires non-negative feature values)
selector = SelectKBest(chi2, k=2)
selected_features = selector.fit_transform(data[['feature1', 'feature2', 'feature3']], data['target'])

# Printing selected features
print("Selected features (SelectKBest):")
print(selected_features)

# Dimensionality reduction using PCA
pca = PCA(n_components=2)
pca_data = pca.fit_transform(data[['feature1', 'feature2', 'feature3']])

# Printing PCA results
print("PCA reduced data:")
print(pca_data)
```

Output of code above
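The snippet above does not cover the third technique, sampling. Below is a minimal pandas sketch on a hypothetical imbalanced dataset. It compares a simple random sample with a stratified one, which samples the same fraction within each class so that the class balance is preserved:

```python
import pandas as pd

# Hypothetical dataset: 1,000 records with an imbalanced binary target (800 vs. 200)
data = pd.DataFrame({
    'feature1': range(1000),
    'target': [0] * 800 + [1] * 200,
})

# Simple random sample: keep 10% of all rows
random_sample = data.sample(frac=0.1, random_state=42)

# Stratified sample: keep 10% of the rows within each target class
stratified_sample = data.groupby('target').sample(frac=0.1, random_state=42)

print(len(random_sample), len(stratified_sample))  # → 100 100
print(stratified_sample['target'].value_counts())
```

A random sample only preserves the 80/20 split approximately. The stratified sample contains exactly 80 and 20 rows from the two classes, which matters more as classes become rarer.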

Common Techniques for Data Preprocessing with Examples

We’ve established that preprocessing raw data is essential to ensure it is well-suited for analysis or machine learning models. We’ve also covered the steps involved with the process.

In this section, we will explore various techniques for handling common issues during the preprocessing phase. Additionally, we will explore data augmentation, a useful technique for creating synthetic data in specific contexts like image or text datasets.

Handling missing data

Missing data can negatively impact the performance of a machine learning model or analysis. There are several strategies to handle missing values effectively:

- Imputation: This technique involves filling in missing values with a calculated estimate, such as the mean, median, or mode of the available data. Advanced methods include predictive modeling, where missing values are predicted based on relationships within the data.

```python
# Note: assumes `data` is an existing DataFrame with a numeric column named 'column_with_missing'
from sklearn.impute import SimpleImputer

imputer = SimpleImputer(strategy='mean')  # Replace 'mean' with 'median' or 'most_frequent' if needed
# fit_transform returns a 2D array; ravel() flattens it back into a single column
data['column_with_missing'] = imputer.fit_transform(data[['column_with_missing']]).ravel()
```

- Deletion: Removing rows or columns with missing values is a straightforward solution. However, it should be used cautiously, as it can lead to the loss of valuable data, especially if many entries are missing.

```python
data.dropna(inplace=True)  # Removes rows with any missing values
```

- Modeling missing values: In cases where the missing data pattern is more complex, machine learning models can predict the missing values based on the rest of the dataset. This can improve accuracy by incorporating relationships between different variables.
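One simple way to model missing values is k-nearest-neighbors imputation, shown below with scikit-learn's `KNNImputer` on hypothetical data. This is one option among several; scikit-learn's experimental `IterativeImputer`, which predicts each feature from the others, is another. Each gap is filled using the rows that are most similar on the features that are present:

```python
import numpy as np
from sklearn.impute import KNNImputer

# Hypothetical numeric data: [age, purchase_amount], with one age missing
X = np.array([
    [25.0, 100.0],
    [27.0, 110.0],
    [np.nan, 105.0],
    [60.0, 500.0],
])

# Fill each gap with the mean of that feature across the 2 nearest rows,
# where distance is computed only on the features both rows have
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)

# Rows 0 and 1 have the closest purchase amounts, so the missing age becomes (25 + 27) / 2 = 26
print(X_filled[2])
```

Plain mean imputation would have used the average of all known ages (about 37.3), which the outlying 60-year-old pulls upward.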

Outlier detection and removal

Outliers are extreme values that deviate significantly from the rest of the data, which, like missing values, can distort analysis and model performance. Various techniques can be used to detect and handle outliers:

- Z-Score method: This approach measures how many standard deviations a data point is from the mean. Data points beyond a certain threshold (e.g., ±3 standard deviations) can be considered outliers.

```python
# Note: this is dummy code.
# It won't work unless `data` is a DataFrame with a numeric column named 'column'
from scipy import stats

z_scores = stats.zscore(data['column'])
outliers = abs(z_scores) > 3  # Identifying outliers
```

- Interquartile range (IQR): IQR is the range between the first quartile (Q1) and the third quartile (Q3). Values more than 1.5 times the IQR above Q3 or below Q1 are considered outliers.

```python
Q1 = data['column'].quantile(0.25)
Q3 = data['column'].quantile(0.75)
IQR = Q3 - Q1
outliers = (data['column'] < (Q1 - 1.5 * IQR)) | (data['column'] > (Q3 + 1.5 * IQR))
```

- Visual techniques: Visualization methods like box plots, scatter plots, or histograms can help detect outliers in a dataset. Once identified, outliers can either be removed or transformed, depending on their influence on the analysis.
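To see how the two rules behave, here is a runnable sketch on a small hypothetical column with one obvious extreme value. Note that `scipy.stats.zscore` uses the population standard deviation. Under that definition, no value in a sample of n points can have |z| larger than sqrt(n - 1), so a threshold of 3 cannot flag anything in a sample of 10 or fewer points. The IQR rule has no such limit:

```python
import pandas as pd
from scipy import stats

# Hypothetical toy data: one obvious extreme value (95) among small readings
data = pd.DataFrame({'column': [10, 12, 11, 13, 12, 11, 14, 95]})

# Z-score method (population standard deviation, ddof=0)
z_scores = stats.zscore(data['column'])
z_outliers = data.loc[abs(z_scores) > 3, 'column'].tolist()

# IQR method: flag values more than 1.5 * IQR outside the quartiles
Q1 = data['column'].quantile(0.25)
Q3 = data['column'].quantile(0.75)
IQR = Q3 - Q1
iqr_mask = (data['column'] < Q1 - 1.5 * IQR) | (data['column'] > Q3 + 1.5 * IQR)
iqr_outliers = data.loc[iqr_mask, 'column'].tolist()

# Removing the rows flagged by the IQR rule
cleaned = data[~iqr_mask]

print("Z-score outliers:", z_outliers)  # → Z-score outliers: []
print("IQR outliers:", iqr_outliers)    # → IQR outliers: [95]
print("Rows kept:", len(cleaned))       # → Rows kept: 7
```

Here the value 95 reaches a z-score of only about 2.64, just under the sqrt(7) ≈ 2.65 ceiling for eight points. The IQR rule flags it easily.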

Data encoding

When working with categorical data, encoding is necessary to convert categories into numerical representations that machine learning algorithms can process. Common encoding techniques include:

- One-hot encoding: As mentioned before, this method creates binary columns for each category.

```python
from sklearn.preprocessing import OneHotEncoder

encoder = OneHotEncoder(sparse_output=False)
encoded_data = encoder.fit_transform(data[['categorical_column']])
```

- Label encoding: Label encoding assigns a unique numerical value to each category. However, this method can introduce an unintended ordinal relationship between categories if they don’t have a natural order.

```python
from sklearn.preprocessing import LabelEncoder

# scikit-learn documents LabelEncoder for target labels (y); OrdinalEncoder is its counterpart for feature columns
le = LabelEncoder()
data['encoded_column'] = le.fit_transform(data['categorical_column'])
```

- Ordinal encoding: Ordinal encoding is used when categorical variables have an inherent order, like low, medium, and high. Each category is mapped to a corresponding integer value that reflects its ranking.

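```python
from sklearn.preprocessing import OrdinalEncoder

oe = OrdinalEncoder(categories=[['low', 'medium', 'high']])  # low -> 0, medium -> 1, high -> 2
# fit_transform returns a 2D array; ravel() flattens it back into a single column
data['ordinal_column'] = oe.fit_transform(data[['ordinal_column']]).ravel()
```

Data scaling and normalization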

Scaling and normalization ensure that numerical features are on a similar scale, which is particularly important for algorithms that rely on distance metrics (e.g., k-nearest neighbors, SVMs).
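Why does scale matter for distance-based algorithms? With raw units, the feature with the largest numeric range dominates the Euclidean distance. The sketch below uses hypothetical age and income values and a hand-written helper (`nearest_to_first` is illustrative, not a library function). It shows that the nearest neighbor changes once both features are min-max scaled:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical customers: [age in years, annual income in dollars]
X = np.array([
    [25, 50000],  # A
    [60, 50500],  # B: very different age, almost the same income
    [27, 52000],  # C: similar age, slightly different income
    [65, 80000],  # D
], dtype=float)

def nearest_to_first(points):
    # Euclidean distance from point A (row 0) to every other row
    dists = np.linalg.norm(points[1:] - points[0], axis=1)
    return "BCD"[int(np.argmin(dists))]

print("Nearest to A (raw):", nearest_to_first(X))  # → Nearest to A (raw): B

X_scaled = MinMaxScaler().fit_transform(X)
print("Nearest to A (min-max):", nearest_to_first(X_scaled))  # → Nearest to A (min-max): C
```

In raw units, a $1,500 income gap outweighs a 35-year age gap. After scaling, both features contribute on comparable terms.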

- Min-max scaling: This technique scales data to a specified range, typically 0 to 1. It's useful when all features need to have the same scale.

```python
from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()  # Default feature_range is (0, 1)
data['scaled_column'] = scaler.fit_transform(data[['numeric_column']]).ravel()
```

- Standardization (Z-score normalization): This method rescales data so that each feature has a mean of 0 and a standard deviation of 1, which helps models perform better when features are roughly normally distributed.

```python
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
data['standardized_column'] = scaler.fit_transform(data[['numeric_column']]).ravel()
```

Data augmentation

Data augmentation is a technique for artificially increasing the size of a dataset by creating new, synthetic examples. This is especially useful for image or text datasets in deep learning models, where large amounts of data are required for robust model performance.
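The core idea does not depend on any particular library. Below is a minimal NumPy sketch that assumes a hypothetical batch of small grayscale images stored as arrays with values in [0, 1]. It creates a flipped copy and a noise-perturbed copy of each image:

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical batch of 4 grayscale "images", each 8x8 pixels with values in [0, 1]
images = rng.random((4, 8, 8))

def augment(batch, noise_std=0.05):
    # Horizontal flip: reverse the pixel order along the width axis
    flipped = batch[:, :, ::-1]
    # Additive Gaussian noise, clipped back into the valid [0, 1] range
    noisy = np.clip(batch + rng.normal(0.0, noise_std, size=batch.shape), 0.0, 1.0)
    # Originals followed by two synthetic variants of every image
    return np.concatenate([batch, flipped, noisy], axis=0)

augmented = augment(images)
print(images.shape, "->", augmented.shape)  # → (4, 8, 8) -> (12, 8, 8)
```

Libraries like the ones below add more transformations and generate variants on the fly during training, instead of storing every copy in memory.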

- Image augmentation: Techniques like rotating, flipping, scaling, or adding noise to images help create variations that improve model generalization.

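```python
# Note: TensorFlow's docs mark ImageDataGenerator as not recommended for new code;
# Keras preprocessing layers (e.g., RandomFlip, RandomRotation) are the suggested replacement
from tensorflow.keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(
    rotation_range=40,        # Random rotations of up to 40 degrees
    width_shift_range=0.2,    # Random horizontal shifts (fraction of the width)
    height_shift_range=0.2,   # Random vertical shifts (fraction of the height)
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')      # How to fill pixels created by the transformations

# 'image_directory' is a placeholder; it should contain one subfolder per class
augmented_images = datagen.flow_from_directory('image_directory', target_size=(150, 150))
```

- Text augmentation: For text data, augmentation methods include synonym replacement, random insertion, and back-translation, where a sentence is translated into another language and then back into the original language, introducing variations.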

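```python
# Install nlpaug first (pip install nlpaug); see https://github.com/makcedward/nlpaug
# The WordNet-based augmenter also relies on NLTK's WordNet data being downloaded
import nlpaug.augmenter.word as naw

aug = naw.SynonymAug(aug_src='wordnet')
augmented_text = aug.augment("This is a sample text for augmentation.")
print(augmented_text)
```

Tools for Data Preprocessing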

While you can implement data preprocessing using pure Python code, powerful tools have been developed to handle various tasks and make the overall process more efficient. Here are a few examples:

Python libraries

There are quite a few specialized libraries for data preprocessing in Python. Here are 3 of the most popular:

- Pandas: Python's most commonly used library for data manipulation and cleaning. It provides flexible data structures, primarily DataFrame and Series, which enable you to handle and manipulate structured data efficiently. Pandas supports operations like handling missing data, merging datasets, filtering data, and reshaping.

import pandas as pd

# Load a sample dataset

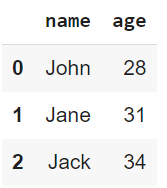

data = pd.DataFrame({

'name': ['John', 'Jane', 'Jack'],

'age': [28, 31, 34]

})

print(data)

Output of code above

- NumPy: A fundamental library for numerical computations. It supports large, multi-dimensional arrays and matrices and mathematical functions to operate on these arrays. NumPy is often the foundation for many higher-level data processing libraries, such as Pandas.

```python
import numpy as np

# Create an array and perform element-wise operations
array = np.array([1, 2, 3, 4])
squared_array = np.square(array)
print(squared_array)
```

Output of code above

- Scikit-learn: Widely used for machine learning tasks but also offers numerous preprocessing utilities, such as scaling, encoding, and data transformation. Its preprocessing module contains tools for handling categorical data, scaling numerical data, feature extraction, and more.

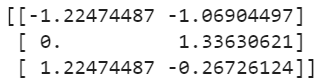

```python
from sklearn.preprocessing import StandardScaler

# Standardize data
data = [[10, 2], [15, 5], [20, 3]]
scaler = StandardScaler()
scaled_data = scaler.fit_transform(data)
print(scaled_data)
```

Output of code above

Cloud platforms

On-premises systems may not be able to handle large datasets effectively. In such situations, cloud platforms offer scalable, efficient solutions that enable you to process vast amounts of data across distributed systems.

Some cloud platform tools to consider include:

- AWS Glue: A fully managed ETL service by Amazon Web Services. It automatically discovers and organizes data and prepares it for analytics. Glue supports data cataloging and can connect to AWS services like S3 and Redshift.

- Azure Data Factory: A cloud-based data integration service from Microsoft. It supports building ETL and ELT pipelines for large-scale data. Azure Data Factory allows users to move data between various services, preprocess it using transformations, and orchestrate workflows using a visual interface.

Automation tools

Automating the repetitive steps of preprocessing data can save time and reduce errors – especially when dealing with machine learning models and large datasets. Here are some tools that offer built-in preprocessing pipelines:

- AutoML platforms: AutoML is short for Automated Machine Learning (and it means what it says on the tin). In other words, these platforms automate several stages of the machine learning workflow. Platforms like Google's AutoML, Microsoft's Azure AutoML, and H2O.ai's AutoML provide automated pipelines that handle tasks like feature selection, data transformation, and model selection with minimal user intervention.

- Preprocessing pipelines in scikit-learn: Scikit-learn provides the Pipeline class, which helps streamline and automate the preprocessing steps. It allows you to string together multiple preprocessing operations into a single, executable workflow, ensuring that preprocessing tasks are applied consistently:

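```python
import numpy as np
import pandas as pd
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler, OneHotEncoder
from sklearn.impute import SimpleImputer
from sklearn.compose import ColumnTransformer

# Example dataset with missing values in a numeric and a categorical column
data = pd.DataFrame({
    'age': [28, 34, np.nan, 22],
    'category': ['A', 'B', 'A', np.nan]
})

# Numeric columns: fill gaps with the mean, then standardize
numeric_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='mean')),
    ('scaler', StandardScaler())
])

# Categorical columns: fill gaps with the most frequent value, then one-hot encode
categorical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='most_frequent')),
    ('encoder', OneHotEncoder())
])

# Route each column to the matching pipeline
preprocessor = ColumnTransformer(transformers=[
    ('num', numeric_transformer, ['age']),
    ('cat', categorical_transformer, ['category'])
])

preprocessed_data = preprocessor.fit_transform(data)
print(preprocessed_data)
```

Best Practices for Data Preprocessing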

It’s essential to follow best practices to maximize the effectiveness of your preprocessing efforts. Below are some practices I’d encourage you to consider:

Understand the data

Before you dive into preprocessing, it’s important to understand the dataset thoroughly. Conduct exploratory data analysis (EDA) to identify the structure of the data at hand. Specifically, you want to understand:

- Key features

- Potential anomalies

- Relationships

Without first understanding the dataset’s characteristics, you are likely to apply inappropriate preprocessing methods, which can distort the data.
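A first EDA pass can be only a few pandas calls. Here is a minimal sketch on a hypothetical dataset, with one call for each item in the list above:

```python
import numpy as np
import pandas as pd

# Hypothetical dataset standing in for whatever you are about to preprocess
data = pd.DataFrame({
    'age': [28, 34, np.nan, 28, 95],
    'purchase_amount': [100.5, np.nan, 85.3, 100.5, 50.0],
    'category': ['A', 'B', 'A', 'A', 'C'],
})

# Structure: column types and non-null counts
data.info()

# Key features: summary statistics for the numeric columns
print(data.describe())

# Potential anomalies: missing values per column and fully duplicated rows
missing_per_column = data.isna().sum()
duplicate_rows = data.duplicated().sum()
print(missing_per_column)
print("Duplicate rows:", duplicate_rows)

# Relationships: pairwise correlation between numeric columns
print(data.corr(numeric_only=True))
```

The results then guide the preprocessing choices. For example, a single duplicate or a suspicious maximum age (95) is worth investigating before you decide how to impute or remove anything.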

Automate repetitive steps

Preprocessing often involves repetitive tasks. Automating these tasks by building pipelines ensures consistency and efficiency and reduces the odds of manual errors. To streamline workflows, leverage pipelines in tools like scikit-learn or cloud-based platforms.
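A pipeline also makes the steps repeatable on new data. You fit it once and then call `transform` on anything that arrives later, so the same imputation values and scaling parameters are applied every time. Here is a minimal sketch on a hypothetical single-feature array:

```python
import numpy as np
from sklearn.pipeline import Pipeline
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import StandardScaler

# Hypothetical single numeric feature: training data and later, unseen data
X_train = np.array([[10.0], [20.0], [np.nan], [30.0]])
X_new = np.array([[np.nan], [40.0]])

preprocess = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='mean')),
    ('scaler', StandardScaler()),
])

# Learn the imputation mean and scaling parameters from the training data only
X_train_prepped = preprocess.fit_transform(X_train)

# Apply the already-fitted steps to new data: no refitting, no re-coding by hand
X_new_prepped = preprocess.transform(X_new)
print(X_new_prepped)
```

Fitting only on training data also avoids leaking information from evaluation data into the preprocessing parameters. Here the missing value in `X_new` is filled with the training mean (20), not with a mean computed from the new data.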

Document preprocessing steps

Clear documentation helps achieve two objectives:

- Reproducibility

- Understanding (for yourself at a later date or for others in your team).

Every decision, transformation, or filtering step should be recorded, including its reasoning. This will significantly enhance collaboration among team members and help you pick up projects where you left off.

Iterative improvements

Data preprocessing is not a one-time task; it should be an iterative process. As models evolve and you get feedback on their performance, revisit and refine your preprocessing steps, because doing so can lead to better results. For instance, feature engineering may reveal new useful features, or tuning how outliers are handled may improve model accuracy.

Conclusion

Data preprocessing plays a critical role in the success of any data project. Proper preprocessing ensures that raw data is transformed into a clean, structured format, which helps models and analyses yield more accurate, meaningful insights.

In this article, I’ve shared various techniques to help implement data preprocessing. Still, the most important thing to note is that this process is not a one-time effort but an iterative one! Continuous refinement leads to improved model performance and better decision-making. A well-prepared dataset sets the foundation for any successful data or AI initiative.
