
Both Google AI and OpenAI have a history of releasing cutting-edge AI technologies. Yet the landscape has changed with the introduction of ChatGPT, which has ignited a new race where big tech companies like Google are desperately trying to launch similar AI models.

In this post, we will learn about OpenAI and Google AI's recent developments and what we can expect in the future. Moreover, we will also learn how advancement in AI is changing the field of data science and how we can use it to boost productivity.

OpenAI

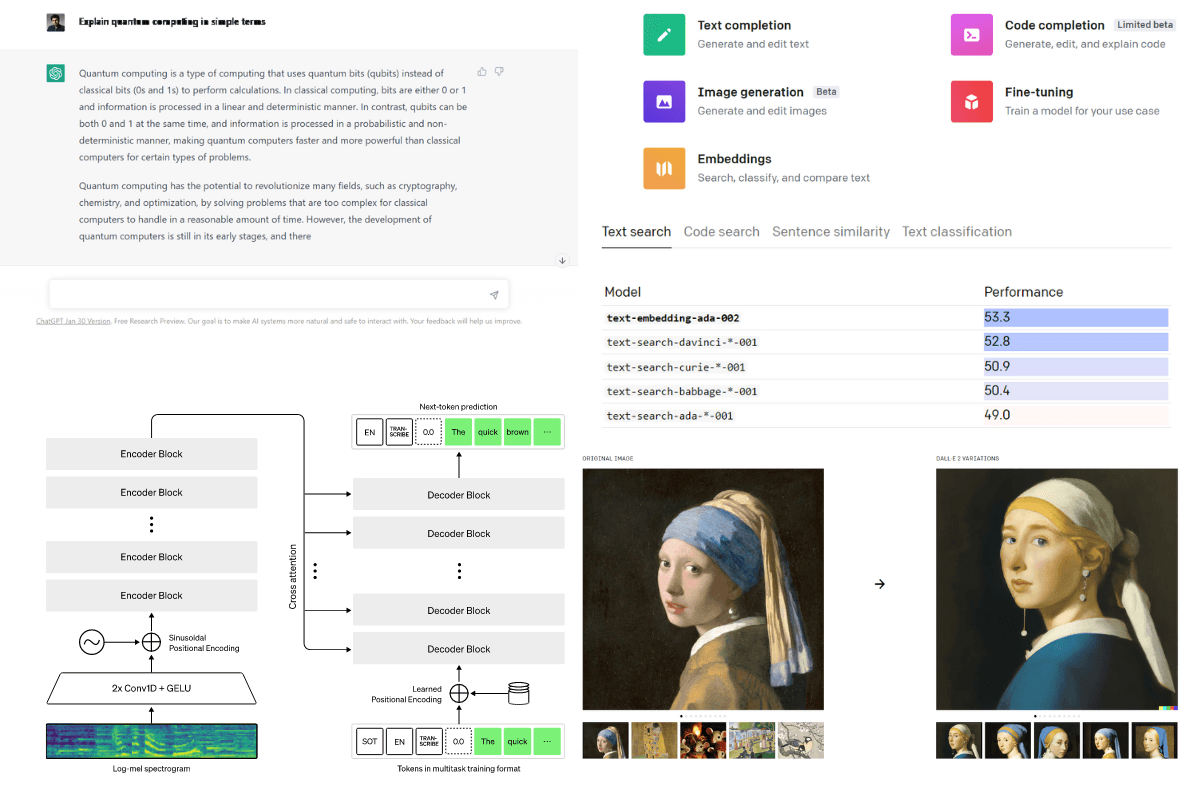

The OpenAI API is a platform that gives us access to cutting-edge generative models. We can generate high-quality images with DALL-E 2, generate text and code with GPT-3, and use embeddings for other language-related tasks. Furthermore, it allows you to moderate results, add rate limits, and fine-tune a model on a specific dataset. Learn about GPT-3 by reading our Beginner's Guide to GPT-3 blog.

Image by Author | Source OpenAI
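To make the API access described above more concrete, here is a minimal sketch that builds (but does not send) a request to the embeddings endpoint using only the Python standard library. The helper name `build_embedding_request`, the placeholder API key, and the model name `text-embedding-ada-002` are assumptions for illustration; check the current OpenAI API reference before relying on them.

```python
import json
import urllib.request

# Embeddings endpoint of the OpenAI REST API.
API_URL = "https://api.openai.com/v1/embeddings"


def build_embedding_request(text, api_key, model="text-embedding-ada-002"):
    """Build an HTTP POST request for one embedding (hypothetical helper).

    The default model name is an assumption and may be outdated.
    """
    payload = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_embedding_request("Data science is fun.", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
# Sending is left out on purpose: urllib.request.urlopen(request) would make
# a real, billable call and needs a valid API key.
```

Because the API is pay-as-you-go, keeping request construction separate from sending makes it easy to inspect or log what would be billed before any call is made.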

These are all commercial products that follow the pay-as-you-go model, but from time to time, OpenAI has released open-source tools and models, such as:

- Whisper: Speech recognition model using large-scale weak supervision.

- OpenAI Baselines: Implementation of reinforcement learning algorithms.

- Gym: A toolkit for the development and evaluation of reinforcement learning algorithms.

- GPT-2: Code and model implementation of the paper “Language Models are Unsupervised Multitask Learners”

- DALL-E: PyTorch package for the discrete VAE used for DALL·E

The APIs, toolkits, and large language models are great, but they don’t manage to come close to the success of ChatGPT.

The ChatGPT model was trained using Reinforcement Learning from Human Feedback (RLHF), similar to InstructGPT (a better version of GPT-3), but the data collection stage is slightly different.

How is it different from the previous generations of models? The conversational AI can ask follow-up questions, challenge incorrect premises, admit mistakes, and demonstrate safety mitigations.

Recently, OpenAI partner Microsoft has introduced an improved version of ChatGPT. With OpenAI’s iterative deployment, we can see a new wave of AI technologies that understand our needs and provide us with assistance.

If you are completely new to artificial intelligence, take a no-code AI Fundamentals course. It covers the basics of machine learning, like supervised and unsupervised learning, deep learning, and beyond.

GPT-4

Apart from ChatGPT, we are expecting GPT-4, which is expected to be the most advanced large language model. In the Greylock podcast, OpenAI CEO Sam Altman disclosed a little information about GPT-4. He said that the rumors about GPT-4 on Twitter are false, that it will not have 100 trillion parameters, and that people are begging to be disappointed. He also disclosed that "we will release the GPT-4 when we think it is safe and functional".

Image by Author

What can you expect in GPT-4?

- The model size won’t be much bigger than GPT-3.

- Optimized large models using new parameterization (μP).

- Model training will use optimal compute, by increasing the number of training tokens to reach minimal loss.

- It will be a text-only model. GPT-4 won’t be multimodal like DALL-E 2.

- It might use sparsity to reduce computing costs.

- Just like ChatGPT, it will be better aligned with our intentions and values.
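As a rough illustration of the "optimal compute" point in the list above, the sketch below applies two widely quoted rules of thumb from the scaling-law literature: about 20 training tokens per parameter, and about 6 floating-point operations per parameter per token. Both constants are assumptions brought in for illustration, not figures from OpenAI or from this post, and the 175-billion-parameter size is simply GPT-3's published size used as an example.

```python
# Rules of thumb (assumptions, not figures from the article):
TOKENS_PER_PARAM = 20          # roughly compute-optimal tokens per parameter
FLOPS_PER_PARAM_PER_TOKEN = 6  # common approximation for training cost


def compute_optimal_tokens(n_params):
    """Approximate number of training tokens for a model of a given size."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params, n_tokens):
    """Approximate total training compute in floating-point operations."""
    return FLOPS_PER_PARAM_PER_TOKEN * n_params * n_tokens


gpt3_sized = 175_000_000_000  # a GPT-3-sized model, used only as an example
tokens = compute_optimal_tokens(gpt3_sized)
print(f"tokens: {tokens:,}")                                    # tokens: 3,500,000,000,000
print(f"FLOPs:  {training_flops(gpt3_sized, tokens):.3e}")
```

Under these assumptions, a model of the same size as GPT-3 would be trained on trillions of tokens rather than hundreds of billions, which is why a model can improve without growing much bigger.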

Learn all about GPT-1, GPT-2, GPT-3, and GPT-4 by reading our Everything We Know About GPT-4 So Far post.

Creation of safe AGI

During the interview with StrictlyVC, Altman disclosed a few points on how far along we are in developing AGI (Artificial General Intelligence).

He said: “The closer we get, the harder it is to answer. Because I think it’s going to be much blurrier and much more of a gradual transition than people think.”

He also debunked the rumors about AGI. In the interview, he said OpenAI does not have AGI that can learn like humans.

Image by Author

OpenAI is on the right path to developing safe AGI, but it is far from perfect. The AGI people are talking about is a multimodal model that can understand speech, text, image, and video. It will be a combination of ChatGPT, DALL-E 2, Whisper, a video generation model, and other reinforcement learning algorithms.

Multimodal Models

To develop AGI, OpenAI needs to work on multimodal models: not just text-to-image, but also text-to-video, audio-to-video, and audio generation. It means you will be able to talk to a natural-sounding and natural-looking bot. A few developers have already achieved this, for example with the AI-generated Twitch streamer persona. It is not perfect, but it is a start.

During the StrictlyVC program, Sam Altman disclosed that they are working on a video model, and we can safely assume that it will be a text-to-video generation model with audio, adding another layer of complexity. We already have video generation technology in which developers overlap frames generated using the Stable Diffusion model.

Google AI

Google AI is the backbone of Google’s ecosystem. It is used in Google Maps, Photos, applications, and Cloud. Google has been at the forefront of developing AI tools and models. Most products are available on Google Cloud, from AutoML to state-of-the-art large vision and language models.

It has also released multiple tools and state-of-the-art models that have changed the space of natural language processing, speech processing, and computer vision. From TensorFlow to BERT (Bidirectional Encoder Representations from Transformers), Google has paved a new way for AI and machine learning research and development.

Gif from LaMDA

In 2020, Google Research introduced Meena, a neural conversational model that understands the context and learns to respond sensibly. After that, Google released the LaMDA model, which is similar to ChatGPT. It was a breakthrough conversational technology built using transformers but trained on dialogues.

Every year, we see new technology from Google, and in the future, we can expect an advanced Google search engine powered by Bard, along with language, vision, generative, and multimodal models that will make AI multipurpose.

Google AI Bard

After the launch of ChatGPT, people started spreading rumors of how it is the ‘Google killer.’ The extended partnership between Microsoft and OpenAI made that more likely. Microsoft is ready to take on Google in the search engine business with its OpenAI-infused Bing.

To counter that, Google launched its version of ChatGPT called Google AI Bard. Check out our comparison of ChatGPT and Google Bard in a separate article.

Gif from Bard and new AI features

In the recent Google AI updates, Sundar Pichai, the CEO of Google and Alphabet, introduced a new experimental conversational AI service called Bard that is powered by LaMDA. Google has announced that it has given access to trusted testers, and in the coming weeks, it will be available to the public.

Bard uses information from the web to provide high-quality responses. Unlike the ChatGPT model, Bard is a service that combines the world’s knowledge with the power, intelligence, and creativity of large language models. Initially, Google is releasing it with a lightweight version of LaMDA, and with time it will introduce more powerful language models.

Language, vision, and generative models

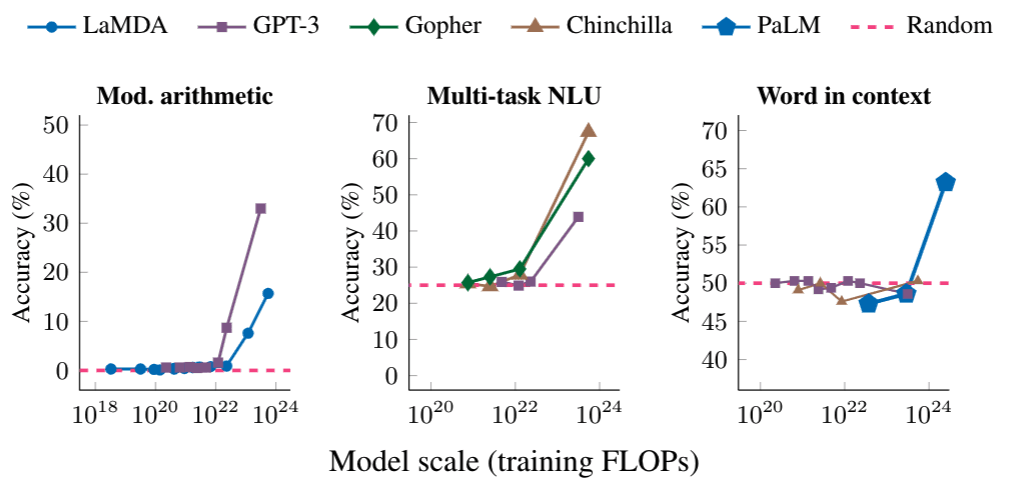

According to recent reports from Google Research, they have made advancements in language technologies, computer vision, and generative models.

Image from Google AI

- Large Language Models: Pathways Language Model (PaLM) and LaMDA have shown promising results, and in the future, you can expect even better language models that can be used for conversational AI and other Natural Language Processing tasks.

- Computer Vision: MaxViT (Multi-Axis Vision Transformer), Pix2Seq (Language Modeling Framework for Object Detection), and developments in 2D to 3D imaging using large-motion frame interpolation.

- Image Generation: Advanced photo-realistic image generation models: Imagen (diffusion model) and Parti (autoregressive transformer architecture). Both use text input to generate image pixels.

- Video Generation: Last year, Google AI worked on Imagen Video and Phenaki. Imagen Video uses cascaded diffusion models to generate high-resolution videos, whereas Phenaki uses open-domain textual descriptions to generate variable-length videos.

Start your Deep Learning (AI) career by taking our Deep Learning in Python skill track. The skill track consists of four courses that will take your machine learning skills to the next level.

Multimodal models

Most ML models focus on a single modality of data (text classification, image segmentation, or speech recognition). But due to DALL-E 2 and Stable Diffusion, companies are now focusing on multimodal models to achieve state-of-the-art results.

Google AI has achieved multimodality by passing the data through modality-specific processing layers and mixing the features from different modalities via the bottleneck layer. Combining modalities can also increase the performance of single-modality tasks.
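The idea of mixing modalities through a bottleneck can be sketched in a few lines of plain Python. This is a toy illustration under strong assumptions, not Google's implementation: there are no learned weights, attention is a bare dot-product version, and the function names (`self_attend`, `fusion_step`) are hypothetical. Each modality processes its own tokens together with a small set of shared bottleneck tokens, so information can only cross between modalities through that narrow channel.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attend(tokens):
    """Dot-product self-attention without learned weights (toy version)."""
    dim = len(tokens[0])
    mixed = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        mixed.append(
            [sum(w * tok[i] for w, tok in zip(weights, tokens)) for i in range(dim)]
        )
    return mixed


def fusion_step(video, audio, bottleneck):
    """One fusion step: modalities exchange information only via the bottleneck."""
    n_b = len(bottleneck)  # assumed to be at least 1
    # Modality-specific processing: each stream sees its own tokens
    # plus the shared bottleneck tokens, never the other stream directly.
    video_out = self_attend(video + bottleneck)
    audio_out = self_attend(audio + bottleneck)
    # Each stream proposes an update to the bottleneck; average the proposals.
    new_bottleneck = [
        [(v + a) / 2 for v, a in zip(v_tok, a_tok)]
        for v_tok, a_tok in zip(video_out[-n_b:], audio_out[-n_b:])
    ]
    return video_out[:-n_b], audio_out[:-n_b], new_bottleneck


rng = random.Random(0)


def random_tokens(count, dim=4):
    """Stand-in for real video or audio features."""
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(count)]


video, audio, bottleneck = random_tokens(6), random_tokens(3), random_tokens(2)
video, audio, bottleneck = fusion_step(video, audio, bottleneck)
print(len(video), len(audio), len(bottleneck))  # 6 3 2
```

Keeping the bottleneck small (two tokens here) forces each modality to condense what it shares, which is the intuition behind routing cross-modal information through a narrow layer instead of letting every token attend to every other token.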

Gif from Google AI

Here are some of the latest research developments from Google AI on multimodal model developments:

- Locked-image Tuning (LiT) adds language understanding to existing pre-trained image models.

- PaLI can perform many tasks in over 100 languages, such as visual question answering, image captioning (including captions in another language), object detection, image classification, and more.

- VDTTS (Visually-Driven Text-To-Speech) is a model that takes the text and the original video frames of the actor and generates speech output that matches the original video while maintaining the timing and emotion.

- Look and Talk is a multimodal technology that uses both video and audio as input to make the conversation with Google Assistant natural. The model learns several visual and audio cues to more accurately determine if the person is talking to Google Assistant or not.

- 4D-Net combines 3D point cloud data from autonomous vehicles with other sensory data to better understand the environment and objects, and make better decisions.

We can assume that in the future, you will see the adoption of these models in Google ecosystems to increase user engagement and develop new products.

AI and Data Science

Modern AI is driven by data science, algorithms, and data engineering. To create cutting-edge technology like ChatGPT and LaMDA, we must start from the basics: understand how to handle data, apply various natural language processing and reinforcement learning techniques, and learn about deep learning algorithms, transformers, and model optimization.

Start your data science career by completing the Data Scientist with Python career track. It will teach you essential skills for becoming a professional data scientist.

Image by Author

So, let’s address the elephant in the room. Will AI replace data scientists, analysts, or engineers? The simple answer is “NO.” Maybe in the far future, but even then, we will have different jobs that are more creative and focused on decision-making. With the development of AI, we will also grow.

GitHub Copilot, DALL-E 2, ChatGPT, and other cutting-edge technologies are here to assist us. They are here to make us productive and efficient.

Andrej Karpathy, previously Director of AI at Tesla and a researcher at OpenAI, says:

“Copilot has dramatically accelerated my coding, it's hard to imagine going back to "manual coding." Still learning to use it but it already writes ~80% of my code, ~80% accuracy. I don't even really code, I prompt. & edit.” - Twitter

It shows that many people who understand technology will use it to get better at programming, logic, data analysis, and decision-making.

How can data scientists use AI?

- Generating real-life data while maintaining user privacy.

- Writing elegant and efficient code.

- Prototyping the product.

- Performing better data analysis by using prompts.

- Generating complex SQL queries using natural language.

- Creating better reports for non-technical stakeholders.

- Performing complex statistical analysis with an explanation.

- Learning new programming languages, skills, frameworks, and concepts.

- Using AI-driven AutoML and model optimizing tools for building state-of-the-art ML solutions.

- Building automation scripts to save time and avoid mistakes.
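As one concrete example from the list above, generating SQL from natural language usually starts with a prompt that tells the model which tables exist. The helper below is a hypothetical sketch: the function name, the prompt wording, and the `orders` and `customers` tables are all assumptions for illustration. It only builds the prompt text, which you could then send to any language model you have access to.

```python
def build_sql_prompt(schema, question):
    """Build a text prompt asking a language model for a SQL query.

    schema maps table names to lists of column names (hypothetical tables).
    """
    lines = ["Write a SQL query for the question below.", "Tables:"]
    for table, columns in schema.items():
        lines.append(f"- {table}({', '.join(columns)})")
    lines.append(f"Question: {question}")
    lines.append("SQL:")
    return "\n".join(lines)


schema = {
    "orders": ["id", "customer_id", "total", "created_at"],
    "customers": ["id", "name", "country"],
}
print(build_sql_prompt(schema, "What is the total revenue per country?"))
```

Including the schema in the prompt gives the model the column names it needs, but the returned query should still be reviewed before it is run against real data, since models sometimes produce wrong answers.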

A New Era of Advancement in AI

Microsoft, in partnership with OpenAI, has integrated ChatGPT into the new Bing search engine, which it calls a copilot for the web. With this announcement, a new race to achieve tech supremacy has started.

Microsoft is planning to integrate ChatGPT and other GPT models into its ecosystem. It will change everything as users won’t even have to leave the application. They can perform searches, add content, edit the content, and come up with ideas by just chatting with a bot.

Bing Copilot Demo

At the Paris event, Google launched its version of ChatGPT called Bard. It is powered by a lightweight version of LaMDA. Bard is quite similar to Microsoft’s copilot for the web; it is also integrated into Google's search engine and offers similar features.

The Downsides of Advancement in AI

- According to OpenAI (openai.com), large language models like GPT can be used for disinformation campaigns in the future. They have the potential to cause both physical and emotional harm.

- Content generated using AI is SEO optimized and harder to detect. Students and professionals are using it to create original work without learning or understanding the concept. Read more about education-related risks and opportunities in the OpenAI API documentation.

- Copyright issues are real. These models were trained on text available online, and some of it is protected property. You cannot just steal someone else's work and justify it as advancement in AI.

- Sometimes, AI produces factually wrong answers. This could cause huge problems in the future if these models are deployed in law enforcement.

These issues will need to be resolved, and we will likely see new legislation regulating the use of AI. Schools and content giants have already started implementing AI-generated content policies.

So, what can we do about it? We can start learning about these new technologies and start using them ethically. You can start learning AI by enrolling in our Machine Learning Scientist with Python career track. The career track will teach you the essential machine-learning skills for landing a job as an AI engineer.