Track

Snowflake is a cloud-based data platform that addresses the fundamental challenges of modern data management. Launched in 2014, it provides organizations with a centralized solution for storing and processing large-scale data operations.

Traditional data management systems often present organizations with significant limitations. These systems typically require companies to choose between query performance, concurrent user access, and cost-effectiveness. Snowflake’s architecture was developed to eliminate these constraints through its approach to data storage and computation.

This guide examines Snowflake’s architectural framework and operational mechanisms. While the platform incorporates complex technologies, this explanation will focus on making these concepts accessible to readers with a basic understanding of data systems.

The guide will address:

- The fundamental principles of Snowflake’s data storage system

- The architectural components that form its infrastructure

- The platform’s approach to concurrent data access

- The technical advantages that drive its adoption

This analysis will provide you with a foundational understanding of how Snowflake functions within modern data infrastructure.

For readers new to Snowflake, the Introduction to Snowflake for Beginners provides essential background knowledge.

Core Concepts of Snowflake Architecture

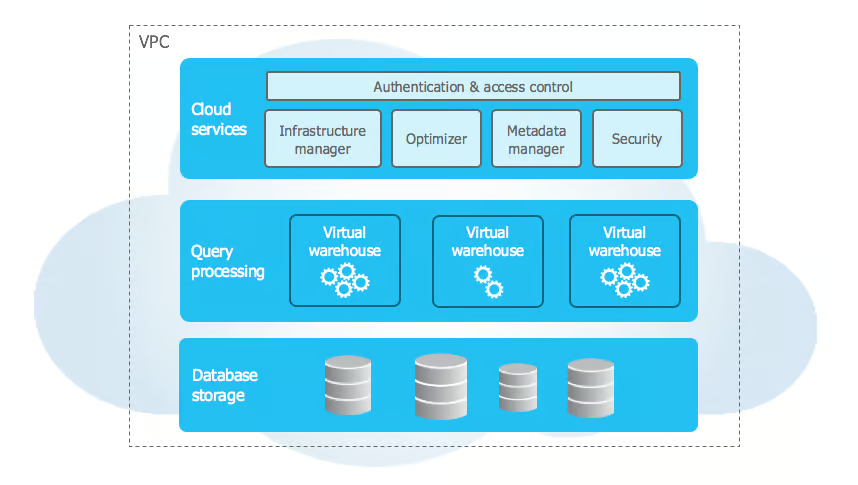

Snowflake’s architecture differs from traditional data warehouse designs by using modern cloud principles to solve scalability and performance challenges. The architecture implements a multi-layered approach that divides storage, compute, and services into separate but connected components.

Snowflake architecture layers

Source: Snowflake documentation

Snowflake’s architecture uses a unique three-layer design that separates core functionalities while maintaining seamless integration. Let’s examine each layer in detail:

1. Storage layer

Snowflake’s storage layer relies on cloud object storage (Amazon S3, Azure Blob Storage, or Google Cloud Storage) and organizes data into immutable micro-partitions (50–500MB) in a compressed columnar format. These micro-partitions store metadata like min/max column values, enabling efficient query pruning.

This layer is self-optimizing, requiring no manual maintenance. It intelligently selects the best compression algorithm per column based on data type and patterns, ensuring high compression ratios and fast analytical queries by reading only necessary columns.

2. Compute layer

The compute layer consists of virtual warehouses—independent MPP clusters that execute SQL queries and DML operations. Each warehouse runs multiple nodes in parallel, operating in complete isolation to prevent performance interference.

These stateless resources can be started, stopped, resized, or cloned without affecting data. Resizing redistributes workloads automatically, while the auto-suspension feature pauses inactive warehouses and resumes them within seconds when needed.

3. Services layer

The services layer orchestrates Snowflake’s operations, managing a distributed metadata store that tracks tables, views, security policies, and queries. The query optimizer leverages this metadata to generate efficient execution plans based on data distribution, compute resources, and access patterns.

It ensures ACID compliance with advanced concurrency control while handling authentication via SSO, MFA, and role-based access control across all levels. This layer also manages session handling and security enforcement.

How the layers work together

The interaction between Snowflake’s layers enables powerful capabilities like secure data sharing, multi-cluster computing, and dynamic scalability. Data sharing is metadata-driven—only pointers are exchanged, while access is controlled with fine-grained security policies. Consumers can query shared data using their own compute resources without duplication.

Multi-cluster computing allows independent compute clusters to access the same storage layer while maintaining separate caches and ensuring consistency. Workloads can be isolated by dedicating warehouses to ETL, BI, or data science. Snowflake’s architecture also supports dynamic scalability, allowing storage and compute to scale independently while the services layer optimizes resource allocation and query performance.

For a deeper dive into these concepts and more, you can explore the Introduction to Snowflake course.

Snowflake’s Data Warehouse Architecture

Snowflake’s data warehouse architecture builds on its three-layer design to provide flexible data modeling and efficient query processing. It supports structured and semi-structured data while optimizing performance and simplifying management.

Data model

Snowflake accommodates structured data using relational database concepts, supporting SQL data types, constraints, and relationships through primary and foreign keys. Sensitive information benefits from column-level encryption. For semi-structured data, Snowflake natively handles JSON, XML, Parquet, and Avro using the VARIANT data type, automatically inferring schemas and enabling efficient querying with specialized functions like FLATTEN and PARSE_JSON.

At the storage level, data is automatically divided into 50–500MB micro-partitions, each storing metadata like min/max column values and null frequencies. Snowflake tracks natural clustering and reorganizes data periodically to improve query efficiency, eliminating the need for manual partition management.

Query processing architecture

Snowflake processes queries through multiple coordinated layers that optimize execution. The query optimizer transforms SQL queries into logical execution plans and evaluates multiple physical plans based on table size, indexing, and caching. Using a cost model, it selects the most efficient approach, determining join algorithms, sorting methods, and data movement strategies.

The execution engine distributes queries across parallel processing nodes. By leveraging metadata, it prunes unnecessary partitions and reads only relevant columns in Snowflake’s columnar storage format, improving efficiency. A 24-hour results cache further enhances performance by reusing previously computed query results when underlying data remains unchanged.

Snowflake’s architecture enables efficient data access patterns. Zero-copy cloning allows instant table duplication without copying data, while time travel preserves historical versions for point-in-time queries. Multi-version concurrency control (MVCC) ensures transaction consistency, allowing high-concurrency workloads without locking conflicts. These optimizations, combined with intelligent caching and partition pruning, enable Snowflake to deliver scalable, high-performance analytics with minimal manual intervention.

Advanced Snowflake Features

Snowflake offers advanced features across resource management, security, integration, and monitoring to enhance enterprise capabilities.

Resource management

Its dynamic resource management includes warehouse scheduling and auto-scaling, automatically pausing inactive warehouses and adjusting compute resources based on workload demands. Administrators can set automated start/stop schedules and leverage detailed resource metrics to optimize costs and performance.

Query governance

The query governance features provide precise control over resource consumption, including dynamic limits, intelligent queuing, and custom query routing, ensuring efficient workload management.

Enterprise Integration

For enterprise integration, Snowflake supports stored procedures in JavaScript and Java, allowing developers to implement complex business logic beyond SQL.

Version control enables easy rollback, while error handling ensures smooth execution with detailed logging. The platform’s secure data exchange framework allows organizations to share and monetize datasets via private data marketplaces.

Companies can control access, track usage, and implement automated billing, creating new revenue opportunities while maintaining compliance and security.

These features collectively strengthen Snowflake’s position as a comprehensive data platform, offering scalability, automation, and security. With intelligent workload management, seamless integrations, and robust governance tools, organizations can optimize performance, reduce costs, and securely collaborate on data assets.

Architecture Comparisons

Let’s compare Snowflake’s architecture with traditional data warehouses and modern competitors to understand its unique value proposition.

Traditional data warehouses

Key architectural differences:

- Traditional systems tightly couple storage and compute

- Physical data partitioning requires manual maintenance

- Hardware capacity planning needed for peak workloads

- Limited ability to handle semi-structured data

Cost model comparison:

- Traditional requires upfront hardware investment

- Capacity must account for future growth

- Maintenance costs include hardware and facilities

- License costs typically based on processor cores

Performance characteristics:

- Limited by physical hardware constraints

- Concurrent user scaling requires hardware upgrades

- Query performance degrades with user count

- Resource contention between workloads

Modern competitors

1. Amazon Redshift

- Architecture: Cluster-based; manual vacuum operations; uses zone maps

- Cost: Node-based hourly pricing; limited to AWS ecosystem

2. Google BigQuery

- Architecture: Serverless with slot-based pricing; automatic query caching (24-hour retention)

- Cost: Pay-per-byte scanned; limited compute control

3. Azure Synapse

- Architecture: Hybrid (serverless & dedicated pools); complex resource management; supports both rowstore and columnstore

- Cost: DTU or vCore-based pricing; integration with Azure services

Additional performance considerations:

1. Query optimization

- BigQuery: Automatic optimization, limited user control

- Redshift: Manual vacuum and analyze operations

- Synapse: Requires statistics updates and indexing

2. Concurrency handling

- BigQuery: Slot allocation system

- Redshift: Queue-based workload management

- Synapse: Resource class assignments

3. Storage architecture

- BigQuery: Columnar storage with automatic sharding

- Redshift: Node-based distribution with slice management

- Synapse: Rowstore and columnstore options

4. Cost structure variations

- BigQuery: Pay per byte scanned

- Redshift: Node-based hourly pricing

- Synapse: DTU or vCore-based pricing

5. Integration capabilities

- BigQuery: Native GCP service integration

- Redshift: AWS ecosystem integration

- Synapse: Azure platform services

These architectural differences impact:

- Operational complexity

- Resource management

- Cost predictability

- Performance optimization

- Ecosystem integration

For a structured approach to mastering these concepts, refer to the comprehensive Snowflake learning guide.

Here is a table summarizing these differences:

| Aspect | BigQuery | Redshift | Synapse | Snowflake |

|---|---|---|---|---|

| Architecture | Serverless | Cluster-based | Hybrid (serverless & dedicated) | Multi-cluster, shared data |

| Storage | Columnar with auto-sharding | Node-based distribution | Rowstore & columnstore | Micro-partitioned columnar |

| Query Optimization | Automatic, limited control | Manual vacuum/analyze | Manual indexing & stats | Automatic optimization |

| Concurrency | Slot-based allocation | Queue-based WLM | Resource classes | Virtual warehouse scaling |

| Pricing Model | Per byte scanned | Node-based hourly | DTU/vCore-based | Per-second warehouse usage |

| Integration | GCP native services | AWS ecosystem | Azure platform | Multi-cloud support |

| Resource Management | Automated | Manual node management | Complex pool management | Automated with manual control |

| Data Type Support | Strong semi-structured | Limited semi-structured | Limited semi-structured | Native semi-structured |

| Maintenance | Minimal | Regular vacuum required | Index maintenance needed | Zero maintenance |

| Caching | 24-hour automatic | User-managed | Limited built-in | Automatic result caching |

Conclusion

Snowflake has changed how companies work with data in the cloud by making it easier and more efficient than older systems. The way Snowflake is built allows companies to store and process their data separately, which helps them save money while still getting fast results. Thanks to Snowflake's strong security features, companies can feel confident that their data is secure. The system works smoothly across different cloud providers, giving businesses more flexibility in where they keep their data.

For anyone wanting to learn more about Snowflake, there are many helpful resources and training materials available on DataCamp.

- Snowflake Tutorial For Beginners: From Architecture to Running Databases

- Introduction to Data Modeling in Snowflake Course

- How to Learn Snowflake in 2025: A Complete Guide

The platform continues to grow with new features like artificial intelligence capabilities that make it even more powerful for businesses. Companies that use Snowflake can adapt quickly as their data needs change over time. The future looks bright for Snowflake as more organizations choose it as their main platform for managing data.

Snowflake Architecture FAQs

What makes Snowflake's architecture different from traditional data warehouses?

Snowflake uses a unique three-layer architecture that separates storage, compute, and services. Unlike traditional systems that tightly couple these components, Snowflake's design enables independent scaling, better resource management, and improved cost efficiency.

How does Snowflake handle concurrent user access?

Snowflake manages concurrent access through virtual warehouses - independent compute clusters that can be scaled up or down as needed. Multiple warehouses can access the same data simultaneously without performance degradation, while the services layer ensures data consistency.

What is the medallion architecture in Snowflake?

The medallion architecture is a data organization pattern with three layers: Bronze (raw data), Silver (standardized data), and Gold (business-ready data). This structure enables progressive data refinement while maintaining data lineage and quality control.

How does Snowflake's storage system work?

Snowflake uses micro-partitions (50-500MB chunks) stored in a columnar format with automatic optimization. The system maintains metadata about each partition's content, enabling efficient query processing through partition pruning and intelligent caching.

How does Snowflake compare to other cloud data warehouses?

Snowflake differs from competitors like Redshift, BigQuery, and Synapse through its multi-cloud support, automatic optimization features, and unique pricing model based on per-second warehouse usage. Each platform has distinct approaches to resource management and query processing.

I am a data science content creator with over 2 years of experience and one of the largest followings on Medium. I like to write detailed articles on AI and ML with a bit of a sarcastıc style because you've got to do something to make them a bit less dull. I have produced over 130 articles and a DataCamp course to boot, with another one in the makıng. My content has been seen by over 5 million pairs of eyes, 20k of whom became followers on both Medium and LinkedIn.