As natural language processing (NLP) continues to grow rapidly, large language models (LLMs) have become powerful tools that can handle an increasing number of tasks. However, adapting these general-purpose models to highly specialized areas, such as medical literature or software documentation, remains an ongoing challenge.

This is where Retrieval-Augmented Fine-Tuning (RAFT) comes in. RAFT is a new technique that brings together Retrieval-Augmented Generation (RAG), a method that pairs LLMs with external data sources, and fine-tuning. The resulting model not only learns domain-specific knowledge but also learns how to read retrieved external context accurately and use it to perform tasks.

In this article, we'll explore the inner workings of RAFT, look at its advantages, and see how this cutting-edge technique could revolutionize how we approach domain-specific NLP tasks. To get started, check out some resources on RAG, such as our introduction to RAG and practical applications of RAG.

What is Retrieval-Augmented Fine-Tuning (RAFT)?

Retrieval-Augmented Fine-Tuning (RAFT) is an approach to language models that combines the benefits of RAG and fine-tuning. This technique tailors language models to specific domains by enhancing the models' ability to comprehend and utilize domain-specific knowledge while ensuring robustness against inaccurate retrievals.

RAFT is specifically designed to address the challenges of adapting LLMs to specialized domains. In these contexts, general knowledge reasoning becomes less critical, and the primary focus shifts towards maximizing accuracy with respect to a predefined set of domain-specific documents.

Understanding RAG vs Fine-Tuning

What is RAG?

Retrieval-Augmented Generation (RAG) is a technique that enhances language models by integrating a retrieval module that sources relevant information from external knowledge bases.

This retrieval module fetches relevant documents based on the input query. The language model then uses this additional context to generate the final output.

Operating under a 'retrieve-and-read' paradigm, RAG has proven highly effective in various NLP tasks, including language modeling and open-domain question answering.
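
The retrieve-and-read flow can be sketched in a few lines of Python. This is a toy illustration, not a production retriever: the word-overlap scoring, the function names (`retrieve`, `build_prompt`), and the three-sentence corpus are all assumptions made for the example. A real pipeline would typically use embeddings and a vector store instead.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Toy relevance score: number of query tokens found in the document."""
    doc_tokens = set(tokenize(document))
    return sum(1 for token in tokenize(query) if token in doc_tokens)


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Retrieve step: return the k documents with the highest overlap score."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Read step: place the retrieved documents in front of the question."""
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(docs, start=1))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical mini knowledge base.
corpus = [
    "Milvus is a vector database used for similarity search.",
    "Fine-tuning further trains a pre-trained model on task-specific data.",
    "RAG retrieves documents and passes them to a language model as context.",
]
query = "What does RAG pass to the language model?"
top_docs = retrieve(query, corpus, k=2)
prompt = build_prompt(query, top_docs)
print(prompt)
```

The prompt string is what would be sent to the language model; the model itself is left out so the sketch stays self-contained.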

However, the language models used in these pipelines haven't been trained to work with domain-specific documents and instead only have general-domain knowledge from pre-training.

As noted in the original paper, existing in-context retrieval methods are equivalent to taking an open-book exam without knowing which documents are most relevant for answering the question.

To learn more about RAG, check out how RAG can be used with GPT and Milvus to perform question answering.

What is fine-tuning?

Fine-tuning is a widely adopted approach for adapting pre-trained LLMs to downstream tasks. This process involves further training the model on task-specific data, enabling it to learn patterns and align with the desired output format.

Fine-tuning has proven successful in various NLP applications, such as summarization, question answering, and dialogue generation. However, traditional fine-tuning methods may struggle to leverage external domain-specific knowledge or handle imperfect retrievals during inference.

Continuing the exam analogy, fine-tuning is like memorizing documents and answering questions without referencing them during the exam. The problem with this approach is that fine-tuning can be costly, and the fine-tuned knowledge may become outdated. Additionally, a fine-tuned model cannot take new or updated documents into account at inference time the way RAG-based methods can.

To learn more about fine-tuning, here’s an introductory guide to fine-tuning LLMs.

Why not both? Combining RAG and Fine-Tuning with RAFT

Intuition behind RAFT

RAFT recognizes the limitations of existing approaches and aims to combine the strengths of RAG and fine-tuning. By incorporating domain-specific documents during the fine-tuning process, RAFT enables the model to learn patterns specific to the target domain while also enhancing its ability to understand and utilize external context effectively.

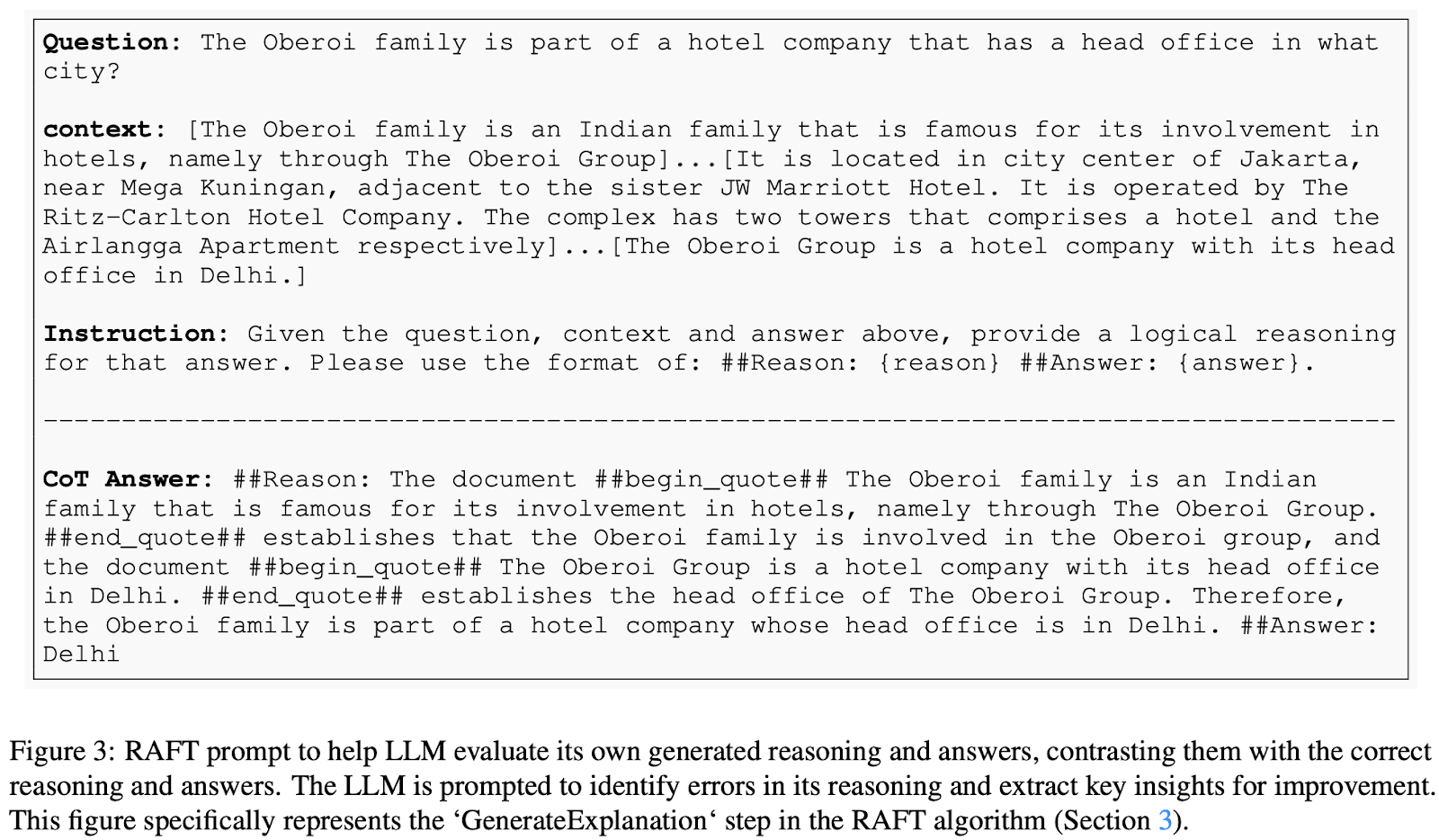

At a high level, in RAFT, the training data consists of questions, documents (both relevant and irrelevant), and corresponding chain-of-thought-style answers generated from the relevant documents. The model is trained to answer questions based on these provided documents, including distractor documents that do not contain relevant information. This approach teaches the model to identify and prioritize relevant information while disregarding irrelevant content.

Key Components of RAFT

Let's dive deeper into the mechanics of how Retrieval-Augmented Fine-Tuning works. RAFT proposes a novel method to prepare fine-tuning data to train in-domain RAG answering models. In RAFT, each data point in the training dataset consists of:

- A question (Q)

- A set of documents (Dk), which are broken down into two types:

  - “Oracle” documents: documents that contain the answer to the question. There can be multiple oracle documents for each question.

  - “Distractor” documents: documents that do not contain relevant information for answering the question.

- A chain-of-thought style answer (A*): An answer generated from the oracle documents that includes a detailed reasoning process.

In the RAFT fine-tuning training dataset, each question is paired with a set of documents, some containing the answers and some not, along with a chain-of-thought style answer. This structure is particularly useful for training the model to distinguish between useful and irrelevant information when deriving answers.
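
One way to picture a single training data point is as a small record type. The sketch below is only an illustration: the class name `RaftExample`, its field names, and the sample content are hypothetical and are not taken from the paper's code.

```python
from dataclasses import dataclass


@dataclass
class RaftExample:
    """One RAFT-style training data point (hypothetical field names)."""

    question: str               # Q
    oracle_docs: list[str]      # documents that contain the answer
    distractor_docs: list[str]  # documents without relevant information
    cot_answer: str             # A*: reasoning plus final answer

    def documents(self) -> list[str]:
        """All documents shown to the model for this question (Dk)."""
        return self.oracle_docs + self.distractor_docs


# Made-up content, for illustration only.
example = RaftExample(
    question="Which library function loads a pretrained model?",
    oracle_docs=["The load_model function loads a pretrained model by name."],
    distractor_docs=[
        "The save_report function writes metrics to disk.",
        "The plot_curve function draws a training curve.",
    ],
    cot_answer=(
        "The documentation states that load_model loads a pretrained model "
        "by name, so the answer is load_model."
    ),
)
print(len(example.documents()), "documents for:", example.question)
```

Keeping oracle and distractor documents in separate fields makes it easy to decide, per question, whether the oracle documents are included in the context at all.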

To further enhance the model's learning, the RAFT training dataset includes a mix of question types:

- Questions with both oracle and distractor documents: A fraction 𝑃 of the questions include both oracle and distractor documents. This helps the model learn to identify and prioritize relevant information.

- Questions with only distractor documents: The remaining 1−𝑃 fraction of the questions have only distractor documents. This mimics traditional fine-tuning, training the model to handle questions without relying on external documents.
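
The mix described above can be sketched as a sampling step applied to each question. The helper name `build_context`, the choice to keep the context size fixed when the oracle document is dropped, and the sample documents are assumptions made for this sketch; the values `p=0.8` and four distractors are used purely as illustrative settings.

```python
import random


def build_context(oracle_doc, distractor_pool, p, num_distractors, rng):
    """Return (documents, has_oracle) for one training question.

    With probability p the oracle document is kept alongside the distractors;
    otherwise the context contains distractor documents only.
    """
    has_oracle = rng.random() < p
    if has_oracle:
        docs = [oracle_doc] + rng.sample(distractor_pool, num_distractors)
    else:
        # Assumption: keep the context size constant by adding one more distractor.
        docs = rng.sample(distractor_pool, num_distractors + 1)
    rng.shuffle(docs)  # avoid teaching the model that the oracle comes first
    return docs, has_oracle


rng = random.Random(0)  # fixed seed so the sketch is reproducible
oracle = "Doc A: contains the answer."
pool = [f"Doc {name}: unrelated text." for name in "BCDEFG"]

contexts = [
    build_context(oracle, pool, p=0.8, num_distractors=4, rng=rng)
    for _ in range(1000)
]
oracle_share = sum(has_oracle for _, has_oracle in contexts) / len(contexts)
print(f"Share of contexts containing the oracle document: {oracle_share:.2f}")
```

Over many questions, the share of contexts that contain the oracle document approaches 𝑃.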

Finally, the chain-of-thought style answers incorporate segments from the oracle documents and a detailed reasoning process. This approach enhances the model’s accuracy in answering questions by teaching it to form a reasoning chain using relevant segments from the original context.

Image Source: RAFT: Adapting Language Model to Domain Specific RAG

Fine-tuning process

Once the training data is prepared, the fine-tuning process involves:

- Training the model: Using supervised learning, the model is trained to generate chain-of-thought style answers from the provided context, which includes questions and sets of documents.

- Objective: The goal is for the model to learn to identify and prioritize relevant information from the oracle documents while disregarding the distractor documents.
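
In supervised fine-tuning setups it is common to compute the loss only on the answer tokens, so that the model learns to produce the chain-of-thought answer rather than to reproduce the question and documents. The article does not say whether RAFT masks the prompt this way, so treat the sketch below as an assumption. The token IDs are made up, and `-100` is used because it is the default `ignore_index` of PyTorch's cross-entropy loss.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss


def build_sft_example(
    prompt_ids: list[int], answer_ids: list[int]
) -> tuple[list[int], list[int]]:
    """Concatenate prompt and answer tokens, masking the prompt in the labels.

    prompt_ids: tokens for the question plus the oracle/distractor documents.
    answer_ids: tokens for the chain-of-thought style answer.
    """
    input_ids = prompt_ids + answer_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + answer_ids
    return input_ids, labels


# Made-up token IDs standing in for a tokenized prompt and answer.
prompt_ids = [11, 12, 13, 14]
answer_ids = [21, 22, 2]
input_ids, labels = build_sft_example(prompt_ids, answer_ids)
print(input_ids)
print(labels)
```

Positions labeled with `IGNORE_INDEX` contribute nothing to the loss, so gradients come only from the answer tokens.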

Inference

During the inference stage, our fine-tuned model will be presented with a question and the top-K documents retrieved by the RAG pipeline. Note that the retriever module operates independently of RAFT.
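
Because the retriever is independent of RAFT, inference code can treat it as a pluggable function. The sketch below assumes a retriever is any callable that maps a question and `k` to a list of documents. The names `build_inference_prompt` and `keyword_retriever`, the prompt layout, and the tiny corpus are hypothetical.

```python
from typing import Callable

# A retriever is any callable mapping (question, k) to a list of documents.
Retriever = Callable[[str, int], list[str]]


def build_inference_prompt(question: str, retriever: Retriever, k: int = 5) -> str:
    """Fetch the top-k documents and format them in front of the question."""
    docs = retriever(question, k)
    context = "\n".join(f"Document {i}: {doc}" for i, doc in enumerate(docs, start=1))
    return f"{context}\n\nQuestion: {question}\nAnswer:"


def keyword_retriever(question: str, k: int) -> list[str]:
    """Stand-in retriever: ranks a tiny hard-coded corpus by shared words."""
    corpus = [
        "torch.hub.load loads a model from a GitHub repository.",
        "PubMedQA contains biomedical research questions.",
        "HotpotQA is a multi-hop question answering dataset.",
    ]
    words = set(question.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc: len(words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


prompt = build_inference_prompt(
    "Which call loads a model from GitHub?", keyword_retriever, k=2
)
print(prompt)
```

Swapping `keyword_retriever` for a different retriever requires no change to the prompt-building code or to the fine-tuned model.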

Results from RAFT

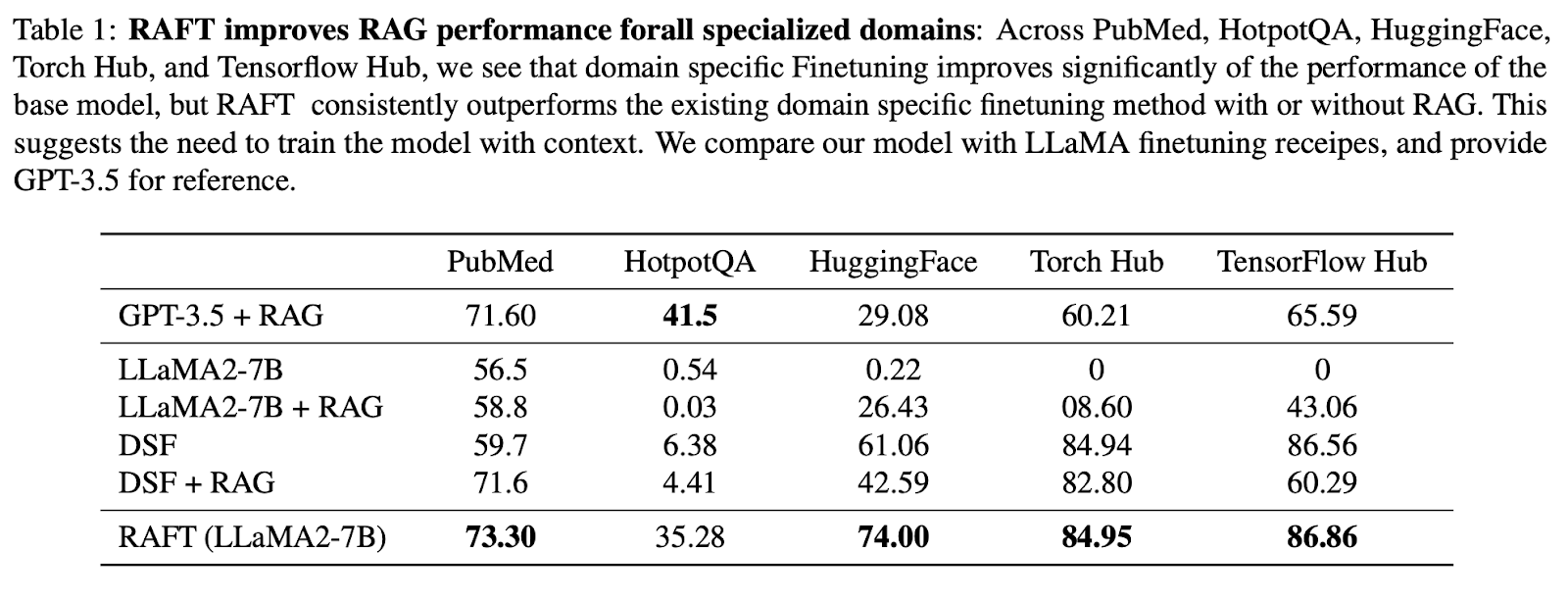

To demonstrate that RAFT excels at extracting relevant information from in-domain documents and answering questions, the authors of the original paper compared the RAFT approach to a general-purpose model with RAG and to domain-specific fine-tuned models. Specifically, they fine-tuned Llama-2 using the RAFT method to create the RAFT 7B model, and compared it to the following:

- Llama-2 with 0-shot prompting

- Llama-2 with RAG

- Domain-specific Fine-tuning (DSF) Llama-2 (a standard supervised fine-tuning process)

- DSF + RAG (Domain-specific fine-tuned Llama-2 with RAG)

- GPT-3.5 + RAG

These models were evaluated on three types of datasets to assess the performance of RAFT across a range of domains:

- General knowledge datasets: Natural Questions, TriviaQA, and HotpotQA, focusing on common knowledge question answering.

- Software documentation (APIs): HuggingFace, Torch Hub, and TensorFlow Hub, focusing on generating correct executable API calls based on documentation.

- Domain-specific datasets: PubMedQA, focusing on biomedical and biological research questions.

Overall, the results show that RAFT consistently outperformed the supervised fine-tuning method, both with and without RAG, across PubMedQA, HotpotQA, and the API datasets.

RAFT significantly outperformed domain-specific fine-tuning (DSF). Interestingly, adding RAG to DSF did not lead to better performance and, in fact, made it worse.

This indicates that DSF lacks the capability to extract relevant information from provided documents effectively. By utilizing RAFT, we train the model to both process documents accurately and provide an appropriate answering style.

The RAFT approach even outperformed GPT-3.5 with RAG, which is a much larger language model, demonstrating the effectiveness of RAFT.

Image source: RAFT: Adapting Language Model to Domain Specific RAG

Ablation studies

In addition to the main experiments, the original paper also conducted several ablation studies to understand the impact of various components on the performance of RAFT:

- Effect of Chain-of-Thought: The results show that in most instances, RAFT with Chain-of-Thought (CoT) outperformed RAFT without it.

- Proportion of Oracle Documents: What is the optimal proportion of oracle documents to include in the training data? The ablation study reveals that the optimal percentage of training instances that should include the oracle documents varies across datasets, with 80% achieving consistently strong performance across datasets.

- Number of Distractor Documents: The ablation study found that training with one oracle document and four distractor documents strikes the best balance: the model learns to prioritize and select relevant information without being overwhelmed by irrelevant documents.

How to experiment with RAFT

To implement RAFT, researchers and practitioners can follow the steps outlined in the paper. This includes generating chain-of-thought-style answers from relevant documents, incorporating distractor documents during training, and fine-tuning the model using supervised learning techniques. The authors have also open-sourced the code and provided a demo to facilitate further experimentation and adoption of RAFT.

Learn More About RAG and Fine-Tuning

RAFT represents a significant advancement in the field of domain-specific language modeling, offering a powerful solution for adapting LLMs to specialized domains. By combining the strengths of RAG and fine-tuning, RAFT equips language models with the ability to effectively leverage domain-specific knowledge while maintaining robustness against retrieval inaccuracies.

As the demand for domain-specific language models continues to grow, techniques like RAFT will play a crucial role in enabling more accurate and reliable NLP applications across various industries and domains.

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.