Large language models (LLMs) keep growing in complexity and size, and deploying them poses significant challenges.

LLM distillation emerges as a powerful solution to this problem, enabling the transfer of knowledge from a larger, more complex language model (the "teacher") to a smaller, more efficient version (the "student").

A recent example in the AI world is the distillation of GPT-4o mini (student) from GPT-4o (teacher).

This process can be likened to a teacher imparting wisdom to a student, where the goal is to distill essential knowledge without the cumbersome baggage of the larger model's complexity. Let’s find out more!

What Is LLM Distillation?

LLM distillation is a technique that seeks to replicate the performance of a large language model while reducing its size and computational demands.

Imagine a seasoned professor sharing their expertise with a new student. The professor, representing the teacher model, conveys complex concepts and insights, while the student model learns to mimic these teachings in a more simplified and efficient manner.

This process not only retains the core competencies of the teacher but also optimizes the student for faster and more versatile applications.

Why Is LLM Distillation Important?

The increasing size and computational requirements of large language models hinder their widespread adoption and deployment. The need for high-performance hardware and rising energy consumption often limit the accessibility of these models, particularly in resource-constrained environments such as mobile devices or edge computing platforms.

LLM distillation addresses these challenges by producing smaller and faster models, making them ideal for integration across a broader range of devices and platforms.

This innovation not only democratizes access to advanced AI but also supports real-time applications where speed and efficiency are highly valued. By enabling more accessible and scalable AI solutions, LLM distillation helps advance the practical implementation of AI technologies.

How LLM Distillation Works: The Knowledge Transfer Process

The LLM distillation process involves several techniques that ensure the student model retains key information while operating more efficiently. Here, we explore the key mechanisms that make this knowledge transfer effective.

Teacher-student paradigm

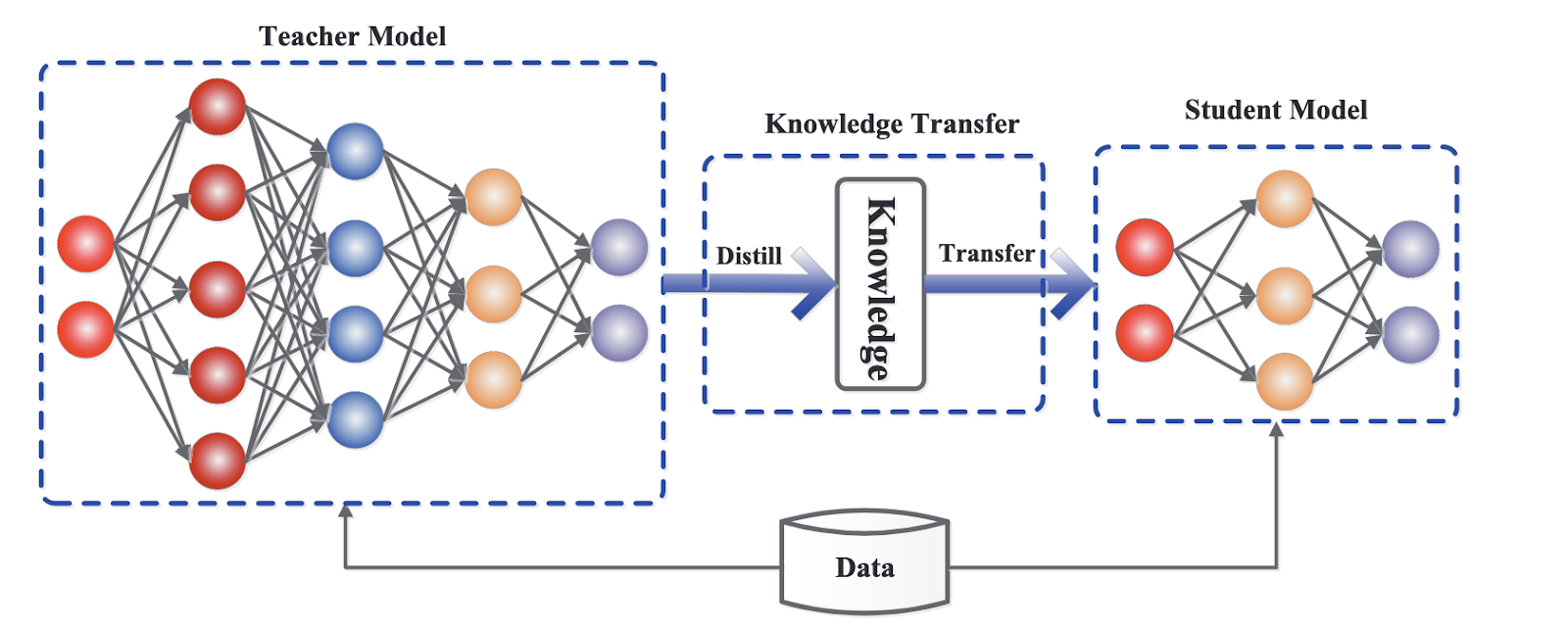

The teacher-student paradigm is the foundational concept behind LLM distillation and drives the knowledge transfer process. In this setup, a larger, more advanced model imparts its knowledge to a smaller, more lightweight model.

The teacher model, often a state-of-the-art language model with extensive training and computational resources, serves as a rich source of information. On the other hand, the student is designed to learn from the teacher by mimicking its behavior and internalizing its knowledge.

The student model's primary task is to replicate the teacher's outputs while maintaining a much smaller size and reduced computational requirements. This process involves the student observing and learning from the teacher's predictions, adjustments, and responses to various inputs.

By doing so, the student can achieve a comparable level of performance and understanding, making it suitable for deployment in resource-constrained environments.

Distillation techniques

Various distillation techniques are employed to transfer knowledge from the teacher to the student. These methods ensure that the student model not only learns efficiently but also retains the essential knowledge and capabilities of the teacher model. Here are some of the most prominent techniques used in LLM distillation.

Knowledge distillation (KD)

One of the best-known techniques in LLM distillation is knowledge distillation (KD). In KD, the student model is trained using the teacher model's output probabilities, known as soft targets, alongside the ground truth labels, referred to as hard targets.

Soft targets provide a nuanced view of the teacher's predictions, offering a probability distribution over possible outputs rather than a single correct answer. This additional information helps the student model capture the subtle patterns and intricate knowledge encoded in the teacher's responses.

By using soft targets, the student model can better understand the teacher's decision-making process, leading to more accurate and reliable performance. This approach not only preserves the critical knowledge from the teacher but also enables a smoother and more effective training process for the student.
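To make the difference between soft and hard targets concrete, here is a minimal sketch in plain Python. The logits and the three-token vocabulary are made up for illustration, and the `temperature` argument is an assumption borrowed from the usual KD setup rather than something defined above.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits into probabilities, softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical teacher logits for three candidate tokens: "cat", "dog", "car".
teacher_logits = [4.0, 2.5, 0.5]

hard_target = [1.0, 0.0, 0.0]  # ground truth: only "cat" counts as correct
soft_target = softmax(teacher_logits, temperature=1.0)
softer_target = softmax(teacher_logits, temperature=4.0)

print([round(p, 3) for p in soft_target])
print([round(p, 3) for p in softer_target])
```

The hard target says only that "cat" is right. The soft targets also tell the student that "dog" is a more plausible alternative than "car", and raising the temperature makes that relative ranking more visible.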

Figure: Generic framework for knowledge distillation.

Other distillation techniques

Beyond KD, several other techniques can improve the LLM distillation process:

- Data augmentation: This involves generating additional training data using the teacher model. By creating a larger and more varied dataset, the student can be exposed to a broader range of scenarios and examples, improving its generalization performance.

- Intermediate layer distillation: Instead of focusing solely on the final outputs, this method transfers knowledge from the intermediate layers of the teacher model to the student. By learning from these intermediate representations, the student can capture more detailed and structured information, leading to better overall performance.

- Multi-teacher distillation: A student model can benefit from learning from multiple teacher models. By aggregating knowledge from various teachers, the student can achieve a more comprehensive understanding and improved robustness, as it integrates different perspectives and insights.
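As an illustration of the last item, one simple way to aggregate several teachers is a weighted average of their output distributions, which then serves as the student's soft target. This is a sketch under that assumption, not the only aggregation scheme; the function name `average_teachers` and the example distributions are hypothetical.

```python
def average_teachers(teacher_distributions, weights=None):
    """Combine several teachers' probability distributions into one soft target."""
    n = len(teacher_distributions)
    if weights is None:
        weights = [1.0 / n] * n  # default: trust every teacher equally
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    vocab_size = len(teacher_distributions[0])
    return [
        sum(w * dist[i] for w, dist in zip(weights, teacher_distributions))
        for i in range(vocab_size)
    ]

# Two hypothetical teachers scoring the same three candidate tokens.
teacher_a = [0.7, 0.2, 0.1]
teacher_b = [0.5, 0.4, 0.1]

combined = average_teachers([teacher_a, teacher_b])
print([round(p, 3) for p in combined])
```

Unequal `weights` let you favor a teacher that is stronger on the target domain.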

Benefits of LLM Distillation

LLM distillation offers a range of considerable benefits that improve the usability and efficiency of language models, making them more practical for diverse applications.

Here, we explore some of the key advantages.

Reduced model size

One of the primary benefits of LLM distillation is the creation of noticeably smaller models. By transferring knowledge from a large teacher model to a smaller student model, the resulting student retains much of the teacher's capabilities while being a fraction of its size.

This reduction in model size leads to:

- Faster inference: Smaller models process data more quickly, leading to faster response times.

- Reduced storage requirements: Smaller models take up less space, making it easier to store and manage them, especially in environments with limited storage capacity.
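A quick back-of-the-envelope calculation shows what the storage savings can look like. The parameter counts below are hypothetical, and the estimate covers the weights only, not activations or other runtime memory.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weights_size_gb(num_params, dtype="fp16"):
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

teacher_params = 7_000_000_000  # hypothetical 7B-parameter teacher
student_params = 1_000_000_000  # hypothetical 1B-parameter student

teacher_gb = weights_size_gb(teacher_params)
student_gb = weights_size_gb(student_params)

print(f"{teacher_gb} GB vs {student_gb} GB")  # prints "14.0 GB vs 2.0 GB"
```

In this made-up case the student's weights need one seventh of the teacher's storage at the same precision.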

Improved inference speed

The smaller size of distilled models translates directly to improved inference speed. This is particularly important for applications that require real-time processing and quick responses.

Here’s how this benefit manifests:

- Real-time applications: Faster inference speeds make it feasible to deploy distilled models in real-time applications such as chatbots, virtual assistants, and interactive systems where latency is a vital factor.

- Resource-constrained devices: Distilled models can be deployed on devices with limited computational resources, such as smartphones, tablets, and edge devices, without compromising performance.

Lower computational costs

Another noteworthy advantage of LLM distillation is the reduction in computational costs. Smaller models require less computational power to run, which leads to cost savings in several areas:

- Cloud environments: Running smaller models in cloud environments reduces the need for expensive, high-performance hardware and lowers energy consumption.

- On-premise deployments: Smaller models mean lower infrastructure costs and maintenance expenses for organizations that prefer on-premise deployments.

Broader accessibility and deployment

Distilled LLMs are more versatile and accessible, allowing for deployment across platforms. This expanded reach has several implications:

- Mobile devices: Distilled models can be deployed on mobile devices, enabling advanced AI features in portable, user-friendly formats.

- Edge devices: The ability to run on edge devices brings AI capabilities closer to where data is generated, reducing the need for constant connectivity and enhancing data privacy.

- Wider applications: From healthcare to finance to education, distilled models can be integrated into a multitude of applications, making advanced AI accessible to more industries and users.

Applications of Distilled LLMs

The benefits of LLM distillation extend far beyond just model efficiency and cost savings. Distilled language models can be applied across a wide range of natural language processing (NLP) tasks and industry-specific use cases, making AI solutions accessible across various fields.

Efficient NLP tasks

Distilled LLMs are well suited to many natural language processing tasks. Their reduced size and faster inference make them ideal for tasks that require real-time processing and lower computational power.

Chatbots

Distilled LLMs enable the development of smaller, faster chatbots that can smoothly handle customer service and support tasks. These chatbots can understand and respond to user queries in real time, providing a seamless customer experience without the need for extensive computing.

Text summarization

Summarization tools powered by distilled LLMs can condense news articles, documents, or social media feeds into concise summaries. This helps users quickly grasp the key points without reading through lengthy texts.

Machine translation

Distilled models make translation services faster and more accessible across devices. They can be deployed on mobile phones, tablets, and even offline applications, providing real-time translation with reduced latency and computational overhead.

Other tasks

Distilled LLMs are not only valuable for common NLP tasks but also excel in specialized areas that require quick processing and accurate outcomes.

- Sentiment analysis: Analyzing the sentiment of texts, such as reviews or social media posts, becomes easier and faster with distilled models, allowing businesses to gauge public opinion and customer feedback quickly.

- Question answering: Distilled models can power systems that answer user questions accurately and promptly, improving the user experience in applications like virtual assistants and educational tools.

- Text generation: Creating coherent and contextually relevant text, whether for content creation, storytelling, or automated report generation, is streamlined with distilled LLMs.

Industry use cases

Distilled LLMs are not just limited to general NLP tasks. They can also impact many industries by improving processes and user experiences, and driving innovation.

Healthcare

In the healthcare industry, distilled LLMs can process patient records and diagnostic data more efficiently, enabling faster and more accurate diagnoses. These models can be deployed in medical devices, supporting doctors and healthcare professionals with real-time data analysis and decision-making.

Finance

The finance sector benefits from distilled models through improved fraud detection systems and customer interaction models. By quickly analyzing transaction patterns and customer queries, distilled LLMs help prevent fraudulent activities and provide personalized financial advice and support.

Education

In education, distilled LLMs facilitate the creation of adaptive learning systems and personalized tutoring platforms. These systems can analyze student performance and offer tailored educational content, enhancing learning outcomes and making education more accessible and impactful.

Implementing LLM Distillation

Implementing LLM distillation involves a series of steps and the use of specialized frameworks and libraries designed to facilitate the process. Here, we explore the tools and steps necessary to distill a large language model.

Frameworks and libraries

To streamline the distillation process, a few frameworks and libraries are available, each offering unique features to support LLM distillation.

Hugging Face transformers

The Hugging Face transformers library is a popular starting point for implementing LLM distillation. Its core API does not ship a dedicated distillation class. Instead, the project's research examples include a distillation script (the one used to train DistilBERT), and many practitioners implement distillation by subclassing Trainer and overriding its loss computation.

With either approach, practitioners can load pre-trained teacher and student models, fine-tune them on specific datasets, and add a distillation loss to the training objective.

Other libraries

Aside from Hugging Face Transformers, several other libraries support LLM distillation or complement it:

- TensorFlow Model Optimization: This toolkit provides tools for model pruning, quantization, and weight clustering, which can be combined with distillation to shrink a model further.

- PyTorch Distiller: PyTorch Distiller is designed to compress deep learning models, including support for distillation techniques. It offers a range of utilities to manage the distillation process and improve model efficiency.

- DeepSpeed: Developed by Microsoft, DeepSpeed is a deep learning optimization library that includes features for model distillation, allowing for the training and deployment of large models.

Steps involved

Implementing LLM distillation requires careful planning and execution. Here are the key steps involved in the process.

Data preparation

The first step in the distillation process is to prepare a suitable dataset for training the student model. The dataset should be representative of the tasks the model will perform, ensuring that the student model learns to generalize well.

Data augmentation techniques can also enhance the dataset, providing the student model with a broader range of examples to learn from.
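One common way to build such a dataset is to have the teacher answer a pool of prompts and keep the resulting pairs. The sketch below assumes a hypothetical `teacher_generate` callable standing in for whatever API or model call you actually use; `toy_teacher` only echoes its input so the example runs on its own.

```python
def build_distillation_dataset(prompts, teacher_generate, min_chars=1):
    """Pair each unique prompt with the teacher's response, skipping empty outputs."""
    dataset = []
    seen = set()
    for prompt in prompts:
        key = prompt.strip().lower()
        if not key or key in seen:
            continue  # drop blank prompts and case-insensitive duplicates
        seen.add(key)
        response = teacher_generate(prompt).strip()
        if len(response) >= min_chars:
            dataset.append({"prompt": prompt.strip(), "response": response})
    return dataset

def toy_teacher(prompt):
    """Stand-in for a real teacher call; it only echoes the prompt."""
    return f"Answer to: {prompt.strip()}"

prompts = [
    "What is distillation?",
    "what is distillation? ",  # duplicate after normalization
    "",                        # blank prompt
    "Define soft targets.",
]
records = build_distillation_dataset(prompts, toy_teacher)
print(len(records))
```

Deduplicating and filtering before training keeps the student from over-weighting repeated or empty examples.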

Teacher model selection

Selecting an appropriate teacher model is necessary for successful distillation. The teacher model should be a well-performing, pre-trained model with a high level of accuracy on the target tasks. The quality and attributes of the teacher model directly influence the performance of the student model.

Distillation process

The distillation process involves the following steps:

- Training setup: Initialize the student model and configure the training environment, including hyperparameters such as learning rate and batch size.

- Knowledge transfer: Use the teacher model to generate soft targets (probability distributions) for the training data. These soft targets, along with the hard targets (ground truth labels), are used to train the student model.

- Training loop: Train the student model using a combination of soft targets and hard targets. The objective is to minimize a loss function that typically combines two terms: the divergence between the student's predictions and the teacher's soft targets, and the standard cross-entropy against the ground truth labels.
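The objective described in these steps can be sketched for a single example in plain Python. This follows the commonly used formulation (a temperature-scaled KL term plus cross-entropy); the weighting `alpha`, the `temperature` default, and all logits are assumptions chosen for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    # Soft-target term: how far the student's softened distribution is from the teacher's.
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student_soft) if pt > 0)
    # Hard-target term: ordinary cross-entropy at temperature 1.
    cross_entropy = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * cross_entropy

teacher_logits = [4.0, 2.5, 0.5]
close_student = [3.5, 2.0, 0.5]  # roughly agrees with the teacher
far_student = [0.5, 2.5, 4.0]    # ranks the tokens in the opposite order

loss_close = distillation_loss(close_student, teacher_logits, label=0)
loss_far = distillation_loss(far_student, teacher_logits, label=0)
print(round(loss_close, 4), round(loss_far, 4))
```

In a real training loop the same loss would be computed per batch with a deep learning framework and backpropagated through the student only; the teacher's weights stay frozen.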

Evaluation Metrics

Evaluating the performance of the distilled model is essential to ensure it meets the desired criteria. Common evaluation metrics include:

- Accuracy: A measure of the percentage of correct predictions made by the student model compared to the ground truth.

- Inference speed: Assesses the time taken by the student model to process inputs and generate outputs.

- Model size: Measures the reduction in model size and the associated benefits in terms of storage and computational efficiency.

- Resource utilization: Monitors the computational resources required by the student model during inference, confirming whether it meets the constraints of the deployment environment.
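The first two metrics are straightforward to measure yourself. The sketch below treats a model as any Python callable that maps an input to a prediction; `toy_student` and the labelled examples are hypothetical stand-ins so the code runs without a real model.

```python
import time

def accuracy(model, examples):
    """Fraction of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for text, label in examples if model(text) == label)
    return correct / len(examples)

def mean_latency_ms(model, inputs, repeats=5):
    """Average wall-clock time per call in milliseconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        for text in inputs:
            model(text)
    elapsed = time.perf_counter() - start
    return 1000 * elapsed / (repeats * len(inputs))

def toy_student(text):
    """Stand-in sentiment classifier based on a single keyword."""
    return "positive" if "good" in text else "negative"

examples = [
    ("good movie", "positive"),
    ("bad plot", "negative"),
    ("not good at all", "negative"),  # the keyword rule gets this one wrong
    ("really good", "positive"),
]

student_accuracy = accuracy(toy_student, examples)
student_latency = mean_latency_ms(toy_student, [text for text, _ in examples])
print(student_accuracy)
```

For real models, run a few warm-up calls before timing and measure on the target hardware, since latency varies widely between devices.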

I cover LLM evaluation in more depth in this article on LLM Evaluation: Metrics, Methodologies, Best Practices.

LLM Distillation: Challenges and Best Practices

While LLM distillation offers numerous benefits, it also presents several challenges that must be addressed to ensure successful implementation.

Knowledge loss

One of the primary hurdles in LLM distillation is the potential for knowledge loss. During the distillation process, some of the nuanced information and features of the teacher model may not be fully captured by the student model, leading to a decrease in performance. This issue can be particularly pronounced in tasks that require deep understanding or specialized knowledge.

Here are a few strategies we can implement to mitigate knowledge loss:

- Intermediate layer distillation: Transferring knowledge from intermediate layers of the teacher model can help the student model capture more detailed and structured information.

- Data augmentation: Using augmented data generated by the teacher model can provide the student model with a broader range of training examples, helping its learning process.

- Iterative distillation: Refining the student model through multiple rounds of distillation can lead to it progressively capturing more of the teacher's knowledge.
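To illustrate the first strategy in the list, here is a minimal sketch of an intermediate-layer loss: the student's hidden state is projected to the teacher's width and compared with mean squared error. The vectors and the projection matrix are made up, and in practice the projection would be a learned layer trained jointly with the student.

```python
def project(hidden, weights):
    """Map a student hidden vector to the teacher's width with a linear layer."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in weights]

def hidden_state_loss(student_hidden, teacher_hidden, projection):
    """Mean squared error between the projected student state and the teacher state."""
    projected = project(student_hidden, projection)
    if len(projected) != len(teacher_hidden):
        raise ValueError("projection output must match the teacher's width")
    squared_errors = [(p - t) ** 2 for p, t in zip(projected, teacher_hidden)]
    return sum(squared_errors) / len(teacher_hidden)

# Hypothetical sizes: the student layer has width 2, the teacher layer width 3.
student_hidden = [1.0, 2.0]
projection = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 x 2 matrix

matching_teacher = [1.0, 2.0, 3.0]
different_teacher = [1.0, 2.0, 5.0]

print(hidden_state_loss(student_hidden, matching_teacher, projection))
print(hidden_state_loss(student_hidden, different_teacher, projection))
```

This term is usually added to the output-level distillation loss with its own weight rather than used alone.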

Hyperparameter tuning

Careful hyperparameter tuning is vital for the success of the distillation process. Key hyperparameters, such as temperature and learning rate, significantly influence the student model's ability to learn from the teacher:

- Temperature: This parameter controls the smoothness of the probability distribution generated by the teacher model. Higher temperatures produce softer probability distributions, which can help the student model learn more thoroughly from the teacher's predictions.

- Learning rate: Adjusting the learning rate balances the speed and stability of training. A rate that is too high can make training diverge or oscillate, while one that is too low slows convergence; a suitable value lets the student converge steadily toward a good solution.
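The role of temperature can be stated more precisely. In the commonly used formulation, written here with notation that is introduced for illustration ($z_i$ for the logits, $T$ for the temperature, $\alpha$ for the weight on the soft-target term, $y$ for the ground truth label), the softened probabilities and the combined loss are:

```latex
p_i(T) = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}
\qquad
\mathcal{L} = \alpha \, T^2 \, \mathrm{KL}\!\left(p^{\text{teacher}}(T) \,\|\, p^{\text{student}}(T)\right)
            + (1 - \alpha) \, \mathrm{CE}\!\left(y, \; p^{\text{student}}(1)\right)
```

With $T = 1$ this is the ordinary softmax, and as $T$ grows the distribution approaches uniform. The $T^2$ factor is a common convention that keeps the gradient scale of the soft-target term comparable when the temperature changes, so $\alpha$ does not need retuning for every value of $T$.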

Evaluating effectiveness

Evaluating the effectiveness of the distilled model is an essential step to confirm that it meets the desired performance criteria, particularly relative to its predecessors and alternatives. This involves comparing the performance of the student to the teacher and other baselines to understand how well the distillation process has preserved or improved model functionality.

To gauge the effectiveness of the distilled model, it’s important to focus on the following metrics:

- Accuracy: Measure how the student model’s accuracy compares to the teacher model and other baselines, providing insight into any loss or retention of precision.

- Inference speed: Compare the inference speed of the student model against the teacher model, highlighting improvements in processing time.

- Model size: Assess the differences in model size between the student and teacher models, as well as other baselines, to evaluate the efficiency gains from distillation.

- Resource utilization: Analyze how the resource usage of the student model compares to that of the teacher model, ensuring that the student model offers a more economical alternative without compromising performance.
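These comparisons are easiest to read as ratios against the teacher. The helper below is a small sketch; the function name and every number in it are placeholders, not measurements of any real model.

```python
def compare_to_teacher(teacher, student):
    """Express the student's metrics relative to the teacher's."""
    return {
        "accuracy_retained": student["accuracy"] / teacher["accuracy"],
        "speedup": teacher["latency_ms"] / student["latency_ms"],
        "compression": teacher["params"] / student["params"],
    }

# Placeholder numbers for illustration only.
teacher_stats = {"accuracy": 0.90, "latency_ms": 120.0, "params": 7_000_000_000}
student_stats = {"accuracy": 0.87, "latency_ms": 30.0, "params": 1_000_000_000}

report = compare_to_teacher(teacher_stats, student_stats)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

Reporting retained accuracy next to speedup and compression makes the trade-off explicit, which helps when deciding whether a given student is good enough for deployment.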

Best practices

Adhering to best practices can increase the effectiveness of LLM distillation. These guidelines emphasize experimentation, continuous evaluation, and strategic implementation.

- Experimentation: Regularly experiment with different distillation techniques and hyperparameter settings to identify the best configurations for your specific use case.

- Continuous evaluation: Continuously evaluate the performance of the student model using relevant benchmarks and datasets. Iterative testing and refinement are key to achieving optimal results.

- Balanced training: Balance the training process by combining soft targets from the teacher model with hard targets. This approach helps the student model capture nuanced knowledge while maintaining accuracy.

- Regular updates: Stay informed about the latest advancements in LLM distillation research and incorporate new techniques and findings into your distillation process.

Research and Future Directions

The field of LLM distillation is rapidly evolving. This section explores the latest trends, current research challenges, and emerging techniques in LLM distillation.

Latest research and advancements

Recent research in LLM distillation has focused on developing novel techniques and architectures to enhance the efficiency and effectiveness of the distillation process. Some notable advancements include:

- Progressive distillation: This involves distilling knowledge in stages, where intermediate student models are progressively distilled from the teacher model. This technique has shown promise in improving the performance and stability of the final student model.

- Task-agnostic distillation: Researchers are exploring methods to distill knowledge in a task-agnostic manner, allowing the student model to generalize across different tasks without requiring task-specific fine-tuning. This approach can greatly reduce the training time and computational resources needed for new applications.

- Cross-modal distillation: Another emerging area is the distillation of knowledge across different modalities, such as text, images, and audio. Cross-modal distillation aims to create versatile student models that can handle multiple types of input data, expanding the applicability of distilled models.

Open problems and future directions

Despite significant progress, several challenges and open research questions remain in the field of LLM distillation:

- Enhancing generalization: One of the key challenges is improving the generalization ability of distilled models. Ensuring that student models perform well across a variety of tasks and datasets remains an ongoing area of research.

- Cross-domain knowledge transfer: Effective knowledge transfer across different domains is another critical area. Developing methods to distill knowledge that can be applied to new and distinct domains without significant loss of performance is an important goal.

- Scalability: Scaling distillation techniques to handle increasingly larger models and datasets efficiently is a persistent challenge. Research is focused on optimizing the distillation process to make it more scalable.

Emerging techniques

Emerging techniques and innovations are continually being developed to address these challenges and push the field forward. Some promising approaches are:

- Zero-shot and few-shot learning adaptations: Integrating zero-shot and few-shot learning capabilities into distilled models is a burgeoning area of research. These techniques enable models to perform tasks with little to no task-specific training data, enhancing their versatility and practicality.

- Self-distillation: In self-distillation, the student model is trained using its own predictions as soft targets. This approach can improve the model's performance and robustness by reusing its learned knowledge iteratively.

- Adversarial distillation: Combining adversarial training with distillation techniques is another innovative approach. Adversarial distillation involves training the student model to not only mimic the teacher but also to be robust against adversarial attacks, improving its security and reliability.

Conclusion

LLM distillation is a pivotal technique in making large language models more practical and efficient. By transferring essential knowledge from a complex teacher model to a smaller student model, distillation preserves performance while reducing size and computational demands.

This process enables faster, more accessible AI applications across various industries, from real-time NLP tasks to specialized use cases in healthcare and finance. Implementing LLM distillation involves careful planning and the right tools, but the benefits—such as lower costs and broader deployment—are substantial.

As research continues to advance, LLM distillation will play an increasingly important role in democratizing AI, making powerful models more accessible and usable in diverse contexts.