Track

Managing a database effectively and properly requires understanding the fundamentals of data models.

In database management systems (DBMS), data models serve as the architectural blueprints that define how information is stored, related, and accessed.

They are the translation layer between business requirements (“We need to track customers, orders, and products”) and technical implementation (“We’ll store this in four tables connected by foreign keys”).

A well-designed data model helps to:

- Defines the scope of the system

- Specifies the rules that keep data valid

- Ensures long-term scalability

- Improves performance

In this guide, we’ll explore both classical and modern data models, abstraction levels, design processes, and advanced optimization strategies — all illustrated with a PostgreSQL e-commerce dataset.

Why Data Models Are Crucial

Here are some quick reasons why you should know about data models and why they are important.

- Bridge between teams: Business analysts and developers work from the same logical structure.

- Data integrity: Constraints prevent invalid, incomplete, or duplicate data.

- Performance: Well-modeled databases support fast lookups and joins.

- Scalability: Anticipates future growth and new requirements.

- Consistency: Ensures that data means the same thing across different parts of the system.

What Is a Data Model in DBMS?

A data model in a Database Management System (DBMS) is a blueprint used by databases to define how data is organized, stored, and accessed.

The datamodel provides a structured way to represent data elements and their relationships, enabling efficient data management and manipulation.

Having a data model helps to answer questions like:

- “What kinds of data do we have?”

- “How does one type of data relate to another?”

- “What rules must this data follow?”

A data model makes all the required information and answers to these questions easily understandable by database administrators.

Purposes of a data model

A data model structures and organizes information in a database. This ensures clarity and consistency in how data is handled within a system.

Here are some common reasons why a data model is needed:

- Structure: Organizes information logically.

- Relationships: Maps out how data elements interact.

- Constraints: Maintains data integrity and enforces business logic.

- Abstraction: Shields users from worrying about how the data is physically stored.

Why it matters

Without a defined data model, you risk having:

- Duplicated data being stored in different formats.

- Orphan records that have no valid parent entity.

- Inconsistent interpretations of the same data.

- Poor query performance due to an unoptimized structure.

Here’s a simple SQL query showing how a database can be modified to include structure, relationships, and constraints.

ALTER TABLE Orders

ADD CONSTRAINT fk_customer

FOREIGN KEY (CustomerID)

REFERENCES Customer(CustomerID);This enforces that every order is tied to an existing customer, which is a simple but powerful way to maintain integrity.

Sample Dataset Setup Using PostgreSQL

Before we move on to further explanations, let’s create and use a simple e-commerce dataset to demonstrate concepts throughout this guide.

We’ll include the following entities in the dataset.

- Customer: Stores buyer information.

- Product: Stores items available for sale.

- Orders: Records each purchase transaction.

- OrderItems: Stores details of which products are in each order.

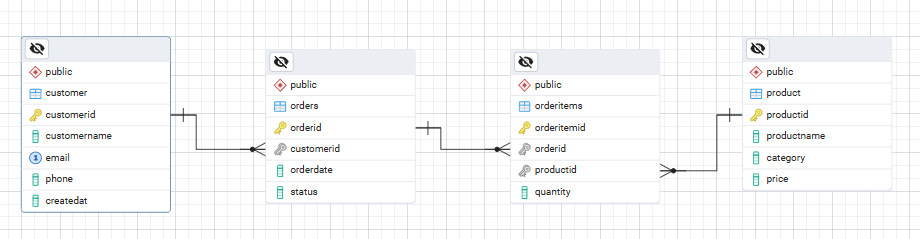

Here’s a simple ASCII ER Diagram showing the relationships between the tables we’ll create.

Customer ───< Orders ───< OrderItems >─── ProductThis corresponds to this logic:

- One customer → multiple orders.

- One order → multiple order items.

- One product → can appear in many orders.

Creating table schema

Next, I’ll be creating the tables and running them in PostgreSQL to run the queries.

Here’s the schema and SQL code to create the necessary tables.

CREATE TABLE Customer (

CustomerID SERIAL PRIMARY KEY,

CustomerName VARCHAR(100) NOT NULL,

Email VARCHAR(100) UNIQUE NOT NULL,

Phone VARCHAR(20),

CreatedAt TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

CREATE TABLE Product (

ProductID SERIAL PRIMARY KEY,

ProductName VARCHAR(100) NOT NULL,

Category VARCHAR(50),

Price NUMERIC(10, 2) NOT NULL

);

CREATE TABLE Orders (

OrderID SERIAL PRIMARY KEY,

CustomerID INT NOT NULL,

OrderDate DATE NOT NULL,

Status VARCHAR(20) DEFAULT 'Pending',

FOREIGN KEY (CustomerID) REFERENCES Customer(CustomerID)

);

CREATE TABLE OrderItems (

OrderItemID SERIAL PRIMARY KEY,

OrderID INT NOT NULL,

ProductID INT NOT NULL,

Quantity INT NOT NULL CHECK (Quantity > 0),

FOREIGN KEY (OrderID) REFERENCES Orders(OrderID),

FOREIGN KEY (ProductID) REFERENCES Product(ProductID)

);Inserting sample data

Next, let’s insert some basic information into the

INSERT INTO Customer (CustomerName, Email, Phone)

VALUES

('Alice Brown’, 'alice@example.com', '91234567'),

('Bob McKee', 'bob@example.com', '98765432');

INSERT INTO Product (ProductName, Category, Price)

VALUES

('Laptop', 'Electronics', 1200.00),

('Wireless Mouse', 'Electronics', 25.50),

('Office Chair', 'Furniture', 150.00);

INSERT INTO Orders (CustomerID, OrderDate, Status)

VALUES

(1, '2025-08-01', 'Shipped'),

(2, '2025-08-02', 'Pending');

INSERT INTO OrderItems (OrderID, ProductID, Quantity)

VALUES

(1, 1, 1),

(1, 2, 2),

(2, 3, 1);Your ER diagram for the database should look like this:

Key Components of a DBMS Data Model

A robust data model consists of four main components:

1. Entities

In data modeling, entities represent the core, distinct objects or concepts within a system that we want to store data about. Entities are often represented as tables in a database.

Examples of entities in our dataset:

CustomerProductOrdersOrderItems

These entities become the core “nouns” of the database.

Entities are the foundation. Every other aspect of the model, such as attributes, relationships, and constraints, builds on them.

2. Attributes

In data modeling, attributes are characteristics or properties that describe an entity. They represent the specific data points that define an entity, like a customer's name, email, or address.

Examples:

- For

Customer:CustomerName,Email,Phone. - For

Product:ProductName,Price,Category.

Put simply, attributes are the details you care about for each entity. They are typically columns in a table.

3. Relationships

Relationships in a data model define how different entities or tables are connected, representing associations between them. Think of them as logical connections between entities.

Examples in our dataset:

- One

Customercan have manyOrders(1-to-many). - One

Ordercan contain manyProductsviaOrderItems(many-to-many resolved through a junction table).

Relationships ensure that the data model represents real-world associations accurately.

4. Constraints

In data modeling, constraints are rules that limit the values allowed in a database, ensuring data accuracy, consistency, and integrity.

Constraints help maintain the integrity of the database, preventing invalid or inconsistent data from being entered.

Examples:

UNIQUEconstraint onCustomer.Email.CHECKconstraint to ensureQuantity > 0inOrderItems.

PostgreSQL Example

Here’s an example of using some types of constraints in an SQL query.

CREATE TABLE OrderItems_Constraint (

OrderItemID SERIAL PRIMARY KEY,

OrderID INT NOT NULL,

ProductID INT NOT NULL,

Quantity INT NOT NULL CHECK (Quantity > 0),

FOREIGN KEY (OrderID) REFERENCES Orders(OrderID),

FOREIGN KEY (ProductID) REFERENCES Product(ProductID)

);In this example, we used the PRIMARY, CHECK, NOT NULL, and REFERENCES constraints.

Types of Data Models in DBMS

Different types of data models exist in databases. This reflects the way in which data is stored according to the nature of the data.

Classical models

Firstly, let’s look at some common classical models used in database management systems.

Hierarchical model

A hierarchical data model organizes data in a tree-like structure, where each record (node) has a single parent (except for the root) and can have multiple children.

- Structure: Data stored in a tree format; each parent can have multiple children, but each child has only one parent.

- Use cases: Legacy banking, airline reservation systems.

- Pros: Very fast for one-to-many lookups.

- Cons: Difficult to handle many-to-many relationships.

Example (XML-like representation):

<Customer id="1">

<Name>Alice Brown</Name>

<Orders>

<Order id="1" date="2025-08-01"/>

</Orders>

</Customer>Modern equivalents often appear in XML/JSON storage, but the model itself dates back to early mainframe DBMS like IMS.

Network Model

A network data model is a flexible way to represent data and relationships, especially useful for complex, many-to-many connections.

It uses a graph structure with nodes (representing entities) and edges (representing relationships) to organize data, allowing for more efficient and direct access paths compared to hierarchical models.

- Structure: Graph with records connected via pointers.

- Pros: Handles complex many-to-many relationships.

- Cons: Complex to query and maintain.

Relational Model

The relational data model is a way to structure data into tables with rows and columns, enabling efficient storage, retrieval, and management of information.

- Structure: Data in tables, rows, and columns.

- Pros: SQL support, ACID compliance, normalization.

- Cons: Joins can be expensive at scale.

Example query from our dataset:

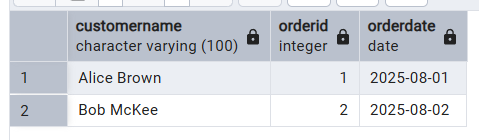

SELECT c.CustomerName, o.OrderID, o.OrderDate

FROM Customer c

JOIN Orders o ON c.CustomerID = o.CustomerID;This query demonstrates the use of the JOIN function to create relationships between the Customer table and the Orders table.

This produces the following table as a result:

Modern models

As database technology advances, some modern models have also surfaced. Here are some examples:

Object-oriented model

The object-oriented model combines database and object-oriented programming concepts. It supports inheritance and encapsulation.

Object-relational model

The object-relational model is a hybrid of relational and object-oriented.

NoSQL models

NoSQL data models represent a departure from the rigid, table-based structures of traditional relational databases, offering flexible schemas and diverse data organization methods to handle large volumes of unstructured and semi-structured data.

Here are some examples of NoSQL models:

- Key-Value: Redis (fast lookups).

- Document: MongoDB (flexible JSON).

- Column-Family: Cassandra (wide-column storage).

- Graph: Neo4j (relationship-heavy data).

Here’s an example of a document data model (MongoDB document):

{

"CustomerName": "Alice Brown",

"Orders": [

{"ProductName": "Laptop", "Quantity": 1}

]

}Data Modeling Abstraction Levels

The process of data modeling occurs at three different levels – conceptual, logical, and physical.

These levels are the different stages of detail used when designing a database or information system. They help manage complexity by focusing on specific aspects of the data and its structure.

Conceptual

The conceptual data model is the highest-level, technology-agnostic abstraction in the data modeling process. It focuses on defining the key entities and their relationships in a system without delving into technical details.

This layer captures business requirements and relationships.

Example: Our ER diagram showing Customer → Orders.

Logical

The logical data model is the second abstraction level. It outlines entities, attributes, and relationships with no mention of any specific database.

This layer converts the conceptual model into a DBMS-specific schema. It defines tables, keys, and relationships without storage details.

Physical

The physical data model layer implements a schema with indexes, partitions, and storage parameters.

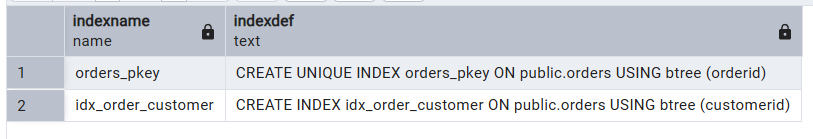

Here’s an example of an index:

CREATE INDEX idx_order_customer ON Orders(CustomerID);Now we’ll view this index using this query:

SELECT indexname, indexdef

FROM pg_indexes

WHERE tablename = 'orders';As you can see in the index below, we created a new index called idx_order_customer.

Data Modeling Process

Data modeling is a structured approach to translating business needs into a technical blueprint that governs how data will be stored, related, and retrieved.

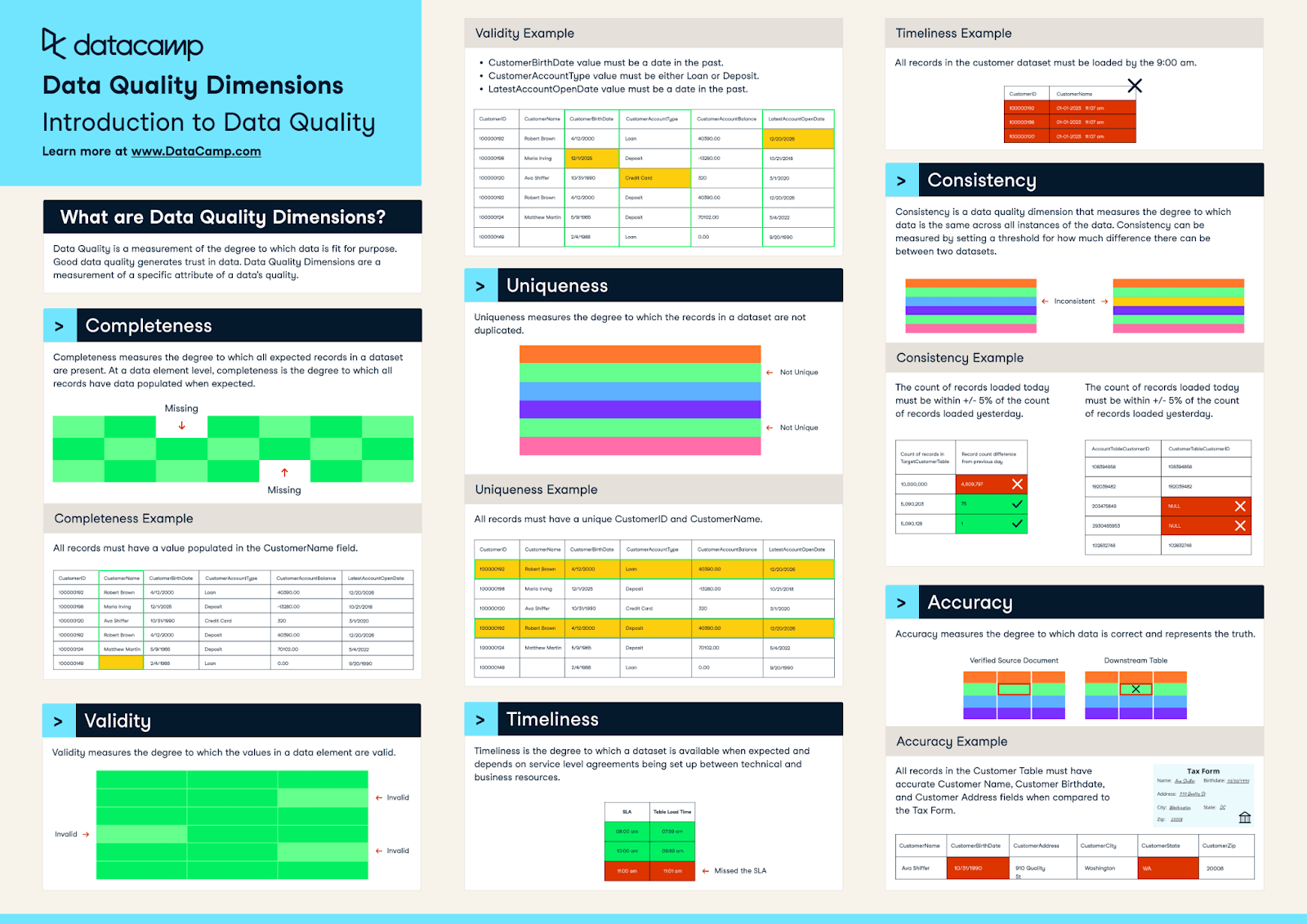

When done well, data modeling reduces redundancy, improves data quality, and makes future maintenance easier.

Source: Data Quality Dimensions Cheat Sheet

The process typically moves in stages. Let’s break these down.

1. Requirement gathering

Requirement gathering is the foundation of the entire data modeling process. At this stage, you’re trying to understand what the business needs are and why.

There are several ways to gather requirements effectively.

- Stakeholder interviews allow you to talk directly to the people who will use or depend on the database, such as business analysts, managers, or developers.

- Document reviews help you understand existing systems by looking at past reports, workflows, or legacy database schemas.

- Workshops can bring different departments together to align on data definitions and identify gaps.

The output of this phase should be a clear set of documented requirements describing the data entities, relationships, and business rules.

For example, in our sample retail dataset, stakeholders might say they want to track customer orders, products, and order dates so they can calculate metrics like total sales per product category and repeat customer rates.

2. Conceptual design

The conceptual design takes those business requirements and turns them into a visual, high-level representation of the data.

At this stage, you focus on what entities exist and how they relate, without worrying about technical details like primary key types or indexing strategies.

This is where Entity-Relationship (ER) diagrams come in. ER diagrams show entities (e.g., Customer, Product, Order), their attributes, and the relationships between them.

For our dataset:

Customermight include CustomerID, Name, Email.Productmight include ProductID, Name, Category.Ordermight include OrderID, OrderDate.OrderItemmight include OrderItemID, OrderID, ProductID, Quantity, Price.

The relationships could be:

- One customer can place many orders.

- One order can contain many order items.

- One product can appear in many order items.

This diagram becomes a common reference point for both business and technical teams.

3. Logical design

Logical design builds upon the conceptual model by applying database rules, particularly normalization.

Normalization is the process of structuring a relational database to minimize redundancy and dependency. It’s often done in stages (1NF, 2NF, 3NF), each with specific requirements.

- In 1NF, you ensure that all fields are atomic (no multiple values in one column) and that there are no repeating groups.

- 2NF removes partial dependencies—meaning that if you have a composite key, all non-key attributes must depend on the entire key.

- 3NF removes transitive dependencies, ensuring non-key attributes only depend on the primary key and not on other non-key attributes.

For our example, the customer’s email address belongs only in the Customer table, not duplicated in the Order table. Similarly, product category information should reside in the Product table, not scattered across multiple records in OrderItem.

4. Physical design

Physical design takes the logical model and adapts it for a specific database system, like PostgreSQL or MySQL. This stage involves decisions about data types, storage optimization, and performance tuning.

Indexes are a key consideration here. For example, adding an index to OrderDate in the Order table can significantly speed up queries that filter by date.

Partitioning can also be applied for large datasets, splitting data into manageable chunks by date, range, or hash keys to improve query performance.

You also decide on constraints, such as foreign keys, to enforce data integrity at the database level.

5. Validation

Once the design is complete, validations must be made before going live.

This involves loading sample data, ideally, realistic test data that mirrors expected volumes, and running queries that simulate real-world usage.

For example, in our retail dataset, you might run a query to calculate sales totals by month, ensuring the numbers match what the business expects.

You’d also test edge cases, such as what happens when an order is placed with no items (which should be impossible if constraints are correct).

6. Deployment

The final step is moving the validated design into production. This should be done using version-controlled migration scripts so that changes are tracked over time.

Deployment also involves setting up monitoring for performance and errors, and ensuring that the schema is well-documented so that future changes can be made without confusion.

Post-deployment, it’s common to periodically review the design as new requirements emerge as well.

Advantages of Data Models

A well-designed data model offers multiple benefits.

- Clarity: Data models provide a shared language between business and technical teams. When everyone agrees on what a “Customer” means and how it relates to “Orders,” misunderstandings are reduced, and development moves faster.

- Integrity: Constraints like foreign keys and unique indexes ensure that invalid data cannot be entered into the system. This protects data quality and makes reporting more reliable.

- Performance: Through the use of indexes and optimizing joins, data models can greatly improve query speed. This is especially important for analytical queries that process large datasets.

- Scalability: A good model is easier to extend. If the business wants additional features to track promotions or loyalty points further down the pipeline, you can add new tables without breaking existing workflows.

Disadvantages of Data Models

- Complexity: In large systems, the data model can be intricate, with hundreds of tables and relationships. This can intimidate newcomers and require specialized skills to navigate.

- Cost: Hiring skilled data modelers and purchasing enterprise modeling tools can be expensive. In small companies, this might represent a significant investment.

- Rigidity: Relational schemas aren’t as flexible as NoSQL solutions. Changes to models require careful planning to avoid breaking downstream applications and dependencies.

- Vendor lock-in: Relying heavily on proprietary database features (like Oracle-specific indexing or SQL Server’s advanced partitioning) can make future migrations costly and complex.

Advanced Modeling Methodologies

Data modeling can also have advanced methods for implementation.

1. Dimensional modeling

Dimensional modeling is common in data warehousing, where the goal is to make analytical queries intuitive and fast.

This involves using specific schemas:

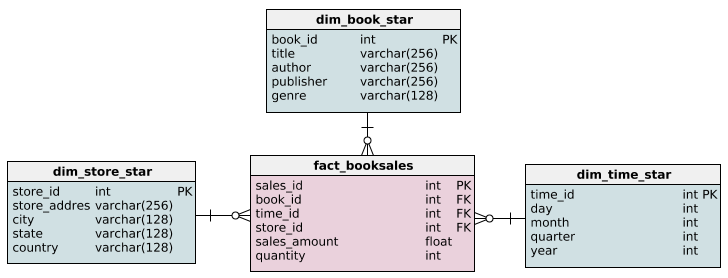

- Star schema: One central fact table (e.g.,

OrderItems) stores metrics, while multiple dimension tables (e.g.,Customer,Product,Date) store descriptive attributes. This structure is easy for BI tools to query.

Here’s what it might look like:

Source: Querying the star schema

- Snowflake schema: Dimension tables are normalized into sub-tables, reducing storage but requiring more joins. For instance,

Productcould be split intoProductandCategorytables.

2. Entity-relationship (ER) modeling

ER modeling is more typical in transactional systems. It emphasizes normalization to ensure data integrity. In some cases, denormalization is selectively applied to improve read performance, especially for frequently accessed data.

3. Polyglot modeling

Polyglot modeling uses different database types for different workloads.

For example, you might use PostgreSQL for order transactions and MongoDB for a product catalog with flexible attributes. This allows you to use the right tool for the right job, but it does increase operational complexity.

Performance Optimization and Design Principles

1. Common anti-patterns

- Over-normalization: Splitting data into too many small tables can lead to excessive joins and slow queries.

- Under-indexing: Failing to index frequently filtered columns can cause full table scans and performance issues.

2. NoSQL considerations

In NoSQL systems, design is often based on access patterns rather than strict normalization. Data is organized to minimize the number of queries needed for common operations, and partitioning is essential to avoid bottlenecks.

3. ACID trade-offs

Relational systems like PostgreSQL fully support ACID properties, ensuring data consistency. Many NoSQL databases relax these guarantees to improve speed and scalability, trading off consistency for eventual convergence.

Conclusion

Data models are a crucial part of database design and can take on many forms. However, in modern enterprises, to ensure that these models are able to achieve their best performance, optimizations have to be done accordingly.

Technology is constantly evolving and being updated, and ongoing learning and adaptation are a need in the data industry. To learn more about databases, check out our course on Database Design or Introduction to Relational Databases in SQL to get started.

Prefer reading instead? Our articles on Data Modeling Tools or DBMS Interview Questions might be helpful.

Data Models in DBMS FAQs

What are the main differences between the hierarchical and relational models?

The primary difference between hierarchical and relational database models lies in their structure and how they handle relationships between data. Hierarchical models organize data in a tree-like structure with parent-child relationships, while relational models use tables with rows and columns, linked by keys.

How does the network model handle many-to-many relationships?

The network model handles many-to-many relationships by using a graph structure where entities are represented as nodes and relationships as edges. This allows for flexible connections between entities.

What are the advantages of using an object-oriented database model?

Object-oriented database models offer several advantages, primarily related to handling complex data and improving development efficiency.

How does the entity-relationship model improve database design?

The entity-relationship (ER) model significantly enhances database design by providing a visual and structured approach to defining data requirements.

What are the key features of a physical data model?

A physical data model outlines the specific implementation details of a database, including tables, columns, data types, relationships, indexes, and storage structures.

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to my writing contributions to dozens of SaaS companies, inspiring others and sharing my experiences.