
AI is evolving so quickly that keeping up with its security challenges feels like trying to fix a plane while it’s in the air. As systems get smarter and more deeply embedded into everyday business processes, they also become more vulnerable to new types of threats.

I’ve seen this up close in my work. There have been many times when I’ve discovered security holes in business processes that could have allowed someone to access sensitive information they shouldn’t have, especially when AI solutions were implemented in a rushed manner without proper security measures in place.

That’s what this article is about: understanding the risks, challenges, and best practices for keeping AI safe. AI is growing so fast, and if we don’t stay ahead of the vulnerabilities, we risk losing the trust and benefits it brings.

If you want to learn about this topic in more detail, I recommend checking the AI Security and Risk Management course.

New AI Security Challenges

AI runs on data. And the more data your AI relies on, the bigger the target. Hackers know this. Imagine a hospital using AI to predict patient health risks. Sounds great, until someone hacks the system. Suddenly, private patient records are up for grabs on the dark web, or worse, medical data is altered, which can lead to harmful decisions. Encrypting sensitive data and having backups are key, but many companies are so focused on innovation that they overlook these basics.

Hackers don’t just steal data, either. They can also mess with the AI models themselves. For example, a self-driving car can be tricked into reading a stop sign as a speed limit sign. This is an adversarial attack, where carefully crafted inputs fool a trained model. In a data poisoning attack, by contrast, hackers feed bad data into the training process to corrupt the model’s decision-making. We will look at both in more detail in the next section.

Prompt injection is another type of attack. These are subtle manipulations that trick an AI into saying or doing something it shouldn’t. We have a fantastic article on prompt injection, so we won’t go into much detail here, but it’s definitely worth a read.

Ironically, hackers are using AI to hack, too. This is where cybersecurity becomes a race against time—hackers innovate, and security teams have to respond just as fast. Tools like anomaly detection can help, but they’re not foolproof.

Have you ever heard the saying, "A chain is only as strong as its weakest link"? This totally applies to AI. Companies often integrate pre-built AI tools into their systems. But what happens if one of those tools is compromised? Imagine a popular chatbot used by hundreds of businesses getting hacked. That’s hundreds of companies—and all their customer data—at risk. It’s a digital domino effect.

Finally, there’s the expertise gap. AI adoption is rising, but cybersecurity for AI is still playing catch-up.

AI Security Threats

AI systems face serious security threats that can compromise how well and how reliably they work. Let’s look at the main threats.

Adversarial attacks

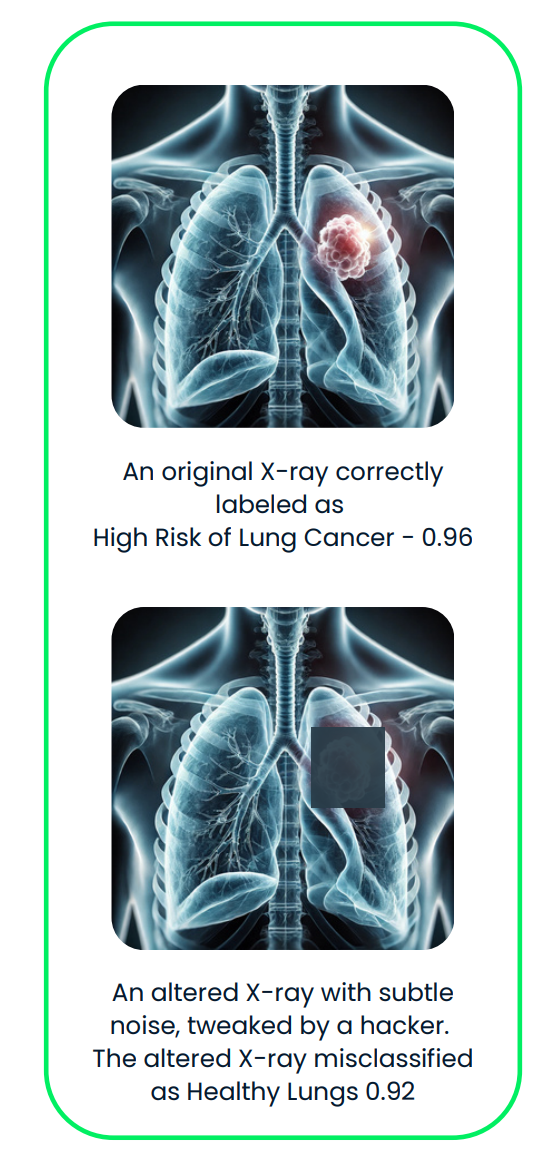

Adversarial attacks trick AI systems by making small, sneaky changes to the inputs the AI processes. These changes are so subtle that humans usually can’t notice them, but they can completely confuse the AI into making the wrong decision.

For example:

- Changing tiny details in an image, like altering just a few pixels, so the AI misidentifies it.

- Adding slight noise to an audio file, making it sound the same to us but causing the AI to misinterpret it.

- Tweaking the wording of a sentence to confuse the AI's understanding.

Many of these attacks don’t require the attacker to know how the AI works internally, which makes them even harder to detect and stop.
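To make the idea concrete, here is a minimal sketch of a gradient-sign perturbation (in the style of the fast gradient sign method) against a tiny logistic classifier. The weights, bias, input values, and `epsilon` are all hypothetical, and for simplicity the sketch assumes the attacker can read the model’s weights, which is not true of every attack.

```python
import math

# Hypothetical, hand-picked parameters for a tiny linear classifier (not a real model).
WEIGHTS = [2.0, -1.5, 0.5, 1.0]
BIAS = -0.2

def predict_proba(x):
    """Probability of class 1 under a logistic model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def gradient_sign_perturb(x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the class-1 score.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector, so the sign of each weight gives the direction.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]

original = [0.30, 0.20, 0.10, 0.10]
adversarial = gradient_sign_perturb(original, epsilon=0.1)

print("original   :", round(predict_proba(original), 3))
print("adversarial:", round(predict_proba(adversarial), 3))
```

No feature moves by more than 0.1, yet the predicted probability crosses the 0.5 decision threshold, so the predicted class changes.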

Let’s look now at some real-world examples.

- DeepFool algorithm: DeepFool is a method designed to make tiny, almost invisible changes to an input, like an image, to trick the AI into misclassifying it. It works by gradually tweaking the input until the AI gets it wrong. These changes are so small that humans wouldn’t even notice them.

- Targeted audio attacks: In this paper, attackers created audio that sounds nearly identical to the original but causes a speech recognition system to hear completely different words. This method was used to fool Mozilla's DeepSpeech system with a 100% success rate.

- Tesla autopilot manipulation: Researchers at Tencent’s Keen Security Lab tricked Tesla's autopilot system by strategically placing three inconspicuous stickers on the road, causing it to misinterpret lane markings and potentially steer into the wrong lane. Read more here.

This diagram illustrates an adversarial attack on a medical imaging AI system. The original X-ray, correctly classified as "High Risk of Lung Cancer," is subtly altered with adversarial noise, resulting in a misclassification of "Healthy Lungs."

A great read is Adversarial Machine Learning, a field that studies attacks that exploit vulnerabilities in machine learning models and develops defenses to protect against these threats.

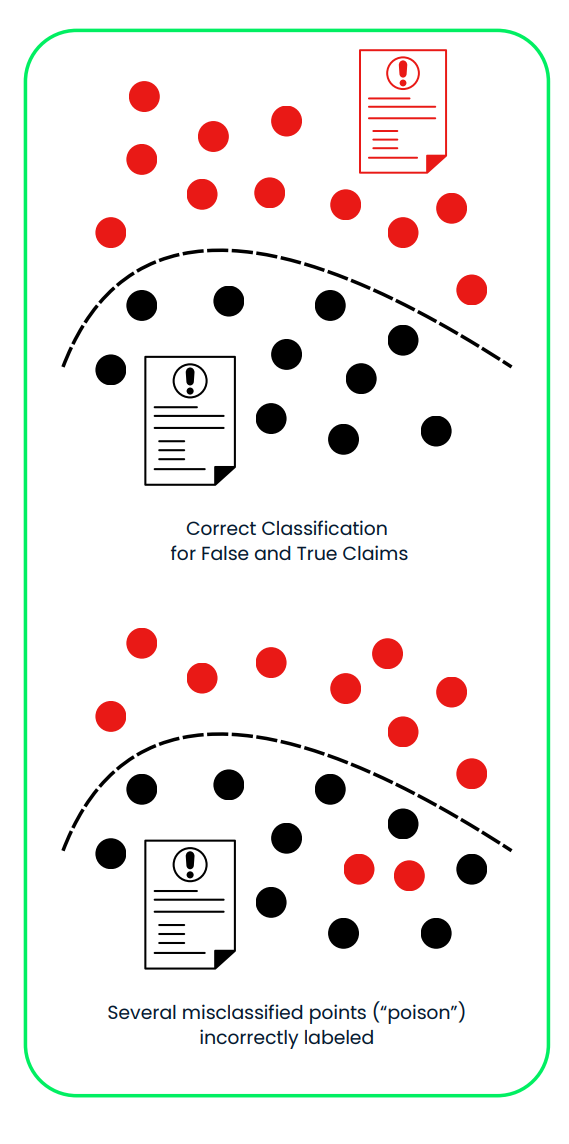

Poisoning attacks

Poisoning attacks target AI systems' training phase, where the model learns how to make decisions. Attackers sneak corrupted or misleading data into the training set so they can manipulate the AI to behave incorrectly or favor biased outcomes. These attacks work because they tamper with the foundation of the AI’s learning process, often without leaving obvious signs that anything is wrong.

This can involve:

- Changing the labels of certain training examples, like labeling a picture of a dog as a cat.

- Adding fake data points designed to influence the AI’s decisions in specific ways.

These subtle changes can cause the AI to fail under certain conditions or act in ways that benefit the attacker. Since the attack happens during training, it’s hard to spot once the model is deployed.
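As a rough illustration, the sketch below poisons a toy nearest-centroid classifier by adding mislabeled points to its training set. The one-dimensional "suspicion score", the claim labels, and every data value are hypothetical and chosen only to make the shift easy to see.

```python
def train_centroids(samples):
    """Nearest-centroid 'training': average the feature value for each label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical data: one suspicion score per insurance claim.
clean = [(1.0, "true"), (2.0, "true"), (3.0, "true"),
         (7.0, "false"), (8.0, "false"), (9.0, "false")]

# The attacker slips in high-score claims that are mislabeled as "true".
poison = [(9.0, "true"), (9.5, "true"), (10.0, "true")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

suspicious_claim = 6.5
print("clean model   :", classify(clean_model, suspicious_claim))
print("poisoned model:", classify(poisoned_model, suspicious_claim))
```

Three poisoned rows are enough to drag the "true" centroid upward, so a claim the clean model rejects is accepted by the poisoned one.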

Let’s look at some real-world examples:

- Backdoor attacks: A study titled “Bypassing Backdoor Detection Algorithms in Deep Learning” showed how attackers can hide malicious "backdoors" in AI models. These backdoors are triggered only under specific conditions, like showing a certain pattern in an image. The study demonstrated that attackers can use advanced techniques to make poisoned data look identical to clean data, evading detection.

- Web-scale dataset poisoning: In the paper “Poisoning Web-Scale Training Datasets is Practical,” researchers described how large datasets collected from the internet can be poisoned:

  - Split-view data poisoning: Corrupting data after it’s collected but before it’s used for training.

  - Frontrunning data poisoning: Manipulating data right before it’s added to the dataset.

- Microsoft's Tay chatbot: In 2016, Microsoft deployed a chatbot named Tay on Twitter. Users quickly manipulated Tay by bombarding it with offensive content, causing it to post racist and inappropriate tweets. Microsoft had to shut down Tay shortly after its launch. Read more here.

This diagram demonstrates a poisoning attack on an AI system used for classifying false and true insurance claims. In the first panel, the system correctly separates false (red) and true (black) claims using a clean training dataset. In the second panel, maliciously mislabeled data (“poison”) shifts the decision boundary, leading to misclassification of false claims as true, compromising the system’s reliability and security.

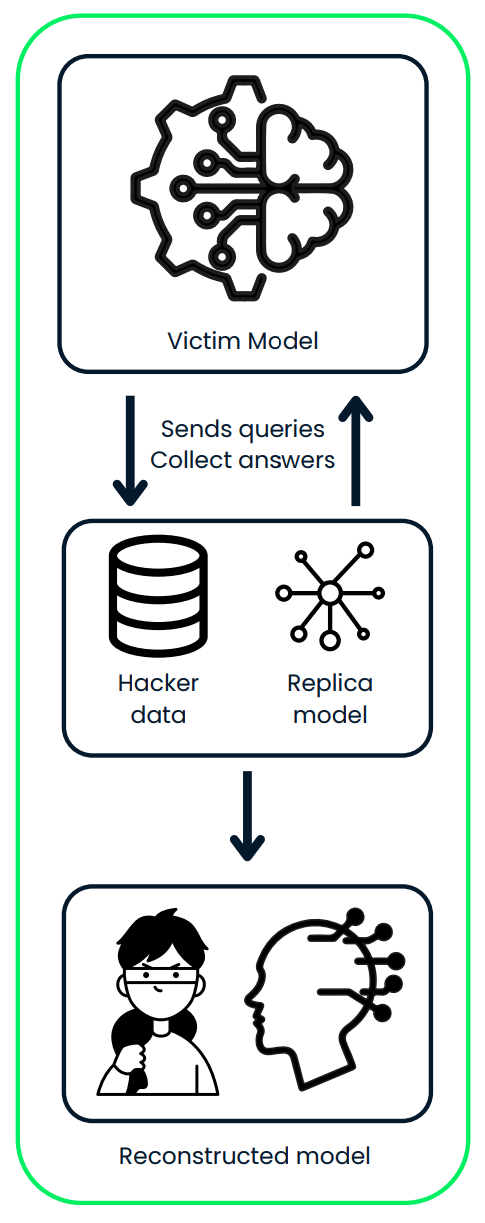

Model extraction attacks

In a model extraction attack, an attacker figures out how an AI model works by reverse-engineering it. They use the inputs and outputs they collect to copy the system’s design and behavior.

Once they’ve built a replica of the model, they can:

- Use it to make predictions, possibly involving sensitive or private data.

- Steal confidential information from the original model.

- Modify and retrain the model to fit their own needs, often for harmful purposes.

For example, if the stolen model was trained on private customer data, the attacker could predict personal details about those customers or create a customized version of the model for their own use.
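Here is a deliberately simple sketch of the query-and-copy loop. The victim is a stand-in function with a single hidden threshold, and the attacker only ever sees its answers. The loan scenario, the threshold value, and the query budget are hypothetical. Real models have far more parameters, but the workflow is the same: query, collect labels, fit a replica.

```python
def victim_predict(income):
    """Stand-in for a remote black-box model; the attacker cannot read this threshold."""
    hidden_threshold = 41_700
    return "approve" if income >= hidden_threshold else "reject"

def extract_threshold(query_fn, low, high, num_queries):
    """Query evenly spaced inputs and fit a one-parameter replica to the answers.

    Assumes the decision boundary lies somewhere between low and high.
    """
    step = (high - low) / (num_queries - 1)
    labeled = [(low + i * step, query_fn(low + i * step)) for i in range(num_queries)]
    highest_reject = max(q for q, label in labeled if label == "reject")
    lowest_approve = min(q for q, label in labeled if label == "approve")
    return (highest_reject + lowest_approve) / 2

stolen_threshold = extract_threshold(victim_predict, low=0, high=100_000, num_queries=201)

def replica_predict(income):
    """The attacker's copy, built only from query results."""
    return "approve" if income >= stolen_threshold else "reject"

test_incomes = range(0, 100_001, 1_000)
agreement = sum(victim_predict(x) == replica_predict(x) for x in test_incomes) / len(test_incomes)
print("stolen threshold:", stolen_threshold, "agreement:", agreement)
```

With 201 queries, the replica lands within 250 of the hidden threshold and agrees with the victim on every test input in this toy setup.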

Let’s look at some real-world examples:

- A tool called DeepSniffer shows how this kind of attack can work. DeepSniffer doesn’t need to know anything about the original model in advance. It gathers clues from how the model processes data instead, like tracking memory operations during its runtime. It puts these clues together, so it can figure out the model’s structure, even if the system tries to optimize or hide its processes.

- Mindgard demonstrated how critical components of a black-box language model, such as OpenAI's ChatGPT 3.5 Turbo, can be extracted through a low-cost process. With just $50 in API expenses, the team successfully created a smaller model—approximately 100 times smaller than the original—that outperformed ChatGPT on a specific task. This extracted model was then used to enhance another attack, which was subsequently deployed against ChatGPT itself. The improved attack achieved a higher success rate, increasing to 11%.

This diagram illustrates a model extraction attack, where an attacker interacts with a victim AI model by sending queries and collecting its predictions. The attacker uses the gathered data to train a replica model, ultimately reconstructing a stolen version of the original system.

AI Security Defenses

As mentioned in previous sections, strong security measures matter more and more as AI becomes more common. Let’s talk about some effective ways to make AI safer.

Adversarial training

This method involves teaching models to recognize and handle tricky, manipulated inputs (called adversarial examples). When you train your model with these examples, it becomes better at resisting certain types of attacks.

- How it works: Create examples of manipulated data and include them in the AI’s training.

- Why it helps: It makes AI systems more robust against attacks that try to trick them.

- Drawback: It might slightly lower the accuracy of the AI on normal (clean) data.

- Real-world examples:

  - Google's Cloud Vision API uses adversarial training to improve its image recognition models' resilience against manipulated inputs.

  - OpenAI's models incorporate adversarial examples in their training process to enhance robustness against text-based attacks.
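The "how it works" step can be sketched in a few lines. This example trains a small logistic regression in plain Python and, at every epoch, adds manipulated copies of the training points with their correct labels. The gradient-sign perturbation is one assumed way to craft those copies, and the data, `epsilon`, learning rate, and epoch count are all hypothetical.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def perturb(weights, bias, x, y, epsilon):
    """Shift x by at most epsilon per feature in the direction that increases the loss."""
    error = predict_proba(weights, bias, x) - y  # derivative of the log loss w.r.t. the score
    return [xi + epsilon * sign(error * w) for xi, w in zip(x, weights)]

def adversarial_train(data, epsilon, epochs=200, lr=0.1):
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        # Craft manipulated copies against the current model, keeping the true labels.
        batch = data + [(perturb(weights, bias, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in batch:
            error = predict_proba(weights, bias, x) - y
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        weights = [w - lr * g / len(batch) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(batch)
    return weights, bias

# Hypothetical two-feature dataset: label 1 on the positive side, label 0 on the negative side.
data = [([1.0, 0.8], 1), ([1.5, 1.2], 1), ([2.0, 0.9], 1),
        ([-1.0, -0.8], 0), ([-1.5, -1.2], 0), ([-2.0, -0.9], 0)]

weights, bias = adversarial_train(data, epsilon=0.5)

# Attack the trained model with the same perturbation budget and count correct answers.
attacked = [(perturb(weights, bias, x, y, 0.5), y) for x, y in data]
robust_hits = sum((predict_proba(weights, bias, x) > 0.5) == bool(y) for x, y in attacked)
print(robust_hits, "of", len(attacked), "attacked points still classified correctly")
```

The key design choice is that the manipulated copies are regenerated every epoch against the current weights, so the model keeps training on the inputs that are hardest for it right now.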

Defensive distillation

Defensive distillation involves training a secondary model to mimic the behavior of the original model, with the goal of making it harder for attackers to manipulate.

- How it works: Train the first model, then use its output to train another model in a way that smooths out its decision-making process.

- Why it helps: It makes it more difficult for attackers to exploit weaknesses.

- Drawback: This approach can require a lot of computing power, especially for large models.

- Real-world examples:

  - Researchers at the University of California, Berkeley, applied defensive distillation to improve the robustness of deep neural networks used in autonomous vehicles.

  - IBM's Watson AI platform employs defensive distillation techniques to protect its natural language processing models from adversarial attacks.
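The "smoothing" step is usually done with a temperature-scaled softmax: the first model’s outputs are softened before they are used as training targets for the second model. The sketch below shows only that softening step. The logits and the temperature of 20 are hypothetical values.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs of the first (teacher) model for one input.
teacher_logits = [8.0, 2.0, 1.0]

hard_targets = softmax(teacher_logits, temperature=1.0)
soft_targets = softmax(teacher_logits, temperature=20.0)

print("T=1 :", [round(p, 3) for p in hard_targets])
print("T=20:", [round(p, 3) for p in soft_targets])
```

At temperature 1 almost all of the probability sits on one class. At temperature 20 it is spread across the classes, and those softer targets are what the second model would learn from.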

Input validation and sanitization

Ensuring that the data going into an AI system is clean and safe is key to protecting it from malicious inputs.

- How it works: Check that inputs are in the right format, fall within expected ranges, and don’t contain harmful elements.

- Why it helps: Prevents attacks like data poisoning and keeps the AI system running smoothly.

- Best practice: Validate data at multiple stages—on the user’s end, the server side, and inside the AI system itself.

- Real-world examples:

  - Microsoft's Azure AI services implement strict input validation to prevent SQL injection attacks on their machine learning models.

  - Amazon's Rekognition facial recognition system uses input sanitization to filter out potentially malicious image inputs.
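A server-side check along these lines might look like the sketch below. The field names, types, and allowed ranges in `SCHEMA` are hypothetical, and a production system would likely rely on a dedicated validation library rather than hand-rolled checks.

```python
# Hypothetical schema: field name -> (accepted type(s), minimum, maximum).
SCHEMA = {
    "age": (int, 0, 120),
    "heart_rate": ((int, float), 20, 250),
}

def validate_record(record, schema=SCHEMA):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name, (expected_type, low, high) in schema.items():
        if name not in record:
            problems.append(f"missing field: {name}")
            continue
        value = record[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            problems.append(f"wrong type for {name}")
        # NaN fails every comparison, so it is also caught by this range check.
        elif not (low <= value <= high):
            problems.append(f"{name} out of range")
    for name in sorted(set(record) - set(schema)):
        problems.append(f"unexpected field: {name}")
    return problems

print(validate_record({"age": 42, "heart_rate": 71.5}))
print(validate_record({"age": 430, "heart_rate": "71; DROP TABLE"}))
```

Returning a list of problems instead of raising on the first one makes it easier to log exactly why an input was rejected.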

Ensemble methods

Using several different AI models together (an ensemble) can make systems more secure. Even if one model is attacked, the others can provide backup.

- How it works: Combine the predictions of multiple models to make decisions.

- Why it helps: It’s harder for attackers to compromise all the models at once.

- Drawback: Requires more computing resources to manage multiple models.

- Real-world examples:

  - Netflix's recommendation system uses an ensemble of multiple AI models to improve accuracy and resilience against manipulation attempts.

  - Ensemble methods are not exclusive to AI security; they are widely used to create more robust and accurate predictive models.
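Combining predictions can be as simple as a majority vote. In this sketch the three "models" are hypothetical rule-based stand-ins, and one of them is assumed to be compromised so that it always answers "benign".

```python
from collections import Counter

def majority_vote(models, x):
    """Return the most common prediction and the share of models that agreed with it."""
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    return label, count / len(models)

# Hypothetical stand-ins for independently trained models.
def score_model(x):
    return "malicious" if x["score"] > 0.5 else "benign"

def login_model(x):
    return "malicious" if x["failed_logins"] >= 3 else "benign"

def compromised_model(x):
    return "benign"  # an attacker has forced this model to wave everything through

event = {"score": 0.9, "failed_logins": 5}
label, agreement = majority_vote([score_model, login_model, compromised_model], event)
print(label, round(agreement, 2))
```

The compromised model is outvoted, and the agreement share doubles as a cheap signal: a split vote is a good reason to send the input for human review.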

Explainable AI (XAI)

Making AI systems explainable helps people understand how they work and detect any vulnerabilities or unusual behavior. You can explore this topic in more detail in the Explainable AI in Python course.

- How it works: Use tools like LIME, SHAP, or attention mechanisms to explain AI decisions.

- Why it helps: Increases trust in AI systems and makes it easier to spot and fix issues like biases.

- Challenge: Simpler, explainable models may not be as powerful as complex ones.

- Real-world examples:

  - DARPA's Explainable AI (XAI) program aims to develop more transparent AI systems for military applications.

  - IBM's AI Explainability 360 toolkit provides open-source resources for implementing explainable AI techniques across various industries.
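LIME and SHAP are full libraries, so the sketch below uses a much simpler stand-in, occlusion-based attribution, which shares the basic perturb-and-observe idea: replace one feature at a time with a baseline value and record how much the output changes. The risk model, its coefficients, the patient record, and the baseline are all hypothetical.

```python
def occlusion_attribution(model, x, baseline):
    """Score each feature by the output change when it is replaced with its baseline value."""
    full_output = model(x)
    scores = {}
    for name in x:
        occluded = dict(x)
        occluded[name] = baseline[name]
        scores[name] = full_output - model(occluded)
    return scores

def risk_model(features):
    """Hypothetical scoring model; the coefficients are made up for illustration."""
    return 0.04 * features["age"] + 0.5 * features["smoker"] + 0.01 * features["bmi"]

patient = {"age": 60, "smoker": 1, "bmi": 30}
baseline = {"age": 40, "smoker": 0, "bmi": 25}  # an assumed "typical" reference patient

scores = occlusion_attribution(risk_model, patient, baseline)
top_feature = max(scores, key=lambda name: abs(scores[name]))
print({name: round(value, 2) for name, value in scores.items()}, "->", top_feature)
```

For this patient, age contributes the most to the score, followed by smoking status. A sudden change in which features dominate is the kind of unusual behavior worth investigating.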

Regular security audits

Regularly checking AI systems for weaknesses can help catch problems early and make sure they stay secure over time.

- What it involves: Conduct vulnerability assessments, penetration tests, and code reviews.

- How often: Perform audits regularly and after making significant changes to the system.

- Why it helps: Identifies potential security gaps and ensures compliance with regulations.

- Real-world examples:

  - Facebook conducts regular security audits of its AI-powered content moderation systems to identify and address potential vulnerabilities.

  - The European Union's AI Act proposes mandatory security audits for high-risk AI systems used in critical sectors.

Using these strategies can make AI systems more secure and better protected against attacks. But here’s the thing—AI security isn’t a "set it and forget it" kind of deal. It’s an ongoing process.

As new threats pop up—and trust me, they always do—businesses need to stay on their toes. That means constantly monitoring for vulnerabilities, updating systems, and improving defenses.

| Method | How it Works | Why it Helps | Drawbacks |
| --- | --- | --- | --- |
| Adversarial Training | Create examples of manipulated data and include them in the AI’s training. | Improves robustness against adversarial attacks that try to trick the system. | May slightly lower the accuracy of the AI on normal (clean) data. |
| Defensive Distillation | Train a secondary model to mimic the behavior of the original model, smoothing its decision-making process. | Makes it harder for attackers to exploit weaknesses in the model. | Requires a lot of computing power, especially for large models. |
| Input Validation & Sanitization | Validate that inputs are in the correct format, fall within expected ranges, and don’t contain harmful elements. | Prevents data poisoning and ensures smooth functioning of the AI system. | Requires careful implementation at multiple stages. |
| Ensemble Methods | Combine predictions from multiple models to make decisions. | It’s harder for attackers to compromise all models simultaneously. | Requires more computing resources to manage and maintain multiple models. |
| Explainable AI (XAI) | Use tools like LIME, SHAP, or attention mechanisms to explain AI decisions. | Increases trust and makes it easier to identify and fix issues such as biases or unusual behaviors. | Simpler, explainable models may not perform as well as complex ones. |
| Regular Security Audits | Perform vulnerability assessments, penetration tests, and code reviews to identify weaknesses. | Identifies potential security gaps and ensures compliance with regulations. | Can be resource-intensive depending on the system's complexity. |

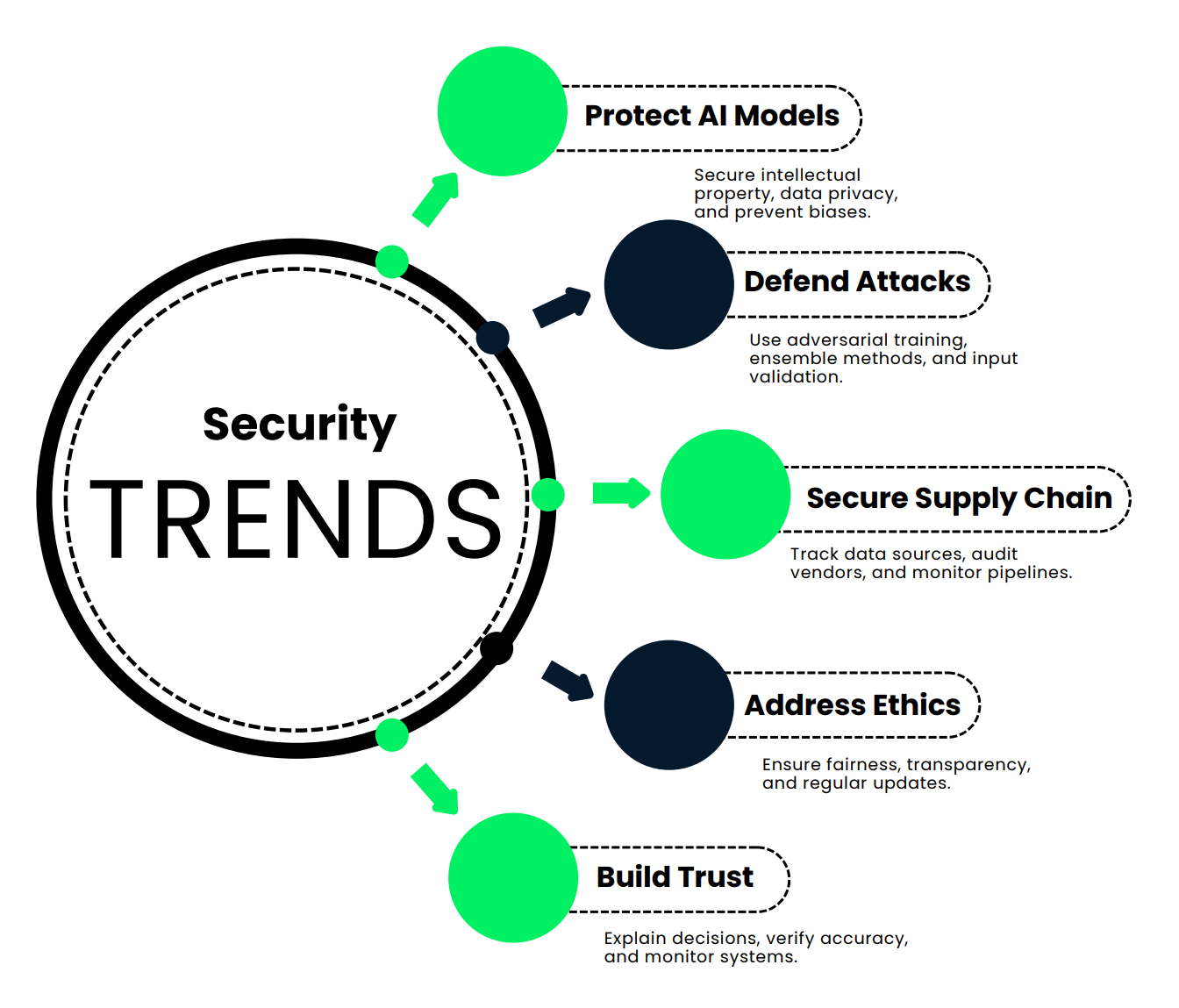

New Trends in AI Security

In this section, we are looking into new trends that are keeping AI systems secure, accurate, and trustworthy.

Protecting AI models and data integrity

First off, let’s talk about why protecting AI models and data is so important.

- Protecting intellectual property: Think about the time, money, and brainpower that go into creating an AI model. These aren’t just algorithms; they’re massive investments. Keeping them secure prevents competitors from stealing that hard work.

- Maintaining competitive edge: If someone gains unauthorized access to your AI model, they could copy it and get the same results—without spending a coin on development.

- Data privacy and compliance: AI often processes sensitive data—financial info, medical records, you name it. If this data gets exposed, you’re looking at hefty fines and a major dent in public trust. Learn more in this Introduction to Data Privacy.

- Preventing data poisoning: Hackers could mess with your training data, teaching your AI to make the wrong decisions—like letting fraud slip through or flagging innocent actions as threats.

- Mitigating model inversion attacks: Imagine if attackers could figure out private customer details just by studying your AI’s outputs. That wouldn’t be good!

- Avoiding biased or unethical outcomes: A compromised model can lead to unfair or even harmful decisions, which could damage your reputation.

- Safeguarding critical infrastructure: As AI is being used in healthcare, finance, and even national security, its protection isn’t just a business concern—it’s a public safety issue.

So, how can you actually keep your AI safe? Here are some key strategies:

- Use data encryption and anonymization to protect sensitive information.

- Implement strict data retention policies to control how long information is stored.

- Conduct regular security audits and monitoring to catch vulnerabilities early.

- Design systems with privacy by design principles, building security into every step.

- Use advanced techniques like federated learning to protect the model and its data without moving sensitive information around.
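As a small illustration of the first strategy, the sketch below replaces a direct identifier with a keyed hash and drops a field the model does not need. Strictly speaking this is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping. The field names and the hard-coded demo key are hypothetical; a real key would come from a secrets manager.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record, secret_key, id_fields, drop_fields):
    """Drop fields the model does not need and pseudonymize direct identifiers."""
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned

DEMO_KEY = b"demo-only-key"  # hypothetical; never hard-code a real key

record = {"customer_id": "C-1001", "name": "Jane Doe", "amount": 250.0}
safe = scrub_record(record, DEMO_KEY, id_fields={"customer_id"}, drop_fields={"name"})
print(sorted(safe))
```

Because the hash is keyed and deterministic, the same customer still maps to the same token across records, so the data stays useful for training without exposing the raw ID.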

Let’s bring this to life with a real-world scenario. Imagine a large international bank that’s developed an AI model to detect fraudulent transactions. This system handles millions of transactions daily, spotting suspicious patterns and flagging fraud.

The bank spent $50 million and three years building this system. If it gets stolen, competitors could use it without investing a cent, costing the bank its edge.

This model processes sensitive financial data from millions of customers. A breach could expose this information, leading to massive fines under laws like GDPR.

If hackers injected fake data during training, they could teach the AI to miss certain fraud patterns, leaving the bank vulnerable.

Sophisticated attackers could even pull private customer details from the model’s outputs.

How does the bank protect it?

- They use end-to-end encryption to secure data.

- Access to the model requires multi-factor authentication and is limited through role-based controls.

- They run regular audits and penetration tests to find weaknesses.

- Customer data used for training is fully anonymized.

- The bank monitors the model’s performance constantly to catch anything unusual.

This results in a safer, more reliable AI system that keeps customer data secure and delivers on its promise to detect fraud effectively. It’s a win for both the bank and its customers.

Defending against evasion and poisoning attacks

Imagine you’re running a high-security facility that relies on facial recognition to keep everything secure. However, we have learnt that AI systems are vulnerable to attacks, like adversarial inputs or poisoned training data. Hackers can manipulate the system to let them in.

Let’s break down the four key defenses that can stop attackers in their tracks:

- Adversarial training: This is like teaching your model some self-defense moves. You include examples of manipulated inputs—images with subtle tweaks that are designed to trick the AI—and train it to recognize them. Imagine a facial recognition system that spots not just your face, but also those pixel-perfect alterations attackers try to sneak in. Your AI becomes smarter against these attacks, learning from these examples so it doesn’t get fooled in real life.

- Ensemble methods: We have talked about these before. Instead of relying on one system, you use a squad of models. Each model processes the input independently, and their results are combined using a voting mechanism. Attackers can’t just fool one model—they’ve got to trick the whole squad. Good luck with that.

- Regular data checks: No one likes to clean, but this is essential maintenance for your AI. Weekly audits ensure that your training data is free of duplicates, mislabeled entries, or sneaky malicious inputs. And keeping the dataset fresh with verified images helps your system stay sharp.

- Input validation: Before an image even gets near your AI, it goes through a security checkpoint. Here’s what happens:

  - Format and size are checked.

  - Metadata gets analyzed for signs of tampering.

  - Known adversarial patterns are flagged.

  - Anomaly detection works to spot anything unusual.

The system isn’t just smart; it’s cautious. Every input has to prove it’s legit before moving on.
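The first checkpoint step, format and size, can be sketched with nothing more than the file’s leading bytes. The 5 MB limit and the decision to accept only PNG and JPEG are assumptions for this example, and the sketch does not cover the metadata, pattern, or anomaly checks.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file
JPEG_SIGNATURE = b"\xff\xd8\xff"      # first 3 bytes of a JPEG file
MAX_BYTES = 5 * 1024 * 1024           # assumed upload limit of 5 MB

def check_image_upload(payload):
    """Return (accepted, reason) based on the size and leading bytes of the upload."""
    if len(payload) == 0:
        return False, "empty upload"
    if len(payload) > MAX_BYTES:
        return False, "file too large"
    if payload.startswith(PNG_SIGNATURE):
        return True, "png"
    if payload.startswith(JPEG_SIGNATURE):
        return True, "jpeg"
    return False, "unrecognized format"

print(check_image_upload(PNG_SIGNATURE + b"\x00" * 16))
print(check_image_upload(b"<script>alert(1)</script>"))
```

Checking the leading bytes rather than the file extension matters because an attacker controls the file name but has a harder time faking valid content.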

Securing AI supply chains

Securing AI is about managing data integrity, protecting proprietary models, and making sure everything plays nice with your existing cybersecurity setup. Add in the need to address evolving threats, regulatory compliance, ethics, and supply chain vulnerabilities. Here are some strategies to secure the AI lifecycle:

- Track your sources: Every piece of data and every model in your AI system should have a clear origin story. Document where it came from, whether that’s a trusted hospital, a research institution, or a specialized vendor. Why? Because knowing the source is step one in knowing you can trust it.

- Audit your vendors: Third-party providers are critical, but they’re also potential weak points. Conduct regular audits to ensure they meet your security standards, whether that’s HIPAA compliance, data anonymization protocols, or ISO certifications.

- Use data tracking tools: Your data isn’t static. Over time, it evolves, and sometimes that evolution includes errors or malicious changes. Tools like automated data monitoring can track changes and flag unexpected shifts, keeping your datasets clean and accurate.

- Monitor data pipelines: Data flows from source to model through complex pipelines, and any hiccup along the way can spell trouble. Real-time dashboards can help you catch irregularities, like sudden drops in data volume or unexpected feature changes.
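One lightweight way to combine source tracking with change detection is a checksum manifest: record a hash for every dataset and model when it is first vetted, then compare on every later use. The sketch below works on in-memory bytes to stay self-contained, and the artifact names and contents are hypothetical.

```python
import hashlib

def sha256_hex(data):
    """Return the SHA-256 digest of a bytes object as a hex string."""
    return hashlib.sha256(data).hexdigest()

def build_manifest(artifacts):
    """Record one checksum per artifact at the moment it is vetted."""
    return {name: sha256_hex(blob) for name, blob in artifacts.items()}

def find_tampered(artifacts, manifest):
    """Return names whose current checksum is missing from or differs from the manifest."""
    return sorted(name for name, blob in artifacts.items()
                  if manifest.get(name) != sha256_hex(blob))

# Hypothetical artifacts received from vendors.
artifacts = {
    "pretrained_model.bin": b"pretrained-weights-v1",
    "scans.csv": b"id,label\n1,benign\n",
}
manifest = build_manifest(artifacts)

# Simulate a silent change somewhere between the vendor and the training job.
artifacts["scans.csv"] = b"id,label\n1,malignant\n"
print(find_tampered(artifacts, manifest))
```

The manifest itself needs protecting too; if an attacker can rewrite both the data and the recorded checksum, the comparison proves nothing.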

Here’s a real-world example. Let’s take a healthcare company developing an AI-powered diagnostic tool. Their supply chain includes:

- Patient data from partner hospitals.

- Medical imaging datasets from a top-tier research institution.

- Pre-trained models from a specialized AI vendor.

How do they secure this pipeline?

- Tracking sources: Every dataset and model is meticulously documented to ensure traceability.

- Vendor audits: They conduct quarterly checks for HIPAA compliance and ensure data anonymization. Vendors get annual security assessments to maintain certifications.

- Data lineage tools: They use tools like Anomalo to spot unexpected shifts in patient demographics or imaging data.

- Pipeline monitoring: Real-time dashboards in Looker keep tabs on data flow, instantly flagging any irregularities.

In this way, they have a secure AI supply chain that ensures their diagnostic tool is accurate, reliable, and compliant with industry standards.
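Behind a dashboard alert there is often a very simple rule. As an assumed example, the sketch below flags a day whose record count sits more than three standard deviations from the recent average. The daily counts and the threshold are hypothetical.

```python
from statistics import mean, stdev

def volume_alert(history, today, z_threshold=3.0):
    """Flag today's record count if it is far from the recent average."""
    average, spread = mean(history), stdev(history)
    if spread == 0:
        return today != average
    return abs(today - average) / spread > z_threshold

# Hypothetical record counts from the last seven days.
daily_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

print(volume_alert(daily_counts, today=400))   # sudden drop in data volume
print(volume_alert(daily_counts, today=1008))  # ordinary day
```

A fixed z-score rule is crude, since it ignores weekly cycles and trends, but it is enough to catch the sudden drops in data volume mentioned above.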

Addressing ethical risks

AI is powerful, but that power can be dangerous in the wrong hands. If an AI system gets hacked or is poorly designed, it can lead to biased decisions, unethical outcomes, or even misuse. That’s a big deal, especially when AI is making decisions that affect real lives—like hiring, loans, or healthcare.

So, how do we make AI systems more ethical and resilient? Let’s break it down into four key steps:

- Develop clear rules and frameworks: Start by creating a solid foundation. This means drafting policies that define what fairness and ethics look like for your system.

- Train AI teams on ethics: You can’t expect AI to act ethically if the people building it aren’t equipped to think critically about these issues. Ethics training helps teams recognize and address unconscious biases, understand fairness, and grapple with the societal impact of their work.

- Make AI decisions transparent: Transparency means designing systems that can explain their decisions—what factors were considered, how candidates were compared, and where potential concerns might lie. Transparency builds trust and accountability.

- Regularly update AI systems: AI isn’t set-it-and-forget-it. Regular audits help identify and reduce biases, ensure fairness, and keep the system compliant with the latest industry standards and legal requirements. Fairness isn’t static; it’s a continuous process.

Here’s a real-world example. Let’s talk about a large tech company using an AI-powered hiring system. How do they keep it ethical?

- Develop clear rules: They create an AI Ethics Policy that outlines principles for unbiased hiring, covering everything from data collection to decision-making. An AI Ethics Board oversees these policies to ensure accountability.

- Train the teams: Ethics training becomes mandatory for all employees working on the system. They take courses on unconscious bias and fairness in machine learning and participate in workshops tackling real-world dilemmas.

- Transparency: The hiring system includes explainability tools. For each recommendation, it provides a breakdown of the factors influencing the decision—like key qualifications or how a candidate compares to others. This makes it easy for HR managers to review decisions.

- Regular updates: Quarterly audits analyze hiring decisions for bias and apply techniques like adversarial debiasing to continuously improve fairness. They also update the system with the latest equal employment laws and industry best practices.

This is a hiring system that’s ethical, fair, and transparent—even in the face of potential compromises.
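A quarterly bias audit usually starts with a basic measurement before any debiasing technique is applied. The sketch below computes selection rates per group and their ratio. It is a simple audit metric, not adversarial debiasing, and the group names, the decisions, and the 0.8 cutoff (the commonly cited "four-fifths" rule of thumb) are assumptions for illustration.

```python
def selection_rates(decisions):
    """Share of candidates recommended for hire, per group."""
    totals, selected = {}, {}
    for group, recommended in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(recommended)
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest

# Hypothetical audit sample: (group, was the candidate recommended?).
decisions = ([("group_a", True)] * 6 + [("group_a", False)] * 4
             + [("group_b", True)] * 3 + [("group_b", False)] * 7)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed review threshold
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove the system is unfair, but it tells the audit team where to look first.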

Challenges in explainability and trust

AI systems are often seen as “black boxes,” meaning their decision-making process isn’t easy to understand. This can make it hard to spot issues or build trust. If you’re a doctor relying on an AI tool or a patient affected by its decision, you want answers, not question marks. The solution is explainability. Here are four strategies to make AI systems more transparent and easier to trust:

- Use explainable AI (XAI) tools: These tools help demystify how AI reaches its conclusions. For example, techniques like LIME or SHAP can show which factors influenced a specific decision, which makes the system’s reasoning more accessible.

- Track and document data changes: When you document every change—like which data was added, removed, or modified—you can trace how these updates impact the model’s performance.

- Verify accuracy regularly: You can’t take AI at face value. Compare its outputs to the original data and, where possible, get human experts to weigh in. Regular audits ensure the system stays sharp and unbiased. Trust, but verify.

- Test and monitor continuously: AI isn’t a “set it and forget it” tool. Frequent testing with diverse inputs helps catch errors early, and real-time monitoring ensures the system performs consistently across different scenarios.

Let’s look at how a hospital builds trust in an AI dermatology diagnostic tool—a system that uses image recognition to identify potential skin conditions.

- XAI tools: The hospital uses LIME, a tool that highlights which parts of a skin image were most influential in the AI’s diagnosis so doctors can understand and trust the AI’s reasoning.

- Tracking data changes: A data lineage tool logs all updates to the training dataset, such as newly added or removed images. This allows the hospital to track how these changes affect the AI’s accuracy over time.

- Verifying accuracy: Every month, dermatologists review a random sample of AI diagnoses, comparing them against the original patient data and their own expert opinions. The goal here is to make sure the system maintains high standards of accuracy and fairness.

- Regular testing and monitoring: The IT team conducts weekly tests with diverse images, checking the AI’s performance across different skin types and conditions. They also monitor for unusual patterns in real-time, so they can fix issues before they escalate.

This AI tool is not only smart but explainable and trustworthy. Doctors understand its decisions, patients feel confident in their care, and the hospital makes sure the technology works fairly and accurately.
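The weekly test across skin types boils down to computing accuracy separately for each group instead of one overall number. Here is a minimal sketch; the group labels, diagnoses, and the 90% minimum are hypothetical.

```python
def accuracy_by_group(results):
    """Accuracy computed separately for each group in the test set."""
    totals, correct = {}, {}
    for group, predicted, actual in results:
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(predicted == actual)
    return {group: correct[group] / totals[group] for group in totals}

def groups_below(accuracies, minimum):
    """Names of groups whose accuracy falls under the required minimum."""
    return sorted(group for group, acc in accuracies.items() if acc < minimum)

# Hypothetical test results: (skin type group, AI diagnosis, confirmed diagnosis).
results = [
    ("lighter_skin", "benign", "benign"),
    ("lighter_skin", "melanoma", "melanoma"),
    ("lighter_skin", "benign", "benign"),
    ("lighter_skin", "eczema", "eczema"),
    ("darker_skin", "benign", "benign"),
    ("darker_skin", "benign", "melanoma"),
    ("darker_skin", "eczema", "eczema"),
    ("darker_skin", "benign", "eczema"),
]

accuracies = accuracy_by_group(results)
print(accuracies, groups_below(accuracies, minimum=0.9))
```

The overall accuracy here is 75%, which hides the fact that one group gets every diagnosis right and the other only half, and that gap is exactly what per-group monitoring is meant to surface.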

Conclusion

As AI becomes a bigger part of our lives, keeping it safe is more critical than ever. Why? Because the same power that drives AI’s capabilities also makes it a target for attacks. Threats like adversarial attacks, data poisoning, and model theft can seriously disrupt how AI works.

So, how do we defend AI? We have learnt that there is no one-size-fits-all solution. Techniques like adversarial training, regular audits, and layered safeguards can reduce risks and make your systems more robust.

Then there’s generative AI. Large language models bring incredible opportunities, but they also introduce new vulnerabilities. From producing misleading content to being manipulated into giving harmful outputs, these tools require careful oversight. The article How to Build Guardrails for Large Language Models explores safety measures to ensure LLMs behave the way they should.

Ethics and transparency are non-negotiable. AI systems need to be fair, explainable, and trustworthy. Biases and misuse can erode trust—and trust is everything in today’s tech-driven world. People won’t use what they don’t trust.

Finally, staying ahead is key. AI threats are constantly evolving. Businesses need to regularly update their security strategies, keeping pace with new risks to stay protected. The key is to be proactive, vigilant, and committed to ethics and transparency. In this way, businesses can build trust and make the most of the benefits of AI.

Ana Rojo Echeburúa is an AI and data specialist with a PhD in Applied Mathematics. She loves turning data into actionable insights and has extensive experience leading technical teams. Ana enjoys working closely with clients to solve their business problems and create innovative AI solutions. Known for her problem-solving skills and clear communication, she is passionate about AI, especially generative AI. Ana is dedicated to continuous learning and ethical AI development, as well as simplifying complex problems and explaining technology in accessible ways.