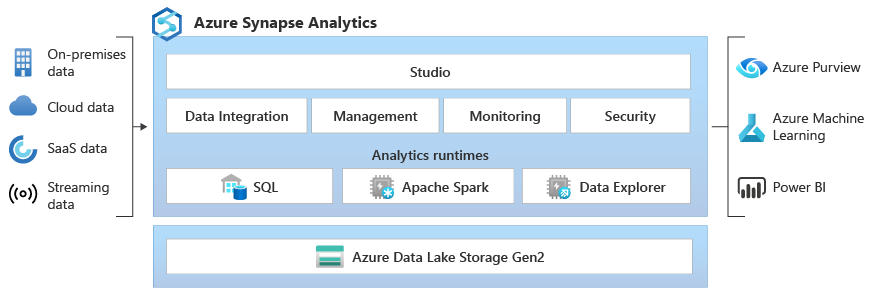

Azure Synapse Analytics (often referred to as Microsoft Synapse Analytics), which evolved from Azure SQL Data Warehouse, is an integrated analytics service offering a unified platform for big data and data warehousing.

By combining enterprise data warehousing and big data analytics, Synapse lets users ingest, prepare, manage, and serve data for business intelligence and machine learning. It supports multiple languages, such as SQL, Python, and Scala, across its SQL and Apache Spark engines, enabling a broad range of data processing and transformation. Its mix of serverless and dedicated (provisioned) resources lets it scale with data volume, which makes it a valuable tool for modern data professionals.

This guide provides essential topics and questions to help you prepare for your Synapse interview. These questions reflect my own experience interviewing and working with data professionals using Synapse, providing valuable insight into what hiring managers look for.

In addition to working through this interview guide, I suggest browsing the Microsoft Azure Synapse Analytics documentation for specific questions you might have along the way.

Basic Synapse Interview Questions

At the basic level, questions will cover your foundational knowledge of Synapse, including tasks such as navigating Synapse Studio, understanding its core components, and performing simple data exploration. Expect these questions if you have limited Synapse experience or if the interviewer is assessing your fundamental understanding.

What are the key topics you should be familiar with?

You should be able to give a high-level overview of Synapse Analytics and describe its role in a modern data landscape. Beyond that, be familiar with:

- Core features and user interface: Understand Synapse Studio, its different hubs (Data, Develop, Integrate, Monitor, Manage), and their functionalities.

- Simple use cases: Provide examples of how organizations use Synapse, including basic architecture insights.

What is Microsoft Synapse Analytics, and what are its key features?

Synapse Analytics is a limitless analytics service that brings together enterprise data warehousing and big data analytics. Key features include:

- SQL Pool (Data Warehouse): A distributed query engine for high-performance analytics on structured data.

- Spark Pool: Integration with Apache Spark for big data processing and machine learning.

- Data Lake Support: Native integration with Azure Data Lake Storage Gen2 for analysing data in place.

- Synapse Studio: A unified workspace for data preparation, data warehousing, big data analytics, and AI tasks.

- Data Integration: Built-in data integration pipelines based on the same technology as Azure Data Factory.

Explain the core architecture of Synapse Analytics.

The core architecture includes:

- Synapse Studio: The central UI for all Synapse activities.

- SQL Pools: Provide T-SQL-based querying capabilities for structured data. Dedicated SQL pools provide guaranteed resources, while serverless SQL pools offer on-demand querying of data in the data lake.

- Spark Pools: Provide Apache Spark as a service for big data processing, data engineering, and machine learning tasks.

- Data Lake Storage: Azure Data Lake Storage Gen2 serves as the foundation for storing large volumes of structured, semi-structured, and unstructured data.

- Pipelines: Data integration pipelines powered by Azure Data Factory for ETL/ELT processes.

How do you query data in Synapse Analytics?

You can query data in Synapse using:

- T-SQL: Using a dedicated SQL pool for structured data stored in the data warehouse.

- Spark SQL: Using Spark Pools for data in the data lake.

- Serverless SQL Pool: Query data directly in the data lake using T-SQL without provisioning resources.
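As a rough illustration of the serverless option, the sketch below builds the kind of T-SQL `OPENROWSET` query you would run against files in the data lake. The helper name `build_openrowset_query` and the storage URL are hypothetical, and the sketch only covers the Parquet and Delta formats.

```python
def build_openrowset_query(storage_url: str, file_format: str = "PARQUET", top: int = 10) -> str:
    """Build a T-SQL query that reads lake files through OPENROWSET."""
    fmt = file_format.upper()
    if fmt not in {"PARQUET", "DELTA"}:
        raise ValueError(f"unsupported format in this sketch: {file_format}")
    if top <= 0:
        raise ValueError("top must be positive")
    # Double any single quotes so the URL stays inside the string literal.
    safe_url = storage_url.replace("'", "''")
    return (
        f"SELECT TOP {top} *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{safe_url}',\n"
        f"    FORMAT = '{fmt}'\n"
        ") AS [result];"
    )


# Hypothetical storage account, container, and folder.
query = build_openrowset_query(
    "https://examplelake.dfs.core.windows.net/sales/2024/*.parquet", top=5
)
print(query)
```

You would paste the printed statement into a SQL script in the Develop hub and run it against the built-in serverless pool; no table has to be created or loaded first.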

Intermediate Synapse Interview Questions

These questions gauge your deeper understanding of Synapse and its configuration. You'll need to demonstrate your ability to manage resources, implement data processing workflows, and optimize performance.

This builds upon your basic knowledge and requires understanding of:

- Resource management: How to provision and manage SQL Pools and Spark Pools.

- Data integration: Creating and managing data pipelines using Synapse Pipelines (Azure Data Factory).

- Performance tuning: Understanding query optimization techniques and data partitioning strategies.

How do you create and manage SQL Pools in Synapse Analytics?

To create a dedicated SQL pool, open Synapse Studio, select the "Manage" hub, and then "SQL pools." You can then configure the pool's performance level, measured in Data Warehouse Units (DWUs), based on your workload requirements.

Managing involves monitoring performance, scaling resources, and pausing/resuming the pool as needed.

Explain how Spark is used in Synapse Analytics.

Synapse uses Spark Pools to provide Apache Spark as a service. This allows you to:

- Process large datasets using Spark DataFrames.

- Perform data engineering tasks in notebooks using languages like Python (PySpark), Scala, and Spark SQL.

- Build and train machine learning models using Spark MLlib.

What are data pipelines, and how do you create them in Synapse?

Data pipelines are automated workflows for data ingestion, transformation, and loading. In Synapse, you create them using the "Integrate" hub, which provides a visual interface, built on the same technology as Azure Data Factory, to design and manage pipelines.

These pipelines can connect to various data sources, perform transformations using activities like data flow or stored procedure execution, and load data into target systems.
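To make the structure concrete, here is a minimal sketch of a pipeline definition as a Python dictionary, shaped like the JSON that the visual designer produces. The pipeline and activity names are hypothetical and the activity `type` values are illustrative; the helper only works out the order in which activities would run from their `dependsOn` entries.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: copy raw files, transform them, then load the warehouse.
pipeline = {
    "name": "pl_load_sales",
    "properties": {
        "activities": [
            {"name": "CopyRawSales", "type": "Copy", "dependsOn": []},
            {
                "name": "TransformSales",
                "type": "ExecuteDataFlow",
                "dependsOn": [
                    {"activity": "CopyRawSales", "dependencyConditions": ["Succeeded"]}
                ],
            },
            {
                "name": "LoadWarehouse",
                "type": "SqlPoolStoredProcedure",
                "dependsOn": [
                    {"activity": "TransformSales", "dependencyConditions": ["Succeeded"]}
                ],
            },
        ]
    },
}


def execution_order(definition: dict) -> list[str]:
    """Return activity names in an order that respects dependsOn."""
    activities = definition["properties"]["activities"]
    # Map each activity to the set of activities it waits for.
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in activities
    }
    # static_order() raises graphlib.CycleError if the dependencies form a loop.
    return list(TopologicalSorter(graph).static_order())


print(execution_order(pipeline))
```

A check like this can be useful in code review or automated tests, because a circular dependency shows up before the pipeline is published.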

How do you monitor and manage resources in Synapse Analytics?

You can monitor resources via the "Monitor" hub in Synapse Studio. This hub provides insights into SQL Pool and Spark Pool performance, pipeline executions, and overall system health. You can also use Azure Monitor for more detailed monitoring and alerting capabilities.

Describe the data storage options available in Synapse Analytics.

Synapse Analytics offers a variety of data storage options to suit different needs and scenarios, ensuring flexibility and efficiency in handling diverse datasets. These options include:

- Azure Data Lake Storage Gen2: Primary storage for the data lake, optimized for large-scale analytics.

- SQL pool storage: Stores structured data within the data warehouse.

- Azure Blob Storage: Can be used for storing data, though Data Lake Storage Gen2 is generally preferred for analytics workloads.

Advanced Synapse Interview Questions

Advanced users are expected to handle performance optimization, create complex workflows, and implement sophisticated analytics and machine learning models. These questions are typical for senior data positions or roles with a DevOps component.

This builds on basic and intermediate knowledge, requiring practical experience in:

- Performance optimization: Tuning SQL Pool and Spark Pool configurations, optimizing queries, and managing data partitioning.

- Machine learning: Integrating Synapse with Azure Machine Learning, deploying models, and managing the model lifecycle.

- CI/CD pipelines: Implementing continuous integration and continuous deployment for Synapse solutions.

What strategies do you use for performance optimization in Synapse?

- Indexing: Optimize SQL Pool performance by using clustered columnstore indexes and appropriate non-clustered indexes.

- Data partitioning: Partition data in SQL Pools and Data Lake Storage based on query patterns to improve performance.

- Query optimization: Use query hints, rewrite complex queries, and analyze query execution plans to identify bottlenecks.

- Resource allocation: Right-size SQL Pools and Spark Pools based on workload requirements.
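The indexing and partitioning points can be sketched as a small generator for dedicated SQL pool table DDL. The function name, table, columns, and boundary dates are all hypothetical; the sketch only shows where the clustered columnstore index and the partition definition go in the `WITH` clause.

```python
def build_fact_table_ddl(
    table: str,
    columns: dict[str, str],
    partition_column: str,
    boundaries: list[str],
) -> str:
    """Build CREATE TABLE DDL with a columnstore index and range partitions."""
    if partition_column not in columns:
        raise ValueError(f"{partition_column} is not a column of {table}")
    column_lines = ",\n".join(
        f"    [{name}] {sql_type}" for name, sql_type in columns.items()
    )
    boundary_list = ", ".join(f"'{value}'" for value in boundaries)
    return (
        f"CREATE TABLE {table}\n(\n{column_lines}\n)\n"
        "WITH\n(\n"
        "    CLUSTERED COLUMNSTORE INDEX,\n"
        f"    PARTITION ([{partition_column}] RANGE RIGHT FOR VALUES ({boundary_list}))\n"
        ");"
    )


# Hypothetical fact table partitioned by half-year.
ddl = build_fact_table_ddl(
    "dbo.FactSales",
    {
        "SaleId": "BIGINT NOT NULL",
        "SaleDate": "DATE NOT NULL",
        "Amount": "DECIMAL(18, 2)",
    },
    partition_column="SaleDate",
    boundaries=["2024-01-01", "2024-07-01"],
)
print(ddl)
```

In an interview it is worth adding that partitions should stay coarse: a dedicated SQL pool already spreads each table across 60 distributions, so too many partitions can leave columnstore row groups too small to compress well.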

How can you implement CI/CD pipelines for Synapse Analytics?

Use Azure DevOps or GitHub Actions to automate the build, test, and deployment of Synapse solutions. This includes:

- Source control: Store Synapse artifacts (SQL scripts, Spark notebooks, pipelines) in a version control system.

- Automated testing: Implement unit tests and integration tests for SQL scripts and data pipelines.

- Automated deployment: Use Azure Resource Manager (ARM) templates or Synapse APIs to deploy changes to different environments (dev, test, prod).
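One small piece of the automated deployment step can be sketched in Python: producing an ARM parameter file per environment from shared defaults plus overrides. The parameter names and workspace names are hypothetical; the envelope (`$schema`, `contentVersion`, `parameters`) follows the standard ARM deployment parameter file layout.

```python
import json

ARM_PARAMETERS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"
)

# Hypothetical defaults shared by all environments.
BASE = {"workspaceName": "synapse-dev", "sqlPoolSku": "DW100c"}

# Hypothetical per-environment overrides.
ENVIRONMENTS = {
    "dev": {},
    "prod": {"workspaceName": "synapse-prod", "sqlPoolSku": "DW500c"},
}


def build_parameter_file(base: dict, overrides: dict) -> dict:
    """Merge defaults with overrides and wrap them in the ARM parameter envelope."""
    merged = {**base, **overrides}
    return {
        "$schema": ARM_PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in merged.items()},
    }


prod_parameters = build_parameter_file(BASE, ENVIRONMENTS["prod"])
print(json.dumps(prod_parameters, indent=2))
```

A release pipeline in Azure DevOps or GitHub Actions could generate one such file per stage and pass it to the template deployment, so the template itself stays identical across dev, test, and prod.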

Explain how to handle complex analytics in Synapse Analytics.

Complex analytics in Synapse usually combines several engines and techniques rather than relying on a single one. The main options are:

- Advanced SQL Queries: Utilize window functions, common table expressions (CTEs), and other advanced T-SQL features for complex data analysis in SQL Pools.

- Spark for Big Data Processing: Use Spark Pools to process large datasets, perform complex transformations, and build machine learning models.

- Integration with Azure Machine Learning: Leverage Azure Machine Learning for advanced analytics scenarios, such as predictive modeling and anomaly detection.
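To illustrate the first point, the sketch below combines a CTE with a window function to compute a running total per region. It uses Python's built-in `sqlite3` module purely as a local stand-in so the query can be run anywhere; the table and column names are hypothetical, and the same CTE and `OVER (PARTITION BY ... ORDER BY ...)` pattern is what you would write in T-SQL.

```python
import sqlite3

QUERY = """
WITH monthly_sales AS (
    SELECT region, sale_month, SUM(amount) AS total
    FROM sales
    GROUP BY region, sale_month
)
SELECT
    region,
    sale_month,
    total,
    SUM(total) OVER (PARTITION BY region ORDER BY sale_month) AS running_total
FROM monthly_sales
ORDER BY region, sale_month
"""


def run_demo() -> list[tuple]:
    """Load a few sample rows into an in-memory database and run the query."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, sale_month TEXT, amount INTEGER)")
        conn.executemany(
            "INSERT INTO sales VALUES (?, ?, ?)",
            [
                ("East", "2024-01", 100),
                ("East", "2024-01", 50),
                ("East", "2024-02", 200),
                ("West", "2024-01", 300),
                ("West", "2024-02", 100),
            ],
        )
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


for row in run_demo():
    print(row)
```

The CTE does the aggregation once, and the window function then adds the running total without a self-join, which is usually the point an interviewer wants to hear.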

How do you deploy machine learning models in Synapse Analytics?

Deploying machine learning models in Synapse Analytics involves several key steps, from training and registering models to deploying them for practical use. Here is a structured approach to successfully deploying machine learning models:

- Train models: Train machine learning models using Spark MLlib in Synapse or Azure Machine Learning.

- Register models: Register trained models in the Azure Machine Learning model registry.

- Deploy models: Deploy models as REST endpoints using Azure Machine Learning or integrate them into Synapse pipelines for batch scoring.

Synapse Interview Questions for Data Engineer Roles

Data Engineers are responsible for designing, building, and maintaining data pipelines, ensuring data quality, and optimizing performance. For Data Engineer positions focusing on Synapse, you should understand:

- Data pipeline architecture: Design scalable and reliable data pipelines for ETL/ELT processes.

- Real-time processing: Implement real-time data ingestion and processing using Azure Event Hubs, Azure Stream Analytics, and Synapse.

- Data security: Implement security measures to protect data at rest and in transit.

How do you design data pipelines in Synapse Analytics?

When designing data pipelines in Synapse Analytics, several key components must be considered to ensure efficient and reliable data processing:

- Identify data sources: Determine the data sources (e.g., databases, APIs, files) and their ingestion methods.

- Design ETL/ELT processes: Create data pipelines using Synapse Pipelines (Azure Data Factory) to extract, transform, and load data.

- Implement data quality checks: Add data validation and error handling steps to ensure data quality.

- Automate pipeline execution: Run pipelines automatically using schedule, tumbling window, or event-based triggers.
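The data quality step can be sketched in plain Python as a function that separates valid rows from rejected ones and records why each row was rejected. The column names and rules are hypothetical; in Synapse the same logic would typically live in a data flow, a notebook, or a SQL script.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ValidationResult:
    valid: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, str]] = field(default_factory=list)


def find_problem(row: dict, required: list[str], non_negative: list[str]) -> Optional[str]:
    """Return a short reason if the row fails a rule, otherwise None."""
    for column in required:
        if row.get(column) in (None, ""):
            return f"missing {column}"
    for column in non_negative:
        value = row.get(column)
        if not isinstance(value, (int, float)) or value < 0:
            return f"invalid {column}"
    return None


def validate_rows(rows: list[dict], required: list[str], non_negative: list[str]) -> ValidationResult:
    """Split rows into valid ones and (row, reason) pairs for rejected ones."""
    result = ValidationResult()
    for row in rows:
        reason = find_problem(row, required, non_negative)
        if reason is None:
            result.valid.append(row)
        else:
            result.rejected.append((row, reason))
    return result


# Hypothetical order rows arriving from a source system.
rows = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2},
]
result = validate_rows(rows, required=["order_id"], non_negative=["amount"])
print(len(result.valid), [reason for _, reason in result.rejected])
```

Routing rejected rows to a quarantine location with their reason, instead of failing the whole run, keeps the pipeline reliable while still making bad data visible.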

What are the best practices for ETL processes in Synapse Analytics?

In the context of Synapse Analytics, implementing effective ETL (Extract, Transform, Load) processes is crucial for ensuring efficient data management and analytics. The following best practices are recommended to optimize ETL processes within Synapse Analytics:

- Use Delta Lake: Store data in the data lake in Delta Lake format to enable ACID transactions and data versioning.

- Modular code: Write modular and reusable code in Synapse notebooks and SQL scripts.

- Parameterization: Parameterize pipelines and SQL scripts to make them more flexible and reusable.

- Monitoring: Monitor pipeline executions and performance using Synapse Studio and Azure Monitor.
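As a small sketch of the parameterization point, the function below derives the run-time parameters for one generic load pipeline from the environment, the source name, and the run date. All parameter names, the folder layout, and the `stg` schema are hypothetical.

```python
from datetime import date


def build_run_parameters(environment: str, source: str, run_date: date) -> dict:
    """Derive pipeline parameters so one pipeline can serve many sources and dates."""
    # Date-partitioned folder layout in the data lake (an assumed convention).
    folder = f"raw/{source}/year={run_date:%Y}/month={run_date:%m}/day={run_date:%d}"
    return {
        "environment": environment,
        "sourceFolder": folder,
        "targetTable": f"stg.{source}",
        "runDate": run_date.isoformat(),
    }


params = build_run_parameters("dev", "orders", date(2024, 3, 5))
print(params)
```

With parameters like these, adding a new source means adding a configuration entry rather than cloning a pipeline.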

How do you handle real-time data processing in Synapse?

Real-time processing typically pairs Synapse with Azure's streaming services rather than relying on Synapse alone. A common pattern is:

- Ingest real-time data: Use Azure Event Hubs or Azure IoT Hub to ingest real-time data streams.

- Process data with Stream Analytics: Use Azure Stream Analytics to perform real-time transformations and aggregations.

- Load data into Synapse: Load processed data into SQL Pools or Data Lake Storage for near-real-time analytics.
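To show what a windowed aggregation in the processing step actually does, here is a plain-Python sketch of a tumbling window count: events are grouped into fixed-size, non-overlapping time windows per key. The event shape (epoch seconds plus a device id) is hypothetical, and the boundary handling (window start inclusive, end exclusive) is a simplification rather than a statement about how any particular streaming engine treats boundary events.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> dict[tuple[int, str], int]:
    """Count events per (window start, key) using fixed, non-overlapping windows."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for timestamp, key in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(sorted(counts.items()))


# Hypothetical device events: (epoch seconds, device id).
events = [(0, "a"), (5, "a"), (9, "b"), (10, "a"), (19, "b")]
print(tumbling_window_counts(events, window_seconds=10))
```

Each event lands in exactly one window, which is what distinguishes a tumbling window from hopping or sliding windows where windows overlap.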

How do you ensure data security in Synapse Analytics?

Ensuring data security in Synapse Analytics is paramount to protect sensitive information and maintain compliance with regulatory standards. Several key strategies can be implemented to safeguard data within the Synapse environment:

- Access control: Implement role-based access control (RBAC) to manage access to Synapse resources.

- Data encryption: Encrypt data at rest (for example, with transparent data encryption) and in transit (TLS), and use Azure Key Vault to manage customer-managed keys and secrets.

- Network security: Use virtual network (VNet) integration and private endpoints to secure network access.

- Auditing: Enable auditing to track user activity and data access.

- Data masking: Implement data masking to protect sensitive data.
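The masking idea can be illustrated with two small Python functions that imitate common masking rules: one for email addresses and one that keeps only a prefix and suffix of a value. These are illustrative approximations with hypothetical names, not the database engine's own implementation; in a SQL pool, masking would be defined on the column rather than in application code.

```python
def mask_email(email: str) -> str:
    """Keep the first character and hide the rest of the address."""
    local, separator, _domain = email.partition("@")
    if not separator or not local:
        return "XXXX"  # not a recognizable address, hide everything
    return f"{local[0]}XXX@XXXX.com"


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Expose `prefix` leading and `suffix` trailing characters around `padding`."""
    if prefix + suffix >= len(value):
        return padding  # simplification: too short to mask safely, expose nothing
    tail = value[-suffix:] if suffix else ""
    return value[:prefix] + padding + tail


print(mask_email("alice@contoso.com"))
print(mask_partial("4111111111111111", 0, "XXXX-XXXX-XXXX-", 4))
```

The key point for an interview is that masking changes what unprivileged users see in query results while the stored data stays intact, so it complements encryption and access control rather than replacing them.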

Final Thoughts

This guide has equipped you with key insights to confidently tackle your Microsoft Synapse Analytics interview, whether you're a data engineer or software engineer.

Remember to showcase not only your theoretical knowledge but also your practical experience in designing data pipelines, optimizing performance, and ensuring robust data security.

Beyond the technical aspects, highlight your problem-solving skills and ability to learn continuously, as Synapse Analytics is a rapidly evolving platform. Stay curious, keep exploring Microsoft's resources, and demonstrate your passion for leveraging data to drive impactful business outcomes. Good luck!

Lead BI Consultant - Power BI Certified | Azure Certified | ex-Microsoft | ex-Tableau | ex-Salesforce - Author

FAQs about Azure Synapse Interviews

What is the best way to prepare for a Microsoft Synapse Analytics interview?

The most effective way to prepare is through hands-on experience. Work with Synapse Studio, create SQL and Spark Pools, build data pipelines, and explore the integration with Azure Data Lake Storage. Microsoft Learn offers excellent learning paths and tutorials. Additionally, understand common data warehousing and big data concepts.

How important is it to know both SQL and Spark for a Synapse Analytics role?

The importance depends on the specific role. Data engineers often need strong skills in both SQL and Spark for data processing and transformation. Data scientists might focus more on Spark for machine learning tasks. However, a general understanding of both is beneficial, as Synapse integrates both engines seamlessly.

What are the key differences between Dedicated SQL Pools and Serverless SQL Pools in Synapse Analytics, and when would I use each?

Dedicated SQL Pools provide provisioned resources for consistent performance and are suitable for workloads with predictable usage patterns.

Serverless SQL Pools offer on-demand querying of data in the data lake without provisioning resources, making them ideal for ad-hoc analysis and exploration.

Use Dedicated SQL Pools for production data warehousing and Serverless SQL Pools for quick data discovery and analysis.

I have experience with Azure Data Factory. How much of that knowledge is transferable to Synapse Pipelines?

A significant amount of your knowledge is transferable. Synapse Pipelines are built on the same foundation as Azure Data Factory, sharing the same visual interface and activity library. Understanding data integration concepts, pipeline design, and activity configuration will greatly accelerate your learning curve with Synapse Pipelines.

What kind of questions can I expect regarding data security and governance in Synapse Analytics?

Expect questions about implementing role-based access control (RBAC), encrypting data at rest and in transit, using Azure Key Vault for managing secrets, configuring network security with virtual networks and private endpoints, and implementing data masking to protect sensitive information. Demonstrating your understanding of these security measures will be critical.