
Big data technologies are essential because the size and complexity of the data that businesses produce are increasing every day. Traditional tools are not sufficient to handle big data; these technologies fill that gap by enabling efficient data management and the extraction of insights that drive informed decision-making. Keeping up with the latest tools and trends is crucial for professionals who want to start or accelerate a career in this domain.

What Is Big Data Technology?

Big data technology refers to the tools and frameworks that process, store, and analyze complex and large datasets.

According to Gartner, the definition of big data is “High volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.”

Big Data Characteristics. Source of Image: Author

- Volume: Data volumes have grown enormously. Every second, massive amounts of data are generated from social media, sensors, financial transactions, and more. Managing this scale requires advanced storage systems and scalable processing power.

- Variety: Data comes in all forms. It can be structured, like neatly organized data in spreadsheets and databases. Or it can be unstructured, like text, images, videos, and social media posts that don’t fit into a simple format. Then we also have semi-structured data, like JSON and XML files, that falls somewhere in between. Each type of data requires different approaches to analyze and make sense of.

- Velocity: This refers to the speed at which data is generated and needs to be processed. With IoT (Internet of Things) devices and real-time streams from social media and financial transactions, the ability to quickly capture, process, and analyze this data has become critical for making timely decisions.

- Veracity: Making sure data is accurate and reliable is crucial because bad data can lead to poor decisions and even harm a business. At the same time, striving too hard for perfect data can slow things down and delay decision-making. A balance has to be struck in line with business needs.
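
To make the variety point above more concrete, here is a minimal sketch using only the Python standard library. The sample strings and field names are invented for illustration; the point is only that structured, semi-structured, and unstructured inputs each call for a different handling approach.

```python
import csv
import io
import json
import re

# Structured: fixed columns, every row has the same fields.
csv_text = "order_id,amount\n1,19.99\n2,5.00\n"
rows = list(csv.DictReader(io.StringIO(csv_text)))
total = sum(float(row["amount"]) for row in rows)

# Semi-structured: self-describing, but fields can be nested or missing.
json_text = '{"user": "ana", "tags": ["sale", "new"], "address": {"city": "Lisbon"}}'
doc = json.loads(json_text)
city = doc.get("address", {}).get("city")
phone = doc.get("phone")  # absent field, so this has to be handled explicitly

# Unstructured: free text with no schema, so structure must be extracted.
review = "Great phone, great battery. Delivery was slow."
words = re.findall(r"[a-z']+", review.lower())
great_count = words.count("great")
```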

Big data technologies enable organizations to efficiently handle structured and unstructured data and derive meaningful insights. Since data is a strategic asset, big data technologies have become essential for maintaining a sustainable competitive advantage across industries such as healthcare, finance, and retail. Big data is no longer just a technical requirement; it has become a business imperative.

Types of Big Data Technologies

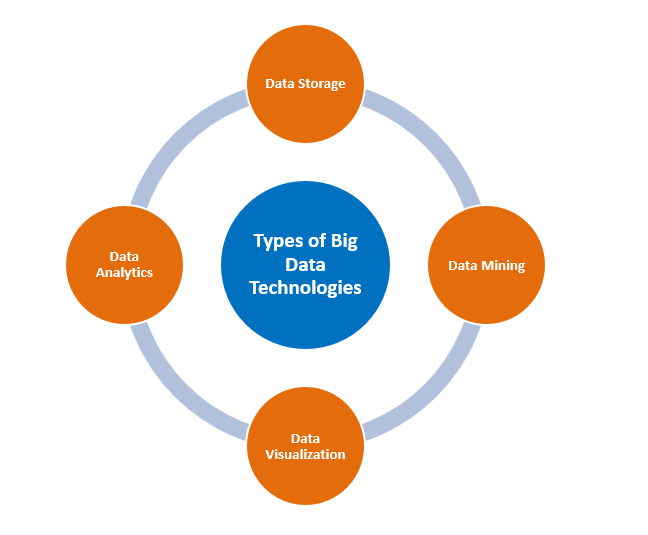

Types of Big Data Technologies. Source of Image: Author

Data storage

Apache Hadoop

Hadoop is an open-source framework that provides scalable storage by distributing data across clusters of machines. It is designed to scale up from a single server to thousands of machines, making it a fundamental and powerful framework in big data infrastructures. Hadoop’s cost-effectiveness also makes it an attractive option for organizations managing massive amounts of data at a lower price point compared to traditional databases.

Two of Hadoop's core components are:

- HDFS: A scalable storage system that breaks data into smaller blocks, replicates them, and spreads them across multiple servers. This means that even if some servers fail, the data is still safe.

- MapReduce: A programming model that splits big tasks into smaller pieces that can be processed at the same time, speeding up the work.
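
The MapReduce idea described above can be sketched in a few lines of plain Python. This is a single-process illustration of the map, shuffle, and reduce steps using a word count, not Hadoop's actual API; the function names and the sample input are assumptions made for the example.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


# Each string stands in for a block of input that a separate worker could map.
splits = ["big data tools", "big data big insights"]
mapped = chain.from_iterable(map_phase(split) for split in splits)
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
```

In a real cluster, the map calls would run in parallel on the machines that hold each block, and the shuffle would move data across the network.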

Apache Spark

Apache Spark is another powerful tool for big data. Unlike Hadoop’s MapReduce, which writes intermediate results to disk, Spark processes data in memory, which often makes it much faster, particularly for iterative workloads. It can also handle a variety of tasks, including batch processing, stream processing, machine learning, and graph analysis, making it a versatile choice for different big data needs.

Spark’s resilient distributed datasets (RDDs) let it keep data in memory and process it quickly, while providing fault tolerance by tracking the lineage of transformations so that lost data can be recomputed. Spark can also use Hadoop’s HDFS for storage while relying on its own in-memory engine for processing.
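
As a rough illustration of how in-memory data can still be fault tolerant, the toy class below records the transformations applied to a source (its lineage) and replays them if the cached result is lost. `ToyDataset` and its methods are hypothetical names for this sketch; it runs in a single process and is not Spark's API.

```python
class ToyDataset:
    """Hypothetical stand-in for an RDD: a source plus a recorded lineage."""

    def __init__(self, source, lineage=()):
        self.source = source      # callable that re-reads the original input
        self.lineage = lineage    # transformations to replay, in order
        self._cache = None        # in-memory copy of the computed result

    def map(self, fn):
        return ToyDataset(self.source, self.lineage + (("map", fn),))

    def filter(self, pred):
        return ToyDataset(self.source, self.lineage + (("filter", pred),))

    def collect(self):
        # Compute lazily; reuse the in-memory result when it is available.
        if self._cache is None:
            data = list(self.source())
            for kind, fn in self.lineage:
                if kind == "map":
                    data = [fn(x) for x in data]
                else:
                    data = [x for x in data if fn(x)]
            self._cache = data
        return self._cache

    def lose_cache(self):
        """Simulate losing the in-memory copy, for example a failed worker."""
        self._cache = None


numbers = ToyDataset(lambda: range(1, 6))
even_squares = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)

first = even_squares.collect()
even_squares.lose_cache()
recomputed = even_squares.collect()   # rebuilt by replaying the lineage
```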

MongoDB

MongoDB is a NoSQL document database designed for semi-structured and unstructured data. Its flexibility in handling varying data types makes it well suited to fast-growing data environments and dynamic applications. MongoDB's ability to scale horizontally makes it particularly useful where relational databases fall short, such as social media platforms. It’s often used for real-time analytics, content management systems, and Internet of Things (IoT) applications, where flexibility and speed are key.
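
The two ideas above, flexible documents and horizontal scaling, can be sketched in plain Python. This is not MongoDB's driver or query language: the documents, the `shard_for` and `find` helpers, and the choice of three shards are all assumptions for illustration, loosely modeled on hash-based sharding.

```python
import hashlib

NUM_SHARDS = 3  # assumption for illustration


def shard_for(key, num_shards=NUM_SHARDS):
    """Pick a shard from a stable hash of the shard key."""
    digest = hashlib.md5(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


# Documents in one collection do not need to share the same fields.
documents = [
    {"_id": 1, "type": "post", "text": "hello", "likes": 10},
    {"_id": 2, "type": "sensor", "temperature_c": 21.5},
    {"_id": 3, "type": "post", "text": "big data", "tags": ["tech"]},
]

shards = {i: [] for i in range(NUM_SHARDS)}
for doc in documents:
    shards[shard_for(doc["_id"])].append(doc)


def find(all_shards, **criteria):
    """Scatter-gather query: check every shard and match on the given fields."""
    return [
        doc
        for docs in all_shards.values()
        for doc in docs
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


posts = find(shards, type="post")
```

Adding capacity in this model means adding shards rather than buying a bigger server, which is the essence of scaling horizontally.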

Data mining

RapidMiner

Altair’s RapidMiner is a data analytics and AI platform that supports the full data science lifecycle, allowing users to mine large datasets and build predictive models with ease.

Its open-source nature and integration with machine learning libraries give it an edge for those who want fast prototyping and production deployment.

The platform is designed for different skill sets, from data scientists and engineers to business analysts and executives, so that they can all work in one unified environment.

You can also integrate RapidMiner with multiple machine learning libraries like TensorFlow, Weka, and H2O, allowing you to experiment with different algorithms and approaches quickly.

Presto

The Presto project describes itself as a “fast and reliable SQL query engine for data analytics and the open lakehouse.”

The engine provides one simple ANSI SQL interface, enabling querying of large datasets from multiple sources. It also provides real-time analytics. Presto is efficient for companies needing interactive analysis on distributed data. Presto’s ability to query data lakes without the need for data transformation makes it a favorite for data engineers seeking flexibility and performance.
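
To show what a single SQL interface over multiple sources looks like in practice, the sketch below uses Python's built-in `sqlite3` module as a stand-in: two separate in-memory databases are attached to one connection and joined in one standard SQL query. This is not Presto, and the table and column names are invented; it only illustrates the querying pattern.

```python
import sqlite3

# One connection, two independent databases standing in for two data sources.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")

conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (id INTEGER, region TEXT)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?)",
    [(1, 20.0), (1, 5.0), (2, 12.5)],
)
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(1, "EU"), (2, "US")],
)

# A single SQL statement joins data that lives in different sources.
revenue_by_region = conn.execute(
    """
    SELECT c.region, SUM(o.amount) AS revenue
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    ORDER BY c.region
    """
).fetchall()
```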

Data analytics

Apache Spark

Big data analytics is probably one of the terms that comes to people's minds when Apache Spark is mentioned. That’s because Spark excels in large-scale data processing with its in-memory architecture, enabling real-time analytics and faster data processing.

Spark handles both batch and streaming data, which is why it is commonly used for machine learning, real-time processing, and graph analysis.

The most important feature in this regard is Spark's support for iterative computation, which explains its wide use in machine learning problems that require many computationally intensive passes over the same data.

In addition, APIs for Python, Java, Scala, and other languages make it easier for developers with different backgrounds to adopt Spark, and therefore for it to spread across organizations.
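
The iterative pattern mentioned above is easy to see in a small gradient descent loop. The sketch below is plain Python on a toy dataset, not Spark code; the data, learning rate, and iteration count are assumptions chosen for the example. What matters is that every iteration rereads the same dataset, which is why keeping that data in memory pays off.

```python
# Toy data following y = 2x, held in memory and reused on every pass.
points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]


def gradient(weight, data):
    """Gradient of the mean squared error for the model y = weight * x."""
    return sum(2 * (weight * x - y) * x for x, y in data) / len(data)


weight = 0.0
learning_rate = 0.01
for _ in range(200):  # each iteration is a full pass over the data
    weight -= learning_rate * gradient(weight, points)
```

If each of those 200 passes had to reload the data from disk, the input/output cost would dominate the computation.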

Splunk

Splunk’s real-time data analytics and AI capabilities allow businesses to monitor machine-generated data, detect anomalies, and make data-driven decisions faster.

Splunk is a valuable asset for organizations whose operations depend on immediately available operational intelligence. It gathers and processes a variety of machine-generated data, including logs, metrics, and events, that helps determine what is going on across an organization's IT systems.

Splunk also offers reporting features that help end users build complex, multi-page dashboards that are easy to interpret and visually appealing.
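
The kind of monitoring described above boils down to parsing machine-generated events and alerting on unusual patterns. The following sketch does this in plain Python; it does not use Splunk or its search language, and the log format, the sample lines, and the threshold of three errors per minute are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical log lines in an invented format.
LOG_LINES = [
    "2024-05-01T10:00:03 INFO  service=auth msg=login ok",
    "2024-05-01T10:00:41 ERROR service=auth msg=timeout",
    "2024-05-01T10:01:07 ERROR service=payments msg=declined",
    "2024-05-01T10:01:15 ERROR service=payments msg=declined",
    "2024-05-01T10:01:52 ERROR service=payments msg=gateway down",
    "2024-05-01T10:02:10 INFO  service=auth msg=login ok",
]

# Capture the timestamp truncated to the minute, the level, and the service.
LINE_PATTERN = re.compile(
    r"^(?P<minute>\S{16}):\d{2}\s+(?P<level>\w+)\s+service=(?P<service>\w+)"
)

ERROR_THRESHOLD = 3  # hypothetical alerting rule: errors per minute

errors_per_minute = Counter()
for line in LOG_LINES:
    match = LINE_PATTERN.match(line)
    if match and match["level"] == "ERROR":
        errors_per_minute[match["minute"]] += 1

alerts = [
    minute
    for minute, count in sorted(errors_per_minute.items())
    if count >= ERROR_THRESHOLD
]
```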

Data visualization

Tableau

The website of Tableau defines it as “a visual analytics platform transforming the way we use data to solve problems—empowering people and organizations to make the most of their data.”

As a leader in the business intelligence domain, it provides users with a powerful, intuitive interface to create dynamic visualizations that offer deep insights. Its ability to integrate data from various sources makes it a good fit for the needs of business users.

Its overall strength lies in its versatility, from creating high-level executive dashboards to detailed, drill-down reports for data analysts.

Power BI

According to Microsoft, “Power BI is a collection of software services, apps, and connectors that work together to turn your unrelated sources of data into coherent, visually immersive, and interactive insights.”

Power BI integrates seamlessly with other tools, especially those from Microsoft, and allows for creating comprehensive, interactive reports. Because it is delivered as a cloud-based service, teams can collaborate in real time.

Applications of Big Data Technologies

Healthcare

Big data is revolutionizing the healthcare sector through numerous application areas:

One of its key applications is predictive analytics, where variables such as patient history, genetics, blood pressure levels, and other lifestyle data points are analyzed to predict the likelihood of diseases. This enables early intervention and personalized treatments, helping to prevent or manage conditions more effectively while reducing healthcare costs.

Preventive patient monitoring is another area where big data shines. Real-time health data collected through wearable devices allows early detection of abnormalities and timely intervention. This is especially useful for managing chronic conditions and preventing hospital readmissions.

In medical research, big data accelerates the discovery of new drugs and treatments by analyzing vast datasets from clinical trials, genomic studies, and patient records. This is done through better identification, testing and evaluation of drug effectiveness, leading to faster breakthroughs.

Last but not least, linear and non-linear optimization techniques, facilitated by big data technologies, can be used to optimize scheduling, reduce wait times, and improve overall care delivery.

Finance

Financial institutions use big data for many use cases, such as:

- Quantitative trading uses algorithms to analyze real-time market data, historical prices, and trends to execute trades faster than ever. This means handling a high volume of real-time data, which requires big data capabilities.

- Fraud detection is easily the most widely recognized application. Big data analytics can spot patterns and anomalies in real time and flag suspicious transactions for further investigation. This helps financial institutions prevent fraud, protect customers, and reduce financial losses.

- Unsupervised machine learning techniques can be used on big data to power customer analytics, which in turn will enable strategic decisions related to targeted marketing, investment recommendations, and customized financial planning.

- Finally, big data enhances operational efficiency by identifying bottlenecks and automating processes. From predictive maintenance to process optimization, financial institutions can cut costs, improve productivity, and deliver better services.
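
As a simple illustration of the fraud detection use case above, the sketch below builds a baseline from past transaction amounts and flags new amounts that deviate strongly from it. A z-score rule is used here only because it is easy to follow; production systems typically rely on far richer features and models. The amounts and the threshold of 3 are invented for the example.

```python
from statistics import mean, pstdev

# Hypothetical past transaction amounts for one customer.
history = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0, 26.0]
# New transactions arriving in real time.
incoming = [22.0, 950.0]

Z_THRESHOLD = 3.0  # assumption: flag anything more than 3 deviations away

baseline_mean = mean(history)
baseline_std = pstdev(history)


def is_suspicious(amount):
    """Flag amounts that are far from the customer's usual spending."""
    return abs(amount - baseline_mean) / baseline_std > Z_THRESHOLD


flagged = [amount for amount in incoming if is_suspicious(amount)]
```

The baseline is computed from history only. Including the suspicious value in its own baseline would inflate the standard deviation and could hide the very outlier being looked for.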

Retail

Retailers leverage big data in several application areas such as:

- Big data helps retailers optimize supply chain and inventory management by analyzing historical sales data, demand, and supplier performance. This helps avoid product shortages on the one hand and rising inventory carrying costs on the other.

- Location analytics is another area where big data acts as a differentiator. By analyzing geographic and demographic data, retail chains can make data-driven decisions about store locations, store types, and strategy.

- Big data is also transforming the retail landscape by turning vast amounts of customer data into actionable insights. Analyzing customer behavior, such as purchase history and browsing patterns, enables retailers to offer tailored product suggestions. This improves the shopping experience and boosts sales and customer loyalty.
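
One simple way to turn purchase history into product suggestions is to count which items are bought together. The sketch below does this with a handful of invented baskets; the `recommend` helper is hypothetical, and real recommendation systems use much larger datasets and more sophisticated models.

```python
from collections import Counter
from itertools import permutations

# Hypothetical purchase history: one set of items per order.
baskets = [
    {"laptop", "mouse", "bag"},
    {"laptop", "mouse"},
    {"phone", "case"},
    {"laptop", "bag"},
    {"phone", "case", "charger"},
]

# For every item, count how often each other item appears in the same basket.
co_purchases = {}
for basket in baskets:
    for item, other in permutations(basket, 2):
        co_purchases.setdefault(item, Counter())[other] += 1


def recommend(item, k=2):
    """Return up to k items most often bought with `item` (ties by name)."""
    ranked = sorted(
        co_purchases.get(item, {}).items(),
        key=lambda pair: (-pair[1], pair[0]),
    )
    return [name for name, _ in ranked[:k]]


laptop_suggestions = recommend("laptop")
```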

Emerging Trends in Big Data Technologies

Edge computing

As per the definition by IBM, “Edge computing is a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. This proximity to data at its source can deliver strong business benefits, including faster insights, improved response times and better bandwidth availability”.

Processing data close to its source reduces latency and speeds up decision-making. This is especially critical in IoT applications where real-time processing is essential. And because less data needs to be transferred to centralized locations, edge computing can also lower bandwidth usage and response times. The result is faster and more accurate data-driven decision-making.
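
A minimal sketch of the idea: instead of forwarding every raw reading to a central system, an edge device can summarize a window of readings locally, decide on alerts immediately, and send only the summary. The device name, readings, and alert threshold below are invented for illustration.

```python
from statistics import mean


def summarize_at_edge(device_id, readings, alert_above):
    """Reduce a window of raw readings to one small summary message."""
    peak = max(readings)
    return {
        "device": device_id,
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": peak,
        "alert": peak > alert_above,  # decided locally, with no round trip
    }


# Hypothetical temperature readings collected during one time window.
raw_readings = [70.1, 70.4, 69.9, 70.2, 91.3, 70.0]
summary = summarize_at_edge("sensor-17", raw_readings, alert_above=85.0)

messages_without_edge = len(raw_readings)  # one message per raw reading
messages_with_edge = 1                     # one summary per window
```

The saving grows with the window size: the number of raw readings increases, while the summary stays a single message.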

AI integration

As per the definition from RST, “AI integration entwines artificial intelligence capabilities directly into products and systems. Rather than AI operating as an external tool, integration embeds its analytical prowess natively to enhance all facets of performance.”

Integrating artificial intelligence (AI) with big data has transformed how companies analyze and act on data. AI algorithms, particularly machine learning (ML) models, allow systems to detect patterns, make predictions, and automate decision-making processes.

For example, in manufacturing, AI integration helps with predictive maintenance, allowing companies to identify when equipment is likely to fail and take preventive action. This reduces downtime and operational costs. Similarly, AI-driven anomaly detection can identify unusual transactions in finance, helping to prevent fraud in real time.
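
To give a feel for predictive maintenance, the sketch below fits a straight line to a short series of sensor readings and estimates how long it will take to reach a failure level. Simple trend extrapolation stands in here for the machine learning models a real system would use, and the readings, the failure level, and the `fit_line` helper are all assumptions for illustration.

```python
def fit_line(ys):
    """Ordinary least squares for y = slope * t + intercept, with t = 0..n-1."""
    n = len(ys)
    t_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    covariance = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ys))
    variance = sum((t - t_mean) ** 2 for t in range(n))
    slope = covariance / variance
    return slope, y_mean - slope * t_mean


# Hypothetical daily vibration readings from one machine.
vibration = [1.0, 1.5, 2.0, 2.5, 3.0]
FAILURE_LEVEL = 5.0  # assumption: level at which the equipment tends to fail

slope, intercept = fit_line(vibration)

# Only extrapolate when the readings are actually trending upward.
days_until_failure = (
    (FAILURE_LEVEL - vibration[-1]) / slope if slope > 0 else None
)
```

With an estimate like this, maintenance can be scheduled before the projected failure rather than after a breakdown.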

Hybrid cloud environments

According to Microsoft, “A hybrid cloud—sometimes called a cloud hybrid—is a computing environment that combines an on-premises datacenter with a public cloud, allowing data and applications to be shared between them.”

A hybrid cloud architecture combines public cloud scalability with on-premises security. It offers the flexibility to store sensitive data securely in-house while using the cloud for larger, less sensitive tasks. Hybrid cloud environments also provide cost efficiency by allowing businesses to scale their infrastructure up or down depending on their needs.

Challenges in Implementing Big Data Technologies

Implementing big data technologies offers several advantages, but it also comes with its own set of challenges. Some of these are listed below:

- Data integration: Integrating data from various sources (structured, unstructured, and semi-structured) makes it challenging to ensure consistency and accuracy.

- Scalability: As data volumes grow, the infrastructure must be able to scale efficiently without performance degradation.

- Security concerns: Securing sensitive data within big data ecosystems requires robust encryption, access controls, and compliance with regulations. With regulations such as GDPR and CCPA, organizations worldwide are facing increased pressure to implement security practices while ensuring user privacy.

- High costs: Implementing and maintaining big data infrastructure requires substantial investment in both technology and talent.

- Data quality and governance: As data is collected from multiple sources, ensuring its accuracy, consistency, and reliability becomes a challenge. Also, lack of governance can result in compliance issues and business risks.
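
The data quality challenge above is often tackled with automated validation rules that run as data arrives. The sketch below shows three such rules in plain Python; the records, field names, and the accepted age range are invented for illustration.

```python
# Hypothetical customer records merged from several sources.
records = [
    {"id": 1, "email": "ana@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 2, "email": "bo@example.com", "age": 41},
    {"id": 3, "email": "cy@example.com", "age": -5},
]


def check_quality(rows):
    """Return a list of (record id, problem) pairs found in the rows."""
    issues = []
    seen_ids = set()
    for row in rows:
        if row["id"] in seen_ids:            # consistency: ids must be unique
            issues.append((row["id"], "duplicate id"))
        seen_ids.add(row["id"])
        if not row["email"]:                 # completeness: email is required
            issues.append((row["id"], "missing email"))
        if not 0 <= row["age"] <= 120:       # accuracy: value must be plausible
            issues.append((row["id"], "age out of range"))
    return issues


quality_issues = check_quality(records)
```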

Conclusion

Big data technologies are a strategic requirement for organizations that want to gain a competitive advantage by extracting actionable insights from massive datasets. From storage to analytics and visualization, these tools are the pillars on which modern data-driven decision-making is based. Staying informed on emerging trends like AI integration, edge computing, and hybrid cloud architectures will allow businesses to scale efficiently and innovate in data management. Mastering these technologies empowers organizations to transform raw data into valuable assets in a data-centric economy. However, it's important to understand the costs and other challenges before making any implementation decisions.

For further learning, consider exploring the following sources:

- A Guide to Big Data Training: Explore the importance of big data training and DataCamp’s business solutions.

- Transfer Learning: Leverage Insights from Big Data: Discover what transfer learning is, its key applications, and why it's essential for data scientists.

- Unlocking the Power of Data Science in the Cloud: Exasol's cloud analytics leaders discuss the benefits of cloud migration, economic triggers, success stories, and the importance of flexibility.

- Flink vs. Spark: Comparing Flink vs. Spark, two open-source frameworks at the forefront of batch and stream processing.


FAQs

What is big data?

Big data refers to vast amounts of structured and unstructured data that are too large or complex for traditional data-processing software to manage efficiently.

How does big data benefit the retail industry?

Big data helps retailers optimize store locations, personalize marketing, manage inventory, and improve customer experience through location and behavioral insights.

What is edge computing in big data?

Edge computing processes data closer to its source, reducing latency and enabling faster, real-time decision-making, especially useful in IoT applications.

How does big data help with fraud detection in finance?

Big data analyzes transaction patterns in real time, identifying anomalies and preventing fraudulent activities by flagging unusual behavior.

What are the challenges in implementing big data technologies?

Common challenges include data integration, scalability, security concerns, high costs, and maintaining data quality and governance.