
If you’re preparing for a cloud engineering interview, you’ve come to the right place. This article covers some of the most commonly asked questions to help you practice and build confidence. Whether you aim for a role in cloud engineering, DevOps, or MLOps, these questions will test your understanding of cloud concepts, architecture, and best practices.

To make this guide even more practical, I’ve included examples of services from the biggest cloud providers—AWS, Azure, and GCP—so you can see how different platforms approach cloud solutions. Let’s dive in!

Basic Cloud Engineer Interview Questions

These fundamental questions check your understanding of cloud computing concepts, services, and deployment models. Your interview will normally start with a few similar questions.

1. What are the different types of cloud computing models?

The three main cloud computing models are:

- Infrastructure as a Service (IaaS): Provides virtualized computing resources over the internet (e.g., Amazon EC2, Google Compute Engine).

- Platform as a Service (PaaS): Offers a development environment with tools, frameworks, and infrastructure for building applications (e.g., AWS Elastic Beanstalk, Google App Engine).

- Software as a Service (SaaS): Delivers software applications over the internet on a subscription basis (e.g., Google Workspace, Microsoft 365).

2. What are the benefits of using cloud computing?

These are some of the most important benefits of cloud computing:

- Reduced cost: No need for on-premises hardware, reducing infrastructure costs.

- Scalability: Easily scale resources up or down based on demand.

- Reliability: Cloud providers offer high availability with multiple data centers.

- Security: Advanced security measures, encryption, and compliance certifications.

- Accessibility: Access resources from anywhere with an internet connection.

3. What are the different types of cloud deployment models?

There are four main models:

- Public cloud: Services are shared among multiple organizations and managed by third-party providers (e.g., AWS, Azure, GCP).

- Private cloud: Exclusive to a single organization, offering greater control and security.

- Hybrid cloud: A mix of public and private clouds, allowing data and applications to be shared between them.

- Multi-cloud: Utilizes multiple cloud providers to avoid vendor lock-in and enhance resilience.

Cloud deployment models. Image by Author.

4. What is virtualization, and how does it relate to cloud computing?

Virtualization is the process of creating virtual instances of computing resources, such as servers, storage, and networks, on a single physical machine. It enables cloud computing by allowing efficient resource allocation, multi-tenancy, and scalability.

Technologies like Hyper-V, VMware, and KVM are commonly used for virtualization in cloud environments.

5. What are cloud regions and availability zones?

A cloud region is a geographically distinct area where cloud providers host multiple data centers. An availability zone (AZ) consists of one or more physically separate data centers within a region, designed to offer redundancy and high availability.

For example, AWS has multiple regions worldwide, each containing multiple AZs for disaster recovery and fault tolerance.

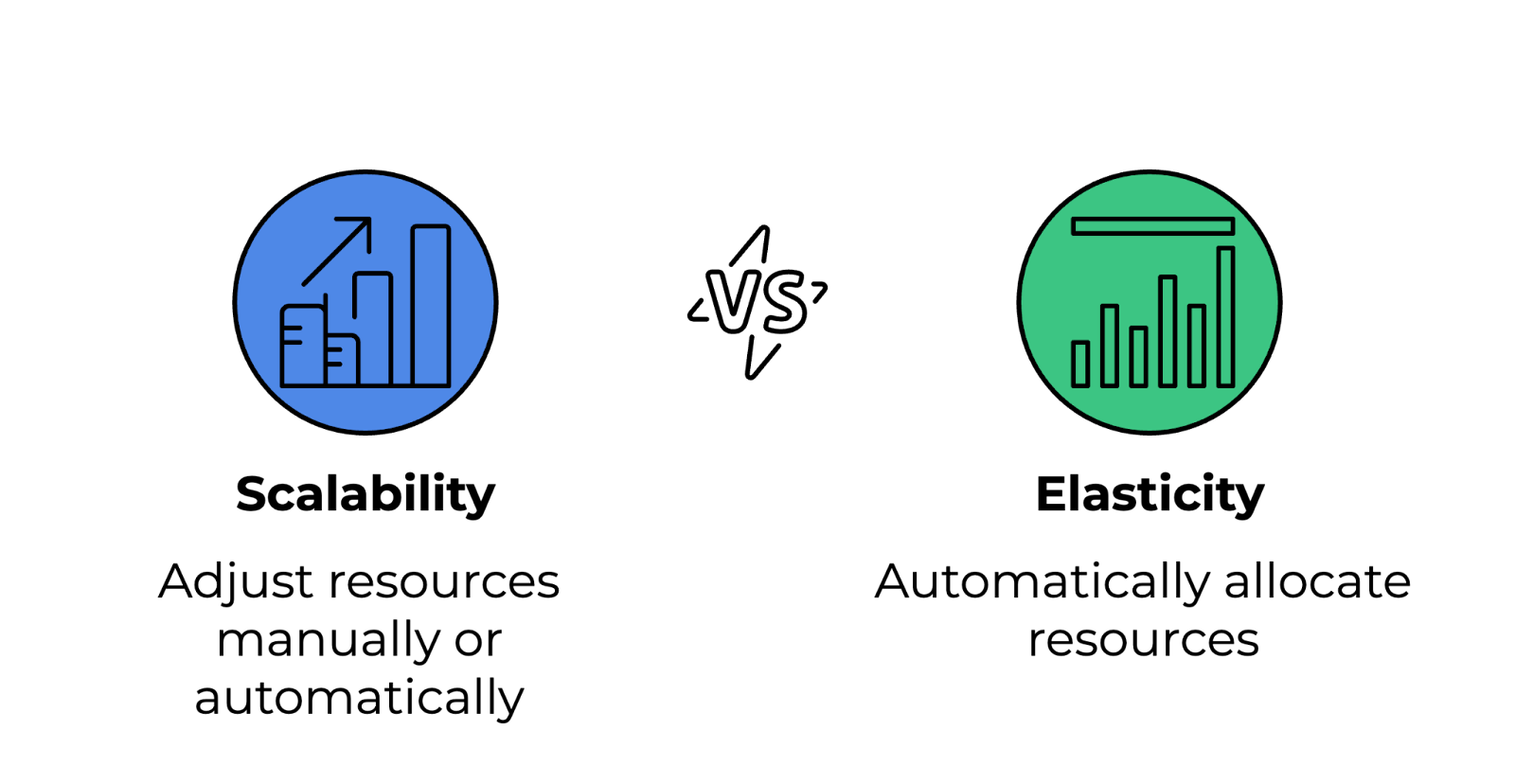

6. How does cloud elasticity differ from cloud scalability?

Here are the distinctions between these two concepts:

- Scalability: The ability to increase or decrease resources manually or automatically to accommodate growth. It can be vertical (scaling up/down by adding or removing CPU, memory, or other capacity on existing instances) or horizontal (scaling out/in by adding or removing instances).

- Elasticity: The ability to automatically allocate and deallocate resources in response to real-time demand changes. Elasticity is a key feature of serverless computing and auto-scaling services.

Difference between scalability and elasticity. Image by Author.

7. What are the key cloud service providers, and how do they compare?

The following table lists the major cloud providers, their strengths, and use cases:

| Cloud provider | Strengths | Use cases |
| --- | --- | --- |
| Amazon Web Services (AWS) | Largest cloud provider with a vast range of services. | General-purpose cloud computing, serverless, DevOps. |
| Microsoft Azure | Strong in enterprise and hybrid cloud solutions. | Enterprise applications, hybrid cloud, Microsoft ecosystem integration. |
| Google Cloud Platform (GCP) | Specializes in big data, AI/ML, and Kubernetes. | Machine learning, data analytics, container orchestration. |
| IBM Cloud | Focuses on AI and enterprise cloud solutions. | AI-driven applications, enterprise cloud transformation. |
| Oracle Cloud | Strong in databases and enterprise applications. | Database management, ERP applications, enterprise workloads. |

8. What is serverless computing, and how does it work?

Serverless computing is a cloud execution model where the cloud provider manages infrastructure automatically, allowing developers to focus on writing code. Users only pay for actual execution time rather than provisioning fixed resources. Examples include:

- AWS Lambda

- Azure Functions

- Google Cloud Functions
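As a rough illustration of the model, a serverless function is just a handler that receives an event and returns a response, while the platform takes care of provisioning and scaling. The sketch below follows the `handler(event, context)` shape used by AWS Lambda's Python runtime. The `name` field in the event and the layout of the response are assumptions made for this example.

```python
import json

def lambda_handler(event, context):
    """Entry point the platform invokes once per request or event."""
    # 'name' is a hypothetical field in the incoming event payload.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

# Local invocation for testing; in the cloud, the provider supplies event and context.
response = lambda_handler({"name": "cloud"}, None)
print(response["statusCode"], response["body"])
```

Because the handler keeps no state between calls, the provider is free to run zero, one, or many copies of it depending on traffic.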

9. What is object storage in the cloud?

Object storage is a data storage architecture where files are stored as discrete objects within a flat namespace instead of hierarchical file systems. It is highly scalable and used for unstructured data, backups, and multimedia storage. Examples include:

- Amazon S3 (AWS)

- Azure Blob Storage (Azure)

- Google Cloud Storage (GCP)
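The flat namespace is easier to picture with a toy model. In the sketch below, a plain dictionary stands in for a bucket, and "folders" are nothing more than shared key prefixes. The keys and the `list_objects` helper are hypothetical and do not correspond to any provider's SDK.

```python
# A toy in-memory "bucket": every object lives under a single flat key.
bucket = {
    "logs/2024/01/app.log": b"...",
    "logs/2024/02/app.log": b"...",
    "images/logo.png": b"...",
}

def list_objects(store, prefix=""):
    """Return keys that start with the prefix, sorted, like a prefix listing."""
    return sorted(key for key in store if key.startswith(prefix))

print(list_objects(bucket, prefix="logs/2024/"))
```

There is no directory to create or traverse here; listing a "folder" is simply a filter over key names.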

10. What is a content delivery network (CDN) in cloud computing?

A CDN is a network of distributed servers that cache and deliver content (e.g., images, videos, web pages) to users based on their geographic location. This reduces latency, improves website performance, and enhances availability. Popular CDNs include:

- Amazon CloudFront

- Azure CDN

- Cloudflare


Intermediate Cloud Engineer Interview Questions

These questions dive deeper into cloud networking, security, automation, and performance optimization, testing your ability to effectively design, manage, and troubleshoot cloud environments.

11. What is a virtual private cloud (VPC), and why is it important?

A virtual private cloud (VPC) is a logically isolated section of a public cloud that allows users to launch resources in a private network environment. It provides greater control over networking configurations, security policies, and access management.

In a VPC, users can define IP address ranges using CIDR blocks. Subnets can be created to separate public and private resources, and security groups and network ACLs help enforce network access policies.
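To see how CIDR planning works in practice, here is a small sketch that uses Python's standard `ipaddress` module to carve a VPC range into subnets. The `10.0.0.0/16` range and the public/private split are assumptions for illustration, and note that cloud providers typically reserve a few addresses in each subnet, so the usable count is slightly lower than what is printed.

```python
import ipaddress

# Hypothetical VPC range; 10.0.0.0/16 is a common private (RFC 1918) choice.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Split the VPC into /24 subnets and take the first four.
subnets = list(vpc.subnets(new_prefix=24))[:4]
public_subnets, private_subnets = subnets[:2], subnets[2:]

for label, group in (("public", public_subnets), ("private", private_subnets)):
    for net in group:
        print(f"{label}: {net} ({net.num_addresses} addresses)")
```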

12. How does a load balancer work in the cloud?

Load balancers distribute incoming network traffic across multiple servers to ensure high availability, fault tolerance, and better performance.

There are different types of load balancers:

- Application load balancers (ALB): Operate at Layer 7 (HTTP/HTTPS), routing traffic based on content rules.

- Network load balancers (NLB): Work at Layer 4 (TCP/UDP), providing ultra-low latency routing.

- Classic load balancers (CLB): Legacy option that operates at both Layer 4 and Layer 7.
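The core idea behind any of these is simple to sketch. The example below shows round-robin distribution with a basic health filter. It is a simplified model, not how a managed load balancer is implemented, and the class name and backend addresses are hypothetical.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Rotate through backends, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = cycle(self.backends)

    def mark_unhealthy(self, backend):
        self.healthy.discard(backend)

    def next_backend(self):
        # Try each backend at most once per call.
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")

lb = RoundRobinBalancer(["10.0.1.10", "10.0.1.11", "10.0.1.12"])
lb.mark_unhealthy("10.0.1.11")  # e.g., a failed health check
picks = [lb.next_backend() for _ in range(4)]
print(picks)
```

In a real deployment, the health status would be driven by periodic health checks rather than a manual call.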

13. What is IAM (identity and access management), and how is it used?

IAM is a framework that controls who can access cloud resources and what actions they can perform. It helps enforce the principle of least privilege and secures cloud environments.

In IAM, users and roles define identities with specific permissions, policies grant or deny access using JSON-based rules, and multi-factor authentication (MFA) adds an extra security layer for critical operations.
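To make the policy idea concrete, the sketch below writes a policy in the JSON shape that AWS IAM uses and evaluates it with a heavily simplified rule: deny by default, and let an explicit deny override any allow. The bucket name and the `is_allowed` helper are hypothetical, and real policy evaluation also considers conditions, resource policies, and other layers that are omitted here.

```python
from fnmatch import fnmatchcase

# Policy in the JSON shape AWS IAM uses; the bucket name is hypothetical.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Action": ["s3:GetObject", "s3:ListBucket"],
         "Resource": ["arn:aws:s3:::example-reports",
                      "arn:aws:s3:::example-reports/*"]},
        {"Effect": "Deny",
         "Action": ["s3:*"],
         "Resource": ["arn:aws:s3:::example-reports/secret/*"]},
    ],
}

def is_allowed(policy, action, resource):
    """Default deny; an explicit Deny always overrides any Allow."""
    allowed = False
    for stmt in policy["Statement"]:
        action_match = any(fnmatchcase(action, a) for a in stmt["Action"])
        resource_match = any(fnmatchcase(resource, r) for r in stmt["Resource"])
        if action_match and resource_match:
            if stmt["Effect"] == "Deny":
                return False
            allowed = True
    return allowed

print(is_allowed(policy, "s3:GetObject", "arn:aws:s3:::example-reports/2024/q1.csv"))
print(is_allowed(policy, "s3:GetObject", "arn:aws:s3:::example-reports/secret/q1.csv"))
```

Anything not explicitly allowed (for example, `s3:PutObject`) is rejected, which is the principle of least privilege in action.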

14. What are security groups and network ACLs, and how do they differ?

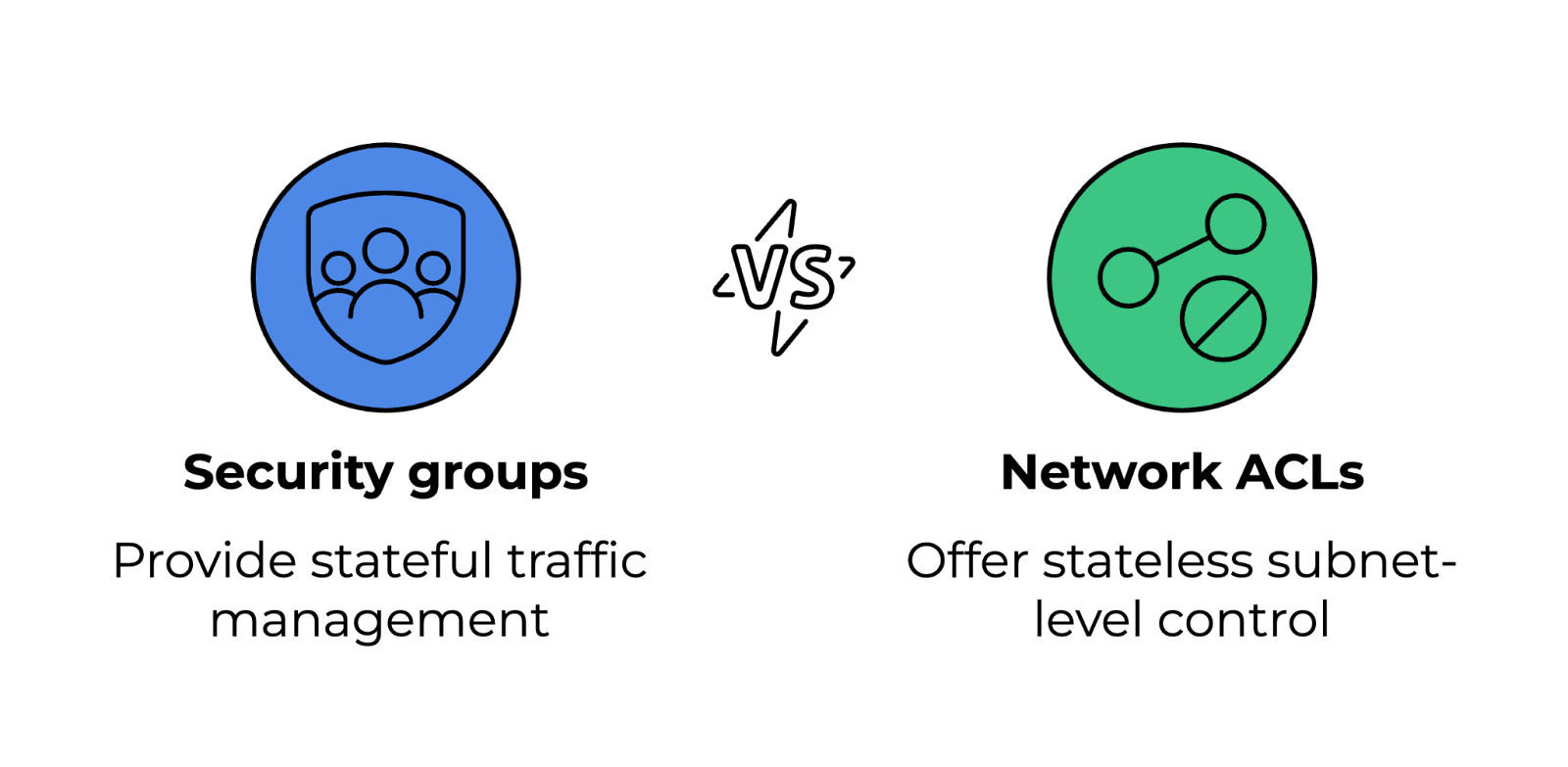

Security groups and network ACLs (access control lists) control inbound and outbound traffic to cloud resources but function at different levels.

- Security groups: Act as virtual firewalls at the instance level, controlling traffic based on rules. They are stateful, meaning return traffic for an allowed connection is automatically permitted, regardless of the rules in the opposite direction.

- Network ACLs: Control traffic at the subnet level and are stateless. They require explicit inbound and outbound rules for bidirectional traffic.

Comparing security groups and network ACLs. Image by Author.
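One way to picture the difference: a stateful filter remembers the connections it has allowed, so reply traffic passes without its own rule, while a stateless filter evaluates each direction against explicit rules. The toy model below captures only that distinction. Both classes are hypothetical and ignore rule ordering, protocols, and ephemeral port ranges.

```python
class StatefulFirewall:
    """Security-group-like: replies to allowed inbound traffic pass automatically."""

    def __init__(self, inbound_ports):
        self.inbound_ports = set(inbound_ports)
        self.tracked = set()  # (client, port) pairs seen on allowed inbound traffic

    def inbound(self, client, port):
        if port in self.inbound_ports:
            self.tracked.add((client, port))
            return True
        return False

    def outbound_reply(self, client, port):
        return (client, port) in self.tracked


class StatelessFirewall:
    """Network-ACL-like: each direction needs its own explicit rule."""

    def __init__(self, inbound_ports, outbound_reply_allowed):
        self.inbound_ports = set(inbound_ports)
        self.outbound_reply_allowed = outbound_reply_allowed

    def inbound(self, client, port):
        return port in self.inbound_ports

    def outbound_reply(self, client, port):
        return self.outbound_reply_allowed


client = "203.0.113.5"
sg = StatefulFirewall(inbound_ports=[443])
nacl = StatelessFirewall(inbound_ports=[443], outbound_reply_allowed=False)
print(sg.inbound(client, 443), sg.outbound_reply(client, 443))      # True True
print(nacl.inbound(client, 443), nacl.outbound_reply(client, 443))  # True False
```

The stateless filter drops the reply until an outbound rule is added, which is a common cause of "the request arrives but the response never comes back" issues.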

15. What is a bastion host, and why is it used?

A bastion host is a secure jump server for accessing cloud resources in a private network. Instead of exposing all servers to the internet, it acts as a gateway for remote connections.

To enhance security, it should have strict firewall rules, allowing SSH or RDP access only from trusted IPs. Multi-factor authentication (MFA) and key-based authentication should be used for secure access, and logging and monitoring should be enabled to track unauthorized login attempts.

16. How does autoscaling work in the cloud?

Autoscaling allows cloud environments to dynamically adjust resources based on demand, ensuring cost efficiency and performance. It works in two ways:

- Horizontal scaling (scaling out/in): Adds or removes instances based on load.

- Vertical scaling (scaling up/down): Adjusts the resources (CPU, memory) of an existing instance.

Cloud providers offer autoscaling groups, which work with load balancers to distribute traffic effectively.
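A horizontal scaling decision can be sketched as a proportional rule: grow or shrink the instance count by the ratio of the observed metric to its target, then clamp the result to the group's limits. This has the same shape as the formula the Kubernetes Horizontal Pod Autoscaler documents, but the function name and the numbers below are assumptions for illustration.

```python
import math

def desired_capacity(current, metric_value, target_value, min_size, max_size):
    """Scale the instance count in proportion to how far the metric is from target."""
    raw = math.ceil(current * metric_value / target_value)
    return max(min_size, min(max_size, raw))

# 4 instances at 90% average CPU with a 60% target: scale out.
print(desired_capacity(4, 90, 60, min_size=2, max_size=10))  # → 6
# 4 instances at 20% average CPU: scale in, but never below the minimum.
print(desired_capacity(4, 20, 60, min_size=2, max_size=10))  # → 2
```

Production autoscalers add cooldown periods and tolerance bands on top of this so that small metric fluctuations do not cause constant resizing.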

17. How do you ensure cloud cost optimization?

Managing cloud costs effectively requires monitoring usage and selecting the right pricing models. Cost optimization strategies include:

- Using reserved instances for long-term workloads to get discounts.

- Leveraging spot instances for short-lived workloads.

- Setting up budget alerts and cost monitoring tools like AWS Cost Explorer or Azure Cost Management.

- Right-sizing instances by analyzing CPU, memory, and network usage.
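The choice between on-demand and reserved pricing comes down to a break-even calculation. The sketch below assumes a reservation is billed for every hour of the year, whether used or not, and uses made-up hourly rates. Real pricing has more options (upfront payments, terms, savings plans), so treat this as the arithmetic only.

```python
HOURS_PER_YEAR = 24 * 365  # 8760

def yearly_cost_on_demand(hourly_rate, utilization):
    """On-demand: pay only for the hours the instance actually runs."""
    return hourly_rate * HOURS_PER_YEAR * utilization

def yearly_cost_reserved(hourly_rate):
    """Reserved: pay the committed rate for every hour, used or not."""
    return hourly_rate * HOURS_PER_YEAR

def break_even_utilization(on_demand_rate, reserved_rate):
    """Fraction of the year above which the reservation is cheaper."""
    return reserved_rate / on_demand_rate

# Hypothetical prices, not real provider rates.
on_demand, reserved = 0.10, 0.06
print(f"break-even utilization: {break_even_utilization(on_demand, reserved):.0%}")
```

With these numbers, a workload that runs less than about 60% of the time is cheaper on demand, and anything steadier favors the reservation.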

Want to master AWS security and optimize cloud costs? Check out the AWS Security and Cost Management course to learn essential best practices.

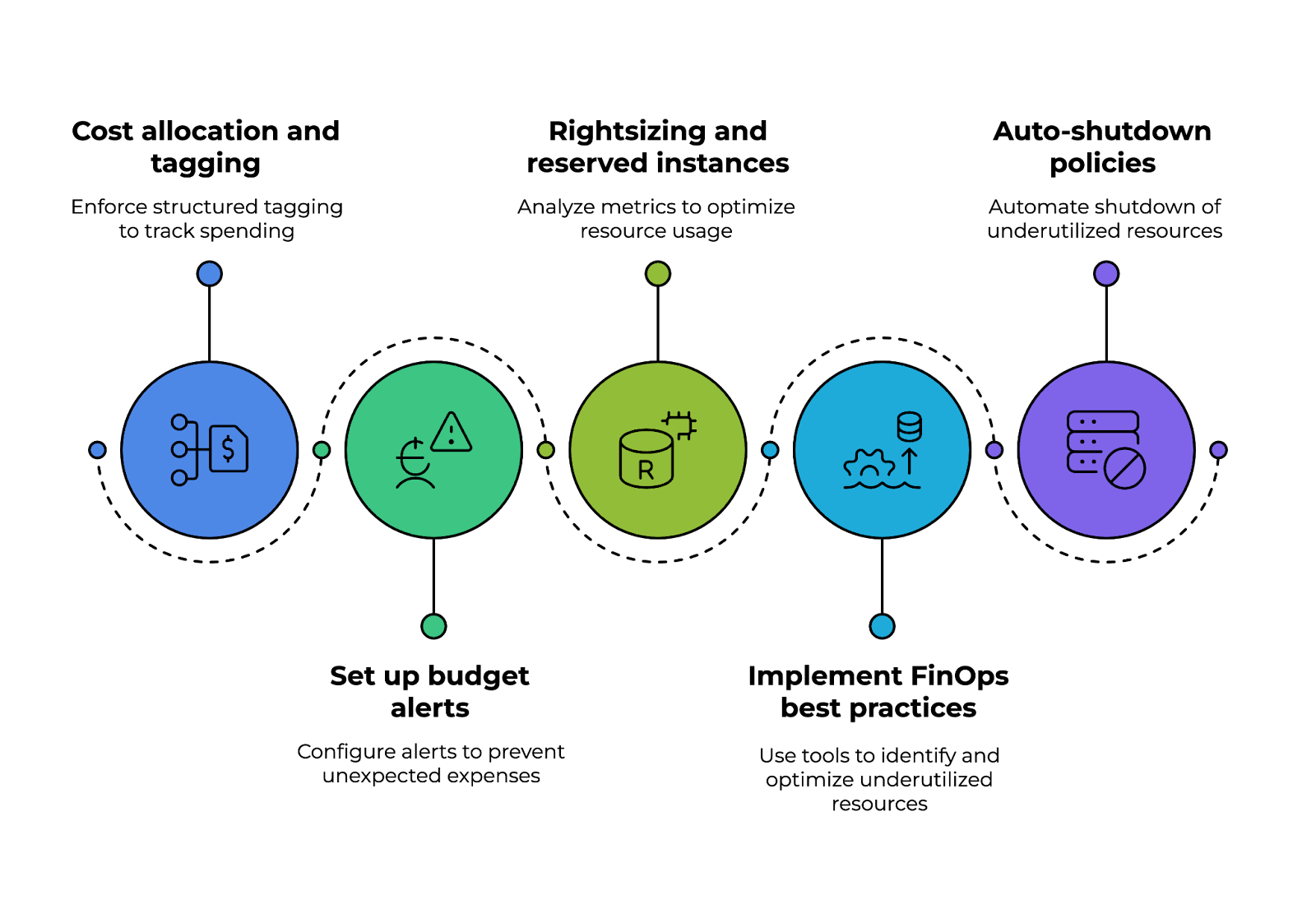

The four pillars of cloud cost optimization. Image by Author.

18. What are the differences between Terraform and CloudFormation?

Terraform and AWS CloudFormation are both infrastructure-as-code (IaC) tools, but they have some differences:

| Feature | Terraform | AWS CloudFormation |
| --- | --- | --- |
| Cloud support | Cloud-agnostic, supports AWS, Azure, GCP, and others. | AWS-specific, designed exclusively for AWS resources. |
| Configuration language | Uses HashiCorp configuration language (HCL). | Uses JSON/YAML templates. |
| State management | Maintains a state file to track infrastructure changes. | Uses stacks to manage and track deployments. |

19. How do you monitor cloud performance and troubleshoot issues?

Monitoring tools help detect performance bottlenecks, security threats, and resource overuse. Common monitoring solutions include:

- AWS CloudWatch: Monitors metrics, logs, and alarms.

- Azure Monitor: Provides application and infrastructure insights.

- Google Cloud Operations (formerly Stackdriver): Offers real-time logging and monitoring.

20. How does containerization improve cloud deployments?

Containers package applications with dependencies, making them lightweight, portable, and scalable. Compared to virtual machines, containers use fewer resources since multiple containers share the host operating system's kernel instead of each running a full guest OS.

Container tooling such as Docker enables faster deployment and rollback, and containers scale easily with orchestration tools like Kubernetes and Amazon ECS/EKS.

Looking to sharpen your containerization skills? The Containerization and Virtualization track covers Docker, Kubernetes, and more to help you build scalable cloud applications.

21. What is a service mesh, and why is it used in cloud applications?

A service mesh is an infrastructure layer that manages service-to-service communication in microservices-based cloud applications. It provides:

- Traffic management: Enables intelligent routing and load balancing.

- Security: Implements mutual TLS encryption for secure communication.

- Observability: Tracks request flows and logs for debugging.

Popular service mesh solutions include Istio, Linkerd, and AWS App Mesh.

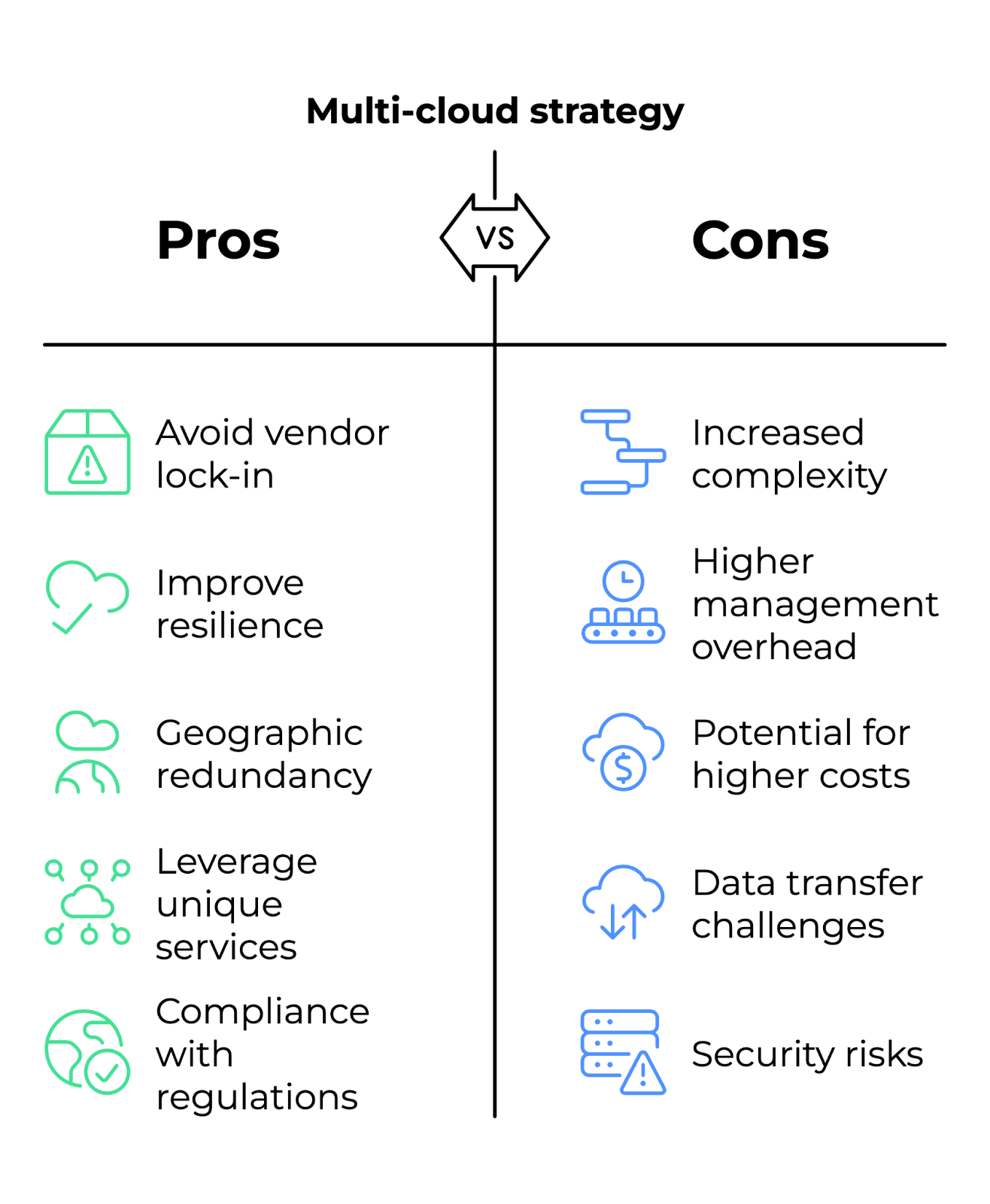

22. What is a multi-cloud strategy, and when should a company use it?

A multi-cloud strategy involves using multiple cloud providers (AWS, Azure, GCP) to avoid vendor lock-in and improve resilience.

Companies choose this approach when they need geographic redundancy for disaster recovery, want to leverage unique services from different providers (e.g., AWS for compute, GCP for AI), or require compliance with regional regulations that restrict cloud provider choices.

Multi-cloud strategy pros and cons. Image by Author.

Advanced Cloud Engineer Interview Questions

These questions test your ability to design scalable solutions, manage complex cloud infrastructures, and handle critical scenarios.

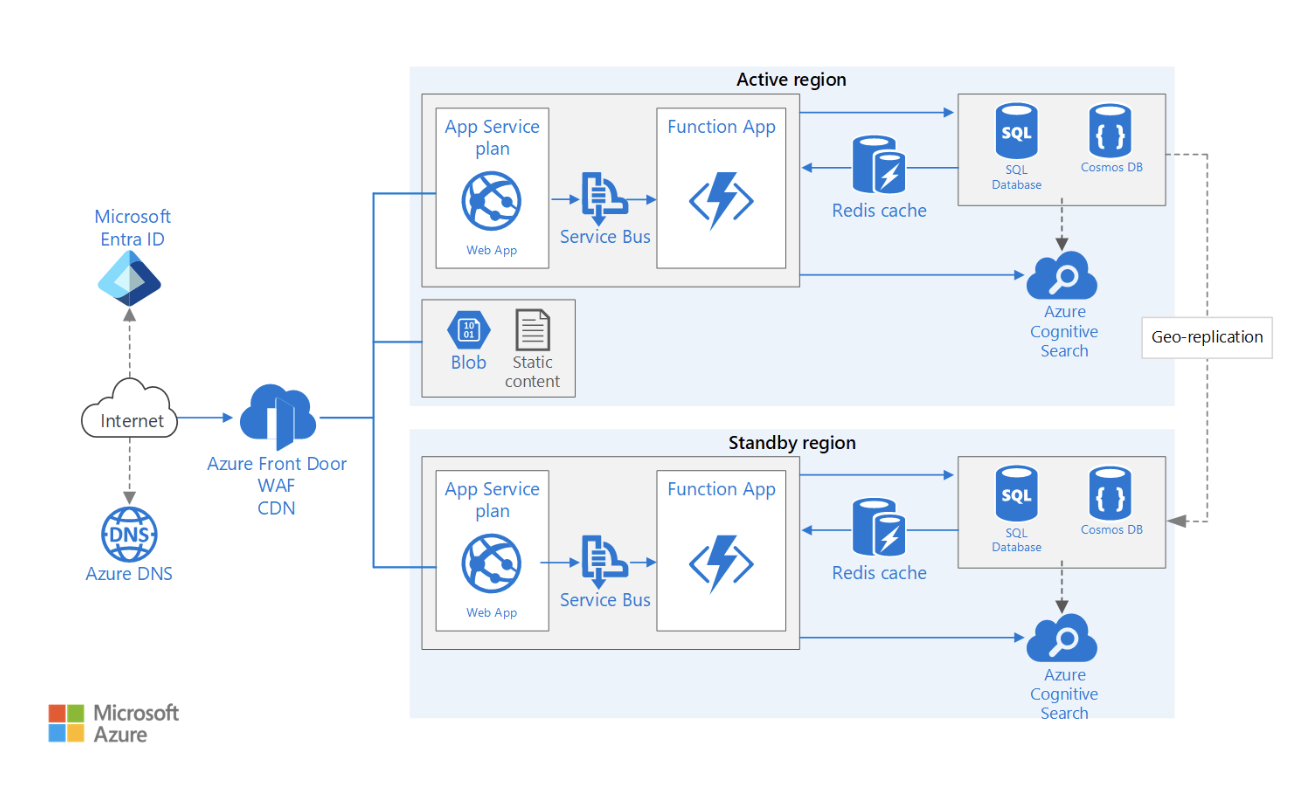

23. How do you design a multi-region, highly available cloud architecture?

A multi-region architecture ensures minimal downtime and business continuity by distributing resources across multiple geographic locations.

When designing such an architecture, several factors must be considered. These are some of them:

- Data replication: Use global databases (e.g., Amazon DynamoDB Global Tables, Azure Cosmos DB) to sync data across regions while maintaining low-latency reads and writes.

- Traffic distribution: Deploy global load balancers (e.g., AWS Global Accelerator, Azure Traffic Manager) to route users to the nearest healthy region.

- Failover strategy: Implement active-active (both regions handling traffic) or active-passive (one standby region) failover models with Route 53 DNS failover.

- Stateful vs. stateless applications: To enable seamless region switching, ensure that session data is stored centrally (e.g., ElastiCache, Redis, or a shared database) rather than on individual instances.

- Compliance and latency considerations: Evaluate data protection and residency regulations (e.g., GDPR, HIPAA) and optimize user proximity to reduce latency.
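The traffic distribution and failover points above boil down to one routing decision: send each user to the nearest region that is currently healthy. A minimal sketch of that decision follows. The latency figures and the `pick_region` helper are hypothetical, and managed services make this choice using their own health checks and routing policies.

```python
def pick_region(latency_ms, healthy):
    """Return the lowest-latency healthy region, or None if all are down."""
    candidates = {region: ms for region, ms in latency_ms.items() if healthy.get(region)}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)

# Hypothetical measurements from one user's location.
latency_ms = {"us-east-1": 25, "eu-west-1": 95, "ap-southeast-1": 180}
health = {"us-east-1": True, "eu-west-1": True, "ap-southeast-1": True}

print(pick_region(latency_ms, health))  # nearest region while everything is up
health["us-east-1"] = False             # simulate a regional outage
print(pick_region(latency_ms, health))  # traffic shifts to the next-nearest region
```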

Highly available multi-region web application architecture example. Image source: Microsoft Learn

24. How do you handle security in a cloud-native application with a zero trust model?

The zero trust model assumes no entity, whether inside or outside the network, should be trusted by default.

To implement zero trust in cloud environments:

- Identity verification: Enforce strong authentication using multi-factor authentication (MFA) and federated identity providers (e.g., Okta, AWS IAM Identity Center).

- Least privilege access: Apply role-based access control (RBAC) or attribute-based access control (ABAC) to grant permissions based on job roles and real-time context.

- Micro-segmentation: Use firewalls, network policies, and service meshes (e.g., Istio, Linkerd) to isolate workloads and enforce strict communication rules.

- Continuous monitoring and auditing: Deploy threat detection and security information and event management (SIEM) solutions (e.g., Amazon GuardDuty, Microsoft Sentinel) to detect and respond to anomalies.

- End-to-end encryption: Ensure TLS encryption for all communications and implement customer-managed keys (CMK) for data encryption at rest.

25. How do you implement an effective cloud cost governance strategy?

A successful strategy starts with cost allocation and tagging, where organizations enforce structured tagging (e.g., department, project, owner) to track spending across teams and improve financial visibility.
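Tag enforcement is easy to automate. The sketch below checks a resource inventory against a set of required tag keys. The inventory is hard-coded and hypothetical; in practice it would come from the provider's API or a policy engine.

```python
REQUIRED_TAGS = {"department", "project", "owner"}

def missing_tags(resource_tags):
    """Return the required tag keys that are absent or empty, sorted."""
    present = {key for key, value in resource_tags.items() if value}
    return sorted(REQUIRED_TAGS - present)

# Hypothetical inventory; real data would come from the provider's API.
resources = {
    "vm-web-01": {"department": "sales", "project": "storefront", "owner": "alice"},
    "vm-batch-07": {"project": "etl", "owner": ""},
}

for name, tags in resources.items():
    gaps = missing_tags(tags)
    print(name, "OK" if not gaps else f"missing: {gaps}")
```

A check like this can run on a schedule or in a deployment pipeline so that untagged resources are flagged before they accumulate cost.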

Automated budget alerts should be set up using tools like AWS Budgets, Azure Cost Management, or GCP Billing Alerts to prevent unexpected expenses. These solutions provide real-time monitoring and notifications when usage approaches predefined thresholds.

Another aspect is rightsizing and reserved instances. By continuously analyzing instance utilization metrics such as CPU and memory, teams can determine whether workloads should be adjusted or migrated to reserved instances or spot instances, which offer significant cost savings.

Implementing FinOps best practices further enhances cost efficiency. Cost visibility and rightsizing tools like Kubecost (for Kubernetes environments) and AWS Compute Optimizer help proactively identify underutilized resources and optimize them.

Finally, auto-shutdown policies play an essential role in reducing waste. Scheduled serverless functions, such as AWS Lambda or Azure Functions, can automatically shut down underutilized resources outside business hours, preventing unnecessary expenses.

Cloud cost governance strategy implementation pillars. Image by Author.

26. How do you optimize data storage performance in a cloud-based data lake?

A data lake requires efficient storage, retrieval, and processing of petabyte-scale data. Some optimization strategies include:

- Storage tiering: Use Amazon S3 Intelligent-Tiering or Azure Blob Storage access tiers to move infrequently accessed data to cost-effective storage classes.

- Partitioning and indexing: Implement Hive-style partitioning to accelerate queries, and use the AWS Glue Data Catalog or Google BigQuery partitioned tables so query engines can locate and skip data efficiently.

- Compression and file format selection: Use Parquet or ORC over CSV/JSON for efficient storage and faster analytics processing.

- Data lake query optimization: Utilize serverless query engines like Amazon Athena, Google BigQuery, or Presto for faster data access without provisioning infrastructure.
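Hive-style partitioning, mentioned above, encodes column values directly in the storage path so that a query engine can skip whole directories. The sketch below builds such paths and mimics partition pruning with a simple filter. The table name, file names, and both helper functions are hypothetical.

```python
from datetime import date

def partition_path(table, event_date, filename):
    """Build a Hive-style key: column=value segments that engines can prune on."""
    return (
        f"{table}/year={event_date.year}"
        f"/month={event_date.month:02d}"
        f"/day={event_date.day:02d}/{filename}"
    )

def prune(paths, **filters):
    """Keep only paths whose partition segments match every filter."""
    wanted = {f"{col}={val}" for col, val in filters.items()}
    return [p for p in paths if wanted <= set(p.split("/"))]

paths = [
    partition_path("events", date(2024, 1, 15), "part-0.parquet"),
    partition_path("events", date(2024, 2, 3), "part-0.parquet"),
]
print(paths[0])
print(prune(paths, year="2024", month="02"))
```

A query that filters on `month = '02'` only needs to read files under the matching prefix, which is where most of the speed-up comes from.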

27. What are the considerations for designing a cloud-native CI/CD pipeline?

One of the foundational aspects of a CI/CD pipeline is code versioning and repository management, which enables efficient collaboration and change tracking. Tools like GitHub, AWS CodeCommit, or Azure Repos help manage source code, enforce branching strategies, and streamline pull request workflows.

Build automation and artifact management play crucial roles in maintaining consistency and reliability in software builds. Using Docker-based builds, JFrog Artifactory, or AWS CodeArtifact, teams can create reproducible builds, store artifacts securely, and ensure version control across development environments.

Security is another critical consideration. Integrating SAST (static application security testing) tools, such as SonarQube or Snyk, allows early detection of vulnerabilities in the codebase. Additionally, enforcing signed container images ensures that only verified and trusted artifacts are deployed.

A robust multi-stage deployment strategy helps minimize risks associated with software releases. Approaches like canary, blue-green, or rolling deployments enable gradual rollouts, reducing downtime and allowing real-time performance monitoring. Using feature flags, teams can control which users experience new features before a full release.
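A percentage-based rollout, whether for a canary release or a feature flag, is often implemented by hashing a stable identifier into a bucket. The sketch below shows one such approach. The feature name and the `in_rollout` helper are hypothetical, and feature-flag products offer richer targeting than this.

```python
import hashlib

def in_rollout(user_id, feature, percent):
    """Deterministically place a user in one of 100 buckets for this feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent

users = [f"user-{i}" for i in range(1000)]
exposed = [u for u in users if in_rollout(u, "new-checkout", 10)]
print(f"{len(exposed)} of {len(users)} users see the new feature")
```

Because the bucket depends only on the user and the feature name, each user gets a consistent experience, and raising the percentage only ever adds users to the exposed group.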

Finally, Infrastructure as Code (IaC) integration is essential for automating and standardizing cloud environments. By using Terraform, AWS CloudFormation, or Pulumi, teams can define infrastructure in code, maintain consistency across deployments, and enable repeatable, automated provisioning of cloud resources.

Implementing a cloud-native CI/CD pipeline. Image by Author.

28. How do you implement disaster recovery (DR) for a business-critical cloud application?

Disaster recovery (DR) is essential for ensuring business continuity in case of outages, attacks, or hardware failures. A strong DR plan includes the following:

- Recovery point objective (RPO) and recovery time objective (RTO): Define acceptable data loss (RPO) and downtime duration (RTO).

- Backup and replication: Use cross-region replication, AWS Backup, or Azure Site Recovery to maintain up-to-date backups.

- Failover strategies: Implement active-active (all sites serve traffic) or active-passive (a hot, warm, or cold standby takes over on failure) architectures.

- Testing and automation: Regularly test DR plans with chaos engineering tools like AWS Fault Injection Simulator or Gremlin.
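RPO and RTO targets are only useful if they are checked against what the setup can deliver. The sketch below expresses both checks as simple comparisons, assuming the worst-case data loss equals the backup interval and that recovery time is the sum of detection, failover, and validation. The durations are hypothetical drill results.

```python
from datetime import timedelta

def meets_rpo(backup_interval, rpo):
    """Worst case, a failure hits just before the next backup runs."""
    return backup_interval <= rpo

def meets_rto(detect, failover, validate, rto):
    """Recovery time is the sum of detection, failover, and validation."""
    return detect + failover + validate <= rto

# Hypothetical targets and measurements from a DR drill.
print(meets_rpo(timedelta(hours=1), rpo=timedelta(minutes=15)))  # False
print(meets_rto(timedelta(minutes=5), timedelta(minutes=20),
                timedelta(minutes=10), rto=timedelta(hours=1)))  # True
```

In this example, hourly backups cannot satisfy a 15-minute RPO, which would point toward continuous replication instead of periodic snapshots.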

29. What are the challenges of managing Kubernetes at scale in a cloud environment?

Managing large-scale Kubernetes (K8s) clusters presents operational and performance challenges. Key areas to address include:

- Cluster autoscaling: Use Cluster Autoscaler or Karpenter to dynamically adjust node counts based on workload demands.

- Workload optimization: Implement horizontal pod autoscaler (HPA) and vertical pod autoscaler (VPA) for efficient resource allocation.

- Networking and service mesh: Leverage Istio or Linkerd to handle inter-service communication and security.

- Observability and troubleshooting: Deploy Prometheus, Grafana, and Fluentd to collect and visualize metrics and logs.

- Security hardening: Use Pod Security Admission (the replacement for pod security policies, which were removed in Kubernetes 1.25), role-based access control (RBAC), and container image scanning to mitigate vulnerabilities.

Scenario-Based Cloud Engineer Interview Questions

Scenario-based questions evaluate your ability to analyze real-world cloud challenges, troubleshoot issues, and make architectural decisions under different constraints.

Your responses should demonstrate practical experience, decision-making, and trade-offs when solving cloud problems. Since there are no right or wrong answers, I included some examples to guide your thinking process.

30. Your company is experiencing high latency in a cloud-hosted web application. How would you diagnose and resolve the issue?

Example answer:

High latency in a cloud application can be caused by several factors, including network congestion, inefficient database queries, suboptimal instance placement, or load balancing misconfigurations.

To diagnose the issue, I would start by isolating the bottleneck using cloud monitoring tools. The first step would be to analyze the application response times and network latency by checking logs, request-response times, and HTTP status codes. If the issue is network-related, I would use a traceroute or ping test to check for increased round-trip times between users and the application. If a problem exists, enabling a CDN could help cache static content closer to users and reduce latency.

If the database queries are causing delays, I would profile slow queries and optimize them by adding proper indexing or denormalizing tables. Additionally, if the application is under high traffic, enabling horizontal scaling with autoscaling groups or read replicas can reduce the load on the primary database.

If latency issues persist, I would check the application's compute resources, ensuring it runs in the region closest to end users. If necessary, I would migrate workloads to a multi-region setup or use edge computing solutions to process requests closer to the source.
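When analyzing response times, I would look at percentiles rather than averages, because a few very slow requests can hide behind a healthy-looking mean. Here is a minimal sketch using the nearest-rank method. The sample latencies are made up, and a monitoring tool would normally compute these values for you.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

# Hypothetical response times in milliseconds from access logs.
latencies_ms = [42, 45, 47, 51, 55, 58, 61, 70, 250, 900]

for p in (50, 95, 99):
    print(f"p{p}: {percentile(latencies_ms, p)} ms")
```

Here the median is 55 ms while the tail reaches 900 ms, which suggests an intermittent problem (such as a slow query or a cold cache) rather than uniformly slow infrastructure.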

31. Your company is planning to migrate a legacy on-premises application to the cloud. What factors would you consider, and what migration strategy would you use?

Example answer:

The first step is to conduct a cloud readiness assessment, evaluating whether the application can be migrated as-is or requires modifications. One approach is to use the “6 R’s of cloud migration”:

- Rehosting (lift-and-shift)

- Replatforming

- Repurchasing

- Refactoring

- Retiring

- Retaining

A lift-and-shift approach would be ideal if the goal is a quick migration with minimal changes. If performance optimization and cost efficiency are priorities, I would consider re-platforming by moving the application to containers or serverless computing, allowing better scalability. For applications with monolithic architectures, refactoring into microservices may be necessary to enhance performance and maintainability.

I would also focus on data migration, ensuring that databases are replicated to the cloud with minimal downtime.

Security and compliance would be another major concern. Before deployment, I would ensure that the application meets regulatory requirements (e.g., HIPAA, GDPR) by implementing encryption, IAM policies, and VPC isolation.

Finally, I would perform testing and validation in a staging environment before switching over production traffic.

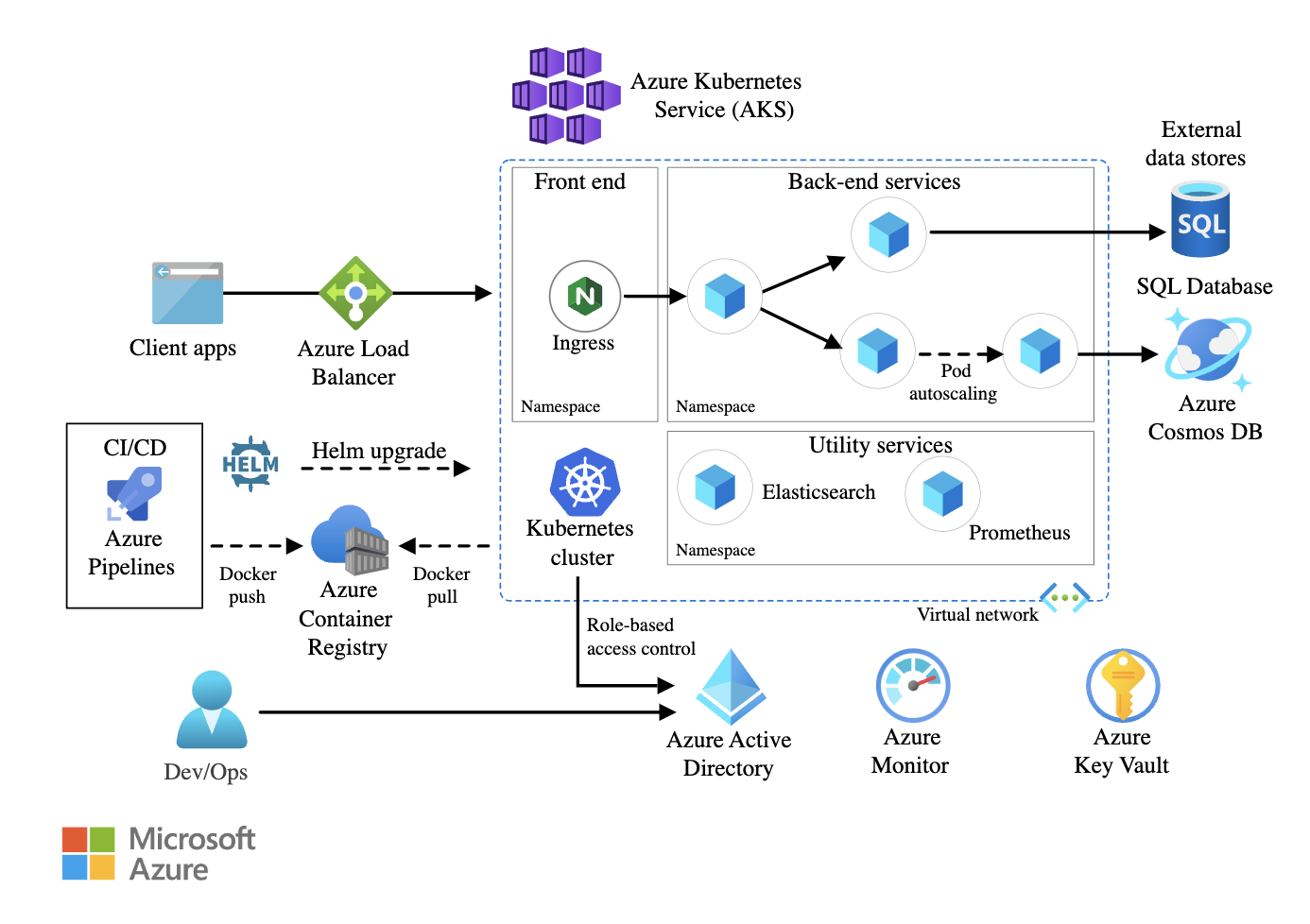

32. You need to ensure high availability for a business-critical microservices application running on Kubernetes. How would you design the architecture?

Example answer:

At the infrastructure level, I would deploy the Kubernetes cluster across multiple availability zones (AZs). This ensures that traffic can be routed to another zone if one AZ goes down. I would use Kubernetes Federation to manage multi-cluster deployments for on-prem or hybrid setups.

Within the cluster, I would implement pod-level resilience by setting up ReplicaSets and horizontal pod autoscalers (HPA) to scale workloads dynamically based on CPU/memory utilization. Additionally, pod disruption budgets (PDBs) would ensure that a minimum number of pods remain available during updates or maintenance.

For networking, I would use a service mesh to manage service-to-service communication, enforcing retries, circuit breaking, and traffic shaping policies. A global load balancer would distribute external traffic efficiently across multiple regions.
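To show what circuit breaking means in this context, here is a minimal in-process sketch of the pattern: after repeated failures, calls are rejected immediately until a cooldown passes, then one trial call is allowed. A service mesh applies the same idea at the proxy level through configuration. The class, thresholds, and injected clock are assumptions for illustration.

```python
import time

class CircuitBreaker:
    """Open after repeated failures; allow a trial call once the cooldown passes."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call rejected")
            # Cooldown elapsed: half-open, let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result

breaker = CircuitBreaker(max_failures=3, reset_after=30.0)
print(breaker.call(lambda: "ok"))
```

Failing fast like this keeps a struggling downstream service from being overwhelmed by retries and stops failures from cascading through the call chain.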

Persistent storage is another critical aspect. If the microservices require data persistence, I would use container-native storage solutions. I would configure cross-region backups and automated snapshot policies to prevent data loss.

Finally, monitoring and logging are essential for maintaining high availability. I would integrate Prometheus and Grafana for real-time performance monitoring and use ELK stack or AWS CloudWatch Logs to track application health and detect failures proactively.

Example of a microservices architecture using Azure Kubernetes Service (AKS). Image source: Microsoft Learn

33. A security breach is detected in your cloud environment. How would you investigate and mitigate the impact?

Example answer:

Upon detecting a security breach, my immediate response would be to contain the incident, identify the attack vector, and prevent further exploitation. I would first isolate the affected systems to limit the damage by revoking compromised IAM credentials, restricting access to the affected resources, and enforcing security group rules.

The next step would be log analysis and investigation. Audit logs would reveal suspicious activities such as unauthorized access attempts, privilege escalations, or unexpected API calls. If an attacker exploited a misconfigured security policy, I would identify and patch the vulnerability.
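As a small example of that log analysis, the sketch below counts failed actions per source IP and flags any source that crosses a threshold. The record format is hypothetical and heavily simplified; real audit logs, such as AWS CloudTrail events, carry many more fields, and the threshold would need tuning.

```python
from collections import Counter

# Hypothetical, simplified audit records.
events = [
    {"user": "alice", "action": "ConsoleLogin", "outcome": "Success", "ip": "198.51.100.7"},
    {"user": "svc-deploy", "action": "ConsoleLogin", "outcome": "Failure", "ip": "203.0.113.9"},
    {"user": "svc-deploy", "action": "ConsoleLogin", "outcome": "Failure", "ip": "203.0.113.9"},
    {"user": "admin", "action": "AttachUserPolicy", "outcome": "Failure", "ip": "203.0.113.9"},
    {"user": "bob", "action": "ConsoleLogin", "outcome": "Failure", "ip": "198.51.100.8"},
]

def suspicious_sources(events, threshold=3):
    """Return source IPs with at least `threshold` failed actions."""
    failures = Counter(e["ip"] for e in events if e["outcome"] == "Failure")
    return {ip: count for ip, count in failures.items() if count >= threshold}

print(suspicious_sources(events))
```

The flagged addresses give me a starting point for correlating other activity, such as which credentials were used and which resources were touched.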

To mitigate the impact, I would rotate credentials, revoke compromised API keys, and enforce MFA for all privileged accounts. If the breach involved data exfiltration, I would analyze logs to trace data movement and notify relevant authorities if regulatory compliance was affected.

Once containment is confirmed, I would conduct a post-incident review to strengthen security policies.

34. Your company wants to implement a multi-cloud strategy. How would you design and manage such an architecture?

Example answer:

To design a multi-cloud architecture, I would start with a common identity and access management (IAM) framework, such as Okta, AWS IAM federation, or Microsoft Entra ID (formerly Azure AD), to ensure consistent authentication across clouds. This would prevent siloed access control and reduce identity sprawl.

Networking is a key challenge in multi-cloud environments. I would use interconnect services like AWS Transit Gateway, Azure Virtual WAN, or Google Cloud Interconnect to facilitate secure cross-cloud communication. Additionally, I would implement a service mesh to standardize traffic management and security policies.

Data consistency across clouds is another critical factor. I would ensure cross-cloud replication using global databases like Spanner, Cosmos DB, or AWS Aurora Global Database. If latency-sensitive applications require data locality, I would use edge computing solutions to reduce inter-cloud data transfer.

Finally, cost monitoring and governance would be essential to prevent cloud sprawl. Using FinOps tools like CloudHealth, AWS Cost Explorer, and Azure Cost Management, I would track spending, enforce budget limits, and optimize resource allocation dynamically.

Conclusion

Preparing for a cloud engineer interview requires a solid understanding of cloud fundamentals, architecture, security, and best practices. Keep exploring cloud services, stay updated with industry trends, and, most importantly, get hands-on experience with AWS, Azure, or GCP.

The AWS Cloud Practitioner track is a great place to start if you want to know more about AWS. If you're new to Microsoft Azure, the Azure Fundamentals (AZ-900) track will help you build a strong foundation. And for those looking to dive into Google Cloud Platform (GCP), the Introduction to GCP course is the perfect starting point.

Good luck with your interview!


Thalia Barrera is a Senior Data Science Editor at DataCamp with a master’s in Computer Science and over a decade of experience in software and data engineering. Thalia enjoys simplifying tech concepts for engineers and data scientists through blog posts, tutorials, and video courses.